Two days ago, we wrote a single-task version of the crawler that crawls user profiles from Zhenai (zhenai.com). How does it perform?

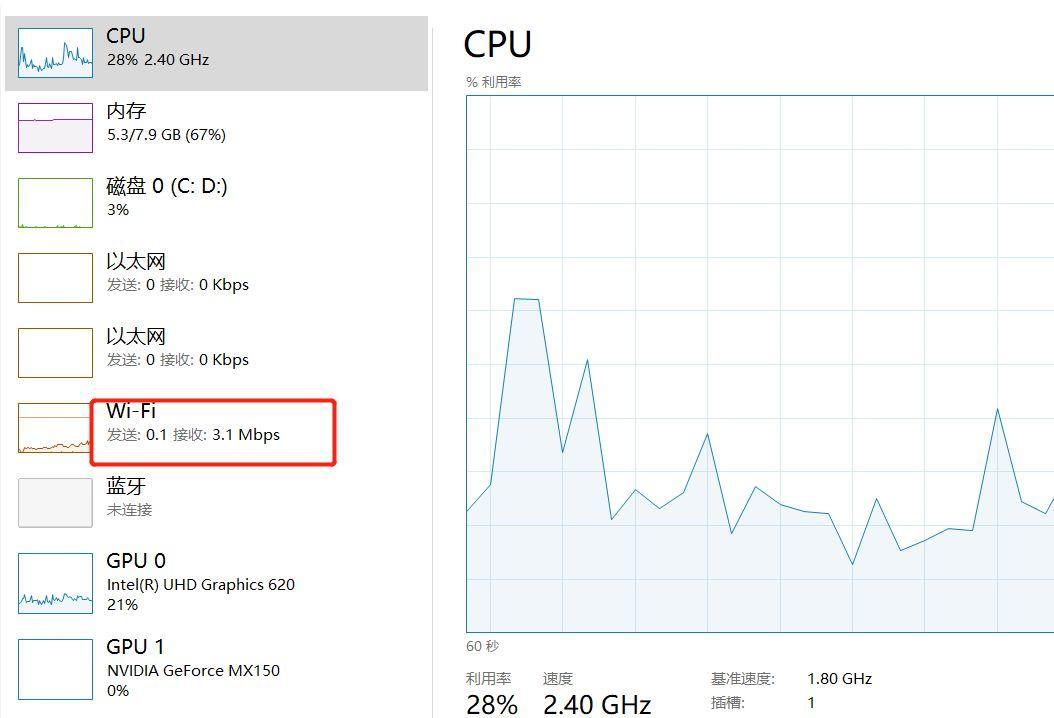

Looking at network utilization in the Performance tab of Task Manager, the download rate is only about 200 kbps, which is quite slow.

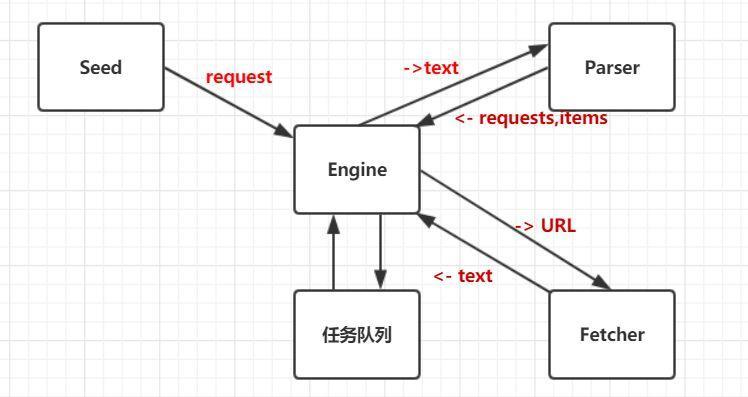

Let us analyze the design of the single-task crawler:

As the figure above shows, the engine takes a request from the task queue, sends it to the Fetcher to download the resource, and waits for the data to return. It then passes the returned data to the Parser and waits again. Finally, it adds the requests returned by the Parser to the task queue and prints the items.
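To make the sequential flow concrete, here is a minimal sketch under stated assumptions: `fakeFetch`, `parseCityList` and `parseCity` are hypothetical stand-ins (no network access is made), and `Request`/`ParserResult` are simplified versions of the engine's types. Every fetch and every parse happens one after another in a single loop.

```go
package main

import (
	"fmt"
	"strings"
)

// Request and ParserResult are simplified assumptions modeled on the
// article's types; the real fields may differ.
type Request struct {
	Url        string
	ParserFunc func(body string) ParserResult
}

type ParserResult struct {
	Requests []Request
	Items    []string
}

// fakeFetch is a hypothetical stand-in for a real HTTP fetcher.
func fakeFetch(url string) (string, error) {
	return "body of " + url, nil
}

// runSingle processes the queue one request at a time: fetch and wait,
// parse and wait, enqueue new requests, collect items.
func runSingle(seeds ...Request) []string {
	queue := append([]Request(nil), seeds...)
	var items []string
	for len(queue) > 0 {
		r := queue[0]
		queue = queue[1:]
		body, err := fakeFetch(r.Url)
		if err != nil {
			continue // skip URLs that fail to download
		}
		result := r.ParserFunc(body)
		queue = append(queue, result.Requests...)
		items = append(items, result.Items...)
	}
	return items
}

// parseCity (hypothetical) turns each city page into one item.
func parseCity(body string) ParserResult {
	return ParserResult{Items: []string{strings.TrimPrefix(body, "body of ")}}
}

// parseCityList (hypothetical) turns the list page into two city requests.
func parseCityList(body string) ParserResult {
	return ParserResult{Requests: []Request{
		{Url: "city/a", ParserFunc: parseCity},
		{Url: "city/b", ParserFunc: parseCity},
	}}
}

func main() {
	items := runSingle(Request{Url: "citylist", ParserFunc: parseCityList})
	fmt.Println(items) // [city/a city/b]
}
```

Because each iteration blocks on the download before doing anything else, the network sits idle while parsing runs, which is consistent with the low download rate observed above.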

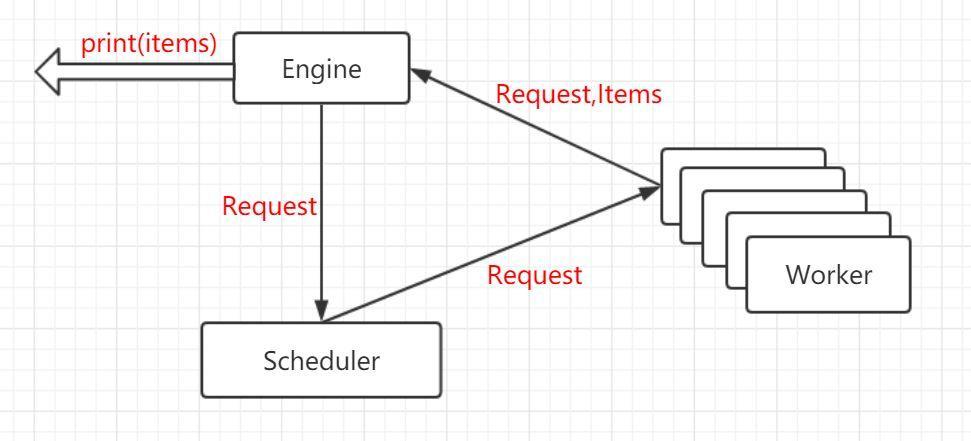

In fact, we can run multiple fetchers and parsers at the same time and do other work while waiting for them to return. Go's goroutines and channels make this easy to implement.

In the figure above, a Worker combines the Fetcher and the Parser. The Scheduler distributes requests to different Workers. Each Worker returns Requests and Items to the Engine, which prints the Items and submits the Requests back to the Scheduler.

Based on this design, the implementation is as follows:

Engine:

```go
package engine

import "log"

// ConcurrentEngine hands requests to a pool of workers via a Scheduler.
// Request and ParserResult are defined elsewhere in package engine.
type ConcurrentEngine struct {
	Scheduler  Scheduler
	WokerCount int
}

type Scheduler interface {
	Submit(Request)
	ConfigureMasterWorkerChan(chan Request)
}

func (e *ConcurrentEngine) Run(seeds ...Request) {
	in := make(chan Request)
	out := make(chan ParserResult)
	e.Scheduler.ConfigureMasterWorkerChan(in)

	// Create the workers
	for i := 0; i < e.WokerCount; i++ {
		createWorker(in, out)
	}

	// Distribute the seed tasks to the workers
	for _, r := range seeds {
		e.Scheduler.Submit(r)
	}

	for {
		// Print out items
		result := <-out
		for _, item := range result.Items {
			log.Printf("Get Items: %v\n", item)
		}

		// Send new requests to the Scheduler
		for _, r := range result.Requests {
			e.Scheduler.Submit(r)
		}
	}
}

// createWorker starts one goroutine running the worker loop;
// Run calls it WokerCount times.
func createWorker(in chan Request, out chan ParserResult) {
	go func() {
		for {
			request := <-in
			parserResult, err := worker(request)
			// Something went wrong: skip to the next request
			if err != nil {
				continue
			}
			// Send parserResult out
			out <- parserResult
		}
	}()
}
```

Scheduler:

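```go
package scheduler

import "crawler/engine"

// SimpleScheduler feeds a single work channel shared by all workers.
type SimpleScheduler struct {
	workChan chan engine.Request
}

func (s *SimpleScheduler) ConfigureMasterWorkerChan(r chan engine.Request) {
	s.workChan = r
}

// Submit sends from a new goroutine so the engine never blocks here
// while the workers are themselves blocked sending results to the engine.
func (s *SimpleScheduler) Submit(r engine.Request) {
	go func() { s.workChan <- r }()
}
```

Worker: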

```go
package engine

import (
	"log"

	"crawler/fetcher" // assumed import path for the fetcher package
)

func worker(r Request) (ParserResult, error) {
	log.Printf("fetching url:%s\n", r.Url)

	// Crawl the page
	body, err := fetcher.Fetch(r.Url)
	if err != nil {
		log.Printf("fetch url: %s; err: %v\n", r.Url, err)
		// Return the error; createWorker skips to the next URL
		return ParserResult{}, err
	}

	// Parse the crawled page
	return r.ParserFunc(body), nil
}
```

main function:

```go
package main

import (
	"crawler/engine"
	"crawler/scheduler"
	"crawler/zhenai/parser"
)

func main() {
	e := &engine.ConcurrentEngine{
		Scheduler:  &scheduler.SimpleScheduler{},
		WokerCount: 100,
	}
	e.Run(engine.Request{
		Url:        "http://www.zhenai.com/zhenghun",
		ParserFunc: parser.ParseCityList,
	})
}
```

Here we start 100 workers. Running the crawler again and checking network utilization, the download rate rises to more than 3 Mbps.

Because the full code is long, readers who want it can follow the WeChat public account and reply "go crawler".
