Target website: Maoyan Movies (maoyan.com)

Main steps

- Crawl the detail-page URL of each movie from the film list
- Fetch the page source of each movie
- Parse the source and download the woff font file it references
- Use fontTools to render each digit glyph as an image
- Recognize the digit in each image with a machine-learning model
- Replace the obfuscated characters in the page source with the recognized digits
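The last step can be done in a single regular-expression pass over the escaped HTML fragment. The sketch below assumes the recognition step returns a dict keyed by the decimal code point (as `verify_img` in the code below builds it); the code points and digits in `GLYPH_TO_DIGIT` are made up for illustration.

```python
import re

# Hypothetical mapping from the recognition step:
# decimal code point of each obfuscated glyph -> recognized digit
GLYPH_TO_DIGIT = {"58123": "7", "59337": "0", "61400": "3"}

# Matches decimal character references such as "&#58123;"
ENTITY = re.compile(r"&#(\d+);")


def decode_numbers(fragment: str, glyph_to_digit: dict) -> str:
    """Replace every entity that maps to a digit; leave unknown entities untouched."""
    return ENTITY.sub(lambda m: glyph_to_digit.get(m.group(1), m.group(0)), fragment)


print(decode_numbers("&#58123;.&#59337;", GLYPH_TO_DIGIT))  # 7.0
```

Compared with looping over every key and every field, one `re.sub` call visits each entity exactly once.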

Pitfalls

- When I used pyquery's `.text()` method, the HTML entities were automatically unescaped, so replacing the `&#...;` codes failed and a pile of garbled characters was printed instead. I had to switch to lxml.
- At first I followed many blog posts online that convert the woff font file to XML and compare the glyf data, but after examining several fonts I found that even the x and y coordinates of the same digit are not exactly the same from one font file to the next, so matching on exact coordinates is unreliable.
- I was not yet familiar with multithreading.
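A small stdlib-only illustration of the first pitfall. It uses `html.unescape` as a stand-in for what pyquery's `.text()` did in the author's case; the code point 58123 (U+E30B, a private-use character) is an arbitrary value chosen for the example.

```python
from html import unescape

raw = '<span class="stonefont">&#58123;.&#59337;</span>'
entity = "&#58123;"

# On the raw markup the entity is still a literal substring, so str.replace works
print(entity in raw)       # True

# Once unescaped, the entity has become a single private-use character,
# so searching for the "&#58123;" text finds nothing
text = unescape("&#58123;.&#59337;")
print(entity in text)      # False
print(hex(ord(text[0])))   # 0xe30b

# Workaround if the text is already unescaped: key the replacement by the character itself
decoded = text.replace(chr(58123), "7").replace(chr(59337), "0")
print(decoded)             # 7.0
```

Either approach works as long as the keys and the text are in the same form: entities with entities, characters with characters.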

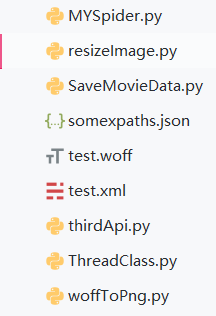

The project consists of the files below; the code for each file is as follows.

Myspider.py

```python
import json
import os
import re
import shutil
import time
from html import unescape

import fake_useragent
import requests
from lxml import etree
from pyquery import PyQuery as pq

from resizeImage import resize_img
from SaveMovieData import SaveInfo
from ThreadClass import SpiderThread
from woffToPng import woff_to_image

# Coroutines were a bit hard to get working here, so multithreading is used for now

# Collected movie detail-page URLs
film_urls = []


def verify_img(img_dir):
    """Send each glyph image to the local recognition service; map code point -> digit."""
    api_url = "http://127.0.0.1:6000/b"
    img_to_num = {}
    for file in os.listdir(img_dir):
        file_name = os.path.join(img_dir, file)
        # Resize the image in place
        resize_img(file_name, file_name)
        with open(file_name, "rb") as f:
            files = {"image_file": ("image_file", f, "application")}
            r = requests.post(url=api_url, files=files, timeout=None)
        if r.status_code == 200:
            # The file name is the glyph name, e.g. "uniE123"; strip "uni" to get the hex code point
            num_id = os.path.splitext(file)[0][3:]
            # Key by the decimal code point, matching the "&#NNNNN;" references in the page
            img_to_num[str(int(num_id, 16))] = r.json().get("value")
    return img_to_num


def find_certain_part(html, xpath_format):
    """Return the first XPath match, or the string "null" if there is none."""
    try:
        return html.xpath(xpath_format)[0]
    except Exception:
        return "null"


def parse_data_by_lxml(source_code, img_to_num, saver):
    html = etree.HTML(source_code)
    with open("somexpaths.json", "r", encoding="utf-8") as f:
        xpaths = json.load(f)

    movie_name = find_certain_part(html, xpaths.get("movie_name"))
    movie_ename = find_certain_part(html, xpaths.get("movie_ename"))
    movie_classes = find_certain_part(html, xpaths.get("movie_classes")).strip()
    movie_length = find_certain_part(html, xpaths.get("movie_length")).strip()
    movie_showtime = find_certain_part(html, xpaths.get("movie_showtime")).strip()

    # The obfuscated numbers sit inside <span class="stonefont">...</span>
    text_pattern = re.compile('.*?class="stonefont">(.*?)</span>')
    data_to_be_replace = []

    # Serialize the elements with etree.tostring so the character references stay escaped
    movie_score = find_certain_part(html, xpaths.get("movie_score"))
    movie_score_num = find_certain_part(html, xpaths.get("movie_score_num"))
    if movie_score != "null":
        movie_score = text_pattern.search(etree.tostring(movie_score).decode("utf8")).group(1)
    if movie_score_num != "null":
        movie_score_num = text_pattern.search(etree.tostring(movie_score_num).decode("utf8")).group(1)
    data_to_be_replace.append(movie_score)
    data_to_be_replace.append(movie_score_num)

    movie_box = find_certain_part(html, xpaths.get("movie_box"))
    if movie_box != "null":
        movie_box = text_pattern.search(etree.tostring(movie_box).decode("utf8")).group(1)
    movie_box_unit = find_certain_part(html, xpaths.get("movie_box_unit"))
    data_to_be_replace.append(movie_box)

    # Make sure every item is a string
    for item in data_to_be_replace:
        assert isinstance(item, str)

    # Replace each "&#NNNNN;" reference with the recognized digit
    for key, value in img_to_num.items():
        new_key = f"&#{key};"
        for i in range(len(data_to_be_replace)):
            if data_to_be_replace[i] == "null":
                continue
            if new_key in data_to_be_replace[i]:
                data_to_be_replace[i] = data_to_be_replace[i].replace(new_key, value)
    movie_score, movie_score_num, movie_box = [unescape(item) for item in data_to_be_replace]

    # If there is no score, treat it as 0
    if movie_score == "null":
        movie_score = "0"
    if movie_box != "null":
        movie_box = movie_box + movie_box_unit.strip()

    movie_brief_info = find_certain_part(html, xpaths.get("movie_brief_info"))
    assert isinstance(movie_brief_info, str)

    # Slightly fragile: the first name in the list is assumed to be the director
    movie_director, *movie_actors = [
        item.strip() for item in html.xpath(xpaths.get("movie_director_and_actress"))
    ]
    movie_actors = ",".join(movie_actors)

    movie_comments = {}
    try:
        names = html.xpath(xpaths.get("commenter_names"))
        comments = html.xpath(xpaths.get("commenter_comment"))
        assert len(names) == len(comments)
        for name, comment in zip(names, comments):
            movie_comments[name] = comment
    except Exception:
        pass

    save_id = saver.insert_dict({
        "name": movie_name,
        "alias": movie_ename,
        "category": movie_classes,
        "duration": movie_length,
        "release_time": movie_showtime,
        "score": float(movie_score),
        "score_count": movie_score_num,
        "box_office": movie_box,
        "brief_introduction": movie_brief_info,
        "director": movie_director,
        "actors": movie_actors,
        "hot_reviews": movie_comments,
    })
    print(f"{save_id} saved successfully")


# Fetch the page source, download and recognize its font, then parse the page and replace the digits
def get_one_film(url, ua, film_id, saver):
    headers = {
        "User-Agent": ua,
        "Host": "maoyan.com"
    }
    r = requests.get(url=url, headers=headers)
    if r.status_code == 200:
        source_code = r.text
        # The page references the font as a protocol-relative url('//....woff')
        font_pattern = re.compile(r"url\('(.*?\.woff)'\)")
        font_url = "http:" + font_pattern.search(source_code).group(1).strip()
        # The font URL carries its own host, so drop the maoyan.com Host header
        del headers["Host"]
        res = requests.get(url=font_url, headers=headers)
        # Download the font and recognize its glyphs
        if res.status_code == 200:
            # Start from a clean per-film directory (shutil.rmtree also works outside Windows,
            # unlike "rmdir /s /q")
            if os.path.exists(film_id):
                shutil.rmtree(film_id)
            img_dir = os.path.join(film_id, "images")
            os.makedirs(img_dir)
            woff_path = os.path.join(film_id, "temp.woff")
            with open(woff_path, "wb") as f:
                f.write(res.content)
            woff_to_image(woff_path, img_dir)
            # Recognize directly for now (coroutines may be tried later);
            # the result is a dict {"code point": "digit"}
            img_to_num = verify_img(img_dir)
            # Delete the temporary files
            shutil.rmtree(film_id)
            # Parse the page and replace the obfuscated numbers
            parse_data_by_lxml(source_code, img_to_num, saver)


def get_urls(url, ua, showType, offset):
    base_url = "https://maoyan.com"
    headers = {
        "User-Agent": ua,
        "Host": "maoyan.com"
    }
    params = {
        "showType": showType,
        "offset": offset
    }
    urls = []
    r = requests.get(url=url, headers=headers, params=params)
    if r.status_code == 200:
        doc = pq(r.text)
        for re_url in doc("#app div div[class='movies-list'] dl dd div[class='movie-item'] a[target='_blank']").items():
            urls.append(base_url + re_url.attr("href"))
        film_urls.extend(urls)
        print(f"{len(film_urls)} URLs captured so far")


if __name__ == "__main__":
    ua = fake_useragent.UserAgent()

    # Stage 1: collect detail-page URLs from 68 listing pages (offset advances by 30)
    tasks_one = []
    try:
        for i in range(68):
            tasks_one.append(SpiderThread(get_urls, args=("https://maoyan.com/films", ua.random, "3", str(30 * i))))
        for task in tasks_one:
            task.start()
        for task in tasks_one:
            task.join()
    except Exception as e:
        print(e.args)

    saver = SaveInfo()
    # The film id is the last path segment of the detail-page URL
    film_ids = [url.split("/")[-1] for url in film_urls]
    print(f"Captured {len(film_urls)} movie URLs in total")

    # Stage 2: crawl each movie, pausing 5 seconds after every 4 thread starts
    tasks_two = []
    count = 0
    try:
        for film_url, film_id in zip(film_urls, film_ids):
            tasks_two.append(SpiderThread(get_one_film, args=(film_url, ua.random, film_id, saver)))
        for task in tasks_two:
            task.start()
            count += 1
            if count % 4 == 0:
                time.sleep(5)
        for task in tasks_two:
            task.join()
    except Exception as e:
        print(e.args)
    print("Crawling finished")
```
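The start-four-then-sleep loop above limits how fast threads start, not how many run at once, and an exception raised inside a thread's `run()` never reaches the `try` around `start()`/`join()`. A hedged alternative (not what the author used) is `concurrent.futures.ThreadPoolExecutor`, which caps the number of concurrent workers and re-raises worker exceptions from `future.result()`. `fake_get_one_film` and `crawl_all` are hypothetical names; in the real spider you would submit `get_one_film` with its four arguments.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def fake_get_one_film(film_id: str) -> str:
    """Hypothetical stand-in for get_one_film: pretend to crawl one page."""
    time.sleep(0.01)
    return film_id


def crawl_all(film_ids, max_workers=4):
    """Run at most max_workers crawls at once; collect results and errors separately."""
    results, errors = [], []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fake_get_one_film, fid): fid for fid in film_ids}
        for future in as_completed(futures):
            try:
                results.append(future.result())
            except Exception as exc:  # one failing movie does not stop the rest
                errors.append((futures[future], exc))
    return results, errors


results, errors = crawl_all([str(i) for i in range(10)])
print(len(results), len(errors))  # 10 0
```

Results arrive in completion order, so sort them if order matters.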

resizeImage.py

```python
import os

from PIL import Image


def resize_img(img_path, write_path):
    # Target size (width, height) in pixels
    crop_size = (120, 200)
    img = Image.open(img_path)
    # Image.ANTIALIAS was removed in Pillow 10; it was an alias of Image.LANCZOS
    new_img = img.resize(crop_size, Image.LANCZOS)
    new_img.save(write_path, quality=100)


if __name__ == "__main__":
    os.makedirs("resized_images", exist_ok=True)
    for root, dirs, files in os.walk("verify_images"):
        for file in files:
            img_path = os.path.join(root, file)
            write_path = os.path.join("resized_images", file)
            resize_img(img_path, write_path)
```

SaveMovieData.py

```python
import pymongo


class SaveInfo:
    """Thin wrapper that inserts movie records into a MongoDB collection."""

    def __init__(self, host="localhost", port=27017, db="MovieSpider",
                 collection="maoyan"):
        # MongoClient is thread-safe, so one SaveInfo can be shared by all threads
        self._client = pymongo.MongoClient(host=host, port=port)
        self._db = self._client[db]
        self._collection = self._db[collection]

    def insert_dict(self, data: dict):
        result = self._collection.insert_one(data)
        return result.inserted_id
```

woffToPng.py

```python
import os

from fontTools.pens.basePen import BasePen
from fontTools.ttLib import TTFont
from reportlab.graphics import renderPM
from reportlab.graphics.shapes import Drawing, Group, Path
from reportlab.lib import colors


class ReportLabPen(BasePen):
    """A pen for drawing onto a reportlab.graphics.shapes.Path object."""

    def __init__(self, glyphSet, path=None):
        BasePen.__init__(self, glyphSet)
        if path is None:
            path = Path()
        self.path = path

    def _moveTo(self, p):
        (x, y) = p
        self.path.moveTo(x, y)

    def _lineTo(self, p):
        (x, y) = p
        self.path.lineTo(x, y)

    def _curveToOne(self, p1, p2, p3):
        (x1, y1) = p1
        (x2, y2) = p2
        (x3, y3) = p3
        self.path.curveTo(x1, y1, x2, y2, x3, y3)

    def _closePath(self):
        self.path.closePath()


def woff_to_image(fontName, imagePath, fmt="png"):
    """Render each digit glyph of the font to <imagePath>/<glyph name>.<fmt>."""
    font = TTFont(fontName)
    gs = font.getGlyphSet()
    glyphNames = font.getGlyphNames()
    for i in glyphNames:
        # Skip the non-digit glyphs (the '.notdef' / '.null' equivalents in this font)
        if i == 'x' or i == "glyph00000":
            continue
        g = gs[i]
        pen = ReportLabPen(gs, Path(fillColor=colors.black, strokeWidth=5))
        g.draw(pen)
        w, h = 600, 1000
        group = Group(pen.path)
        # Move the glyph up 200 units inside the 600 x 1000 canvas
        group.translate(0, 200)
        d = Drawing(w, h)
        d.add(group)
        imageFile = os.path.join(imagePath, f"{i}.{fmt}")
        renderPM.drawToFile(d, imageFile, fmt)
```
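The image files are named after the glyphs, and `verify_img` turns those names back into the keys used for replacement. A minimal sketch of that conversion, assuming the glyph names follow the `uniXXXX` convention that the `[3:]` slice in `verify_img` implies; `glyph_name_to_entity` is a hypothetical helper name.

```python
def glyph_name_to_entity(glyph_name: str) -> str:
    """Turn a glyph name such as "uniE0A3" into the decimal reference "&#57507;"."""
    # Strip the "uni" prefix and parse the rest as a hex code point,
    # the same conversion verify_img performs with int(num_id, 16)
    code_point = int(glyph_name[3:], 16)
    return f"&#{code_point};"


print(glyph_name_to_entity("uniE0A3"))  # &#57507;
```

The decimal form is used because that is how the references appear in the serialized HTML fragments the crawler searches.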

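ThreadClass.py

```python
import threading


class SpiderThread(threading.Thread):
    """Thread that runs func(*args) and keeps its return value in self.result."""

    def __init__(self, func, args=()):
        super().__init__()
        self.func = func
        self.args = args

    def run(self) -> None:
        self.result = self.func(*self.args)

    # Equivalent to no multithreading: calling join() in a getter right after
    # start() makes each thread finish before the next one starts
    # def get_result(self):
    #     threading.Thread.join(self)
    #     try:
    #         return self.result
    #     except Exception as e:
    #         print(e.args)
    #         return None
```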
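One way to keep a result getter without serializing the threads is to start every thread first, join them all, and only then read results. The sketch below is a hypothetical variant (`SafeSpiderThread` is not part of the project) that also stores any exception raised in `run()`, since such exceptions never propagate to the thread that calls `join()`.

```python
import threading


class SafeSpiderThread(threading.Thread):
    """Hypothetical SpiderThread variant that records the result or the exception."""

    def __init__(self, func, args=()):
        super().__init__()
        self.func = func
        self.args = args
        self.result = None
        self.error = None

    def run(self) -> None:
        try:
            self.result = self.func(*self.args)
        except Exception as exc:
            # Store the exception so the caller can inspect it after join()
            self.error = exc

    def get_result(self):
        """Call only after join(); re-raises the worker's exception, if any."""
        if self.error is not None:
            raise self.error
        return self.result


# Start every thread first, then join, so the work actually overlaps
threads = [SafeSpiderThread(pow, args=(2, n)) for n in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()
results = [t.get_result() for t in threads]
print(results)  # [1, 2, 4, 8, 16]

bad = SafeSpiderThread(int, args=("not a number",))
bad.start()
bad.join()
print(type(bad.error).__name__)  # ValueError
```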

somexpaths.json

```json
{
    "movie_name": "//body//div[@class='banner']//div//div[@class='movie-brief-container']//h3/text()",
    "movie_ename": "//body//div[@class='banner']//div//div[@class='movie-brief-container']//div/text()",
    "movie_classes": "//body//div[@class='banner']//div//div[@class='movie-brief-container']//ul//li[1]/text()",
    "movie_length": "//body//div[@class='banner']//div//div[@class='movie-brief-container']//ul//li[2]/text()",
    "movie_showtime": "//body//div[@class='banner']//div//div[@class='movie-brief-container']//ul//li[3]/text()",
    "movie_score": "//body//div[@class='banner']//div//div[@class='movie-stats-container']//div//span[@class='index-left info-num ']//span",
    "movie_score_num": "//body//div[@class='banner']//div//div[@class='movie-stats-container']//div//span[@class='score-num']//span",
    "movie_box": "//body//div[@class='wrapper clearfix']//div//div//div//div[@class='movie-index-content box']//span[@class='stonefont']",
    "movie_box_unit": "//body//div[@class='wrapper clearfix']//div//div//div//div[@class='movie-index-content box']//span[@class='unit']/text()",
    "movie_brief_info": "//body//div[@class='container']//div//div//div//div[@class='tab-content-container']//div//div//div[@class='mod-content']//span[@class='dra']/text()",
    "movie_director_and_actress": "//body//div[@id='app']//div//div//div//div[@class='tab-content-container']//div//div[@class='mod-content']//div//div//ul//li//div//a/text()",
    "commenter_names": "//body//div[@id='app']//div//div//div//div//div[@class='module']//div[@class='mod-content']//div[@class='comment-list-container']//ul//li//div//div[@class='user']//span[@class='name']/text()",
    "commenter_comment": "//body//div[@id='app']//div//div//div//div//div[@class='module']//div[@class='mod-content']//div[@class='comment-list-container']//ul//li//div[@class='main']//div[@class='comment-content']/text()"
}
```