Why use distributed locks? Before answering that question, let's look at a business scenario.

Why use distributed locks?

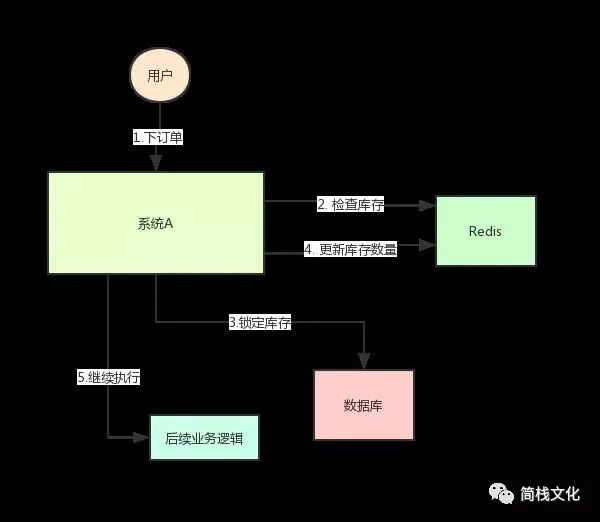

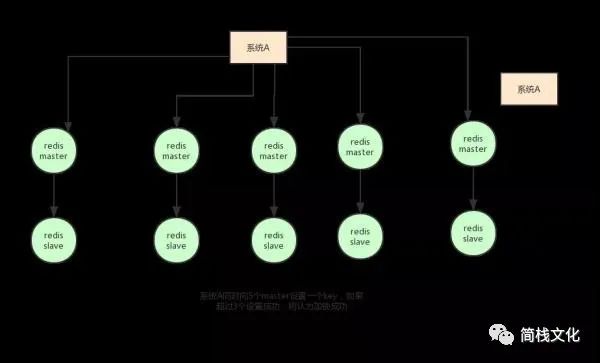

System A is an e-commerce system that is currently deployed on a single machine. It exposes an interface for users to place orders, but before an order is placed the inventory must be checked to ensure that it is sufficient.

Because the system handles a certain amount of concurrency, the inventory of each product is loaded into Redis in advance, and the inventory in Redis is updated when a user places an order.

The system architecture is as follows:

[Figure: system architecture]

But this causes a problem. Suppose that at a certain moment the inventory of a product in Redis is 1.

Two requests arrive at the same time. One of them has executed step 3 in the figure above, so the inventory in the database has been updated to 0, but step 4 has not been executed yet.

The other request reaches step 2, finds that the inventory is still 1, and continues to step 3. As a result, two items are sold although only one was in stock.

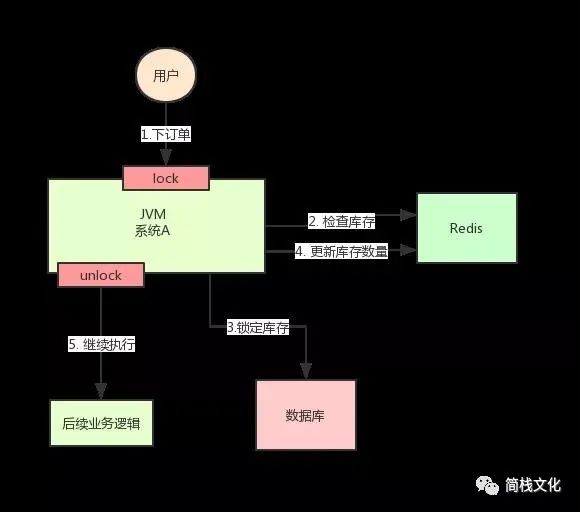

This is obviously wrong! It is a typical inventory oversold problem. An obvious solution comes to mind: guard steps 2, 3 and 4 with a lock, so that another thread can only enter step 2 after they have all finished.

http://static.cyblogs.com/ce0fee95ef6880c9ac5ba670fe5be268.jpg

As shown in the figure above, acquire a lock with Java's synchronized keyword or ReentrantLock before executing step 2, and release it after executing step 4.

In this way, steps 2, 3 and 4 are "locked", and multiple threads can only execute them serially.
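The single-machine locking described above can be sketched roughly as follows. This is only a minimal illustration: the class `LocalLockOrderService` and its methods are hypothetical, and a plain `int` field stands in for the inventory that the article keeps in Redis and the database.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical single-JVM order service; the int field stands in for the real inventory store.
class LocalLockOrderService {
    private final ReentrantLock lock = new ReentrantLock();
    private int stock;
    private int sold;

    LocalLockOrderService(int initialStock) {
        this.stock = initialStock;
    }

    // Steps 2 to 4 (check stock, place the order, update stock) run under one lock.
    boolean placeOrder() {
        lock.lock();
        try {
            if (stock <= 0) {
                return false; // nothing left to sell
            }
            stock--;
            sold++;
            return true;
        } finally {
            lock.unlock();
        }
    }

    int soldCount() {
        lock.lock();
        try {
            return sold;
        } finally {
            lock.unlock();
        }
    }

    // Fires `requests` concurrent orders at one service instance and returns how many succeeded.
    static int simulate(int initialStock, int requests) {
        LocalLockOrderService service = new LocalLockOrderService(initialStock);
        ExecutorService pool = Executors.newFixedThreadPool(8);
        CountDownLatch done = new CountDownLatch(requests);
        for (int i = 0; i < requests; i++) {
            pool.execute(() -> {
                try {
                    service.placeOrder();
                } finally {
                    done.countDown();
                }
            });
        }
        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        return service.soldCount();
    }

    public static void main(String[] args) {
        // With a stock of 1 and 100 concurrent requests, exactly one order goes through.
        System.out.println("sold = " + simulate(1, 100));
    }
}
```

Because every request in the same JVM goes through the same `ReentrantLock`, the check and the update can never interleave, so no more items are sold than are in stock.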

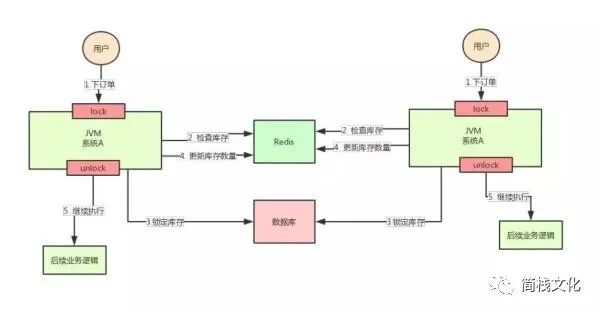

But the good times don't last. The concurrency of the whole system soars and one machine can no longer handle it, so a second machine is added, as shown in the following figure:

http://static.cyblogs.com/99f3874bd484b4ea8000ca41023be3dc.jpg-wh_600x-s_592420448.jpg

After adding the machine, the system looks like the figure above, and a new problem appears. Suppose the requests of two users arrive at the same time but land on different machines. The two requests can then execute simultaneously, and the oversold problem is back.

Why? Because the two instances of system A in the figure run in two different JVMs, the lock each one takes is only effective for threads in its own JVM and has no effect on threads in the other JVM.

So the problem is that the native lock mechanisms provided by Java fail in a multi-machine deployment, because the locks taken on the two machines are not the same lock (they live in different JVMs).
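A small sketch can make this concrete. Two separate `ReentrantLock` objects stand in for the locks of the two JVMs (an assumption for illustration only, since a real deployment would be two processes). The class and method names are hypothetical.

```java
import java.util.concurrent.locks.ReentrantLock;

// Two lock objects model "the lock on machine 1" and "the lock on machine 2".
class SeparateJvmLocks {

    // Tries to take the given lock from a new thread and reports whether that succeeded.
    static boolean canAcquireFromOtherThread(ReentrantLock lock) {
        boolean[] result = new boolean[1];
        Thread worker = new Thread(() -> {
            if (lock.tryLock()) {
                result[0] = true;
                lock.unlock();
            }
        });
        worker.start();
        try {
            worker.join(); // join makes the worker's write to result visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return result[0];
    }

    // Returns {same lock acquirable?, other machine's lock acquirable?} while machine 1 holds its lock.
    static boolean[] demo() {
        ReentrantLock lockOnMachine1 = new ReentrantLock();
        ReentrantLock lockOnMachine2 = new ReentrantLock();
        lockOnMachine1.lock();
        try {
            boolean sameLock = canAcquireFromOtherThread(lockOnMachine1);
            boolean otherLock = canAcquireFromOtherThread(lockOnMachine2);
            return new boolean[] {sameLock, otherLock};
        } finally {
            lockOnMachine1.unlock();
        }
    }

    public static void main(String[] args) {
        boolean[] r = demo();
        System.out.println("same lock acquirable: " + r[0]);     // false
        System.out.println("other lock acquirable: " + r[1]);    // true
    }
}
```

Holding the lock on "machine 1" blocks other threads that use the same lock object, but does nothing to stop a request that uses the lock on "machine 2".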

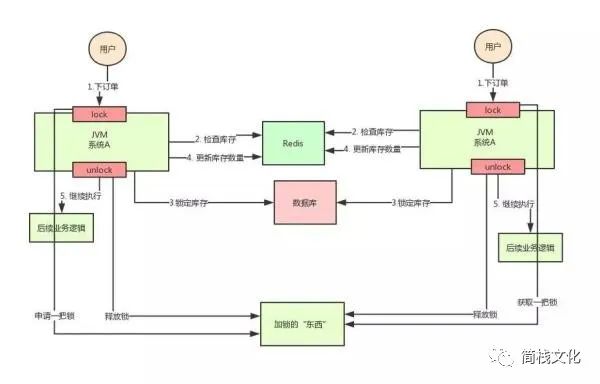

So as long as we make sure that the two machines take the same lock, the problem is solved. This is where the distributed lock makes its entrance.

The idea of a distributed lock is to provide one globally unique "thing" in the whole system from which locks are obtained. Every system asks this "thing" for the lock when it needs one, so that different systems are effectively using the same lock.

This "thing" can be Redis, Zookeeper or a database. A text description is not very intuitive, so let's look at the following figure:

http://static.cyblogs.com/3667d7cff8435d47f5a187ad596bdb09.jpg-wh_600x-s_1198484587.jpg

From the analysis above we know that, in the inventory oversold scenario, Java's native locking mechanisms cannot guarantee thread safety once the system is deployed on multiple machines, so we need a distributed locking scheme.

So how do we implement a distributed lock? Read on!

Redis based distributed lock

The analysis above explains why distributed locks are needed. Now let's look at how to implement one in practice.

"① A common solution is to use Redis as a distributed lock"

The idea is as follows: set a value in Redis to indicate that the lock is held, and delete the Key when the lock is released.

The specific commands are as follows:

// Acquire lock

// NX: set the key only if it does not exist; if it already exists, nil is returned and the lock is not acquired. PX: expiration time in milliseconds

SET anyLock unique_value NX PX 30000

// Release lock: by executing a Lua script

// Releasing a lock takes two commands (GET, then DEL), which are not atomic when sent separately

// so Redis's Lua scripting support is used: Redis executes a Lua script atomically

if redis.call("get",KEYS[1]) == ARGV[1] then

return redis.call("del",KEYS[1])

else

return 0

end

There are several key points to this approach:

- Be sure to use the SET key value NX PX milliseconds command. If you first set the value and then set the expiration time in a separate command, the operation is not atomic: the client may crash before the expiration time is set, resulting in a deadlock (the Key exists forever).

- The value must be unique, so that before deleting the Key on release the client can verify that the stored value is the one it set when locking.

This avoids the following situation: suppose client A acquires the lock with an expiration time of 30s, and 35s later the lock has already expired automatically when A goes to release it. B may have acquired the lock in the meantime. Thanks to the value check, client A cannot delete B's lock.
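The scenario above can be replayed with a small in-memory model. This is not a Redis client: `FakeRedisLockStore` is a hypothetical stand-in that only mimics the behaviour of `SET key value NX PX` and of the compare-and-delete Lua script, with the current time passed in explicitly so that the run is deterministic.

```java
import java.util.HashMap;
import java.util.Map;

// In-memory stand-in for one Redis node; time is supplied by the caller in milliseconds.
class FakeRedisLockStore {
    private static final class Entry {
        final String value;
        final long expiresAtMillis;

        Entry(String value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> data = new HashMap<>();

    private void evictIfExpired(String key, long nowMillis) {
        Entry e = data.get(key);
        if (e != null && nowMillis >= e.expiresAtMillis) {
            data.remove(key);
        }
    }

    // Models: SET key value NX PX ttlMillis
    synchronized boolean setIfAbsent(String key, String value, long ttlMillis, long nowMillis) {
        evictIfExpired(key, nowMillis);
        if (data.containsKey(key)) {
            return false;
        }
        data.put(key, new Entry(value, nowMillis + ttlMillis));
        return true;
    }

    // Models the release script: delete the key only if it still holds the caller's value.
    synchronized boolean deleteIfValueMatches(String key, String value, long nowMillis) {
        evictIfExpired(key, nowMillis);
        Entry e = data.get(key);
        if (e == null || !e.value.equals(value)) {
            return false;
        }
        data.remove(key);
        return true;
    }

    synchronized String get(String key, long nowMillis) {
        evictIfExpired(key, nowMillis);
        Entry e = data.get(key);
        return e == null ? null : e.value;
    }

    public static void main(String[] args) {
        FakeRedisLockStore store = new FakeRedisLockStore();
        System.out.println(store.setIfAbsent("anyLock", "client-A", 30_000, 0));      // true: A locks
        System.out.println(store.setIfAbsent("anyLock", "client-B", 30_000, 31_000)); // true: A's lock expired
        System.out.println(store.deleteIfValueMatches("anyLock", "client-A", 35_000)); // false: not A's lock
        System.out.println(store.get("anyLock", 35_000));                              // client-B
    }
}
```

Without the value check, A's late release at 35s would simply delete the key and silently remove B's lock.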

Besides how the client implements the distributed lock, the Redis deployment also has to be considered.

Redis has three deployment modes:

- Standalone mode

- Master-slave + Sentinel election mode

- Redis Cluster mode

The disadvantage of using Redis as a distributed lock is that, in standalone mode, Redis is a single point of failure: as soon as it fails, no lock can be acquired.

In master-slave mode, the lock is written to only one node. Even with high availability provided by Sentinel, if the master fails and a master-slave switch occurs before the lock has been replicated, the lock may be lost.

With these issues in mind, the author of Redis proposed the RedLock algorithm.

The algorithm is roughly as follows. Suppose Redis is deployed as 5 master nodes (the Redlock description assumes independent masters rather than the nodes of a single Redis Cluster).

Obtain a lock through the following steps:

- Get the current timestamp in milliseconds.

- Try to create the lock on each master node in turn. The timeout for each attempt is kept short, usually tens of milliseconds, so that an unreachable node does not hold up the client.

- The lock must be established on a majority of the nodes. For example, with 5 nodes, 3 are required (n / 2 + 1).

- The client calculates how long it took to establish the lock. If that time is less than the lock's expiration time, the lock is established successfully.

- If establishing the lock fails, delete the lock on every node in turn.

- As long as someone else holds the distributed lock, you have to keep polling to try to obtain it.
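The success condition in the steps above (a majority of nodes, reached before the lock expires) can be written down as a small calculation. This is a simplified sketch: the class `RedlockDecision` and its parameters are hypothetical, and the clock-drift allowance that the full Redlock description also subtracts from the validity time is left out.

```java
// Simplified model of the Redlock success check; no network calls are made.
class RedlockDecision {

    // Majority of n nodes: n / 2 + 1 (integer division).
    static int quorum(int nodeCount) {
        return nodeCount / 2 + 1;
    }

    // The lock counts as acquired only if a majority of nodes accepted it
    // and the time spent acquiring it is still less than the lock's expiration time.
    static boolean acquired(int nodeCount, int nodesLocked, long lockTtlMillis, long elapsedMillis) {
        long remainingValidityMillis = lockTtlMillis - elapsedMillis;
        return nodesLocked >= quorum(nodeCount) && remainingValidityMillis > 0;
    }

    public static void main(String[] args) {
        System.out.println(quorum(5));                     // 3
        System.out.println(acquired(5, 3, 30_000, 120));   // true: majority reached in time
        System.out.println(acquired(5, 2, 30_000, 120));   // false: no majority
        System.out.println(acquired(5, 5, 30_000, 30_000)); // false: lock already expired
    }
}
```

When `acquired` returns false, the client is expected to release the lock on every node, as the fifth step says.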

However, this algorithm is still controversial: it may have a number of problems, and it cannot guarantee that the locking process is always correct.

http://static.cyblogs.com/da6b5468501519f5688e47824b56ba21.jpg-wh_600x-s_4091681668.jpg

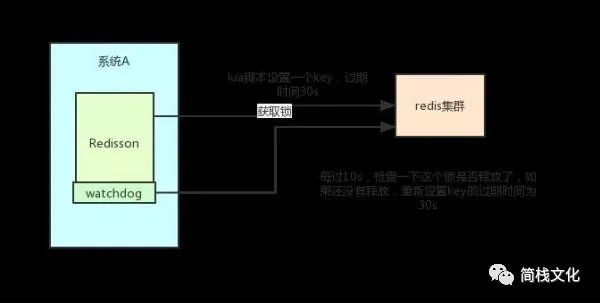

"② another way: Redisson"

In addition, the Redis distributed lock can be implemented based on the Redis Client native API, and the open source framework: Redission can also be used.

Redisson is an enterprise level open source Redis Client, which also provides distributed lock support. I also highly recommend it. Why?

Recall the above, if you write code to set a value through Redis, it is set through the following command:

SET anyLock unique_value NX PX 30000

The timeout set here is 30s. If the business logic has not finished after 30s, the Key expires and another thread may acquire the lock.

In that case the first thread has not finished its business logic when the second thread comes in, and thread-safety problems occur again.

So we would have to maintain this expiration time ourselves, which is troublesome. Let's see how Redisson handles it.

First, let's get a feel for how pleasant Redisson is to use:

Config config = new Config();

config.useClusterServers()

.addNodeAddress("redis://192.168.31.101:7001")

.addNodeAddress("redis://192.168.31.101:7002")

.addNodeAddress("redis://192.168.31.101:7003")

.addNodeAddress("redis://192.168.31.102:7001")

.addNodeAddress("redis://192.168.31.102:7002")

.addNodeAddress("redis://192.168.31.102:7003");

RedissonClient redisson = Redisson.create(config);

RLock lock = redisson.getLock("anyLock");

lock.lock();

try {

// business logic goes here

} finally {

lock.unlock();

}

It's that simple. We only need lock() and unlock() from its API to get a distributed lock. Redisson takes care of many details for us:

- Redisson executes its lock commands through Lua scripts, and Redis executes Lua scripts atomically.

- Redisson sets the default expiration time of the lock Key to 30s. What if a client holds the lock for more than 30s?

- Redisson has a "watchdog" for this. After you obtain the lock, it resets the timeout of the Key to 30s every 10s.

In this way, even if the lock is held for a long time, the Key will not expire and let another thread take the lock.

- The watchdog logic also ensures that no deadlock occurs. (If the machine goes down, the watchdog goes with it, so the expiration time of the Key is no longer extended. The Key expires automatically within 30s, and other threads can then obtain the lock.)
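The watchdog behaviour described above can be traced with a small timeline calculation. This is only a model with explicit timestamps, not Redisson code: the class `WatchdogTimeline` is hypothetical, and it assumes the lock is taken at time 0 and renewed every third of the lease, as in the 30s / 10s example.

```java
// Deterministic model of lease renewal: no threads, no Redis, just arithmetic on timestamps.
class WatchdogTimeline {

    // Returns the time (ms) at which the key expires if the holder crashes at crashAtMillis.
    // The lock is taken at t = 0 and renewed to a full lease every leaseMillis / 3 while the holder is alive.
    static long expiryAfterCrash(long leaseMillis, long crashAtMillis) {
        if (leaseMillis < 3) {
            throw new IllegalArgumentException("lease too short to renew in thirds");
        }
        long renewEveryMillis = leaseMillis / 3;
        long expiresAtMillis = leaseMillis; // initial expiration set when the lock is taken
        for (long t = renewEveryMillis; t < crashAtMillis; t += renewEveryMillis) {
            expiresAtMillis = t + leaseMillis; // the watchdog resets the TTL to the full lease
        }
        return expiresAtMillis;
    }

    public static void main(String[] args) {
        // Holder crashes at 45s: the last renewal was at 40s, so the key expires at 70s.
        System.out.println(expiryAfterCrash(30_000, 45_000)); // 70000
        // Holder crashes at 5s, before the first renewal: the key expires at 30s.
        System.out.println(expiryAfterCrash(30_000, 5_000));  // 30000
    }
}
```

In both cases the key disappears at most one full lease after the crash, which is why a dead holder cannot block other clients forever.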

http://static.cyblogs.com/a7fbd80edbe1bc035e0a6e1c3c691ed7.jpg-wh_600x-s_1313345474.jpg

The implementation code is posted here:

// Lock logic

private <T> RFuture<Long> tryAcquireAsync(long leaseTime, TimeUnit unit, final long threadId) {

if (leaseTime != -1) {

return tryLockInnerAsync(leaseTime, unit, threadId, RedisCommands.EVAL_LONG);

}

// Call a Lua script that sets the key and its expiration time

RFuture<Long> ttlRemainingFuture = tryLockInnerAsync(commandExecutor.getConnectionManager().getCfg().getLockWatchdogTimeout(), TimeUnit.MILLISECONDS, threadId, RedisCommands.EVAL_LONG);

ttlRemainingFuture.addListener(new FutureListener<Long>() {

@Override

public void operationComplete(Future<Long> future) throws Exception {

if (!future.isSuccess()) {

return;

}

Long ttlRemaining = future.getNow();

// lock acquired

if (ttlRemaining == null) {

// Watchdog logic

scheduleExpirationRenewal(threadId);

}

}

});

return ttlRemainingFuture;

}

<T> RFuture<T> tryLockInnerAsync(long leaseTime, TimeUnit unit, long threadId, RedisStrictCommand<T> command) {

internalLockLeaseTime = unit.toMillis(leaseTime);

return commandExecutor.evalWriteAsync(getName(), LongCodec.INSTANCE, command,

"if (redis.call('exists', KEYS[1]) == 0) then " +

"redis.call('hset', KEYS[1], ARGV[2], 1); " +

"redis.call('pexpire', KEYS[1], ARGV[1]); " +

"return nil; " +

"end; " +

"if (redis.call('hexists', KEYS[1], ARGV[2]) == 1) then " +

"redis.call('hincrby', KEYS[1], ARGV[2], 1); " +

"redis.call('pexpire', KEYS[1], ARGV[1]); " +

"return nil; " +

"end; " +

"return redis.call('pttl', KEYS[1]);",

Collections.<Object>singletonList(getName()), internalLockLeaseTime, getLockName(threadId));

}

// The watchdog will eventually call here

private void scheduleExpirationRenewal(final long threadId) {

if (expirationRenewalMap.containsKey(getEntryName())) {

return;

}

// This task runs after internalLockLeaseTime / 3, i.e. 10s with the 30s default

Timeout task = commandExecutor.getConnectionManager().newTimeout(new TimerTask() {

@Override

public void run(Timeout timeout) throws Exception {

// This operation will reset the expiration time of the key to 30s

RFuture<Boolean> future = renewExpirationAsync(threadId);

future.addListener(new FutureListener<Boolean>() {

@Override

public void operationComplete(Future<Boolean> future) throws Exception {

expirationRenewalMap.remove(getEntryName());

if (!future.isSuccess()) {

log.error("Can't update lock " + getName() + " expiration", future.cause());

return;

}

if (future.getNow()) {

// reschedule itself

// By calling this method recursively, the task keeps rescheduling itself to extend the expiration time

scheduleExpirationRenewal(threadId);

}

}

});

}

}, internalLockLeaseTime / 3, TimeUnit.MILLISECONDS);

if (expirationRenewalMap.putIfAbsent(getEntryName(), new ExpirationEntry(threadId, task)) != null) {

task.cancel();

}

}
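The Lua script inside tryLockInnerAsync is easier to follow when read as ordinary code. The sketch below mirrors its three branches in plain Java with an in-memory map. It is a reading aid under stated assumptions, not Redisson's implementation: the class `ReentrantLockScriptModel`, the explicit `nowMillis` parameter and the owner strings are all hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// In-memory model of one lock key: a hash of owner -> hold count, plus an expiration time.
class ReentrantLockScriptModel {
    private final Map<String, Integer> holdCounts = new HashMap<>();
    private long expiresAtMillis;

    // Returns null when the lock is acquired (the script returns nil),
    // otherwise the remaining time to live of the key in milliseconds (the script returns pttl).
    synchronized Long tryLock(String owner, long leaseMillis, long nowMillis) {
        if (!holdCounts.isEmpty() && nowMillis >= expiresAtMillis) {
            holdCounts.clear(); // the key has expired
        }
        if (holdCounts.isEmpty()) {                      // exists KEYS[1] == 0
            holdCounts.put(owner, 1);                    // hset KEYS[1] ARGV[2] 1
            expiresAtMillis = nowMillis + leaseMillis;   // pexpire KEYS[1] ARGV[1]
            return null;
        }
        if (holdCounts.containsKey(owner)) {             // hexists KEYS[1] ARGV[2] == 1
            holdCounts.merge(owner, 1, Integer::sum);    // hincrby KEYS[1] ARGV[2] 1
            expiresAtMillis = nowMillis + leaseMillis;   // pexpire KEYS[1] ARGV[1]
            return null;
        }
        return expiresAtMillis - nowMillis;              // pttl KEYS[1]
    }

    synchronized int holdCount(String owner) {
        return holdCounts.getOrDefault(owner, 0);
    }

    public static void main(String[] args) {
        ReentrantLockScriptModel lock = new ReentrantLockScriptModel();
        System.out.println(lock.tryLock("client-1:thread-1", 30_000, 0));     // null: acquired
        System.out.println(lock.tryLock("client-1:thread-1", 30_000, 1_000)); // null: re-entered
        System.out.println(lock.holdCount("client-1:thread-1"));              // 2
        System.out.println(lock.tryLock("client-2:thread-7", 30_000, 2_000)); // 29000: held by someone else
    }
}
```

The hash field per owner is what makes the lock reentrant: the same owner increments its count, while any other caller only gets back the remaining time to live.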

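In addition, Redisson also supports the Redlock algorithm, and its usage is just as simple. Note that for Redlock to add any safety, each lock passed to RedissonRedLock should come from a client connected to a different, independent Redis node, whereas the snippet below obtains all three locks from a single client: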

RedissonClient redisson = Redisson.create(config);

RLock lock1 = redisson.getFairLock("lock1");

RLock lock2 = redisson.getFairLock("lock2");

RLock lock3 = redisson.getFairLock("lock3");

RedissonRedLock multiLock = new RedissonRedLock(lock1, lock2, lock3);

multiLock.lock();

multiLock.unlock();

Summary: this section analyzed the concrete implementation and some limitations of using Redis as a distributed lock, and then introduced Redisson, a Redis client framework that I recommend. It leaves you with fewer details to take care of than writing the code yourself.

Implementation of distributed lock based on Zookeeper

Among the common distributed lock implementations, Zookeeper can be used as well as Redis.

Before introducing how Zookeeper (ZK below) implements a distributed lock, let's briefly introduce what ZK is: a centralized service that provides configuration management, distributed coordination and naming.

ZK's model is as follows: ZK contains a series of nodes called Znodes, organized like a file system. Each Znode behaves like a directory: it has a path and can have child nodes.

Znodes have the following characteristics:

- Ordered (sequential) node: given a parent node /lock, we can create child nodes under it, and ZK provides an optional ordering feature.

For example, if we create the child node "/lock/node-" and ask for ordering, ZK automatically appends an increasing integer sequence number when generating the child node.

That is, the first child node created is /lock/node-0000000000, the next is /lock/node-0000000001, and so on.

- Temporary (ephemeral) node: a client can create a temporary node, which ZK deletes automatically when the session ends or times out.

- Event listening (watches): when reading data, we can also set a watch on the node. ZK notifies the client when the node's data or structure changes.

Currently, ZK has the following four events:

- Node creation

- Node deletion

- Node data modification

- Child node change

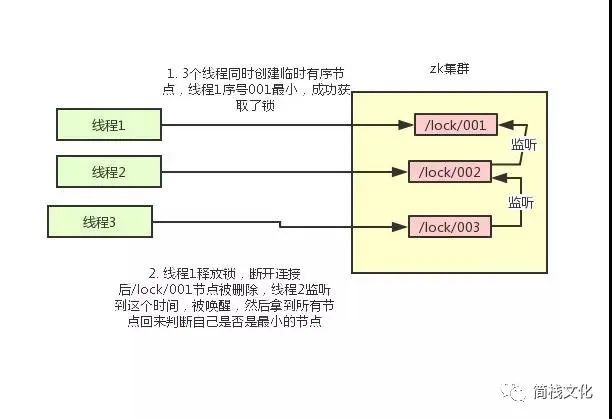

Based on these ZK features, a scheme for implementing a distributed lock with ZK follows naturally:

- Using ZK's temporary and ordered nodes, each thread that wants the lock creates a temporary ordered node in ZK, for example under the /lock/ directory.

- After the node is created, get all the temporary nodes under /lock and check whether the node created by the current thread has the smallest sequence number.

- If it has the smallest sequence number, the thread has acquired the lock.

- If it does not, add an event listener to the node immediately before it in sequence order.

For example, if the current thread's node is /lock/003 and the list of all nodes is [/lock/001, /lock/002, /lock/003], add an event listener to /lock/002.

When the lock is released, the node with the next sequence number is woken up and performs step 3 again to check whether its own sequence number is now the smallest.

For example, when /lock/001 is released, /lock/002 receives the event. The node set is now [/lock/002, /lock/003], so /lock/002 has the smallest sequence number and obtains the lock.
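The decision each client makes in the steps above (do I hold the lock, and if not, which node do I watch?) can be sketched as a pure function. No ZK connection is involved: the class `ZkLockDecision` and its methods are hypothetical, and the child names are plain strings such as those in the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Pure decision logic for the ordered-node lock; fetching children and setting watches is left out.
class ZkLockDecision {

    // Builds a name in the zero-padded, ten-digit style shown earlier, e.g. node-0000000001.
    static String sequentialName(String prefix, int sequence) {
        return String.format("%s%010d", prefix, sequence);
    }

    // Returns null if ourNode has the smallest sequence number (lock acquired),
    // otherwise the name of the node immediately before it, which should be watched.
    static String nodeToWatch(List<String> children, String ourNode) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted); // zero-padded names sort correctly as strings
        int index = sorted.indexOf(ourNode);
        if (index < 0) {
            throw new IllegalArgumentException("our node is not among the children: " + ourNode);
        }
        return index == 0 ? null : sorted.get(index - 1);
    }

    public static void main(String[] args) {
        System.out.println(sequentialName("node-", 1));                            // node-0000000001
        System.out.println(nodeToWatch(Arrays.asList("001", "002", "003"), "003")); // 002
        System.out.println(nodeToWatch(Arrays.asList("002", "003"), "002"));        // null: lock acquired
    }
}
```

Watching only the immediate predecessor means that a release wakes up exactly one waiting client instead of all of them.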

The whole process is as follows:

http://static.cyblogs.com/fb6c27bb22de93cbeb965718156f578f.jpg

That is the implementation idea. The actual code is fairly complex, so it is not posted here.

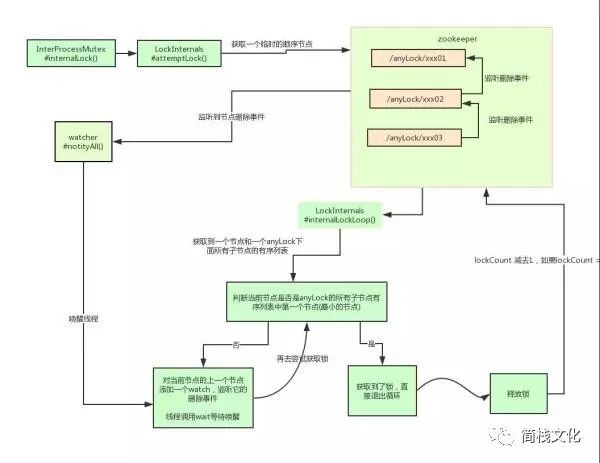

"Introduction to cursor"

Cursor is an open source client of ZK and also provides the implementation of distributed locks. It is also easy to use:

InterProcessMutex interProcessMutex = new InterProcessMutex(client, "/anyLock");

interProcessMutex.acquire();

try {

// business logic goes here

} finally {

interProcessMutex.release();

}

The core source code for implementing distributed locks is as follows:

private boolean internalLockLoop(long startMillis, Long millisToWait, String ourPath) throws Exception

{

boolean haveTheLock = false;

boolean doDelete = false;

try {

if ( revocable.get() != null ) {

client.getData().usingWatcher(revocableWatcher).forPath(ourPath);

}

while ( (client.getState() == CuratorFrameworkState.STARTED) && !haveTheLock ) {

// Gets the sorted collection of all current nodes

List<String> children = getSortedChildren();

// Gets the name of the current node

String sequenceNodeName = ourPath.substring(basePath.length() + 1); // +1 to include the slash

// Judge whether the current node is the smallest node

PredicateResults predicateResults = driver.getsTheLock(client, children, sequenceNodeName, maxLeases);

if ( predicateResults.getsTheLock() ) {

// Get lock

haveTheLock = true;

} else {

// If the lock is not obtained, register a listener for the previous node of the current node

String previousSequencePath = basePath + "/" + predicateResults.getPathToWatch();

synchronized(this){

Stat stat = client.checkExists().usingWatcher(watcher).forPath(previousSequencePath);

if ( stat != null ){

if ( millisToWait != null ){

millisToWait -= (System.currentTimeMillis() - startMillis);

startMillis = System.currentTimeMillis();

if ( millisToWait <= 0 ){

doDelete = true; // timed out - delete our node

break;

}

wait(millisToWait);

}else{

wait();

}

}

}

// else it may have been deleted (i.e. lock released). Try to acquire again

}

}

}

catch ( Exception e ) {

doDelete = true;

throw e;

} finally{

if ( doDelete ){

deleteOurPath(ourPath);

}

}

return haveTheLock;

}

In fact, the underlying principle of Curator for implementing distributed locks is similar to that analyzed above. Here we describe its principle in detail with a diagram:

https://s5.51cto.com/oss/201907/16/84636c96585ff2611ff55e284a57c6ab.jpg-wh_600x-s_3685227374.jpg

Summary: this section introduced ZK's scheme for implementing a distributed lock and the basic use of Curator, ZK's open-source client, and briefly described its implementation principle.

Comparison of advantages and disadvantages of the two schemes

Having covered the two distributed lock implementations, this section discusses the advantages and disadvantages of the Redis and ZK schemes.

Redis distributed locks have the following disadvantages:

- The way a lock is obtained is simple and crude: a client that fails to obtain the lock has to keep retrying, which costs performance.

- Redis is not designed for strong data consistency, so in some extreme cases problems may occur. The lock model is not robust enough.

- Even with the Redlock algorithm, there is no guarantee that the implementation is 100% free of problems in some complex scenarios. For a discussion of Redlock, see How to do distributed locking.
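The retry-based acquisition mentioned in this list typically looks like the loop below. It is a generic sketch rather than the code of any particular client: the class `PollingAcquire` is hypothetical, and the `tryAcquire` supplier stands in for whatever single attempt the client makes (for example one SET ... NX PX call).

```java
import java.util.function.BooleanSupplier;

// Generic polling loop: keep calling tryAcquire until it succeeds or the attempts run out.
class PollingAcquire {

    // Returns the number of attempts used, or -1 if the lock was never obtained.
    static int acquireWithRetry(BooleanSupplier tryAcquire, int maxAttempts, long pauseMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (tryAcquire.getAsBoolean()) {
                return attempt;
            }
            try {
                Thread.sleep(pauseMillis); // every failed attempt costs a round trip plus this pause
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return -1;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // The simulated lock becomes free on the fourth attempt.
        int used = acquireWithRetry(() -> ++calls[0] >= 4, 10, 1);
        System.out.println("attempts used: " + used); // attempts used: 4
    }
}
```

Each waiting client repeats this loop on its own, so the number of requests sent to the lock service grows with the number of waiters.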

On the other hand, many companies implement distributed locks with Redis, and in most cases they never run into the so-called "extremely complex scenarios".

So Redis is still a good choice for a distributed lock. Most importantly, Redis performs well and can support highly concurrent lock acquisition and release.

For ZK distributed locks:

- ZK is designed for distributed coordination and strong consistency. Its lock model is robust and easy to use, which makes it well suited to distributed locks.

- A client that fails to get the lock only needs to add a listener. It does not have to poll, so the performance cost is small.

However, ZK has its own disadvantage: if many clients frequently acquire and release locks, the pressure on the ZK cluster increases.

Summary: Redis and ZK each have advantages and disadvantages, which can serve as reference factors when choosing a technology.

Some suggestions

The previous analysis covered two common ways to implement distributed locks, Redis and ZK, each with its own advantages. How should you choose?

Personally, I prefer the lock implemented with ZK: Redis may have hidden risks that can lead to incorrect data. Still, the choice depends on the specific situation in your company.

If the company already has a ZK cluster available, ZK is preferred. If the company only has a Redis cluster and is not in a position to build a ZK cluster, implementing the lock with Redis is also fine.

In addition, a system designer may choose Redis because the system already uses Redis and they do not want to introduce another external dependency. That is a decision for the system designer to make based on the architecture.

Reference address

- https://developer.51cto.com/art/201907/599642.htm
