Antirez, the author of Redis, unexpectedly released Redis 5.0 with many new features. The biggest is a new data structure, Stream: a powerful, persistent message queue that supports multicast. The author acknowledges that Redis Stream borrows heavily from Kafka's design.

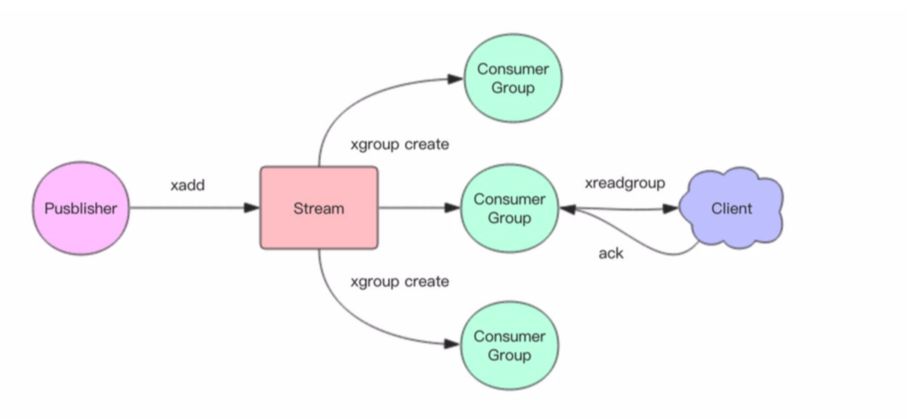

The structure of Redis Stream is shown in the figure above.

A Stream keeps a linked list of messages that chains together every message appended to it. Each message has a unique ID and its own content.

Messages are persistent: their content is still there after Redis restarts (provided RDB or AOF persistence is enabled, as for any other Redis data).

Each Stream has a unique name, which is its Redis key. The Stream is created automatically the first time we append a message to it with the xadd command.

Each Stream can have multiple consumer groups. Each consumer group has a cursor, last_delivered_id, that moves forward along the Stream and records which message the group has consumed up to.

Each consumer group has a unique name within its Stream. Consumer groups are not created automatically: a group must be created with a separate command, xgroup create, which takes the Stream message ID from which consumption should start. This ID is used to initialize the group's last_delivered_id variable.

The state of each consumer group is completely independent of the others. In other words, every message in the Stream is consumed by each consumer group.

A consumer group can have multiple consumers attached, and they compete with each other for messages: whichever consumer reads a message, the group's cursor last_delivered_id moves forward, so each new message goes to only one consumer in the group. Each consumer has a unique name within its group.

Each consumer also has an internal state variable, pending_ids, which records the messages the client has read but not yet acknowledged (acked). While messages remain unacked, their IDs accumulate in this variable; as soon as a message is acked, its ID is removed and the list shrinks.

Redis officially calls pending_ids the PEL, or Pending Entries List. It is a core data structure that guarantees the client consumes every message at least once, so that a message lost in network transit before being processed is not lost for good.
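To make the cursor, competing-consumer, and PEL rules above concrete, here is a minimal in-memory sketch. It is a toy model under simplifying assumptions (tuple IDs, a plain list of entries, and the hypothetical classes `Stream` and `ConsumerGroup`), not Redis's implementation or any client API.

```python
from dataclasses import dataclass, field

# Toy in-memory model of the semantics described above, not Redis's actual
# implementation. IDs are (milliseconds, sequence) tuples, which sort in the
# same order as Redis "ms-seq" IDs.
StreamID = tuple[int, int]


@dataclass
class ConsumerGroup:
    last_delivered_id: StreamID
    # consumer name -> IDs delivered to that consumer but not yet acked (its PEL)
    pending: dict[str, list[StreamID]] = field(default_factory=dict)


@dataclass
class Stream:
    entries: list[tuple[StreamID, dict[str, str]]] = field(default_factory=list)
    groups: dict[str, ConsumerGroup] = field(default_factory=dict)

    def create_group(self, name: str, start: StreamID) -> None:
        # Groups are never created implicitly; the start ID seeds the cursor.
        self.groups[name] = ConsumerGroup(last_delivered_id=start)

    def read_next(self, group: str, consumer: str):
        g = self.groups[group]
        for entry_id, fields in self.entries:
            if entry_id > g.last_delivered_id:
                # Any consumer's read moves the group's shared cursor forward...
                g.last_delivered_id = entry_id
                # ...and the ID stays in that consumer's PEL until it is acked.
                g.pending.setdefault(consumer, []).append(entry_id)
                return entry_id, fields
        return None  # nothing new for this group

    def ack(self, group: str, entry_id: StreamID) -> int:
        # Acks are per group: drop the ID from whichever consumer's PEL holds it.
        for pel in self.groups[group].pending.values():
            if entry_id in pel:
                pel.remove(entry_id)
                return 1
        return 0


s = Stream(entries=[((1, 0), {"name": "laoqian"}), ((2, 0), {"name": "yurui"})])
s.create_group("cg1", (0, 0))
s.create_group("cg2", (0, 0))
print(s.read_next("cg1", "c1"))  # c1 gets the first message
print(s.read_next("cg1", "c2"))  # competing consumer c2 gets the second one
print(s.read_next("cg2", "c1"))  # cg2 is independent: it sees the first message again
```

Because the cursor lives on the group rather than on the consumer, two consumers in cg1 never receive the same new message, while cg2 replays the whole Stream on its own.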

Message ID

A message ID has the form timestampInMillis-sequence, for example 1527846880572-5, which means the message was generated at millisecond timestamp 1527846880572 and has sequence number 5 within that millisecond. Sequence numbers start at 0, so it is the sixth message generated in that millisecond.

Message IDs can be generated automatically by the server or specified by the client, but they must have the form integer-integer, and each newly added message must have a larger ID than the one before it.
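The ID rules can be sketched as follows. `parse_id`, `next_auto_id`, and `check_client_id` are hypothetical helpers; `next_auto_id` assumes the server restarts the sequence at 0 in a new millisecond and otherwise increments the previous ID's sequence, which keeps IDs increasing even if the clock stalls or goes backwards.

```python
def parse_id(text: str) -> tuple[int, int]:
    """Split an "ms-seq" ID into integers so IDs compare numerically.

    Comparing the raw strings would be wrong: "9-0" > "10-0" as text.
    """
    ms, seq = text.split("-")
    return int(ms), int(seq)


def next_auto_id(last: tuple[int, int], now_ms: int) -> tuple[int, int]:
    """Assumed behavior of `*`: a new millisecond restarts the sequence at 0;
    otherwise (same millisecond, or a clock that went backwards) the last
    ID's sequence is incremented, so IDs keep increasing."""
    last_ms, last_seq = last
    if now_ms > last_ms:
        return now_ms, 0
    return last_ms, last_seq + 1


def check_client_id(last: tuple[int, int], new: tuple[int, int]) -> None:
    """A client-specified ID must be strictly greater than the previous one."""
    if new <= last:
        raise ValueError(f"{new[0]}-{new[1]} is not greater than {last[0]}-{last[1]}")


last = parse_id("1527846880572-5")
print(next_auto_id(last, 1527846880572))  # same millisecond -> sequence 6
print(next_auto_id(last, 1527846880573))  # next millisecond -> sequence 0
```

Representing IDs as integer tuples is what makes "larger than the previous ID" well defined: tuples compare by milliseconds first and sequence second.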

Message content is a list of key-value pairs, like the field-value pairs of a hash; there is nothing special about it.

CRUD

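- xadd appends a message

- xdel deletes messages; the deletion only sets a flag bit and does not affect the total length of the Stream (as in the session below; note that in current Redis releases xlen does decrease after xdel, while the memory is still reclaimed lazily)

- xrange gets a range of messages, automatically filtering out deleted ones

- xlen returns the length of the Stream

- del deletes the whole Stream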

```
# * means the server generates the ID automatically; it is followed by key/value pairs
# name is laoqian, age is 30
127.0.0.1:6379> xadd codehole * name laoqian age 30
1527849609889-0  # the generated message ID
127.0.0.1:6379> xadd codehole * name xiaoyu age 29
1527849629172-0
127.0.0.1:6379> xadd codehole * name xiaoqian age 1
1527849637634-0
127.0.0.1:6379> xlen codehole
(integer) 3
# - represents the minimum ID, + the maximum
127.0.0.1:6379> xrange codehole - +
1) 1) 1527849609889-0
   2) 1) "name"
      2) "laoqian"
      3) "age"
      4) "30"
2) 1) 1527849629172-0
   2) 1) "name"
      2) "xiaoyu"
      3) "age"
      4) "29"
3) 1) 1527849637634-0
   2) 1) "name"
      2) "xiaoqian"
      3) "age"
      4) "1"
# Specify the minimum message ID of the range
127.0.0.1:6379> xrange codehole 1527849629172-0 +
1) 1) 1527849629172-0
   2) 1) "name"
      2) "xiaoyu"
      3) "age"
      4) "29"
2) 1) 1527849637634-0
   2) 1) "name"
      2) "xiaoqian"
      3) "age"
      4) "1"
# Specify the maximum message ID of the range
127.0.0.1:6379> xrange codehole - 1527849629172-0
1) 1) 1527849609889-0
   2) 1) "name"
      2) "laoqian"
      3) "age"
      4) "30"
2) 1) 1527849629172-0
   2) 1) "name"
      2) "xiaoyu"
      3) "age"
      4) "29"
127.0.0.1:6379> xdel codehole 1527849609889-0
(integer) 1
# Length is unaffected
127.0.0.1:6379> xlen codehole
(integer) 3
# The deleted message no longer appears
127.0.0.1:6379> xrange codehole - +
1) 1) 1527849629172-0
   2) 1) "name"
      2) "xiaoyu"
      3) "age"
      4) "29"
2) 1) 1527849637634-0
   2) 1) "name"
      2) "xiaoqian"
      3) "age"
      4) "1"
# Delete the entire Stream
127.0.0.1:6379> del codehole
(integer) 1
```
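The flag-then-filter deletion described in the CRUD list can be modeled in a few lines. This is a hypothetical toy (`FlaggedStream`, tuple IDs, and `MIN_ID`/`MAX_ID` standing in for `-` and `+`); Redis actually stores entries in a radix tree of packed nodes and only reclaims a node's memory once all of its entries are deleted.

```python
from dataclasses import dataclass

MIN_ID = (0, 0)                   # plays the role of "-"
MAX_ID = (2**64 - 1, 2**64 - 1)   # plays the role of "+"


@dataclass
class Entry:
    entry_id: tuple[int, int]
    fields: dict
    deleted: bool = False


class FlaggedStream:
    """Toy model: xdel only sets a flag, and xrange skips flagged entries."""

    def __init__(self) -> None:
        self.entries: list[Entry] = []

    def xadd(self, entry_id, fields):
        self.entries.append(Entry(entry_id, fields))
        return entry_id

    def xdel(self, entry_id) -> int:
        for e in self.entries:
            if e.entry_id == entry_id and not e.deleted:
                e.deleted = True  # mark only; the entry stays in storage
                return 1
        return 0

    def xrange(self, start=MIN_ID, end=MAX_ID):
        # Range reads filter out flagged entries.
        return [(e.entry_id, e.fields) for e in self.entries
                if not e.deleted and start <= e.entry_id <= end]


s = FlaggedStream()
s.xadd((1527849609889, 0), {"name": "laoqian", "age": "30"})
s.xadd((1527849629172, 0), {"name": "xiaoyu", "age": "29"})
s.xadd((1527849637634, 0), {"name": "xiaoqian", "age": "1"})
s.xdel((1527849609889, 0))
print([fields["name"] for _, fields in s.xrange()])  # ['xiaoyu', 'xiaoqian']
```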

Independent consumption

We can consume Stream messages independently, without defining any consumer group, and can even block and wait when the Stream has no new messages. Redis provides a separate consumption command, xread, that lets a Stream be used as an ordinary message queue. When using xread, we can ignore consumer groups entirely and treat the Stream as an ordinary list.

```
# Read two messages from the head of the Stream
127.0.0.1:6379> xread count 2 streams codehole 0-0
1) 1) "codehole"
   2) 1) 1) 1527851486781-0
         2) 1) "name"
            2) "laoqian"
            3) "age"
            4) "30"
      2) 1) 1527851493405-0
         2) 1) "name"
            2) "yurui"
            3) "age"
            4) "29"
# Read one message from the tail of the Stream; as expected, nothing is returned
127.0.0.1:6379> xread count 1 streams codehole $
(nil)
# Block at the tail waiting for new messages; this command blocks until one arrives
127.0.0.1:6379> xread block 0 count 1 streams codehole $
# Open a new window and add a message to the Stream
127.0.0.1:6379> xadd codehole * name youming age 60
1527852774092-0
# Back in the first window, the block has been released and the new message is returned,
# together with the time spent waiting (93 s here)
127.0.0.1:6379> xread block 0 count 1 streams codehole $
1) 1) "codehole"
   2) 1) 1) 1527852774092-0
         2) 1) "name"
            2) "youming"
            3) "age"
            4) "60"
(93.11s)
```

To consume sequentially with xread, the client must remember how far it has consumed, that is, the last message ID returned. On the next call to xread, it passes that ID as the argument to continue with the subsequent messages.
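Sequential consumption with xread boils down to a loop that feeds the last returned ID back in. The sketch below is a hypothetical in-memory stand-in (`xread_after` and `consume_all` are illustrative names, not a client API), assuming entries are `(id, fields)` pairs in ascending ID order.

```python
def xread_after(entries, last_id, count):
    """Toy stand-in for xread: up to `count` entries whose ID is > last_id."""
    return [e for e in entries if e[0] > last_id][:count]


def consume_all(entries, count=2):
    last_id = (0, 0)  # like passing 0-0: start from the head of the Stream
    names = []
    while True:
        batch = xread_after(entries, last_id, count)
        if not batch:
            break  # caught up; a real client might now block for new messages
        for _, fields in batch:
            names.append(fields["name"])
        last_id = batch[-1][0]  # remember the last ID returned for the next call
    return names


entries = [
    ((1527851486781, 0), {"name": "laoqian"}),
    ((1527851493405, 0), {"name": "yurui"}),
    ((1527851498956, 0), {"name": "xiaoqian"}),
]
print(consume_all(entries))  # ['laoqian', 'yurui', 'xiaoqian']
```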

block 0 means block indefinitely until a message arrives; block 1000 means block for up to 1 s and return nil if no message arrives within that time.

```
127.0.0.1:6379> xread block 1000 count 1 streams codehole $
(nil)
(1.07s)
```
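The block semantics (0 waits forever; a positive value waits up to that many milliseconds and then yields nil) can be modeled with a condition variable. `BlockingTail` is a hypothetical toy built on Python's `threading.Condition`; it only imitates reading from `$`, not the server's actual blocking machinery.

```python
import threading


class BlockingTail:
    """Toy model of `xread block <ms> ... streams key $`: wait for an entry added
    after the call starts; give up after block_ms (None stands in for nil).
    block_ms == 0 waits indefinitely."""

    def __init__(self) -> None:
        self._entries = []
        self._cond = threading.Condition()

    def add(self, entry) -> None:
        with self._cond:
            self._entries.append(entry)
            self._cond.notify_all()  # wake any blocked readers

    def read_new(self, block_ms: int):
        with self._cond:
            start = len(self._entries)  # "$": only entries added from now on
            timeout = None if block_ms == 0 else block_ms / 1000
            arrived = self._cond.wait_for(lambda: len(self._entries) > start, timeout)
            return self._entries[start] if arrived else None


tail = BlockingTail()
print(tail.read_new(100))  # None: nothing arrives within 100 ms
threading.Timer(0.1, tail.add, args=({"name": "youming", "age": "60"},)).start()
print(tail.read_new(5000))  # returns as soon as the timer thread adds a message
```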

Creating Consumer Groups

A consumer group is created on a Stream with the xgroup create command, which takes a start message ID used to initialize the group's last_delivered_id variable.

```
# Consume from the very beginning
127.0.0.1:6379> xgroup create codehole cg1 0-0
OK
# $ means start consuming from the tail and accept only new messages; all current messages are ignored
127.0.0.1:6379> xgroup create codehole cg2 $
OK
# Get Stream information
127.0.0.1:6379> xinfo stream codehole
 1) length
 2) (integer) 3  # three messages in total
 3) radix-tree-keys
 4) (integer) 1
 5) radix-tree-nodes
 6) (integer) 2
 7) groups
 8) (integer) 2  # two consumer groups
 9) first-entry  # first message
10) 1) 1527851486781-0
    2) 1) "name"
       2) "laoqian"
       3) "age"
       4) "30"
11) last-entry  # last message
12) 1) 1527851498956-0
    2) 1) "name"
       2) "xiaoqian"
       3) "age"
       4) "1"
# Get the Stream's consumer group information
127.0.0.1:6379> xinfo groups codehole
1) 1) name
   2) "cg1"
   3) consumers
   4) (integer) 0  # the consumer group has no consumers yet
   5) pending
   6) (integer) 0  # the consumer group has no messages being processed
2) 1) name
   2) "cg2"
   3) consumers
   4) (integer) 0
   5) pending
   6) (integer) 0  # the consumer group has no messages being processed
```

Consumption

Stream provides the xreadgroup command for consuming within a consumer group. It takes the consumer group name, the consumer name, and a start message ID. Like xread, it can block while waiting for new messages. When a new message is read, its ID enters the consumer's PEL (the list of messages being processed). Once the client has finished processing the message, it notifies the server with the xack command, and the message ID is removed from the PEL.

```
# > means start reading after the current consumer group's last_delivered_id
# Every time a consumer reads a message, last_delivered_id advances
127.0.0.1:6379> xreadgroup GROUP cg1 c1 count 1 streams codehole >
1) 1) "codehole"
   2) 1) 1) 1527851486781-0
         2) 1) "name"
            2) "laoqian"
            3) "age"
            4) "30"
127.0.0.1:6379> xreadgroup GROUP cg1 c1 count 1 streams codehole >
1) 1) "codehole"
   2) 1) 1) 1527851493405-0
         2) 1) "name"
            2) "yurui"
            3) "age"
            4) "29"
127.0.0.1:6379> xreadgroup GROUP cg1 c1 count 2 streams codehole >
1) 1) "codehole"
   2) 1) 1) 1527851498956-0
         2) 1) "name"
            2) "xiaoqian"
            3) "age"
            4) "1"
      2) 1) 1527852774092-0
         2) 1) "name"
            2) "youming"
            3) "age"
            4) "60"
# Reading further returns no new messages
127.0.0.1:6379> xreadgroup GROUP cg1 c1 count 1 streams codehole >
(nil)
# Block and wait
127.0.0.1:6379> xreadgroup GROUP cg1 c1 block 0 count 1 streams codehole >
# Open another window and add a message
127.0.0.1:6379> xadd codehole * name lanying age 61
1527854062442-0
# Back in the first window, the block is released and the new message is received
127.0.0.1:6379> xreadgroup GROUP cg1 c1 block 0 count 1 streams codehole >
1) 1) "codehole"
   2) 1) 1) 1527854062442-0
         2) 1) "name"
            2) "lanying"
            3) "age"
            4) "61"
(36.54s)
# Inspect the consumer group information
127.0.0.1:6379> xinfo groups codehole
1) 1) name
   2) "cg1"
   3) consumers
   4) (integer) 1  # one consumer
   5) pending
   6) (integer) 5  # five messages delivered but not yet acked
2) 1) name
   2) "cg2"
   3) consumers
   4) (integer) 0  # cg2 is unchanged, because we have only been working with cg1
   5) pending
   6) (integer) 0
# If a group has several consumers, xinfo consumers shows the status of each one
# There is still only one consumer here
127.0.0.1:6379> xinfo consumers codehole cg1
1) 1) name
   2) "c1"
   3) pending
   4) (integer) 5  # five messages awaiting processing
   5) idle
   6) (integer) 418715  # idle time in ms since the consumer last read a message
# Next, ack one message
127.0.0.1:6379> xack codehole cg1 1527851486781-0
(integer) 1
127.0.0.1:6379> xinfo consumers codehole cg1
1) 1) name
   2) "c1"
   3) pending
   4) (integer) 4  # down from five to four
   5) idle
   6) (integer) 668504
# Ack all the remaining messages
127.0.0.1:6379> xack codehole cg1 1527851493405-0 1527851498956-0 1527852774092-0 1527854062442-0
(integer) 4
127.0.0.1:6379> xinfo consumers codehole cg1
1) 1) name
   2) "c1"
   3) pending
   4) (integer) 0  # the PEL is empty
   5) idle
   6) (integer) 745505
```
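The PEL bookkeeping in the session above, where five unacked deliveries leave five pending entries and each xack removes one, can be mirrored in a small model. `GroupState` and its methods are hypothetical names, and the model keeps everything in one process, unlike Redis, which holds this state on the server.

```python
class GroupState:
    """Toy model of one consumer group's xreadgroup/xack bookkeeping."""

    def __init__(self, entries, start=(0, 0)):
        self.entries = entries           # (id, fields) pairs in ascending ID order
        self.last_delivered_id = start
        self.pel = {}                    # consumer name -> {pending id: True}

    def read_group(self, consumer, count):
        """Like `xreadgroup GROUP g <consumer> count <n> streams key >`."""
        batch = [e for e in self.entries if e[0] > self.last_delivered_id][:count]
        pending = self.pel.setdefault(consumer, {})
        for entry_id, _ in batch:
            pending[entry_id] = True           # delivered, awaiting ack
            self.last_delivered_id = entry_id  # the group cursor advances
        return batch

    def ack(self, *ids):
        """Like xack: returns how many IDs were actually removed from a PEL."""
        removed = 0
        for entry_id in ids:
            for pending in self.pel.values():
                if pending.pop(entry_id, None):
                    removed += 1
                    break
        return removed

    def pending_count(self, consumer):
        return len(self.pel.get(consumer, {}))


ids = [(1, 0), (2, 0), (3, 0), (4, 0), (5, 0)]
group = GroupState([(i, {"n": str(i[0])}) for i in ids])
for n in (1, 1, 2, 1):              # the read sizes used in the session above
    group.read_group("c1", n)
print(group.pending_count("c1"))    # 5: five delivered, none acked
group.ack((1, 0))
print(group.pending_count("c1"))    # 4: one fewer after a single ack
```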

What if a Stream accumulates too many messages?

Readers may well wonder: if messages keep accumulating, won't the Stream's list grow very long and eventually exhaust memory? After all, the xdel command does not really delete messages; it only flags them.

Redis naturally takes this into account and offers capped-length Streams: passing maxlen to xadd evicts old messages so that the Stream never exceeds the specified length.

```
127.0.0.1:6379> xlen codehole
(integer) 5
127.0.0.1:6379> xadd codehole maxlen 3 * name xiaorui age 1
1527855160273-0
127.0.0.1:6379> xlen codehole
(integer) 3
```
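Capping with maxlen amounts to "append, then evict the oldest". The function below is a hypothetical toy using `collections.deque` with exact trimming; real Redis also accepts `MAXLEN ~ n`, which trims approximately (possibly leaving somewhat more than n entries) in exchange for efficiency.

```python
from collections import deque


def xadd_capped(stream: deque, entry, maxlen: int):
    """Toy model of `xadd key maxlen <n> * ...` with exact trimming:
    append, then evict the oldest entries until at most maxlen remain."""
    stream.append(entry)
    while len(stream) > maxlen:
        stream.popleft()  # the oldest message is dropped first
    return entry


stream = deque(["m1", "m2", "m3", "m4", "m5"])  # xlen would report 5
xadd_capped(stream, "xiaorui", 3)
print(len(stream), list(stream))  # 3 ['m4', 'm5', 'xiaorui']
```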

What happens if a consumer forgets to ack?

Each consumer structure in a Stream keeps a PEL, the list of IDs of messages being processed. If a consumer receives messages but never acks them, its PEL keeps growing, and with many consumer groups the memory used by PELs grows accordingly.

How does the PEL prevent message loss?

When a consumer reads a Stream message, the Redis server sends the message to the client. If the client suddenly disconnects at that point, the message is lost in transit, but its ID has already been saved in the PEL. After reconnecting, the client can fetch the messages listed in its PEL again. To do so, the start ID passed to xreadgroup must not be > but an explicit, valid message ID, usually 0-0, which returns all the messages still pending in this consumer's PEL. Once the PEL has been drained, the client switches back to > to receive new messages after last_delivered_id.
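The recovery pattern is: after reconnecting, first replay the PEL by passing an explicit ID such as 0-0, then go back to `>` for new messages. Below is a hypothetical single-consumer toy (`ConsumerState`, `consume_after_reconnect`) sketching that flow; it is not a Redis client.

```python
class ConsumerState:
    """Toy model of a single consumer in a group: replay the PEL with an
    explicit ID, read new messages with '>'."""

    def __init__(self, entries):
        self.entries = entries            # (id, fields) pairs in ascending order
        self.last_delivered_id = (0, 0)
        self.pel = []                     # IDs delivered but not yet acked

    def read(self, start, count=10):
        if start == ">":
            # New messages: advance the cursor and record them in the PEL.
            batch = [e for e in self.entries if e[0] > self.last_delivered_id][:count]
            for entry_id, _ in batch:
                self.pel.append(entry_id)
                self.last_delivered_id = entry_id
            return batch
        # Explicit ID such as (0, 0): replay only this consumer's pending entries.
        return [e for e in self.entries if e[0] in self.pel and e[0] > start][:count]

    def ack(self, entry_id):
        self.pel.remove(entry_id)


def consume_after_reconnect(consumer, handle):
    # 1. Re-process whatever was delivered but never acked (e.g. lost in transit).
    for entry_id, fields in consumer.read((0, 0)):
        handle(fields)
        consumer.ack(entry_id)
    # 2. Then carry on with new messages.
    for entry_id, fields in consumer.read(">"):
        handle(fields)
        consumer.ack(entry_id)


c = ConsumerState([((1, 0), {"name": "a"}), ((2, 0), {"name": "b"}), ((3, 0), {"name": "c"})])
c.read(">", 2)  # delivered, but the client disconnects before processing them
handled = []
consume_after_reconnect(c, lambda fields: handled.append(fields["name"]))
print(handled)  # ['a', 'b', 'c']
```

Acking only after `handle` returns is what gives at-least-once delivery: if the client crashes mid-processing, the ID is still in the PEL and will be replayed.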

High Availability of Stream

Stream's high availability is built on master-slave replication, which works exactly as it does for other data structures. Stream therefore supports high availability in Sentinel and Cluster environments. However, because Redis replicates commands asynchronously, a very small amount of data may be lost during failover, which is equally true of Redis's other data structures.

Partition

Redis servers have no native partitioning support. To partition, you need to create multiple Streams and have the client apply some strategy to produce messages to different Streams. You might think Kafka is far more advanced because it supports partitions natively. I disagree: remember that Kafka's client also has a hashing strategy, because it too uses a client-side hash algorithm to put different messages into different partitions.

Kafka can also increase the number of partitions dynamically, but this ability is quite limited: it does not rehash existing content or re-partition historical data. The same simple kind of dynamic adjustment can be achieved by adding new Streams.
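Client-side partitioning only needs a stable hash of the message key. A minimal sketch, assuming a hypothetical `codehole:<n>` naming scheme for the partition Streams:

```python
import zlib


def pick_stream(key: str, base: str, partitions: int) -> str:
    """Choose one of `partitions` Streams for a message by hashing its key.

    The "<base>:<n>" naming scheme is a hypothetical convention. zlib.crc32 is
    used because, unlike Python's built-in hash() on strings, it gives the same
    result in every process, so all producers agree on the routing.
    """
    return f"{base}:{zlib.crc32(key.encode('utf-8')) % partitions}"


for user in ("laoqian", "xiaoyu", "xiaoqian"):
    print(user, "->", pick_stream(user, "codehole", 4))
```

The returned name is then used as the Stream key for xadd, and consumers read each Stream separately. Messages with the same key always land in the same Stream, which preserves per-key ordering. Changing `partitions` only changes where new messages go; existing messages stay where they are, the same limitation described for Kafka.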

Summary

Stream's consumption model borrows Kafka's concept of consumer groups and makes up for Redis Pub/Sub's inability to persist messages. Unlike Kafka, however, whose messages can be partitioned, a Stream cannot be. If you must partition, do it on the client side: use several Stream names and hash each message to choose which Stream to put it in.

Readers who have studied Disque, another open source project by the Redis author, may suspect that the author felt Disque was not active enough and so transplanted its ideas into Redis. This is only my guess and not necessarily the author's intention; readers with different views are welcome to join the discussion in the comments.