1. Preface

Redis is the most widely used cache middleware today, thanks to its powerful, efficient and convenient features.

Redis 3.0.0, released in 2015, officially added support for Redis Cluster. This ended the era in which Redis had no native clustering and people relied on third-party solutions such as Twemproxy from Twitter and Codis from Wandoujia. In this article we will learn about Redis Cluster and put it into practice, and I will try to explain it in a simple, easy-to-understand way.

2. Design essentials of Redis Cluster

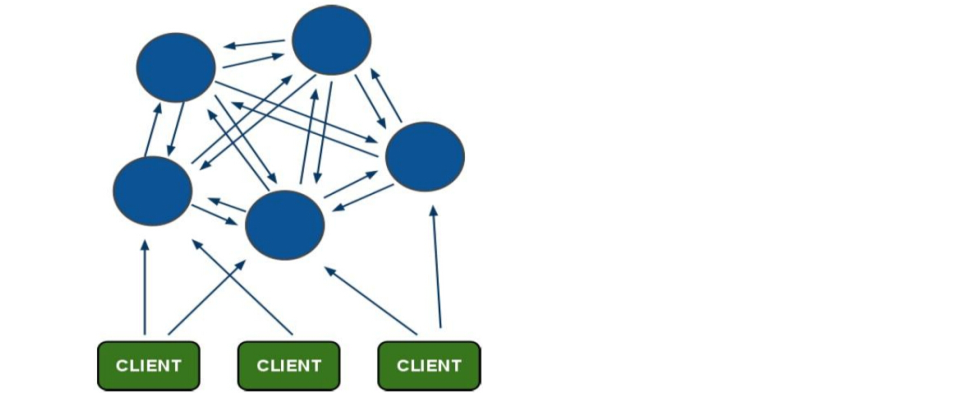

Redis Cluster is designed to be decentralized and to need no middleware (proxy). Every node in the cluster is an equal peer, and each node holds its own data plus the state of the entire cluster. Each node connects to all other nodes and keeps these connections alive, so a client only needs to connect to any one node to reach data held on the others.

3. Redis Cluster principles

We know that the nodes in a cluster are peers and each holds its own data, so how does Redis distribute data across these nodes?

Redis Cluster does not use traditional consistent hashing to distribute data. Instead it uses a different scheme called hash slots.

Redis Cluster has 16384 hash slots. When a key is set, Redis computes the CRC16 checksum of the key and takes it modulo 16384 to get the key's slot. The key is then stored on the node whose slot range contains that slot. The formula is CRC16(key) % 16384.
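The following minimal Python sketch makes the formula concrete by computing CRC16(key) % 16384. It assumes the CRC16 variant that the Redis Cluster specification uses, CRC-16/XMODEM (polynomial 0x1021, initial value 0, no bit reflection), whose check value for the input "123456789" is 0x31C3. The function names are illustrative. The sketch also leaves out one detail of real Redis: when a key contains a {...} hash tag, Redis hashes only the part inside the braces.

```python
SLOT_COUNT = 16384  # Redis Cluster always has 16384 hash slots


def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC-16/XMODEM: polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """CRC16(key) % 16384; hash tags ({...}) are deliberately not handled here."""
    return crc16_xmodem(key.encode("utf-8")) % SLOT_COUNT


for key in ("my_name", "user:1000", "order:42"):
    print(key, key_slot(key))
```

Because 16384 is a power of two, the modulo simply keeps the low 14 bits of the checksum.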

Note that a Redis cluster needs at least three master nodes. Its fault-tolerance voting requires more than half of the masters to agree that a node has failed. With only two masters, the surviving one is never more than half, so a two-node cluster cannot tolerate any failure.
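A little arithmetic confirms the "more than half" rule. The Python sketch below uses illustrative function names. For n masters, it computes the majority and how many masters can fail while the survivors still form a majority. For two masters the answer is zero, which is why three is the minimum.

```python
def majority(n: int) -> int:
    """Smallest number of nodes that is strictly more than half of n."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """How many nodes may be lost while the remaining ones still form a majority."""
    return n - majority(n)


for masters in range(1, 6):
    print(f"{masters} masters: majority={majority(masters)}, "
          f"tolerated failures={tolerated_failures(masters)}")
```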

Suppose three nodes, A, B and C, are deployed as a Redis cluster. They can be three ports on one machine or three different servers. The 16384 hash slots are then divided among them, and the slot ranges for the three nodes are:

Node A covers 0-5461; Node B covers 5462-10922; Node C covers 10923-16383;
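The Python sketch below shows one way to arrive at these ranges. It assumes that leftover slots go to the first nodes. Each node gets 16384 // n slots, and the 16384 % n extra slots go to the first nodes. A slot's owner is then found with a binary search over the range starts. For three nodes this reproduces 0-5461 / 5462-10922 / 10923-16383. redis-trib, used later in this article, rounds differently and produces 0-5460 / 5461-10922 / 10923-16383, so the rounding rule is a free choice. The node names A, B, C are placeholders.

```python
import bisect

TOTAL_SLOTS = 16384


def split_slots(node_count: int) -> list:
    """Split slots 0..16383 into contiguous (start, end) ranges, one per node.

    Each node gets 16384 // node_count slots; the first 16384 % node_count
    nodes get one extra slot.
    """
    base, extra = divmod(TOTAL_SLOTS, node_count)
    ranges, start = [], 0
    for i in range(node_count):
        size = base + (1 if i < extra else 0)
        ranges.append((start, start + size - 1))
        start += size
    return ranges


def owner(ranges: list, names: list, slot: int) -> str:
    """Return the name of the node whose range contains `slot` (binary search)."""
    if not 0 <= slot < TOTAL_SLOTS:
        raise ValueError(f"slot out of range: {slot}")
    starts = [start for start, _ in ranges]
    return names[bisect.bisect_right(starts, slot) - 1]


ranges = split_slots(3)
names = ["A", "B", "C"]
for name, (start, end) in zip(names, ranges):
    print(f"Node {name} covers {start}-{end}")
print("slot 2412 ->", owner(ranges, names, 2412))
```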

Now suppose I want to set a key, for example my_name: set my_name linux

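Apply the hash slot formula, and suppose CRC16('my_name') % 16384 = 2412. This is an illustrative value; the real cluster tested later in this article reports a different slot for this key. Slot 2412 falls within node A's range, so the key is stored on A.

Likewise, when I connect to any of the nodes (A, B or C) to get my_name, that node performs the same calculation and redirects the request to node A, which holds the data.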

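The benefit of hash slot allocation is that it makes resharding clear and simple. For example, to add a new node D, Redis Cluster moves a portion of the slots from the front of each existing node's range onto D. The result is roughly as follows. These figures start from the split 0-5460 / 5461-10922 / 10923-16383 that redis-trib produces in the exercises later, so they differ by one slot from the split above. I will try this out in those exercises: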

Node A covers 1365-5460; Node B covers 6827-10922; Node C covers 12288-16383; Node D covers 0-1364, 5461-6826, 10923-12287;
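The Python sketch below illustrates taking slots from the front of each node's range. It is a simplified illustration, not redis-trib's actual code. The new node's target is 16384 // 4 = 4096 slots. Each existing node gives up a share proportional to its slot count, with largest-remainder rounding so the shares add up exactly. Those slots are taken from the front of each node's range. Starting from the split 0-5460 / 5461-10922 / 10923-16383, it yields the layout listed above.

```python
def rebalance(ranges, new_node, total_slots=16384):
    """Give new_node an equal share of slots, taken from the front of each range.

    ranges: list of (start, end, node), one contiguous range per existing node.
    Returns {node: [(start, end), ...]} after the move.
    """
    target = total_slots // (len(ranges) + 1)      # slots the new node should own
    sizes = [end - start + 1 for start, end, _ in ranges]
    total = sum(sizes)

    # Proportional share per node (integer division), then give the slots lost
    # to rounding to the nodes with the largest remainders.
    parts = [divmod(size * target, total) for size in sizes]
    shares = [quotient for quotient, _ in parts]
    leftover = target - sum(shares)
    by_remainder = sorted(range(len(parts)), key=lambda i: parts[i][1], reverse=True)
    for i in by_remainder[:leftover]:
        shares[i] += 1

    layout = {new_node: []}
    for (start, end, node), share in zip(ranges, shares):
        if share:
            layout[new_node].append((start, start + share - 1))
        layout[node] = [(start + share, end)] if start + share <= end else []
    return layout


split = [(0, 5460, "A"), (5461, 10922, "B"), (10923, 16383, "C")]
for node, spans in rebalance(split, "D").items():
    print(f"Node {node} covers " + ", ".join(f"{s}-{e}" for s, e in spans))
```

Using integer `divmod` instead of floating-point division keeps the rounding exact.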

Removing a node works the same way in reverse. Its slots are first moved to the remaining nodes, and the node can be deleted once the move is complete.

So a Redis cluster has the following shape:

4. Redis Cluster master-slave mode

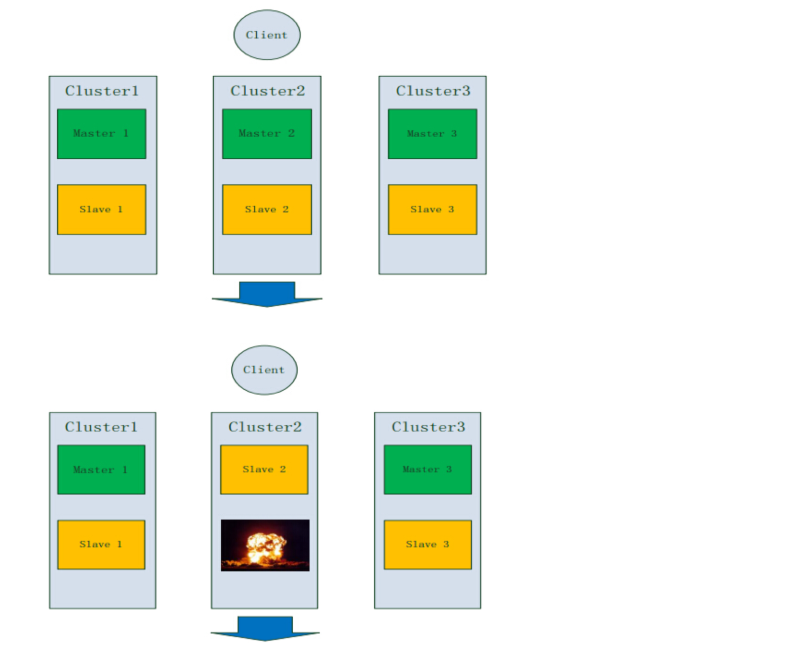

To ensure high availability of data, Redis Cluster adds a master-slave mode in which each master node has one or more slave nodes. The master serves data access, while its slaves replicate (back up) the master's data. When a master goes down, one of its slaves is promoted to master, so the cluster keeps running.

In the example above, the cluster has three masters: A, B and C. Suppose none of them has a slave and B goes down. Then the whole cluster becomes unavailable, and even the slots on A and C can no longer be accessed. So when we build a cluster, we must add a slave for every master. For example, suppose the cluster contains masters A, B and C and slaves A1, B1 and C1. The system keeps working correctly even if B goes down: B1 replicates B, so the cluster elects B1 as the new master and continues to serve requests. Note that when B comes back, it becomes a slave of B1 rather than a master.

If B and B1 go down at the same time, the cluster can no longer serve requests correctly. This should not normally happen, and a deployment must not allow a master and its slave to fail together.

The following diagram shows the process:

5. Setting up a highly available Redis cluster

Because of how failover works inside Redis Cluster, a cluster needs at least three master nodes, and high availability requires a slave for each master. Building a highly available Redis cluster therefore takes at least six nodes: three masters and three slaves.

Resources are limited and this is a test environment. We therefore create a pseudo-cluster on two machines, starting several Redis instances on different TCP ports to form the cluster. A Redis cluster in a real production environment is built the same way.

There are two ways to set up a Redis cluster:

1. Manually: run the cluster commands yourself and complete the build step by step.

2. Automatically: use the official cluster management tool.

Both methods work the same way. The automatic build simply wraps the Redis commands from the manual build into an executable program. In production, the automatic build is recommended because it is simple, fast and less error-prone. This demonstration covers both methods.

Environment description:

Host A: 172.16.1.100 (CentOS 7.3), running three instances on ports 7000, 7001 and 7002; all masters

Host B: 172.16.1.110 (CentOS 7.3), running three instances on ports 8000, 8001 and 8002; all slaves

1. Build manually

1. Install Redis

A Host:
[root@redis01-server ~]# tar zxf redis-4.0.14.tar.gz
[root@redis01-server ~]# mv redis-4.0.14 /usr/local/redis
[root@redis01-server ~]# cd /usr/local/redis/
[root@redis01-server redis]# make && make install
#Installation is complete. Now edit the configuration file and set these directives (some are commented out by default):
[root@redis01-server ~]# vim /usr/local/redis/redis.conf
# Bind to the IP address of this Redis host
bind 172.16.1.100
# Listening port of this Redis instance
port 7000
# Run the Redis instance as a daemon
daemonize yes
# Enable cluster mode
cluster-enabled yes
# Cluster state file of this node (created and updated by Redis itself)
cluster-config-file nodes-7000.conf
# Node timeout, in milliseconds
cluster-node-timeout 5000
# Enable AOF persistence
appendonly yes
# Name of the AOF file in which data is saved
appendfilename "appendonly-7000.aof"

B Host:
[root@redis02-server ~]# tar zxf redis-4.0.14.tar.gz
[root@redis02-server ~]# mv redis-4.0.14 /usr/local/redis
[root@redis02-server ~]# cd /usr/local/redis
[root@redis02-server redis]# make && make install
[root@redis02-server ~]# vim /usr/local/redis/redis.conf
bind 172.16.1.110
port 8000
daemonize yes
cluster-enabled yes
cluster-config-file nodes-8000.conf
cluster-node-timeout 5000
appendonly yes
appendfilename "appendonly-8000.aof"

2. Following the plan above, create a directory for each node's configuration file

A Host:

[root@redis01-server ~]# mkdir /usr/local/redis-cluster

[root@redis01-server ~]# cd /usr/local/redis-cluster/

[root@redis01-server redis-cluster]# mkdir {7000,7001,7002}

B Host:

[root@redis02-server ~]# mkdir /usr/local/redis-cluster

[root@redis02-server ~]# cd /usr/local/redis-cluster/

[root@redis02-server redis-cluster]# mkdir {8000,8001,8002}

3. Copy the main configuration file into each node's directory

A Host:
[root@redis01-server ~]# cp /usr/local/redis/redis.conf /usr/local/redis-cluster/7000/
[root@redis01-server ~]# cp /usr/local/redis/redis.conf /usr/local/redis-cluster/7001/
[root@redis01-server ~]# cp /usr/local/redis/redis.conf /usr/local/redis-cluster/7002/

B Host:
[root@redis02-server ~]# cp /usr/local/redis/redis.conf /usr/local/redis-cluster/8000/
[root@redis02-server ~]# cp /usr/local/redis/redis.conf /usr/local/redis-cluster/8001/
[root@redis02-server ~]# cp /usr/local/redis/redis.conf /usr/local/redis-cluster/8002/

#Change each copy's listening TCP port. sed replaces every occurrence of the port number, so the cluster-config-file and appendfilename names change too:

A Host:
[root@redis01-server ~]# sed -i "s/7000/7001/g" /usr/local/redis-cluster/7001/redis.conf
[root@redis01-server ~]# sed -i "s/7000/7002/g" /usr/local/redis-cluster/7002/redis.conf

B Host:
[root@redis02-server ~]# sed -i "s/8000/8001/g" /usr/local/redis-cluster/8001/redis.conf
[root@redis02-server ~]# sed -i "s/8000/8002/g" /usr/local/redis-cluster/8002/redis.conf
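The copy-and-sed steps can also be scripted. The Python sketch below is an alternative, not the author's method. Instead of copying the full default redis.conf, it writes a small standalone redis.conf per port that contains only the directives set in step 1. Every other setting keeps Redis's default, including dir ./. The base directory is a parameter. The usage lines write into a temporary directory; on the real hosts it would be /usr/local/redis-cluster.

```python
import tempfile
from pathlib import Path

# Same directives as the 7000/8000 configuration in step 1, with the port filled in.
TEMPLATE = """\
bind {ip}
port {port}
daemonize yes
cluster-enabled yes
cluster-config-file nodes-{port}.conf
cluster-node-timeout 5000
appendonly yes
appendfilename "appendonly-{port}.aof"
"""


def write_configs(base_dir, ip, ports):
    """Create <base_dir>/<port>/redis.conf for each port and return the paths."""
    paths = []
    for port in ports:
        node_dir = Path(base_dir) / str(port)
        node_dir.mkdir(parents=True, exist_ok=True)
        conf = node_dir / "redis.conf"
        conf.write_text(TEMPLATE.format(ip=ip, port=port))
        paths.append(conf)
    return paths


with tempfile.TemporaryDirectory() as tmp:
    for path in write_configs(tmp, "172.16.1.100", [7000, 7001, 7002]):
        print(path)
        print(path.read_text())
```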

4. Start the Redis services

A Host:
[root@redis01-server ~]# ln -s /usr/local/bin/redis-server /usr/local/sbin/
[root@redis01-server ~]# redis-server /usr/local/redis-cluster/7000/redis.conf
[root@redis01-server ~]# redis-server /usr/local/redis-cluster/7001/redis.conf
[root@redis01-server ~]# redis-server /usr/local/redis-cluster/7002/redis.conf
#Make sure each node's service is running properly:
[root@redis01-server ~]# ps -ef | grep redis
root 19595 1 0 04:55 ? 00:00:00 redis-server 172.16.1.100:7000 [cluster]
root 19602 1 0 04:56 ? 00:00:00 redis-server 172.16.1.100:7001 [cluster]
root 19607 1 0 04:56 ? 00:00:00 redis-server 172.16.1.100:7002 [cluster]
root 19612 2420 0 04:58 pts/0 00:00:00 grep --color=auto redis

B Host:
[root@redis02-server ~]# ln -s /usr/local/bin/redis-server /usr/local/sbin/
[root@redis02-server ~]# redis-server /usr/local/redis-cluster/8000/redis.conf
[root@redis02-server ~]# redis-server /usr/local/redis-cluster/8001/redis.conf
[root@redis02-server ~]# redis-server /usr/local/redis-cluster/8002/redis.conf
[root@redis02-server ~]# ps -ef | grep redis
root 18485 1 0 00:17 ? 00:00:00 redis-server 172.16.1.110:8000 [cluster]
root 18490 1 0 00:17 ? 00:00:00 redis-server 172.16.1.110:8001 [cluster]
root 18495 1 0 00:17 ? 00:00:00 redis-server 172.16.1.110:8002 [cluster]
root 18501 1421 0 00:19 pts/0 00:00:00 grep --color=auto redis

5. Node handshake

All six nodes have cluster mode enabled, but by default they neither trust nor know about each other. The node handshake creates links between the nodes, with each node connecting to every other node. The nodes then form a complete network, that is, a cluster.

The commands for node handshake are as follows:

cluster meet ip port

Connect to node 172.16.1.100:7000 with redis-cli and run the following five commands to complete the handshake among our six nodes:

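Note: the firewalls on the cluster hosts must not block the nodes' ports. This covers both the client ports and the cluster bus ports, which are the client ports plus 10000 (e.g. 17000). In this test environment we simply stop the firewall with systemctl stop firewalld. Otherwise the handshake cannot be completed.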

[root@redis01-server ~]# redis-cli -h 172.16.1.100 -p 7000
172.16.1.100:7000> cluster meet 172.16.1.100 7001
OK
172.16.1.100:7000> cluster meet 172.16.1.100 7002
OK
172.16.1.100:7000> cluster meet 172.16.1.110 8000
OK
172.16.1.100:7000> cluster meet 172.16.1.110 8001
OK
172.16.1.100:7000> cluster meet 172.16.1.110 8002
OK
#Check whether the handshake worked:
172.16.1.100:7000> cluster nodes
060a11f6985df66e4b9cf596355bbe334f843587 172.16.1.100:7001@17001 master - 0 1584155029000 1 connected
2fb26d79f703f9fbd8841e4ee93ea88f7df5dad9 172.16.1.110:8002@18002 master - 0 1584155029000 5 connected
6d3ac8cf0dc3c8400d2df8d0559fbe8bdce0c34d 172.16.1.110:8000@18000 master - 0 1584155029243 3 connected
cc3b16e067bf1ce9978c13870f0e1d538102a733 172.16.1.110:8001@18001 master - 0 1584155030000 0 connected
0f74b9e2d07e159fdc0fc1edffd3d0b305adc2fd 172.16.1.100:7000@17000 myself,master - 0 1584155028000 2 connected
c0fefd1442b3fa4e41eb6fba5073dcc1427ca812 172.16.1.100:7002@17002 master - 0 1584155030249 4 connected

All nodes in the cluster have now established links, so the node handshake is complete.
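The cluster nodes output is easy to parse in a script. Each line holds the following fields:
- the node ID
- ip:port@cluster-bus-port
- comma-separated flags
- the master's ID (- for a master)
- the ping-sent and pong-received timestamps
- the config epoch
- the link state
- any slot ranges the node serves

The Python sketch below turns that text into records. The dataclass and field names are my own, and the sample lines are copied from the output above. Slots shown in square brackets (slots being imported or migrated) are skipped.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterNode:
    node_id: str
    address: str                 # ip:port that clients connect to
    bus_port: int                # cluster bus port (client port + 10000 here)
    flags: list                  # e.g. ["myself", "master"]
    master_id: str               # "-" for a master, else the ID of its master
    config_epoch: int
    link_state: str              # "connected" or "disconnected"
    slots: list = field(default_factory=list)   # [(start, end), ...]


def parse_cluster_nodes(text: str) -> list:
    nodes = []
    for line in text.strip().splitlines():
        parts = line.split()
        address, _, bus = parts[1].partition("@")
        slots = []
        for item in parts[8:]:
            if item.startswith("["):      # importing/migrating slot: skipped
                continue
            start, _, end = item.partition("-")
            slots.append((int(start), int(end or start)))
        nodes.append(ClusterNode(
            node_id=parts[0],
            address=address,
            bus_port=int(bus) if bus else 0,
            flags=parts[2].split(","),
            master_id=parts[3],
            config_epoch=int(parts[6]),
            link_state=parts[7],
            slots=slots,
        ))
    return nodes


SAMPLE = """\
060a11f6985df66e4b9cf596355bbe334f843587 172.16.1.100:7001@17001 master - 0 1584155029000 1 connected
0f74b9e2d07e159fdc0fc1edffd3d0b305adc2fd 172.16.1.100:7000@17000 myself,master - 0 1584155028000 2 connected
"""
for node in parse_cluster_nodes(SAMPLE):
    print(node.address, node.bus_port, node.flags, node.slots)
```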

The nodes are linked, but the cluster is not online yet. Run the cluster info command to see the current state of the cluster:

172.16.1.100:7000> cluster info
cluster_state:fail #Indicates that the cluster is currently offline
cluster_slots_assigned:0 #0 means no slots have been assigned to any node yet
cluster_slots_ok:0
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:6
cluster_size:0
cluster_current_epoch:5
cluster_my_epoch:2
cluster_stats_messages_ping_sent:612
cluster_stats_messages_pong_sent:627
cluster_stats_messages_meet_sent:10
cluster_stats_messages_sent:1249

6. Assign slots

The cluster can come online only after all 16384 slots have been assigned to master nodes. The command for assigning slots is:

cluster addslots slot [slot ...]

Following the plan, use cluster addslots to divide the 16384 hash slots roughly equally among the three masters 7000, 7001 and 7002 by hand. Here {0..5461} is bash brace expansion, which passes every slot number in the range as a separate argument:

[root@redis01-server ~]# redis-cli -h 172.16.1.100 -p 7000 cluster addslots {0..5461}

OK

[root@redis01-server ~]# redis-cli -h 172.16.1.100 -p 7001 cluster addslots {5462..10922}

OK

[root@redis01-server ~]# redis-cli -h 172.16.1.100 -p 7002 cluster addslots {10923..16383}

OK

Once slot allocation is complete, check the cluster status again:

[root@redis01-server ~]# redis-cli -h 172.16.1.100 -p 7000 cluster info
cluster_state:ok
cluster_slots_assigned:16384
cluster_slots_ok:16384
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:6
cluster_size:3
cluster_current_epoch:5
cluster_my_epoch:2
cluster_stats_messages_ping_sent:2015
cluster_stats_messages_pong_sent:2308
cluster_stats_messages_meet_sent:10
cluster_stats_messages_sent:4333
cluster_stats_messages_ping_received:2308
cluster_stats_messages_pong_received:2007
cluster_stats_messages_received:4315

cluster_state:ok confirms that the Redis cluster is now online.
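cluster info replies with one field:value pair per line, which makes it easy to check from a script whether the cluster is online. Below is a minimal Python sketch with illustrative helper names. The "#" notes in the earlier transcript are the author's annotations, not part of Redis's reply, so the sample input leaves them out.

```python
def parse_cluster_info(text: str) -> dict:
    """Turn 'field:value' lines into a dict, converting integer values."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if ":" not in line:
            continue
        key, _, value = line.partition(":")
        info[key] = int(value) if value.lstrip("-").isdigit() else value
    return info


def cluster_is_online(info: dict) -> bool:
    """Treat the cluster as online when its state is ok and all 16384 slots are assigned."""
    return info.get("cluster_state") == "ok" and info.get("cluster_slots_assigned") == 16384


before = parse_cluster_info("cluster_state:fail\r\ncluster_slots_assigned:0\r\ncluster_known_nodes:6\r\n")
after = parse_cluster_info("cluster_state:ok\r\ncluster_slots_assigned:16384\r\ncluster_size:3\r\n")
print(cluster_is_online(before), cluster_is_online(after))
```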

To remove slot assignments, use the cluster delslots command, for example:

[root@redis01-server ~]# redis-cli -h 172.16.1.100 -p 7000 cluster delslots {10923..16383}

#View the slot allocation:
[root@redis01-server ~]# redis-cli -h 172.16.1.100 -p 7000 cluster nodes
060a11f6985df66e4b9cf596355bbe334f843587 172.16.1.100:7001@17001 master - 0 1584156388000 1 connected 5462-10922
2fb26d79f703f9fbd8841e4ee93ea88f7df5dad9 172.16.1.110:8002@18002 master - 0 1584156387060 5 connected
6d3ac8cf0dc3c8400d2df8d0559fbe8bdce0c34d 172.16.1.110:8000@18000 master - 0 1584156386056 3 connected
cc3b16e067bf1ce9978c13870f0e1d538102a733 172.16.1.110:8001@18001 master - 0 1584156387000 0 connected
0f74b9e2d07e159fdc0fc1edffd3d0b305adc2fd 172.16.1.100:7000@17000 myself,master - 0 1584156387000 2 connected 0-5461
c0fefd1442b3fa4e41eb6fba5073dcc1427ca812 172.16.1.100:7002@17002 master - 0 1584156386000 4 connected 10923-16383

Slots have now been assigned to the three masters, but the other three nodes are still unused. If a master fails now, the entire cluster goes down. For high availability, each remaining node must therefore be given a master to replicate as its slave.

7. Master-slave replication

The command for setting up replication in a cluster is shown below. It is run on the node that should become the slave:

cluster replicate node-id

1) Connect to any node in the cluster to get the node IDs of all the masters:

[root@redis01-server ~]# redis-cli -h 172.16.1.110 -p 8000 cluster nodes
2fb26d79f703f9fbd8841e4ee93ea88f7df5dad9 172.16.1.110:8002@18002 master - 0 1584157179543 5 connected
c0fefd1442b3fa4e41eb6fba5073dcc1427ca812 172.16.1.100:7002@17002 master - 0 1584157179946 4 connected 10923-16383
6d3ac8cf0dc3c8400d2df8d0559fbe8bdce0c34d 172.16.1.110:8000@18000 myself,master - 0 1584157179000 3 connected
060a11f6985df66e4b9cf596355bbe334f843587 172.16.1.100:7001@17001 master - 0 1584157180952 1 connected 5462-10922
cc3b16e067bf1ce9978c13870f0e1d538102a733 172.16.1.110:8001@18001 master - 0 1584157180549 0 connected
0f74b9e2d07e159fdc0fc1edffd3d0b305adc2fd 172.16.1.100:7000@17000 master - 0 1584157179544 2 connected 0-5461

2) Run the following three commands, each against one of the slave nodes, to specify its master. The cluster then sets up master-slave replication automatically:

[root@redis02-server ~]# redis-cli -h 172.16.1.110 -p 8000 cluster replicate 0f74b9e2d07e159fdc0fc1edffd3d0b305adc2fd
OK
[root@redis02-server ~]# redis-cli -h 172.16.1.110 -p 8001 cluster replicate 060a11f6985df66e4b9cf596355bbe334f843587
OK
[root@redis02-server ~]# redis-cli -h 172.16.1.110 -p 8002 cluster replicate c0fefd1442b3fa4e41eb6fba5073dcc1427ca812
OK

3) View replication status information for each node in the cluster:

[root@redis02-server ~]# redis-cli -h 172.16.1.110 -p 8000 cluster nodes
2fb26d79f703f9fbd8841e4ee93ea88f7df5dad9 172.16.1.110:8002@18002 slave c0fefd1442b3fa4e41eb6fba5073dcc1427ca812 0 1584157699631 5 connected
c0fefd1442b3fa4e41eb6fba5073dcc1427ca812 172.16.1.100:7002@17002 master - 0 1584157700437 4 connected 10923-16383
6d3ac8cf0dc3c8400d2df8d0559fbe8bdce0c34d 172.16.1.110:8000@18000 myself,slave 0f74b9e2d07e159fdc0fc1edffd3d0b305adc2fd 0 1584157699000 3 connected
060a11f6985df66e4b9cf596355bbe334f843587 172.16.1.100:7001@17001 master - 0 1584157701442 1 connected 5462-10922
cc3b16e067bf1ce9978c13870f0e1d538102a733 172.16.1.110:8001@18001 slave 060a11f6985df66e4b9cf596355bbe334f843587 0 1584157699932 1 connected
0f74b9e2d07e159fdc0fc1edffd3d0b305adc2fd 172.16.1.100:7000@17000 master - 0 1584157700000 2 connected 0-5461

Each slave now acts as the backup of its master, and the Redis cluster has been set up manually.

To summarize, the key steps for building a Redis cluster manually are:

1. Install Redis on each node.
2. Modify the configuration file to enable cluster mode.
3. Start the Redis service on each node.
4. Perform the node handshake.
5. Assign slots to the master nodes.
6. Set up replication between the master and slave nodes.

Recommended blog posts:

The difference between rdb and aof persistence for redis: https://www.cnblogs.com/shizhengwen/p/9283973.html

2. Automatic setup

Starting with Redis 3.0, the official cluster management tool redis-trib.rb ships in the src directory of the Redis source package. It wraps the cluster commands provided by Redis and is simple and convenient to use.

Environment description:

Host A: 172.16.1.100 (CentOS 7.3), running three instances on ports 7000, 7001 and 7002

Host B: 172.16.1.110 (CentOS 7.3), running three instances on ports 8000, 8001 and 8002

1. Follow steps 1-4 of the manual build above, up to starting the Redis instances. Make sure each Redis service is running properly, and stop the firewall.

2. Set up the cluster management tool

redis-trib.rb was written in Ruby by the Redis author, so you need to install a Ruby environment before using it.

Note that since Redis 5.0 this tool's functionality is built into redis-cli and is available through the --cluster option. For example, redis-cli --cluster create creates a cluster, taking --cluster-replicas in place of --replicas.

1) Install the Ruby environment and other dependencies (on both hosts)
[root@redis-01 ~]# yum -y install ruby ruby-devel rubygems rpm-build openssl openssl-devel
#Confirm the installed version:
[root@redis-01 ~]# ruby -v
ruby 2.0.0p648 (2015-12-16) [x86_64-linux]

2) Build clusters using redis-trib.rb script

[root@redis-01 ~]# ln -s /usr/local/redis/src/redis-trib.rb /usr/local/sbin/

[root@redis-01 ~]# redis-trib.rb create --replicas 1 172.16.1.100:7000 172.16.1.100:7001 172.16.1.100:7002 172.16.1.110:8000 172.16.1.110:8001 172.16.1.110:8002

Here we use the create command. --replicas 1 means one slave is created for each master, and the tool decides which instance replicates which master. The remaining arguments are the addresses of the instances. The first attempt fails with the following error:
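How could a tool choose masters and pair each one with a slave on another machine? The Python sketch below is a simplified illustration, not redis-trib's actual algorithm. It interleaves instances from different hosts when picking masters. It then gives each master a spare instance on a different host when one exists. For the six instances above, it selects 7000, 8000 and 7001 as masters, the same set as the successful redis-trib run later in this section. Its slave pairing differs from redis-trib's, however.

```python
from collections import defaultdict
from itertools import zip_longest


def host_of(instance: str) -> str:
    return instance.rsplit(":", 1)[0]


def plan(instances: list, replicas: int = 1):
    """Pick masters spread across hosts, then pair each with slaves on other hosts.

    Returns (masters, {slave: master}).
    """
    by_host = defaultdict(list)
    for inst in instances:
        by_host[host_of(inst)].append(inst)

    # Interleave hosts (A1, B1, A2, B2, ...) so the first picks come from different machines.
    interleaved = [inst for group in zip_longest(*by_host.values()) for inst in group if inst]
    master_count = len(instances) // (replicas + 1)
    masters, spare = interleaved[:master_count], interleaved[master_count:]

    pairs = {}
    for master in masters * replicas:           # round-robin over the masters
        # Prefer a spare instance on another host; otherwise take any spare one.
        others = [s for s in spare if host_of(s) != host_of(master)]
        choice = (others or spare)[0]
        spare.remove(choice)
        pairs[choice] = master
    return masters, pairs


nodes = ["172.16.1.100:7000", "172.16.1.100:7001", "172.16.1.100:7002",
         "172.16.1.110:8000", "172.16.1.110:8001", "172.16.1.110:8002"]
masters, pairs = plan(nodes)
print("masters:", masters)
for slave, master in pairs.items():
    print(f"{slave} replicates {master}")
```

Keeping a master and its slave on different machines is what lets a single host failure be survived.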

/usr/share/rubygems/rubygems/core_ext/kernel_require.rb:55:in `require': cannot load such file -- redis (LoadError)
from /usr/share/rubygems/rubygems/core_ext/kernel_require.rb:55:in `require'
from /usr/local/sbin/redis-trib.rb:25:in `<main>'

This error means the redis gem, the Ruby client library that redis-trib.rb uses to talk to Redis, is not installed. Download the gem from https://rubygems.org/gems/redis/ and choose a suitable version; here we use version 3.3.0:

[root@redis-01 ~]# gem install -l redis-3.3.0.gem
Successfully installed redis-3.3.0
Parsing documentation for redis-3.3.0
Installing ri documentation for redis-3.3.0
1 gem installed

Create the cluster again:

[root@redis-01 ~]# redis-trib.rb create --replicas 1 172.16.1.100:7000 172.16.1.100:7001 172.16.1.100:7002 172.16.1.110:8000 172.16.1.110:8001 172.16.1.110:8002
>>> Creating cluster
>>> Performing hash slots allocation on 6 nodes...
Using 3 masters:
172.16.1.100:7000
172.16.1.110:8000
172.16.1.100:7001
Adding replica 172.16.1.110:8002 to 172.16.1.100:7000
Adding replica 172.16.1.100:7002 to 172.16.1.110:8000
Adding replica 172.16.1.110:8001 to 172.16.1.100:7001
M: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots:0-5460 (5461 slots) master
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:10923-16383 (5461 slots) master
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   replicates 4debd0b5743826d203d1af777824eb1b83105d21
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots:5461-10922 (5462 slots) master
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
S: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   replicates 8613a457f8009aaf784df0ac6d7039034b16b6a6
Can I set the above configuration? (type 'yes' to accept): yes
>>> Nodes configuration updated
>>> Assign a different config epoch to each node
>>> Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join..
>>> Performing Cluster Check (using node 172.16.1.100:7000)
M: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
S: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots: (0 slots) slave
   replicates 8613a457f8009aaf784df0ac6d7039034b16b6a6
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   slots: (0 slots) slave
   replicates 4debd0b5743826d203d1af777824eb1b83105d21
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.

redis-trib shows the configuration it is about to apply and asks for confirmation. Type yes to accept. The configuration is then applied and the nodes join the cluster, that is, the instances start communicating with each other.

At this point the cluster is built. A single command does the job, which is very convenient.

3. Test the cluster

1) Test the state of the cluster:

[root@redis-01 ~]# redis-trib.rb check 172.16.1.100:7000
>>> Performing Cluster Check (using node 172.16.1.100:7000)
M: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
S: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots: (0 slots) slave
   replicates 8613a457f8009aaf784df0ac6d7039034b16b6a6
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   slots: (0 slots) slave
   replicates 4debd0b5743826d203d1af777824eb1b83105d21
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)

There are three masters (M): 7000, 8000 and 7001. There are also three slaves (S): 7002, 8001 and 8002. All nodes are connected.

2) Connect to the cluster

The cluster has been built. Redis Cluster is decentralized and every node is a peer, so you can connect to any node to get and set data. Let's test it:

[root@redis-01 ~]# redis-cli -h 172.16.1.100 -p 7000 -c    #-c enables cluster mode, in which redis-cli follows redirections

172.16.1.100:7000> set my_name linux    #set a value

-> Redirected to slot [12803] located at 172.16.1.100:7001

As mentioned earlier, Redis computes CRC16('my_name') % 16384 to decide which node a key goes to. Here the result is slot 12803. That slot lies in the range served by node 7001 (10923-16383), so the key is stored on 7001.

OK

172.16.1.100:7001> get my_name    #get the value

"linux"

Redis Cluster handles this very directly. After the key is written, redis-cli is redirected to node 7001 and stays connected there instead of returning to node 7000. Now let's connect to the slave node 8002:

[root@redis-01 ~]# redis-cli -h 172.16.1.110 -p 8002 -c
172.16.1.110:8002> get my_name
-> Redirected to slot [12803] located at 172.16.1.100:7001
"linux"

The slave also returns the value of my_name. The request is redirected to master 7001, which returns the value.
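The following Python sketch shows what redis-cli -c does here. In cluster mode, a node that does not own a key's slot replies with an error of the form MOVED <slot> <ip>:<port>. While a slot is being migrated, the error is ASK <slot> <ip>:<port> instead. The client reconnects to that address and retries, which is what the "Redirected to slot" lines show. The sketch only parses the reply; the type and function names are illustrative, and networking is left out.

```python
from typing import NamedTuple, Optional


class Redirect(NamedTuple):
    kind: str      # "MOVED" (slot served elsewhere) or "ASK" (slot mid-migration)
    slot: int
    host: str
    port: int


def parse_redirect(error: str) -> Optional[Redirect]:
    """Parse a cluster redirection error; return None for any other error text."""
    parts = error.split()
    if len(parts) != 3 or parts[0] not in ("MOVED", "ASK"):
        return None
    host, _, port = parts[2].rpartition(":")
    return Redirect(parts[0], int(parts[1]), host, int(port))


print(parse_redirect("MOVED 12803 172.16.1.100:7001"))
print(parse_redirect("ERR unknown command"))
```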

3) Test the high availability of the cluster

The cluster now has three masters (7000, 8000, 7001) that store and serve data. It also has three slaves (7002, 8001, 8002) that replicate their masters' data. Let's look at the AOF file on a slave. The key we just created was stored on 7001, and the slave of 7001 is 8001, so we view the AOF file of 8001:

[root@redis-02 ~]# cat appendonly-8001.aof
*2
$6
SELECT
$1
0
*3
$3
set
$7
my_name
$5
linux

The data synchronized from the master is indeed there.
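The AOF file stores each write command in the Redis protocol (RESP). Each command starts with *<number of arguments>. Each argument is then written as $<byte length> followed by the argument itself, and every element ends in \r\n. The cat output above shows exactly this, with each element on its own line. The minimal Python encoder below is illustrative, not Redis's own code; it reproduces the two commands from that file.

```python
def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings, the form used in the AOF."""
    out = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode("utf-8")
        out.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(out)


aof = encode_command("SELECT", "0") + encode_command("set", "my_name", "linux")
print(aof.decode())
```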

Note: by default, Redis writes its dump.rdb or appendonly.aof file to the directory from which it was started. To use a fixed location instead, change the dir setting in the configuration file:

# Change this relative path to an absolute path
dir ./

Next, we simulate a failure of one of the masters (7000):

[root@redis-01 ~]# ps -ef | grep redis
root 5598 1 0 01:02 ? 00:00:06 redis-server 172.16.1.100:7000 [cluster]
root 5603 1 0 01:02 ? 00:00:06 redis-server 172.16.1.100:7001 [cluster]
root 5608 1 0 01:02 ? 00:00:06 redis-server 172.16.1.100:7002 [cluster]
root 19735 2242 0 03:32 pts/0 00:00:00 grep --color=auto redis
[root@redis-01 ~]# kill 5598

Check the state of the cluster:

[root@redis-01 ~]# redis-trib.rb check 172.16.1.100:7000
[ERR] Sorry, can't connect to node 172.16.1.100:7000
[root@redis-01 ~]# redis-trib.rb check 172.16.1.100:7001
>>> Performing Cluster Check (using node 172.16.1.100:7001)
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   slots: (0 slots) slave
   replicates 4debd0b5743826d203d1af777824eb1b83105d21
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
M: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots:0-5460 (5461 slots) master
   0 additional replica(s)

The output shows that after master 7000 went down, its only slave, 8002, was promoted to master. The data on 7000 is not lost, because 8002 already holds a copy. Its slots (0-5460) are now served by 8002, and requests for that data are answered by 8002.

Suppose node 7000 went down for some reason. Once we fix the problem and bring it back into the cluster, what role will it play?

[root@redis-01 ~]# redis-server /usr/local/redis-cluster/7000/redis.conf
[root@redis-01 ~]# ps -ef | grep redis
root 5603 1 0 01:02 ? 00:00:08 redis-server 172.16.1.100:7001 [cluster]
root 5608 1 0 01:02 ? 00:00:08 redis-server 172.16.1.100:7002 [cluster]
root 19771 1 0 03:50 ? 00:00:00 redis-server 172.16.1.100:7000 [cluster]
root 19789 2242 0 03:51 pts/0 00:00:00 grep --color=auto redis
#View the status of the cluster:
[root@redis-01 ~]# redis-trib.rb check 172.16.1.100:7001
>>> Performing Cluster Check (using node 172.16.1.100:7001)
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   slots: (0 slots) slave
   replicates 4debd0b5743826d203d1af777824eb1b83105d21
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
M: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
S: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots: (0 slots) slave
   replicates 3cc268dfbb918a99159900643b318ec87ba03ad9

Node 7000 has rejoined the cluster, but now as a slave of 8002.

4. Add new nodes to the cluster

A new node can be added in two ways: 1) as a master, or 2) as a slave of an existing master. Let's practice each in turn.

1. Join as a master

1) Create a new node, 7003, to join the cluster as a new master:

[root@redis-01 ~]# mkdir /usr/local/redis-cluster/7003
[root@redis-01 ~]# cd /usr/local/redis-cluster/
[root@redis-01 redis-cluster]# cp 7000/redis.conf 7003/
[root@redis-01 redis-cluster]# sed -i "s/7000/7003/g" 7003/redis.conf
#Start the 7003 Redis service:
[root@redis-01 ~]# redis-server /usr/local/redis-cluster/7003/redis.conf
[root@redis-01 ~]# ps -ef | grep redis
root 5603 1 0 01:02 ? 00:00:09 redis-server 172.16.1.100:7001 [cluster]
root 5608 1 0 01:02 ? 00:00:09 redis-server 172.16.1.100:7002 [cluster]
root 19771 1 0 03:50 ? 00:00:00 redis-server 172.16.1.100:7000 [cluster]
root 19842 1 0 04:06 ? 00:00:00 redis-server 172.16.1.100:7003 [cluster]
root 19847 2242 0 04:06 pts/0 00:00:00 grep --color=auto redis

2) Add node 7003 to the cluster

[root@redis-01 ~]# redis-trib.rb add-node 172.16.1.100:7003 172.16
>>> Adding node 172.16.1.100:7003 to cluster 172.16.1.100:7000
>>> Performing Cluster Check (using node 172.16.1.100:7000)
S: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots: (0 slots) slave
   replicates 3cc268dfbb918a99159900643b318ec87ba03ad9
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
M: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   slots: (0 slots) slave
   replicates 4debd0b5743826d203d1af777824eb1b83105d21
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Send CLUSTER MEET to node 172.16.1.100:7003 to make it join the cluster.
[OK] New node added correctly.

This shows that the new node has connected and joined the cluster. Let's check again:

[root@redis-01 ~]# redis-trib.rb check 172.16.1.100:7003
>>> Performing Cluster Check (using node 172.16.1.100:7003)
M: edd51c8389ba069d49fe54c24c535716ce06e62b 172.16.1.100:7003
   slots: (0 slots) master
   0 additional replica(s)
M: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   slots: (0 slots) slave
   replicates 4debd0b5743826d203d1af777824eb1b83105d21
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
S: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots: (0 slots) slave
   replicates 3cc268dfbb918a99159900643b318ec87ba03ad9
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)

There are now seven nodes in the cluster, and 7003 is a master. However, 7003 owns 0 slots: although it is a master, no slots have been assigned to it yet, so it does not serve any data. We therefore need to reshard the cluster manually and migrate slots to it.

3) Migrate slots

# This command migrates slots. The trailing 172.16.1.100:7000 can be any node in the cluster; redis-trib uses it to reach the cluster:
[root@redis-01 ~]# redis-trib.rb reshard 172.16.1.100:7000
How many slots do you want to move (from 1 to 16384)?
# First it asks how many slots to move to 7003. With four masters, 16384 / 4 = 4096, so moving 4096 slots to 7003 balances the load:
How many slots do you want to move (from 1 to 16384)? 4096
What is the receiving node ID?
# Next it asks for the ID of the receiving node; 7003's ID can be read from the check output above:
What is the receiving node ID? edd51c8389ba069d49fe54c24c535716ce06e62b
Please enter all the source node IDs.
  Type 'all' to use all the nodes as source nodes for the hash slots.
  Type 'done' once you entered all the source nodes IDs.
Source node #1:

redis-trib then asks for the source nodes, that is, the nodes from which the 4096 hash slots will be taken and moved to 7003. If we do not need to take the slots from particular nodes, we can enter all: every master in the cluster then becomes a source node, and redis-trib takes some hash slots from each of them, 4096 in total, and moves them to 7003. So we enter all:

Source node #1:all
# redis-trib then shows the proposed plan and asks for confirmation before migrating anything:
Do you want to proceed with the proposed reshard plan (yes/no)? yes
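The proposed plan spreads the 4096 slots across the source masters. The Python sketch below is an approximation of how such a plan can be computed, not redis-trib's actual code: it assumes each source gives up slots in proportion to how many it owns (the largest source rounding up, the others rounding down) and always gives up its lowest-numbered slots first. Under those assumptions it reproduces the ranges 7003 receives in the check that follows. The function names, and the port labels standing in for node IDs, are illustrative.

```python
import math
from typing import Dict, List, Tuple

TOTAL_SLOTS = 16384


def plan_reshard(sources: Dict[str, List[int]], num_slots: int) -> List[Tuple[str, int]]:
    """Pick (source, slot) pairs to move, in proportion to each source's slot count."""
    # Biggest source first: it absorbs the rounding surplus via ceil().
    ordered = sorted(sources.items(), key=lambda item: len(item[1]), reverse=True)
    total = sum(len(slots) for _, slots in ordered)
    if total == 0:
        return []
    moved: List[Tuple[str, int]] = []
    for i, (node, slots) in enumerate(ordered):
        share = num_slots / total * len(slots)
        count = math.ceil(share) if i == 0 else math.floor(share)
        # Each source gives up its lowest-numbered slots first.
        for slot in sorted(slots)[:count]:
            if len(moved) < num_slots:
                moved.append((node, slot))
    return moved


def to_ranges(slots: List[int]) -> str:
    """Compress slot numbers into redis-trib style ranges, e.g. '0-1364,5461-6826'."""
    if not slots:
        return ""
    ordered = sorted(slots)
    parts: List[str] = []
    start = prev = ordered[0]
    for slot in ordered[1:]:
        if slot != prev + 1:
            parts.append(f"{start}-{prev}" if start != prev else str(start))
            start = slot
        prev = slot
    parts.append(f"{start}-{prev}" if start != prev else str(start))
    return ",".join(parts)


# Slot layout of the three original masters, taken from the check output above.
masters = {
    "8002": list(range(0, 5461)),       # 0-5460
    "8000": list(range(5461, 10923)),   # 5461-10922
    "7001": list(range(10923, 16384)),  # 10923-16383
}
plan = plan_reshard(masters, TOTAL_SLOTS // 4)  # 16384 / 4 masters = 4096
received = [slot for _, slot in plan]
print(len(received), to_ranges(received))  # 4096 0-1364,5461-6826,10923-12287
```

Taking the lowest-numbered slots from each source is why 7003 ends up with three separate intervals instead of one contiguous block.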

Once yes is entered, redis-trib starts the actual resharding, moving the selected hash slots from the source nodes to 7003 one by one. After the migration is complete, let's check:

[root@redis-01 ~]# redis-trib.rb check 172.16.1.100:7000
>>> Performing Cluster Check (using node 172.16.1.100:7000)
S: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots: (0 slots) slave
   replicates 3cc268dfbb918a99159900643b318ec87ba03ad9
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
M: edd51c8389ba069d49fe54c24c535716ce06e62b 172.16.1.100:7003
   slots:0-1364,5461-6826,10923-12287 (4096 slots) master
   0 additional replica(s)
M: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots:1365-5460 (4096 slots) master
   1 additional replica(s)
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:12288-16383 (4096 slots) master
   1 additional replica(s)
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   slots: (0 slots) slave
   replicates 4debd0b5743826d203d1af777824eb1b83105d21
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots:6827-10922 (4096 slots) master
   1 additional replica(s)

Note the line for 7003: "0-1364,5461-6826,10923-12287 (4096 slots)".
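To double-check a slot field like this without counting by hand, it can be expanded and summed. The sketch below is a hypothetical helper, not part of redis-trib; it assumes the field format shown in the check output (comma-separated single slots or first-last ranges) and also verifies, as "[OK] All 16384 slots covered." does, that the four masters together own every slot exactly once.

```python
from typing import Dict, List

TOTAL_SLOTS = 16384


def parse_slots(field: str) -> List[int]:
    """Expand a slot field such as '0-1364,5461-6826' into individual slot numbers."""
    slots: List[int] = []
    for part in field.split(","):
        part = part.strip()
        if not part:  # a master with no slots has an empty field
            continue
        if "-" in part:
            lo, hi = part.split("-")
            slots.extend(range(int(lo), int(hi) + 1))
        else:
            slots.append(int(part))
    return slots


def covers_all_slots(masters: Dict[str, str]) -> bool:
    """True if every slot 0..16383 is owned by exactly one master."""
    owner: Dict[int, str] = {}
    for node, field in masters.items():
        for slot in parse_slots(field):
            if not 0 <= slot < TOTAL_SLOTS or slot in owner:
                return False  # out of range, or claimed by two masters
            owner[slot] = node
    return len(owner) == TOTAL_SLOTS


# Master slot fields copied from the check output above.
after_reshard = {
    "172.16.1.100:7003": "0-1364,5461-6826,10923-12287",
    "172.16.1.110:8002": "1365-5460",
    "172.16.1.100:7001": "12288-16383",
    "172.16.1.110:8000": "6827-10922",
}
print(len(parse_slots(after_reshard["172.16.1.100:7003"])))  # 4096
print(covers_all_slots(after_reshard))  # True
```

The three intervals contribute 1365 + 1366 + 1365 = 4096 slots, a quarter of the 16384 total.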

These slots were migrated to 7003 from the other masters. Each master handed over part of its range rather than one contiguous block being moved, which is why 7003 now owns three separate intervals. Let's verify that requests sent through 7003 are served:

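[root@redis-01 ~]# redis-cli -h 172.16.1.100 -p 7003 -c
172.16.1.100:7003> get my_name
-> Redirected to slot [12803] located at 172.16.1.100:7001
"linux"

Connected through 7003, the client is redirected to 7001, which owns slot 12803 (its range is 12288-16383), and gets the value back. This shows that 7003 is working properly as part of the cluster.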
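The slot in the redirect is computed from the key with the CRC16(key) % 16384 rule, and the client is then sent to whichever master owns that slot. Below is a minimal Python sketch of both steps, assuming the CRC16-XMODEM variant (polynomial 0x1021, initial value 0) and the {hash tag} rule described in the Redis Cluster specification. The slot table is copied from the check output after the reshard; the helper names are illustrative.

```python
from typing import List, Tuple

TOTAL_SLOTS = 16384


def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """CRC16(key) % 16384, hashing only the {hash tag} part when one is present."""
    data = key.encode("utf-8")
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end > start + 1:  # non-empty tag between the first '{' and the next '}'
            data = data[start + 1:end]
    return crc16_xmodem(data) % TOTAL_SLOTS


# Slot ranges after the reshard, taken from the check output above.
SLOT_RANGES: List[Tuple[int, int, str]] = [
    (0, 1364, "172.16.1.100:7003"),
    (1365, 5460, "172.16.1.110:8002"),
    (5461, 6826, "172.16.1.100:7003"),
    (6827, 10922, "172.16.1.110:8000"),
    (10923, 12287, "172.16.1.100:7003"),
    (12288, 16383, "172.16.1.100:7001"),
]


def owner_of(slot: int) -> str:
    """Return the master whose range contains `slot`."""
    for first, last, node in SLOT_RANGES:
        if first <= slot <= last:
            return node
    raise KeyError(slot)


print(owner_of(12803))      # 172.16.1.100:7001, matching the redirect above
print(key_slot("my_name"))  # compare with the slot number redis-cli reports
```

When a node does not own the slot it answers with a MOVED redirect, and redis-cli in -c mode follows it, which is what the "Redirected to slot" line shows.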

2, Add a slave node

1) Create a new node, 8003, to serve as the slave of 7003 (the setup steps are omitted here). After starting the Redis service on 8003, add it to the cluster as a slave:

# Use add-node --slave --master-id. --master-id is the ID of the master the new slave should replicate, 172.16.1.110:8003 is the new slave to add, and the last argument can be any node already in the cluster.
[root@redis-02 ~]# redis-trib.rb add-node --slave --master-id edd51c8389ba069d49fe54c24c535716ce06e62b 172.16.1.110:8003 172.16.1.110:8000
>>> Adding node 172.16.1.110:8003 to cluster 172.16.1.110:8000
>>> Performing Cluster Check (using node 172.16.1.110:8000)
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots:6827-10922 (4096 slots) master
   1 additional replica(s)
M: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots:1365-5460 (4096 slots) master
   1 additional replica(s)
M: edd51c8389ba069d49fe54c24c535716ce06e62b 172.16.1.100:7003
   slots:0-1364,5461-6826,10923-12287 (4096 slots) master
   0 additional replica(s)
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   slots: (0 slots) slave
   replicates 4debd0b5743826d203d1af777824eb1b83105d21
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:12288-16383 (4096 slots) master
   1 additional replica(s)
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
S: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots: (0 slots) slave
   replicates 3cc268dfbb918a99159900643b318ec87ba03ad9
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Send CLUSTER MEET to node 172.16.1.110:8003 to make it join the cluster.
Waiting for the cluster to join.
>>> Configure node as replica of 172.16.1.100:7003.
[OK] New node added correctly.

The output shows that 8003 was configured as a replica of 7003 and added successfully. Let's examine the status of each node in the cluster:

[root@redis-02 ~]# redis-trib.rb check 172.16.1.110:8003
>>> Performing Cluster Check (using node 172.16.1.110:8003)
S: 4ffabff843d32364fc506f3445980f5a04aa4292 172.16.1.110:8003
   slots: (0 slots) slave
   replicates edd51c8389ba069d49fe54c24c535716ce06e62b
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots:6827-10922 (4096 slots) master
   1 additional replica(s)
S: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots: (0 slots) slave
   replicates 3cc268dfbb918a99159900643b318ec87ba03ad9
M: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots:1365-5460 (4096 slots) master
   1 additional replica(s)
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:12288-16383 (4096 slots) master
   1 additional replica(s)
M: edd51c8389ba069d49fe54c24c535716ce06e62b 172.16.1.100:7003
   slots:0-1364,5461-6826,10923-12287 (4096 slots) master
   1 additional replica(s)
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   slots: (0 slots) slave
   replicates 4debd0b5743826d203d1af777824eb1b83105d21
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c

# Verify that the slave node can serve requests in the cluster:
[root@redis-02 ~]# redis-cli -h 172.16.1.110 -p 8003 -c
172.16.1.110:8003> get my_name
-> Redirected to slot [12803] located at 172.16.1.100:7001
"linux"

This shows that 8003 joined as a slave successfully and is working.

5, Remove Nodes from Cluster

Nodes that can be added will sooner or later need to be removed as well. Redis Cluster supports removing nodes, again with redis-trib.rb.

Syntax: redis-trib.rb del-node ip:port `<node-id>`

1, Remove a master node

# Unlike adding a node, removing one requires its node ID. Let's try to remove the 8000 master:
[root@redis-02 ~]# redis-trib.rb del-node 172.16.1.110:8000 4debd0b5743826d203d1af777824eb1b83105d21
>>> Removing node 4debd0b5743826d203d1af777824eb1b83105d21 from cluster 172.16.1.110:8000
[ERR] Node 172.16.1.110:8000 is not empty! Reshard data away and try again.

This fails because the 8000 node still holds data, so it cannot be removed. Its data must first be moved elsewhere, that is, the cluster has to be resharded again, in the same way as after adding the new node above:

[root@redis-01 ~]# redis-trib.rb reshard 172.16.1.100:7000
# How many slots to move? 8000 holds 4096 slots, so enter 4096:
How many slots do you want to move (from 1 to 16384)? 4096
# Then it asks which node should receive them; we choose the 8002 master:
What is the receiving node ID? 3cc268dfbb918a99159900643b318ec87ba03ad9
Please enter all the source node IDs.
  Type 'all' to use all the nodes as source nodes for the hash slots.
  Type 'done' once you entered all the source nodes IDs.
Source node #1:
# This is the key step: which node should the slots come from? Since we are removing 8000, enter 8000's node ID, then done to finish the list:
Source node #1:4debd0b5743826d203d1af777824eb1b83105d21
Source node #2:done
# Confirm the plan:
Do you want to proceed with the proposed reshard plan (yes/no)? yes

OK, the data that was on the 8000 master has now been migrated.
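The rule that blocked the first del-node attempt can be modeled in a few lines. In this hypothetical sketch (the names and the dictionary layout are assumptions, not redis-trib internals), a node may be deleted only when it owns no slots, and draining 8000 into 8002 reproduces the 8192-slot range that the next check reports for 8002. A slave, which owns no slots, passes the same check immediately.

```python
from typing import Dict, List


def to_ranges(slots: List[int]) -> str:
    """Compress slot numbers into ranges, e.g. '1365-5460,6827-10922'."""
    if not slots:
        return ""
    ordered = sorted(slots)
    parts: List[str] = []
    start = prev = ordered[0]
    for slot in ordered[1:]:
        if slot != prev + 1:
            parts.append(f"{start}-{prev}" if start != prev else str(start))
            start = slot
        prev = slot
    parts.append(f"{start}-{prev}" if start != prev else str(start))
    return ",".join(parts)


def can_delete(slot_map: Dict[str, List[int]], node: str) -> bool:
    """Model of the del-node precondition: a node that still owns slots is 'not empty'."""
    return not slot_map.get(node)


def drain(slot_map: Dict[str, List[int]], source: str, target: str) -> None:
    """Move every slot owned by `source` to `target`, in place."""
    slot_map[target] = sorted(slot_map[target] + slot_map[source])
    slot_map[source] = []


slots = {
    "8000": list(range(6827, 10923)),  # 6827-10922, 4096 slots
    "8002": list(range(1365, 5461)),   # 1365-5460, 4096 slots
}
print(can_delete(slots, "8000"))  # False
drain(slots, "8000", "8002")
print(can_delete(slots, "8000"), len(slots["8002"]), to_ranges(slots["8002"]))
# True 8192 1365-5460,6827-10922
```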

Let's check the status of the nodes:

[root@redis-01 ~]# redis-trib.rb check 172.16.1.100:7000
>>> Performing Cluster Check (using node 172.16.1.100:7000)
S: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots: (0 slots) slave
   replicates 3cc268dfbb918a99159900643b318ec87ba03ad9
S: 4ffabff843d32364fc506f3445980f5a04aa4292 172.16.1.110:8003
   slots: (0 slots) slave
   replicates edd51c8389ba069d49fe54c24c535716ce06e62b
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
M: edd51c8389ba069d49fe54c24c535716ce06e62b 172.16.1.100:7003
   slots:0-1364,5461-6826,10923-12287 (4096 slots) master
   1 additional replica(s)
M: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots:1365-5460,6827-10922 (8192 slots) master
   2 additional replica(s)
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:12288-16383 (4096 slots) master
   1 additional replica(s)
S: d161ab43746405c2b517e3ffc98321956431191c 172.16.1.100:7002
   slots: (0 slots) slave
   replicates 3cc268dfbb918a99159900643b318ec87ba03ad9
M: 4debd0b5743826d203d1af777824eb1b83105d21 172.16.1.110:8000
   slots: (0 slots) master
   0 additional replica(s)

The 8000 node now owns 0 slots; its former slots have been migrated to 8002, which now owns 8192 slots. The output also shows that 7002, previously the slave of 8000, now replicates 8002, which therefore reports 2 additional replicas.

Now remove the 8000 master:

[root@redis-02 ~]# redis-trib.rb del-node 172.16.1.110:8000 4debd0b5743826d203d1af777824eb1b83105d21
>>> Removing node 4debd0b5743826d203d1af777824eb1b83105d21 from cluster 172.16.1.110:8000
>>> Sending CLUSTER FORGET messages to the cluster...
>>> SHUTDOWN the node.

[root@redis-02 ~]# redis-trib.rb check 172.16.1.110:8000
[ERR] Sorry, can't connect to node 172.16.1.110:8000

OK, the master has been removed. The check can no longer connect to 8000 because del-node also shut the node down.

2, Remove slave nodes

Removing a slave node is much simpler, because a slave owns no slots and no data has to be migrated. Let's remove the 7002 slave:

[root@redis-02 ~]# redis-trib.rb del-node 172.16.1.100:7002 d161ab43746405c2b517e3ffc98321956431191c
>>> Removing node d161ab43746405c2b517e3ffc98321956431191c from cluster 172.16.1.100:7002
>>> Sending CLUSTER FORGET messages to the cluster...
>>> SHUTDOWN the node.

This shows that the 7002 slave has been removed from the cluster.

# Check the current status of the cluster:
[root@redis-02 ~]# redis-trib.rb check 172.16.1.100:7000
>>> Performing Cluster Check (using node 172.16.1.100:7000)
S: 8613a457f8009aaf784df0ac6d7039034b16b6a6 172.16.1.100:7000
   slots: (0 slots) slave
   replicates 3cc268dfbb918a99159900643b318ec87ba03ad9
S: 4ffabff843d32364fc506f3445980f5a04aa4292 172.16.1.110:8003
   slots: (0 slots) slave
   replicates edd51c8389ba069d49fe54c24c535716ce06e62b
S: 948421116dd1859002c78a2df0b9845bdc7db631 172.16.1.110:8001
   slots: (0 slots) slave
   replicates 5721b77733b1809449c6fc5806f38f1cacb1de8c
M: edd51c8389ba069d49fe54c24c535716ce06e62b 172.16.1.100:7003
   slots:0-1364,5461-6826,10923-12287 (4096 slots) master
   1 additional replica(s)
M: 3cc268dfbb918a99159900643b318ec87ba03ad9 172.16.1.110:8002
   slots:1365-5460,6827-10922 (8192 slots) master
   1 additional replica(s)
M: 5721b77733b1809449c6fc5806f38f1cacb1de8c 172.16.1.100:7001
   slots:12288-16383 (4096 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.

The 7002 slave no longer appears, and all 16384 slots are still covered: the removal was successful.
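Before removing a slave it is worth confirming that its master keeps at least one other replica, because a master without a replica has nothing to fail over to. The sketch below is a hypothetical pre-check, not a redis-trib feature. It assumes the topology has already been read from the check output, with ports standing in for node IDs, and it counts the replicas of each master.

```python
from typing import Dict, List, Optional


def replicas_per_master(nodes: Dict[str, Optional[str]]) -> Dict[str, int]:
    """Count replicas per master; `nodes` maps each node to the master it replicates (None for a master)."""
    counts = {name: 0 for name, master in nodes.items() if master is None}
    for name, master in nodes.items():
        if master is not None:
            counts[master] = counts.get(master, 0) + 1
    return counts


def masters_without_replicas(nodes: Dict[str, Optional[str]]) -> List[str]:
    """Masters that would have no replica to fail over to."""
    return sorted(m for m, n in replicas_per_master(nodes).items() if n == 0)


# Topology from the final check output (ports stand in for node IDs).
final = {
    "7003": None, "8002": None, "7001": None,
    "8003": "7003", "7000": "8002", "8001": "7001",
}
print(replicas_per_master(final))       # {'7003': 1, '8002': 1, '7001': 1}
print(masters_without_replicas(final))  # []

# What-if: removing 8003 as well would leave 7003 without a replica.
without_8003 = {k: v for k, v in final.items() if k != "8003"}
print(masters_without_replicas(without_8003))  # ['7003']
```

Removing 7002 was safe in this walkthrough because 8002 still had 7000 as a replica.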