- Environment preparation

- Install Docker and Docker Compose (https://docs.docker.com/compose/install/)

#Install Docker
curl -sSL https://get.daocloud.io/docker | sh
#Install Docker Compose
curl -L https://get.daocloud.io/docker/compose/releases/download/1.23.2/docker-compose-`uname -s`-`uname -m` \
  > /usr/local/bin/docker-compose
chmod +x /usr/local/bin/docker-compose
#Check the installation
docker-compose -v
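docker-compose -v only confirms one binary. As a small sketch (check_tools is a hypothetical helper, not part of Docker), the function below reports whether each required command is on the PATH and returns non-zero if any of them is missing:

```shell
# Hypothetical helper: report where each required command is installed
# and return 1 if any of them cannot be found on the PATH.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool ($(command -v "$tool"))"
    else
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: check_tools docker docker-compose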

- Create the Elasticsearch data and log directories

#Create the data and log directories (three nodes are deployed here)
mkdir -p /root/app/elasticsearch/data/{node0,node1,node2}
mkdir -p /root/app/elasticsearch/logs/{node0,node1,node2}
cd /root/app/elasticsearch
#Permissions: make the directories writable from inside the containers
chmod -R 0777 data/* && chmod -R 0777 logs/*
#Raise vm.max_map_count; Elasticsearch's bootstrap check fails below 262144
echo vm.max_map_count=262144 >> /etc/sysctl.conf
sysctl -p

- Create the docker compose orchestration file

Create a docker-compose.yml file in the newly created directory (/root/app/elasticsearch), using the elasticsearch:7.4.0 image:

version: '2.2'
services:
  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.4.0
    container_name: es01
    environment:
      - node.name=es01
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./data/node0:/usr/share/elasticsearch/data
      - ./logs/node0:/usr/share/elasticsearch/logs
    ports:
      - 9200:9200
    networks:
      - elastic
  es02:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.4.0
    container_name: es02
    environment:
      - node.name=es02
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./data/node1:/usr/share/elasticsearch/data
      - ./logs/node1:/usr/share/elasticsearch/logs
    networks:
      - elastic
  es03:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.4.0
    container_name: es03
    environment:
      - node.name=es03
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./data/node2:/usr/share/elasticsearch/data
      - ./logs/node2:/usr/share/elasticsearch/logs
    networks:
      - elastic
networks:
  elastic:
    driver: bridge

Parameter description:

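- Cluster name: cluster.name=es-docker-cluster

- Node name: node.name=es01

- Whether the node is master-eligible: node.master=true (not set in the file above; it defaults to true)

- Whether the node stores data: node.data=true (not set in the file above; it defaults to true)

- Lock the process memory so it cannot be swapped out, which improves performance: bootstrap.memory_lock=true (this relies on the memlock ulimits set above)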
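To confirm that bootstrap.memory_lock actually took effect, the Elasticsearch documentation suggests querying GET _nodes?filter_path=**.mlockall. The sketch below summarises that JSON response; mlockall_summary is a hypothetical helper name, and it only counts the "mlockall" flags it finds in the text:

```shell
# Hypothetical helper: read the JSON from GET _nodes?filter_path=**.mlockall
# on stdin, print how many nodes have memory locked, and return 1 if any
# node reports "mlockall": false.
mlockall_summary() {
  json=$(cat)
  locked=$(( $(printf '%s' "$json" | grep -o '"mlockall" *: *true' | wc -l) ))
  unlocked=$(( $(printf '%s' "$json" | grep -o '"mlockall" *: *false' | wc -l) ))
  echo "locked=$locked unlocked=$unlocked"
  [ "$unlocked" -eq 0 ]
}
```

Usage (the URL is quoted so the shell does not expand ** or ?): curl -s "localhost:9200/_nodes?filter_path=**.mlockall" | mlockall_summary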

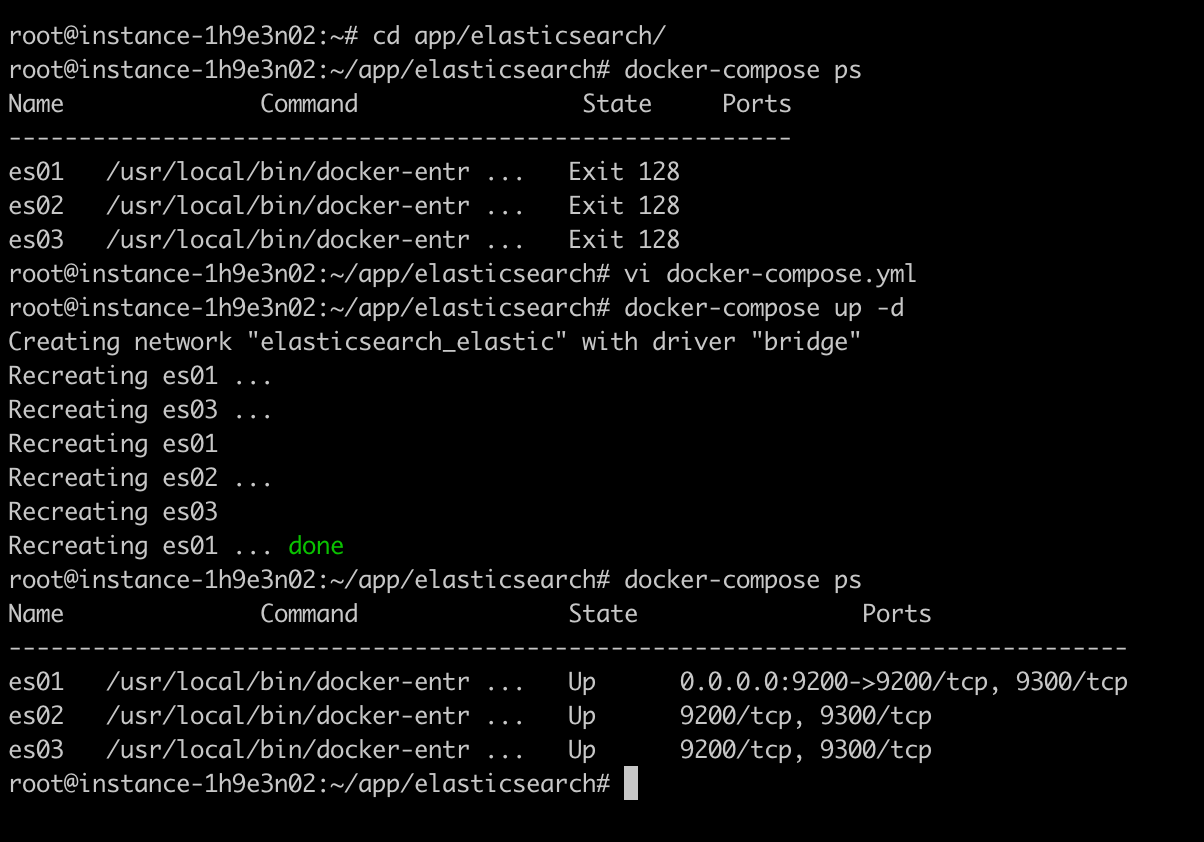

- Create and start the services

#Start the services in the background
docker-compose up -d
#Check their status
docker-compose ps
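docker-compose ps only shows that the containers are running, not that the three nodes have formed a cluster. The cluster health API accepts wait_for_status and timeout parameters, so a minimal sketch can block until the cluster is green (cluster_status and wait_for_green are hypothetical names; the sed expression assumes the default compact JSON output):

```shell
# Hypothetical helper: extract the "status" field (green/yellow/red)
# from _cluster/health JSON read on stdin.
cluster_status() {
  sed -n 's/.*"status" *: *"\([a-z]*\)".*/\1/p'
}

# Hypothetical helper: ask Elasticsearch to wait until the cluster is green
# (or until the timeout, default 60s, expires) and print the resulting status.
wait_for_green() {
  curl -s "http://localhost:9200/_cluster/health?wait_for_status=green&timeout=${1:-60s}" | cluster_status
}
```

Usage: wait_for_green 120s. If the timeout expires first, the printed status is whatever the cluster reached (yellow or red); empty output usually means the node is not accepting connections yet, so retry after a few seconds.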

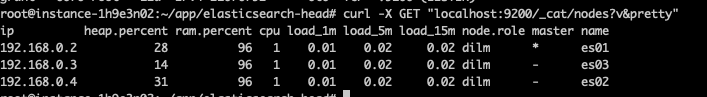
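If a container exits shortly after docker-compose up, check its log with docker-compose logs es01. A common cause is the bootstrap check error "max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]", which means the sysctl step above did not take effect. The sketch below (check_max_map_count is a hypothetical name) compares the kernel's current value with that minimum:

```shell
# Hypothetical helper: compare vm.max_map_count with the 262144 minimum
# required by Elasticsearch's bootstrap checks. The value is read from
# /proc by default; another file can be passed for testing.
check_max_map_count() {
  file=${1:-/proc/sys/vm/max_map_count}
  required=262144
  current=$(cat "$file" 2>/dev/null) || { echo "cannot read $file" >&2; return 2; }
  if [ "$current" -ge "$required" ]; then
    echo "vm.max_map_count=$current (ok)"
  else
    echo "vm.max_map_count=$current is below $required" >&2
    return 1
  fi
}
```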

You can run curl -X GET "localhost:9200/_cat/nodes?v&pretty" to view the nodes in the cluster.
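The _cat/nodes?v output starts with a header row, and its master column marks the elected master with *. Assuming that layout, a small awk-based sketch (summarize_nodes is a hypothetical name) counts the nodes and names the master, locating the columns by header name rather than by position:

```shell
# Hypothetical helper: summarise `_cat/nodes?v` output read from stdin.
# Prints the number of nodes and the name of the elected master ("*").
summarize_nodes() {
  awk '
    NR == 1 {
      # Find the "master" and "name" columns from the header row.
      for (i = 1; i <= NF; i++) { if ($i == "master") m = i; if ($i == "name") n = i }
      next
    }
    NF > 0 {
      count++
      if ($m == "*") master = $n
    }
    END { printf "nodes=%d master=%s\n", count, master }
  '
}
```

Usage: curl -s "localhost:9200/_cat/nodes?v" | summarize_nodes. With all three containers joined, the count should be 3.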

- Install the Head plug-in (https://github.com/mobz/elasticsearch-head)

git clone https://github.com/mobz/elasticsearch-head.git

- Download and install Node.js (https://nodejs.org/en/download)

After installation, run node -v in a terminal (the cmd window on Windows) to print the Node.js version and confirm that the installation succeeded.

- Install grunt

npm install -g grunt-cli

This uses npm, the Node.js package manager, to install grunt as a global command. Grunt is a Node.js-based project build tool.

- Run npm install in the elasticsearch-head directory (without it, the grunt server command fails with an error)

npm install

- Start the elasticsearch-head service

cd ~/app/elasticsearch-head
grunt server
#To start it in the background instead, run:
nohup grunt server & exit

- Stop the elasticsearch-head service

If elasticsearch-head is running in the background, stop it by finding and killing the process that listens on its port (9100):

#Find the process (PID) listening on the port
lsof -i:9100
#Kill that process (replace 6076 with the PID reported by lsof)
kill -9 6076
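Instead of looking the PID up by port afterwards, the PID can be recorded when the service starts. A minimal sketch follows; start_bg, stop_bg and the head.pid/head.log file names are hypothetical. It sends SIGTERM rather than kill -9, which gives the process a chance to exit cleanly:

```shell
# Hypothetical helper: start a command detached with nohup, send its output
# to a log file, and save its PID to a file for later.
start_bg() {
  pidfile=$1; logfile=$2; shift 2
  nohup "$@" > "$logfile" 2>&1 &
  echo $! > "$pidfile"
}

# Hypothetical helper: stop the process recorded in a PID file with SIGTERM,
# then remove the file. Returns 1 if there is no PID file.
stop_bg() {
  pidfile=$1
  [ -f "$pidfile" ] || return 1
  kill "$(cat "$pidfile")" 2>/dev/null
  rc=$?
  rm -f "$pidfile"
  return "$rc"
}
```

Usage: in ~/app/elasticsearch-head, run start_bg head.pid head.log grunt server, and later stop_bg head.pid.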

Note: if startup reports the following errors:

>> Local Npm module "grunt-contrib-clean" not found. Is it installed?
>> Local Npm module "grunt-contrib-concat" not found. Is it installed?
>> Local Npm module "grunt-contrib-watch" not found. Is it installed?
>> Local Npm module "grunt-contrib-connect" not found. Is it installed?
>> Local Npm module "grunt-contrib-copy" not found. Is it installed?
>> Local Npm module "grunt-contrib-jasmine" not found. Is it installed?
Warning: Task "connect:server" not found. Use --force to continue.

Run the following commands in the elasticsearch-head directory:

npm install grunt-contrib-clean --registry=https://registry.npm.taobao.org
npm install grunt-contrib-concat --registry=https://registry.npm.taobao.org
npm install grunt-contrib-watch --registry=https://registry.npm.taobao.org
npm install grunt-contrib-connect --registry=https://registry.npm.taobao.org
npm install grunt-contrib-copy --registry=https://registry.npm.taobao.org
npm install grunt-contrib-jasmine --registry=https://registry.npm.taobao.org
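Because npm install accepts several package names at once, the six installs can also be done in one call. In this sketch, install_grunt_plugins is a hypothetical name, and NPM and REGISTRY are environment variables introduced here only so the npm command can be swapped out and the registry chosen (for example REGISTRY=https://registry.npm.taobao.org as above; that Taobao mirror has since moved to https://registry.npmmirror.com):

```shell
# Hypothetical helper: install all grunt plugins reported as missing in a
# single npm call. Set REGISTRY to use a mirror; leave it unset for npm's
# default registry. NPM defaults to "npm".
install_grunt_plugins() {
  registry=${REGISTRY:-}
  set -- grunt-contrib-clean grunt-contrib-concat grunt-contrib-watch \
         grunt-contrib-connect grunt-contrib-copy grunt-contrib-jasmine
  if [ -n "$registry" ]; then
    set -- "$@" "--registry=$registry"
  fi
  ${NPM:-npm} install "$@"
}
```

Usage: REGISTRY=https://registry.npmmirror.com install_grunt_plugins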

- View the result

The head home page loads, but it cannot connect and shows "cluster health value: not connected". How can this be fixed?

- Check whether cross-origin access (CORS) is enabled

When this error occurs, first check whether CORS is enabled in the Elasticsearch configuration, that is, the http.cors.enabled and http.cors.allow-origin settings. http.cors.enabled defaults to false and controls whether cross-origin requests are allowed at all. http.cors.allow-origin sets which origins are allowed once CORS is enabled; by default no origin is allowed, and * allows every domain. To allow only some sites, use a regular expression; for example, to allow only local addresses: /https?:\/\/localhost(:[0-9]+)?/

In the docker-compose setup above, these settings can be passed as extra entries in the environment list of es01, for example - http.cors.enabled=true and - http.cors.allow-origin=*.
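To see which origins the example pattern accepts, and whether Elasticsearch is actually returning CORS headers, the two hypothetical helpers below may help. origin_allowed uses a POSIX ERE translation of the regular expression above, anchored here so the whole origin must match; Elasticsearch itself evaluates the setting as a Java regular expression, so treat this as an approximation:

```shell
# Hypothetical helper: test an Origin value against the ERE form of
# /https?:\/\/localhost(:[0-9]+)?/, requiring the whole string to match.
origin_allowed() {
  printf '%s\n' "$1" | grep -Eq '^https?://localhost(:[0-9]+)?$'
}

# Hypothetical helper: succeed if the HTTP response headers on stdin
# contain an Access-Control-Allow-Origin header (case-insensitive).
cors_header_present() {
  grep -iq '^access-control-allow-origin:'
}
```

Usage: curl -s -D - -o /dev/null -H "Origin: http://localhost:9100" http://localhost:9200/ | cors_header_present && echo "CORS is enabled"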

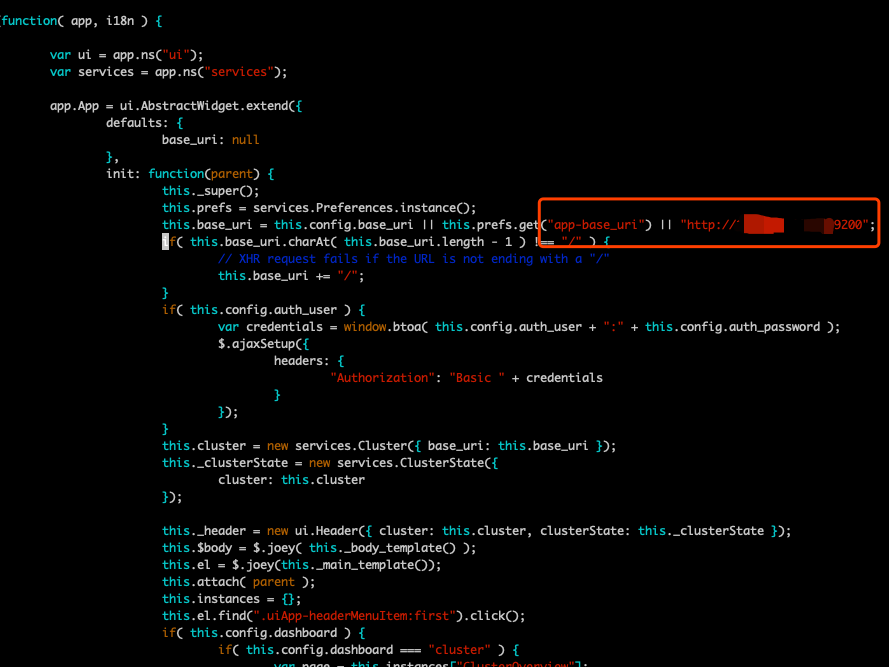

- If Elasticsearch runs on your own server rather than on localhost, make the following change

#Edit elasticsearch-head/_site/app.js
#Find the app-base_uri keyword and change localhost to your server's IP address
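The app.js edit can be scripted with sed. This sketch assumes the default address appears in app.js as the quoted string "http://localhost:9200" (check your copy first); set_head_base_uri and the example IP 192.0.2.10 are placeholders. It writes through a temporary file so it works with both GNU and BSD sed and keeps the original file's permissions:

```shell
# Hypothetical helper: replace the default "http://localhost:9200" in
# elasticsearch-head's app.js with http://<host>:9200.
set_head_base_uri() {
  file=$1; host=$2
  tmp=$(mktemp) || return 1
  sed "s#\"http://localhost:9200\"#\"http://$host:9200\"#" "$file" > "$tmp" \
    && cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Usage: set_head_base_uri ~/app/elasticsearch-head/_site/app.js 192.0.2.10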

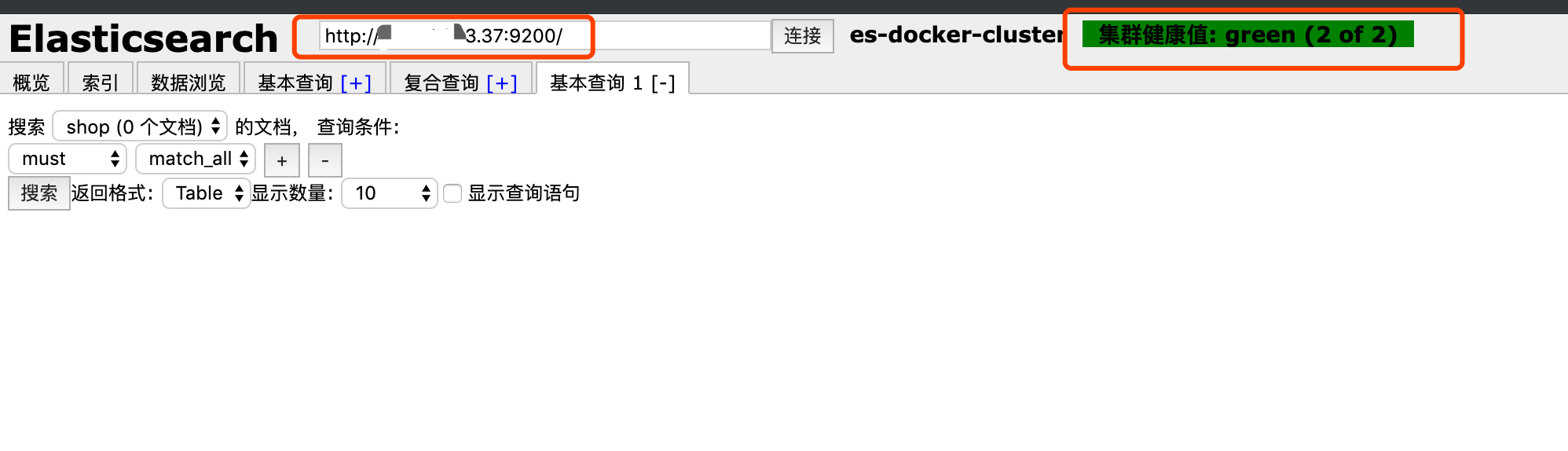

- Check the result again

The connection address now points to your own IP, and the cluster health value turns green.

Later articles describe how to use it.