1. What is RAID:

- The full name of RAID is "Redundant Arrays of Inexpensive Disks", that is, a fault-tolerant array built from inexpensive disks.

- RAID uses a particular technique (software or hardware) to combine several smaller disks into one larger disk device. This larger device does more than store data: it can also protect it.

- Different RAID levels give the array different capabilities.

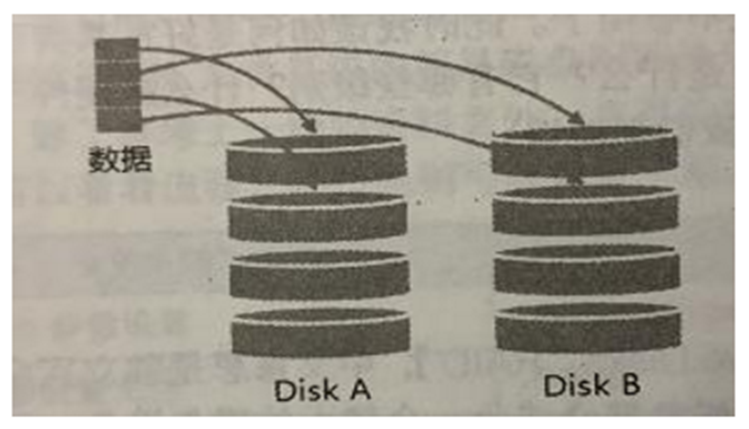

1. RAID-0 (striping): best performance

- This mode works best with disks of the same model and capacity.

- In this mode, RAID divides each disk into equal-sized blocks, called chunks. The chunk size can usually be set between 4K and 1M. When a file is written to the RAID, it is cut into chunk-sized pieces, and the pieces are placed on the disks in turn.

- Because the chunks are interleaved across the disks, data written to the RAID is spread evenly over all of them. The flip side is that RAID-0 has no redundancy: if any one disk fails, the data on the whole array is lost.
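The round-robin placement described above can be sketched in a few lines of bash. This is a simplified model. It assumes equal-sized disks and ignores md metadata and real on-disk offsets. raid0_locate is a hypothetical helper, not part of mdadm.

```bash
#!/usr/bin/env bash
# Sketch: where does logical chunk i land in a RAID-0 of n disks?
# Assumes equal-sized disks and plain round-robin striping
# (md metadata and real on-disk offsets are ignored).
raid0_locate() {
  local chunk=$1 ndisks=$2
  local disk=$(( chunk % ndisks ))   # chunks rotate across the disks
  local row=$(( chunk / ndisks ))    # number of full stripes before this chunk
  echo "disk=$disk row=$row"
}

# Cut a 1024K file into 256K chunks and place them on a 4-disk RAID-0.
chunk_kb=256
file_kb=1024
nchunks=$(( (file_kb + chunk_kb - 1) / chunk_kb ))   # round up
for (( i = 0; i < nchunks; i++ )); do
  echo "chunk $i -> $(raid0_locate "$i" 4)"
done
```

With four disks, chunks 0 to 3 fill the first row on disks 0 to 3. Chunk 4 then wraps around to disk 0, which is why every disk receives an equal share of the data.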

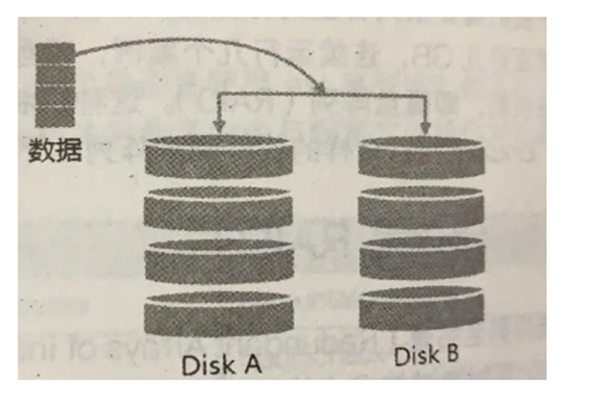

2. RAID-1 (mirroring): full backup

- This mode also needs disks of the same capacity, ideally identical disks.

- If a RAID-1 is built from disks of different capacities, the total capacity is limited by the smallest disk. The idea of this mode is to store exactly the same data on two disks.

- For example, if I write a 100MB file to a RAID-1 made of two disks, each disk writes the full 100MB at the same time. As a result, the usable RAID capacity is only about 50% of the raw capacity. The contents of the two disks are identical, as if one were a mirror image of the other, so this is also called mirror mode.
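Here is a minimal bash sketch of the capacity rule above. It assumes all sizes are in the same unit and ignores metadata overhead. raid1_capacity and raid1_efficiency are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: usable capacity of a RAID-1 mirror.
# Every member holds a full copy, so the array can be no larger
# than its smallest member. Sizes are plain integers (e.g. MB).
raid1_capacity() {
  local min=$1 size
  for size in "$@"; do
    if (( size < min )); then
      min=$size
    fi
  done
  echo "$min"
}

# Efficiency = usable / raw capacity, as a whole-number percentage.
raid1_efficiency() {
  local usable total=0 size
  usable=$(raid1_capacity "$@")
  for size in "$@"; do
    total=$(( total + size ))
  done
  echo $(( usable * 100 / total ))
}

raid1_capacity 100 120     # two mismatched disks: limited by the smaller one
raid1_efficiency 100 100   # two identical disks: half the raw capacity
```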

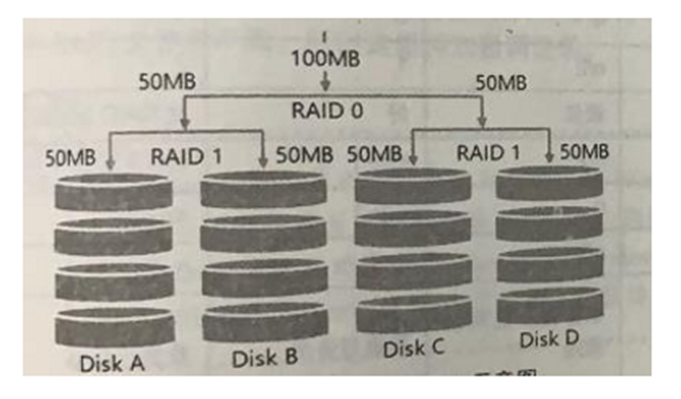

3. RAID 1+0, RAID 0+1

- RAID-0 performs well but does not protect data. RAID-1 protects data but performs poorly, especially for writes. Can the two be combined into one RAID?

- RAID 1+0 is built as follows:

(1) First, combine two disks into a RAID 1, and set up two such groups;

(2) Then combine the two RAID 1 groups into one RAID 0. This is RAID 1+0.

- RAID 0+1 is built as follows:

(1) First, combine two disks into a RAID 0, and set up two such groups;

(2) Then combine the two RAID 0 groups into one RAID 1. This is RAID 0+1.
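What separates RAID 1+0 from RAID 0+1 is the order of the two layers. The bash sketch below models RAID 1+0 with four disks. It assumes disks 0/1 form one mirror pair and disks 2/3 the other, a numbering chosen only for illustration. raid10_locate is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: RAID 1+0 with four disks = two RAID-1 pairs striped as RAID-0.
# Pair p is assumed to consist of disks 2p and 2p+1 (illustrative numbering).
raid10_locate() {
  local chunk=$1 npairs=$2
  local pair=$(( chunk % npairs ))   # RAID-0 layer picks a mirror pair
  local row=$(( chunk / npairs ))
  # RAID-1 layer writes the same chunk to both disks of that pair
  echo "disks=$(( 2 * pair )),$(( 2 * pair + 1 )) row=$row"
}

for i in 0 1 2 3; do
  echo "chunk $i -> $(raid10_locate "$i" 2)"
done
```

In RAID 0+1 the layers are swapped. Each RAID 0 set stripes the data on its own, and the RAID 1 layer keeps the two sets as identical copies. Linux md also offers a native raid10 level with its own layouts, which this sketch does not model.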

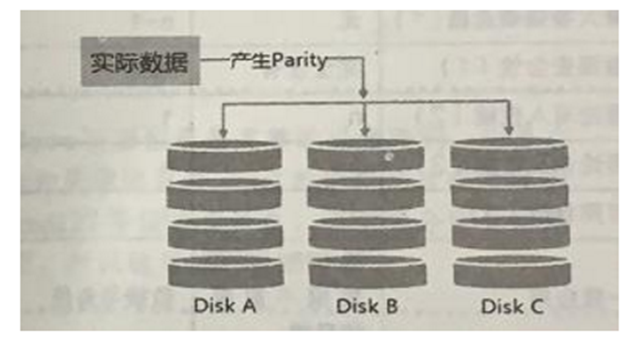

4. RAID 5: a balance of performance and data protection (key topic)

- RAID-5 requires at least three disks to make up this type of disk array.

- Data is written much as in RAID-0, but in each stripe one chunk holds parity data instead of file data. The parity is computed from the data on the other disks in that stripe, so it can be used to recover the data when a disk fails.

How RAID5 works:

- Each time a stripe is written, a parity chunk is recorded with it, and each stripe places its parity on a different disk. So if any one disk fails, its data can be rebuilt from the data and parity on the remaining disks. Note, however, that because of the parity, RAID 5 gives you the usable capacity of one disk fewer than the number of disks in the array.

- For example, 3 disks give only (3-1) = 2 disks' worth of usable capacity.

- If two or more disks fail, the data on the entire RAID 5 is lost, because RAID 5 can tolerate the failure of only one disk.
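RAID 5 parity is an XOR across the data chunks of a stripe, and that is what makes a single-disk rebuild possible. The bash sketch below models chunks as small integers; real md applies the same XOR byte by byte over whole chunks. The rotation in parity_disk is a simplified model and is not claimed to match md's exact on-disk layout. All function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: RAID 5 parity is the XOR of the data chunks in a stripe.
# Chunks are modelled as small integers for readability.
parity() {
  local p=0 c
  for c in "$@"; do
    p=$(( p ^ c ))
  done
  echo "$p"
}

# Rebuilding a lost chunk is the same operation:
# XOR every surviving chunk of the stripe, parity included.
rebuild() {
  parity "$@"
}

# Simplified parity rotation: stripe s on n disks keeps its parity on
# disk (n-1) - (s mod n), so parity moves to a different disk each stripe.
parity_disk() {
  local stripe=$1 ndisks=$2
  echo $(( (ndisks - 1) - stripe % ndisks ))
}

d0=0x5A; d1=0x3C; d2=0xF0            # data chunks on three disks
p=$(parity "$d0" "$d1" "$d2")        # parity chunk on the fourth disk
echo "parity=$p"
echo "rebuilt d1=$(rebuild "$d0" "$d2" "$p")"   # pretend disk 1 failed
```

XOR-ing the two surviving data chunks with the parity gives back the lost chunk (60, i.e. 0x3C). If two chunks of a stripe are missing, one XOR equation cannot recover both, which is why RAID 5 survives only one failed disk.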

RAID 6 can tolerate two failed disks; in exchange, it spends two disks' worth of capacity on parity.
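The capacity rules for RAID 0, 1, 5 and 6 fit in one small bash function. This is a sketch. It assumes every member is at least as large as the smallest disk and does not count spares or md metadata. raid_usable is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: usable capacity by RAID level, from the number of member
# disks and the size of the smallest member (any unit).
# Spare disks and md metadata are not counted.
raid_usable() {
  local level=$1 ndisks=$2 min_size=$3
  case $level in
    0) echo $(( ndisks * min_size )) ;;
    1) echo "$min_size" ;;
    5) (( ndisks >= 3 )) || { echo "RAID 5 needs at least 3 disks" >&2; return 1; }
       echo $(( (ndisks - 1) * min_size )) ;;
    6) (( ndisks >= 4 )) || { echo "RAID 6 needs at least 4 disks" >&2; return 1; }
       echo $(( (ndisks - 2) * min_size )) ;;
    *) echo "level $level is not covered by this sketch" >&2; return 1 ;;
  esac
}

raid_usable 5 3 1024      # the 3-disk example: 2 disks' worth
raid_usable 5 4 1047552   # 4 members of 1047552 KiB each
raid_usable 6 4 1024
```

The second call uses the per-member size that mdadm --detail reports as Used Dev Size in the configuration section. Three data disks' worth gives 3142656, the Array Size shown there.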

Spare disk:

To let the system rebuild the array automatically and immediately when a disk fails, you need a spare disk. A spare disk is one or more disks that are not among the array's active members, and the array does not normally use it. When any disk in the array fails, the spare is automatically pulled into the array and the failed disk is moved out. The data is then rebuilt right away.

Advantages of disk arrays:

- Data security and reliability: this does not mean network security. It means whether the data can be recovered, or still used safely, when hardware (i.e. a disk) fails;

- Read and write performance: RAID 0, for example, improves read and write performance, which helps your system's I/O;

- Capacity: multiple disks are combined, so a single file system can have a large capacity.

2. Software RAID and hardware RAID:


Why are there both hardware and software disk arrays?

Hardware RAID builds the array with a RAID controller card, and a dedicated chip on the card handles the RAID tasks. For work such as computing RAID 5 parity, the controller does not repeatedly consume the host system's I/O bus, so in theory it performs better. In addition, most current mid-range and high-end RAID cards support hot-plugging, i.e. replacing a failed disk without shutting down. This is very useful for system recovery and data reliability.

Software RAID performs the array's tasks in software, so it consumes more system resources, such as CPU time and I/O bus bandwidth. However, today's personal computers are fast enough that this speed limitation matters much less than it used to.

The software RAID tool provided by CentOS is mdadm, which uses partitions or whole disks as array members. That means you do not need several disks: two or more partitions are enough to build a disk array. This is fine for practice, but partitions on the same physical disk give no real protection against that disk failing.

In addition, mdadm supports RAID 0, RAID 1, RAID 5, spare disks and more, covering everything we have just discussed. Its management features also provide hot-plug-like functions, so members can be swapped online while the file system stays in normal use. It is also very convenient to use.

3. Configuring a software disk array:

Enough background; let's configure a software disk array.

Overall plan:

- Build a RAID 5 from four partitions;

- Make each partition about 1GB, and make sure all of them are the same size;

- Use one more partition as a spare disk, and set the chunk size to 256K;

- Make the spare partition the same size as the partitions used by the RAID;

- Mount the RAID 5 device at /srv/raid.

Start configuring:

1. Partition

```
[root@raid5 /]# gdisk /dev/sdb          # create partitions with gdisk (fdisk also works)
Command (? for help): n                 # add a new partition
Partition number (1-128, default 1): 1  # partition number 1
First sector (34-41943006, default = 2048) or {+-}size{KMGTP}:
Last sector (2048-41943006, default = 41943006) or {+-}size{KMGTP}: +1G   # size 1G
Current type is 'Linux filesystem'
Hex code or GUID (L to show codes, Enter = 8300):   # press Enter to keep the default type code
Changed type of partition to 'Linux filesystem'
# repeat these steps until five partitions have been created
Command (? for help): p                 # print the partitions created so far
.......................// part omitted
Number  Start (sector)    End (sector)  Size       Code  Name
   1            2048         2099199   1024.0 MiB  8300  Linux filesystem
   2         2099200         4196351   1024.0 MiB  8300  Linux filesystem
   3         4196352         6293503   1024.0 MiB  8300  Linux filesystem
   4         6293504         8390655   1024.0 MiB  8300  Linux filesystem
   5         8390656        10487807   1024.0 MiB  8300  Linux filesystem
# save and exit (command w)
```
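If you prefer not to answer gdisk's prompts five times, the same layout can be created non-interactively with sgdisk, from the same gptfdisk package. The sketch below only prints the commands so you can review them first. The device name /dev/sdb and the 1G size follow this article. The default type code fd00 (Linux RAID) is an assumption; the article itself keeps gdisk's default 8300, which you can pass as the fourth argument. make_partition_cmds is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Dry run: print sgdisk commands that create N equal-sized partitions.
# Nothing is executed; review the output, then run it as root if it looks right.
make_partition_cmds() {
  local disk=$1 count=$2 size=$3 type=${4:-fd00}   # fd00 = Linux RAID (assumed choice)
  local n
  for (( n = 1; n <= count; n++ )); do
    # -n num:start:end  (start 0 = first free sector, end +SIZE)
    # -t num:code       (GPT partition type code)
    echo "sgdisk -n ${n}:0:+${size} -t ${n}:${type} ${disk}"
  done
}

make_partition_cmds /dev/sdb 5 1G
```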

```
[root@raid5 /]# lsblk           # list block devices
NAME            MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sda               8:0    0  100G  0 disk
├─sda1            8:1    0    1G  0 part /boot
└─sda2            8:2    0   99G  0 part
  ├─cl-root     253:0    0   50G  0 lvm  /
  ├─cl-swap     253:1    0    2G  0 lvm  [SWAP]
  └─cl-home     253:2    0   47G  0 lvm  /home
sdb               8:16   0   20G  0 disk        # sdb now has five partitions
├─sdb1            8:17   0    1G  0 part
├─sdb2            8:18   0    1G  0 part
├─sdb3            8:19   0    1G  0 part
├─sdb4            8:20   0    1G  0 part
└─sdb5            8:21   0    1G  0 part        # the fifth one will be the spare
sr0              11:0    1 1024M  0 rom
```

2. Create

```
[root@raid5 /]# mdadm --create /dev/md0 --auto=yes --level=5 --chunk=256K --raid-devices=4 --spare-devices=1 /dev/sdb1 /dev/sdb2 /dev/sdb3 /dev/sdb4 /dev/sdb5
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md0 started.
```

--create: create a new RAID array

--auto=yes: create the corresponding software RAID device file, i.e. /dev/md[0-9]

--chunk=256K: set the chunk size of this device, also called the stripe unit; 64K or 512K is common

--raid-devices=4: the number of disks or partitions used as active array members

--spare-devices=1: the number of disks or partitions used as spares

--level=5: the RAID level of this array; we recommend sticking to 0, 1 and 5

--detail: show detailed information about the array device that follows
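The bash sketch below shows how the counts in these options fit together. It assembles the same mdadm --create command and checks that the number of listed devices equals --raid-devices plus --spare-devices. It only prints the command. build_mdadm_create is a hypothetical helper, and mdadm performs its own, more thorough checks.

```bash
#!/usr/bin/env bash
# Dry run: assemble an mdadm --create command and check that the
# listed devices match raid-devices + spare-devices. Nothing is executed.
build_mdadm_create() {
  local md=$1 level=$2 chunk=$3 nraid=$4 nspare=$5
  shift 5
  if (( $# != nraid + nspare )); then
    echo "expected $(( nraid + nspare )) devices, got $#" >&2
    return 1
  fi
  echo "mdadm --create $md --auto=yes --level=$level --chunk=$chunk" \
       "--raid-devices=$nraid --spare-devices=$nspare $*"
}

build_mdadm_create /dev/md0 5 256K 4 1 /dev/sdb{1..5}
```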

```
[root@raid5 /]# mdadm --detail /dev/md0
/dev/md0:                                                 # device file name of the RAID
           Version : 1.2
     Creation Time : Thu Nov 7 20:26:03 2019              # creation time
        Raid Level : raid5                                # RAID level
        Array Size : 3142656 (3.00 GiB 3.22 GB)           # usable capacity of the whole array
     Used Dev Size : 1047552 (1023.00 MiB 1072.69 MB)     # capacity used on each member
      Raid Devices : 4                                    # number of member disks in the RAID
     Total Devices : 5                                    # total devices, including the spare
       Persistence : Superblock is persistent
       Update Time : Thu Nov 7 20:26:08 2019
             State : clean                                # current state of the array
    Active Devices : 4                                    # active members
   Working Devices : 5                                    # working devices (active + spare)
    Failed Devices : 0                                    # failed devices
     Spare Devices : 1                                    # spare devices
            Layout : left-symmetric
        Chunk Size : 256K                                 # chunk size
              Name : raid5:0 (local to host raid5)
              UUID : facfa60d:c92b4ced:3f519b65:d135fd98
            Events : 18

    Number   Major   Minor   RaidDevice State
       0       8       17        0      active sync   /dev/sdb1
       1       8       18        1      active sync   /dev/sdb2
       2       8       19        2      active sync   /dev/sdb3
       5       8       20        3      active sync   /dev/sdb4
       4       8       21        -      spare   /dev/sdb5      # sdb5 is waiting as a standby
```

The last five lines show the current status of the five devices. RaidDevice is each disk's position within the RAID.

```
[root@raid5 /]# cat /proc/mdstat
Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdb4[5] sdb5[4](S) sdb3[2] sdb2[1] sdb1[0]                   # first line
      3142656 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]   # second line
unused devices: <none>
```

First line: md0 is a RAID 5 array with the active members sdb1, sdb2, sdb3 and sdb4, plus sdb5. The number in brackets after each device is its slot number, the same value as the Number column of mdadm --detail. For example, sdb4[5] is RaidDevice 3. The (S) after sdb5 means that sdb5 is a spare.

Second line: the array has 3142656 blocks of 1K each, so its total capacity is about 3GB. It uses RAID level 5, writes to the disks in 256K chunks, and uses RAID algorithm 2 (the left-symmetric layout reported by mdadm --detail). [m/n] means the array requires m devices and n of them are working properly; here md0 requires four devices and all four are working. The [UUUU] that follows shows the status of the m required devices: U means a device is working normally and _ means it is not.
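The [m/n] [UUUU] check can be automated with a short bash function, for example in a monitoring script. This is a sketch that assumes the status-line format shown above. mdstat_health is a hypothetical name, and the degraded line in the usage is made up for illustration.

```bash
#!/usr/bin/env bash
# Sketch: read the "[m/n] [UU_U]" part of a /proc/mdstat status line
# and report whether the array is degraded.
mdstat_health() {
  local line=$1
  local re='\[([0-9]+)/([0-9]+)] \[([U_]+)]'
  if [[ ! $line =~ $re ]]; then
    echo "no [m/n] [U...] status found" >&2
    return 1
  fi
  local need=${BASH_REMATCH[1]} up=${BASH_REMATCH[2]} flags=${BASH_REMATCH[3]}
  # Degraded if fewer devices are up than required, or any slot shows "_"
  if (( up < need )) || [[ $flags == *_* ]]; then
    echo "degraded: $up/$need working [$flags]"
  else
    echo "healthy: $up/$need working [$flags]"
  fi
}

mdstat_health '3142656 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]'
mdstat_health '3142656 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/3] [UU_U]'   # hypothetical
```

On a live system you could feed it the status line with grep blocks /proc/mdstat.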

3. Format and mount

```
[root@raid5 /]# mkfs.xfs -f -d su=256k,sw=3 -r extsize=768k /dev/md0   # note: format /dev/md0, not the member partitions
meta-data=/dev/md0               isize=512    agcount=8, agsize=98176 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=0, sparse=0
data     =                       bsize=4096   blocks=785408, imaxpct=25
         =                       sunit=128    swidth=384 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=8 blks, lazy-count=1
realtime =none                   extsz=1572864 blocks=0, rtextents=0
```
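The su, sw and extsize values in the mkfs.xfs command above follow from the array geometry:

- su is the chunk size.
- sw is the number of data disks: four RAID devices minus one disk's worth of parity. The spare is not counted.
- extsize is one full stripe, su × sw.

Below is a bash sketch of that arithmetic, with xfs_raid5_opts as a hypothetical helper. mkfs.xfs can usually detect md stripe geometry by itself. Treat this as a way to check or override the values, not as a required step.

```bash
#!/usr/bin/env bash
# Sketch: derive mkfs.xfs stripe options for an md RAID 5.
#   su      = chunk size (stripe unit)
#   sw      = data disks = raid devices - 1 (parity); spares are not counted
#   extsize = su * sw (one full stripe)
xfs_raid5_opts() {
  local chunk_kb=$1 raid_devices=$2
  local sw=$(( raid_devices - 1 ))
  printf '%s\n' "-d su=${chunk_kb}k,sw=${sw} -r extsize=$(( chunk_kb * sw ))k"
}

xfs_raid5_opts 256 4
```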

```
[root@raid5 /]# mkdir /srv/raid
[root@raid5 /]# mount /dev/md0 /srv/raid/
[root@raid5 /]# df -TH /srv/raid/        # confirm that the mount succeeded
Filesystem     Type  Size  Used Avail Use% Mounted on
/dev/md0       xfs   3.3G   34M  3.2G   2% /srv/raid
```

4. Simulating and rescuing a RAID failure

As the saying goes, "Accidents happen, and anyone can have bad luck." Nobody knows when a device in your disk array will fail, so it is worth knowing how to rescue a software disk array. Let's simulate a RAID failure and recover from it.

```
[root@raid5 /]# cp -a /var/log/ /srv/raid/                # first copy some data to the mount point
[root@raid5 /]# df -TH /srv/raid/ ; du -sm /srv/raid/*    # confirm the data is there
Filesystem     Type  Size  Used Avail Use% Mounted on
/dev/md0       xfs   3.3G   39M  3.2G   2% /srv/raid
5       /srv/raid/log
[root@raid5 /]# mdadm --manage /dev/md0 --fail /dev/sdb3
mdadm: set /dev/sdb3 faulty in /dev/md0                   # sdb3 is now marked as failed
.............................. // some content omitted
       Update Time : Thu Nov 7 20:55:31 2019
             State : clean
    Active Devices : 4
   Working Devices : 4
    Failed Devices : 1     # one disk has failed
     Spare Devices : 0     # 0 means the spare has taken over; while the rebuild is still running it may still show 1
............................ // some content omitted
    Number   Major   Minor   RaidDevice State
       0       8       17        0      active sync   /dev/sdb1
       1       8       18        1      active sync   /dev/sdb2
       4       8       21        2      active sync   /dev/sdb5   # sdb5 has replaced sdb3
       5       8       20        3      active sync   /dev/sdb4
       2       8       19        -      faulty   /dev/sdb3      # sdb3 has failed
```

Now you can unplug the failed disk and replace it with a new one.
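The remove and add steps can be scripted. The bash sketch below scans mdadm --detail output for members in the faulty state and prints, without running them, the matching remove and add commands. As in this walkthrough, it assumes the replacement disk gets the same device name. faulty_members and replacement_cmds are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Dry run: find members marked "faulty" in `mdadm --detail` output (stdin)
# and print the commands to remove them and add a replacement.
# Assumes the replacement disk reuses the same device name.
faulty_members() {
  awk '/ faulty / { print $NF }'   # the device path is the last field
}

replacement_cmds() {
  local md=$1
  faulty_members | while read -r dev; do
    echo "mdadm --manage $md --remove $dev"
    echo "mdadm --manage $md --add $dev"
  done
}

# Sample device lines taken from the output above.
sample='       4       8       21        2      active sync   /dev/sdb5
       2       8       19        -      faulty   /dev/sdb3'
replacement_cmds /dev/md0 <<< "$sample"
```

On a real system you could run mdadm --detail /dev/md0 | replacement_cmds /dev/md0, review the printed commands, and run them after physically swapping the disk.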

```
[root@raid5 /]# mdadm --manage /dev/md0 --remove /dev/sdb3   # simulate unplugging the failed disk
mdadm: hot removed /dev/sdb3 from /dev/md0
[root@raid5 /]# mdadm --manage /dev/md0 --add /dev/sdb3      # simulate inserting a new disk
mdadm: added /dev/sdb3
[root@raid5 /]# mdadm --detail /dev/md0                      # check the result
........................... // some content omitted
    Number   Major   Minor   RaidDevice State
       0       8       17        0      active sync   /dev/sdb1
       1       8       18        1      active sync   /dev/sdb2
       4       8       21        2      active sync   /dev/sdb5
       5       8       20        3      active sync   /dev/sdb4
       6       8       19        -      spare   /dev/sdb3      # sdb3 is now waiting as the spare
```

5. Starting the RAID and mounting it automatically at boot

```
[root@raid5 /]# mdadm --detail /dev/md0 | grep -i uuid
              UUID : facfa60d:c92b4ced:3f519b65:d135fd98
[root@raid5 /]# vim /etc/mdadm.conf
ARRAY /dev/md0 UUID=facfa60d:c92b4ced:3f519b65:d135fd98
# format: ARRAY <RAID device> UUID=<array UUID>
[root@raid5 /]# blkid /dev/md0
/dev/md0: UUID="bc2a589c-7df0-453c-b971-1c2c74c39075" TYPE="xfs"
[root@raid5 /]# vim /etc/fstab          # set up automatic mounting at boot
............................ // some content omitted
/dev/md0 /srv/raid xfs defaults 0 0
# the first field can also be the file system UUID from blkid, e.g. UUID=bc2a589c-7df0-453c-b971-1c2c74c39075
[root@raid5 /]# df -Th /srv/raid/       # reboot to test; the array should be mounted again
Filesystem     Type  Size  Used Avail Use% Mounted on
/dev/md0       xfs   3.0G   37M  3.0G   2% /srv/raid
```
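You can also generate these two configuration lines from command output instead of typing them by hand. The bash sketch below extracts the array UUID from mdadm --detail, and the file system UUID and type from blkid. It uses the values shown above as sample input. mdadm_conf_line and fstab_line are hypothetical names. mdadm can also print ready-made ARRAY lines itself with mdadm --detail --scan.

```bash
#!/usr/bin/env bash
# Sketch: build the /etc/mdadm.conf ARRAY line and a UUID-based
# /etc/fstab line from `mdadm --detail` and `blkid` output.
# The text is passed in as arguments, so no system file is modified.
mdadm_conf_line() {
  local md=$1 detail=$2 uuid
  uuid=$(awk -F' : ' '/UUID/ { gsub(/ /, "", $2); print $2 }' <<< "$detail")
  echo "ARRAY $md UUID=$uuid"
}

fstab_line() {
  local blkid_out=$1 mountpoint=$2 uuid fstype
  uuid=$(sed -n 's/.* UUID="\([^"]*\)".*/\1/p' <<< "$blkid_out")
  fstype=$(sed -n 's/.* TYPE="\([^"]*\)".*/\1/p' <<< "$blkid_out")
  echo "UUID=$uuid $mountpoint $fstype defaults 0 0"
}

mdadm_conf_line /dev/md0 '              UUID : facfa60d:c92b4ced:3f519b65:d135fd98'
fstab_line '/dev/md0: UUID="bc2a589c-7df0-453c-b971-1c2c74c39075" TYPE="xfs"' /srv/raid
```

Mounting by file system UUID keeps the fstab entry valid even if the array comes up under a different md device name at boot.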