Thank you for your reference- http://bjbsair.com/2020-04-01/tech-info/18382.html

Consumer-side flow control

1. Why limit flow on the consumer side

Suppose our RabbitMQ server has a backlog of tens of thousands of unprocessed messages. When we start a consumer client, a huge number of messages is pushed to it all at once, far more than a single client can process at the same time.

When the data volume is large, limiting the flow on the producer side is not sensible: the traffic comes from user behavior, sometimes very high and sometimes very low, and we cannot throttle it at the source. Instead, we limit the flow on the consumer side to keep the consumers stable. Without such a limit, a sudden surge of messages can exhaust resources, degrade the service, and make the system hang or even crash.

2. The flow-control API

RabbitMQ provides a QoS (quality of service) feature: when messages are not acknowledged automatically, and a certain number of messages (set by the QoS value, per consumer or per channel) remain unacknowledged, no new messages are delivered to the consumer.

```java
/**
 * Request specific "quality of service" settings.
 * These settings impose limits on the amount of data the server
 * will deliver to consumers before requiring acknowledgements.
 * Thus they provide a means of consumer-initiated flow control.
 * @param prefetchSize maximum amount of content (measured in
 * octets) that the server will deliver, 0 if unlimited
 * @param prefetchCount maximum number of messages that the server
 * will deliver, 0 if unlimited
 * @param global true if the settings should be applied to the
 * entire channel rather than each consumer
 * @throws java.io.IOException if an error is encountered
 */
void basicQos(int prefetchSize, int prefetchCount, boolean global) throws IOException;
```

- prefetchSize: the maximum total size, in octets, of unacknowledged message content the server will deliver; 0 means unlimited. RabbitMQ does not implement this limit, so pass 0.

- prefetchCount: the maximum number of unacknowledged messages the server will push to a consumer. Once N delivered messages are still waiting for an ack, RabbitMQ stops delivering to that consumer until acks arrive.

- global: whether the limit applies per consumer or per channel. RabbitMQ interprets this flag differently from the AMQP 0-9-1 specification: with false, each consumer on the channel gets its own limit; with true, all consumers on the channel share a single limit.

- Note: prefetchCount only takes effect with manual acknowledgement (no_ack = false, i.e. autoAck = false in the Java client). With automatic acknowledgement, a message counts as acknowledged as soon as it is sent, so there is nothing for the limit to count.
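To make prefetchCount concrete, here is a small broker-free model in plain Java. It is a toy sketch, not the RabbitMQ client API: the class PrefetchWindow and its methods are hypothetical, and it assumes a single consumer that acknowledges one message at a time. It shows that the number of unacknowledged deliveries never exceeds the limit and that each ack frees exactly one slot.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Toy model of a broker honouring prefetchCount for one consumer (not the client API). */
class PrefetchWindow {
    private final int prefetchCount;                 // 0 means unlimited, as in basicQos
    private final Deque<String> ready = new ArrayDeque<>();
    private int unacked = 0;

    PrefetchWindow(int prefetchCount) { this.prefetchCount = prefetchCount; }

    void enqueue(String msg) { ready.addLast(msg); }

    /** Pushes as many ready messages as the window allows; returns how many were pushed. */
    int deliver() {
        int pushed = 0;
        while (!ready.isEmpty() && (prefetchCount == 0 || unacked < prefetchCount)) {
            ready.pollFirst();
            unacked++;
            pushed++;
        }
        return pushed;
    }

    /** A single-message ack frees one slot in the window. */
    void ack() {
        if (unacked == 0) throw new IllegalStateException("nothing to ack");
        unacked--;
    }

    int unacked() { return unacked; }
    int ready() { return ready.size(); }

    public static void main(String[] args) {
        PrefetchWindow w = new PrefetchWindow(3);
        for (int i = 0; i < 10; i++) w.enqueue("msg " + i);
        System.out.println("pushed " + w.deliver() + ", unacked=" + w.unacked() + ", ready=" + w.ready());
        w.ack();
        System.out.println("pushed " + w.deliver() + ", unacked=" + w.unacked() + ", ready=" + w.ready());
    }
}
```

With a limit of 3 and 10 queued messages, the first round pushes 3 and leaves 7 ready; after one ack, exactly one more message is pushed.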

3. How to apply flow control on the consumer

- First, flow control only works with manual acknowledgement, so turn off automatic ack by passing autoAck = false: `channel.basicConsume(queueName, false, consumer);`

- Second, set the prefetch limit, for example: `channel.basicQos(0, 15, false);`

- Third, acknowledge manually in the consumer's handleDelivery method. Here the multiple flag is set to true, which acknowledges all messages up to and including this delivery tag: `channel.basicAck(envelope.getDeliveryTag(), true);`
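The multiple flag in the third step is easy to misread. Under AMQP 0-9-1, multiple = true acknowledges every outstanding delivery tag on the channel up to and including the given one, while false acknowledges only that tag. The toy tracker below (hypothetical class, no broker involved) models just that rule, assuming delivery tags start at 1 and grow per channel, as they do in RabbitMQ.

```java
import java.util.NavigableSet;
import java.util.TreeSet;

/** Toy model of one channel's outstanding delivery tags and basic.ack (not the client API). */
class AckTracker {
    private final TreeSet<Long> outstanding = new TreeSet<>();
    private long nextTag = 1; // delivery tags are positive and grow per channel

    /** Simulates a delivery and returns its delivery tag. */
    long deliver() {
        long tag = nextTag++;
        outstanding.add(tag);
        return tag;
    }

    /**
     * multiple=false acknowledges only deliveryTag; multiple=true acknowledges every
     * outstanding tag up to and including it. Returns how many messages were acknowledged.
     * (A real broker closes the channel on an unknown tag; this model just returns 0.)
     */
    int ack(long deliveryTag, boolean multiple) {
        if (!multiple) {
            return outstanding.remove(deliveryTag) ? 1 : 0;
        }
        NavigableSet<Long> upTo = outstanding.headSet(deliveryTag, true);
        int count = upTo.size();
        upTo.clear(); // the view is backed by `outstanding`, so this removes the tags
        return count;
    }

    int outstandingCount() { return outstanding.size(); }

    public static void main(String[] args) {
        AckTracker t = new AckTracker();
        t.deliver();
        t.deliver();
        long third = t.deliver();
        System.out.println("acked " + t.ack(third, true) + ", outstanding " + t.outstandingCount());
    }
}
```

Because the consumer in this article acks each message in order right after processing it, multiple = true and false release the same single message there; the difference only appears when earlier deliveries are still outstanding.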

Below is the producer code. It is unchanged from the previous chapters; all of the work happens on the consumer side.

```java
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

import java.nio.charset.StandardCharsets;

public class QosProducer {
    public static void main(String[] args) throws Exception {
        // 1. Create and configure a ConnectionFactory
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        factory.setVirtualHost("/");
        factory.setUsername("guest");
        factory.setPassword("guest");
        // 2. Create a connection from the factory
        Connection connection = factory.newConnection();
        // 3. Create a channel on the connection
        Channel channel = connection.createChannel();
        // 4. Exchange and routing key (the exchange is declared by QosConsumer, so start it first)
        String exchangeName = "test_qos_exchange";
        String routingKey = "item.add";
        // 5. Publish 10 messages
        String msg = "this is qos msg";
        for (int i = 0; i < 10; i++) {
            String tem = msg + " : " + i;
            channel.basicPublish(exchangeName, routingKey, null, tem.getBytes(StandardCharsets.UTF_8));
            System.out.println("Send message : " + tem);
        }
        // 6. Close the channel and the connection
        channel.close();
        connection.close();
    }
}
```

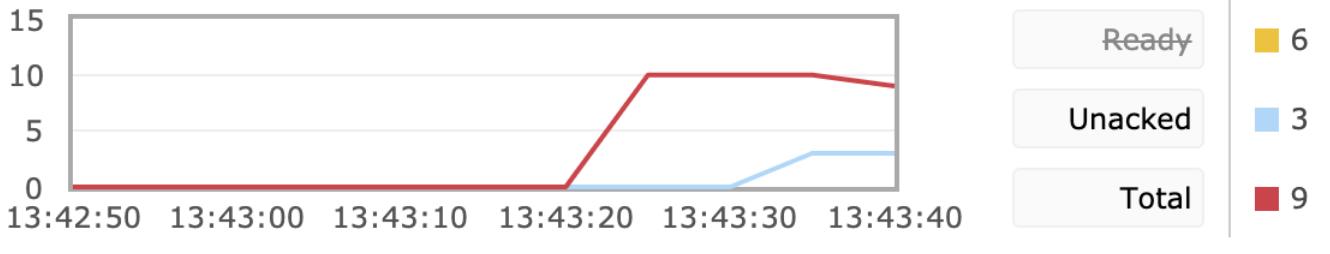

Next we create a consumer to verify the flow-control effect and to test what happens when global is set to true. Thread.sleep(5000) slows down processing before each ack, so the limiting can be observed clearly in the management UI.

```java
import com.rabbitmq.client.*;

import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class QosConsumer {
    public static void main(String[] args) throws Exception {
        // 1. Create and configure a ConnectionFactory
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        factory.setVirtualHost("/");
        factory.setUsername("guest");
        factory.setPassword("guest");
        factory.setAutomaticRecoveryEnabled(true);
        factory.setNetworkRecoveryInterval(3000);
        // 2. Create a connection from the factory
        Connection connection = factory.newConnection();
        // 3. Create a channel on the connection
        final Channel channel = connection.createChannel();
        // 4. Declare the exchange and the queue
        String exchangeName = "test_qos_exchange";
        String queueName = "test_qos_queue";
        String routingKey = "item.#";
        channel.exchangeDeclare(exchangeName, "topic", true, false, null);
        channel.queueDeclare(queueName, true, false, false, null);
        // At most 3 unacknowledged messages per consumer on this channel
        channel.basicQos(0, 3, false);
        // Bind in code; otherwise the binding must be created manually in the management UI
        channel.queueBind(queueName, exchangeName, routingKey);
        // 5. Consumer that takes 5 seconds per message and acks manually
        Consumer consumer = new DefaultConsumer(channel) {
            @Override
            public void handleDelivery(String consumerTag, Envelope envelope,
                                       AMQP.BasicProperties properties, byte[] body) throws IOException {
                try {
                    Thread.sleep(5000);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                String message = new String(body, StandardCharsets.UTF_8);
                System.out.println("[x] Received '" + message + "'");
                channel.basicAck(envelope.getDeliveryTag(), true);
            }
        };
        // 6. Start consuming with autoAck = false (manual acknowledgement)
        channel.basicConsume(queueName, false, consumer);
    }
}
```

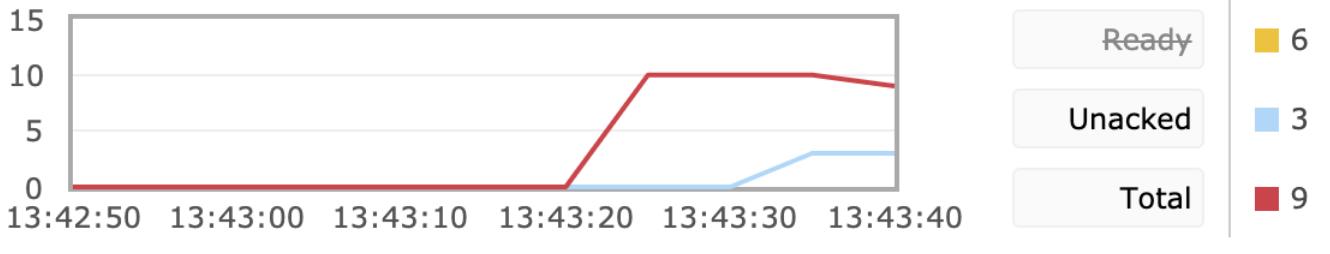

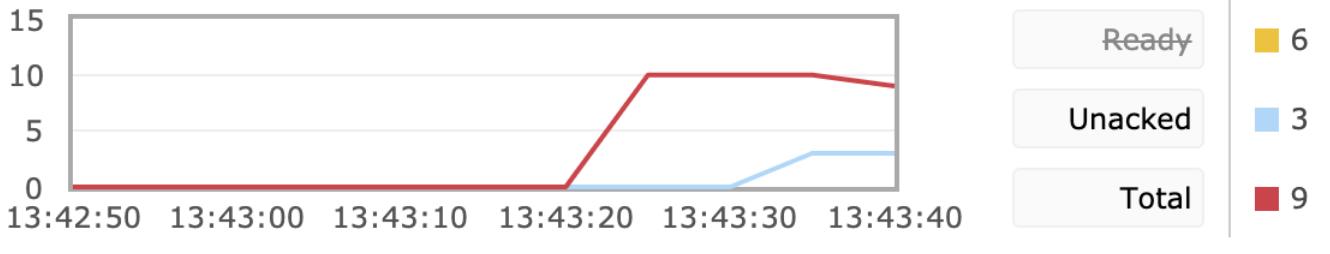

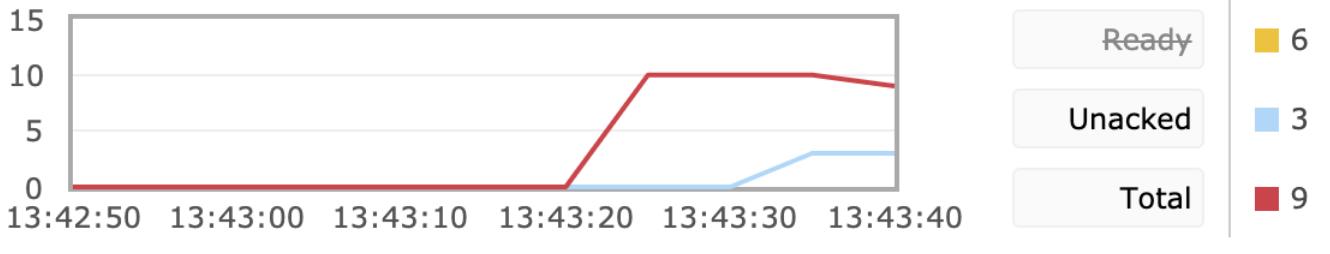

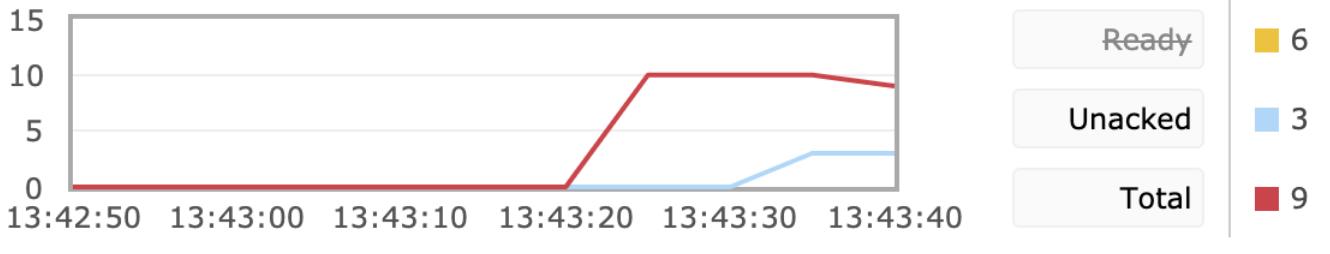

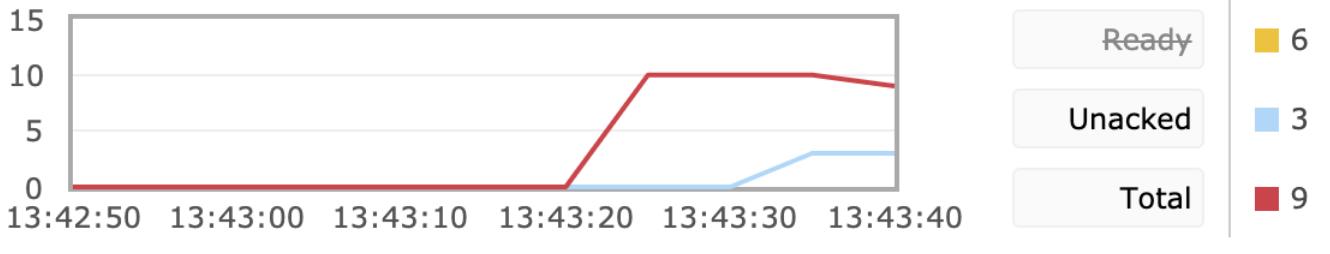

As the screenshots show, the Unacked count stays at 3 while one message is processed every 5 seconds, and the Ready and Total counts drop as messages are acknowledged. Unacked is the number of messages delivered to the consumer but not yet acknowledged. The experiment confirms that the consumer holds at most 3 messages at a time, which is the intended flow-control behavior.

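When we set global in basicQos(int prefetchSize, int prefetchCount, boolean global) to true, we observed no limiting effect. Note that the current RabbitMQ documentation describes global = true as a single limit shared by all consumers on the channel, so this result may depend on the broker version used.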
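For comparison with the observation above, the RabbitMQ consumer prefetch documentation describes the global flag this way: false gives each consumer on the channel its own window of prefetchCount, and true makes all consumers on the channel share one window. The toy model below (hypothetical names, no broker, round-robin dispatch assumed) fills those windows for several consumers on one channel to illustrate the documented difference; it does not reproduce the author's experiment.

```java
import java.util.Arrays;

/** Toy model of the documented meaning of basicQos's global flag (not the client API). */
class QosScopeModel {
    /**
     * Dispatches up to `messages` deliveries round-robin to `consumers` consumers on one channel
     * and returns how many unacknowledged messages each consumer ends up holding.
     * global=false: each consumer has its own window of prefetchCount.
     * global=true:  all consumers share a single channel-wide window of prefetchCount.
     * prefetchCount=0 means unlimited.
     */
    static int[] fill(int messages, int consumers, int prefetchCount, boolean global) {
        int[] held = new int[consumers];
        int channelTotal = 0;
        int remaining = messages;
        boolean progress = true;
        while (remaining > 0 && progress) {
            progress = false;
            for (int c = 0; c < consumers && remaining > 0; c++) {
                boolean limited = prefetchCount > 0;
                boolean full = limited && (global ? channelTotal >= prefetchCount
                                                  : held[c] >= prefetchCount);
                if (full) continue;
                held[c]++;
                channelTotal++;
                remaining--;
                progress = true;
            }
        }
        return held;
    }

    public static void main(String[] args) {
        System.out.println("global=false: " + Arrays.toString(fill(10, 2, 3, false)));
        System.out.println("global=true:  " + Arrays.toString(fill(10, 2, 3, true)));
    }
}
```

With two consumers and a prefetch of 3, the per-consumer setting lets the channel hold 6 unacknowledged messages in total, while the shared setting caps the whole channel at 3.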

TTL

TTL stands for Time To Live. RabbitMQ supports an expiration time per message, specified when the message is published, and a message TTL per queue, counted from the moment a message enters the queue. Once a message has stayed in the queue longer than the configured TTL, it is removed automatically.

This is similar to key expiration in Redis. Used sensibly, TTL clears out stale messages that will never be consumed, which reduces the load on the server.

RabbitMQ allows you to set TTL (time to live) for both messages and queues. This can be done using optional queue arguments or policies (the latter option is recommended). Message TTL can be enforced for a single queue, a group of queues or applied for individual messages.


(Excerpt from the official RabbitMQ documentation)
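The same RabbitMQ documentation also states that when both a per-queue and a per-message TTL are set, the lower of the two applies. A minimal plain-Java sketch of that rule, plus the "counted from when the message entered the queue" check, might look like this; the names are hypothetical, and the exact expiry boundary (>= versus >) is a modeling choice rather than documented broker behavior.

```java
import java.util.OptionalLong;

/** Toy model of how per-queue and per-message TTLs combine (not RabbitMQ code). */
class EffectiveTtl {
    /** When both are set, the lower TTL applies; when only one is set, that one applies. */
    static OptionalLong effective(OptionalLong queueTtlMs, OptionalLong messageTtlMs) {
        if (queueTtlMs.isPresent() && messageTtlMs.isPresent()) {
            return OptionalLong.of(Math.min(queueTtlMs.getAsLong(), messageTtlMs.getAsLong()));
        }
        return queueTtlMs.isPresent() ? queueTtlMs : messageTtlMs;
    }

    /** The TTL is counted from the moment the message entered the queue. */
    static boolean isExpired(long enqueuedAtMs, long nowMs, OptionalLong ttlMs) {
        return ttlMs.isPresent() && nowMs - enqueuedAtMs >= ttlMs.getAsLong();
    }

    public static void main(String[] args) {
        OptionalLong ttl = effective(OptionalLong.of(10_000), OptionalLong.of(100_000));
        System.out.println("effective TTL = " + ttl.getAsLong() + " ms");
        System.out.println("expired after 12 s? " + isExpired(0, 12_000, ttl));
    }
}
```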

1. Message TTL

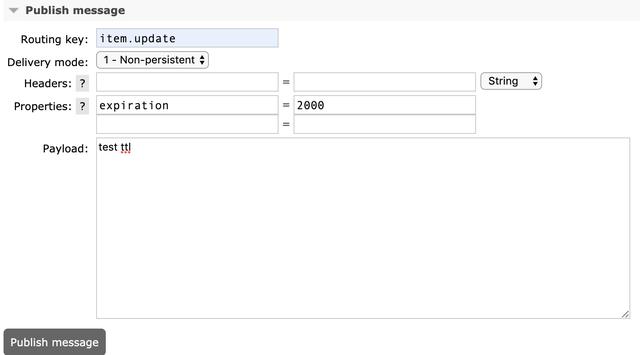

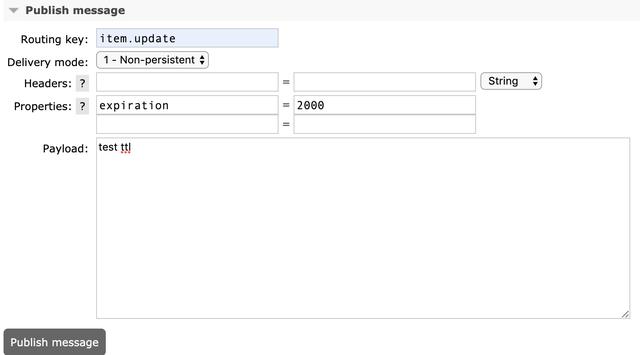

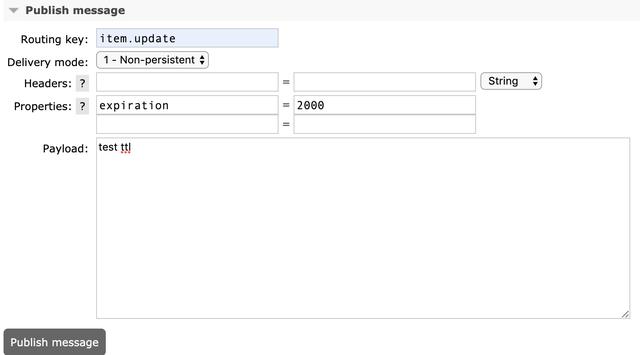

When publishing on the producer side, we can set the expiration field in the message properties to give the message an expiration time in milliseconds; the value is passed as a string.

```java
// Fragment: assumes `channel` and `queueName` come from the usual connection setup.
// Needs imports: com.rabbitmq.client.AMQP, java.nio.charset.StandardCharsets,
// java.util.HashMap, java.util.Map.
/*
 * deliveryMode 2 marks the message as persistent.
 * expiration is the message TTL in milliseconds, as a string: "100000" means the
 * message is deleted automatically if it has not been consumed within 100 seconds.
 * headers carries custom properties.
 */
// 5. Publish
Map<String, Object> headers = new HashMap<String, Object>();
headers.put("myhead1", "111");
headers.put("myhead2", "222");
AMQP.BasicProperties properties = new AMQP.BasicProperties.Builder()
        .deliveryMode(2)
        .contentEncoding("UTF-8")
        .expiration("100000")
        .headers(headers)
        .build();
String msg = "test message";
channel.basicPublish("", queueName, properties, msg.getBytes(StandardCharsets.UTF_8));
```
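Because expiration is a millisecond count passed as a string, it is easy to be off by a factor of ten (100000 is 100 seconds, not 10). A small helper, hypothetical and not part of the RabbitMQ client, can derive the string from a java.time.Duration instead:

```java
import java.time.Duration;

/** Hypothetical helper: converts a Duration into the millisecond string used by the expiration property. */
class Expiration {
    static String of(Duration ttl) {
        if (ttl.isNegative()) {
            throw new IllegalArgumentException("TTL must not be negative: " + ttl);
        }
        return Long.toString(ttl.toMillis());
    }

    public static void main(String[] args) {
        System.out.println(of(Duration.ofSeconds(10)));   // 10000
        System.out.println(of(Duration.ofSeconds(100)));  // 100000
    }
}
```

It could then be used as `.expiration(Expiration.of(Duration.ofSeconds(10)))` on the properties builder.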

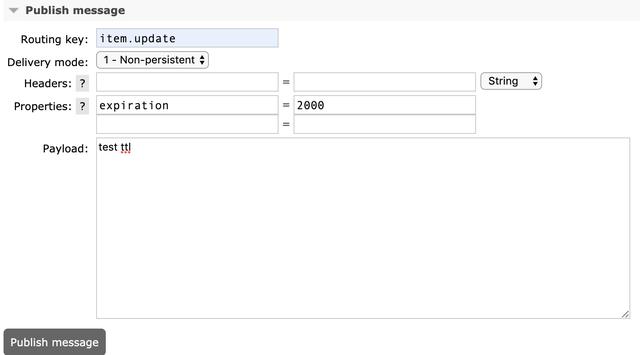

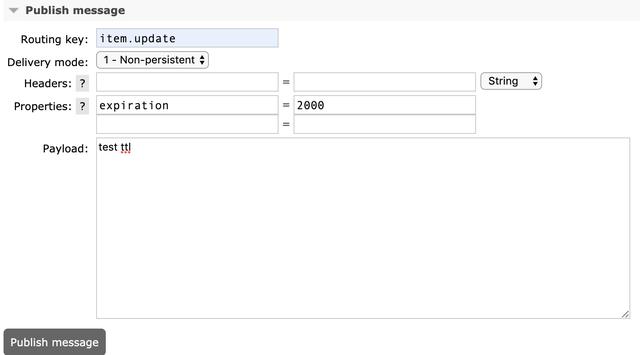

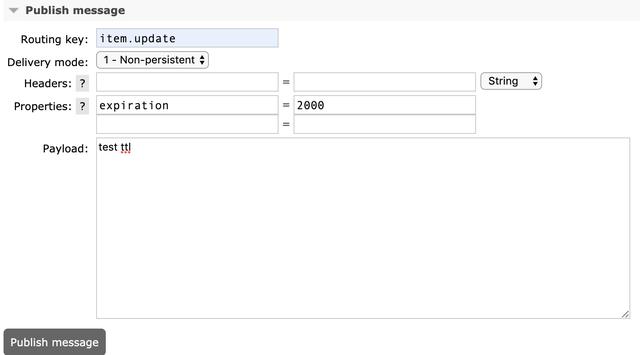

Expiration can also be set when publishing a message from an exchange's page in the management UI.

2. Queue TTL

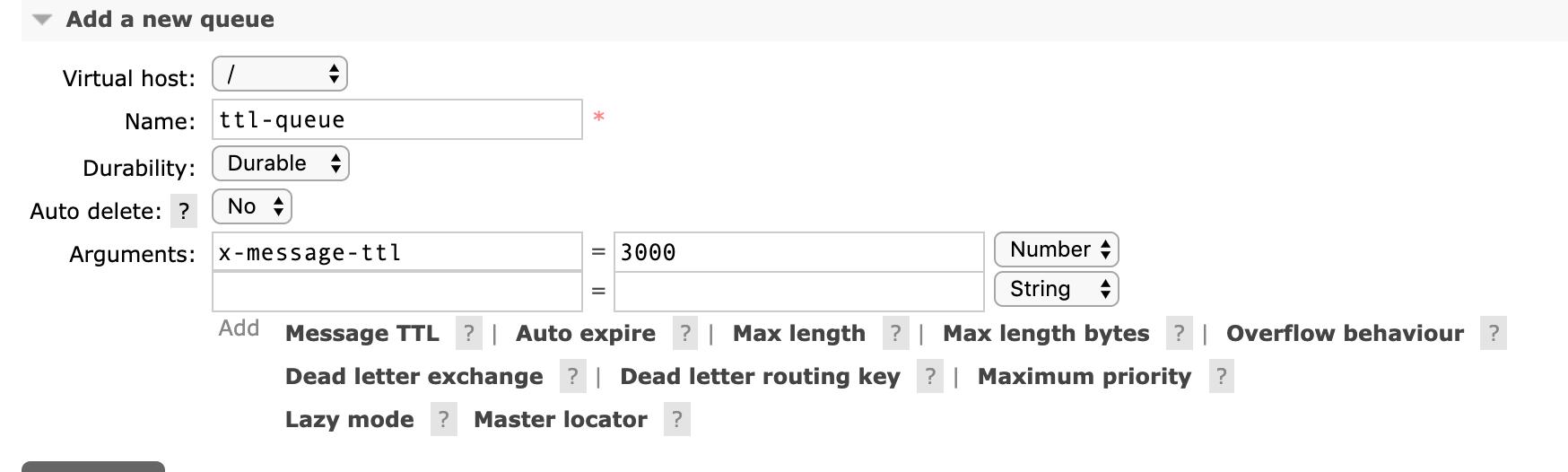

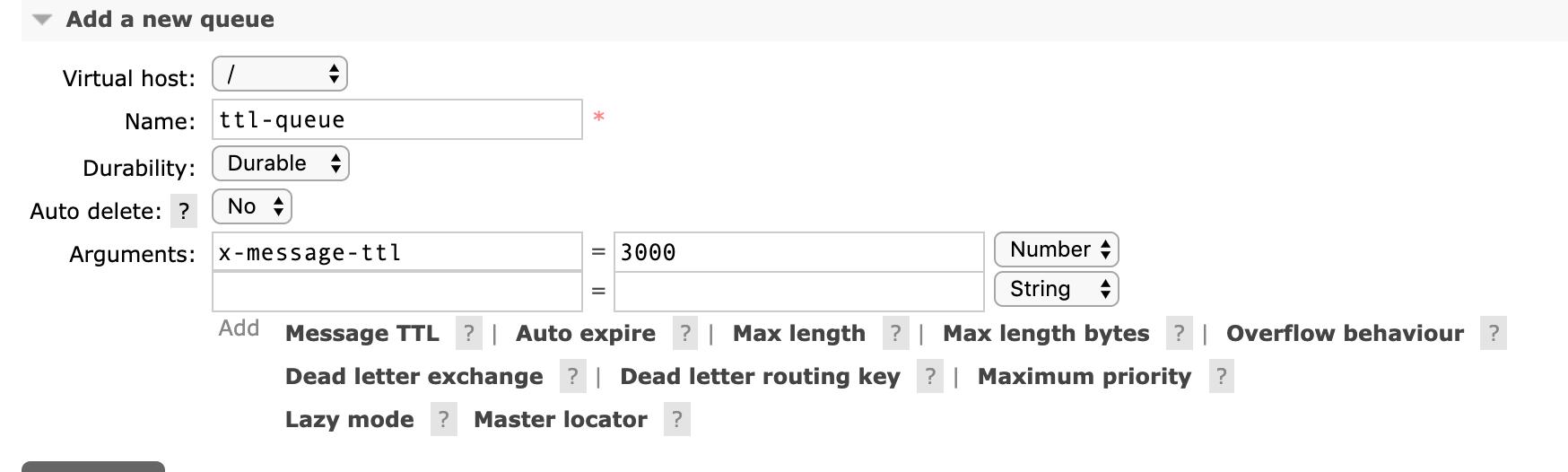

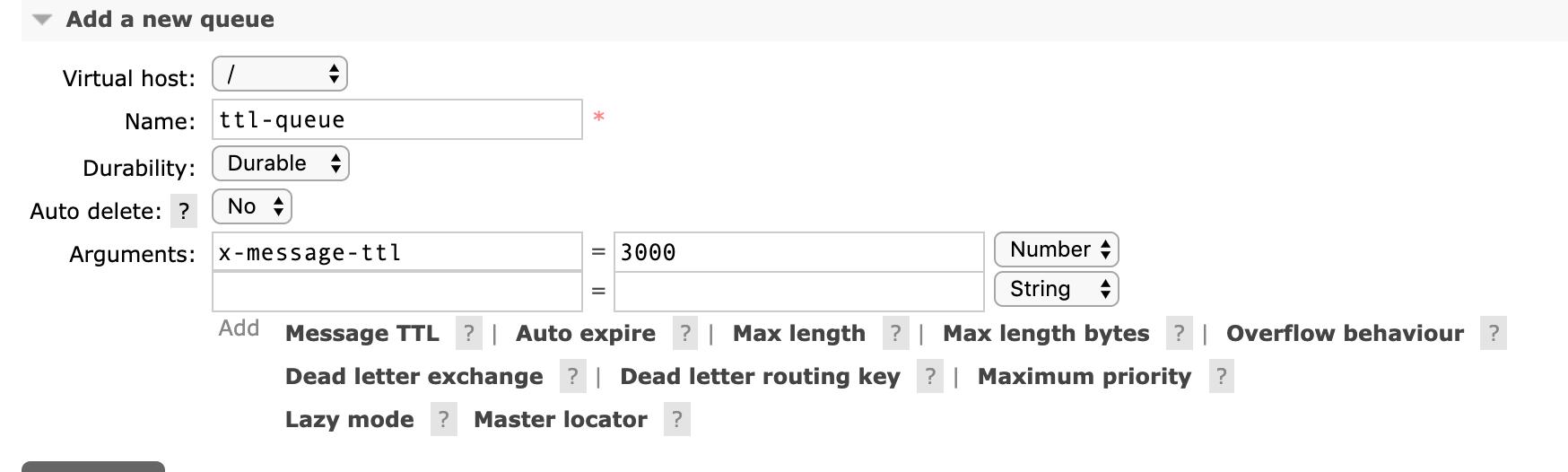

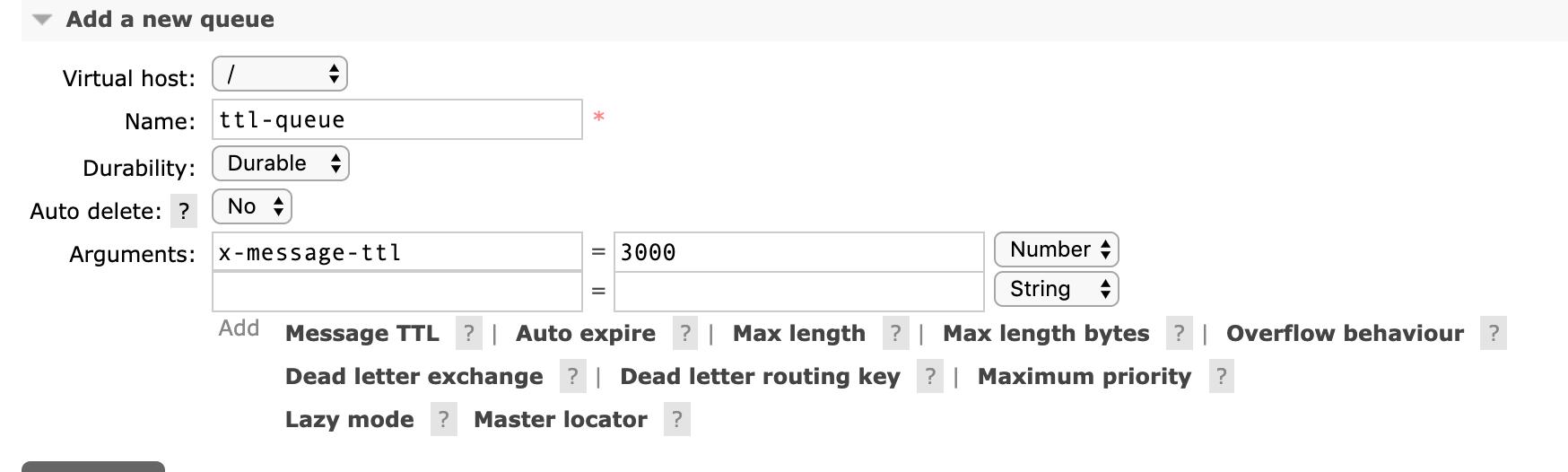

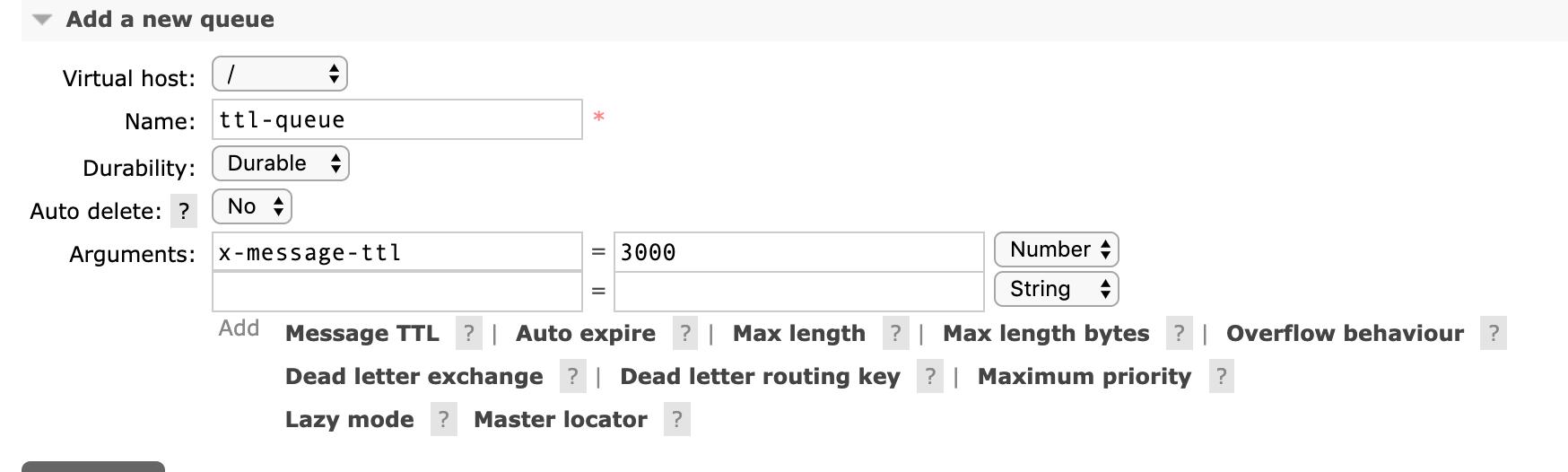

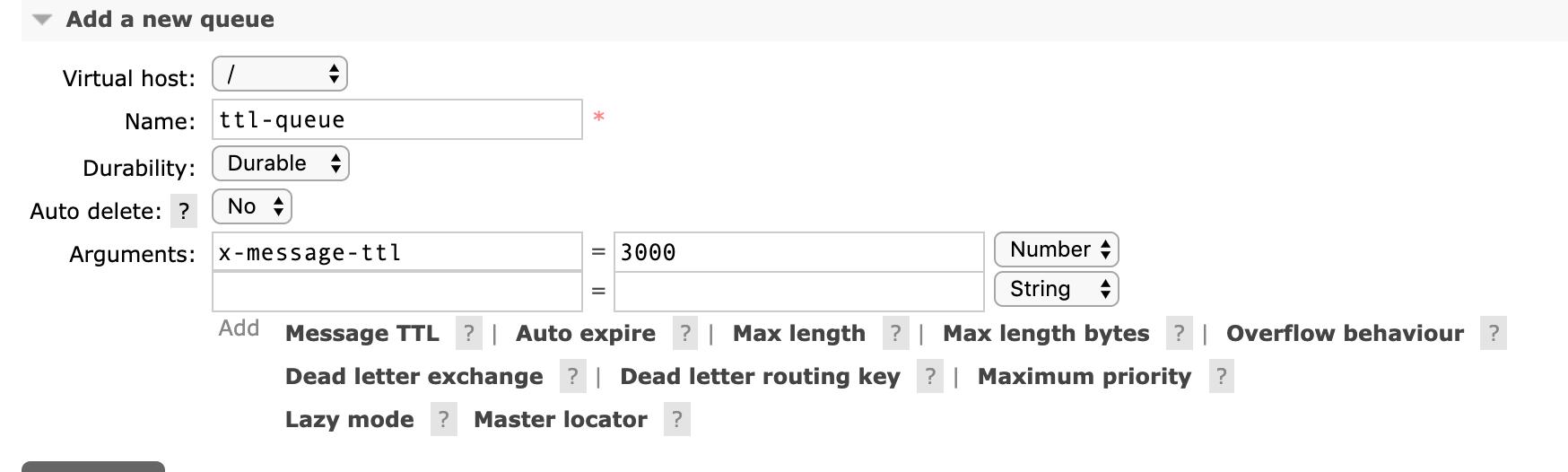

We can also add a queue in the management UI and set a TTL when creating it (the x-message-ttl argument, shown as Message TTL). Messages that stay in the queue longer than this time are removed.
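In code, the same queue-level TTL is the x-message-ttl argument passed to queueDeclare, as an integer number of milliseconds. The broker-free toy below (hypothetical class; a real broker decides internally when expired messages are actually discarded) models the visible effect: a message older than the queue's TTL is never handed to a consumer.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.Optional;

/** Toy queue with one TTL for all its messages (the role x-message-ttl plays); not RabbitMQ code. */
class TtlQueue {
    private static final class Entry {
        final String body;
        final long enqueuedAtMs;
        Entry(String body, long enqueuedAtMs) { this.body = body; this.enqueuedAtMs = enqueuedAtMs; }
    }

    private final long messageTtlMs;
    private final Deque<Entry> entries = new ArrayDeque<>();
    private int expired = 0;

    TtlQueue(long messageTtlMs) { this.messageTtlMs = messageTtlMs; }

    void publish(String body, long nowMs) { entries.addLast(new Entry(body, nowMs)); }

    /** Discards expired messages at the head, then hands out the next live message, if any. */
    Optional<String> poll(long nowMs) {
        // With one TTL for the whole queue, the oldest (head) messages expire first.
        while (!entries.isEmpty() && nowMs - entries.peekFirst().enqueuedAtMs >= messageTtlMs) {
            entries.pollFirst();
            expired++;
        }
        Entry next = entries.pollFirst();
        return next == null ? Optional.empty() : Optional.of(next.body);
    }

    int expiredCount() { return expired; }

    public static void main(String[] args) {
        TtlQueue q = new TtlQueue(10_000);
        q.publish("old", 0);
        q.publish("new", 8_000);
        System.out.println(q.poll(12_000).orElse("none") + ", expired=" + q.expiredCount());
    }
}
```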

Dead letter queue

Dead letter queue: a queue that holds messages which could not be consumed normally (dead letters).

A message becomes a dead letter when:

- a. The message is rejected (basic.reject or basic.nack) with requeue = false.

- b. The message's TTL expires before it is consumed.

- c. The queue has reached its maximum length.
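RabbitMQ records why a message was dead-lettered in the x-death header, using reason names such as rejected, expired and maxlen for the three cases above. The toy queue below is a hypothetical sketch, not RabbitMQ logic: it models only the default overflow behavior (dropping the oldest message from the head) and collects each dead letter with its reason.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Toy queue that collects dead letters tagged with x-death style reasons (not RabbitMQ code). */
class DeadLetterModel {
    final Deque<String> queue = new ArrayDeque<>();
    final List<String> deadLetters = new ArrayList<>(); // entries look like "body:reason"
    private final int maxLength;                         // plays the role of x-max-length; negative = unlimited

    DeadLetterModel(int maxLength) { this.maxLength = maxLength; }

    /** On overflow, the oldest message is dropped from the head and dead-lettered. */
    void publish(String body) {
        queue.addLast(body);
        if (maxLength >= 0 && queue.size() > maxLength) {
            deadLetters.add(queue.pollFirst() + ":maxlen");
        }
    }

    /** basic.reject / basic.nack of the head message; only requeue=false dead-letters it. */
    void rejectHead(boolean requeue) {
        String body = queue.pollFirst();
        if (body == null) return;
        if (requeue) queue.addFirst(body);   // back into the queue (RabbitMQ keeps the original position if possible)
        else deadLetters.add(body + ":rejected");
    }

    /** The head message's TTL elapsed before anyone consumed it. */
    void expireHead() {
        String body = queue.pollFirst();
        if (body != null) deadLetters.add(body + ":expired");
    }

    public static void main(String[] args) {
        DeadLetterModel m = new DeadLetterModel(2);
        m.publish("a");
        m.publish("b");
        m.publish("c");       // exceeds max length 2, so "a" is dropped
        m.rejectHead(false);  // "b" rejected without requeue
        m.expireHead();       // "c" expires
        System.out.println(m.deadLetters);
    }
}
```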

Steps to implement a dead letter queue

- First, set up the dead letter exchange and queue and bind them: Exchange: `dlx.exchange`, Queue: `dlx.queue`, RoutingKey: `#` (matches all routing keys).

- Then declare the normal exchange, queue and binding as usual, but add one argument to the normal queue: `arguments.put("x-dead-letter-exchange", "dlx.exchange");`

- With this in place, when a message expires, is rejected without requeue, or overflows the queue's maximum length, it is routed to the dead letter exchange and from there into the dead letter queue.
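Dead-lettered messages are republished to the DLX with their original routing key (here item.update) unless the queue also sets x-dead-letter-routing-key, which is why binding dlx.queue with `#` catches all of them. The toy matcher below (hypothetical names, not a RabbitMQ API) implements the topic-exchange wildcard rules, where `*` matches exactly one dot-separated word and `#` matches zero or more words, to show which bindings would receive such a message.

```java
import java.util.Arrays;
import java.util.List;

/** Toy topic-exchange matcher and dead-letter routing-key rule (not RabbitMQ code). */
class TopicMatch {
    /** True if routingKey matches the binding pattern: '*' = exactly one word, '#' = zero or more words. */
    static boolean matches(String pattern, String routingKey) {
        return match(split(pattern), 0, split(routingKey), 0);
    }

    private static List<String> split(String s) {
        return s.isEmpty() ? List.of() : Arrays.asList(s.split("\\.", -1));
    }

    private static boolean match(List<String> p, int i, List<String> k, int j) {
        if (i == p.size()) return j == k.size();
        String word = p.get(i);
        if (word.equals("#")) {
            // '#' may swallow zero or more words: try every possible split point.
            for (int next = j; next <= k.size(); next++) {
                if (match(p, i + 1, k, next)) return true;
            }
            return false;
        }
        if (j == k.size()) return false;
        return (word.equals("*") || word.equals(k.get(j))) && match(p, i + 1, k, j + 1);
    }

    /** A dead letter keeps its original routing key unless x-dead-letter-routing-key is set. */
    static String deadLetterRoutingKey(String originalKey, String dlxRoutingKeyOrNull) {
        return dlxRoutingKeyOrNull != null ? dlxRoutingKeyOrNull : originalKey;
    }

    public static void main(String[] args) {
        String key = deadLetterRoutingKey("item.update", null);
        System.out.println("# matches " + key + "? " + matches("#", key));
        System.out.println("order.# matches " + key + "? " + matches("order.#", key));
    }
}
```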

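```java
import com.rabbitmq.client.AMQP;
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

import java.nio.charset.StandardCharsets;

public class DlxProducer {
    public static void main(String[] args) throws Exception {
        // Connection setup, same as in QosProducer
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        factory.setVirtualHost("/");
        factory.setUsername("guest");
        factory.setPassword("guest");
        Connection connection = factory.newConnection();
        Channel channel = connection.createChannel();

        String exchangeName = "test_dlx_exchange";
        String routingKey = "item.update";
        String msg = "this is dlx msg";
        // The message expires after 10 seconds; if it has not been consumed by then
        // (e.g. DlxConsumer is stopped), it is dead-lettered to dlx.exchange
        AMQP.BasicProperties properties = new AMQP.BasicProperties.Builder()
                .deliveryMode(2)
                .expiration("10000")
                .build();
        // mandatory = true: the broker returns the message if it cannot be routed to any queue
        channel.basicPublish(exchangeName, routingKey, true, properties, msg.getBytes(StandardCharsets.UTF_8));
        System.out.println("Send message : " + msg);
        channel.close();
        connection.close();
    }
}
```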

```java
import com.rabbitmq.client.*;

import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

public class DlxConsumer {
    public static void main(String[] args) throws Exception {
        // Connection setup, same as in QosConsumer
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        factory.setVirtualHost("/");
        factory.setUsername("guest");
        factory.setPassword("guest");
        Connection connection = factory.newConnection();
        Channel channel = connection.createChannel();

        String exchangeName = "test_dlx_exchange";
        String queueName = "test_dlx_queue";
        String routingKey = "item.#";
        // The dead letter exchange is configured through the queue arguments
        Map<String, Object> arguments = new HashMap<String, Object>();
        arguments.put("x-dead-letter-exchange", "dlx.exchange");
        channel.exchangeDeclare(exchangeName, "topic", true, false, null);
        // Pass the arguments when declaring the normal queue
        channel.queueDeclare(queueName, true, false, false, arguments);
        // Bind in code; otherwise the binding must be created manually in the management UI
        channel.queueBind(queueName, exchangeName, routingKey);

        // Declare the dead letter exchange and queue
        channel.exchangeDeclare("dlx.exchange", "topic", true, false, null);
        channel.queueDeclare("dlx.queue", true, false, false, null);
        // The routing key "#" matches every message
        channel.queueBind("dlx.queue", "dlx.exchange", "#");

        Consumer consumer = new DefaultConsumer(channel) {
            @Override
            public void handleDelivery(String consumerTag, Envelope envelope,
                                       AMQP.BasicProperties properties, byte[] body) throws IOException {
                String message = new String(body, StandardCharsets.UTF_8);
                System.out.println(" [x] Received '" + message + "'");
            }
        };
        // 6. Consume from the normal queue with autoAck = true
        channel.basicConsume(queueName, true, consumer);
    }
}
```

Summary

A DLX is an ordinary exchange, no different from any other, and it can be attached to any queue: configuring it simply sets a property (argument) on that queue. When a message in the queue becomes dead, RabbitMQ automatically republishes it to the configured exchange, which routes it to another queue. You can consume from that queue and handle the messages as needed.

Source: https://www.cnblogs.com/haixiang/p/10905189.html Thank you for your reference- http://bjbsair.com/2020-04-01/tech-info/18382.html

Current restriction at the consumer end#

1. Why limit the flow to the consumer#

Suppose a scenario, first of all, our Rabbitmq server has a backlog of tens of thousands of unprocessed messages. If we open a consumer client, we will see this situation: a huge amount of messages are pushed all at once, but our single client cannot process so much data at the same time!

When the amount of data is very large, it is certainly unscientific for us to restrict the flow at the production end, because sometimes the concurrent amount is very large, sometimes the concurrent amount is very small, we cannot restrict the production end, which is the behavior of users. Therefore, we should limit the flow to the consumer side to maintain the stability of the consumer side. When the number of messages surges, it is likely to cause resource exhaustion and affect the performance of the service, resulting in system stuck or even directly crash.

2. api explanation of current limiting#

RabbitMQ provides a QoS (quality of service) function, that is, on the premise of not automatically confirming messages, if a certain number of messages (by setting the value of QoS based on consumption or channel) are not confirmed, new messages will not be consume d.

Copy/** * Request specific "quality of service" settings. * These settings impose limits on the amount of data the server * will deliver to consumers before requiring acknowledgements. * Thus they provide a means of consumer-initiated flow control. * @param prefetchSize maximum amount of content (measured in * octets) that the server will deliver, 0 if unlimited * @param prefetchCount maximum number of messages that the server * will deliver, 0 if unlimited * @param global true if the settings should be applied to the * entire channel rather than each consumer * @throws java.io.IOException if an error is encountered */ void basicQos(int prefetchSize, int prefetchCount, boolean global) throws IOException;

- prefetchSize: 0, single message size limit, 0 means unlimited

- prefetchCount: the number of messages consumed at one time. RabbitMQ will be told not to push more than N messages to a consumer at the same time, that is, once there are N messages without ACK, the consumer will block until there is ack.

- global: true, false whether to apply the above settings to the channel, in short, whether the above limit is channel level or consumer level. When we set it to false, it takes effect. When we set it to true, there is no current limiting function, because the channel level has not been implemented.

- Note: for prefetchSize and global, rabbitmq has not been implemented and will not be studied for now. In particular, prefetchCount takes effect only when no_ask=false, that is, the two values do not take effect in the case of automatic response.

3. How to limit the flow of consumer#

- First of all, since we want to use the current restriction on the consumer side, we need to turn off the automatic ack and set the autoAck to false channel.basicconsume (queuename, false, consumer);

- The second step is to set the specific current limiting size and quantity. channel.basicQos(0, 15, false);

- The third step is to manually ack in the consumer's handleDelivery consumption method, and set the batch processing ack response to truechannel.basicAck(envelope.getDeliveryTag(), true);

This is the production side code. There is no change to the production side code in the previous chapters. The main operation is focused on the consumer side.

Copyimport com.rabbitmq.client.Channel; import com.rabbitmq.client.Connection; import com.rabbitmq.client.ConnectionFactory; public class QosProducer { public static void main(String[] args) throws Exception { //1. Create a ConnectionFactory and set it ConnectionFactory factory = new ConnectionFactory(); factory.setHost("localhost"); factory.setVirtualHost("/"); factory.setUsername("guest"); factory.setPassword("guest"); //2. Create a connection through a connection factory Connection connection = factory.newConnection(); //3. Create Channel through Connection Channel channel = connection.createChannel(); //4. statement String exchangeName = "test_qos_exchange"; String routingKey = "item.add"; //5. send String msg = "this is qos msg"; for (int i = 0; i < 10; i++) { String tem = msg + " : " + i; channel.basicPublish(exchangeName, routingKey, null, tem.getBytes()); System.out.println("Send message : " + tem); } //6. Close the connection channel.close(); connection.close(); } }

Here we create a consumer to verify that the current limiting effect and the global parameter setting to true do not work. We use Thread.sleep(5000); to slow down the ack processing process, so that we can clearly observe the flow restriction from the background management tool.

Copyimport com.rabbitmq.client.*; import java.io.IOException; public class QosConsumer { public static void main(String[] args) throws Exception { //1. Create a ConnectionFactory and set it ConnectionFactory factory = new ConnectionFactory(); factory.setHost("localhost"); factory.setVirtualHost("/"); factory.setUsername("guest"); factory.setPassword("guest"); factory.setAutomaticRecoveryEnabled(true); factory.setNetworkRecoveryInterval(3000); //2. Create a connection through a connection factory Connection connection = factory.newConnection(); //3. Create Channel through Connection final Channel channel = connection.createChannel(); //4. statement String exchangeName = "test_qos_exchange"; String queueName = "test_qos_queue"; String routingKey = "item.#"; channel.exchangeDeclare(exchangeName, "topic", true, false, null); channel.queueDeclare(queueName, true, false, false, null); channel.basicQos(0, 3, false); //In general, code binding is not required, but manual binding is required in the management interface channel.queueBind(queueName, exchangeName, routingKey); //5. Create consumers and receive messages Consumer consumer = new DefaultConsumer(channel) { @Override public void handleDelivery(String consumerTag, Envelope envelope, AMQP.BasicProperties properties, byte[] body) throws IOException { try { Thread.sleep(5000); } catch (InterruptedException e) { e.printStackTrace(); } String message = new String(body, "UTF-8"); System.out.println("[x] Received '" + message + "'"); channel.basicAck(envelope.getDeliveryTag(), true); } }; //6. Set Channel consumer binding queue channel.basicConsume(queueName, false, consumer); channel.basicConsume(queueName, false, consumer1); } }

From the figure below, we find that the Unacked value is always 3, and the consumption of a message every 5 seconds, i.e. Ready and Total, is reduced by 3. The Unacked value here represents the message that the consumer is processing. Through our experiment, we find that the consumer can process up to 3 messages at a time, which achieves the expected function of consumer flow restriction.

When we set the global in void basicQos(int prefetchSize, int prefetchCount, boolean global) to true, we find that it has no current limiting effect.

TTL#

TTL is the abbreviation of Time To Live, that is, Time To Live. RabbitMQ supports message expiration time, which can be specified when sending messages. RabbitMQ supports the expiration time of queues, which is calculated from the time messages enter the queue. As long as the queue timeout configuration is exceeded, messages will be automatically cleared.

This is similar to the concept of expiration time in Redis. We should reasonably use TTL technology, which can effectively deal with expired garbage messages, so as to reduce the load of the server and maximize the performance of the server.

RabbitMQ allows you to set TTL (time to live) for both messages and queues. This can be done using optional queue arguments or policies (the latter option is recommended). Message TTL can be enforced for a single queue, a group of queues or applied for individual messages.

RabbitMQ Allows you to set up for messages and queues TTL(Time to live). This can be done using optional queue parameters or policies (the latter option is recommended). Messages can be enforced on a single queue, a group of queues TTL,You can also apply messages to a single message TTL.

-Excerpt from RabbitMQ Official documents

1. TTL of message#

When sending messages at the production side, we can specify the expiration property in properties to set the message expiration time, in milliseconds (ms).

Copy /** * deliverMode Set to 2 for persistent messages * expiration It means to set the validity period of the message, which will be automatically deleted after it is not received by the consumer for more than 10 seconds * headers Some custom properties * */ //5. send Map<String, Object> headers = new HashMap<String, Object>(); headers.put("myhead1", "111"); headers.put("myhead2", "222"); AMQP.BasicProperties properties = new AMQP.BasicProperties().builder() .deliveryMode(2) .contentEncoding("UTF-8") .expiration("100000") .headers(headers) .build(); String msg = "test message"; channel.basicPublish("", queueName, properties, msg.getBytes());

We can also enter the Exchange send message to specify expiration in the background management page

2. TTL of the queue#

We can also add a queue in the background management interface. ttl can be set when creating. Messages in the queue that have exceeded this time will be removed.

Dead letter queue#

Dead letter queue: the queue of messages not consumed in time

Reasons for the news not being consumed in time:

- a. Message rejected (basic.reject/ basic.nack) and no longer resend request = false

- b. TTL (time to live) message timeout not consumed

- c. Maximum queue length reached

Steps to implement dead letter queue#

- First, you need to set exchange and queue of dead letter queue, and then bind: CopyExchange: dlx.exchange Queue: dlx.queue RoutingKey: ා for receiving all routing keys

- Then we declare the switch, queue and binding normally, but we need to add a parameter to the normal queue: arguments. Put ("x-dead-letter-exchange", 'Dlx. Exchange')

- In this way, when the message expires, the request fails, and the queue reaches the maximum length, the message can be directly routed to the dead letter queue!

Copyimport com.rabbitmq.client.AMQP; import com.rabbitmq.client.Channel; import com.rabbitmq.client.Connection; import com.rabbitmq.client.ConnectionFactory; public class DlxProducer { public static void main(String[] args) throws Exception { //Setting up connections and creating channel lake green String exchangeName = "test_dlx_exchange"; String routingKey = "item.update"; String msg = "this is dlx msg"; //We set the message expiration time, and then consume it 10 seconds later to send the message to the dead letter queue AMQP.BasicProperties properties = new AMQP.BasicProperties().builder() .deliveryMode(2) .expiration("10000") .build(); channel.basicPublish(exchangeName, routingKey, true, properties, msg.getBytes()); System.out.println("Send message : " + msg); channel.close(); connection.close(); } }

Copyimport com.rabbitmq.client.*; import java.io.IOException; import java.util.HashMap; import java.util.Map; public class DlxConsumer { public static void main(String[] args) throws Exception { //Create a connection, create a channel, and ignore the content, which can be obtained in the above code String exchangeName = "test_dlx_exchange"; String queueName = "test_dlx_queue"; String routingKey = "item.#"; //Parameters must be set in arguments Map<String, Object> arguments = new HashMap<String, Object>(); arguments.put("x-dead-letter-exchange", "dlx.exchange"); channel.exchangeDeclare(exchangeName, "topic", true, false, null); //Put arguments in the declaration of the queue channel.queueDeclare(queueName, true, false, false, arguments); //In general, code binding is not required, but manual binding is required in the management interface channel.queueBind(queueName, exchangeName, routingKey); //Declare dead letter queue channel.exchangeDeclare("dlx.exchange", "topic", true, false, null); channel.queueDeclare("dlx.queue", true, false, false, null); //If the routing key is ා, all messages can be routed channel.queueBind("dlx.queue", "dlx.exchange", "#"); Consumer consumer = new DefaultConsumer(channel) { @Override public void handleDelivery(String consumerTag, Envelope envelope, AMQP.BasicProperties properties, byte[] body) throws IOException { String message = new String(body, "UTF-8"); System.out.println(" [x] Received '" + message + "'"); } }; //6. Set Channel consumer binding queue channel.basicConsume(queueName, true, consumer); } }

Sum up

DLX is also a normal Exchange, which is no different from general Exchange. It can be specified on any queue, in fact, it is to set the properties of a queue. When there is dead message in the queue, RabbitMQ will automatically republish the message to the set Exchange, and then it will be routed to another queue. You can listen to messages in this queue and process them accordingly.

Source: https://www.cnblogs.com/haixiang/p/10905189.html Thank you for your reference- http://bjbsair.com/2020-04-01/tech-info/18382.html

Current restriction at the consumer end#

1. Why limit the flow to the consumer#

Suppose a scenario, first of all, our Rabbitmq server has a backlog of tens of thousands of unprocessed messages. If we open a consumer client, we will see this situation: a huge amount of messages are pushed all at once, but our single client cannot process so much data at the same time!

When the amount of data is very large, it is certainly unscientific for us to restrict the flow at the production end, because sometimes the concurrent amount is very large, sometimes the concurrent amount is very small, we cannot restrict the production end, which is the behavior of users. Therefore, we should limit the flow to the consumer side to maintain the stability of the consumer side. When the number of messages surges, it is likely to cause resource exhaustion and affect the performance of the service, resulting in system stuck or even directly crash.

2. api explanation of current limiting#

RabbitMQ provides a QoS (quality of service) function, that is, on the premise of not automatically confirming messages, if a certain number of messages (by setting the value of QoS based on consumption or channel) are not confirmed, new messages will not be consume d.

Copy/** * Request specific "quality of service" settings. * These settings impose limits on the amount of data the server * will deliver to consumers before requiring acknowledgements. * Thus they provide a means of consumer-initiated flow control. * @param prefetchSize maximum amount of content (measured in * octets) that the server will deliver, 0 if unlimited * @param prefetchCount maximum number of messages that the server * will deliver, 0 if unlimited * @param global true if the settings should be applied to the * entire channel rather than each consumer * @throws java.io.IOException if an error is encountered */ void basicQos(int prefetchSize, int prefetchCount, boolean global) throws IOException;

- prefetchSize: 0, single message size limit, 0 means unlimited

- prefetchCount: the number of messages consumed at one time. RabbitMQ will be told not to push more than N messages to a consumer at the same time, that is, once there are N messages without ACK, the consumer will block until there is ack.

- global: true, false whether to apply the above settings to the channel, in short, whether the above limit is channel level or consumer level. When we set it to false, it takes effect. When we set it to true, there is no current limiting function, because the channel level has not been implemented.

- Note: for prefetchSize and global, rabbitmq has not been implemented and will not be studied for now. In particular, prefetchCount takes effect only when no_ask=false, that is, the two values do not take effect in the case of automatic response.

3. How to limit the flow of consumer#

- First of all, since we want to use the current restriction on the consumer side, we need to turn off the automatic ack and set the autoAck to false channel.basicconsume (queuename, false, consumer);

- The second step is to set the specific current limiting size and quantity. channel.basicQos(0, 15, false);

- The third step is to manually ack in the consumer's handleDelivery consumption method, and set the batch processing ack response to truechannel.basicAck(envelope.getDeliveryTag(), true);

This is the production side code. There is no change to the production side code in the previous chapters. The main operation is focused on the consumer side.

Copyimport com.rabbitmq.client.Channel; import com.rabbitmq.client.Connection; import com.rabbitmq.client.ConnectionFactory; public class QosProducer { public static void main(String[] args) throws Exception { //1. Create a ConnectionFactory and set it ConnectionFactory factory = new ConnectionFactory(); factory.setHost("localhost"); factory.setVirtualHost("/"); factory.setUsername("guest"); factory.setPassword("guest"); //2. Create a connection through a connection factory Connection connection = factory.newConnection(); //3. Create Channel through Connection Channel channel = connection.createChannel(); //4. statement String exchangeName = "test_qos_exchange"; String routingKey = "item.add"; //5. send String msg = "this is qos msg"; for (int i = 0; i < 10; i++) { String tem = msg + " : " + i; channel.basicPublish(exchangeName, routingKey, null, tem.getBytes()); System.out.println("Send message : " + tem); } //6. Close the connection channel.close(); connection.close(); } }

Here we create a consumer to verify that the current limiting effect and the global parameter setting to true do not work. We use Thread.sleep(5000); to slow down the ack processing process, so that we can clearly observe the flow restriction from the background management tool.

Copyimport com.rabbitmq.client.*; import java.io.IOException; public class QosConsumer { public static void main(String[] args) throws Exception { //1. Create a ConnectionFactory and set it ConnectionFactory factory = new ConnectionFactory(); factory.setHost("localhost"); factory.setVirtualHost("/"); factory.setUsername("guest"); factory.setPassword("guest"); factory.setAutomaticRecoveryEnabled(true); factory.setNetworkRecoveryInterval(3000); //2. Create a connection through a connection factory Connection connection = factory.newConnection(); //3. Create Channel through Connection final Channel channel = connection.createChannel(); //4. statement String exchangeName = "test_qos_exchange"; String queueName = "test_qos_queue"; String routingKey = "item.#"; channel.exchangeDeclare(exchangeName, "topic", true, false, null); channel.queueDeclare(queueName, true, false, false, null); channel.basicQos(0, 3, false); //In general, code binding is not required, but manual binding is required in the management interface channel.queueBind(queueName, exchangeName, routingKey); //5. Create consumers and receive messages Consumer consumer = new DefaultConsumer(channel) { @Override public void handleDelivery(String consumerTag, Envelope envelope, AMQP.BasicProperties properties, byte[] body) throws IOException { try { Thread.sleep(5000); } catch (InterruptedException e) { e.printStackTrace(); } String message = new String(body, "UTF-8"); System.out.println("[x] Received '" + message + "'"); channel.basicAck(envelope.getDeliveryTag(), true); } }; //6. Set Channel consumer binding queue channel.basicConsume(queueName, false, consumer); channel.basicConsume(queueName, false, consumer1); } }

In the management UI (see the figure below), the Unacked value stays at 3 while one message is processed and acknowledged every 5 seconds, so Ready and Total each drop by one every 5 seconds. Unacked counts messages that have been delivered to the consumer but not yet acknowledged. The experiment shows that the broker never has more than 3 unacknowledged messages outstanding to the consumer, which is exactly the consumer-side flow control we wanted.
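To make these numbers concrete, here is a toy, broker-free simulation of the prefetch window. The class PrefetchSimulation and its methods are hypothetical and are not part of the RabbitMQ Java client. The sketch assumes only the behaviour described above: the broker keeps pushing messages until prefetchCount deliveries are unacknowledged, and an ack with multiple=true settles every delivery tag up to and including the given one.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.TreeSet;

/** Toy model of basic.qos prefetch for one consumer (hypothetical, not the client API). */
class PrefetchSimulation {
    private final int prefetchCount;                 // 0 means unlimited, as in basicQos
    private final Deque<String> ready = new ArrayDeque<>();
    private final TreeSet<Long> unacked = new TreeSet<>();
    private long nextTag = 1;                        // delivery tags on a channel start at 1

    PrefetchSimulation(int prefetchCount) {
        this.prefetchCount = prefetchCount;
    }

    /** A producer publishes a message; the "broker" delivers it if the window has room. */
    void publish(String body) {
        ready.addLast(body);
        pump();
    }

    /** Push ready messages to the consumer while unacked < prefetchCount. */
    private void pump() {
        while (!ready.isEmpty() && (prefetchCount == 0 || unacked.size() < prefetchCount)) {
            ready.pollFirst();
            unacked.add(nextTag++);
        }
    }

    /** Mirrors basicAck(tag, multiple): multiple=true settles every tag <= deliveryTag. */
    void ack(long deliveryTag, boolean multiple) {
        if (multiple) {
            unacked.headSet(deliveryTag, true).clear();
        } else {
            unacked.remove(deliveryTag);
        }
        pump();
    }

    int readyCount()   { return ready.size(); }
    int unackedCount() { return unacked.size(); }
    int totalCount()   { return ready.size() + unacked.size(); }
}
```

With prefetchCount = 3 and 10 published messages, the model starts with Unacked = 3 and Ready = 7; each single ack frees one slot and the next message is pushed at once, so Unacked stays at 3 while Ready and Total shrink.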

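When we set global in void basicQos(int prefetchSize, int prefetchCount, boolean global) to true, we did not observe a limiting effect. Note, however, that RabbitMQ does implement both settings, with its own reading of the flag: with global=false the prefetchCount applies to each consumer on the channel separately, and with global=true it is shared by all consumers on the channel. Quorum queues do not support the shared (global) mode, so the outcome can depend on the queue type and broker version.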
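RabbitMQ's documentation gives the global flag its own meaning, which differs from the AMQP 0-9-1 specification. With global=false the limit applies to each consumer on the channel separately. With global=true it is shared by all consumers on the channel. The hypothetical ChannelQosSimulation below is a toy model of that rule and not the client API. It assumes two consumers on one channel and prefetchCount = 3.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Toy model (hypothetical) of RabbitMQ's interpretation of basic.qos "global":
 *   global=false -> prefetchCount applies to each consumer separately
 *   global=true  -> prefetchCount is shared by all consumers on the channel
 */
class ChannelQosSimulation {
    private final int prefetchCount;   // 0 = unlimited
    private final boolean global;
    private final Map<String, Integer> unackedPerConsumer = new LinkedHashMap<>();

    ChannelQosSimulation(int prefetchCount, boolean global) {
        this.prefetchCount = prefetchCount;
        this.global = global;
    }

    void addConsumer(String consumerTag) {
        unackedPerConsumer.put(consumerTag, 0);
    }

    /** Unacknowledged deliveries summed over every consumer on the channel. */
    int channelUnacked() {
        int sum = 0;
        for (int n : unackedPerConsumer.values()) sum += n;
        return sum;
    }

    /** Returns true if the broker may push one more message to this consumer. */
    boolean tryDeliver(String consumerTag) {
        int current = unackedPerConsumer.get(consumerTag);
        int inUse = global ? channelUnacked() : current;
        if (prefetchCount != 0 && inUse >= prefetchCount) return false;
        unackedPerConsumer.put(consumerTag, current + 1);
        return true;
    }

    /** Round-robin a backlog over the consumers (no acks); returns how many were delivered. */
    int fill(int backlog) {
        int delivered = 0;
        boolean progress = true;
        while (delivered < backlog && progress) {
            progress = false;
            for (String tag : new ArrayList<>(unackedPerConsumer.keySet())) {
                if (delivered < backlog && tryDeliver(tag)) {
                    delivered++;
                    progress = true;
                }
            }
        }
        return delivered;
    }
}
```

Under this model, global=false lets the two consumers hold 6 unacknowledged messages between them, while global=true caps the whole channel at 3. With a single consumer, both settings give the same limit of 3.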

TTL

TTL stands for Time To Live. RabbitMQ supports expiration times on messages, specified when a message is published, and on queues. The TTL is counted from the moment a message enters the queue. Once a message has stayed in the queue longer than the TTL, it is removed automatically.

This is similar to key expiration in Redis. Used sensibly, TTL discards stale messages automatically, which reduces the load on the broker.

RabbitMQ allows you to set TTL (time to live) for both messages and queues. This can be done using optional queue arguments or policies (the latter option is recommended). Message TTL can be enforced for a single queue, a group of queues or applied for individual messages.


(Excerpt from the RabbitMQ official documentation)
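A TTL can come from both the queue and the message, so it helps to spell out how the two combine. RabbitMQ's documentation states that when both a per-queue TTL and a per-message TTL are set, the lower of the two applies. As described above, the clock starts when the message enters the queue. The helper class TtlRules below is a hypothetical sketch of those two rules, not a RabbitMQ API. Treating a message as expired once its age reaches the TTL is a simplifying assumption.

```java
import java.util.OptionalLong;

/** Hypothetical helper illustrating how a message's effective TTL is resolved. */
final class TtlRules {
    private TtlRules() {}

    /** If both a queue TTL (x-message-ttl) and a message expiration are set, the lower applies. */
    static OptionalLong effectiveTtlMillis(OptionalLong queueTtl, OptionalLong messageTtl) {
        if (queueTtl.isPresent() && messageTtl.isPresent()) {
            return OptionalLong.of(Math.min(queueTtl.getAsLong(), messageTtl.getAsLong()));
        }
        return queueTtl.isPresent() ? queueTtl : messageTtl;
    }

    /** Age is measured from enqueue time; this sketch expires a message once age reaches the TTL. */
    static boolean isExpired(long enqueuedAtMillis, long nowMillis, OptionalLong ttl) {
        return ttl.isPresent() && nowMillis - enqueuedAtMillis >= ttl.getAsLong();
    }
}
```

This models the rules, not the broker's timing. With per-message TTL, RabbitMQ only discards (or dead-letters) an expired message once it reaches the head of the queue.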

1. Message TTL

When publishing, the producer can give a message a TTL by setting the expiration field in its properties. The value is in milliseconds.

```java
import com.rabbitmq.client.AMQP;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

// Fragment: assumes `channel` is an open Channel and `queueName` names an existing queue.
// deliveryMode(2): persistent message
// expiration:      TTL in milliseconds, passed as a String; "10000" = 10 seconds. If the
//                  message stays in the queue longer than that, it is removed.
// headers:         custom application properties
Map<String, Object> headers = new HashMap<>();
headers.put("myhead1", "111");
headers.put("myhead2", "222");

AMQP.BasicProperties properties = new AMQP.BasicProperties.Builder()
        .deliveryMode(2)
        .contentEncoding("UTF-8")
        .expiration("10000")
        .headers(headers)
        .build();

String msg = "test message";
// Publish through the default exchange (""), which routes directly by queue name
channel.basicPublish("", queueName, properties, msg.getBytes(StandardCharsets.UTF_8));
```
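A common mistake with the expiration property is its type and unit: the builder's expiration(...) takes a String holding milliseconds, so 10 seconds is "10000". A small helper can derive the string from a java.time.Duration instead of writing it by hand. The name Expiration.of is hypothetical.

```java
import java.time.Duration;

/** Hypothetical helper: the AMQP expiration property is a String of milliseconds. */
final class Expiration {
    private Expiration() {}

    static String of(Duration ttl) {
        if (ttl.isNegative()) {
            throw new IllegalArgumentException("TTL must not be negative: " + ttl);
        }
        return Long.toString(ttl.toMillis());
    }
}
```

Usage with the builder shown above: `.expiration(Expiration.of(Duration.ofSeconds(10)))`.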

The expiration can also be set when publishing a message from an exchange's page in the management UI.

2. Queue TTL

A TTL can also be set when creating a queue in the management UI (the x-message-ttl argument). Messages that remain in the queue longer than this are removed.
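In code, the same queue-level TTL is passed to queueDeclare as the x-message-ttl argument, an integer number of milliseconds. The helper below is a hypothetical sketch that only builds the argument map. The queue name in the usage note is also an assumption.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical helper building queueDeclare arguments for a per-queue message TTL. */
final class QueueTtlArgs {
    private QueueTtlArgs() {}

    static Map<String, Object> messageTtl(int millis) {
        if (millis < 0) {
            throw new IllegalArgumentException("x-message-ttl must be >= 0");
        }
        Map<String, Object> args = new HashMap<>();
        args.put("x-message-ttl", millis);   // integer number of milliseconds
        return args;
    }
}
```

Usage: `channel.queueDeclare("test_ttl_queue", true, false, false, QueueTtlArgs.messageTtl(10000));`. Queue arguments cannot be changed by redeclaring the queue: a mismatch fails with a PRECONDITION_FAILED channel error. That is why policies are easier to adjust later.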

Dead letter queue

A dead letter queue holds messages that could not be consumed normally ("dead" messages).

A message becomes a dead letter when:

- a. It is rejected (basic.reject or basic.nack) with requeue=false, so it is not put back in the queue.

- b. Its TTL expires before it is consumed.

- c. The queue has reached its maximum length.
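The three cases listed above can be illustrated with a toy in-memory queue. DeadLetteringQueue is hypothetical and far simpler than the broker. It assumes the default overflow behaviour (drop-head, where the oldest message is dead-lettered). It also uses the reason strings that RabbitMQ records in the x-death header: rejected, expired and maxlen.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Toy queue (hypothetical, not the RabbitMQ API) showing the three dead-letter triggers. */
class DeadLetteringQueue {
    record Msg(String body, long enqueuedAt, long ttlMillis) {}
    record DeadLetter(String body, String reason) {}

    private final int maxLength;
    private final Deque<Msg> messages = new ArrayDeque<>();
    final List<DeadLetter> deadLetters = new ArrayList<>();

    DeadLetteringQueue(int maxLength) {
        this.maxLength = maxLength;
    }

    /** c. On overflow, drop-head dead-letters the oldest messages. */
    void publish(String body, long now, long ttlMillis) {
        messages.addLast(new Msg(body, now, ttlMillis));
        while (messages.size() > maxLength) {
            deadLetters.add(new DeadLetter(messages.pollFirst().body(), "maxlen"));
        }
    }

    /** b. Expired messages at the head are dead-lettered; returns the next live message or null. */
    Msg fetch(long now) {
        while (!messages.isEmpty()) {
            Msg head = messages.pollFirst();
            if (now - head.enqueuedAt() >= head.ttlMillis()) {
                deadLetters.add(new DeadLetter(head.body(), "expired"));
            } else {
                return head;
            }
        }
        return null;
    }

    /** a. basic.reject / basic.nack: requeue=true puts it back (roughly), false dead-letters it. */
    void reject(Msg msg, boolean requeue) {
        if (requeue) {
            messages.addFirst(msg);
        } else {
            deadLetters.add(new DeadLetter(msg.body(), "rejected"));
        }
    }
}
```

For example, with maxLength = 2, publishing three messages dead-letters the first as maxlen. Fetching after the second one's TTL has passed dead-letters it as expired. Rejecting the returned third message with requeue=false dead-letters it as rejected.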

Steps to implement a dead letter queue

- First, declare the dead letter exchange and queue and bind them: Exchange: dlx.exchange, Queue: dlx.queue, RoutingKey: # (matches all routing keys).

- Then declare the normal exchange, queue and binding as usual, but pass one extra argument when declaring the normal queue: arguments.put("x-dead-letter-exchange", "dlx.exchange").

- From then on, whenever a message in the normal queue expires, is rejected without requeue, or is pushed out because the queue reached its maximum length, RabbitMQ republishes it to dlx.exchange, which routes it to the dead letter queue.
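The argument map for the normal queue can be built in one place. This sketch uses a hypothetical class name, DeadLetterArgs. It always sets x-dead-letter-exchange and can optionally set two more arguments. x-dead-letter-routing-key replaces the routing key used when the message is dead-lettered; without it, the original routing key is kept. x-max-length makes overflow a dead-lettering trigger as well.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical helper building queueDeclare arguments for a queue with a dead letter exchange. */
final class DeadLetterArgs {
    private DeadLetterArgs() {}

    static Map<String, Object> forExchange(String dlxName, String dlxRoutingKey, Integer maxLength) {
        Map<String, Object> args = new HashMap<>();
        args.put("x-dead-letter-exchange", dlxName);
        if (dlxRoutingKey != null) {
            // Optional: routing key used when republishing to the DLX
            args.put("x-dead-letter-routing-key", dlxRoutingKey);
        }
        if (maxLength != null) {
            // Optional: cap the queue length so overflow is dead-lettered too
            args.put("x-max-length", maxLength);
        }
        return args;
    }
}
```

Usage: `channel.queueDeclare("test_dlx_queue", true, false, false, DeadLetterArgs.forExchange("dlx.exchange", null, null));`.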

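```java
import com.rabbitmq.client.AMQP;
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

import java.nio.charset.StandardCharsets;

public class DlxProducer {
    public static void main(String[] args) throws Exception {
        // Connection setup, as in QosProducer
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        factory.setVirtualHost("/");
        factory.setUsername("guest");
        factory.setPassword("guest");

        try (Connection connection = factory.newConnection();
             Channel channel = connection.createChannel()) {
            String exchangeName = "test_dlx_exchange";
            String routingKey = "item.update";
            String msg = "this is dlx msg";

            // 10-second TTL: if nobody consumes the message from test_dlx_queue within
            // 10 seconds, it expires and is dead-lettered to dlx.exchange -> dlx.queue
            AMQP.BasicProperties properties = new AMQP.BasicProperties.Builder()
                    .deliveryMode(2)
                    .expiration("10000")
                    .build();
            // mandatory=true: the broker returns the message if it cannot be routed to any queue
            channel.basicPublish(exchangeName, routingKey, true, properties,
                    msg.getBytes(StandardCharsets.UTF_8));
            System.out.println("Send message : " + msg);
        }
    }
}
```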

```java
import com.rabbitmq.client.*;

import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

public class DlxConsumer {
    public static void main(String[] args) throws Exception {
        // Connection setup, as in QosConsumer (left open so the consumer keeps running)
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        factory.setVirtualHost("/");
        factory.setUsername("guest");
        factory.setPassword("guest");
        Connection connection = factory.newConnection();
        Channel channel = connection.createChannel();

        String exchangeName = "test_dlx_exchange";
        String queueName = "test_dlx_queue";
        String routingKey = "item.#";

        // The dead-letter argument must be set on the normal queue
        Map<String, Object> arguments = new HashMap<>();
        arguments.put("x-dead-letter-exchange", "dlx.exchange");

        channel.exchangeDeclare(exchangeName, "topic", true, false, null);
        channel.queueDeclare(queueName, true, false, false, arguments);
        // Bind in code (the binding can also be created manually in the management UI)
        channel.queueBind(queueName, exchangeName, routingKey);

        // Declare the dead letter exchange and queue; "#" matches every routing key
        channel.exchangeDeclare("dlx.exchange", "topic", true, false, null);
        channel.queueDeclare("dlx.queue", true, false, false, null);
        channel.queueBind("dlx.queue", "dlx.exchange", "#");

        Consumer consumer = new DefaultConsumer(channel) {
            @Override
            public void handleDelivery(String consumerTag, Envelope envelope,
                                       AMQP.BasicProperties properties, byte[] body) throws IOException {
                String message = new String(body, StandardCharsets.UTF_8);
                System.out.println(" [x] Received '" + message + "'");
            }
        };

        // Consume the normal queue with autoAck=true. While this consumer runs, messages are
        // consumed before their TTL expires; to see them reach dlx.queue, run this class once
        // to declare everything, stop it, then run DlxProducer.
        channel.basicConsume(queueName, true, consumer);
    }
}
```

Summary

A DLX (dead letter exchange) is an ordinary exchange; nothing distinguishes it from any other exchange. It can be specified on any queue simply by setting an argument (or policy) on that queue. When a message in the queue becomes dead, RabbitMQ automatically republishes it to the configured exchange, which routes it to another queue. You can consume from that queue and handle the dead messages as needed.
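When consuming from dlx.queue, it is often useful to know why a message died. RabbitMQ adds an x-death header to dead-lettered messages. The header is a list of tables with fields such as reason, queue and count, documented as sorted most-recent-first. The helper below is a hypothetical sketch that reads the latest reason from properties.getHeaders(). It uses String.valueOf because the Java client typically delivers header strings as LongString rather than String.

```java
import java.util.List;
import java.util.Map;

/** Hypothetical helper: extract the most recent dead-lettering reason from x-death. */
final class DeathInfo {
    private DeathInfo() {}

    /** Returns e.g. "expired", "rejected" or "maxlen", or null if the header is absent. */
    static String latestReason(Map<String, Object> headers) {
        if (headers == null) return null;
        Object xDeath = headers.get("x-death");
        if (!(xDeath instanceof List<?> events) || events.isEmpty()) return null;
        // Entries are documented as most-recent-first, so index 0 is the latest event
        Object first = events.get(0);
        if (!(first instanceof Map<?, ?> event)) return null;
        Object reason = event.get("reason");
        return reason == null ? null : String.valueOf(reason);
    }
}
```

Inside handleDelivery of a consumer on dlx.queue, this could be called as `DeathInfo.latestReason(properties.getHeaders())`.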

Source: https://www.cnblogs.com/haixiang/p/10905189.html

Current restriction at the consumer end#

1. Why limit the flow to the consumer#

Suppose a scenario, first of all, our Rabbitmq server has a backlog of tens of thousands of unprocessed messages. If we open a consumer client, we will see this situation: a huge amount of messages are pushed all at once, but our single client cannot process so much data at the same time!

When the amount of data is very large, it is certainly unscientific for us to restrict the flow at the production end, because sometimes the concurrent amount is very large, sometimes the concurrent amount is very small, we cannot restrict the production end, which is the behavior of users. Therefore, we should limit the flow to the consumer side to maintain the stability of the consumer side. When the number of messages surges, it is likely to cause resource exhaustion and affect the performance of the service, resulting in system stuck or even directly crash.

2. api explanation of current limiting#

RabbitMQ provides a QoS (quality of service) function, that is, on the premise of not automatically confirming messages, if a certain number of messages (by setting the value of QoS based on consumption or channel) are not confirmed, new messages will not be consume d.

Copy/** * Request specific "quality of service" settings. * These settings impose limits on the amount of data the server * will deliver to consumers before requiring acknowledgements. * Thus they provide a means of consumer-initiated flow control. * @param prefetchSize maximum amount of content (measured in * octets) that the server will deliver, 0 if unlimited * @param prefetchCount maximum number of messages that the server * will deliver, 0 if unlimited * @param global true if the settings should be applied to the * entire channel rather than each consumer * @throws java.io.IOException if an error is encountered */ void basicQos(int prefetchSize, int prefetchCount, boolean global) throws IOException;

- prefetchSize: 0, single message size limit, 0 means unlimited

- prefetchCount: the number of messages consumed at one time. RabbitMQ will be told not to push more than N messages to a consumer at the same time, that is, once there are N messages without ACK, the consumer will block until there is ack.

- global: true, false whether to apply the above settings to the channel, in short, whether the above limit is channel level or consumer level. When we set it to false, it takes effect. When we set it to true, there is no current limiting function, because the channel level has not been implemented.

- Note: for prefetchSize and global, rabbitmq has not been implemented and will not be studied for now. In particular, prefetchCount takes effect only when no_ask=false, that is, the two values do not take effect in the case of automatic response.

3. How to limit the flow of consumer#

- First of all, since we want to use the current restriction on the consumer side, we need to turn off the automatic ack and set the autoAck to false channel.basicconsume (queuename, false, consumer);

- The second step is to set the specific current limiting size and quantity. channel.basicQos(0, 15, false);

- The third step is to manually ack in the consumer's handleDelivery consumption method, and set the batch processing ack response to truechannel.basicAck(envelope.getDeliveryTag(), true);

This is the production side code. There is no change to the production side code in the previous chapters. The main operation is focused on the consumer side.

Copyimport com.rabbitmq.client.Channel; import com.rabbitmq.client.Connection; import com.rabbitmq.client.ConnectionFactory; public class QosProducer { public static void main(String[] args) throws Exception { //1. Create a ConnectionFactory and set it ConnectionFactory factory = new ConnectionFactory(); factory.setHost("localhost"); factory.setVirtualHost("/"); factory.setUsername("guest"); factory.setPassword("guest"); //2. Create a connection through a connection factory Connection connection = factory.newConnection(); //3. Create Channel through Connection Channel channel = connection.createChannel(); //4. statement String exchangeName = "test_qos_exchange"; String routingKey = "item.add"; //5. send String msg = "this is qos msg"; for (int i = 0; i < 10; i++) { String tem = msg + " : " + i; channel.basicPublish(exchangeName, routingKey, null, tem.getBytes()); System.out.println("Send message : " + tem); } //6. Close the connection channel.close(); connection.close(); } }

Here we create a consumer to verify that the current limiting effect and the global parameter setting to true do not work. We use Thread.sleep(5000); to slow down the ack processing process, so that we can clearly observe the flow restriction from the background management tool.

Copyimport com.rabbitmq.client.*; import java.io.IOException; public class QosConsumer { public static void main(String[] args) throws Exception { //1. Create a ConnectionFactory and set it ConnectionFactory factory = new ConnectionFactory(); factory.setHost("localhost"); factory.setVirtualHost("/"); factory.setUsername("guest"); factory.setPassword("guest"); factory.setAutomaticRecoveryEnabled(true); factory.setNetworkRecoveryInterval(3000); //2. Create a connection through a connection factory Connection connection = factory.newConnection(); //3. Create Channel through Connection final Channel channel = connection.createChannel(); //4. statement String exchangeName = "test_qos_exchange"; String queueName = "test_qos_queue"; String routingKey = "item.#"; channel.exchangeDeclare(exchangeName, "topic", true, false, null); channel.queueDeclare(queueName, true, false, false, null); channel.basicQos(0, 3, false); //In general, code binding is not required, but manual binding is required in the management interface channel.queueBind(queueName, exchangeName, routingKey); //5. Create consumers and receive messages Consumer consumer = new DefaultConsumer(channel) { @Override public void handleDelivery(String consumerTag, Envelope envelope, AMQP.BasicProperties properties, byte[] body) throws IOException { try { Thread.sleep(5000); } catch (InterruptedException e) { e.printStackTrace(); } String message = new String(body, "UTF-8"); System.out.println("[x] Received '" + message + "'"); channel.basicAck(envelope.getDeliveryTag(), true); } }; //6. Set Channel consumer binding queue channel.basicConsume(queueName, false, consumer); channel.basicConsume(queueName, false, consumer1); } }

From the figure below, we find that the Unacked value is always 3, and the consumption of a message every 5 seconds, i.e. Ready and Total, is reduced by 3. The Unacked value here represents the message that the consumer is processing. Through our experiment, we find that the consumer can process up to 3 messages at a time, which achieves the expected function of consumer flow restriction.

When we set the global in void basicQos(int prefetchSize, int prefetchCount, boolean global) to true, we find that it has no current limiting effect.

TTL#

TTL is the abbreviation of Time To Live, that is, Time To Live. RabbitMQ supports message expiration time, which can be specified when sending messages. RabbitMQ supports the expiration time of queues, which is calculated from the time messages enter the queue. As long as the queue timeout configuration is exceeded, messages will be automatically cleared.

This is similar to the concept of expiration time in Redis. We should reasonably use TTL technology, which can effectively deal with expired garbage messages, so as to reduce the load of the server and maximize the performance of the server.

RabbitMQ allows you to set TTL (time to live) for both messages and queues. This can be done using optional queue arguments or policies (the latter option is recommended). Message TTL can be enforced for a single queue, a group of queues or applied for individual messages.

RabbitMQ Allows you to set up for messages and queues TTL(Time to live). This can be done using optional queue parameters or policies (the latter option is recommended). Messages can be enforced on a single queue, a group of queues TTL,You can also apply messages to a single message TTL.

-Excerpt from RabbitMQ Official documents

1. TTL of message#

When sending messages at the production side, we can specify the expiration property in properties to set the message expiration time, in milliseconds (ms).

Copy /** * deliverMode Set to 2 for persistent messages * expiration It means to set the validity period of the message, which will be automatically deleted after it is not received by the consumer for more than 10 seconds * headers Some custom properties * */ //5. send Map<String, Object> headers = new HashMap<String, Object>(); headers.put("myhead1", "111"); headers.put("myhead2", "222"); AMQP.BasicProperties properties = new AMQP.BasicProperties().builder() .deliveryMode(2) .contentEncoding("UTF-8") .expiration("100000") .headers(headers) .build(); String msg = "test message"; channel.basicPublish("", queueName, properties, msg.getBytes());

We can also enter the Exchange send message to specify expiration in the background management page

2. TTL of the queue#

We can also add a queue in the background management interface. ttl can be set when creating. Messages in the queue that have exceeded this time will be removed.

Dead letter queue#

Dead letter queue: the queue of messages not consumed in time

Reasons for the news not being consumed in time:

- a. Message rejected (basic.reject/ basic.nack) and no longer resend request = false

- b. TTL (time to live) message timeout not consumed

- c. Maximum queue length reached

Steps to implement dead letter queue#

- First, you need to set exchange and queue of dead letter queue, and then bind: CopyExchange: dlx.exchange Queue: dlx.queue RoutingKey: ා for receiving all routing keys

- Then we declare the switch, queue and binding normally, but we need to add a parameter to the normal queue: arguments. Put ("x-dead-letter-exchange", 'Dlx. Exchange')

- In this way, when the message expires, the request fails, and the queue reaches the maximum length, the message can be directly routed to the dead letter queue!

Copyimport com.rabbitmq.client.AMQP; import com.rabbitmq.client.Channel; import com.rabbitmq.client.Connection; import com.rabbitmq.client.ConnectionFactory; public class DlxProducer { public static void main(String[] args) throws Exception { //Setting up connections and creating channel lake green String exchangeName = "test_dlx_exchange"; String routingKey = "item.update"; String msg = "this is dlx msg"; //We set the message expiration time, and then consume it 10 seconds later to send the message to the dead letter queue AMQP.BasicProperties properties = new AMQP.BasicProperties().builder() .deliveryMode(2) .expiration("10000") .build(); channel.basicPublish(exchangeName, routingKey, true, properties, msg.getBytes()); System.out.println("Send message : " + msg); channel.close(); connection.close(); } }

Copyimport com.rabbitmq.client.*; import java.io.IOException; import java.util.HashMap; import java.util.Map; public class DlxConsumer { public static void main(String[] args) throws Exception { //Create a connection, create a channel, and ignore the content, which can be obtained in the above code String exchangeName = "test_dlx_exchange"; String queueName = "test_dlx_queue"; String routingKey = "item.#"; //Parameters must be set in arguments Map<String, Object> arguments = new HashMap<String, Object>(); arguments.put("x-dead-letter-exchange", "dlx.exchange"); channel.exchangeDeclare(exchangeName, "topic", true, false, null); //Put arguments in the declaration of the queue channel.queueDeclare(queueName, true, false, false, arguments); //In general, code binding is not required, but manual binding is required in the management interface channel.queueBind(queueName, exchangeName, routingKey); //Declare dead letter queue channel.exchangeDeclare("dlx.exchange", "topic", true, false, null); channel.queueDeclare("dlx.queue", true, false, false, null); //If the routing key is ා, all messages can be routed channel.queueBind("dlx.queue", "dlx.exchange", "#"); Consumer consumer = new DefaultConsumer(channel) { @Override public void handleDelivery(String consumerTag, Envelope envelope, AMQP.BasicProperties properties, byte[] body) throws IOException { String message = new String(body, "UTF-8"); System.out.println(" [x] Received '" + message + "'"); } }; //6. Set Channel consumer binding queue channel.basicConsume(queueName, true, consumer); } }

Sum up

DLX is also a normal Exchange, which is no different from general Exchange. It can be specified on any queue, in fact, it is to set the properties of a queue. When there is dead message in the queue, RabbitMQ will automatically republish the message to the set Exchange, and then it will be routed to another queue. You can listen to messages in this queue and process them accordingly.

Source: https://www.cnblogs.com/haixiang/p/10905189.html Thank you for your reference- http://bjbsair.com/2020-04-01/tech-info/18382.html

Current restriction at the consumer end#

1. Why limit the flow to the consumer#

Suppose a scenario, first of all, our Rabbitmq server has a backlog of tens of thousands of unprocessed messages. If we open a consumer client, we will see this situation: a huge amount of messages are pushed all at once, but our single client cannot process so much data at the same time!

When the amount of data is very large, it is certainly unscientific for us to restrict the flow at the production end, because sometimes the concurrent amount is very large, sometimes the concurrent amount is very small, we cannot restrict the production end, which is the behavior of users. Therefore, we should limit the flow to the consumer side to maintain the stability of the consumer side. When the number of messages surges, it is likely to cause resource exhaustion and affect the performance of the service, resulting in system stuck or even directly crash.

2. api explanation of current limiting#

RabbitMQ provides a QoS (quality of service) function, that is, on the premise of not automatically confirming messages, if a certain number of messages (by setting the value of QoS based on consumption or channel) are not confirmed, new messages will not be consume d.

Copy/** * Request specific "quality of service" settings. * These settings impose limits on the amount of data the server * will deliver to consumers before requiring acknowledgements. * Thus they provide a means of consumer-initiated flow control. * @param prefetchSize maximum amount of content (measured in * octets) that the server will deliver, 0 if unlimited * @param prefetchCount maximum number of messages that the server * will deliver, 0 if unlimited * @param global true if the settings should be applied to the * entire channel rather than each consumer * @throws java.io.IOException if an error is encountered */ void basicQos(int prefetchSize, int prefetchCount, boolean global) throws IOException;

- prefetchSize: 0, single message size limit, 0 means unlimited

- prefetchCount: the number of messages consumed at one time. RabbitMQ will be told not to push more than N messages to a consumer at the same time, that is, once there are N messages without ACK, the consumer will block until there is ack.

- global: true, false whether to apply the above settings to the channel, in short, whether the above limit is channel level or consumer level. When we set it to false, it takes effect. When we set it to true, there is no current limiting function, because the channel level has not been implemented.

- Note: for prefetchSize and global, rabbitmq has not been implemented and will not be studied for now. In particular, prefetchCount takes effect only when no_ask=false, that is, the two values do not take effect in the case of automatic response.

3. How to limit the flow of consumer#

- First of all, since we want to use the current restriction on the consumer side, we need to turn off the automatic ack and set the autoAck to false channel.basicconsume (queuename, false, consumer);

- The second step is to set the specific current limiting size and quantity. channel.basicQos(0, 15, false);

- The third step is to manually ack in the consumer's handleDelivery consumption method, and set the batch processing ack response to truechannel.basicAck(envelope.getDeliveryTag(), true);

This is the production side code. There is no change to the production side code in the previous chapters. The main operation is focused on the consumer side.

Copyimport com.rabbitmq.client.Channel; import com.rabbitmq.client.Connection; import com.rabbitmq.client.ConnectionFactory; public class QosProducer { public static void main(String[] args) throws Exception { //1. Create a ConnectionFactory and set it ConnectionFactory factory = new ConnectionFactory(); factory.setHost("localhost"); factory.setVirtualHost("/"); factory.setUsername("guest"); factory.setPassword("guest"); //2. Create a connection through a connection factory Connection connection = factory.newConnection(); //3. Create Channel through Connection Channel channel = connection.createChannel(); //4. statement String exchangeName = "test_qos_exchange"; String routingKey = "item.add"; //5. send String msg = "this is qos msg"; for (int i = 0; i < 10; i++) { String tem = msg + " : " + i; channel.basicPublish(exchangeName, routingKey, null, tem.getBytes()); System.out.println("Send message : " + tem); } //6. Close the connection channel.close(); connection.close(); } }

Here we create a consumer to verify that the current limiting effect and the global parameter setting to true do not work. We use Thread.sleep(5000); to slow down the ack processing process, so that we can clearly observe the flow restriction from the background management tool.

Copyimport com.rabbitmq.client.*; import java.io.IOException; public class QosConsumer { public static void main(String[] args) throws Exception { //1. Create a ConnectionFactory and set it ConnectionFactory factory = new ConnectionFactory(); factory.setHost("localhost"); factory.setVirtualHost("/"); factory.setUsername("guest"); factory.setPassword("guest"); factory.setAutomaticRecoveryEnabled(true); factory.setNetworkRecoveryInterval(3000); //2. Create a connection through a connection factory Connection connection = factory.newConnection(); //3. Create Channel through Connection final Channel channel = connection.createChannel(); //4. statement String exchangeName = "test_qos_exchange"; String queueName = "test_qos_queue"; String routingKey = "item.#"; channel.exchangeDeclare(exchangeName, "topic", true, false, null); channel.queueDeclare(queueName, true, false, false, null); channel.basicQos(0, 3, false); //In general, code binding is not required, but manual binding is required in the management interface channel.queueBind(queueName, exchangeName, routingKey); //5. Create consumers and receive messages Consumer consumer = new DefaultConsumer(channel) { @Override public void handleDelivery(String consumerTag, Envelope envelope, AMQP.BasicProperties properties, byte[] body) throws IOException { try { Thread.sleep(5000); } catch (InterruptedException e) { e.printStackTrace(); } String message = new String(body, "UTF-8"); System.out.println("[x] Received '" + message + "'"); channel.basicAck(envelope.getDeliveryTag(), true); } }; //6. Set Channel consumer binding queue channel.basicConsume(queueName, false, consumer); channel.basicConsume(queueName, false, consumer1); } }

From the figure below, we find that the Unacked value is always 3, and the consumption of a message every 5 seconds, i.e. Ready and Total, is reduced by 3. The Unacked value here represents the message that the consumer is processing. Through our experiment, we find that the consumer can process up to 3 messages at a time, which achieves the expected function of consumer flow restriction.

When we set the global in void basicQos(int prefetchSize, int prefetchCount, boolean global) to true, we find that it has no current limiting effect.

TTL#

TTL is the abbreviation of Time To Live, that is, Time To Live. RabbitMQ supports message expiration time, which can be specified when sending messages. RabbitMQ supports the expiration time of queues, which is calculated from the time messages enter the queue. As long as the queue timeout configuration is exceeded, messages will be automatically cleared.

This is similar to the concept of expiration time in Redis. We should reasonably use TTL technology, which can effectively deal with expired garbage messages, so as to reduce the load of the server and maximize the performance of the server.

RabbitMQ allows you to set TTL (time to live) for both messages and queues. This can be done using optional queue arguments or policies (the latter option is recommended). Message TTL can be enforced for a single queue, a group of queues or applied for individual messages.

RabbitMQ Allows you to set up for messages and queues TTL(Time to live). This can be done using optional queue parameters or policies (the latter option is recommended). Messages can be enforced on a single queue, a group of queues TTL,You can also apply messages to a single message TTL.

-Excerpt from RabbitMQ Official documents

1. TTL of message#

When sending messages at the production side, we can specify the expiration property in properties to set the message expiration time, in milliseconds (ms).

Copy /** * deliverMode Set to 2 for persistent messages * expiration It means to set the validity period of the message, which will be automatically deleted after it is not received by the consumer for more than 10 seconds * headers Some custom properties * */ //5. send Map<String, Object> headers = new HashMap<String, Object>(); headers.put("myhead1", "111"); headers.put("myhead2", "222"); AMQP.BasicProperties properties = new AMQP.BasicProperties().builder() .deliveryMode(2) .contentEncoding("UTF-8") .expiration("100000") .headers(headers) .build(); String msg = "test message"; channel.basicPublish("", queueName, properties, msg.getBytes());

We can also enter the Exchange send message to specify expiration in the background management page

2. TTL of the queue#

We can also add a queue in the background management interface. ttl can be set when creating. Messages in the queue that have exceeded this time will be removed.

Dead letter queue#

Dead letter queue: the queue of messages not consumed in time

Reasons for the news not being consumed in time:

- a. Message rejected (basic.reject/ basic.nack) and no longer resend request = false

- b. TTL (time to live) message timeout not consumed

- c. Maximum queue length reached

Steps to implement dead letter queue#

- First, you need to set exchange and queue of dead letter queue, and then bind: CopyExchange: dlx.exchange Queue: dlx.queue RoutingKey: ා for receiving all routing keys

- Then we declare the switch, queue and binding normally, but we need to add a parameter to the normal queue: arguments. Put ("x-dead-letter-exchange", 'Dlx. Exchange')

- In this way, when the message expires, the request fails, and the queue reaches the maximum length, the message can be directly routed to the dead letter queue!

Copyimport com.rabbitmq.client.AMQP; import com.rabbitmq.client.Channel; import com.rabbitmq.client.Connection; import com.rabbitmq.client.ConnectionFactory; public class DlxProducer { public static void main(String[] args) throws Exception { //Setting up connections and creating channel lake green String exchangeName = "test_dlx_exchange"; String routingKey = "item.update"; String msg = "this is dlx msg"; //We set the message expiration time, and then consume it 10 seconds later to send the message to the dead letter queue AMQP.BasicProperties properties = new AMQP.BasicProperties().builder() .deliveryMode(2) .expiration("10000") .build(); channel.basicPublish(exchangeName, routingKey, true, properties, msg.getBytes()); System.out.println("Send message : " + msg); channel.close(); connection.close(); } }

Copyimport com.rabbitmq.client.*; import java.io.IOException; import java.util.HashMap; import java.util.Map; public class DlxConsumer { public static void main(String[] args) throws Exception { //Create a connection, create a channel, and ignore the content, which can be obtained in the above code String exchangeName = "test_dlx_exchange"; String queueName = "test_dlx_queue"; String routingKey = "item.#"; //Parameters must be set in arguments Map<String, Object> arguments = new HashMap<String, Object>(); arguments.put("x-dead-letter-exchange", "dlx.exchange"); channel.exchangeDeclare(exchangeName, "topic", true, false, null); //Put arguments in the declaration of the queue channel.queueDeclare(queueName, true, false, false, arguments); //In general, code binding is not required, but manual binding is required in the management interface channel.queueBind(queueName, exchangeName, routingKey); //Declare dead letter queue channel.exchangeDeclare("dlx.exchange", "topic", true, false, null); channel.queueDeclare("dlx.queue", true, false, false, null); //If the routing key is ා, all messages can be routed channel.queueBind("dlx.queue", "dlx.exchange", "#"); Consumer consumer = new DefaultConsumer(channel) { @Override public void handleDelivery(String consumerTag, Envelope envelope, AMQP.BasicProperties properties, byte[] body) throws IOException { String message = new String(body, "UTF-8"); System.out.println(" [x] Received '" + message + "'"); } }; //6. Set Channel consumer binding queue channel.basicConsume(queueName, true, consumer); } }

Summary

A DLX is an ordinary exchange, no different from any other exchange. It can be specified on any queue; in effect, it is set as a property of that queue. When a message in the queue becomes dead, RabbitMQ automatically republishes it to the configured exchange, which then routes it to another queue. You can listen on that queue and handle the messages accordingly.
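The following toy in-memory model in plain Java makes the republishing step concrete. It is not the RabbitMQ client API. The class and field names (DeadLetterModel, workQueue, deadLetterQueue) are hypothetical. The model assumes the DLX has a single "#" binding, as in the consumer above. It also encodes one documented detail: a dead message keeps its original routing key unless the queue also sets the x-dead-letter-routing-key argument.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Toy model of dead lettering (hypothetical names, not the RabbitMQ client API).
// Assumption: the DLX has one "#" binding, so every republished message
// reaches the dead letter queue.
final class DeadLetterModel {

    static final class Message {
        final String routingKey;
        final String body;
        Message(String routingKey, String body) {
            this.routingKey = routingKey;
            this.body = body;
        }
    }

    final Deque<Message> workQueue = new ArrayDeque<>();     // e.g. test_dlx_queue
    final List<Message> deadLetterQueue = new ArrayList<>(); // e.g. dlx.queue
    private final String deadLetterRoutingKey;               // null = keep original key

    DeadLetterModel(String deadLetterRoutingKeyOrNull) {
        this.deadLetterRoutingKey = deadLetterRoutingKeyOrNull;
    }

    // Called when the message at the head of the work queue dies
    // (rejected without requeue, expired, or pushed out by a length limit).
    void deadLetterHead() {
        Message dead = workQueue.pollFirst();
        if (dead == null) {
            return;
        }
        // Republish with x-dead-letter-routing-key if the queue sets one,
        // otherwise with the routing key the message was originally published with.
        String key = (deadLetterRoutingKey != null) ? deadLetterRoutingKey : dead.routingKey;
        // The "#" binding on the DLX routes any key to the dead letter queue.
        deadLetterQueue.add(new Message(key, dead.body));
    }

    public static void main(String[] args) {
        DeadLetterModel model = new DeadLetterModel(null);
        model.workQueue.add(new Message("item.update", "this is dlx msg"));
        model.deadLetterHead();
        Message m = model.deadLetterQueue.get(0);
        System.out.println(m.routingKey + " -> " + m.body);
    }
}
```

Running main prints item.update -> this is dlx msg. The message keeps its original key because no override is configured.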

Source: https://www.cnblogs.com/haixiang/p/10905189.html

Consumer-side rate limiting

1. Why rate-limit on the consumer side

Consider this scenario: the RabbitMQ server has a backlog of tens of thousands of unprocessed messages. When we start a consumer client, a huge number of messages is pushed to it all at once, but a single client cannot process that much data at the same time.

When traffic is heavy, it makes little sense to throttle on the producer side. Concurrency is sometimes very high and sometimes very low, and it is driven by user behavior, which we cannot restrict. Instead, we should limit the flow on the consumer side to keep consumers stable. When the number of messages surges, an unthrottled consumer may exhaust its resources and degrade the service, causing it to freeze or even crash outright.

2. The rate-limiting API

RabbitMQ provides a QoS (quality of service) feature. When messages are not acknowledged automatically, RabbitMQ stops delivering new messages once a certain number of messages are still unacknowledged. That number is the QoS value set on the consumer or channel.

```java
/**
 * Request specific "quality of service" settings.
 * These settings impose limits on the amount of data the server
 * will deliver to consumers before requiring acknowledgements.
 * Thus they provide a means of consumer-initiated flow control.
 * @param prefetchSize maximum amount of content (measured in
 * octets) that the server will deliver, 0 if unlimited
 * @param prefetchCount maximum number of messages that the server
 * will deliver, 0 if unlimited
 * @param global true if the settings should be applied to the
 * entire channel rather than each consumer
 * @throws java.io.IOException if an error is encountered
 */
void basicQos(int prefetchSize, int prefetchCount, boolean global) throws IOException;
```

- prefetchSize: the maximum amount of message content (in octets) the server will deliver; 0 means unlimited.

- prefetchCount: the maximum number of unacknowledged messages. It tells RabbitMQ not to push more than N messages to a consumer at a time. Once N messages are awaiting an ack, RabbitMQ stops delivering to that consumer until at least one is acknowledged.

- global: whether the limit applies at the channel level (true) or the consumer level (false). RabbitMQ redefines this AMQP flag. With false, the limit applies separately to each new consumer on the channel; with true, it is shared by all consumers on the channel. In the experiment below, false produced the expected per-consumer limit.

- Note: RabbitMQ does not implement prefetchSize, so leave it at 0. More importantly, prefetchCount only takes effect when no_ack=false. With automatic acknowledgement, these settings have no effect.
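The prefetchCount rule can be pictured as a delivery window. The sketch below is a hypothetical model (PrefetchWindow is not a RabbitMQ class) that captures only the rule described above. The broker keeps delivering while the number of unacknowledged messages is below the limit, and 0 means unlimited. The model assumes manual acknowledgement; with auto-ack there is no window at all.

```java
// Toy model of prefetchCount for one consumer (illustration only,
// not RabbitMQ's implementation). Assumes manual acknowledgement.
final class PrefetchWindow {
    private final int prefetchCount; // 0 means unlimited, as in basicQos
    private int ready;               // messages waiting in the queue
    private int unacked;             // delivered but not yet acknowledged

    PrefetchWindow(int prefetchCount, int ready) {
        if (prefetchCount < 0 || ready < 0) {
            throw new IllegalArgumentException("counts must be non-negative");
        }
        this.prefetchCount = prefetchCount;
        this.ready = ready;
    }

    // Push as many messages as the limit allows; returns how many were sent.
    int dispatch() {
        int sent = 0;
        while (ready > 0 && (prefetchCount == 0 || unacked < prefetchCount)) {
            ready--;
            unacked++;
            sent++;
        }
        return sent;
    }

    // The consumer acknowledges one message, freeing one slot in the window.
    void ackOne() {
        if (unacked == 0) {
            throw new IllegalStateException("nothing to acknowledge");
        }
        unacked--;
    }

    int ready() { return ready; }
    int unacked() { return unacked; }

    public static void main(String[] args) {
        PrefetchWindow w = new PrefetchWindow(3, 10);
        System.out.println("sent " + w.dispatch() + ", unacked " + w.unacked() + ", ready " + w.ready());
        w.ackOne();
        System.out.println("sent " + w.dispatch() + ", unacked " + w.unacked() + ", ready " + w.ready());
    }
}
```

With a limit of 3 and 10 ready messages, the first dispatch sends 3 messages. After that, each ackOne() lets exactly one more message through.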

3. How to rate-limit a consumer

- First, because we want consumer-side rate limiting, we must turn off automatic acknowledgement by setting autoAck to false: channel.basicConsume(queueName, false, consumer);

- Second, set the prefetch limit: channel.basicQos(0, 15, false); Call it before basicConsume. With global = false, the limit applies only to consumers created after the call.

- Third, acknowledge manually in the consumer's handleDelivery method. Pass true for multiple so that the ack also covers any earlier unacknowledged deliveries: channel.basicAck(envelope.getDeliveryTag(), true);
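The multiple flag in the third step is easy to misread. Here is a toy model of its semantics; AckTracker is a hypothetical class, not part of the client library. Delivery tags increase per channel. With multiple = false, an ack covers only the given tag. With multiple = true, it covers that tag and every smaller tag still outstanding.

```java
import java.util.NavigableSet;
import java.util.TreeSet;

// Toy model of basicAck(deliveryTag, multiple) on one channel (hypothetical).
final class AckTracker {
    private final TreeSet<Long> outstanding = new TreeSet<>();

    void delivered(long deliveryTag) {
        outstanding.add(deliveryTag);
    }

    // Returns how many deliveries were acknowledged.
    // (A real broker closes the channel on an unknown tag; this model returns 0.)
    int ack(long deliveryTag, boolean multiple) {
        if (!multiple) {
            return outstanding.remove(deliveryTag) ? 1 : 0;
        }
        // headSet(tag, true) is a live view of all outstanding tags <= deliveryTag.
        NavigableSet<Long> acked = outstanding.headSet(deliveryTag, true);
        int count = acked.size();
        acked.clear(); // clearing the view removes those tags from 'outstanding'
        return count;
    }

    int outstanding() { return outstanding.size(); }
}
```

The consumer below acks each message as soon as it finishes. The earlier tags are therefore usually already acknowledged, and multiple = true typically covers only the current message.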

Below is the producer code. It is unchanged from the previous chapters; the main work happens on the consumer side.

```java
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

public class QosProducer {
    public static void main(String[] args) throws Exception {
        //1. Create a ConnectionFactory and configure it
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        factory.setVirtualHost("/");
        factory.setUsername("guest");
        factory.setPassword("guest");
        //2. Create a connection through the connection factory
        Connection connection = factory.newConnection();
        //3. Create a Channel through the Connection
        Channel channel = connection.createChannel();
        //4. Exchange name and routing key
        //   (test_qos_exchange is declared by QosConsumer, so start the consumer first)
        String exchangeName = "test_qos_exchange";
        String routingKey = "item.add";
        //5. Send 10 messages
        String msg = "this is qos msg";
        for (int i = 0; i < 10; i++) {
            String tem = msg + " : " + i;
            channel.basicPublish(exchangeName, routingKey, null, tem.getBytes());
            System.out.println("Send message : " + tem);
        }
        //6. Close the connection
        channel.close();
        connection.close();
    }
}
```

Here we create a consumer to verify the limiting effect and to see whether setting global to true makes a difference. We call Thread.sleep(5000); before each ack to slow processing down, so that the limiting is clearly visible in the management UI.

```java
import com.rabbitmq.client.*;
import java.io.IOException;

public class QosConsumer {
    public static void main(String[] args) throws Exception {
        //1. Create a ConnectionFactory and configure it
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        factory.setVirtualHost("/");
        factory.setUsername("guest");
        factory.setPassword("guest");
        factory.setAutomaticRecoveryEnabled(true);
        factory.setNetworkRecoveryInterval(3000);
        //2. Create a connection through the connection factory
        Connection connection = factory.newConnection();
        //3. Create a Channel through the Connection
        final Channel channel = connection.createChannel();
        //4. Declare the exchange, queue, prefetch limit and binding
        String exchangeName = "test_qos_exchange";
        String queueName = "test_qos_queue";
        String routingKey = "item.#";
        channel.exchangeDeclare(exchangeName, "topic", true, false, null);
        channel.queueDeclare(queueName, true, false, false, null);
        // At most 3 unacknowledged messages per consumer (global = false)
        channel.basicQos(0, 3, false);
        // Bind in code here; the binding can also be created in the management UI
        channel.queueBind(queueName, exchangeName, routingKey);
        //5. Create a consumer that processes slowly and acks manually
        Consumer consumer = new DefaultConsumer(channel) {
            @Override
            public void handleDelivery(String consumerTag, Envelope envelope,
                                       AMQP.BasicProperties properties, byte[] body) throws IOException {
                try {
                    // Simulate 5 seconds of work so the limit is visible in the UI
                    Thread.sleep(5000);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                String message = new String(body, "UTF-8");
                System.out.println("[x] Received '" + message + "'");
                // Manual ack; multiple = true also acks any earlier unacked deliveries
                channel.basicAck(envelope.getDeliveryTag(), true);
            }
        };
        //6. Start consuming with autoAck = false so the prefetch limit applies
        channel.basicConsume(queueName, false, consumer);
    }
}
```

As the figure below shows, the Unacked value stays at 3 while one message is consumed every 5 seconds. Ready and Total both fall as messages are consumed. Unacked here is the number of messages the consumer is currently processing. The experiment shows that the consumer handles at most 3 messages at a time, which is exactly the consumer-side rate limiting we wanted.
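The numbers in this experiment can be checked with a little arithmetic. The sketch below assumes one consumer that processes messages one at a time, 5 seconds each. It also assumes the broker refills the prefetch window immediately after every ack. QosTimeline is a hypothetical name. Take the 10 messages sent by QosProducer with a prefetch of 3. Under these assumptions, Unacked stays at 3 until fewer than 3 messages remain, Ready drops by one every 5 seconds, and the queue is empty after about 50 seconds.

```java
// Worked arithmetic for the QoS experiment under stated assumptions:
// one consumer, serial processing, fixed time per message, and immediate
// refill of the prefetch window after each ack. Names are hypothetical.
final class QosTimeline {
    // Returns one row per ack: {secondsElapsed, ready, unacked, total}.
    static int[][] run(int messages, int prefetchCount, int secondsPerMessage) {
        if (prefetchCount <= 0) {
            throw new IllegalArgumentException("this sketch needs a positive prefetchCount");
        }
        int[][] rows = new int[messages + 1][];
        for (int acked = 0; acked <= messages; acked++) {
            int total = messages - acked;                 // still in the queue
            int unacked = Math.min(prefetchCount, total); // delivered, not yet acked
            int ready = total - unacked;                  // waiting for a free slot
            rows[acked] = new int[] {acked * secondsPerMessage, ready, unacked, total};
        }
        return rows;
    }

    public static void main(String[] args) {
        for (int[] r : run(10, 3, 5)) {
            System.out.printf("t=%2ds ready=%d unacked=%d total=%d%n", r[0], r[1], r[2], r[3]);
        }
    }
}
```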

When we set global in void basicQos(int prefetchSize, int prefetchCount, boolean global) to true, we observed no limiting effect in this test.

TTL

TTL stands for Time To Live. RabbitMQ supports a per-message expiration time, which can be specified when a message is sent. RabbitMQ also supports a queue-level TTL, counted from the moment a message enters the queue. Once a message has been in the queue longer than the configured timeout, it is removed automatically.

This is similar to key expiration in Redis. Used sensibly, TTL cleans up expired, stale messages effectively, which reduces server load and keeps the server performing well.
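When a queue TTL and a per-message expiration are both present, RabbitMQ's documentation says the lower of the two applies. The sketch below encodes that rule; EffectiveTtl is a hypothetical helper, not a RabbitMQ API. It ignores when the broker actually removes an expired message. Per the same documentation, with per-message TTL this typically happens only once the message reaches the head of the queue.

```java
import java.util.OptionalLong;

// Hypothetical helper: effective TTL when both a queue TTL (x-message-ttl)
// and a per-message expiration may be set. The lower value wins.
final class EffectiveTtl {
    // queueTtlMs: empty if the queue has no x-message-ttl.
    // expiration: the message's expiration property (milliseconds as a string), or null.
    static OptionalLong effectiveTtlMs(OptionalLong queueTtlMs, String expiration) {
        OptionalLong messageTtl = (expiration == null)
                ? OptionalLong.empty()
                : OptionalLong.of(Long.parseLong(expiration));
        if (queueTtlMs.isPresent() && messageTtl.isPresent()) {
            return OptionalLong.of(Math.min(queueTtlMs.getAsLong(), messageTtl.getAsLong()));
        }
        return queueTtlMs.isPresent() ? queueTtlMs : messageTtl;
    }

    // Modeling choice: a message counts as expired once it has waited
    // at least its TTL since entering the queue.
    static boolean isExpired(long enqueuedAtMs, long nowMs, OptionalLong ttlMs) {
        return ttlMs.isPresent() && nowMs - enqueuedAtMs >= ttlMs.getAsLong();
    }
}
```

For example, a queue with x-message-ttl 10000 and a message with expiration "100000" give an effective TTL of 10000 ms.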

RabbitMQ allows you to set TTL (time to live) for both messages and queues. This can be done using optional queue arguments or policies (the latter option is recommended). Message TTL can be enforced for a single queue, a group of queues or applied for individual messages.


(Excerpt from the official RabbitMQ documentation)

1. Message TTL

When publishing on the producer side, we can set the expiration property in the message properties. It specifies the message's expiration time in milliseconds (ms).

```java
/**
 * deliveryMode 2 makes the message persistent
 * expiration sets the message's time to live in milliseconds, as a string;
 * "100000" means the message is deleted if no consumer receives it within 100 seconds
 * headers carries custom properties
 */
//5. Send (channel and queueName are assumed to be already set up)
Map<String, Object> headers = new HashMap<String, Object>();
headers.put("myhead1", "111");
headers.put("myhead2", "222");
AMQP.BasicProperties properties = new AMQP.BasicProperties.Builder()
        .deliveryMode(2)
        .contentEncoding("UTF-8")
        .expiration("100000")
        .headers(headers)
        .build();
String msg = "test message";
channel.basicPublish("", queueName, properties, msg.getBytes("UTF-8"));
```

We can also set expiration when publishing a message from an exchange's page in the management UI.

2. Queue TTL

We can also create a queue in the management UI and set its TTL at creation time. Messages that stay in the queue longer than this time are removed.
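To declare the queue TTL in code instead of in the management UI, use the queue argument x-message-ttl. Its value is an integer number of milliseconds, unlike the per-message expiration, which is a string. The helper below is hypothetical. It only builds the arguments map that you would pass as the last parameter of channel.queueDeclare.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper: builds queue arguments so that messages expire after ttlMs.
// Pass the result as the last parameter of
// Channel.queueDeclare(queue, durable, exclusive, autoDelete, arguments).
final class QueueTtlArgs {
    static Map<String, Object> withMessageTtl(int ttlMs) {
        if (ttlMs < 0) {
            throw new IllegalArgumentException("x-message-ttl must be a non-negative integer");
        }
        Map<String, Object> arguments = new HashMap<>();
        // Store a number, not a string: x-message-ttl is an integer argument in ms.
        arguments.put("x-message-ttl", ttlMs);
        return arguments;
    }
}
```

Usage would look like channel.queueDeclare("test_ttl_queue", true, false, false, QueueTtlArgs.withMessageTtl(10000)); where test_ttl_queue is a placeholder queue name.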

Dead letter queue

Dead letter queue: a queue for messages that could not be consumed in time.

A message becomes a dead letter when:

- a. It is rejected (basic.reject / basic.nack) with requeue = false, so it is not put back in the queue

- b. Its TTL (time to live) expires before it is consumed

- c. The queue has reached its maximum length
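The three cases above reduce to a small decision rule, sketched here in plain Java. DeadLetterRules and its Outcome enum are hypothetical names. The sketch assumes the queue has a dead letter exchange configured; otherwise such messages are simply dropped. It also assumes the default overflow behaviour, in which messages pushed out of a full queue are dropped from the head and dead-lettered.

```java
// Toy decision rule for the dead-letter causes listed above (hypothetical names).
// Answers only "is this message dead-lettered?"; routing is a separate step.
final class DeadLetterRules {
    enum Outcome { ACK, REJECT, NACK, EXPIRED, DROPPED_BY_MAX_LENGTH }

    static boolean isDeadLettered(Outcome outcome, boolean requeue) {
        switch (outcome) {
            case REJECT:
            case NACK:
                // a. reject/nack dead-letters only with requeue = false;
                //    with requeue = true the message goes back to the queue.
                return !requeue;
            case EXPIRED:               // b. TTL elapsed before consumption
            case DROPPED_BY_MAX_LENGTH: // c. pushed out of a full queue (default overflow)
                return true;
            case ACK:
            default:
                return false;           // acknowledged messages are simply removed
        }
    }
}
```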

Steps to implement a dead letter queue

- First, declare the exchange and queue for dead letters, then bind them: Exchange: dlx.exchange, Queue: dlx.queue, RoutingKey: # (matches all routing keys)

- Then declare the normal exchange, queue and binding as usual, but add an argument to the normal queue: arguments.put("x-dead-letter-exchange", "dlx.exchange")

- Now, when a message expires, is rejected without requeueing, or the queue reaches its maximum length, the message is republished to dlx.exchange and routed to the dead letter queue.

```java
import com.rabbitmq.client.AMQP;
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

public class DlxProducer {
    public static void main(String[] args) throws Exception {
        // Set up the connection and channel (same settings as the QoS examples)
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        factory.setVirtualHost("/");
        factory.setUsername("guest");
        factory.setPassword("guest");
        Connection connection = factory.newConnection();
        Channel channel = connection.createChannel();

        String exchangeName = "test_dlx_exchange";
        String routingKey = "item.update";
        String msg = "this is dlx msg";

        // Give the message a 10-second expiration: if it is not consumed within
        // 10 seconds, it is dead-lettered to the queue's dead letter exchange
        AMQP.BasicProperties properties = new AMQP.BasicProperties.Builder()
                .deliveryMode(2)
                .expiration("10000")
                .build();
        channel.basicPublish(exchangeName, routingKey, true, properties, msg.getBytes("UTF-8"));
        System.out.println("Send message : " + msg);

        channel.close();
        connection.close();
    }
}
```

```java
import com.rabbitmq.client.*;
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

public class DlxConsumer {
    public static void main(String[] args) throws Exception {
        // Create the connection and channel (same settings as the producer)
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        factory.setVirtualHost("/");
        factory.setUsername("guest");
        factory.setPassword("guest");
        Connection connection = factory.newConnection();
        final Channel channel = connection.createChannel();

        String exchangeName = "test_dlx_exchange";
        String queueName = "test_dlx_queue";
        String routingKey = "item.#";

        // The dead letter exchange is set through the queue arguments
        Map<String, Object> arguments = new HashMap<String, Object>();
        arguments.put("x-dead-letter-exchange", "dlx.exchange");

        channel.exchangeDeclare(exchangeName, "topic", true, false, null);
        // Pass the arguments when declaring the normal queue
        channel.queueDeclare(queueName, true, false, false, arguments);
        // Bind in code here; the binding can also be created manually in the management UI
        channel.queueBind(queueName, exchangeName, routingKey);

        // Declare the dead letter exchange and queue
        channel.exchangeDeclare("dlx.exchange", "topic", true, false, null);
        channel.queueDeclare("dlx.queue", true, false, false, null);
        // The routing key "#" matches every message
        channel.queueBind("dlx.queue", "dlx.exchange", "#");

        Consumer consumer = new DefaultConsumer(channel) {
            @Override
            public void handleDelivery(String consumerTag, Envelope envelope,
                                       AMQP.BasicProperties properties, byte[] body) throws IOException {
                String message = new String(body, "UTF-8");
                System.out.println(" [x] Received '" + message + "'");
            }
        };
        // Consume the normal queue with auto-ack. A message delivered here is removed
        // before its TTL elapses, so stop this consumer before publishing if you want
        // to see the message dead-lettered into dlx.queue after 10 seconds.
        channel.basicConsume(queueName, true, consumer);
    }
}
```
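Both bindings in this example rely on topic-exchange patterns: item.# on the normal queue and # on dlx.queue. The following matcher is a plain-Java sketch of the standard topic rules. Words are separated by '.', * matches exactly one word, and # matches zero or more words. TopicMatcher is a hypothetical class, not RabbitMQ's own implementation.

```java
// Sketch of AMQP topic-pattern matching (hypothetical class, not RabbitMQ's code).
// '.' separates words, '*' matches exactly one word, '#' matches zero or more words.
final class TopicMatcher {
    static boolean matches(String pattern, String routingKey) {
        String[] p = pattern.split("\\.", -1);
        String[] k = routingKey.isEmpty() ? new String[0] : routingKey.split("\\.", -1);
        return match(p, 0, k, 0);
    }

    private static boolean match(String[] p, int i, String[] k, int j) {
        if (i == p.length) {
            return j == k.length;              // match only if both are used up
        }
        if (p[i].equals("#")) {
            // '#' either matches zero words (move past it) or consumes one more word.
            return match(p, i + 1, k, j) || (j < k.length && match(p, i, k, j + 1));
        }
        if (j == k.length) {
            return false;                      // pattern words left but key exhausted
        }
        if (p[i].equals("*") || p[i].equals(k[j])) {
            return match(p, i + 1, k, j + 1);
        }
        return false;
    }
}
```

Both matches("item.#", "item.update") and matches("#", "item.update") return true. That is why the message published by DlxProducer reaches test_dlx_queue and, once dead-lettered with its original key, dlx.queue.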

Summary