Opening the camera and detecting faces with OpenCV

Development environment: Qt 5.12.4, MSVC 2017, Windows 10

First, an outline of the process:

- Configure the OpenCV library in Qt

- Start a worker thread that continuously grabs image frames from the camera

- Pass each frame to the OpenCV face detection algorithm and draw a rectangle around every face found

- Send the frame with the face rectangles to the main interface for display
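
The last three steps above can be sketched without Qt or OpenCV. The following is a minimal sketch that uses only the C++ standard library. `Frame`, `CaptureWorker` and the dummy `detect()` are hypothetical stand-ins (the article itself uses cv::Mat, QThread and CascadeClassifier), so it only illustrates the thread structure and is not the code used later.

```cpp
#include <atomic>
#include <chrono>
#include <functional>
#include <thread>
#include <utility>

// Hypothetical stand-in for a camera frame (the article uses cv::Mat).
struct Frame {
    int id = 0;     // sequence number of the frame
    int faces = 0;  // number of faces "detected" in it
};

// Runs capture -> detect -> deliver on a worker thread until stop() is called.
class CaptureWorker {
public:
    using Callback = std::function<void(const Frame&)>;

    explicit CaptureWorker(Callback onFrame) : m_onFrame(std::move(onFrame)) {}
    ~CaptureWorker() { stop(); }

    void start() {
        if (m_running.exchange(true)) return;  // already running
        m_thread = std::thread([this] { loop(); });
    }

    void stop() {
        m_running = false;  // the loop sees this on its next iteration
        if (m_thread.joinable()) m_thread.join();
    }

private:
    // Dummy detector (assumption): pretends every second frame contains one face.
    static int detect(const Frame& frame) { return frame.id % 2; }

    void loop() {
        int nextId = 0;
        while (m_running) {
            Frame frame;
            frame.id = nextId++;          // grab a frame
            frame.faces = detect(frame);  // run detection on it
            m_onFrame(frame);             // hand it over; runs on the worker thread
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        }
    }

    Callback m_onFrame;
    std::atomic<bool> m_running{false};
    std::thread m_thread;
};
```

The callback runs on the worker thread, so in a GUI program it should not touch widgets directly. The Qt code later in this article emits a signal from the thread instead.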

Create a Widgets project. (A Qt Quick project would be preferable, but this one only needs to display the face detection result, so a Widgets project is enough.)

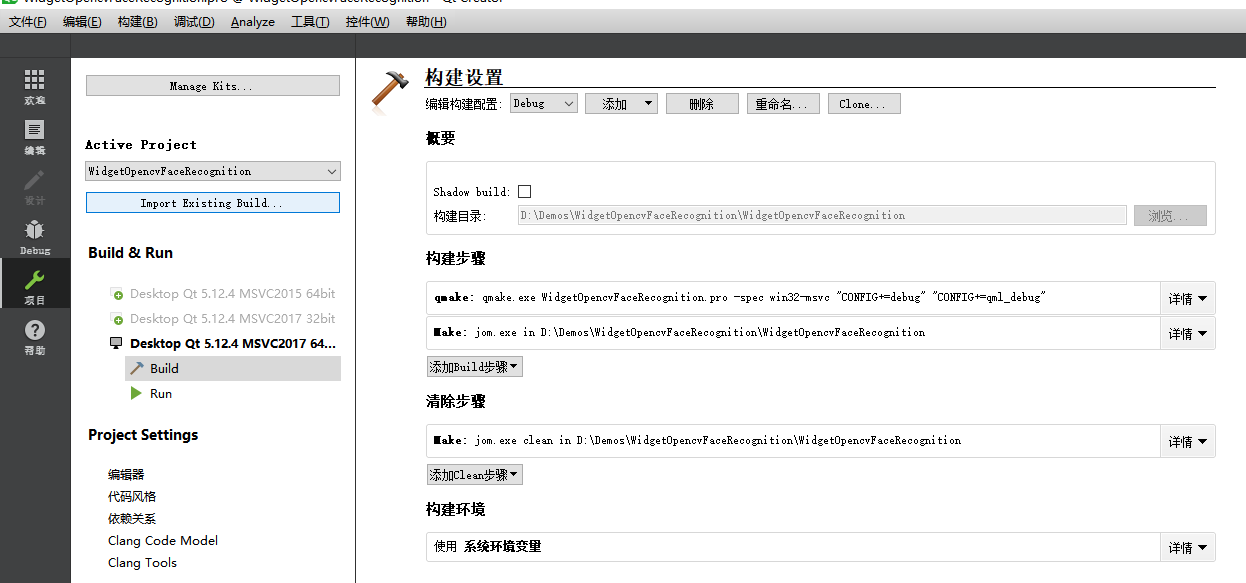

After creating the project, uncheck "Shadow build".

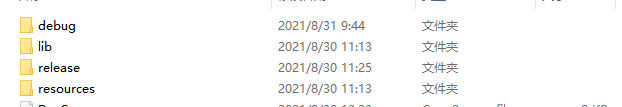

Then create four folders, debug, release, lib and resources, in the same directory as the project's .pro file.

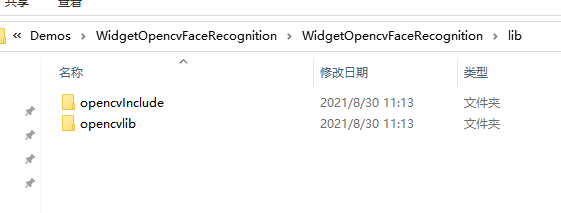

Then create two folders inside the lib folder: opencvInclude and opencvlib.

Configuring the OpenCV library in Qt

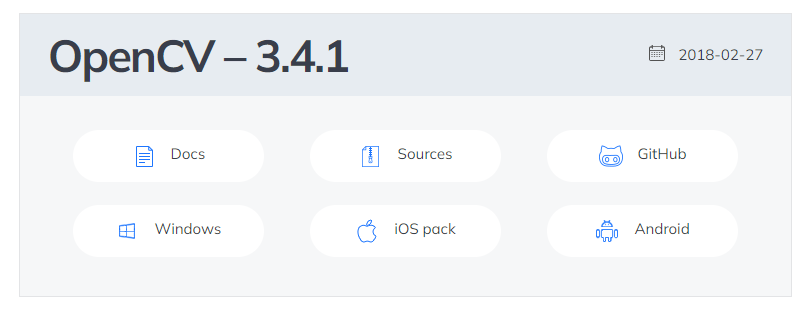

The OpenCV version I use is 3.4.1, 64-bit. It can be found on the OpenCV download page.

After downloading, install:

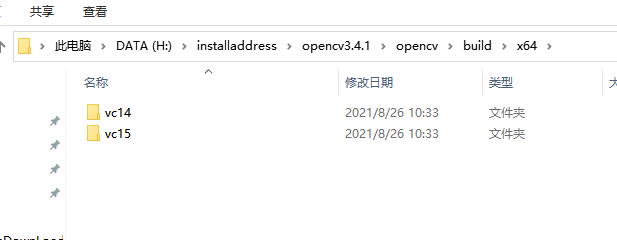

After installation, find the build folder in the installation path. It contains an x64 folder; open it and select the folder that matches your compiler version.

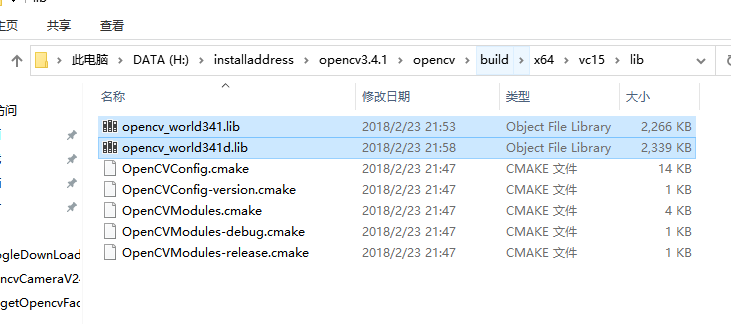

Here I use MSVC 2017, that is, vc15. In its lib subfolder there are two libraries: opencv_world341.lib (used by release builds) and opencv_world341d.lib (used by debug builds).

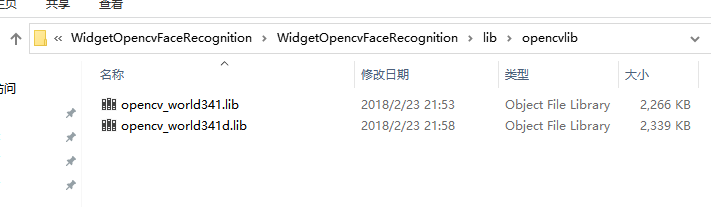

Copy the two libraries into lib/opencvlib in the project directory.

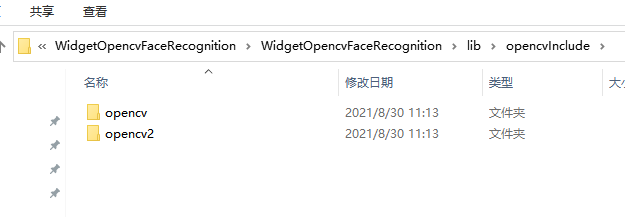

Then copy the two folders under build/include in the OpenCV 3.4.1 installation path, opencv and opencv2, into lib/opencvInclude in the project directory.

Then copy the two dynamic libraries from build/x64/vc15/bin in the OpenCV 3.4.1 installation path: opencv_world341d.dll goes into the debug folder in the project directory, and opencv_world341.dll goes into the release folder.

Add the face model XML file

Find haarcascade_frontalface_alt2.xml under build/etc/haarcascades/ in the OpenCV 3.4.1 installation path and copy it into the resources folder created earlier in the project directory.

### At this point, all required files have been added!

Configure the .pro file

Add the following configuration to the project's .pro file:

INCLUDEPATH += $$PWD/lib/opencvInclude

CONFIG( debug, debug | release ){

DESTDIR = $$PWD/debug

LIBS += -L$$PWD/lib/opencvlib -lopencv_world341d

}

CONFIG( release , debug | release ){

DESTDIR = $$PWD/release

LIBS += -L$$PWD/lib/opencvlib -lopencv_world341

}

##### So far, the OpenCV library has been configured

Next, start using opencv

Step 1: turn on the camera and get the image

Before writing this post, I first used the Camera class of Qt Quick to open the camera and get images (following an article that targets Android, where development is almost the same as on Windows).

Later, because the computer purchased by the company was too slow, the camera preview provided by Qt Quick stuttered, so I switched to OpenCV to open the camera.

First, create a new class for face detection.

FaceRecognition.h

#ifndef FACERECOGNITION_H

#define FACERECOGNITION_H

#include <QObject>

#include <QDebug>

#include <QFile>

#include <QFileInfo>

#include <vector>

#include <QDateTime>

#include <iostream>

#include <QTimer>

#include "opencv2/opencv.hpp"

#include "opencv2/core.hpp"

#include "opencv2/objdetect/objdetect.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/imgproc/imgproc.hpp"

using namespace cv;

using namespace std;

class FaceRecognition : public QObject

{

Q_OBJECT

public:

explicit FaceRecognition(QObject *parent = nullptr);

//Initialize face recognition

void initFaceRecog();

//Initialization timer

void initTimer();

//Set the xml file of opencv face recognition library

bool setXmlFilePath( QString path );

//Get the path of opencv face recognition library file

QString getXmlFilePath();

//Set the value of the member variable m_objFrame

void setFrame( Mat frame );

// Use a rectangle to frame the face in the image

Mat paintRect( Mat frame );

//Get the number of faces

int getFaces();

//http flag bit, used to wait for the returned result

void setHttpAvalable( bool state );

//Get the flag bit result of http

bool getHttpAvailable();

signals:

public slots:

void onTimerTimeout();

private:

//Face classifier

CascadeClassifier m_objFace_cascade;

//Mat of opencv

Mat m_objFrame;

//xml file path of face recognition database

QString m_qsXmlFilePath;

//Store face frame

vector< Rect > m_veFaces;

//Flag: whether face detection is currently enabled

bool m_bRecognition;

//Number of faces

int m_iFaces;

//timer

QTimer m_timer;

// Flag bit waiting for http to return results

bool m_bHttpAvalable;

};

#endif // FACERECOGNITION_H

FaceRecognition.cpp

#include "FaceRecognition.h"

FaceRecognition::FaceRecognition(QObject *parent) : QObject(parent)

{

initFaceRecog();

initTimer();

}

void FaceRecognition::initFaceRecog()

{

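m_bRecognition = true;

//Give the remaining members defined values before they are read

m_iFaces = 0;

m_bHttpAvalable = false;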

bool ok = setXmlFilePath( "../../resources/haarcascade_frontalface_alt2.xml" );

if( !ok )

{

qDebug() << " xml file not existed";

return;

}

//Load the trained face model

if( !m_objFace_cascade.load( m_qsXmlFilePath.toLocal8Bit().toStdString() ) )

{

qDebug() << " load xml file failed ";

return;

}

qDebug() << " load xml file success ";

}

void FaceRecognition::initTimer()

{

m_timer.setInterval( 300 );

connect( &m_timer, &QTimer::timeout, this, &FaceRecognition::onTimerTimeout );

m_timer.start();

}

bool FaceRecognition::setXmlFilePath( QString path )

{

QFile file( path );

QFileInfo fileInfo( file );

if( !fileInfo.exists() )

{

return false;

}

m_qsXmlFilePath = fileInfo.absoluteFilePath();

qDebug() << "xml path = " << m_qsXmlFilePath;

return true;

}

QString FaceRecognition::getXmlFilePath()

{

return m_qsXmlFilePath;

}

void FaceRecognition::setFrame( Mat frame )

{

if( frame.empty() )

{

qDebug() << " empty frame ";

return;

}

m_objFrame = frame;

}

Mat FaceRecognition::paintRect( Mat frame )

{

if( !m_bRecognition )

{

return frame;

}

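//Skip detection when the camera delivered no image; cvtColor() fails on an empty Mat

if( frame.empty() )

{

return frame;

}

setFrame( frame );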

m_veFaces.clear();

Mat grayImg;

Mat outPutGrayImg;

Mat smallImg;

//Convert to grayscale

cvtColor( m_objFrame, grayImg, cv::COLOR_BGR2GRAY );

// resize( grayImg, smallImg, Size(), 0.9, 0.9, INTER_LINEAR );

//Histogram equalization to improve image quality

equalizeHist( grayImg, outPutGrayImg );

// cout<< " size = " <<outPutGrayImg.size() << endl;

// qint64 time1 = QDateTime::currentMSecsSinceEpoch();

//Start face detection. The Size will affect the performance of face detection. It is recommended to set the minimum Size slightly larger

m_objFace_cascade.detectMultiScale( outPutGrayImg, m_veFaces, 1.1, 3, 0, Size( 100, 100 ), Size( 300, 300 ) );

//Store the number of faces in the image to determine whether to send the image to the server through http in the run() function of DevCamera.cpp

m_iFaces = m_veFaces.size();

// qDebug() << " there are " << m_veFaces.size() << " faces ";

// qDebug() << QString( " coast %1 " ).arg( QDateTime::currentMSecsSinceEpoch() - time1 );

for( size_t i = 0; i < m_veFaces.size(); i++ )

{

Rect tmpFace = m_veFaces[ i ];

Point point1( tmpFace.x, tmpFace.y );

Point point2( tmpFace.x + tmpFace.width, tmpFace.y + tmpFace.height );

rectangle( m_objFrame, point1, point2, Scalar( 255, 0, 0 ) );

}

return m_objFrame;

}

int FaceRecognition::getFaces()

{

return m_iFaces;

}

void FaceRecognition::setHttpAvalable( bool state )

{

m_bHttpAvalable = state;

}

bool FaceRecognition::getHttpAvailable()

{

return m_bHttpAvalable;

}

void FaceRecognition::onTimerTimeout()

{

m_bRecognition = !m_bRecognition;

}
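
The timer above flips m_bRecognition every 300 ms from the thread that owns the FaceRecognition object, while paintRect() reads the flag from the capture thread. One possible alternative, shown here only as a sketch, is to keep the decision inside the capture loop with a small throttle object. `DetectionThrottle` and `shouldRun()` are hypothetical names that are not part of the article's code, and the sketch uses only the C++ standard library.

```cpp
#include <chrono>

// Allows an expensive operation to run at most once per interval.
// The caller passes in the current time, which keeps the class easy to test.
class DetectionThrottle {
public:
    using Clock = std::chrono::steady_clock;

    explicit DetectionThrottle(std::chrono::milliseconds interval)
        : m_interval(interval) {}

    // Returns true if detection should run now, and records the time if so.
    bool shouldRun(Clock::time_point now) {
        if (m_hasRun && now - m_last < m_interval) {
            return false;  // too soon since the last run
        }
        m_last = now;
        m_hasRun = true;
        return true;
    }

private:
    std::chrono::milliseconds m_interval;
    Clock::time_point m_last{};
    bool m_hasRun = false;  // the first call always runs
};
```

Inside a capture loop this would be called once per frame, for example `if (throttle.shouldRun(DetectionThrottle::Clock::now())) { /* detect */ }`, so no flag has to be shared between threads.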

Then create a camera device class to obtain the camera image. It starts a new thread that continuously grabs frames from the camera, passes each frame to the face recognition class for detection, and outputs the image with the face rectangles drawn.

DevCamera.h

#ifndef DEVCAMERA_H

#define DEVCAMERA_H

#include <QObject>

#include <iostream>

#include <QThread>

#include <QDebug>

#include <QMutex>

#include <QImage>

#include "opencv2/core/core.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "FaceRecognition.h"

class DevCamera : public QThread

{

Q_OBJECT

public:

DevCamera();

//Convert the Mat type of opencv to QImage

QImage matToQimage( Mat frame );

//Thread entry point: the body of run() executes in the new thread

void run();

//Turn off the camera

void closeCamera();

signals:

void signalNewQimageAvailable( QImage image );

void signalQimageToServer( QImage image );

private:

//Camera object

VideoCapture *m_pCamera;

//Camera operation flag bit

bool m_bCameraRunning;

//Mutex protecting m_bCameraRunning

QMutex m_objMutex;

//Path of opencv face recognition library file

QString m_objXmlPath;

//Frame converted to QImage

QImage m_objQimg;

// Mat type of opencv

Mat m_objFrame;

//Face recognition class

FaceRecognition m_objFaceRecog;

};

#endif // DEVCAMERA_H

DevCamera.cpp

#include "DevCamera.h"

DevCamera::DevCamera()

{

m_pCamera = new VideoCapture();

}

void DevCamera::run()

{

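//Open the default camera and stop the thread if that fails

if( !m_pCamera->isOpened() && !m_pCamera->open( 0 ) )

{

qDebug() << " open camera failed ";

return;

}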

m_bCameraRunning = true;

while( true )

{

m_objMutex.lock();

if( !m_bCameraRunning )

{

m_objMutex.unlock();

break;

}

m_objMutex.unlock();

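*m_pCamera >> m_objFrame;

//Skip this iteration if the camera delivered no frame

if( m_objFrame.empty() )

{

QThread::msleep( 20 );

continue;

}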

//Face detection

m_objFrame = m_objFaceRecog.paintRect( m_objFrame );

QImage img = matToQimage( m_objFrame );

if( m_objFaceRecog.getFaces() > 0 )

{

emit signalQimageToServer( img );

}

emit signalNewQimageAvailable( img );

QThread::msleep( 20 );

}

m_pCamera->release();

m_bCameraRunning = false;

qDebug() << " break while ";

}

void DevCamera::closeCamera()

{

m_objMutex.lock();

m_bCameraRunning = false;

m_objMutex.unlock();

}

QImage DevCamera::matToQimage( Mat frame )

{

QImage img;

if (frame.channels()==3)

{

cvtColor(frame, frame, cv::COLOR_BGR2RGB);

img = QImage((const unsigned char *)(frame.data), frame.cols, frame.rows,

frame.cols*frame.channels(), QImage::Format_RGB888);

}

else if (frame.channels()==1)

{

img = QImage((const unsigned char *)(frame.data), frame.cols, frame.rows,

frame.cols*frame.channels(), QImage::Format_Grayscale8);

}

else

{

img = QImage((const unsigned char *)(frame.data), frame.cols, frame.rows,

frame.cols*frame.channels(), QImage::Format_RGB888);

}

//Return a deep copy: the QImage above only wraps the Mat's buffer and does not own it

return img.copy();

}
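
The 3-channel branch of matToQimage() relies on two details: the BGR to RGB conversion swaps the first and third byte of every pixel, and the QImage constructor needs the real number of bytes per row. The sketch below shows both on a raw buffer, using only the C++ standard library. `bgrToPackedRgb` and its parameters are hypothetical names. In OpenCV terms, `srcStep` corresponds to the Mat's `step`, which can be larger than cols * channels, for example when the Mat is a region of interest inside a larger image.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts a BGR image with srcStep bytes per row into a tightly packed
// RGB buffer (width * 3 bytes per row, no padding).
std::vector<std::uint8_t> bgrToPackedRgb(const std::uint8_t* src,
                                         int width, int height,
                                         std::size_t srcStep) {
    const std::size_t dstStep = static_cast<std::size_t>(width) * 3;
    std::vector<std::uint8_t> dst(dstStep * static_cast<std::size_t>(height));

    for (int y = 0; y < height; ++y) {
        const std::uint8_t* in = src + static_cast<std::size_t>(y) * srcStep;
        std::uint8_t* out = dst.data() + static_cast<std::size_t>(y) * dstStep;
        for (int x = 0; x < width; ++x) {
            out[3 * x + 0] = in[3 * x + 2];  // R comes from the third byte
            out[3 * x + 1] = in[3 * x + 1];  // G stays in the middle
            out[3 * x + 2] = in[3 * x + 0];  // B comes from the first byte
        }
    }
    return dst;
}
```

Because the result is an independent copy, it stays valid after the source frame is overwritten by the next capture.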

Note: to display an image, you only need to connect to the signalNewQimageAvailable( QImage ) signal of the DevCamera object. In addition, OpenCV's cascade face detection is relatively slow, so it is best to limit the detection size range: m_objFace_cascade.detectMultiScale( outPutGrayImg, m_veFaces, 1.1, 3, 0, Size( 100, 100 ), Size( 300, 300 ) ). The minimum size should be no less than 100 and the maximum no more than 400, otherwise performance drops sharply. I also added a timer that toggles detection on and off every 300 ms, so detection runs only about half of the time, which reduces the load further.
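
To get a feel for why the size range matters, the sketch below counts how many search-window sizes fall inside a given range. It is a simplified model, not OpenCV's implementation: it assumes the window starts at a base size (20 pixels here, taken as an assumption about the cascade's trained window) and grows by the scale factor at every step. `countScales` is a hypothetical helper, and the real detector is also bounded by the image size.

```cpp
// Counts the search-window sizes baseWindow * scaleFactor^k that lie in
// [minSize, maxSize]. Simplified model of a multi-scale search.
int countScales(double baseWindow, double scaleFactor,
                double minSize, double maxSize) {
    if (baseWindow <= 0.0 || scaleFactor <= 1.0) {
        return 0;  // the window would never grow; nothing sensible to count
    }
    int count = 0;
    for (double window = baseWindow; window <= maxSize; window *= scaleFactor) {
        if (window >= minSize) {
            ++count;  // this window size would be evaluated
        }
    }
    return count;
}
```

Under this model, `countScales(20, 1.1, 100, 300)` covers far fewer sizes than `countScales(20, 1.1, 20, 1000)`. The small sizes that a larger minimum skips are also the most expensive ones, since a small window has many more positions to test in the same image.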

The display effect is shown in the following figure:

The key code ends here. I use the QML Image control to display images, but my project is a QWidget project, so the rest of the code only embeds QML into the QWidget and has no effect on the detection part.