Tensor and Autograd: Tensor

Tensor

Creating tensors:

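- Tensor(*sizes): creates a tensor of the given shape without initializing its contents (the values are whatever was already in memory). The functions below also fill the tensor with specific values.

- ones(*sizes): all elements are 1

- zeros(*sizes): all elements are 0

- eye(n, m): 1 on the diagonal, 0 elsewhere

- arange(s, e, step): values from s (inclusive) to e (exclusive), spaced by step

- linspace(s, e, steps): steps evenly spaced values from s to e, both ends included

- rand/randn(*sizes): samples from the uniform distribution on [0, 1) / the standard normal distribution

- normal(mean, std)/uniform(from, to): samples from a normal distribution with the given mean and standard deviation / a uniform distribution on [from, to)

- randperm(m): a random permutation of the integers 0 to m-1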
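A minimal sketch calling each creation function listed above, assuming a recent PyTorch release. Two details are assumptions about the current API rather than statements from the notes: normal is called with an explicit size argument, and uniform(from, to) is written as the in-place method Tensor.uniform_ applied to an empty tensor. The variable names are illustrative.

```python
import torch

ones = torch.ones(2, 3)            # 2x3 tensor of 1s
zeros = torch.zeros(2, 3)          # 2x3 tensor of 0s
eye = torch.eye(3)                 # 3x3 identity: 1 on the diagonal, 0 elsewhere
ar = torch.arange(0, 10, 2)        # 0, 2, 4, 6, 8 (end is exclusive)
lin = torch.linspace(0, 1, 5)      # 5 evenly spaced points, end inclusive
u = torch.rand(2, 3)               # uniform on [0, 1)
g = torch.randn(2, 3)              # standard normal N(0, 1)
n = torch.normal(mean=0.0, std=1.0, size=(2, 3))  # normal with given mean/std
uu = torch.empty(2, 3).uniform_(-1, 1)            # uniform on [-1, 1), filled in place
perm = torch.randperm(5)           # random permutation of 0..4

print(ar, lin, perm, sep="\n")
```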

Creating a tensor from a list:

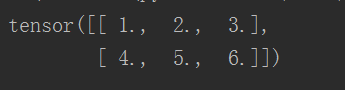

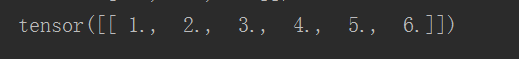

import torch
a = torch.Tensor([[1, 2, 3], [4, 5, 6]])
print(a)

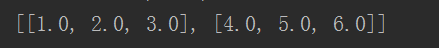

b = a.tolist()  # convert the tensor back to a nested Python list
print(b)

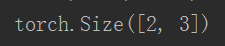

print(a.size())

print(a.numel())  # total number of elements

# Create an (uninitialized) tensor with the same size as a
c = torch.Tensor(a.size())
print(c.size())
# .shape is equivalent to .size()
print(c.shape)
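The torch.Tensor constructor interprets its arguments differently depending on their type, which is why torch.Tensor(a.size()) produces a shape rather than data. A minimal sketch of the three cases, assuming current PyTorch behavior for the legacy constructor:

```python
import torch

a = torch.Tensor(2, 3)                # integers are read as a shape: 2x3, contents uninitialized
b = torch.Tensor([2, 3])              # a list is read as data: 1-D tensor holding 2. and 3.
c = torch.Tensor(torch.Size([2, 3]))  # a torch.Size is also read as a shape

print(a.shape, b.shape, c.shape)
```

When the intent is "data", passing a list is unambiguous; passing bare integers or a torch.Size always means "shape".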

Common tensor operations:

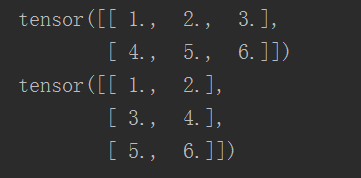

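- Tensor.view() returns a tensor with a new shape without copying or modifying the data: the original tensor and the view share the same memory, so changing one changes the other. The total number of elements must stay the same.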

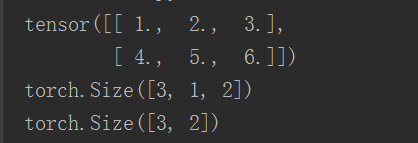

a = torch.Tensor([[1, 2, 3], [4, 5, 6]])
print(a)
b = a.view(3, 2)
print(b)

# -1 tells view() to infer the size of that dimension
c = a.view(-1, 6)
print(c)
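To make the memory-sharing claim concrete, here is a small sketch: writing through the view changes the original tensor, both report the same data pointer, and asking for a shape with a different number of elements raises an error. The flag view_failed is an illustrative name.

```python
import torch

a = torch.Tensor([[1, 2, 3], [4, 5, 6]])
b = a.view(3, 2)             # same 6 elements, new shape; no copy
b[0, 0] = 100                # write through the view...
print(a[0, 0])               # ...and the original tensor sees the change
print(a.data_ptr() == b.data_ptr())  # both start at the same memory address

try:
    a.view(4, 2)             # 8 elements requested but a has 6, so this fails
    view_failed = False
except RuntimeError as err:
    view_failed = True
    print("view failed:", err)
```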

- squeeze/unsqueeze: remove/insert dimensions of size 1

a = torch.Tensor([[1, 2, 3], [4, 5, 6]])
b = a.view(3, 2)
# Insert a size-1 dimension at position 1: (3, 2) -> (3, 1, 2)
b = b.unsqueeze(1)
print(b.size())
# Remove the second-to-last dimension (size 1): (3, 1, 2) -> (3, 2)
c = b.squeeze(-2)
print(c.size())

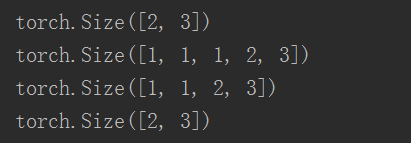

a = torch.Tensor([[1, 2, 3], [4, 5, 6]])
print(a.size())
b = a.view(1, 1, 1, 2, 3)
print(b.size())
# Remove dimension 0: (1, 1, 1, 2, 3) -> (1, 1, 2, 3)
c = b.squeeze(0)
print(c.size())
# Remove all dimensions of size 1: (1, 1, 2, 3) -> (2, 3)
c = c.squeeze()
print(c.size())
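One detail the examples above do not show: squeeze(dim) only removes the dimension if its size is 1; otherwise the tensor is returned unchanged rather than raising an error. A short sketch, with an arbitrary example shape:

```python
import torch

x = torch.zeros(2, 1, 3)
s1 = x.squeeze(1)     # dimension 1 has size 1, so it is removed: (2, 3)
s0 = x.squeeze(0)     # dimension 0 has size 2, so nothing happens: (2, 1, 3)
u = x.unsqueeze(-1)   # a new size-1 dimension appended at the end: (2, 1, 3, 1)
print(s1.shape, s0.shape, u.shape)
```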

- resize_: changes the size of a tensor in place. If the new size needs more elements than the original, new memory is allocated (the existing values are kept and the extra elements are uninitialized); if it needs fewer, the existing memory and its data are kept.

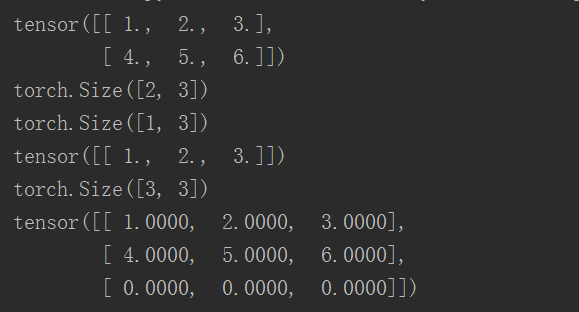

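a = torch.Tensor([[1, 2, 3], [4, 5, 6]])
print(a)
print(a.size())
b = a.resize_(1, 3)  # shrink: b (which is a itself) now shows [[1, 2, 3]]
print(b.size())
print(b)
b = a.resize_(3, 3)  # grow: the first 6 values are the original data, the last 3 are uninitialized
print(b.size())
print(b)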
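The "data is kept" behavior can be observed by shrinking and then growing back to the original size. This sketch assumes current CPU behavior of resize_, which only reallocates storage when the new size needs more memory than the tensor already owns; it is an implementation detail, not something to rely on in real code.

```python
import torch

a = torch.Tensor([[1, 2, 3], [4, 5, 6]])
a.resize_(1, 3)              # only [[1, 2, 3]] is visible now
first = a.tolist()
a.resize_(2, 3)              # storage still holds 6 elements, so 4, 5, 6 reappear
print(first, a.tolist())
```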

- Indexing:

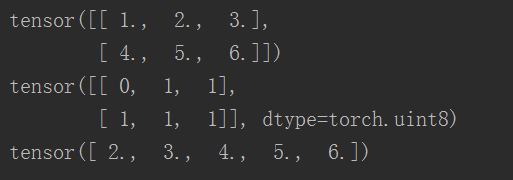

a = torch.Tensor([[1, 2, 3], [4, 5, 6]])
print(a)
# Element-wise comparison: a boolean mask, True where the element is greater than 1
print(a > 1)
# Select the elements where the mask is True (returns a 1-D tensor)
print(a[a > 1])
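Boolean-mask indexing is equivalent to the function torch.masked_select. A short sketch, assuming a PyTorch version (1.2 or later) where comparisons return torch.bool rather than the older 0/1 uint8 masks:

```python
import torch

a = torch.Tensor([[1, 2, 3], [4, 5, 6]])
mask = a > 1                          # boolean tensor with the same shape as a
picked = a[mask]                      # 1-D tensor of the selected values, in row-major order
same = torch.masked_select(a, mask)   # function form of the same selection
print(mask.dtype, picked, torch.equal(picked, same))
```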

- gather(input, dim, index): selects values from input along dimension dim at the positions given by index; the output has the same shape as index. For dim=0, out[i][j] = input[index[i][j]][j]; for dim=1, out[i][j] = input[i][index[i][j]].

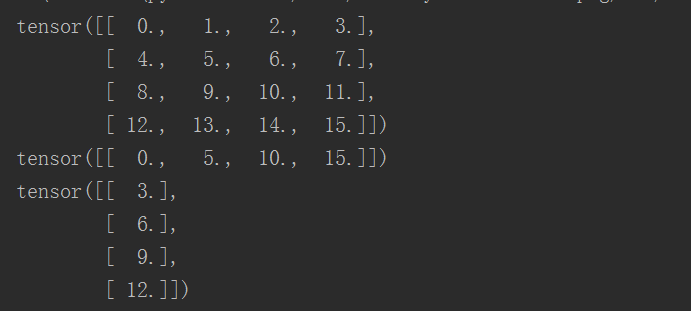

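a = torch.arange(0, 16).view(4, 4)
print(a)
# dim=0: picks a[0][0], a[1][1], a[2][2], a[3][3] (the main diagonal)
index = torch.LongTensor([[0, 1, 2, 3]])
b = a.gather(0, index)
print(b)
# dim=1: picks a[0][3], a[1][2], a[2][1], a[3][0] (the anti-diagonal)
index = torch.LongTensor([[3], [2], [1], [0]])
c = a.gather(1, index)
print(c)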
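The two gather formulas can be checked directly by writing them as loops and comparing with the built-in. gather_by_hand is a hypothetical helper for 2-D tensors only, written for clarity rather than speed:

```python
import torch

def gather_by_hand(inp, dim, index):
    # Hypothetical 2-D reference implementation; the output has the same shape as index
    out = torch.empty(index.shape, dtype=inp.dtype)
    for i in range(index.shape[0]):
        for j in range(index.shape[1]):
            k = index[i, j].item()
            if dim == 0:
                out[i, j] = inp[k, j]   # out[i][j] = input[index[i][j]][j]
            else:
                out[i, j] = inp[i, k]   # out[i][j] = input[i][index[i][j]]
    return out

a = torch.arange(0, 16).view(4, 4)
idx0 = torch.LongTensor([[0, 1, 2, 3]])
idx1 = torch.LongTensor([[3], [2], [1], [0]])
g0, g1 = a.gather(0, idx0), a.gather(1, idx1)
print(torch.equal(gather_by_hand(a, 0, idx0), g0))
print(torch.equal(gather_by_hand(a, 1, idx1), g1))
```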

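- scatter_: the inverse operation of gather. It writes the values of src into the tensor at the positions given by index; for dim=0, self[index[i][j]][j] = src[i][j], and for dim=1, self[i][index[i][j]] = src[i][j].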

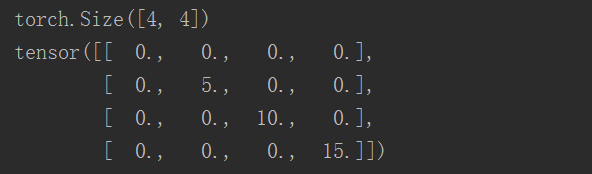

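# Put the diagonal gathered with dim=0 back into a zero matrix
index = torch.LongTensor([[0, 1, 2, 3]])
b = a.gather(0, index)
c = torch.zeros((4, 4), dtype=b.dtype)  # scatter_ requires src to have the same dtype
c.scatter_(0, index, b)
print(c.size())
print(c)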
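As with gather, the scatter_ rule can be written as a loop and compared with the built-in. scatter_by_hand is a hypothetical 2-D helper; the example scatters the main diagonal back and checks that the result is a diagonal matrix built from a's diagonal.

```python
import torch

def scatter_by_hand(out, dim, index, src):
    # Hypothetical 2-D reference implementation of out.scatter_(dim, index, src)
    for i in range(index.shape[0]):
        for j in range(index.shape[1]):
            k = index[i, j].item()
            if dim == 0:
                out[k, j] = src[i, j]   # out[index[i][j]][j] = src[i][j]
            else:
                out[i, k] = src[i, j]   # out[i][index[i][j]] = src[i][j]
    return out

a = torch.arange(0, 16).view(4, 4)
index = torch.LongTensor([[0, 1, 2, 3]])
b = a.gather(0, index)                   # the diagonal: [[0, 5, 10, 15]]
c1 = torch.zeros(4, 4, dtype=b.dtype).scatter_(0, index, b)
c2 = scatter_by_hand(torch.zeros(4, 4, dtype=b.dtype), 0, index, b)
print(torch.equal(c1, c2), torch.equal(c1, torch.diag(torch.diagonal(a))))
```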

- Advanced indexing:

x = torch.arange(0, 27).view(3, 3, 3)  # an example 3x3x3 tensor
x[[1, 2], [1, 2], [2, 0]]  # x[1,1,2], x[2,2,0]
x[[2, 1, 0], [0], [1]]     # x[2,0,1], x[1,0,1], x[0,0,1]
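The comments above can be verified by building the same results from scalar lookups. In the second case the single-element lists [0] and [1] are broadcast to the length of [2, 1, 0], the same rule NumPy uses for advanced indexing.

```python
import torch

x = torch.arange(0, 27).view(3, 3, 3)
r1 = x[[1, 2], [1, 2], [2, 0]]
r2 = x[[2, 1, 0], [0], [1]]    # [0] and [1] are broadcast to length 3
print(torch.equal(r1, torch.stack([x[1, 1, 2], x[2, 2, 0]])))
print(torch.equal(r2, torch.stack([x[2, 0, 1], x[1, 0, 1], x[0, 0, 1]])))
```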

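Linear regression

The example below fits y = w*x + b to noisy synthetic data with plain gradient descent, computing the gradients by hand.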

import torch as t
from matplotlib import pyplot as plt
from IPython import display

t.manual_seed(100)  # fix the random seed so runs are reproducible

def get_fake_data(batch_size=8):
    # Noisy samples around the line y = 2x + 3
    x = t.rand(batch_size, 1) * 20
    y = x * 2 + (1 + t.randn(batch_size, 1)) * 3
    return x, y

x, y = get_fake_data()
plt.scatter(x.squeeze().numpy(), y.squeeze().numpy())

# Initialize the parameters
w = t.rand(1, 1)
b = t.zeros(1, 1)
lr = 0.001  # learning rate

for ii in range(20000):
    x, y = get_fake_data()

    # Forward pass: prediction and squared-error loss
    y_pred = x.mul(w) + b.expand_as(y)
    loss = 0.5 * (y_pred - y) ** 2
    loss = loss.sum()

    # Backward pass: gradients computed by hand
    dloss = 1
    dy_pred = dloss * (y_pred - y)
    dw = x * dy_pred
    db = dy_pred.sum()

    # Gradient-descent update (in place)
    w.sub_((lr * dw).sum())
    b.sub_(lr * db)

    if ii % 1000 == 0:
        # Every 1000 steps, redraw the fitted line over a fresh batch of data
        display.clear_output(wait=True)
        x = t.arange(0, 20, dtype=t.float32).view(-1, 1)
        y = x.mul(w) + b.expand_as(x)
        plt.plot(x.numpy(), y.numpy())
        x2, y2 = get_fake_data(batch_size=20)
        plt.scatter(x2.numpy(), y2.numpy())
        plt.xlim(0, 20)
        plt.ylim(0, 41)
        plt.show()
        plt.pause(0.5)
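The hand-written gradients in the loop follow from L = 1/2 * sum((w*x_i + b - y_i)^2), which gives dL/dw = sum(x_i * (y_pred_i - y_i)) and dL/db = sum(y_pred_i - y_i). A sketch that checks these against autograd on one batch; the seed (0) and batch size (8) are arbitrary choices for illustration, and the data is generated the same way as in get_fake_data.

```python
import torch as t

t.manual_seed(0)
x = t.rand(8, 1) * 20
y = x * 2 + (1 + t.randn(8, 1)) * 3

w = t.rand(1, 1, requires_grad=True)
b = t.zeros(1, 1, requires_grad=True)

y_pred = x.mul(w) + b.expand_as(y)
loss = (0.5 * (y_pred - y) ** 2).sum()
loss.backward()                      # autograd fills w.grad and b.grad

# Manual gradients, the same formulas as in the training loop
with t.no_grad():
    dy_pred = y_pred - y
    dw = (x * dy_pred).sum()
    db = dy_pred.sum()

grad_ok_w = t.allclose(w.grad.squeeze(), dw, rtol=1e-4)
grad_ok_b = t.allclose(b.grad.squeeze(), db, rtol=1e-4)
print(grad_ok_w, grad_ok_b)
```

With autograd, the manual backward pass can be replaced by loss.backward() followed by an update inside t.no_grad().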