Refer to the previous article on the idea of short rent of reptile piglets https://www.cnblogs.com/aby321/p/9946831.html, and continue to be familiar with the basic reptile methods. This time, I crawled the ranking of Migu music

Migu music list home page: http://music.migu.cn/v2/music/billboard/? "From = Migu & page = 1

Note: sometimes, this program will report an error when it is running again. The reason for the error is unknown!

Different from pig short rent, the ranking information crawled is not in the detailed page of each song. It needs to be obtained in the page url (lines 19-25 of the code). Use the packing cycle and output it to the function get_info()

1 """ 2 Typical paging website - Migu Music List 3 Sometimes the operation will report an error, sometimes it is normal, and the reason is unknown 4 """ 5 import requests 6 from bs4 import BeautifulSoup as bs 7 import time 8 9 headers = { 10 'User-Agent':'User-Agent:Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36' 11 } 12 13 #Get the URL of each song,Parameter is paging url 14 def get_link(url): 15 html_data = requests.get(url, headers = headers) 16 soup = bs(html_data.text, 'lxml')#bs4 Recommended resolution Library 17 #print(soup.prettify()) #The source code in the standardized output url (may be inconsistent with that in web page viewing, and the label writing may be irregular in web page) is grabbed based on this. If the grabbing fails, use this command to view the source code 18 links = soup.select('#js_songlist > div > div.song-name > span > a')#Pay attention to the circulation point!!! 19 ranks = soup.select('#js_songlist > div > div.song-number ')#Because there is no ranking information in the song details, you need to get the details in this section 20 #print(ranks) 21 for rank, link in zip(ranks,links):#Packaging cycle, mainly for output matching rank and link 22 rank = rank.get_text() 23 link = 'http://music.migu.cn' + link.get('href')#Observe the detailed webpage of each song and find that the previous part needs to be added manually http://music.migu.cn 24 #print(rank,link) 25 get_info(rank,link) 26 27 #Get the details of each song, ranking, song name, singer and album name, parameters url Is the URL of each song 28 def get_info(rank,url): 29 html_data = requests.get(url, headers = headers) 30 soup = bs(html_data.text, 'lxml')#bs4 Recommended resolution Library 31 # print(soup.prettify()) #The source code in the standardized output url (may be inconsistent with that in web page viewing, and the label writing may be irregular in web page) is grabbed based on this. If the grabbing fails, use this command to view the source code 32 title = soup.select('div.container.pt50 > div.song-data > div.data-cont > div.song-name > span.song-name-text')[0].string.strip() 33 34 # Web page copy It's all here“ body > div.wrap.clearfix.con_bg > div.con_l > div.pho_info > h4 > em",But I can't use this to crawl out the data (I don't know why),hold body Remove or use the shortest way below (use only the most recent and unique div) 35 # title = soup.select('div.pho_info > h4 > em ') 36 # Query results title The format is a one-dimensional list. You need to continue to extract list elements (generally[0]),The list element has labels before and after it. You need to continue to extract the contents of the labels get_text()perhaps string 37 singer = soup.select('div.container.pt50 > div.song-data > div.data-cont > div.song-statistic > span > a')[0].string.strip() 38 cd = soup.select('div.container.pt50 > div.song-data > div.data-cont > div.style-like > div > span > a')[0].string.strip() # Get the property value of the label 39 40 #Organize detailed data into dictionary format 41 data = { 42 'ranking':rank, 43 'Song name':title, 44 'singer':singer, 45 'Album':cd 46 } 47 print(data) 48 49 50 #Program main entrance 51 if __name__=='__main__': 52 for number in range(1,3): 53 url = 'http://music.migu.cn/v2/music/billboard/?_from=migu&page={}'.format(number) #Structural paging url(It's not about song details url) 54 get_link(url) 55 time.sleep(1)

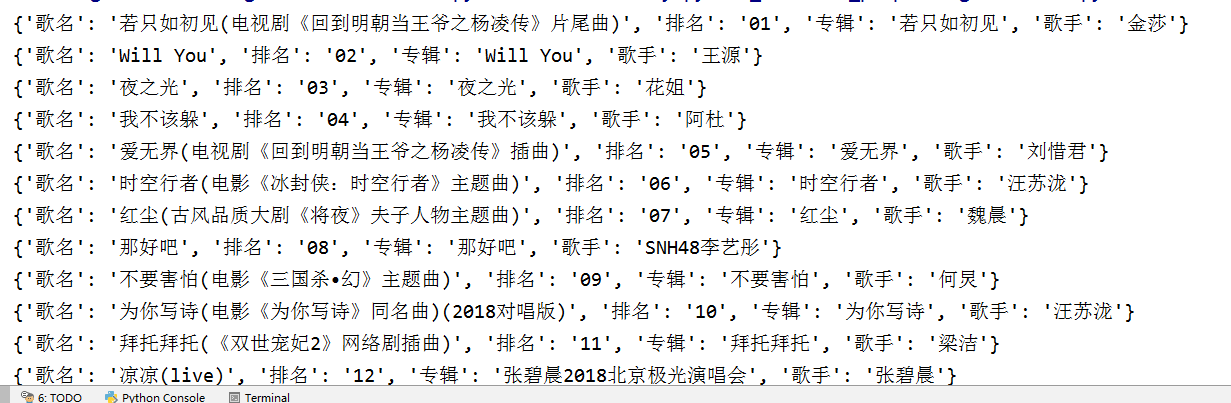

Output result: the order of each output data (Dictionary type) field is random, because the dictionary type data has no order. If you want to fix the order, please use the list

Take one example: This crawler template can be used for the same type of paged websites, such as top 100 of Douban movie, top list of timenet, etc

ps: I don't know if this list is accurate. Anyway, I haven't heard of it