I. Introduction

Beautiful Soup is a Python library that extracts data from HTML or XML files. It works with your favorite parser to provide the usual ways of navigating, searching, and modifying a document. Beautiful Soup can save you hours or even days of work.
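
To make navigation, search, and modification a little more concrete, here is a minimal sketch. The HTML snippet and the variable names are invented for illustration, and it assumes Python's built-in `html.parser` is used as the parser.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML snippet, used only for illustration.
html = '<html><body><p class="intro">Hello <a href="https://example.com/">link</a></p></body></html>'

soup = BeautifulSoup(html, "html.parser")

# Navigation: walk down the tree by tag name.
first_link = soup.body.p.a

# Search: collect every <a> tag in the document.
all_links = soup.find_all("a")

# Modification: replace the text of the link in place.
first_link.string = "updated link"

print(first_link["href"])   # value of the href attribute
print(len(all_links))       # number of <a> tags found
print(soup.p.get_text())    # paragraph text after the change
```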

Official Chinese documentation: https://beautifulsoup.readthedocs.io/zh_CN/v4.4.0/

II. Installation

Open a command line and run the following installation command (Python must be installed first). pip is Python's package management tool; it finds, downloads, installs, and uninstalls Python packages. It is normally installed together with Python. If it is missing, install it first.

pip install beautifulsoup4

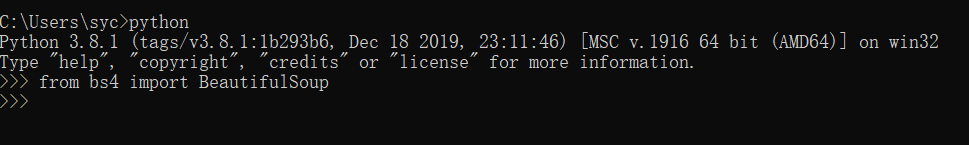

After the installation completes, run the python command on the command line to enter the Python interactive environment, then execute the following statement. It imports the BeautifulSoup class from the beautifulsoup4 package (pay attention to the capitalization):

from bs4 import BeautifulSoup

If no error is reported after you press Enter, the installation succeeded.
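
As an optional extra check, the installed version can be printed. This small sketch assumes the package exposes a `__version__` attribute, which current bs4 releases do.

```python
import bs4

# Print the version string of the installed Beautiful Soup package.
print(bs4.__version__)
```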

III. Use case

Now we use Beautiful Soup to parse the names and blog addresses of all bloggers from the source code of a web page and save them to a text file as JSON-style records. The code is as follows:

    # -*- coding: utf-8 -*-
    from bs4 import BeautifulSoup
    import re

    path = r'C:\Users\syc\Desktop\work\xxxx.html'
    htmlfile = open(path, 'r', encoding='utf-8')
    htmlhandle = htmlfile.read()
    soup = BeautifulSoup(htmlhandle, 'html.parser')
    txtName = "dataSource.txt"
    f = open(txtName, "a+", encoding='utf8')
    t = 0
    for item in soup.find_all('a', href=re.compile('https://www.xxxxxx.com/[^/]+/$')):
        jsonData = r'{"name": "' + item.get_text() + r'","url": "' + item['href'] + r'"},'
        f.write(jsonData)
        t = t + 1
    htmlfile.close()
    print(t)
    f.close()
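
The `href` filter in the code above is an ordinary regular expression, so it can be tried out on its own with the standard `re` module. The sketch below reuses the placeholder domain from the code; the sample URLs are invented. As far as the Beautiful Soup documentation describes it, a compiled pattern is applied with its `search()` method, so the demo does the same.

```python
import re

# Same pattern as in the code above; xxxxxx is the placeholder domain.
pattern = re.compile('https://www.xxxxxx.com/[^/]+/$')

# Invented sample URLs, for illustration only.
samples = [
    "https://www.xxxxxx.com/alice/",           # one path segment plus trailing slash
    "https://www.xxxxxx.com/alice/article/1",  # deeper path
    "https://www.xxxxxx.com/",                 # no path segment at all
]

# Keep only the URLs that the pattern accepts.
matches = [url for url in samples if pattern.search(url)]
print(matches)
```

Only the first URL has exactly one path segment followed by a closing slash, so it is the only one kept. Note that the unescaped `.` matches any character; writing `\.` would make the pattern stricter.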

Line-by-line explanation of the code

    # Python 2.x source files are ASCII by default. If the file contains Chinese
    # characters, errors or garbled text appear at runtime, so the file encoding
    # is declared as utf-8. Python 3 already defaults to UTF-8.
    # -*- coding: utf-8 -*-

    # Import the BeautifulSoup class from the installed bs4 package.
    from bs4 import BeautifulSoup
    # Import re, the Python standard library module for regular expression matching.
    import re

    # Path of the downloaded web page source file. The r prefix makes this a raw
    # string, so the backslashes in it do not need to be escaped.
    path = r'C:\Users\syc\Desktop\work\xxxx.html'
    # open() opens a file and returns a file object; 'r' means read-only mode.
    htmlfile = open(path, 'r', encoding='utf-8')
    # Read the whole file into a string (text mode returns str, not bytes).
    htmlhandle = htmlfile.read()
    # Create a BeautifulSoup object and specify html.parser as the parser.
    soup = BeautifulSoup(htmlhandle, 'html.parser')
    txtName = "dataSource.txt"
    # "a+" opens the file for appending and reading. If the file already exists,
    # writes go to the end of it; if it does not exist, a new file is created.
    f = open(txtName, "a+", encoding='utf8')
    t = 0
    # Find all <a> nodes whose href value matches the regular expression. See the
    # documentation for the other parameters and methods that help parse a page.
    for item in soup.find_all('a', href=re.compile('https://www.xxxxxx.com/[^/]+/$')):
        # item.get_text() returns the text inside the node, and item['href']
        # returns the value of the node's href attribute.
        jsonData = r'{"name": "' + item.get_text() + r'","url": "' + item['href'] + r'"},'
        f.write(jsonData)
        # Count the matched links.
        t = t + 1
    htmlfile.close()
    print(t)
    f.close()
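
Building the records by string concatenation produces a comma-separated run of objects rather than a complete JSON document, and a quotation mark inside a blogger name would break it. As one possible alternative, the sketch below collects the results in a list and lets the standard `json` module handle the escaping. The inline HTML, the `extract_bloggers` helper, and the output file name `bloggers.json` are assumptions made for illustration and are not part of the original script.

```python
import json
import os
import re
import tempfile

from bs4 import BeautifulSoup

# Hypothetical page source standing in for the downloaded HTML file.
html = """
<ul>
  <li><a href="https://www.xxxxxx.com/alice/">Alice "A" Smith</a></li>
  <li><a href="https://www.xxxxxx.com/bob/">Bob</a></li>
  <li><a href="https://www.xxxxxx.com/bob/article/1">An article</a></li>
</ul>
"""


def extract_bloggers(page_source):
    """Return a list of {"name", "url"} dicts for links matching the blog pattern."""
    soup = BeautifulSoup(page_source, "html.parser")
    pattern = re.compile(r"https://www\.xxxxxx\.com/[^/]+/$")
    return [
        {"name": a.get_text(), "url": a["href"]}
        for a in soup.find_all("a", href=pattern)
    ]


bloggers = extract_bloggers(html)

# Write into a temporary directory so the example leaves no files behind.
with tempfile.TemporaryDirectory() as tmp_dir:
    out_path = os.path.join(tmp_dir, "bloggers.json")  # hypothetical file name
    # "w" overwrites; the with-block closes the file even if an error occurs.
    with open(out_path, "w", encoding="utf-8") as f:
        json.dump(bloggers, f, ensure_ascii=False, indent=2)
    # Read the file back to confirm that it parses as JSON.
    with open(out_path, encoding="utf-8") as f:
        loaded = json.load(f)

print(len(loaded))
```

Using `with` for both files removes the need for explicit `close()` calls, and `len(loaded)` plays the role of the counter `t` in the original script.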