Preface

First, calibrate the binocular (stereo) camera to obtain its intrinsic and extrinsic parameters, then perform ranging. The ranging described here is based on the SGBM algorithm.

Note: the quality of the stereo calibration directly affects ranging accuracy, so calibrate carefully and keep the calibration (reprojection) error as small as possible.

If you are not familiar with the principles of binocular vision, we recommend reading this article first: An article on Binocular Stereo Vision

Contents

1. Binocular Ranging Effect

2. Binocular Ranging Process

3. Preparing for Binocular Ranging

4. Binocular Test Implementation

5. SGBM algorithm

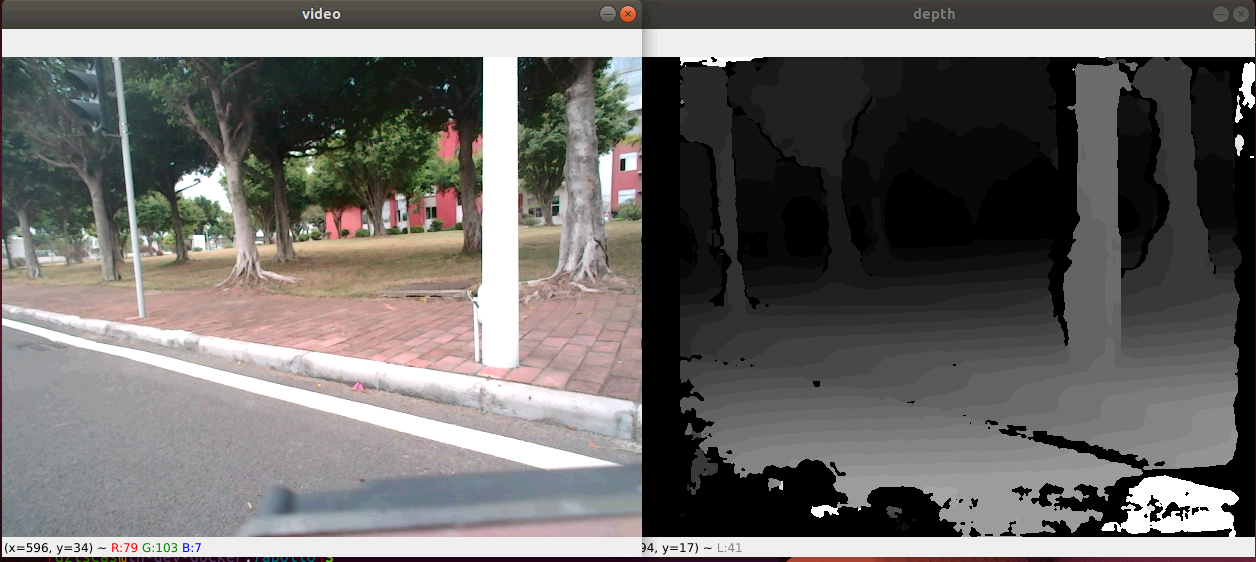

1. Binocular Ranging Effect

Disparity map generated with the SGBM algorithm:

Click a point on the disparity map and the program computes that point's world coordinates and distance. Example output:

Pixel coordinates x = 523, y = 366

The world coordinate xyz is: 0.37038836669921876 0.24825479125976563 0.7842975463867188 m

Distance is: 0.9021865798368828 m

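The distance here is the actual distance from the camera to the object point, in meters in the example above. Because the Q matrix comes from cv2.stereoRectify, the 3D coordinates are expressed in the rectified left camera's coordinate system, so the distance is measured from the left camera's optical center.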
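The distance is simply the Euclidean norm of the reprojected 3D point. To make the geometry concrete, here is a NumPy-only sketch of what reprojection with Q does for a single pixel. It assumes the Q layout that cv2.stereoRectify produces with its default CALIB_ZERO_DISPARITY flag; the focal length, principal point and baseline are illustrative values close to this calibration, not the actual rectified parameters, and make_q, reproject_pixel and distance_m are hypothetical helper names. Depth reduces to Z = f * B / d.

```python
import numpy as np

def make_q(f, cx, cy, tx):
    """Q in the layout cv2.stereoRectify returns when both rectified principal
    points coincide (its default CALIB_ZERO_DISPARITY flag).
    tx is the x component of the baseline, negative for a left/right rig."""
    return np.array([[1.0, 0.0, 0.0, -cx],
                     [0.0, 1.0, 0.0, -cy],
                     [0.0, 0.0, 0.0, f],
                     [0.0, 0.0, -1.0 / tx, 0.0]])

def reproject_pixel(q, x, y, d):
    """Map pixel (x, y) with disparity d (in pixels) to 3D, in the units of tx."""
    X, Y, Z, W = q @ np.array([x, y, d, 1.0])
    return np.array([X, Y, Z]) / W

def distance_m(point_mm):
    """Euclidean distance from the left camera's optical center, mm -> m."""
    return float(np.linalg.norm(point_mm)) / 1000.0

# Illustrative values only (hypothetical): f ~ 416.8 px, baseline ~ 120.33 mm
q = make_q(f=416.8, cx=320.0, cy=240.0, tx=-120.33)
point = reproject_pixel(q, 523, 366, d=64.0)
print(point, distance_m(point))  # Z equals f * B / d = 416.8 * 120.33 / 64
```

Larger disparities give smaller depths, and a one-pixel disparity error matters much more for distant objects (small d) than for near ones.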

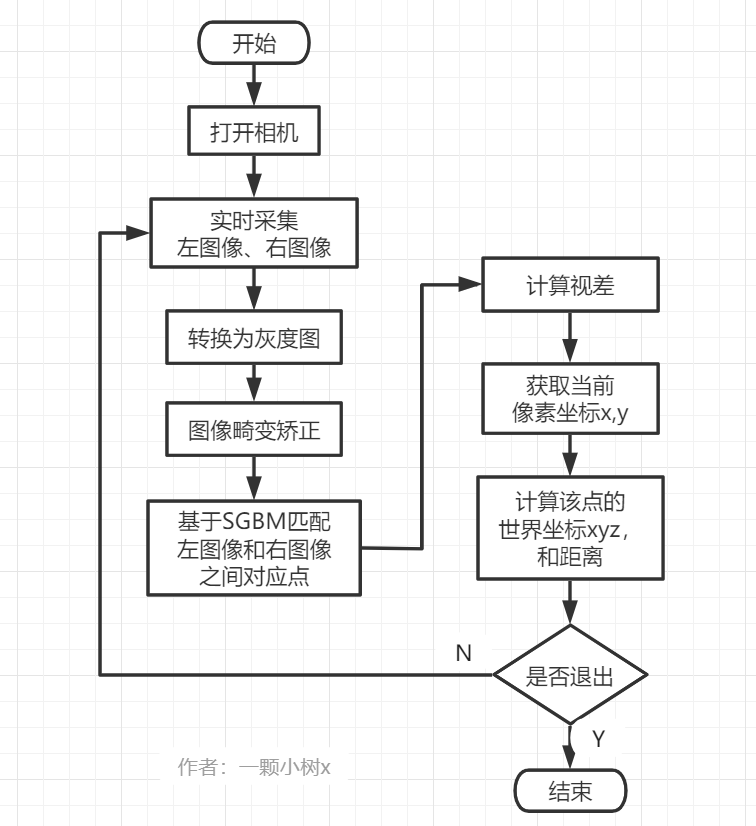

2. Binocular Ranging Process

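The program flow is as follows: open the camera and grab a side-by-side frame; split it into left and right images and convert them to grayscale; rectify both images with the calibration maps; compute the disparity map with SGBM; reproject the disparity to 3D with the Q matrix; and, when the user clicks a pixel, print its 3D coordinates and distance.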

3. Preparing for Binocular Ranging

1) Open the binocular camera.

See: OpenCV turns on the binocular camera (python version)
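The camera used here delivers both views side by side in a single frame (main.py requests 1280x480 and cuts it at column 640). A minimal helper for that split, assuming a left | right layout; split_stereo_frame is a hypothetical name:

```python
import numpy as np

def split_stereo_frame(frame):
    """Split a side-by-side stereo frame (left | right) into two equal halves."""
    h, w = frame.shape[:2]
    if w % 2:
        raise ValueError("expected an even frame width")
    half = w // 2
    return frame[:, :half], frame[:, half:]

# With OpenCV: ret, frame = cap.read(); left, right = split_stereo_frame(frame)
left, right = split_stereo_frame(np.zeros((480, 1280, 3), dtype=np.uint8))
print(left.shape, right.shape)  # (480, 640, 3) (480, 640, 3)
```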

2) Calibrate the binocular camera to obtain the following parameters:

Left camera intrinsic matrix and left camera distortion coefficients: [k1, k2, p1, p2, k3]

Right camera intrinsic matrix and right camera distortion coefficients: [k1, k2, p1, p2, k3]

Rotation matrix R and translation vector T between the left and right cameras.

Save them in a file named camera_config.py; its contents, which the ranging program uses, are shown below:

import cv2
import numpy as np

# Left camera intrinsic matrix
left_camera_matrix = np.array([[416.841180253704, 0.0, 338.485167779639],
                               [0., 416.465934495134, 230.419201769346],
                               [0., 0., 1.]])
# Left camera distortion coefficients: [k1, k2, p1, p2, k3]
left_distortion = np.array([[-0.0170280933781798, 0.0643596519467521, -0.00161785356900972, -0.00330684695473645, 0]])

# Right camera intrinsic matrix
right_camera_matrix = np.array([[417.765094485395, 0.0, 315.061245379892],
                                [0., 417.845058291483, 238.181766936442],
                                [0., 0., 1.]])
# Right camera distortion coefficients: [k1, k2, p1, p2, k3]
right_distortion = np.array([[-0.0394089328586398, 0.131112076868352, -0.00133793245429668, -0.00188957913931929, 0]])

# Alternative: build R from a rotation vector with cv2.Rodrigues
# om = np.array([-0.00009, 0.02300, -0.00372])
# R = cv2.Rodrigues(om)[0]

# Rotation matrix between the left and right cameras
R = np.array([[0.999962872853149, 0.00187779299260463, -0.00840992323112715],
              [-0.0018408858041373, 0.999988651353238, 0.00439412154902114],
              [0.00841807904053251, -0.00437847669953504, 0.999954981430194]])
# Translation vector between the left and right cameras (mm, the calibration unit)
T = np.array([[-120.326603502087], [0.199732192805711], [-0.203594457929446]])

size = (640, 480)  # image size (width, height)

# Stereo rectification: R1/R2 rectifying rotations, P1/P2 new projection matrices,
# Q the disparity-to-depth reprojection matrix
R1, R2, P1, P2, Q, validPixROI1, validPixROI2 = cv2.stereoRectify(left_camera_matrix, left_distortion,
                                                                  right_camera_matrix, right_distortion,
                                                                  size, R, T)

# Undistortion + rectification lookup maps, used by cv2.remap in main.py
left_map1, left_map2 = cv2.initUndistortRectifyMap(left_camera_matrix, left_distortion, R1, P1, size, cv2.CV_16SC2)
right_map1, right_map2 = cv2.initUndistortRectifyMap(right_camera_matrix, right_distortion, R2, P2, size, cv2.CV_16SC2)
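A cheap sanity check on the extrinsics before rectifying: R should be orthonormal with determinant 1, its rotation angle should be small for a parallel rig, and the length of T is the baseline. check_extrinsics is a hypothetical helper; for the values above the baseline comes out at roughly 120.3 mm and the rotation between the two cameras is well under one degree.

```python
import numpy as np

R = np.array([[0.999962872853149, 0.00187779299260463, -0.00840992323112715],
              [-0.0018408858041373, 0.999988651353238, 0.00439412154902114],
              [0.00841807904053251, -0.00437847669953504, 0.999954981430194]])
T = np.array([[-120.326603502087], [0.199732192805711], [-0.203594457929446]])

def check_extrinsics(R, T, atol=1e-5):
    """Return (R is a proper rotation, rotation angle in degrees, baseline in T's units)."""
    is_rotation = bool(np.allclose(R @ R.T, np.eye(3), atol=atol)
                       and np.isclose(np.linalg.det(R), 1.0, atol=atol))
    # Rotation angle from the trace: trace(R) = 1 + 2 cos(angle)
    cos_angle = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    angle_deg = float(np.degrees(np.arccos(cos_angle)))
    baseline = float(np.linalg.norm(T))
    return is_rotation, angle_deg, baseline

print(check_extrinsics(R, T))
```

A baseline far from the physical spacing of the lenses, or a large rotation angle, usually means the calibration data or its conversion from MATLAB went wrong.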

For stereo calibration, see: Binocular Visual Calibration+Correction (Based on MATLAB)

For converting the calibration data, see: Binocular 3D Reconstruction, Ranging - Preparation (Data Conversion)
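The five distortion coefficients follow OpenCV's pinhole model with radial (k1, k2, k3) and tangential (p1, p2) terms. The sketch below applies that model to a normalized image point (X/Z, Y/Z) and then the intrinsic matrix, which is what cv2.projectPoints does per point; distort_project is a hypothetical helper name and the matrices are the left-camera values from camera_config.py.

```python
import numpy as np

# Left-camera values from camera_config.py
K = np.array([[416.841180253704, 0.0, 338.485167779639],
              [0.0, 416.465934495134, 230.419201769346],
              [0.0, 0.0, 1.0]])
dist = np.array([[-0.0170280933781798, 0.0643596519467521,
                  -0.00161785356900972, -0.00330684695473645, 0]])

def distort_project(x, y, K, dist):
    """Project a normalized point (x, y) = (X/Z, Y/Z) to pixel coordinates
    with OpenCV's 5-coefficient model [k1, k2, p1, p2, k3]."""
    k1, k2, p1, p2, k3 = np.ravel(dist)[:5]
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3       # radial factor
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)   # plus tangential terms
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    u = K[0, 0] * xd + K[0, 1] * yd + K[0, 2]
    v = K[1, 1] * yd + K[1, 2]
    return u, v

# An off-axis point, with and without distortion
print(distort_project(0.3, 0.2, K, dist))
print(distort_project(0.3, 0.2, K, np.zeros(5)))
```

Rectification (cv2.initUndistortRectifyMap) inverts this mapping, which is why the effect of the coefficients grows toward the image borders.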

4. Binocular Test Implementation

The complete code consists of two files: main.py, which implements the stereo ranging, and camera_config.py, which holds the camera parameters.

The main.py code is as follows:

# -*- coding: utf-8 -*-
import math

import cv2

import camera_config

cap = cv2.VideoCapture(0)
# Open the camera: both views arrive side by side in one 1280x480 frame
cap.set(cv2.CAP_PROP_FRAME_WIDTH, 1280)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 480)


# Mouse callback: print the 3D coordinates and distance of the clicked pixel
def onmouse_pick_points(event, x, y, flags, param):
    if event == cv2.EVENT_LBUTTONDOWN:
        threeD = param
        X, Y, Z = threeD[y][x]  # threeD is indexed [row][column], i.e. [y][x]
        print('\n Pixel coordinates x = %d, y = %d' % (x, y))
        # print("World coordinates are:", X, Y, Z, "mm")
        print("The world coordinate xyz is:", X / 1000.0, Y / 1000.0, Z / 1000.0, "m")
        distance = math.sqrt(X ** 2 + Y ** 2 + Z ** 2)
        distance = distance / 1000.0  # mm -> m
        print("Distance is:", distance, "m")


WIN_NAME = 'Deep disp'
cv2.namedWindow(WIN_NAME, cv2.WINDOW_AUTOSIZE)

while True:
    ret, frame = cap.read()
    if not ret:  # stop if no frame could be read
        break
    frame1 = frame[0:480, 0:640]     # left image
    frame2 = frame[0:480, 640:1280]  # right image

    # Convert BGR images to grayscale
    imgL = cv2.cvtColor(frame1, cv2.COLOR_BGR2GRAY)
    imgR = cv2.cvtColor(frame2, cv2.COLOR_BGR2GRAY)

    # Undistort and rectify both images: cv2.remap moves every pixel to the
    # position given by the maps built from the MATLAB calibration
    img1_rectified = cv2.remap(imgL, camera_config.left_map1, camera_config.left_map2, cv2.INTER_LINEAR)
    img2_rectified = cv2.remap(imgR, camera_config.right_map1, camera_config.right_map2, cv2.INTER_LINEAR)

    # SGBM
    blockSize = 8
    img_channels = 3  # used only in the P1/P2 formulas; the SGBM inputs here are grayscale
    stereo = cv2.StereoSGBM_create(minDisparity=1,
                                   numDisparities=64,
                                   blockSize=blockSize,
                                   P1=8 * img_channels * blockSize * blockSize,
                                   P2=32 * img_channels * blockSize * blockSize,
                                   disp12MaxDiff=-1,
                                   preFilterCap=1,
                                   uniquenessRatio=10,
                                   speckleWindowSize=100,
                                   speckleRange=100,
                                   mode=cv2.STEREO_SGBM_MODE_HH)

    # Disparity as 16-bit fixed point (true disparity x 16)
    disparity = stereo.compute(img1_rectified, img2_rectified)
    # Scale to 0-255 for display only; a new array is returned, disparity is untouched
    disp = cv2.normalize(disparity, None, alpha=0, beta=255, norm_type=cv2.NORM_MINMAX, dtype=cv2.CV_8U)

    # 3D coordinates in the units of T (mm)
    threeD = cv2.reprojectImageTo3D(disparity, camera_config.Q, handleMissingValues=True)
    # The x16 fixed-point disparity makes the coordinates 16x too small; compensate
    threeD = threeD * 16

    # threeD[y][x]: x in 0..639, y in 0..479
    cv2.setMouseCallback(WIN_NAME, onmouse_pick_points, threeD)

    cv2.imshow("left", frame1)
    # cv2.imshow("right", frame2)
    cv2.imshow(WIN_NAME, disp)  # show the disparity map

    key = cv2.waitKey(1)
    if key == ord("q"):
        break

cap.release()
cv2.destroyAllWindows()
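Why threeD is multiplied by 16: StereoSGBM's compute() returns disparities as 16-bit fixed-point values with 4 fractional bits (true disparity x 16). The reprojected coordinates scale as 1/d, so feeding the raw values to reprojectImageTo3D shrinks every coordinate by a factor of 16. The sketch below uses a NumPy stand-in for reprojectImageTo3D on valid pixels, with a hypothetical Q, to check that scaling afterwards and converting the disparity first give the same result. With OpenCV itself the second route is cv2.reprojectImageTo3D(disparity.astype(np.float32) / 16.0, Q); note that with handleMissingValues=True the x16 route also scales the sentinel depth of missing pixels (10000) to 160000.

```python
import numpy as np

def reproject(disparity, q):
    """NumPy stand-in for cv2.reprojectImageTo3D (valid pixels only)."""
    h, w = disparity.shape
    ys, xs = np.mgrid[0:h, 0:w]
    homog = np.stack([xs, ys, disparity, np.ones((h, w))], axis=-1).astype(np.float64)
    xyzw = homog @ q.T                     # [X, Y, Z, W] for every pixel
    return xyzw[..., :3] / xyzw[..., 3:4]  # divide by W

# Hypothetical Q: f = 416.8 px, principal point (320, 240), baseline 120.33 mm
f, cx, cy, tx = 416.8, 320.0, 240.0, -120.33
Q = np.array([[1, 0, 0, -cx], [0, 1, 0, -cy], [0, 0, 0, f], [0, 0, -1 / tx, 0]])

true_disp = np.full((4, 6), 32.0)               # true disparity in pixels
fixed = (true_disp * 16).astype(np.int16)       # what SGBM's compute() returns

via_scale = reproject(fixed, Q) * 16                         # approach used in main.py
via_float = reproject(fixed.astype(np.float32) / 16.0, Q)    # convert disparity first
print(np.allclose(via_scale, via_float))  # True
```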

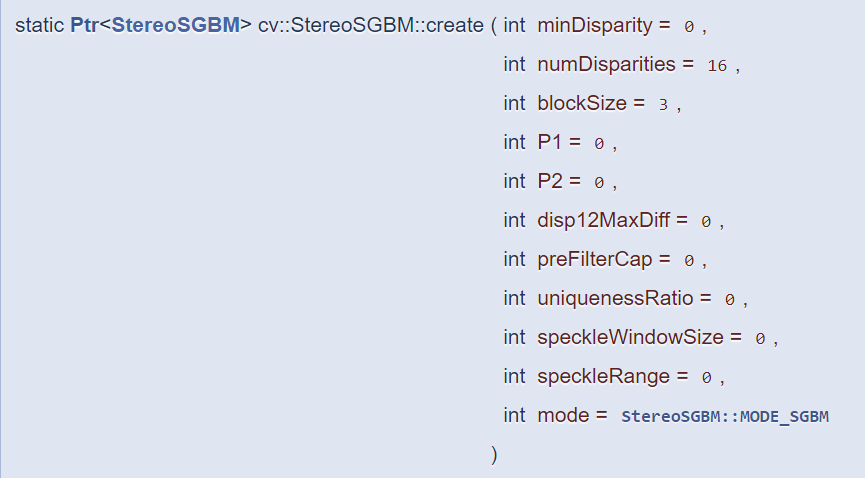

5. SGBM algorithm

SGBM stands for semi-global block matching. OpenCV's implementation is a modified version of the algorithm in H. Hirschmüller, "Stereo Processing by Semiglobal Matching and Mutual Information".

It is a semi-global matching algorithm: its matching quality is generally much better than that of local methods such as BM, but it is also considerably more complex and slower.

It chooses a disparity for every pixel to form a disparity map, defines a global energy function over that map, and looks for the per-pixel disparities that minimize this energy.
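Written out in Hirschmüller's formulation, the energy is as follows, where C(p, D_p) is the matching cost of pixel p at disparity D_p, N_p is the neighborhood of p, and T[.] equals 1 when its condition holds and 0 otherwise. Because minimizing E(D) exactly over a 2D image is intractable, SGM instead aggregates costs along 1D paths in several directions r and sums them, then takes the per-pixel minimum:

```latex
E(D) = \sum_{p} \Big( C(p, D_p)
       + \sum_{q \in N_p} P_1 \, T\big[\,|D_p - D_q| = 1\,\big]
       + \sum_{q \in N_p} P_2 \, T\big[\,|D_p - D_q| > 1\,\big] \Big)

L_r(p, d) = C(p, d) + \min\Big( L_r(p - r, d),\;
            L_r(p - r, d - 1) + P_1,\;
            L_r(p - r, d + 1) + P_1,\;
            \min_i L_r(p - r, i) + P_2 \Big) - \min_k L_r(p - r, k)

S(p, d) = \sum_{r} L_r(p, d), \qquad D_p = \arg\min_{d} S(p, d)
```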

Reference: [Everything about stereo vision] Stereo-matched imaging algorithms BM, SGBM, GC, SAD at a glance (Zhihu)
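The smoothness penalties P1 (disparity change of 1 between neighbors) and P2 (larger jumps) act through the path aggregation above. The sketch below implements that recurrence for a single left-to-right path on a toy cost volume with hypothetical numbers; it only illustrates how the penalties work and is not OpenCV's implementation, which uses Birchfield-Tomasi block costs and aggregates 5 directions by default (8 with MODE_HH).

```python
import numpy as np

def aggregate_path(cost, p1, p2):
    """SGM cost aggregation along one left-to-right path.
    cost[x, d] is the matching cost of pixel x (in one row) at disparity d."""
    w, ndisp = cost.shape
    L = np.empty_like(cost, dtype=np.float64)
    L[0] = cost[0]
    for x in range(1, w):
        prev = L[x - 1]
        prev_min = prev.min()
        from_minus = np.full(ndisp, np.inf)
        from_minus[1:] = prev[:-1] + p1          # neighbor had disparity d - 1
        from_plus = np.full(ndisp, np.inf)
        from_plus[:-1] = prev[1:] + p1           # neighbor had disparity d + 1
        jump = np.full(ndisp, prev_min + p2)     # any larger disparity jump
        best = np.minimum.reduce([prev, from_minus, from_plus, jump])
        L[x] = cost[x] + best - prev_min         # subtract min to keep values bounded
    return L

# Toy row: every pixel prefers disparity 2, except pixel 2, which (noisily) prefers 5
cost = np.full((5, 6), 10.0)
cost[:, 2] = 0.0
cost[2, 2] = 3.0
cost[2, 5] = 0.0

print(aggregate_path(cost, p1=1.0, p2=8.0)[2].argmin())  # 2: large P2 smooths out the jump
print(aggregate_path(cost, p1=1.0, p2=2.0)[2].argmin())  # 5: small P2 lets the jump through
```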

The rest of this section is practical: how to tune SGBM parameters for good results in different environments.

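SGBM in OpenCV (C++):

static Ptr<StereoSGBM> cv::StereoSGBM::create(int minDisparity = 0, int numDisparities = 16, int blockSize = 3, int P1 = 0, int P2 = 0, int disp12MaxDiff = 0, int preFilterCap = 0, int uniquenessRatio = 0, int speckleWindowSize = 0, int speckleRange = 0, int mode = StereoSGBM::MODE_SGBM)

Python interface (same parameters):

cv.StereoSGBM_create()

Parameter meanings: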

| Parameter | Meaning |
| --- | --- |
| minDisparity (minimum disparity) | Minimum possible disparity value. Normally zero, but rectification can shift the images, so this parameter sometimes needs to be adjusted accordingly. |
| numDisparities (number of disparities) | Maximum disparity minus minimum disparity. Always greater than zero. In the current implementation it must be divisible by 16. |
| blockSize (block size) | Size of the matched block. It must be an odd number >= 1; normally it should be somewhere in the 3-11 range. |
| P1 | First parameter controlling disparity smoothness: the penalty for a disparity change of plus or minus 1 between neighboring pixels. |
| P2 | Second parameter controlling disparity smoothness; the larger the value, the smoother the disparity. P2 is the penalty for a disparity change of more than 1 between neighboring pixels. The algorithm requires P2 > P1. |
| disp12MaxDiff | Maximum allowed difference (in integer pixels) in the left-right disparity check. Set it to a non-positive value to disable the check. |
| preFilterCap (pre-filter cap) | Truncation value for the prefiltered image pixels. The algorithm first computes the x-derivative at each pixel and clips its value to the [-preFilterCap, preFilterCap] interval. The result is passed to the Birchfield-Tomasi pixel cost function. |
| uniquenessRatio (uniqueness ratio) | Margin, in percent, by which the best (minimum) computed cost value must "win" over the second-best value for the match to be considered correct. Normally a value in the 5-15 range is good enough. |
| speckleWindowSize (speckle window size) | Maximum size of smooth disparity regions to treat as noise speckles and invalidate. Set it to 0 to disable speckle filtering; otherwise set it somewhere in the 50-200 range. |
| speckleRange (speckle range) | Maximum disparity variation within each connected component. If you do speckle filtering, set it to a positive value; it is implicitly multiplied by 16. Normally 1 or 2 is good enough. |
| mode | Set it to StereoSGBM::MODE_HH to run the full-scale two-pass dynamic programming algorithm. It consumes O(W*H*numDisparities) bytes, which is large for 640x480 stereo and huge for HD-size images. The default is StereoSGBM::MODE_SGBM. |

Reference: OpenCV: cv::StereoSGBM Class Reference
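The constraints in the table can be packed into a small helper. sgbm_params is hypothetical (not part of OpenCV); the numDisparities and P1/P2 formulas are the rules of thumb used in OpenCV's stereo_match sample and in the P1/P2 documentation, which Case 2 below also follows.

```python
def sgbm_params(image_width, block_size, channels):
    """Hypothetical helper: derive SGBM settings from OpenCV's rules of thumb."""
    if block_size < 1 or block_size % 2 == 0:
        raise ValueError("blockSize must be an odd number >= 1")
    if image_width < 8:
        raise ValueError("image too narrow")
    # Image width / 8, rounded up to a multiple of 16
    num_disparities = ((image_width // 8) + 15) & -16
    p1 = 8 * channels * block_size * block_size    # penalty for a disparity change of 1
    p2 = 32 * channels * block_size * block_size   # penalty for larger changes
    assert num_disparities % 16 == 0 and p2 > p1
    return {"numDisparities": num_disparities, "blockSize": block_size, "P1": p1, "P2": p2}

print(sgbm_params(640, 9, 1))
# {'numDisparities': 80, 'blockSize': 9, 'P1': 648, 'P2': 2592}
```

Since the keys match the Python keyword names, the result can be unpacked directly, e.g. cv2.StereoSGBM_create(minDisparity=0, **sgbm_params(640, 9, 1)); channels should be 1 for grayscale input and 3 for color.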

SGBM Case 1: suitable for outdoor use

# SGBM
blockSize = 8
img_channels = 3
stereo = cv2.StereoSGBM_create(minDisparity=1,
                               numDisparities=64,
                               blockSize=blockSize,
                               P1=8 * img_channels * blockSize * blockSize,
                               P2=32 * img_channels * blockSize * blockSize,
                               disp12MaxDiff=-1,
                               preFilterCap=1,
                               uniquenessRatio=10,
                               speckleWindowSize=100,
                               speckleRange=100,
                               mode=cv2.STEREO_SGBM_MODE_HH)

SGBM Case 2:

# Image width / 8, rounded up to a multiple of 16 (640 is the image width)
numberOfDisparities = ((640 // 8) + 15) & -16
stereo = cv2.StereoSGBM_create(minDisparity=0, numDisparities=numberOfDisparities, blockSize=9,
                               P1=8 * 1 * 9 * 9, P2=32 * 1 * 9 * 9, disp12MaxDiff=1, uniquenessRatio=10,
                               speckleWindowSize=100, speckleRange=32, mode=cv2.STEREO_SGBM_MODE_SGBM)

Summary

Binocular ranging is often combined with object detection: first locate the object's bounding box with a detector such as YOLO or SSD, then compute the box center (or the object's centroid) and select a point near it at which to compute the 3D coordinates and distance.
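A minimal sketch of that last step, assuming threeD is the (already x16-corrected) array from main.py in millimeters and the detector returns an (x1, y1, x2, y2) pixel box. distance_in_box, the window size and the 10 m cut-off for invalid points are hypothetical choices. Taking the median over a small window rather than reading a single pixel makes the result less sensitive to holes and speckles in the disparity map.

```python
import numpy as np

def distance_in_box(three_d, box, half_window=5, max_range_mm=10000.0):
    """Median distance (m) of valid 3D points in a window at the box center.
    three_d: (H, W, 3) array in mm; box: (x1, y1, x2, y2) in pixels."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) // 2, (y1 + y2) // 2
    h, w = three_d.shape[:2]
    patch = three_d[max(cy - half_window, 0):min(cy + half_window + 1, h),
                    max(cx - half_window, 0):min(cx + half_window + 1, w)]
    pts = patch.reshape(-1, 3)
    dists = np.linalg.norm(pts, axis=1)
    # Drop non-finite points, points behind the camera and missing-value sentinels
    valid = np.isfinite(dists) & (pts[:, 2] > 0) & (dists < max_range_mm)
    if not valid.any():
        return None
    return float(np.median(dists[valid])) / 1000.0

# Synthetic scene: a flat wall 1 m away with one outlier at the box center
grid = np.zeros((100, 100, 3))
grid[..., 2] = 1000.0
grid[50, 50] = [0.0, 0.0, 160000.0]
print(distance_in_box(grid, (40, 40, 60, 60)))  # 1.0
```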

Reference

An article on Binocular Stereo Vision

OpenCV turns on the binocular camera (python version)

Binocular Visual Calibration+Correction (Based on MATLAB)

Binocular 3D Reconstruction, Ranging - Preparation (Data Conversion)
