Spider

1. Python Basics

1.Python environment installation

1.1 download Python

Official website: https://www.python.org/

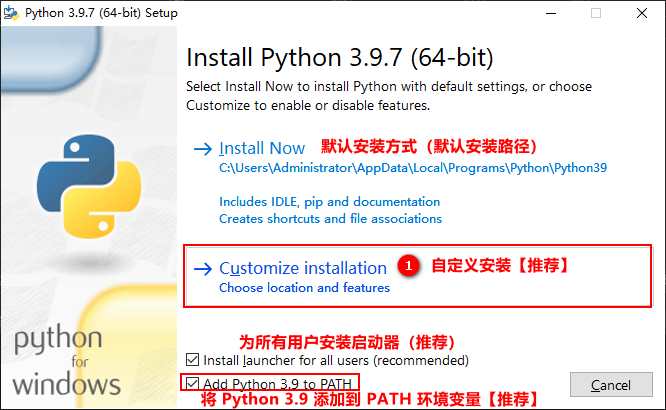

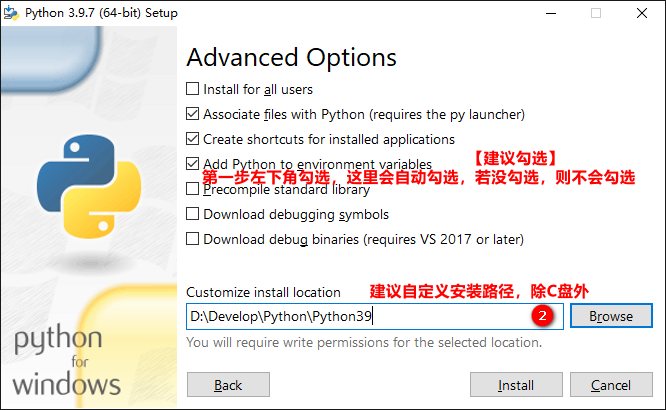

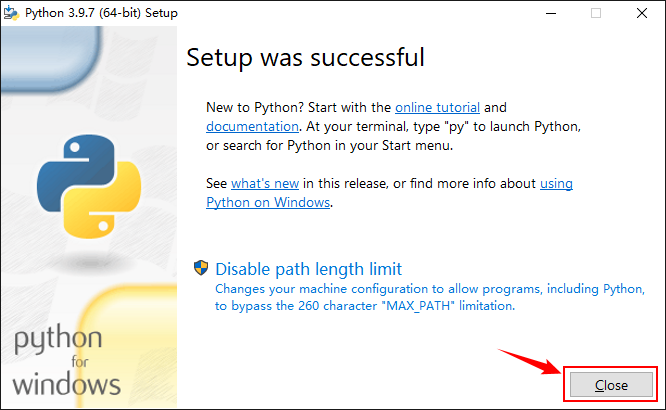

1.2 installing Python

One way fool installation

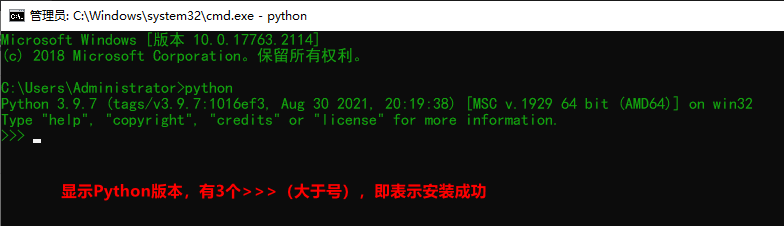

1.3 test whether the installation is successful

Win + R, enter cmd, enter

If an error occurs: 'python', it is not an internal or external command, nor is it a runnable program or batch file.

Cause: the problem of environment variables may be because the Add Python 3.x to PATH option is not checked during Python installation. At this time, python needs to be configured manually.

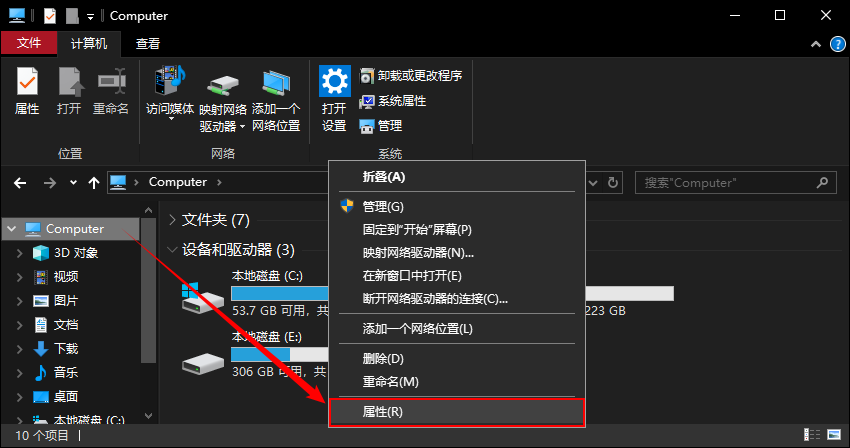

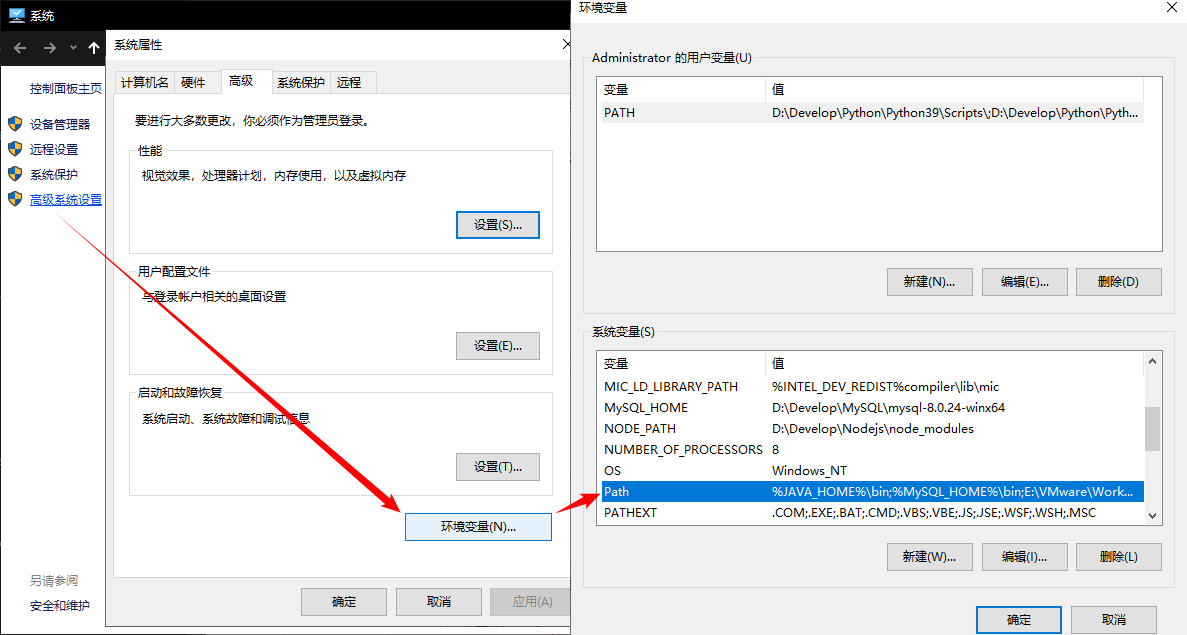

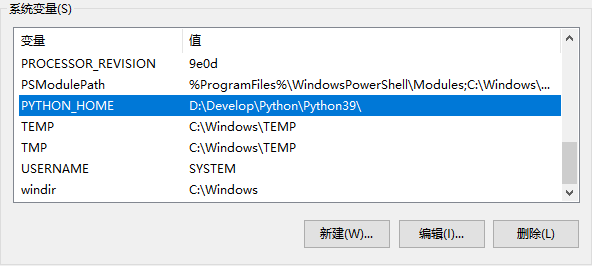

1.4 configuring Python environment variables

Note: if the Add Python 3.x to PATH option has been checked during the installation process, and in the cmd command mode, enter the python instruction to display version information without error, there is no need to manually configure python. (skip the step of manually configuring environment variables)

Right click this computer, select properties,

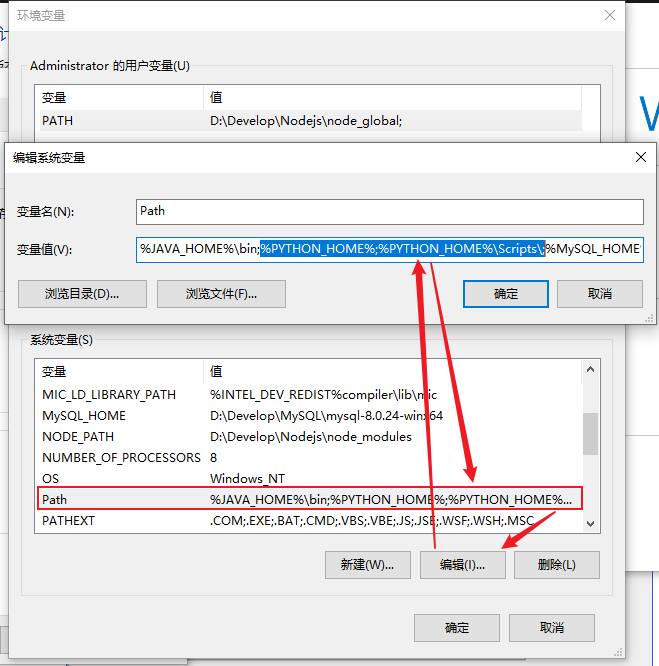

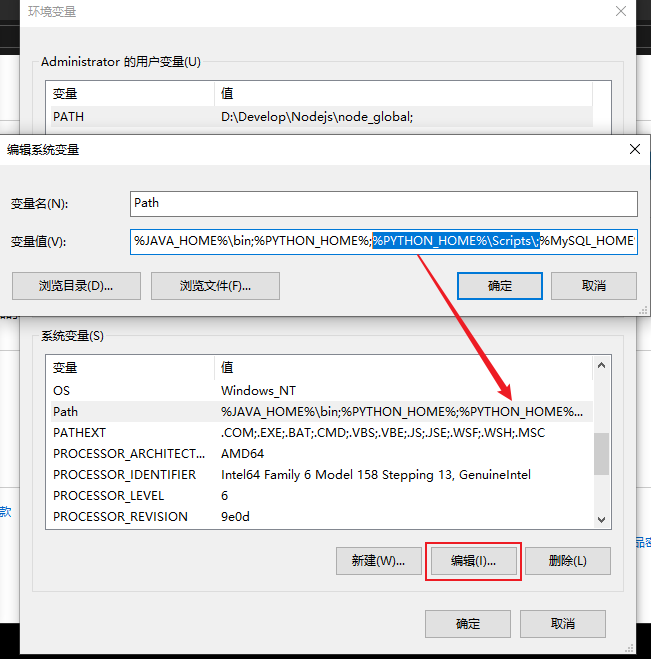

Select advanced system settings -- > environment variables -- > find and double-click Path

Double click Path, click New in the pop-up box, add the python installation directory, add the Path, then edit the environment variable in Path, and use%% to read the python installation Path

2. Use of Pip

pip is a modern, general-purpose Python package management tool. It provides the functions of finding, downloading, installing and uninstalling Python packages, which is convenient for us to manage Python resource packages.

2.1 installation

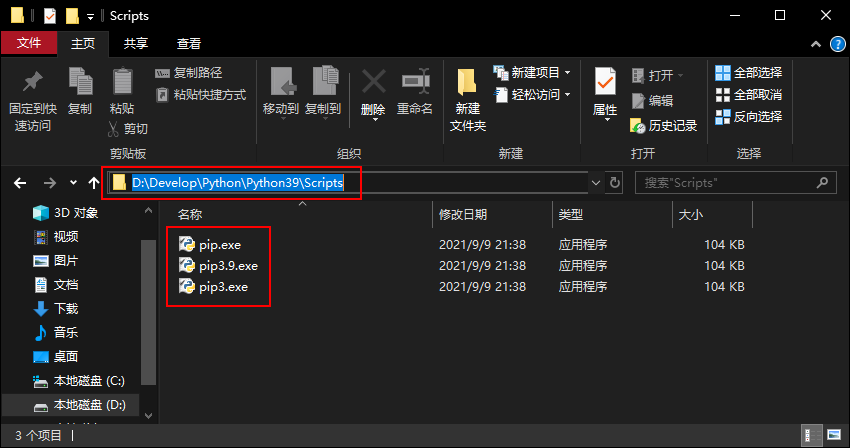

When Python is installed, pip.exe is automatically downloaded and installed

2.2 configuration

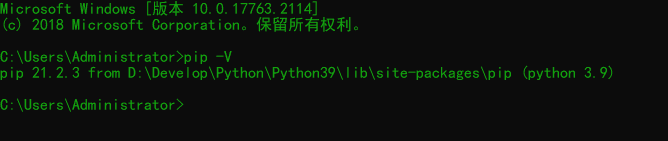

On the windows command line, enter pip -V to view the version of pip.

# View pip Version (capital V) pip -V

If you run pip -V on the command line, the following prompt appears: 'pip', which is not an internal or external command, nor a runnable program or batch file.

Cause: the problem of environment variables may be because the Add Python 3.x to PATH option is not checked during Python installation. At this time, python needs to be configured manually.

Right click the computer -- > environment variable -- > find and double-click path -- > click Edit in the pop-up window – > find the installation directory of pip (that is, the path of Scripts in the python installation directory) and add the path.

Configure environment variables (if configured, please skip. There are many ways, you can configure them anyway)

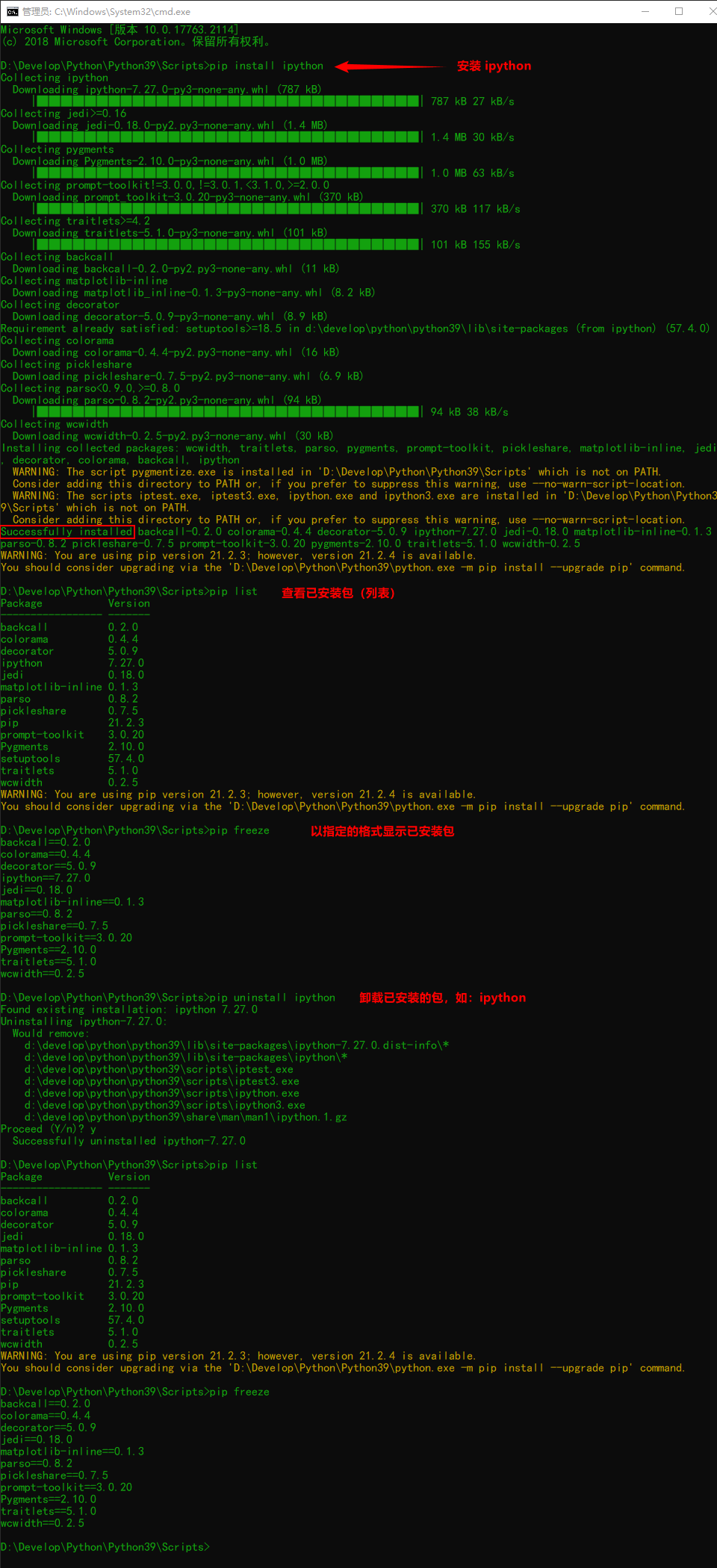

2.3 using pip to manage Python packages

pip install <Package name> # Install the specified package pip uninstall <Package name> # Delete the specified package pip list # Displays installed packages pip freeze # Displays the installed packages in the specified format

2.4 modify pip download source

- Running the pip install command will download the specified python package from the website. The default is from the https://files.pythonhosted.org/ Download from the website. This is a foreign website. In case of bad network conditions, the download may fail. We can modify the source of pip software through commands.

- Format: pip install package name-i address

- Example: pip install ipython -i https://pypi.mirrors.ustc.edu.cn/simple/ Is to download requests (a third-party web Framework Based on python) from the server of USTC

List of commonly used pip download sources in China:

- Alibaba cloud: http://mirrors.aliyun.com/pypi/simple/

- University of science and technology of China: https://pypi.mirrors.ustc.edu.cn/simple/

- Douban: http://pypi.douban.com/simple/

- Tsinghua University: https://pypi.tuna.tsinghua.edu.cn/simple/

- University of science and technology of China:[ http://pypi.mirrors.ustc.edu.cn/simple/ ](

2.4.1 temporary modification

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple/

2.4.2 permanent modification

Under Linux, modify ~ /. pip/pip.conf (or create one), and modify the index URL variable to the source address to be replaced:

[global] index-url = https://mirrors.aliyun.com/pypi/simple/ [install] trusted-host = mirrors.ustc.edu.cn

Under windows, create a PIP directory in the user directory, such as C:\Users\xxx\pip, and create a new file pip.ini, as follows:

3. Run Python program

3.1 terminal operation

-

Write code directly in the python interpreter

# Exit python environment exit() Ctrl+Z,Enter

-

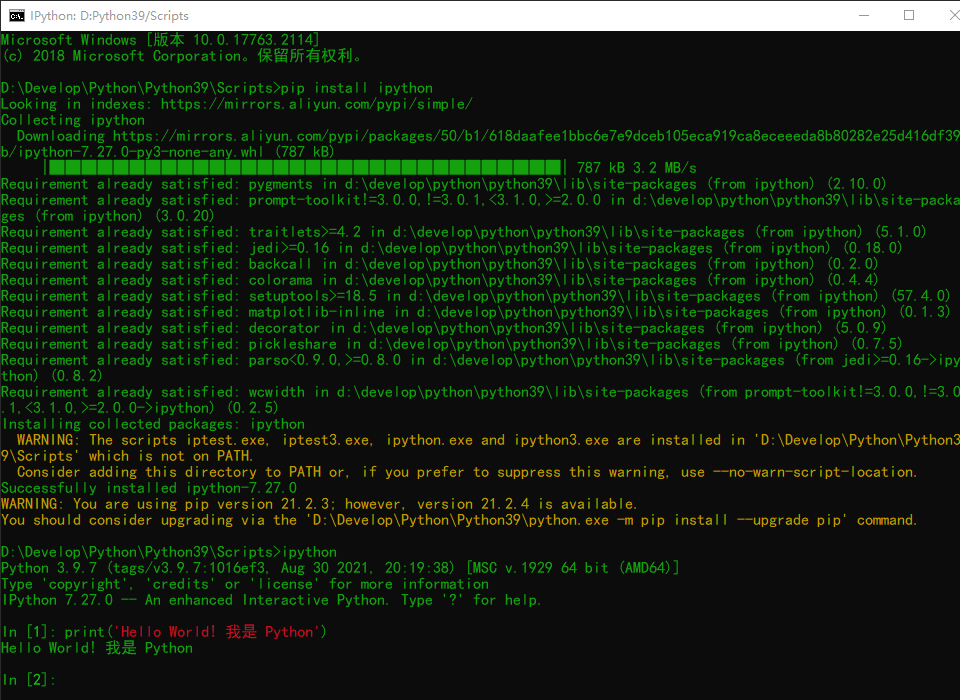

Write code using the ipython interpreter

Using the pip command, you can quickly install IPython

# Install ipython pip install ipython

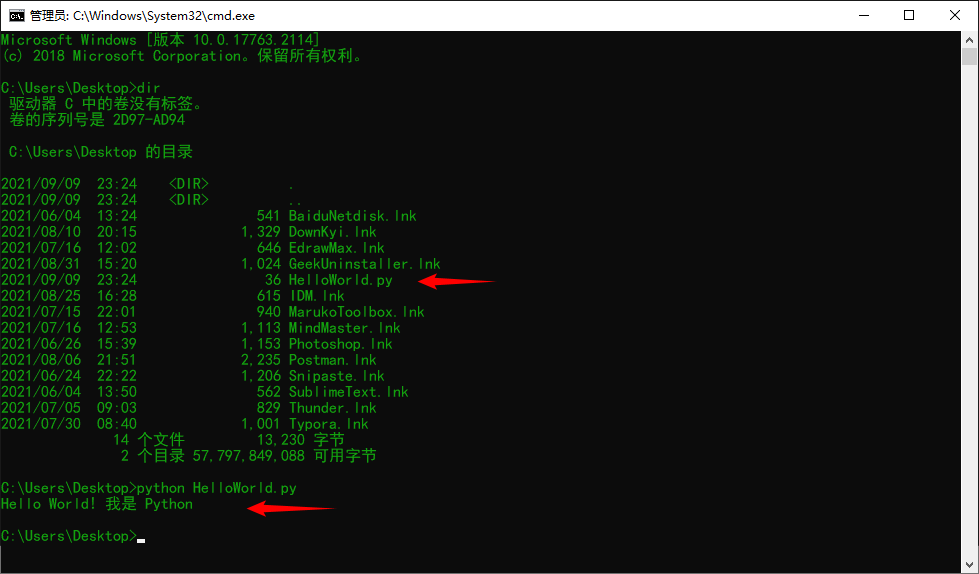

3.2 running Python files

Use the python directive to run a python file with the suffix. py

python File path\xxx.py

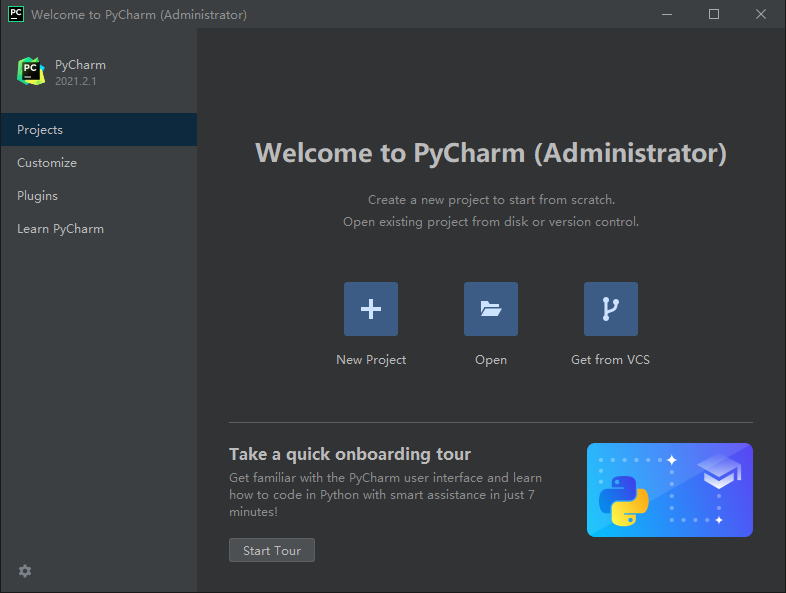

3.3 pychar (IDE integrated development environment)

Concept of IDE

IDE(Integrated Development Environment) is also called integrated development environment. To put it bluntly, it has a graphical interface software, which integrates the functions of editing code, compiling code, analyzing code, executing code and debugging code. In Python development, the commonly used IDE is pychart

pycharm is an IDE developed by JetBrains, a Czech company. It provides code analysis, graphical debugger, integrated tester, integrated version control system, etc. it is mainly used to write Python code.

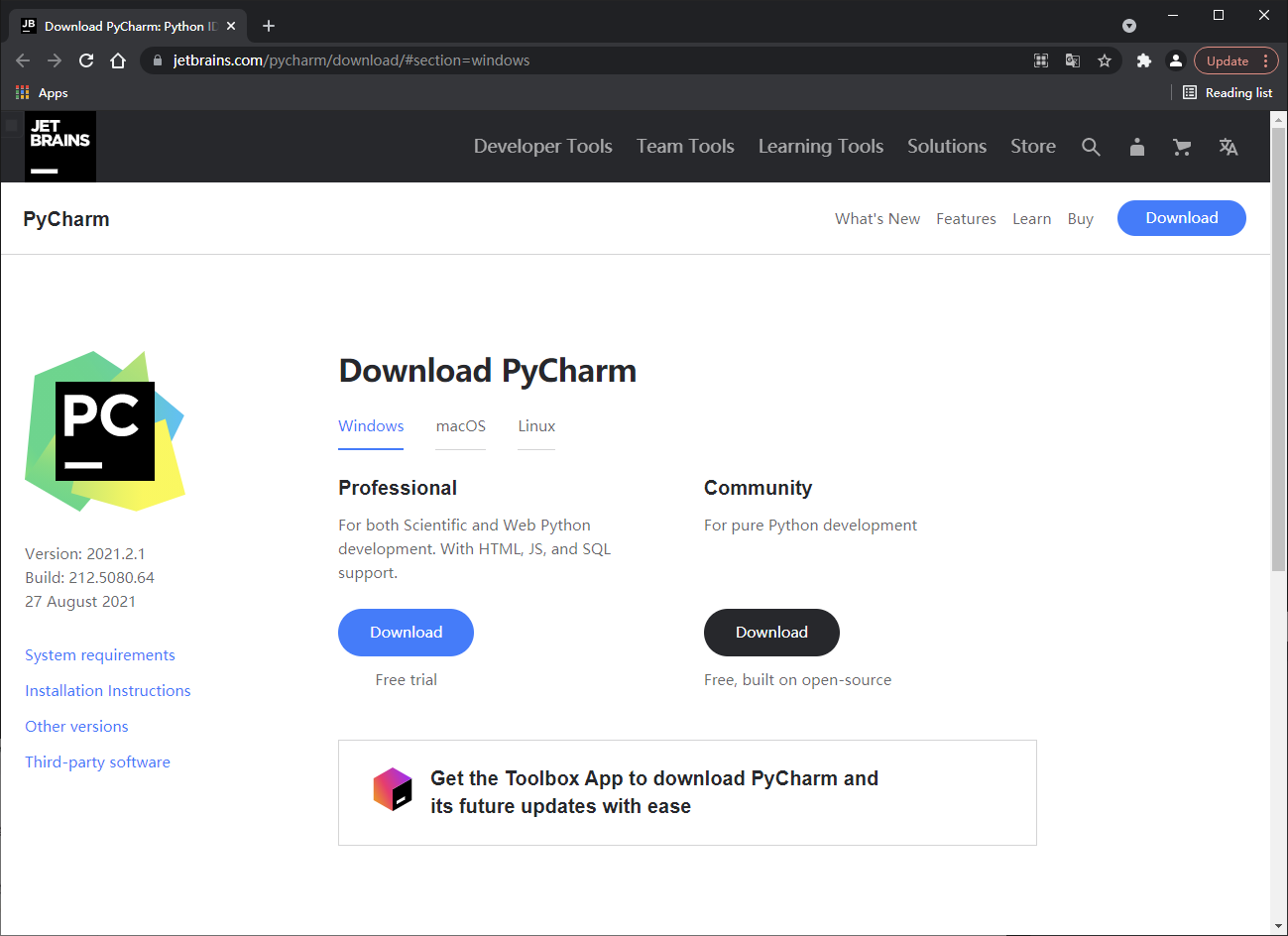

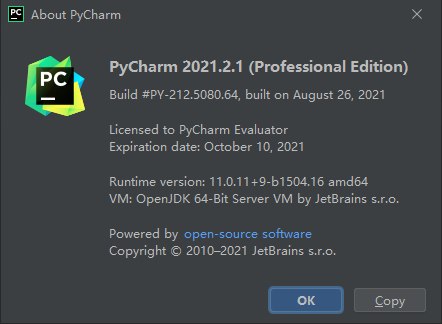

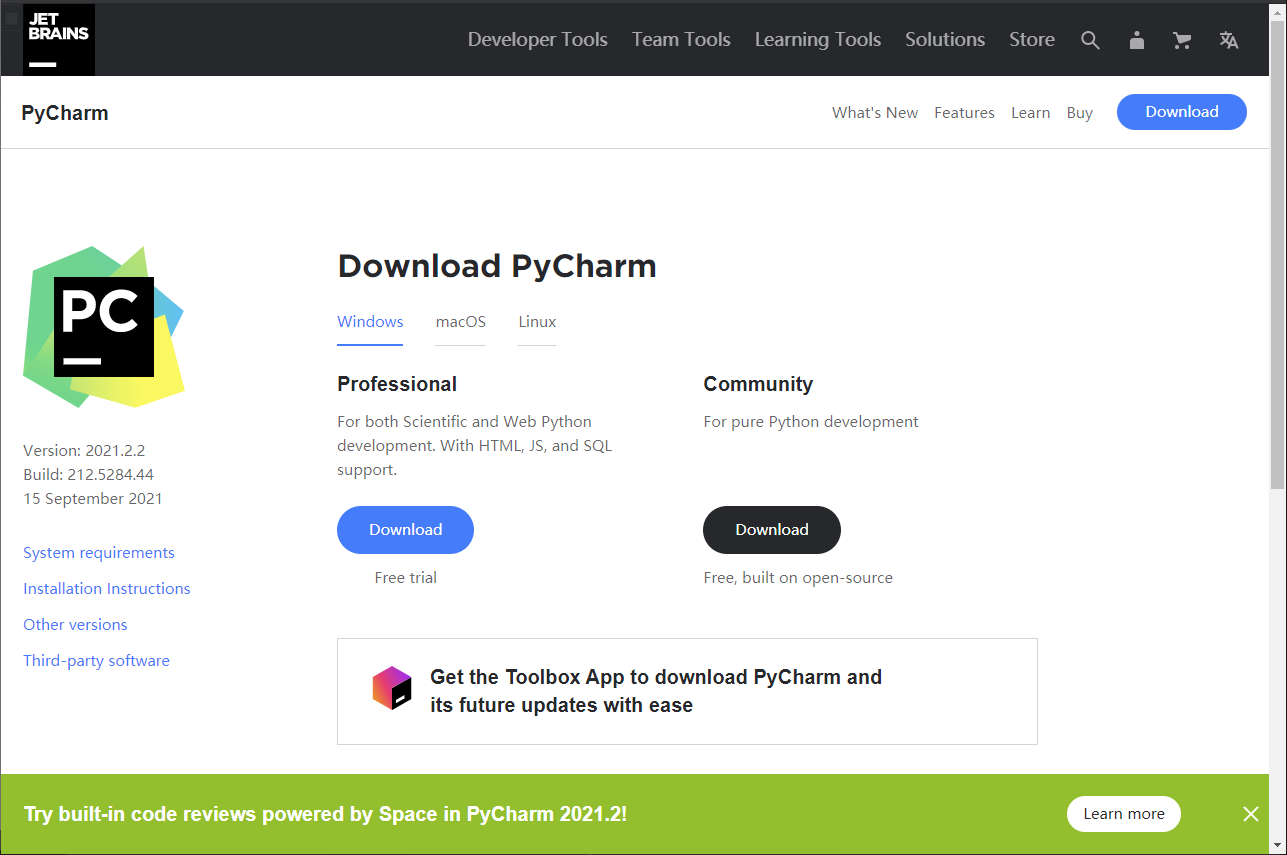

3.3.1 download pychar

Official website download address: http://www.jetbrains.com/pycharm/download

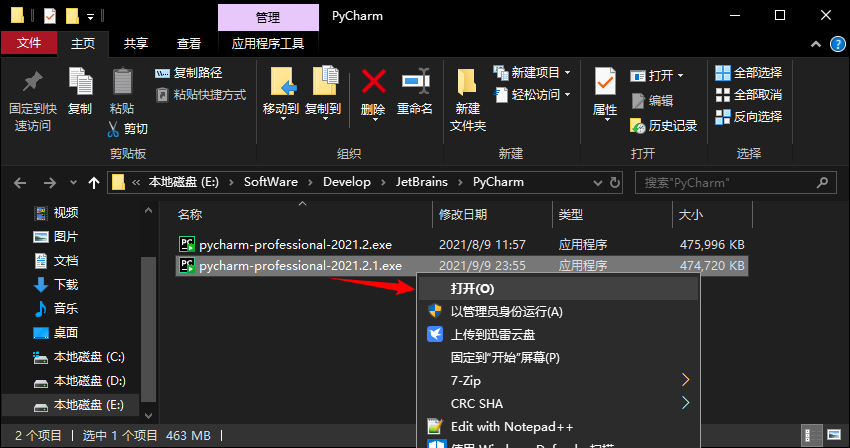

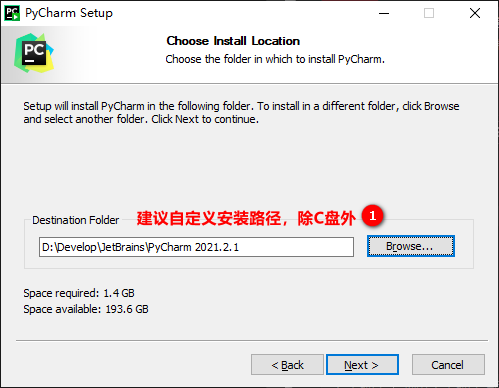

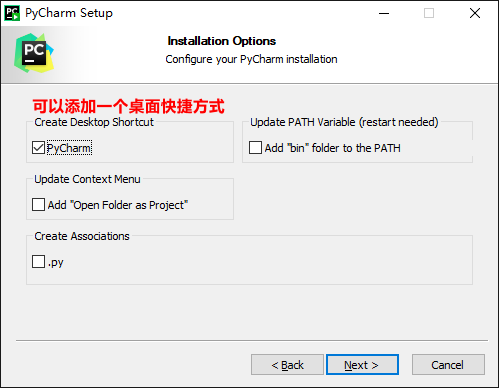

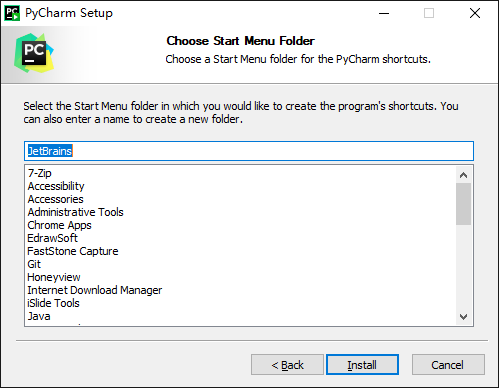

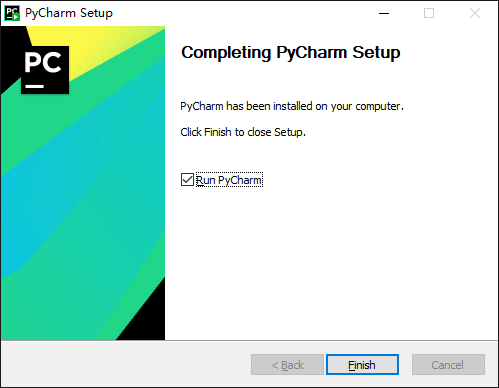

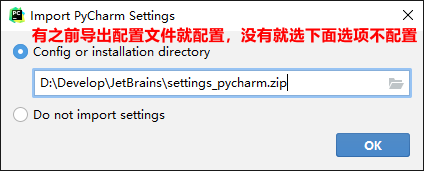

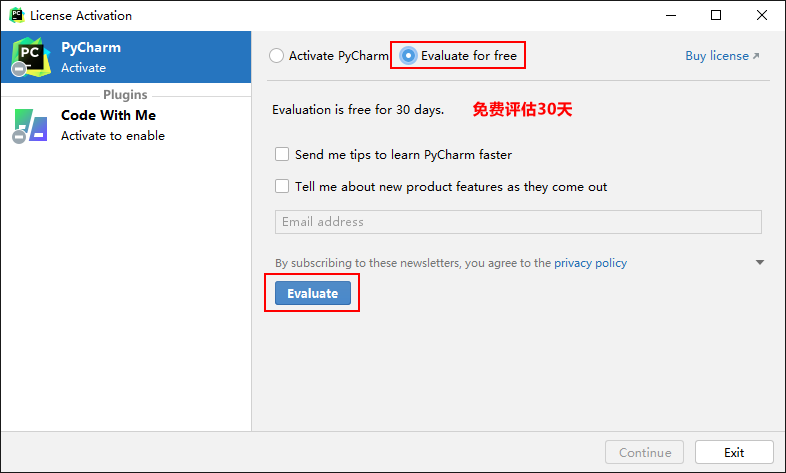

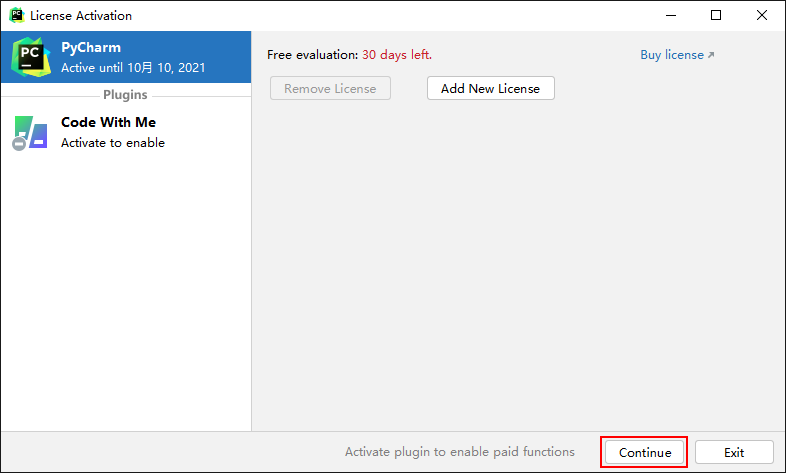

3.3.2 installation of pychar

One way fool installation

At present, it has been updated to 2021.2.2, which can be downloaded to Download from the official website Used, updated on the official website: September 15, 2021

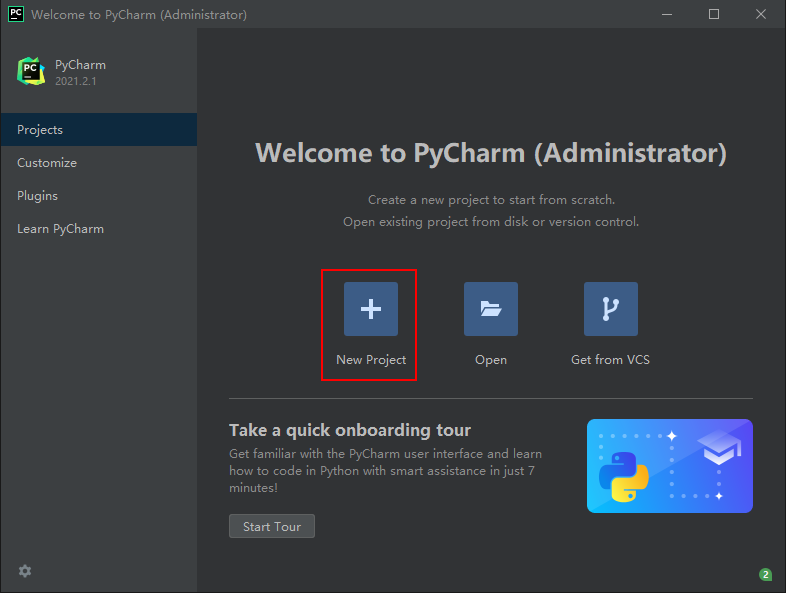

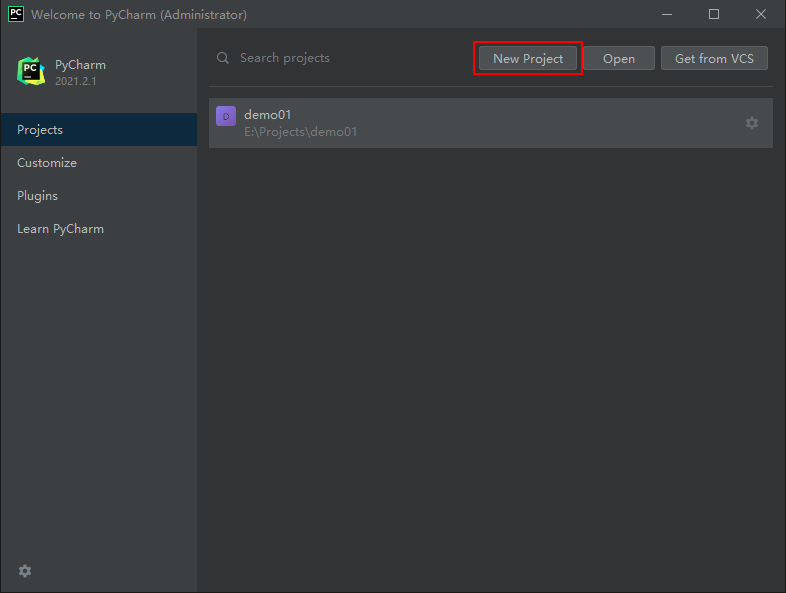

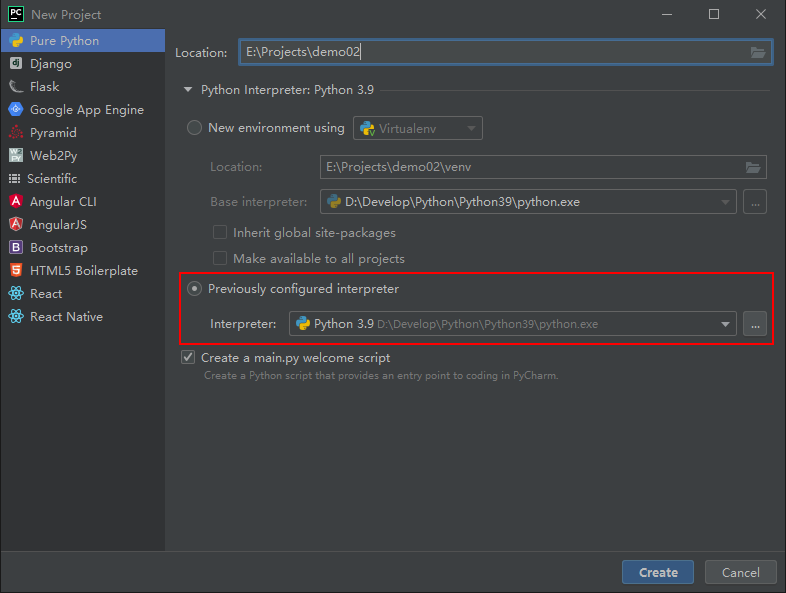

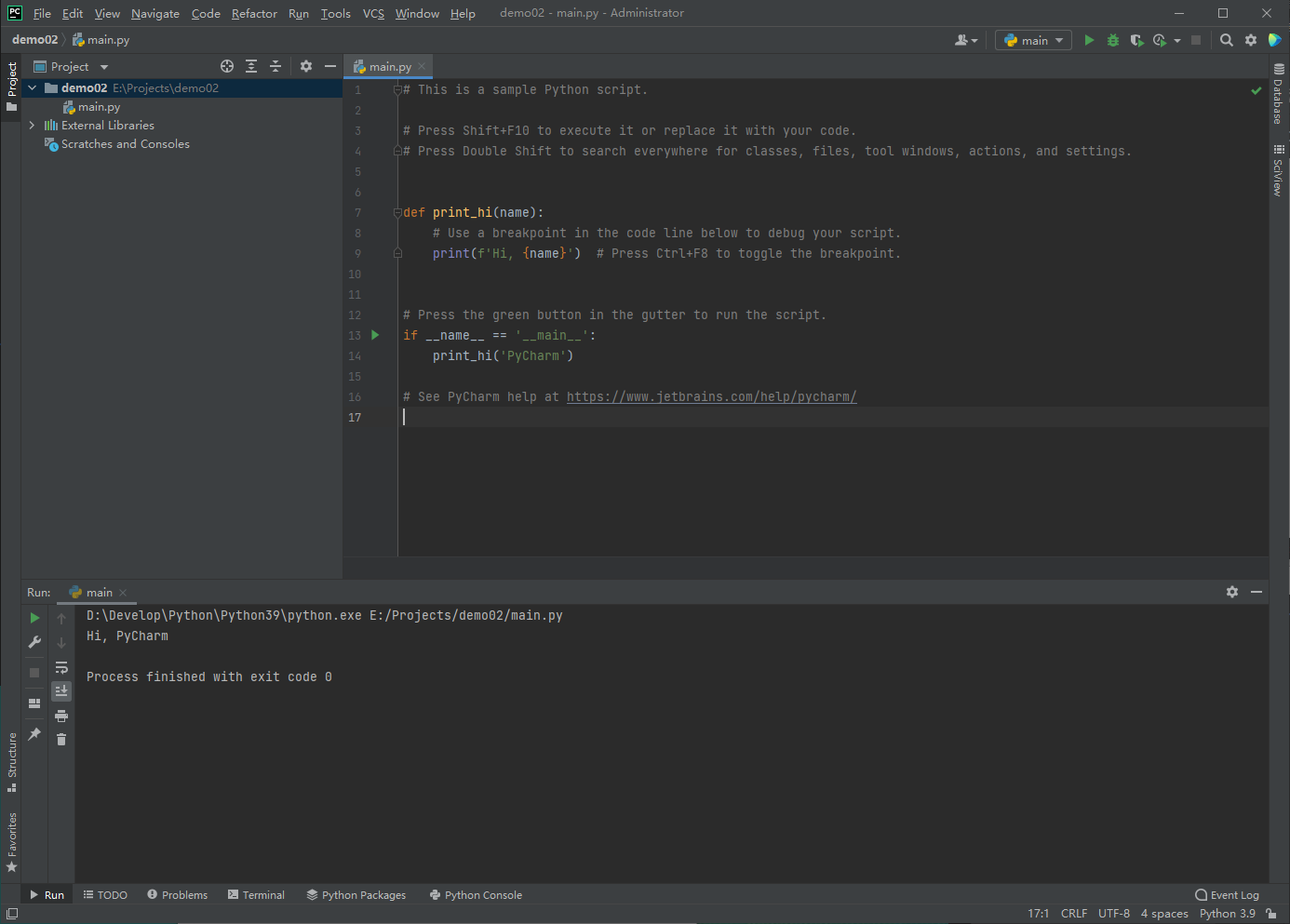

3.3.3 use pychar

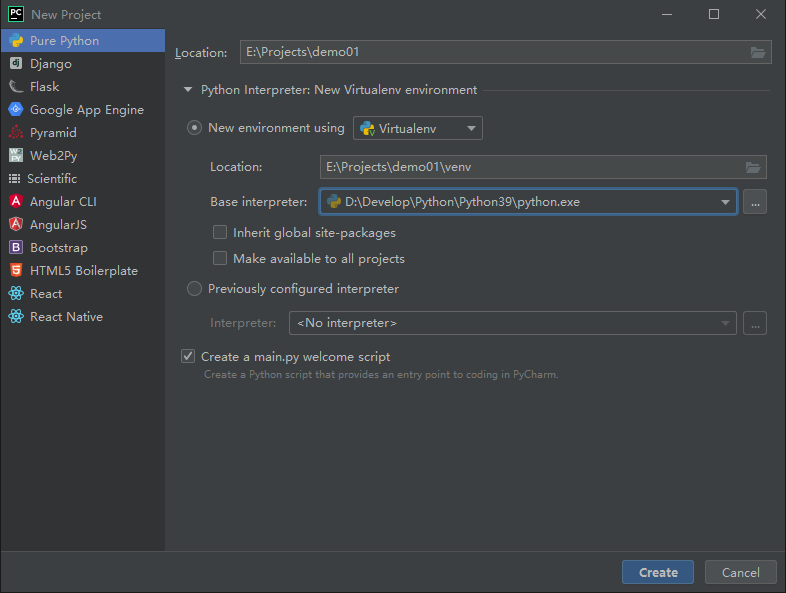

Create a new project

You can select an existing interpreter

Run test

4. Notes

Comments are for programmers. In order to make it easy for programmers to read the code, the interpreter will ignore comments. It is a good coding habit to annotate and explain the code appropriately in their familiar language.

4.1 classification of notes

Single line comments and multi line comments are supported in Python.

Single-Line Comments

Start with #.

multiline comment

Start with '' and end with '', multiline comment.

5. Variables and data types

5.1 definition of variables

Data that is reused and often needs to be modified can be defined as variables to improve programming efficiency.

Variables are variables that can be changed and can be modified at any time.

Programs are used to process data, and variables are used to store data.

5.2 variable syntax

Variable name = variable value. (the = function here is assignment.)

5.3 access to variables

After defining a variable, you can use the variable name to access the variable value.

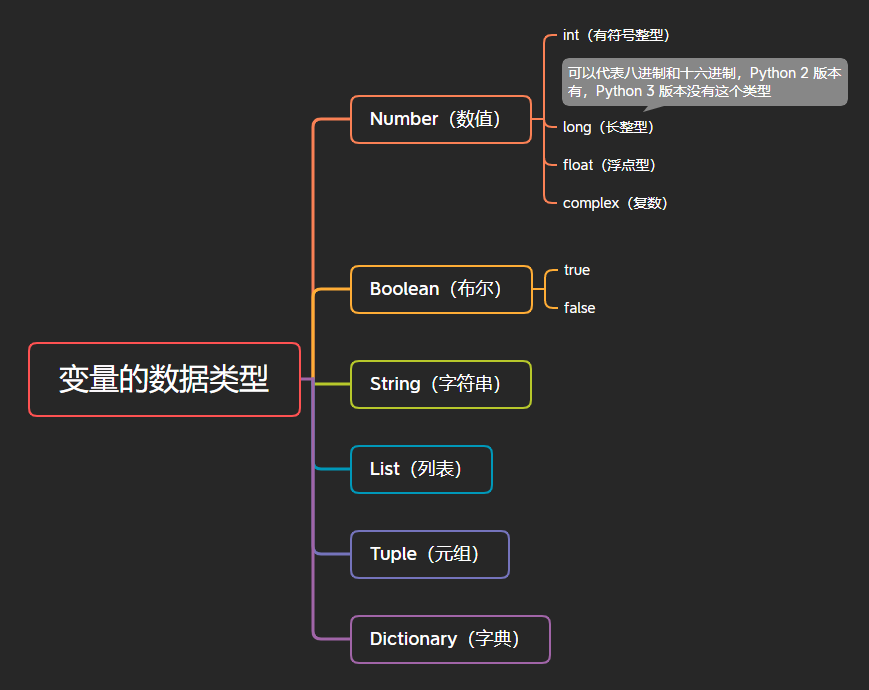

5.4 data types of variables

In Python, in order to meet different business needs, data is also divided into different types.

Variable has no type, data has type

5.5 viewing data types

In python, as long as a variable is defined and it has data, its type has been determined. It does not need the developer to actively explain its type, and the system will automatically identify it. That is to say, "when a variable has no type, data has type".

To view the data type stored by a variable, you can use type (variable name) to view the data type stored by the variable.

# Data type of variable

# numerical value

money = 100000

print(type(money)) # <class 'int'>

# Boolean

gender = True

sex = False

print(type(gender)) # <class 'bool'>

# character string

s = 'character string'

s1 = "String 1"

s2 = '"Cross nesting of single and double quotation marks"'

s3 = "'Cross nesting of single and double quotation marks'"

print(s2)

print(type(s)) # <class 'str'>

# list

name_list = ['Tomcat', 'Java']

print(type(name_list)) # <class 'list'>

# Tuple tuple

age_tuple = (16, 17, 18)

print(type(age_tuple)) # <class 'tuple'>

# dictionary variable name = {key:value,key:value,...}

person = {'name': 'admin', 'age': 18}

print(type(person)) # <class 'dict'>

6. Identifier and keyword

In computer programming language, identifier is the name used by users in programming. It is used to name variables, constants, functions, statement blocks, etc., so as to establish the relationship between name and use.

- An identifier consists of letters, underscores, and numbers, and cannot begin with a number.

- Strictly case sensitive.

- Keywords cannot be used.

6.1 naming conventions

Identifier naming shall be as the name implies (see the meaning of name).

Follow certain naming conventions.

-

Hump nomenclature is divided into large hump nomenclature and small hump nomenclature.

- lower camel case: the first word starts with a lowercase letter; The first letter of the second word is capitalized, for example: myName, aDog

- upper camel case: the first letter of each word is capitalized, such as FirstName and LastName

-

Another naming method is to use the underscore "" to connect all words, such as send_buf. Python's command rules follow the PEP8 standard

6.2 keywords

Keywords: some identifiers with special functions.

Keyword has been officially used by python, so developers are not allowed to define identifiers with the same name as keywords.

| False | None | True | and | as | assert | break | class | continue | def | del |

|---|---|---|---|---|---|---|---|---|---|---|

| elif | else | except | finally | for | from | global | if | import | in | is |

| lambda | nonlocal | not | or | pass | raise | return | try | while | with | yield |

7. Type conversion

| function | explain |

|---|---|

| int(x) | Convert x to an integer |

| float(x) | Convert x to a floating point number |

| str(x) | Convert object x to string |

| bool(x) | Convert object x to Boolean |

Convert to integer

print(int("10")) # 10 convert string to integer

print(int(10.98)) # 10 convert floating point numbers to integers

print(int(True)) # The Boolean value True is converted to an integer of 1

print(int(False)) # The Boolean value False of 0 is converted to an integer of 0

# The conversion will fail in the following two cases

'''

123.456 And 12 ab Strings contain illegal characters and cannot be converted into integers. An error will be reported

print(int("123.456"))

print(int("12ab"))

'''

Convert to floating point number

f1 = float("12.34")

print(f1) # 12.34

print(type(f1)) # float converts the string "12.34" to a floating point number 12.34

f2 = float(23)

print(f2) # 23.0

print(type(f2)) # float converts an integer to a floating point number

Convert to string

str1 = str(45) str2 = str(34.56) str3 = str(True) print(type(str1),type(str2),type(str3))

Convert to Boolean

print(bool('')) # False

print(bool("")) # False

print(bool(0)) # False

print(bool({})) # False

print(bool([])) # False

print(bool(())) # False

8. Operator

8.1 arithmetic operators

| Arithmetic operator | describe | Example (a = 10, B = 20) |

|---|---|---|

| + | plus | Adding two objects a + b outputs the result 30 |

| - | reduce | Get a negative number or subtract one number from another a - b output - 10 |

| * | ride | Multiply two numbers or return a string a * b repeated several times, and output the result 200 |

| / | except | b / a output result 2 |

| // | to be divisible by | Return the integer part of quotient 9 / / 2 output result 4, 9.0 / / 2.0 output result 4.0 |

| % | Surplus | Return the remainder of Division B% a output result 0 |

| ** | index | a**b is the 20th power of 10 |

| () | parentheses | Increase the operation priority, such as: (1 + 2) * 3 |

# Note: during mixed operation, the priority order is: * * higher than * /% / / higher than + -. In order to avoid ambiguity, it is recommended to use () to handle the operator priority. Moreover, when different types of numbers are mixed, integers will be converted into floating-point numbers for operation. >>> 10 + 5.5 * 2 21.0 >>> (10 + 5.5) * 2 31.0 # If two strings are added, the two strings will be directly spliced into a string. In [1]: str1 ='hello' In [2]: str2 = ' world' In [3]: str1+str2 Out[3]: 'hello world' # If you add numbers and strings, an error will be reported directly. In [1]: str1 = 'hello' In [2]: a = 2 In [3]: a+str1 --------------------------------------------------------------------------- TypeError Traceback (most recent call last) <ipython-input-3-993727a2aa69> in <module> ----> 1 a+str1 TypeError: unsupported operand type(s) for +: 'int' and 'str' # If you multiply a number and a string, the string will be repeated multiple times. In [4]: str1 = 'hello' In [5]: str1*10 Out[5]: 'hellohellohellohellohellohellohellohellohellohello'

8.2 assignment operators

| Assignment Operators | describe | Example |

|---|---|---|

| = | Assignment Operators | Assign the result on the right of the = sign to the variable on the left, such as num = 1 + 2 * 3, and the value of the result num is 7 |

| compound assignment operators | describe | Example |

|---|---|---|

| += | Additive assignment operator | c += a is equivalent to c = c + a |

| -= | Subtraction assignment operator | c -= a is equivalent to c = c - a |

| *= | Multiplication assignment operator | c *= a is equivalent to c = c * a |

| /= | Division assignment operator | c /= a is equivalent to c = c / a |

| //= | Integer division assignment operator | c //= a is equivalent to c = c // a |

| %= | Modulo assignment operator | C% = a is equivalent to C = C% a |

| **= | Power assignment operator | c **= a is equivalent to c = c ** a |

# Single variable assignment >>> num = 10 >>> num 10 # Assign values to multiple variables at the same time (connect with equal sign) >>> a = b = 4 >>> a 4 >>> b 4 >>> # Multiple variable assignments (separated by commas) >>> num1, f1, str1 = 100, 3.14, "hello" >>> num1 100 >>> f1 3.14 >>> str1 "hello" # Example:+= >>> a = 100 >>> a += 1 # Equivalent to performing a = a + 1 >>> a 101 # Example:*= >>> a = 100 >>> a *= 2 # Equivalent to performing a = a * 2 >>> a 200 # Example: * =, during operation, the expression on the right side of the symbol calculates the result first, and then operates with the value of the variable on the left >>> a = 100 >>> a *= 1 + 2 # Equivalent to executing a = a * (1+2) >>> a 300

8.3 comparison operators

<>: Python version 3.x does not support <>, use != Instead, python version 2 supports < >, python version 3 no longer supports < >, use= Replace.

For all comparison operators, 1 means True and 0 means False, which are equivalent to the special variables True and False respectively.

| Comparison operator | describe | Example (a=10,b=20) |

|---|---|---|

| == | Equal: whether the comparison objects are equal | (a == b) returns False |

| != | Not equal: compares whether two objects are not equal | (a! = b) returns True |

| > | Greater than: Returns whether x is greater than y | (a > b) returns False |

| >= | Greater than or equal to: Returns whether x is greater than or equal to y | (a > = b) returns False |

| < | Less than: Returns whether x is less than y | (a < b) returns True |

| <= | Less than or equal to: Returns whether x is less than or equal to y | (a < = b) return True |

8.4 logical operators

| Logical operator | expression | describe | Example |

|---|---|---|---|

| and | x and y | As long as one operand is False, the result is False; The result is True only if all operands are True If the front is False, it will not be executed later (short circuit and) | True and False – > the result is False True and True and True – > the result is true |

| or | x or y | As long as one operand is True, the result is True; The result is False only if all operands are False If the front is True, the back is not executed (short circuit or) | False or False or True – > the result is True False or False or False – > the result is false |

| not | not x | Boolean not - Returns False if x is True. If x is False, it returns True. | not True --> False |

9. Input and output

9.1 output

Normal output:

print('xxx')

Format output:

# %s: Representative string% d: representative value

age = 18

name = "admin"

print("My name is%s, Age is%d" % (name, age))

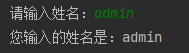

9.2 input

In Python, the method to get the data entered by the keyboard is to use the input function

- In the parentheses of input(), the prompt information is used to give a simple prompt to the user before obtaining the data

- After obtaining data from the keyboard, input() will be stored in the variable to the right of the equal sign

- input() treats any value entered by the user as a string

name = input("Please enter your name:")

print('The name you entered is:%s' % name)

10. Process control statement

10.1 if conditional judgment statement

# ① Single if statement

if Judgment conditions:

When the condition holds, execute the statement

# For example:

age = 16

if age >= 18:

print("Grow up")

# ② If else statement

if Judgment conditions:

If the condition holds, execute the statement

else:

If the condition does not hold, execute the statement

# For example:

height = input('Please enter your height(cm): \n')

if int(height) <= 150:

print('Free tickets for Science Park')

else:

print('I need to buy a ticket')

# ③ elif statement

if Judgment condition 1:

If condition 1 is true, execute the statement

elif Judgment condition 2:

If condition 2 is true, execute the statement

elif Judgment condition 3:

If condition 3 is true, execute the statement

elif Judgment conditions n:

condition n Yes, execute the statement

# For example:

score = 77

if score>=140:

print('The result is A')

elif score>=130:

print('The result is B')

elif score>=120:

print('The result is C')

elif score>=100:

print('The result is D')

elif score<90:

print('The result is E')

10.2 for cycle

# for loop

for Temporary variable in Iteratable objects such as lists, strings, etc:

Circulatory body

# For example:

name = 'admin'

for i in name:

print(i)

# range(x): [0,x)

for i in range(3):

print(i) # 0 1 2

# range(a,b): [a,b)

for i in range(2, 5):

print(i) # 2 3 4

# Range of range(a,b,c): [a, b], C is the step size, and within this range, it is increased according to the step value

for i in range(2, 10, 3):

print(i) # 2 5 8

11. Data type

11.1 string

Common methods / functions in strings

| Method / function | describe |

|---|---|

| len() | Gets the length of the string |

| find() | Finds whether the specified content exists in the string. If it exists, it returns the start position index value of the content for the first time in the string. If it does not exist, it returns - 1 |

| startswith()/endswith | Judge whether the string starts / ends with who |

| count() | Returns the number of occurrences of subStr in objectStr between start and end |

| replace() | Replace the content specified in the string. If count is specified, the replacement will not exceed count |

| split() | Cut the string by the content of the parameter |

| upper()/lower() | Convert case |

| strip() | Remove spaces around the string |

| join() | String splicing |

str1 = ' Administrators '

print(len(str1)) # 18

print(str1.find('d')) # 3

print(str1.startswith('a')) # False

print(str1.endswith('s')) # False

print(str1.count('s')) # 2

print(str1.replace('s', '', 1)) # Adminitrators

print(str1.split('n')) # [' Admi', 'istrators ']

print(str1.upper()) # ADMINISTRATORS

print(str1.lower()) # administrators

print(str1.strip()) # Administrators

print(str1.join('admin')) # a Administrators d Administrators m Administrators i Administrators n

11.2 list

Addition, deletion, modification and query of list

| Add element | describe |

|---|---|

| append() | Append a new element to the end of the list |

| insert() | Inserts a new element at the specified index location |

| extend() | Append all elements of a new list to the end of the list |

# Add element

name_list = ['zhang', 'cheng', 'wang', 'li', 'liu']

print(name_list) # ['zhang', 'cheng', 'wang', 'li', 'liu']

name_list.append('tang')

print(name_list) # ['zhang', 'cheng', 'wang', 'li', 'liu', 'tang']

name_list.insert(2, 'su')

print(name_list) # ['zhang', 'cheng', 'su', 'wang', 'li', 'liu', 'tang']

subName = ['lin', 'qing', 'xue']

name_list.extend(subName)

print(name_list) # ['zhang', 'cheng', 'su', 'wang', 'li', 'liu', 'tang', 'lin', 'qing', 'xue']'xue']

| Modify element | describe |

|---|---|

| list[index] = modifyValue | Modify a list element by specifying a subscript assignment |

# Modify element name_list[0] = 'zhao' print(name_list) # ['zhao', 'cheng', 'su', 'wang', 'li', 'liu', 'tang', 'lin', 'qing', 'xue']

| Find element | describe |

|---|---|

| in | Judge whether it exists. If it exists, the result is true; otherwise, it is false |

| not in | Judge whether it does not exist. If it does not exist, the result is true; otherwise, it is false |

# Find element

findName = 'li'

# In the list ['zhao ',' Cheng ',' Su ',' Wang ',' li ',' Liu ',' Tang ',' Lin ',' Qing ',' Xue '], the last name is found: li

if findName in nameList:

print('In list %s Last name found in:%s' % (nameList, findName))

else:

print('In list %s Last name is not found in:%s' % (nameList, findName))

findName1 = 'qian'

# In the list ['zhao ',' Cheng ',' Su ',' Wang ',' Li ',' Liu ',' Tang ',' Lin ',' Qing ',' Xue '], the last name is not found: qian

if findName1 not in nameList:

print('In list %s Last name is not found in:%s' % (nameList, findName1))

else:

print('In list %s Last name found in:%s' % (nameList, findName1))

| Delete element | describe |

|---|---|

| del | Delete by subscript |

| pop() | The last element is deleted by default |

| remove | Delete according to the value of the element |

# Delete element

print(nameList) # ['zhao', 'cheng', 'su', 'wang', 'li', 'liu', 'tang', 'lin', 'qing', 'xue']

# del nameList[1] # Deletes the element of the specified index

# print(nameList) # ['zhao', 'su', 'wang', 'li', 'liu', 'tang', 'lin', 'qing', 'xue']

# nameList.pop() # Default output last element

# print(nameList) # ['zhao', 'cheng', 'su', 'wang', 'li', 'liu', 'tang', 'lin', 'qing']

# nameList.pop(3) # Deletes the element of the specified index

# print(nameList) # ['zhao', 'cheng', 'su', 'li', 'liu', 'tang', 'lin', 'qing', 'xue']

nameList.remove('zhao') # Deletes the element with the specified element value

print(nameList) # ['cheng', 'su', 'wang', 'li', 'liu', 'tang', 'lin', 'qing', 'xue']

11.3 tuples

Python tuples are similar to lists, except that the element data of tuples cannot be modified, while the element data of lists can be modified. Tuples use parentheses and lists use brackets.

# tuple

nameTuple = ('zhang', 'cheng', 'wang', 'li', 'liu')

print(nameTuple) # ('zhang', 'cheng', 'wang', 'li', 'liu')

# nameTuple[3] = 'su' # Tuples cannot modify the value of elements inside

# print(nameTuple) # TypeError: 'tuple' object does not support item assignment

ageInt = (16) # If you do not write a comma, it is of type int

print(ageInt, type(ageInt)) # 16 <class 'int'>

ageTuple = (17,) # To define a tuple with only one element, you need to write a comma after the unique element

print(ageTuple, type(ageTuple)) # (17,) <class 'tuple'>

11.4 slicing

Slicing refers to the operation of intercepting part of the operated object. String, list and tuple all support slicing.

Slicing syntax

# The interval of slice (start index, end index), and the step size represents the slice interval. Slice is no different from interception. Note that it is a left closed and right open interval [Start index:End index:step] # Intercept the index from the start index to the end index in a specified step [Start index:End index] # The default step size is 1, which can simplify not writing

# section str_slice = 'Hello World!' # The slice follows the left closed right open interval, cutting the left without cutting the right print(str_slice[2:]) # llo World! print(str_slice[0:5]) # Hello print(str_slice[2:9:2]) # loWr print(str_slice[:8]) # Hello Wo

11.5 dictionary

Addition, deletion and modification of dictionary

Use key to find data and get() to get data

| View element | describe |

|---|---|

| dictionaryName['key'] | Specify the key to find the corresponding value value, access the nonexistent key, and an error is reported |

| dictionaryName.get('key') | Use its get('key ') method to obtain the value value corresponding to the key, access the nonexistent key and return None |

# View element

personDictionary = {'name': 'King', 'age': 16}

print(personDictionary) # {'name': 'King', 'age': 16}

print(personDictionary['name'], personDictionary['age']) # King 16

# print(personDictionary['noExistKey']) # KeyError: 'noExistKey', the key is specified in square brackets. If you access a nonexistent key, an error will be reported

print(personDictionary.get('name')) # King

print(personDictionary.get('noExistKey')) # None, access the nonexistent key in the form of get(), and return none without error

| Modify element | describe |

|---|---|

| dictionaryName['key'] = modifiedValue | Assign the new value to the value of the key to be modified |

# Modify element

petDictionary = {'name': 'glory', 'age': 17}

print(petDictionary) # {'name': 'Glory', 'age': 17}

petDictionary['age'] = 18

print(petDictionary) # {'name': 'Glory', 'age': 18}

| Add element | describe |

|---|---|

| dictionaryName['key'] = newValue | When using variable name ['key'] = data, this "key" does not exist in the dictionary, so this element is added |

# Add element

musicDictionary = {'name': 'Netease', 'age': 19}

print(musicDictionary) # {'name': 'Netease', 'age': 19}

# musicDictionary['music'] = 'xxx' # When the key does not exist, add an element

# print(musicDictionary) # {'name': 'Netease', 'age': 19, 'music': 'xxx'}

musicDictionary['age'] = '20' # Overwrite element when key exists

print(musicDictionary) # {'name': 'Netease', 'age': '20'}

| Delete element | describe |

|---|---|

| del | Deletes a specified element or the entire dictionary |

| clear() | Empty the dictionary and keep the dictionary object |

# Delete element

carDictionary = {'name': 'bmw', 'age': 20}

print(carDictionary) # {'name': 'BMW', 'age': 20}

# del carDictionary['age'] # Delete the element of the specified key

# print(carDictionary) # {'name': 'BMW'}

# del carDictionary # Delete entire dictionary

# print(carDictionary) # NameError: name 'xxx' is not defined. The dictionary has been deleted, so it will report undefined

carDictionary.clear() # Empty dictionary

print(carDictionary) # {}

| Traversal element | describe |

|---|---|

| for key in dict.keys(): print(key) | key to traverse the dictionary |

| for value in dict.values(): print(value) | Traverse the value of the dictionary |

| for key,value in dict.items(): print(key,value) | Traverse the key value (key value pair) of the dictionary |

| for item in dict.items(): print(item) | Traverse the element/item of the dictionary |

# Traversal element

airDictionary = {'name': 'aviation', 'age': 21}

# key traversing dictionary

# for key in airDictionary.keys():

# print(key) # name age

# Traverse the value of the dictionary

# for value in airDictionary.values():

# print(value) # Aviation 21

# Traverse the key value of the dictionary

# for key, value in airDictionary.items():

# print(key, value) # name aviation 21

# Traverse the item/element of the dictionary

for item in airDictionary.items():

print(item) # ('name ',' aviation ') ('age', 21)

12. Function

12.1 defining functions

format

# Define a function. After defining a function, the function will not be executed automatically. You need to call it

def Function name():

Method body

code

# Define function

def f1():

print('After the function is defined, the function will not be executed automatically. You need to call it')

12.2 calling functions

format

# Call function Function name()

code

# Call function f1()

12.3 function parameters

Formal parameter: defines the parameters in parentheses of the function, which are used to receive the parameters of the calling function.

Argument: the parameter in parentheses of the calling function, which is used to pass to the parameter defining the function.

12.3.1 position transfer (sequential transfer)

Transfer parameters according to the one-to-one correspondence of parameter position order

format

# Define functions with parameters

def Function name(arg1,arg2,...):

Method body

# Calling a function with parameters

Function name(arg1,arg2,...)

code

# Define functions with parameters

def sum_number(a, b):

c = a + b

print(c)

# Calling a function with parameters

sum_number(10, 6)

12.3.2 keyword parameter transfer (non sequential parameter transfer)

Pass the parameters in the specified parameter order

format

# Define functions with parameters

def Function name(arg1,arg2,...):

Method body

# Calling a function with parameters

Function name(arg2=xxx,arg1=xxx,...)

code

# Define functions with parameters

def sum_number(a, b):

c = a + b

print(c)

# Calling a function with parameters

sum_number(b=6, a=10)

12.4 function return value

Return value: the result returned to the caller after the function in the program completes one thing

format

# Define a function with a return value

def Function name():

return Return value

# Receive function with return value

recipient = Function name()

# Use results

print(recipient)

code

# Define a function with a return value

def pay_salary(salary, bonus):

return salary + bonus * 16

# Receive function with return value

receive_salary = pay_salary(1000000, 100000)

print(receive_salary)

13. Local and global variables

13.1 local variables

Local variable: a variable defined inside a function and on a function parameter.

Scope of local variable: used inside the function (not outside the function).

# local variable

def partial_variable(var1, var2):

var3 = var1 + var2

var4 = 15

return var3 + var4

local_variable = partial_variable(12, 13)

print(local_variable)

13.2 global variables

Global variables: variables defined outside the function.

Scope of global variables: both internal and external functions can be used

# global variable

globalVariable = 100

def global_variable(var1, var2):

return var1 + var2 + globalVariable

global_var = global_variable(10, 20)

print(global_var, globalVariable)

14. Documentation

14.1 opening and closing of documents

Open / create file: in python, you can use the open() function to open an existing file or create a new file open (file path, access mode)

Close file: close() function

Absolute path: absolute position, which completely describes the location of the target, and all directory hierarchical relationships are clear at a glance.

Relative path: relative position, the path starting from the folder (directory) where the current file is located.

Access mode: r, w, a

| Access mode | describe |

|---|---|

| r | Open the file as read-only. The pointer to the file is placed at the beginning of the file. If the file does not exist, an error is reported. This is the default mode. |

| w | Open a file for writing only. If the file already exists, overwrite it. If the file does not exist, create a new file. |

| a | Open a file for append. If the file already exists, the file pointer will be placed at the end of the file. That is, the new content will be written after the existing content. If the file does not exist, create a new file for writing. |

| r+ | Open a file for reading and writing. The file pointer will be placed at the beginning of the file. |

| w+ | Open a file for reading and writing. If the file already exists, overwrite it. If the file does not exist, create a new file. |

| a+ | Open a file for reading and writing. If the file already exists, the file pointer will be placed at the end of the file. The file is opened in append mode. If the file does not exist, create a new file for reading and writing. |

| rb | Open a file in binary format for read-only. The file pointer will be placed at the beginning of the file. |

| wb | Open a file in binary format for writing only. If the file already exists, overwrite it. If the file does not exist, create a new file. |

| ab | Open a file in binary format for append. If the file already exists, the file pointer will be placed at the end of the file. That is, the new content will be written after the existing content. If the file does not exist, create a new file for writing. |

| rb+ | Open a file in binary format for reading and writing. The file pointer will be placed at the beginning of the file. |

| wb+ | Open a file in binary format for reading and writing. If the file already exists, overwrite it. If the file does not exist, create a new file. |

| ab+ | Open a file in binary format for reading and writing. If the file already exists, the file pointer will be placed at the end of the file. If the file does not exist, create a new file for reading and writing. |

# Create a file open (file path, access mode)

testFile = open('file/test.txt', 'w', encoding='utf-8')

testFile.write('Write file contents')

# Close the document [suggestion]

testFile.close()

14.2 reading and writing of documents

14.2.1 write data

Write data: write() can write data to a file. If the file does not exist, create it; If it exists, empty the file first and then write data

# Write data

writeFile = open('file/write.txt', 'w', encoding='utf-8')

writeFile.write('Write file data\n' * 5)

writeFile.close()

14.2.2 data reading

Read data: read(num) can read data from the file. Num indicates the length of the data to be read from the file (in bytes). If num is not passed in, it means to read all the data in the file

# Read data

readFile = open('file/write.txt', 'r', encoding='utf-8')

# readFileCount = readFile.read() # By default, read one byte by one, and read all data of the file

# readFileCount1 = readFile.readline() # Read line by line, only one line of data of the file can be read

readFileCount2 = readFile.readlines() # Read by line, read all data of the file, and return all data in the form of a list. The elements of the list are data line by line

print(readFileCount2)

readFile.close()

14.3 file serialization and deserialization

Through file operation, we can write strings to a local file. However, if it is an object (such as list, dictionary, tuple, etc.), it cannot be written directly to a file. The object needs to be serialized before it can be written to the file.

Serialization: convert data (objects) in memory into byte sequences, so as to save them to files or network transmission. (object – > byte sequence)

Deserialization: restore the byte sequence to memory and rebuild the object. (byte sequence – > object)

The core of serialization and deserialization: the preservation and reconstruction of object state.

Python provides JSON modules to serialize and deserialize data.

JSON module

JSON (JavaScript object notation) is a lightweight data exchange standard. JSON is essentially a string.

Serialization using JSON

JSON provides dumps and dump methods to serialize an object.

Deserialization using JSON

Using the loads and load methods, you can deserialize a JSON string into a Python object.

14.3.1 serialization

dumps(): converts an object into a string. It does not have the function of writing data to a file.

import json

# Serialization ① dumps()

serializationFile = open('file/serialization1.txt', 'w', encoding='utf-8')

name_list = ['admin', 'administrator', 'administrators']

names = json.dumps(name_list)

serializationFile.write(names)

serializationFile.close()

dump(): specify a file object while converting the object into a string, and write the converted String to this file.

import json

# Serialization ② dump()

serializationFile = open('file/serialization2.txt', 'w', encoding='utf-8')

name_list = ['admin', 'administrator', 'administrators']

json.dump(name_list, serializationFile) # This is equivalent to the two steps of dumps() and write() combined

serializationFile.close()

14.3.2 deserialization

loads(): a string parameter is required to load a string into a Python object.

import json

# Deserialization ① loads()

serializationFile = open('file/serialization1.txt', 'r', encoding='utf-8')

serializationFileContent = serializationFile.read()

deserialization = json.loads(serializationFileContent)

print(deserialization, type(serializationFileContent), type(deserialization))

serializationFile.close()

load(): you can pass in a file object to load the data in a file object into a Python object.

import json

# Deserialization ② load()

serializationFile = open('file/serialization2.txt', 'r', encoding='utf-8')

deserialization = json.load(serializationFile) # It is equivalent to two steps of combined loads() and read()

print(deserialization, type(deserialization))

serializationFile.close()

15. Abnormal

During the running process of the program, our program cannot continue to run due to non-standard coding or other objective reasons. At this time, the program will be abnormal. If we do not handle exceptions, the program may be interrupted directly due to exceptions. In order to ensure the robustness of the program, the concept of exception handling is proposed in the program design.

15.1 try... except statement

The try... except statement can handle exceptions that may occur during code running.

Syntax structure:

try: A block of code where an exception may occur except Type of exception: Processing statement after exception

# Example:

try:

fileNotFound = open('file/fileNotFound.txt', 'r', encoding='utf-8')

fileNotFound.read()

except FileNotFoundError:

print('The system is being upgraded. Please try again later...')

2,Urllib

1. Internet crawler

1.1 introduction to reptiles

If the Internet is compared to a large spider network, the data on a computer is a prey on the spider network, and the crawler program is a small spider that grabs the data you want along the spider network.

Explanation 1: through a program, according to Url (e.g.: http://www.taobao.com )Crawl the web page to get useful information.

Explanation 2: use the program to simulate the browser to send a request to the server and obtain the response information

1.2 reptile core

- Crawl web page: crawl the entire web page, including all the contents in the web page

- Analyze data: analyze the data you get in the web page

- Difficulty: game between reptile and anti reptile

1.3 use of reptiles

-

Data analysis / manual data set

-

Social software cold start

-

Public opinion monitoring

-

Competitor monitoring, etc

-

List item

1.4 classification of reptiles

1.4.1 universal crawler

Example: Baidu, 360, google, sougou and other search engines - Bole Online

Functions: accessing web pages - > fetching data - > data storage - > Data Processing - > providing retrieval services

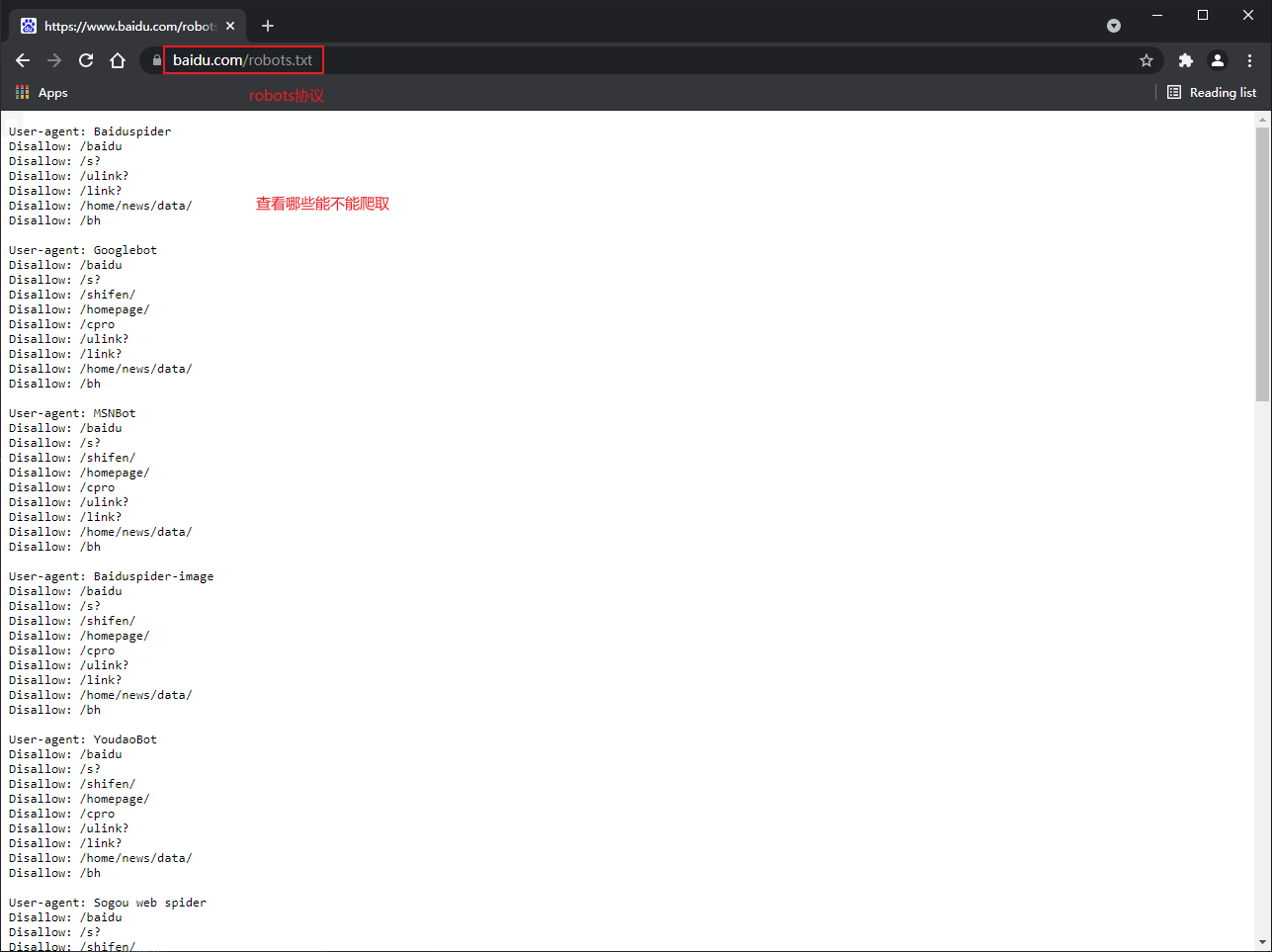

Robots protocol: a conventional protocol. Add robots.txt file to specify what content of this website can not be captured and can not play a restrictive role. Crawlers written by themselves do not need to abide by it.

Website ranking (SEO):

- Rank according to pagerank algorithm value (refer to website traffic, click through rate and other indicators)

- Competitive ranking (whoever gives more money will rank first)

Disadvantages:

- Most of the captured data is useless

- Unable to accurately obtain data according to the needs of users

1.4.2 focused reptiles

Function: implement the crawler program and grab the required data according to the requirements

Design idea:

- Determine the Url to crawl (how to get the Url)

- Simulate the browser accessing the url through http protocol to obtain the html code returned by the server (how to access it)

- Parse html string (extract the required data according to certain rules) (how to parse)

1.5 anti climbing means

1.5.1 User-Agent

The Chinese name of User Agent is User Agent, or UA for short. It is a special string header that enables the server to identify the operating system and version, CPU type, browser and version, browser rendering engine, browser language, browser plug-in, etc.

1.5.2 proxy IP

-

Xici agency

-

Fast agent

Anonymity, high anonymity, transparent proxy and the differences between them

-

Using a transparent proxy, the other server can know that you have used the proxy and your real IP.

-

Using anonymous proxy, the other server can know that you have used the proxy, but does not know your real IP.

-

Using high anonymous proxy, the other server does not know that you use the proxy, let alone your real IP.

1.5.3 verification code access

- Coding platform

- Cloud coding platform

- super 🦅

1.5.4 dynamically loading web pages

The website returns other js data, not the real data of the web page

selenium drives real browsers to send requests

1.5.5 data encryption

Analyze js code

2. Use of urllib Library

urllib.request.urlopen(): simulate the browser to send a request to the server

1 type and 6 methods

- Response: the data returned by the server. The data type of response is HttpResponse

- decode: byte – > string

- Encoding: string – > bytes

- read(): read binary in byte form, red (Num): return the first num bytes

- readline(): read one line

- readlines(): read line by line until the end

- getcode(): get the status code

- geturl(): get url

- getheaders(): get headers

import urllib.request url = "http://www.baidu.com" response = urllib.request.urlopen(url) # 1 type and 6 methods # ① The data type of response is HttpResponse # print(type(response)) # <class 'http.client.HTTPResponse'> # ① read(): read byte by byte # content = response.read() # Low efficiency # content = response.read(10) # Returns the first 10 bytes # print(content) # ② readline(): read one line # content = response.readline() # Read one line # print(content) # ③ readlines(): read line by line until the end # content = response.readlines() # Read line by line until the end # print(content) # ④ getcode(): get the status code # statusCode = response.getcode() # Return to 200, that is OK! # print(statusCode) # ⑤ geturl(): returns the url address of the access # urlAddress = response.geturl() # print(urlAddress) # ⑥ getheaders(): get request headers getHeaders = response.getheaders() print(getHeaders)

urllib.request.urlretrieve(): copy (download) the network object represented by the URL to the local file

- Request web page

- Request picture

- Request video

import urllib.request url_page = 'http://www.baidu.com' # url: download path, filename: file name # Request web page # urllib.request.urlretrieve(url_page, 'image.baidu.html') # Download pictures # url_img = 'https://img2.baidu.com/it/u=3331290673,4293610403&fm=26&fmt=auto&gp=0.jpg' # urllib.request.urlretrieve(url_img, '0.jpg') # Download Video url_video = 'https://vd4.bdstatic.com/mda-kev64a3rn81zh6nu/hd/mda-kev64a3rn81zh6nu.mp4?v_from_s=hkapp-haokan-hna&auth_key=1631450481-0-0-e86278b3dbe23f6324c929891a9d47cc&bcevod_channel=searchbox_feed&pd=1&pt=3&abtest=3000185_2' urllib.request.urlretrieve(url_video, 'Frozen.mp4')

3. Customization of request object

Purpose: in order to solve the first method of anti crawling, if the crawling request information is incomplete, the customization of the request object is used

Introduction to UA: the Chinese name of User Agent is User Agent, or UA for short. It is a special string header that enables the server to identify the operating system and version, CPU type, browser and version used by the customer. Browser kernel, browser rendering engine, browser language, browser plug-in, etc.

Syntax: request = urllib.request.Request()

import urllib.request

url = 'https://www.baidu.com'

# Composition of url

# For example: https://www.baidu.com/s?ie=utf -8&f=8&rsv_ BP = 1 & TN = Baidu & WD = rat Laibao & RSV_ pq=a1dbf18f0000558d&rsv_ t=076ebVS%2BfOJbuqzKTEC4L%2FtOXZ5BxqzbgdFwHDGl8vEpGmeM5%2BKSr6Owpjk&rqlang=cn&rsv_ enter=1&rsv_ dl=tb&rsv_ sug3=13&rsv_ sug1=11&rsv_ sug7=100&rsv_ sug2=0&rsv_ btype=t&inputT=3568&rsv_ sug4=3568

# Protocol: http/https (https is more secure with SSL)

# Host (domain name): www.baidu.com

# Port number (default): http (80), https (443), mysql (3306), oracle (1521), redis (6379), mongodb (27017)

# Path: s

# Parameters: ie=utf-8, f=8, wd = rat Laibao

# Anchor point:#

# Problem: the requested information is incomplete -- UA anti crawl

# Solution -- disguise the complete request header information

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36'}

# Because the dictionary cannot be stored in urlopen(), headers cannot be passed in

# Customization of request object

# Check the Request() source code: because of the order of the parameters passed, you can't write the url and headers directly. There is a data parameter in the middle, so you need to pass the parameters by keyword

request = urllib.request.Request(url=url, headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

print(content)

Evolution of coding set

Because the computer was invented by Americans, only 127 characters were encoded into the computer at first, that is, upper and lower case English letters, numbers and some symbols. This coding table is called ASCII coding. For example, the coding of capital letter A is 65 and the coding of lower case letter z is 122. However, it is obvious that one byte is not enough to deal with Chinese, at least two bytes are required, and it can not conflict with ASCII coding. Therefore, China has formulated GB2312 coding to encode Chinese.

Imagine that there are hundreds of languages all over the world. Japan compiles Japanese into English_ In JIS, South Korea compiles Korean into EUC Kr. If countries have national standards, there will inevitably be conflicts. As a result, there will be garbled codes in multilingual mixed texts.

Therefore, Unicode came into being. Unicode unifies all languages into one set of codes, so that there will be no more random code problems. The Unicode standard is also evolving, but the most commonly used is to represent a character with two bytes (four bytes are required if very remote characters are used). Modern operating systems and most programming languages support Unicode directly.

4. Encoding and decoding

4.1 get request method

4.1.1 urllib.parse.quote()

import urllib.request

# url to visit

url = 'https://www.baidu.com/s?ie=UTF-8&wd='

# The customization of request object is the first method to solve anti crawling

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36'}

# Parsing Chinese characters into unicode encoding format depends on urllib.parse

words = urllib.parse.quote('Frozen')

# The url should be spliced

url = url + words

# Customization of request object

request = urllib.request.Request(url=url, headers=headers)

# Impersonate the browser to send a request to the server

response = urllib.request.urlopen(request)

# Get the content of the response

content = response.read().decode('utf-8')

# print data

print(content)

4.1.2 urllib.parse.urlencode()

import urllib.parse

import urllib.request

# urlencode() application scenario: when the url has multiple parameters

# url source code: https://www.baidu.com/s?ie=UTF -8&wd=%E5%86%B0%E9%9B%AA%E5%A5%87%E7%BC%98&type=%E7%94%B5%E5%BD%B1

# url decoding: https://www.baidu.com/s?ie=UTF -8 & WD = snow and Ice & type = movie

base_url = 'https://www.baidu.com/s?ie=UTF-8&'

data = {'wd': 'Frozen', 'type': 'film'}

urlEncode = urllib.parse.urlencode(data)

url = base_url + urlEncode

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36'}

request = urllib.request.Request(url=url, headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

print(content)

4.2 post request mode

1.post request Baidu translation

import json

import urllib.parse

import urllib.request

# post request Baidu translation

# Browser general -- > request URL:

# url = 'https://translate.google.cn/_/TranslateWebserverUi/data/batchexecute?rpcids=MkEWBc&f.sid=2416072318234288891&bl=boq_translate-webserver_20210908.10_p0&hl=zh-CN&soc-app=1&soc-platform=1&soc-device=1&_reqid=981856&rt=c'

url = 'https://fanyi.baidu.com/v2transapi?from=en&to=zh'

# Browser request headers -- > User Agent:

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36'}

# Browser From Data (data in the form of key value. Note: if there are characters such as: \ in the browser data, you need to escape when copying to pychar, and remember to add one more \)

# Example: browser From Data: f.req: [[["mkewbc", [[\ "spider \", \ "auto \", \ "zh cn \", true], [null]], null, "generic"]]]

# data = {'f.req': '[[["MkEWBc","[[\\"Spider\\",\\"auto\\",\\"zh-CN\\",true],[null]]",null,"generic"]]]'}

data = {'query': 'Spider'}

# The parameters of the post request must be decoded. data = urllib.parse.urlencode(data)

# After encoding, you must call the encode() method data = urllib.parse.urlencode(data).encode('utf-8 ')

data = urllib.parse.urlencode(data).encode('utf-8')

# The parameter is placed in the method customized by the request object. request = urllib.request.Request(url=url, data=data, headers=headers)

request = urllib.request.Request(url=url, data=data, headers=headers)

# Impersonate the browser to send a request to the server

response = urllib.request.urlopen(request)

# Get response data

content = response.read().decode('utf-8')

# # print data

print(content)

# String -- > JSON object

jsonObjContent = json.loads(content)

print(jsonObjContent)

Summary:

Difference between post and get

- The parameters of the get request method must be encoded. The parameters are spliced behind the url. After encoding, you do not need to call the encode method

- The parameters of the post request method must be encoded. The parameters are placed in the method customized by the request object. After encoding, you need to call the encode method

2.post requests Baidu to translate in detail and anti crawl – > cookie (plays a decisive role) to solve

import json

import urllib.parse

import urllib.request

# post request Baidu translation's anti crawling Cookie (plays a decisive role)

# Browser general -- > request URL:

# url = 'https://translate.google.cn/_/TranslateWebserverUi/data/batchexecute?rpcids=MkEWBc&f.sid=2416072318234288891&bl=boq_translate-webserver_20210908.10_p0&hl=zh-CN&soc-app=1&soc-platform=1&soc-device=1&_reqid=981856&rt=c'

url = 'https://fanyi.baidu.com/v2transapi?from=en&to=zh'

# Browser request headers -- > User Agent:

# headers = {

# 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36'

# }

headers = {

# 'Accept': '*/*',

# 'Accept-Encoding': 'gzip, deflate, br', # Be sure to annotate this sentence

# 'Accept-Language': 'en-GB,en-US;q=0.9,en;q=0.8,zh-CN;q=0.7,zh;q=0.6',

# 'Connection': 'keep-alive',

# 'Content-Length': '137',

# 'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',

'Cookie': 'BIDUPSID=7881F5C444234A44A8A135144C7277E2; PSTM=1631452046; BAIDUID=7881F5C444234A44B6D4E05D781C0A89:FG=1; H_PS_PSSID=34442_34144_34552_33848_34524_34584_34092_34576_26350_34427_34557; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; delPer=0; PSINO=6; BAIDUID_BFESS=7881F5C444234A44B6D4E05D781C0A89:FG=1; BA_HECTOR=0k0h2h8g040l8hag8k1gjs8h50q; BCLID=7244537998497862517; BDSFRCVID=XrFOJexroG0YyvRHhm4AMZOfDuweG7bTDYLEOwXPsp3LGJLVJeC6EG0Pts1-dEu-EHtdogKK3gOTH4DF_2uxOjjg8UtVJeC6EG0Ptf8g0M5; H_BDCLCKID_SF=tR3aQ5rtKRTffjrnhPF3KJ0fXP6-hnjy3bRkX4nvWnnVMhjEWxntQbLWbttf5q3RymJJ2-39LPO2hpRjyxv4y4Ldj4oxJpOJ-bCL0p5aHl51fbbvbURvDP-g3-AJ0U5dtjTO2bc_5KnlfMQ_bf--QfbQ0hOhqP-jBRIEoCvt-5rDHJTg5DTjhPrMWh5lWMT-MTryKKJwM4QCObnzjMQYWx4EQhofKx-fKHnRhlRNB-3iV-OxDUvnyxAZyxomtfQxtNRJQKDE5p5hKq5S5-OobUPUDUJ9LUkJ3gcdot5yBbc8eIna5hjkbfJBQttjQn3hfIkj2CKLK-oj-D8RDjA23e; BCLID_BFESS=7244537998497862517; BDSFRCVID_BFESS=XrFOJexroG0YyvRHhm4AMZOfDuweG7bTDYLEOwXPsp3LGJLVJeC6EG0Pts1-dEu-EHtdogKK3gOTH4DF_2uxOjjg8UtVJeC6EG0Ptf8g0M5; H_BDCLCKID_SF_BFESS=tR3aQ5rtKRTffjrnhPF3KJ0fXP6-hnjy3bRkX4nvWnnVMhjEWxntQbLWbttf5q3RymJJ2-39LPO2hpRjyxv4y4Ldj4oxJpOJ-bCL0p5aHl51fbbvbURvDP-g3-AJ0U5dtjTO2bc_5KnlfMQ_bf--QfbQ0hOhqP-jBRIEoCvt-5rDHJTg5DTjhPrMWh5lWMT-MTryKKJwM4QCObnzjMQYWx4EQhofKx-fKHnRhlRNB-3iV-OxDUvnyxAZyxomtfQxtNRJQKDE5p5hKq5S5-OobUPUDUJ9LUkJ3gcdot5yBbc8eIna5hjkbfJBQttjQn3hfIkj2CKLK-oj-D8RDjA23e; Hm_lvt_64ecd82404c51e03dc91cb9e8c025574=1631461937; Hm_lpvt_64ecd82404c51e03dc91cb9e8c025574=1631461937; __yjs_duid=1_9333541ca3b081bff2fb5ea3b217edc41631461934213; REALTIME_TRANS_SWITCH=1; FANYI_WORD_SWITCH=1; HISTORY_SWITCH=1; SOUND_SPD_SWITCH=1; SOUND_PREFER_SWITCH=1; ab_sr=1.0.1_MTZhNGI2ZDNjYmUzYTFjZjMxMmI4YWM3OGU1MTM1Nzc4M2JiN2M0OTE3ZDcyNmEwMzY0MTA3MzI2NzZjMDBjNzczMzExMmQyZGMyOGQ5MjIyYjAyYWIzNjMxMmYzMGVmNWNmNTFkODc5ZTVmZTQzZWFhOGM5YjdmNGVhMzE2OGI3ZDFkMjhjNzAwMDgxMWVjMmYzMmE5ZjAzOTA0NWI4Nw==; __yjs_st=2_ZTZkODNlNThkYTFhZDgwNGQxYjE1Y2VmZTFkMzYxYzIyMzQ3Mjk4ZGM0NWViM2Y0ZDRkMjFiODkxNjQxZDhmMWNjMDA0OTQ0N2I2N2U4ZDdkZDdjNzAxZTZhYWNkYjI5NWIwMWVkMWZlYTMxNzA2ZjI0NjU3MDhjNjU5NDgzYjNjNDRiMDA1ODQ4YTg4NTg0MGJmY2VmNTE0YmEzN2FiMGVkZjUxZDMzY2U3YjIzM2RmNTQ4YThjMzU4NzMxOTBkZmJiMDgzZTIxYjdlMzIxY2M3MjhiNTQ4MGI2ZTI0ODRhMDI4NWI3ZDhhOGFkN2RhNjk2NjI3YzdkN2M5ZmQyN183XzI5ODZkODEz',

# 'Host': 'fanyi.baidu.com',

# 'Origin': 'https://fanyi.baidu.com',

# 'Referer': 'https://fanyi.baidu.com/translate?aldtype=16047&query=Spider&keyfrom=baidu&smartresult=dict&lang=auto2zh',

# 'sec-ch-ua': '"Google Chrome";v="93", " Not;A Brand";v="99", "Chromium";v="93"',

# 'sec-ch-ua-mobile': '?0',

# 'sec-ch-ua-platform': '"Windows"',

# 'Sec-Fetch-Dest': 'empty',

# 'Sec-Fetch-Mode': 'cors',

# 'Sec-Fetch-Site': 'same-origin',

# 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36',

# 'X-Requested-With': 'XMLHttpRequest',

}

# Browser From Data (data in the form of key value. Note: if there are characters such as: \ in the browser data, you need to escape when copying to pychar, and remember to add one more \)

# Example: browser From Data: f.req: [[["mkewbc", [[\ "spider \", \ "auto \", \ "zh cn \", true], [null]], null, "generic"]]]

# data = {'f.req': '[[["MkEWBc","[[\\"Spider\\",\\"auto\\",\\"zh-CN\\",true],[null]]",null,"generic"]]]'}

data = {'from': 'en', 'to': 'zh', 'query': 'Spider', 'transtype': 'realtime', 'simple_means_flag': '3',

'sign': '579526.799991', 'token': 'e2d3a39e217e299caa519ed2b4c7fcd8', 'domain': 'common'}

# The parameters of the post request must be decoded. data = urllib.parse.urlencode(data)

# After encoding, you must call the encode() method data = urllib.parse.urlencode(data).encode('utf-8 ')

data = urllib.parse.urlencode(data).encode('utf-8')

# The parameter is placed in the method customized by the request object. request = urllib.request.Request(url=url, data=data, headers=headers)

request = urllib.request.Request(url=url, data=data, headers=headers)

# Impersonate the browser to send a request to the server

response = urllib.request.urlopen(request)

# Get response data

content = response.read().decode('utf-8')

# # print data

print(content)

# String -- > JSON object

jsonObjContent = json.loads(content)

print(jsonObjContent)

5. get request of Ajax

Example: Douban movie

Climb the data on the first page of Douban film - ranking list - Costume - and save it

# Climb the data on the first page of Douban film - ranking list - Costume - and save it

# This is a get request

import urllib.request

url = 'https://movie.douban.com/j/chart/top_list?type=30&interval_id=100%3A90&action=&start=0&limit=20'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36'

}

# Customization of request object

request = urllib.request.Request(url=url, headers=headers)

# Get response data

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

# Download data locally

# Open() uses gbk encoding by default. If you need to save Chinese, you want to specify UTF-8 encoding format encoding='utf-8 'in open()

# downloadFile = open('file/douban.json', 'w', encoding='utf-8')

# downloadFile.write(content)

# This kind of writing has the same effect

with open('file/douban1.json', 'w', encoding='utf-8') as downloadFile:

downloadFile.write(content)

Climb the data of the first 10 pages of Douban movie - ranking list - Costume - and save it (the costume data is not so much, about 4 pages, not behind, and the data is empty when you climb down)

import urllib.parse

import urllib.request

# Climb the data of the first 10 pages of Douban film - ranking list - Costume - and save it

# This is a get request

# Find regular top_ list?type=30&interval_ id=100%3A90&action=&start=40&limit=20

# Page 1: https://movie.douban.com/j/chart/top_ list?type=30&interval_ id=100%3A90&action=&start=0&limit=20

# Page 2: https://movie.douban.com/j/chart/top_ list?type=30&interval_ id=100%3A90&action=&start=20&limit=20

# Page 3: https://movie.douban.com/j/chart/top_ list?type=30&interval_ id=100%3A90&action=&start=40&limit=20

# Page n: start=(n - 1) * 20

def create_request(page):

base_url = 'https://movie.douban.com/j/chart/top_list?type=30&interval_id=100%3A90&action=&'

data = {

'start': (page - 1) * 20,

'limit': 20

}

data = urllib.parse.urlencode(data)

url = base_url + data

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36'

}

request = urllib.request.Request(url=url, headers=headers)

return request

def get_content(request):

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

return content

def download(content, page):

# downloadFile = open('file/douban.json', 'w', encoding='utf-8')

# downloadFile.write(content)

# This kind of writing has the same effect

with open('file/douban_ancient costume_' + str(page) + '.json', 'w', encoding='utf-8') as downloadFile:

downloadFile.write(content)

if __name__ == '__main__':

start_page = int(input('Please enter the starting page number: '))

end_page = int(input('Please enter the ending page number: '))

for page in range(start_page, end_page + 1):

# Each page has customization of its own request object

request = create_request(page)

# Get response data

content = get_content(request)

# download

download(content, page)

6.ajax post request

Example: KFC official website, climb KFC official website - Restaurant query - City: Beijing - the first 10 pages of data and save them

import urllib.parse

import urllib.request

# Climb KFC's official website - Restaurant query - City: Beijing - the first 10 pages of data and save it

# This is a post request

# Find GetStoreList.ashx?op=cname

# Request address: http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname

# Form Data

# Page 1

# cname: Beijing

# pid:

# pageIndex: 1

# pageSize: 10

# Page 2

# cname: Beijing

# pid:

# pageIndex: 2

# pageSize: 10

# Page n

# cname: Beijing

# pid:

# pageIndex: n

# pageSize: 10

def create_request(page):

base_url = 'http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname'

data = {

'cname': 'Beijing',

'pid': '',

'pageIndex': page,

'pageSize': 10,

}

data = urllib.parse.urlencode(data).encode('utf-8')

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36'

}

request = urllib.request.Request(url=base_url, data=data, headers=headers)

return request

def get_content(request):

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

return content

def download(content, page):

# downloadFile = open('file/douban.json', 'w', encoding='utf-8')

# downloadFile.write(content)

# This kind of writing has the same effect

with open('file/KFC_city_beijing_' + str(page) + '.json', 'w', encoding='utf-8') as downloadFile:

downloadFile.write(content)

if __name__ == '__main__':

start_page = int(input('Please enter the starting page number: '))

end_page = int(input('Please enter the ending page number: '))

for page in range(start_page, end_page + 1):

# Each page has customization of its own request object

request = create_request(page)

# Get response data

content = get_content(request)

# download

download(content, page)

7.URLError/HTTPError

brief introduction

- The HTTPError class is a subclass of the URLError class

- Imported package urlib.error.httperror, urlib.error.urlerror

- HTTP error: http error is an error prompt added when the browser cannot connect to the server. Guide and tell the viewer what went wrong with the page.

- When sending a request through urllib, it may fail. At this time, if you want to make your code more robust, you can catch exceptions through try exception. There are two types of exceptions: URLError\HTTPError

import urllib.request

import urllib.error

# url = 'https://blog.csdn.net/sjp11/article/details/120236636'

url = 'https://If the url is misspelled, the url will report an error '

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36'

}

try:

request = urllib.request.Request(url=url, headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

print(content)

except urllib.error.HTTPError:

print('The system is being upgraded,Please try again later...')

except urllib.error.URLError:

print('As I said, the system is being upgraded,Please try again later...')

8.cookie login

Example: weibo login

Assignment: qq space crawling

(temporarily missing code)

9.Handler processor

Reasons for learning the handler processor:

urllib.request.urlopen(url): request headers cannot be customized

urllib.request.Request(url,headers,data): request headers can be customized

Handler: Customize more advanced request headers. With the complexity of business logic, the customization of request object can not meet our needs (dynamic cookie s and agents can not use the customization of request object).

import urllib.request

# Use handler to visit Baidu and get the web page source code

url = 'http://www.baidu.com'

# headers = {

# 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36'

# }

headers = {

'User - Agent': 'Mozilla / 5.0(Windows NT 10.0;Win64;x64) AppleWebKit / 537.36(KHTML, likeGecko) Chrome / 74.0.3729.169Safari / 537.36'

}

request = urllib.request.Request(url=url, headers=headers)

# handler,build_opener,open

# Get handler object

handler = urllib.request.HTTPHandler()

# Get opener object

opener = urllib.request.build_opener(handler)

# Call the open method

response = opener.open(request)

content = response.read().decode('utf-8')

print(content)

10. Proxy server

-

Common functions of agent

-

Break through their own IP access restrictions and visit foreign sites.

-

Access internal resources of some units or groups

For example, FTP of a University (provided that the proxy address is within the allowable access range of the resource) can be used for various FTP download and upload, as well as various data query and sharing services open to the education network by using the free proxy server in the address section of the education network.

-

Improve access speed

For example, the proxy server usually sets a large hard disk buffer. When external information passes through, it is also saved in the buffer. When other users access the same information again, the information is directly taken out from the buffer and transmitted to users to improve the access speed.

-

Hide real IP

For example, Internet users can also hide their IP in this way to avoid attacks.

-

-

Code configuration agent

- Create a Reuqest object

- Create ProxyHandler object

- Creating an opener object with a handler object

- Send the request using the opener.open function

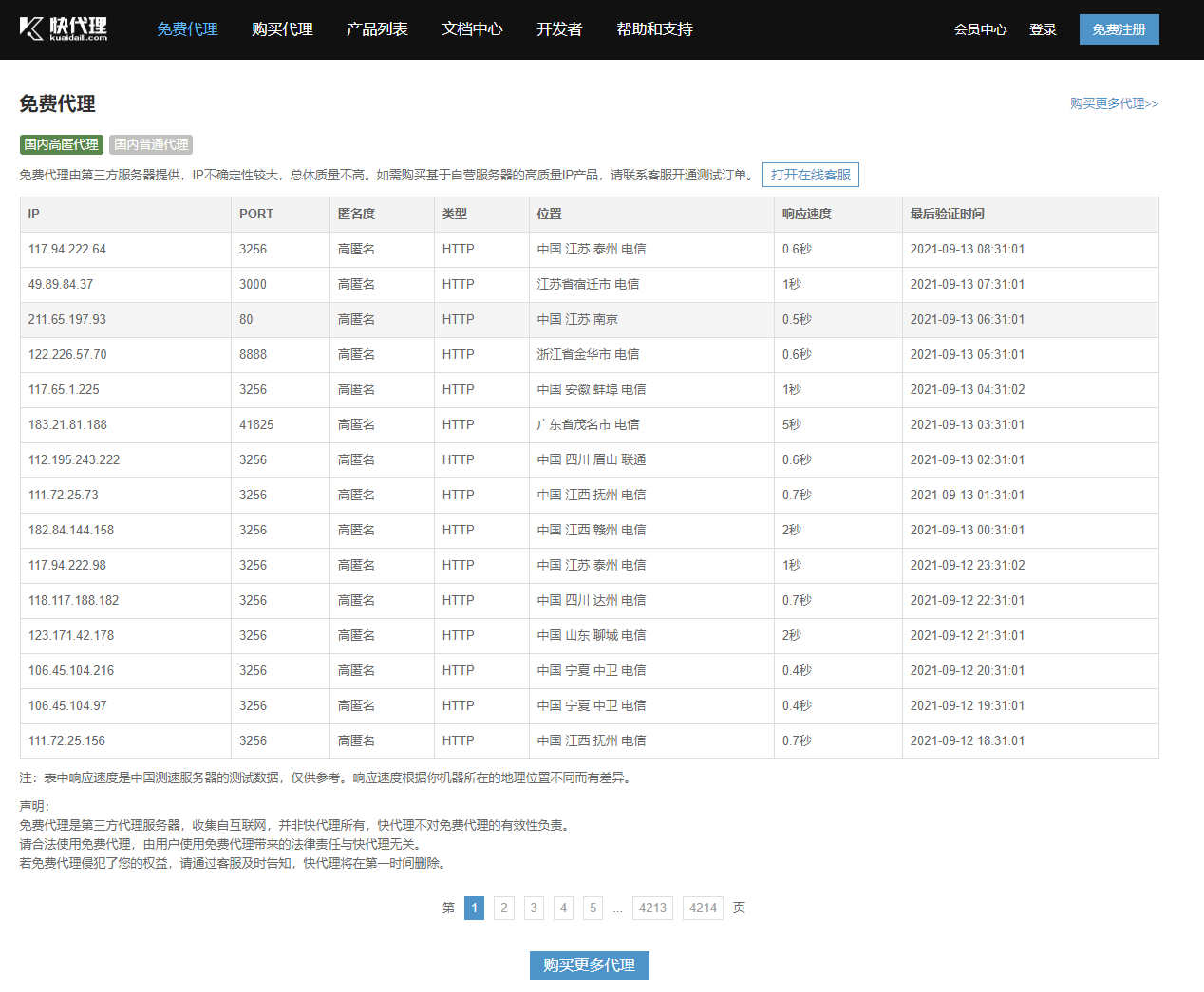

agent

Fast agent - free agent: https://www.kuaidaili.com/free/

You can also purchase proxy ip: generate API connection - return high hidden ip and port, but if you access it frequently, it will still be blocked. Therefore, the need for proxy pool means that there are a pile of high hidden ip in the proxy pool, which will not expose your real ip.

Single agent

import urllib.request

url = 'http://www.baidu.com/s?ie=UTF-8&wd=ip'

headers = {

'User - Agent': 'Mozilla / 5.0(Windows NT 10.0;Win64;x64) AppleWebKit / 537.36(KHTML, likeGecko) Chrome / 74.0.3729.169Safari / 537.36'

}

# Request object customization

request = urllib.request.Request(url=url, headers=headers)

# The proxy ip address can be found on this website: https://www.kuaidaili.com/free/

proxies = {'http': '211.65.197.93:80'}

handler = urllib.request.ProxyHandler(proxies=proxies)

opener = urllib.request.build_opener(handler)

# Impersonate browser access server

response = opener.open(request)

# Get response information

content = response.read().decode('utf-8')

# Save to local

with open('file/proxy.html', 'w', encoding='utf-8') as downloadFile:

downloadFile.write(content)

Agent pool

import random

import urllib.request

url = 'http://www.baidu.com/s?ie=UTF-8&wd=ip'

headers = {

'User - Agent': 'Mozilla / 5.0(Windows NT 10.0;Win64;x64) AppleWebKit / 537.36(KHTML, likeGecko) Chrome / 74.0.3729.169Safari / 537.36'

}

# https://www.kuaidaili.com/free/

proxies_pool = [

{'http': '118.24.219.151:16817'},

{'http': '118.24.219.151:16817'},

{'http': '117.94.222.64:3256'},

{'http': '49.89.84.37:3000'},

{'http': '211.65.197.93:80'},

{'http': '122.226.57.70:8888'},

{'http': '117.65.1.225:3256'},

{'http': '183.21.81.188:41825'},

{'http': '112.195.243.222:3256'},

{'http': '111.72.25.73:3256'},

{'http': '182.84.144.158:3256'},

{'http': '117.94.222.98:3256'},

{'http': '118.117.188.182:3256'},

{'http': '123.171.42.178:3256'},

{'http': '106.45.104.216:3256'},

{'http': '106.45.104.97:3256'},

{'http': '111.72.25.156:3256'},

{'http': '111.72.25.156:3256'},

{'http': '163.125.29.37:8118'},

{'http': '163.125.29.202:8118'},

{'http': '175.7.199.119:3256'},

{'http': '211.65.197.93:80'},

{'http': '113.254.178.224:8197'},

{'http': '117.94.222.106:3256'},

{'http': '117.94.222.52:3256'},

{'http': '121.232.194.229:9000'},

{'http': '121.232.148.113:3256'},

{'http': '113.254.178.224:8380'},

{'http': '163.125.29.202:8118'},

{'http': '113.254.178.224:8383'},

{'http': '123.171.42.178:3256'},

{'http': '113.254.178.224:8382'},

]

# Request object customization

request = urllib.request.Request(url=url, headers=headers)

proxies = random.choice(proxies_pool)

handler = urllib.request.ProxyHandler(proxies=proxies)

opener = urllib.request.build_opener(handler)

# Impersonate browser access server

response = opener.open(request)

# Get response information

content = response.read().decode('utf-8')

# Save to local

with open('file/proxies_poor.html', 'w', encoding='utf-8') as downloadFile:

downloadFile.write(content)

3. Analysis

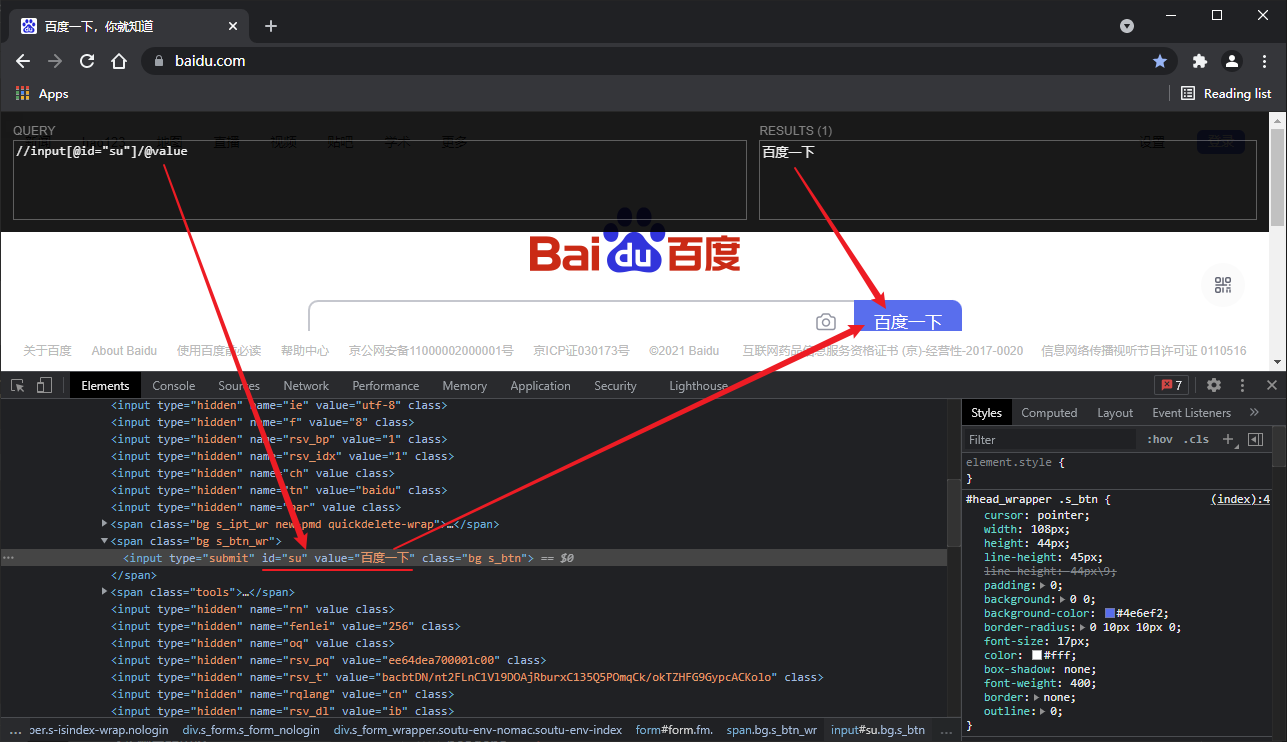

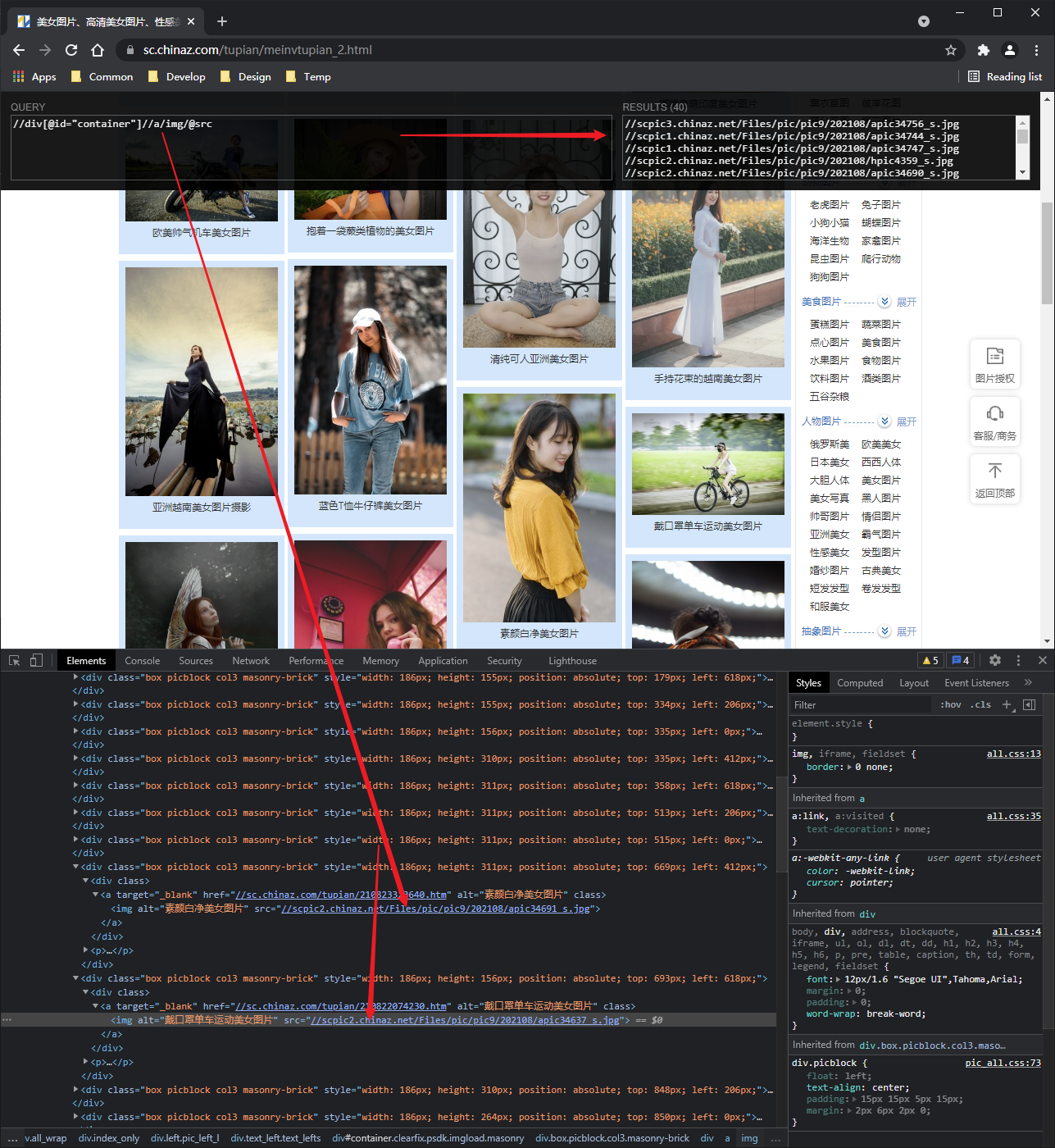

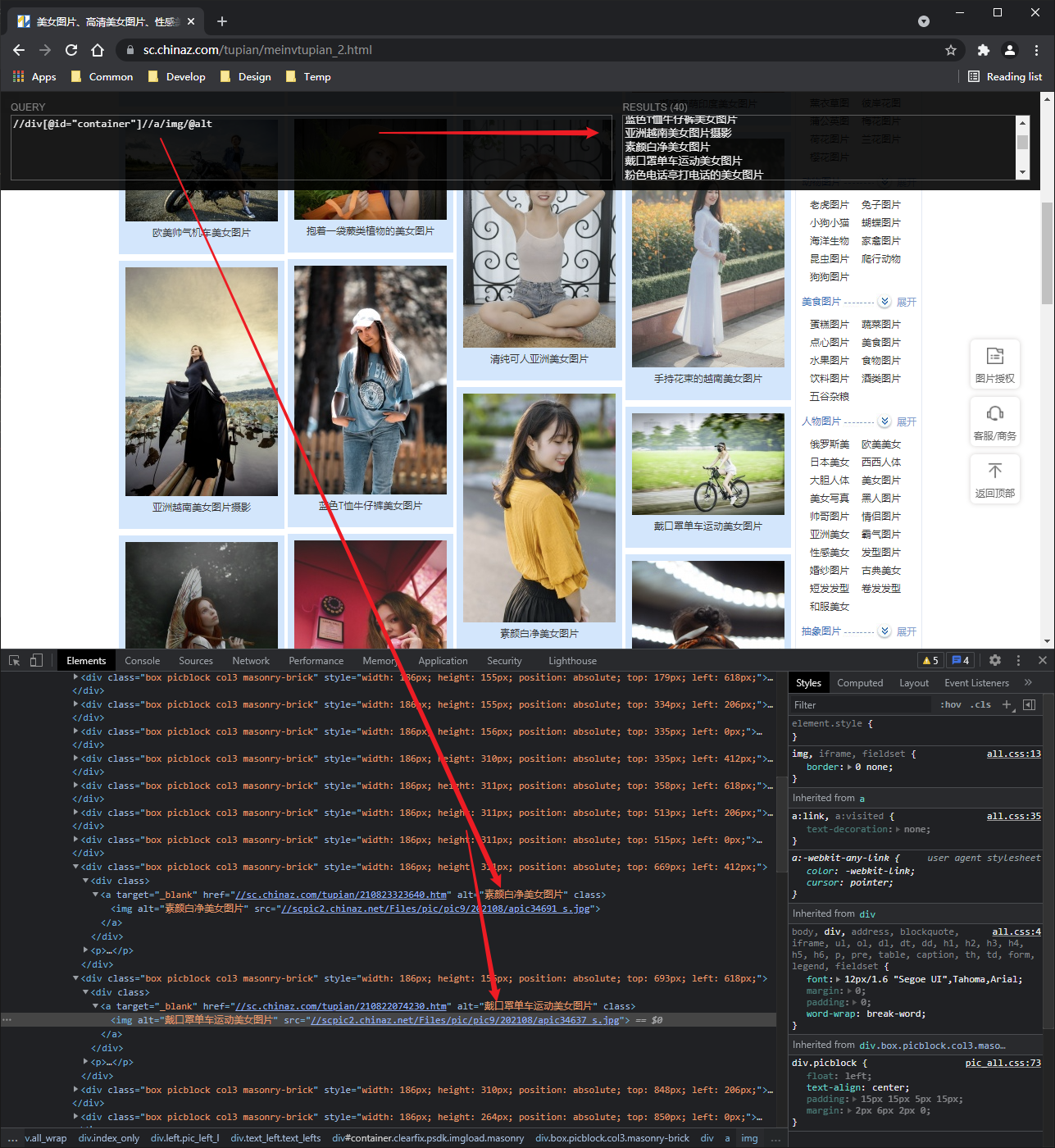

1.xpath

1.1 use of XPath

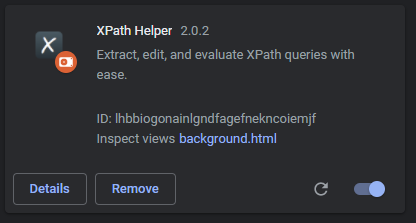

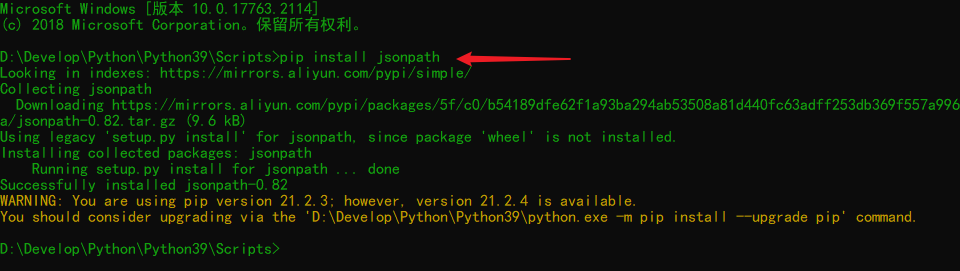

Installing the xpath plug-in

Open the Chrome browser -- > click the dot in the upper right corner -- > more tools -- > extensions -- > drag the xpath plug-in into the extensions -- > if the crx file fails, you need to modify the suffix of the. crx file to. zip or. rar compressed file -- > drag again -- > close the browser, reopen -- > open a web page, and press Ctrl + Shift + X -- > to display a small black box, Description the xpath plug-in is in effect

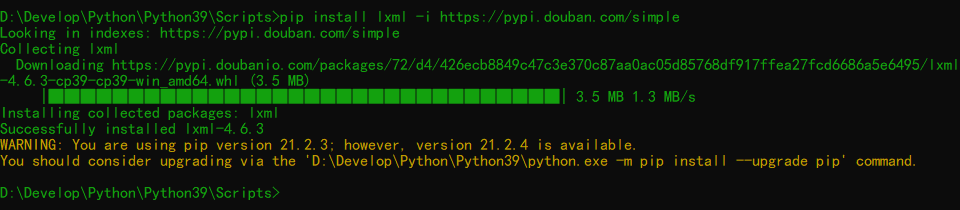

Install lxml Library

Note: the installation path is consistent with the library path (Scripts directory path) of python, such as D: \ develop \ Python \ Python 39 \ Scripts

# 1. Install lxml Library

pip install lxml -i https://pypi.douban.com/simple

# 2. Import lxml.etree

from lxml import etree

# 3.etree.parse() parse local files

html_tree = etree.parse('XX.html')

# 4.etree.HTML() server response file

html_tree = etree.HTML(response.read().decode('utf-8')

# 4.html_tree.xpath(xpath path)

Basic xpath syntax

# Basic xpath syntax

# 1. Path query

//: find all descendant nodes regardless of hierarchy

/ : Find direct child node

# 2. Predicate query

//div[@id]

//div[@id="maincontent"]

# 3. Attribute query

//@class

# 4. Fuzzy query

//div[contains(@id, "he")]

//div[starts‐with(@id, "he")]

# 5. Content query

//div/h1/text()

# 6. Logical operation

//div[@id="head" and @class="s_down"]

//title | //price

Local HTML file: 1905.html

<!DOCTYPE html>

<html lang="zh-cmn-Hans">

<head>

<meta charset="utf-8"/>

<title>Movie Network_1905.com</title>

<meta property="og:image" content="https://static.m1905.cn/144x144.png"/>

<link rel="dns-prefetch" href="//image14.m1905.cn"/>

<style>

.index-carousel .index-carousel-screenshot {

background: none;

}

</style>

</head>

<body>

<!-- Movie number -->

<div class="layout-wrapper depth-report moive-number">

<div class="layerout1200">

<h3>

<span class="fl">Movie number</span>

<a href="https://www.1905.com/dianyinghao/" class="fr" target="_ Blank "> more</a>

</h3>

<ul class="clearfix">

<li id="1">

<a href="https://www.1905.com/news/20210908/1539457.shtml">

<img src="//static.m1905.cn/images/home/pixel.gif"/></a>

<a href="https://www.1905.com/dianyinghao/detail/lst/95/">

<img src="//static.m1905.cn/images/home/pixel.gif"/>

<em>Mirror Entertainment</em>

</a>

</li>

<li id="2">

<a href="https://www.1905.com/news/20210910/1540134.shtml">

<img src="//static.m1905.cn/images/home/pixel.gif"/></a>

<a href="https://www.1905.com/dianyinghao/detail/lst/75/">

<img src="//static.m1905.cn/images/home/pixel.gif"/>

<em>Entertainment Capital</em>

</a>

</li>

<li id="3">

<a href="https://www.1905.com/news/20210908/1539808.shtml">

<img src="//static.m1905.cn/images/home/pixel.gif"/>

</a>

<a href="https://www.1905.com/dianyinghao/detail/lst/59/">

<img src="//static.m1905.cn/images/home/pixel.gif"/>

<em>Rhinoceros Entertainment</em>

</a>

</li>

</ul>

</div>

</div>

<!-- Links -->

<div class="layout-wrapper">

<div class="layerout1200">

<section class="frLink">

<div>Links</div>

<p>

<a href="http://Www.people.com.cn "target =" _blank "> people.com</a>

<a href="http://www.xinhuanet.com/" target="_ Blank "> Xinhua</a>

<a href="http://Www.china. Com. CN / "target =" _blank "> china.com</a>

<a href="http://www.cnr.cn" target="_ Blank "> CNR</a>

<a href="http://Www.legaldaily. Com. CN / "target =" _blank "> Legal Network</a>

<a href="http://www.most.gov.cn/" target="_ Blank "> Ministry of science and technology</a>

<a href="http://Www.gmw.cn "target =" _blank "> guangming.com</a>

<a href="http://news.sohu.com" target="_ Blank "> Sohu News</a>

<a href="https://News.163.com "target =" _blank "> Netease News</a>

<a href="https://www.1958xy.com/" target="_ blank" style="margin-right:0; "> xiying.com</a>

</p>

</section>

</div>

</div>

<!-- footer -->

<footer class="footer" style="min-width: 1380px;">

<div class="footer-inner">

<h3 class="homeico footer-inner-logo"></h3>

<p class="footer-inner-links">

<a href="https://Www.1905. COM / about / aboutus / "target =" _blank "> about us < / a > < span >|</span>

<a href="https://www.1905.com/sitemap.html" target="_ Blank "> website map < / a > < span >|</span>

<a href="https://Www.1905. COM / jobs / "target =" _blank "> looking for talents < / a > < span >|</span>

<a href="https://www.1905.com/about/copyright/" target="_ Blank "> copyright notice < / a > < span >|</span>

<a href="https://Www.1905. COM / about / contactus / "target =" _blank "> contact us < / a > < span >|</span>

<a href="https://www.1905.com/error_ report/error_ report-p-pid-125-cid-126-tid-128.html" target="_ Blank "> help and feedback < / a > < span >|</span>

<a href="https://Www.1905. COM / link / "target =" _blank "> link < / a > < span >|</span>

<a href="https://www.1905.com/cctv6/advertise/" target="_ Blank "> CCTV6 advertising investment < / a > <! -- < span >|</span>

<a href="javascript:void(0)">Associated Media</a>-->

</p>

<div class="footer-inner-bottom">

<a href="https://Www.1905. COM / about / license / "target =" _blank "> network audio visual license No. 0107199</a>

<a href="https://www.1905.com/about/cbwjyxkz/" target="_ Blank "> publication business license</a>

<a href="https://Www.1905. COM / about / dyfxjyxkz / "target =" _blank "> film distribution license</a>

<a href="https://www.1905.com/about/jyxyc/" target="_ Blank "> business performance license</a>

<a href="https://Www.1905. COM / about / gbdsjm / "target =" _blank "> Radio and television program production and operation license</a>

<br/>

<a href="https://www.1905.com/about/beian/" target="_ Blank "> business license of enterprise legal person</a>

<a href="https://Www.1905. COM / about / zzdxyw / "target =" _blank "> value added telecom business license</a>

<a href="http://beian.miit.gov.cn/" target="_ Blank "> Jing ICP Bei 12022675-3</a>

<a href="http://Www.beian. Gov.cn / portal / registersysteminfo? Recordcode = 11010202000300 "target =" _blank "> jinggong.com.anbei No. 11010202000300</a>

</div>

</div>

</footer>

<!-- copyright -->

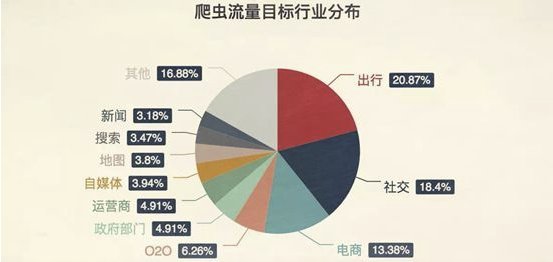

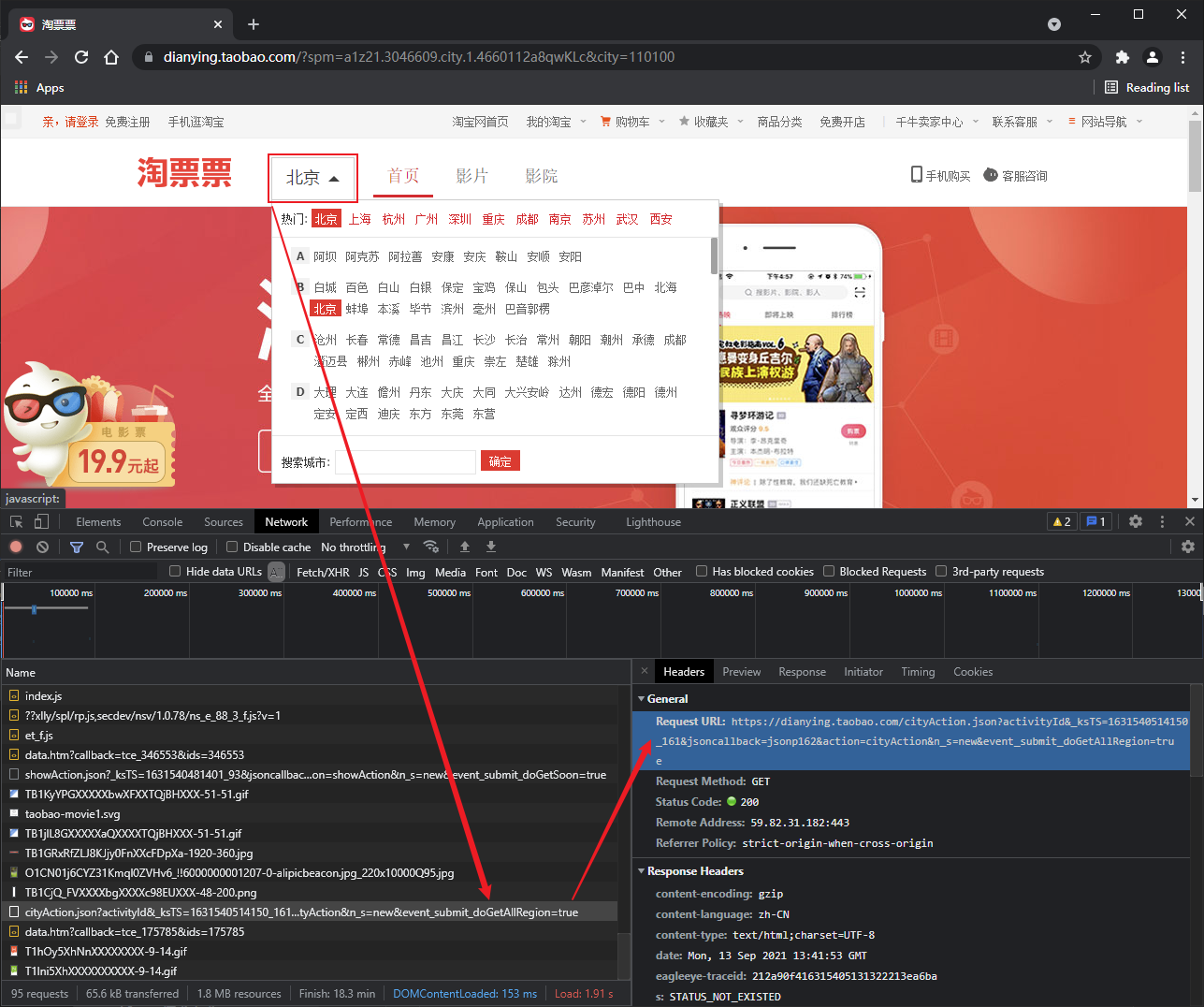

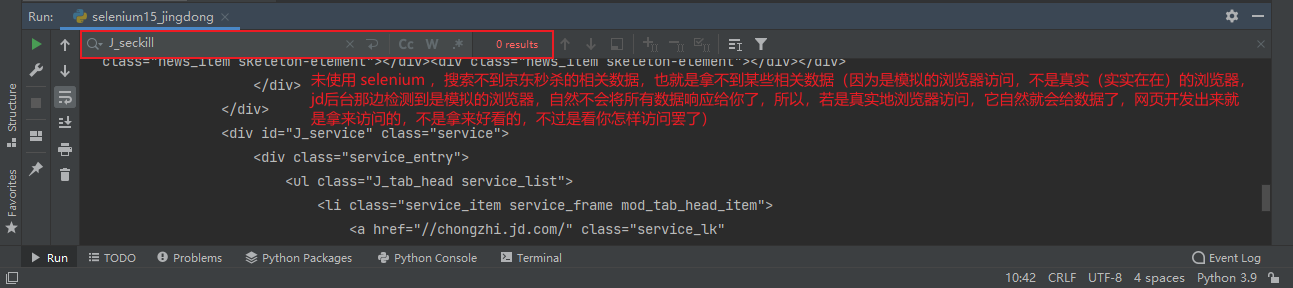

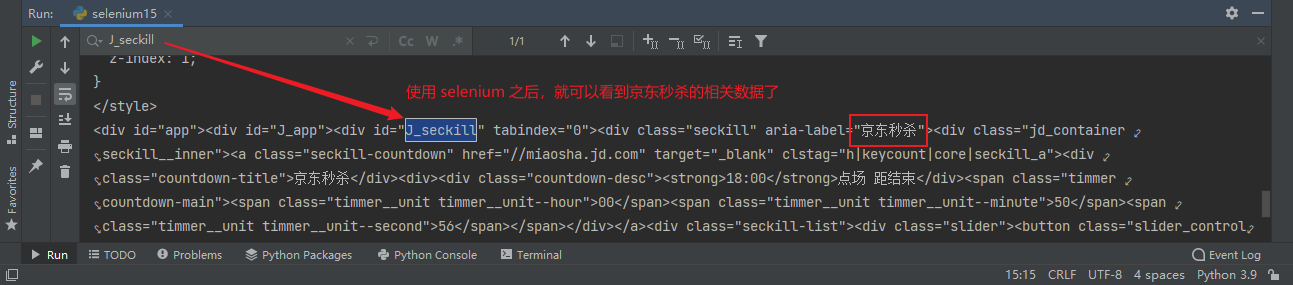

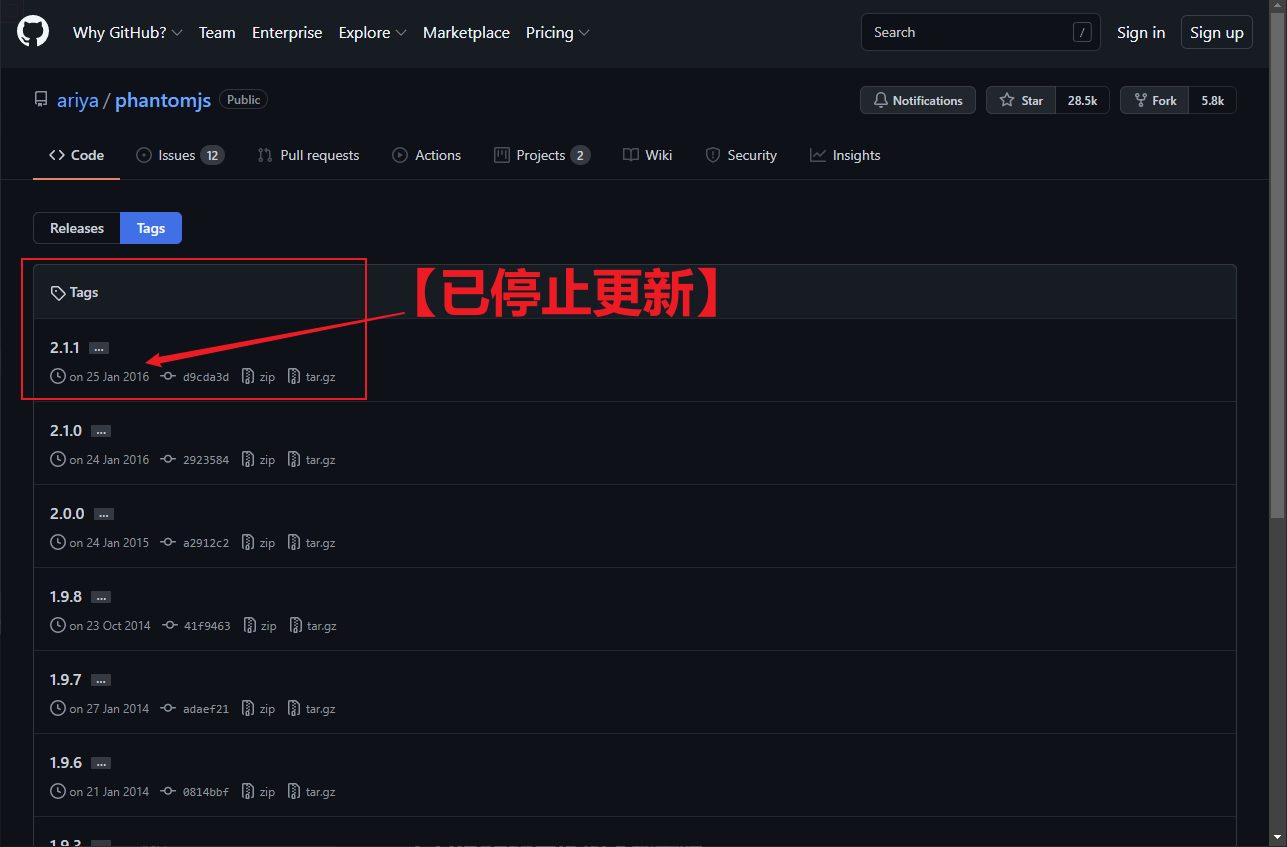

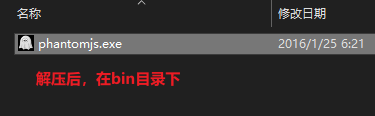

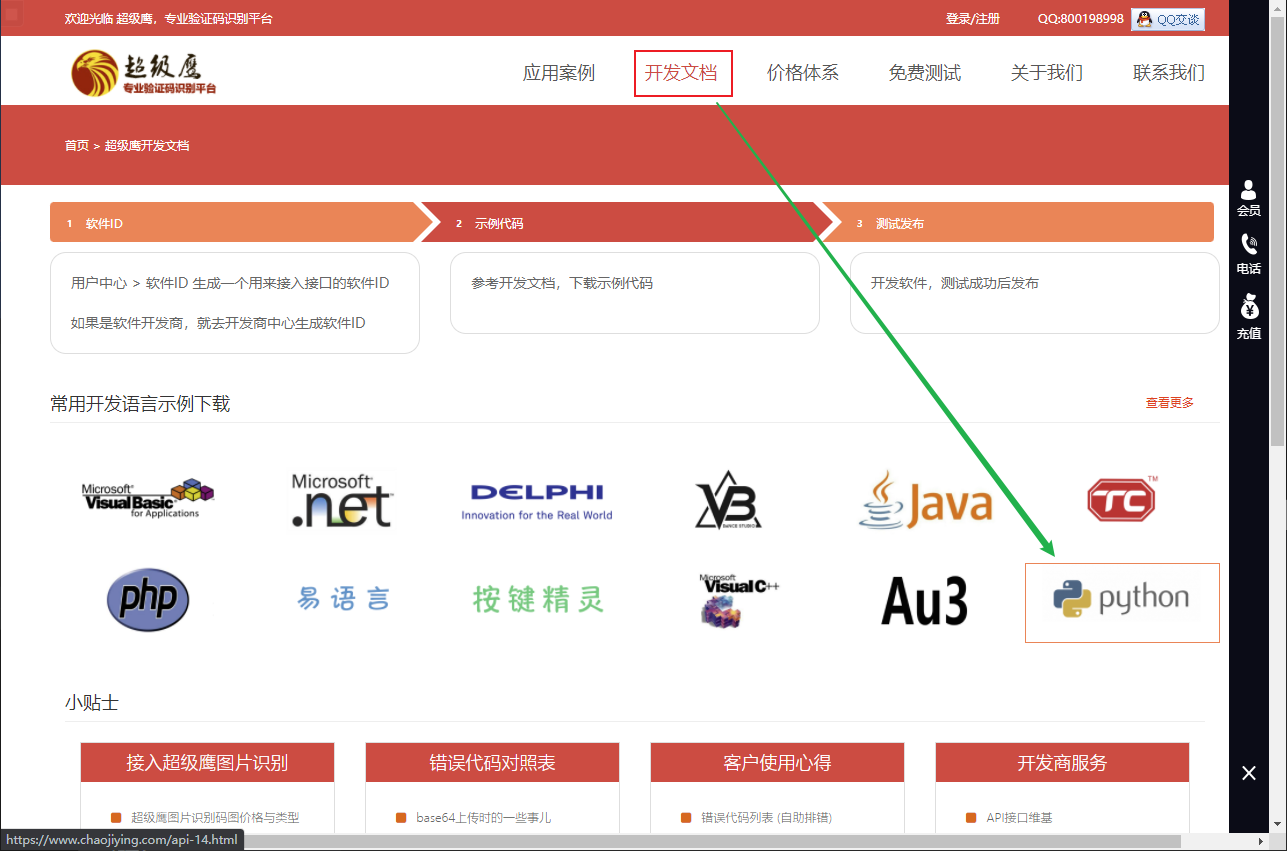

<div class="copy-right" style="min-width: 1380px;">