/* Internet Novels:[secret] */ #coding:utf-8 import re import sys from bs4 import BeautifulSoup import urllib.request import time import random proxy_list = [ {"http":"124.88.67.54:80"}, {"http":"61.135.217.7:80"}, {"http":"120.230.63.176:80"}, {"http":"210.35.205.176:80"} ] proxy = random.choice(proxy_list)#Randomly select an ip address httpproxy_handler = urllib.request.ProxyHandler(proxy) opener = urllib.request.build_opener(httpproxy_handler) urllib.request.install_opener(opener) #Define a function of crawling network novel def getNovelContent(): html = urllib.request.urlopen('https://www.luoqiuzw.com/book/94819/',timeout=40) data = BeautifulSoup(html , 'html.parser') #print(data) reg = r'<dd><a href="/book/94819/(.*?)">(.*?)</a></dd>' #Matching of regular expressions reg = re.compile(reg) #Can be added or not, increase efficiency urls = re.findall(reg,str(data)) #print(urls) index = 1 start_chapter_num = 7+763 #Add 7 to the last chapter and press F5 directly (13 corresponds to Chapter 1) for url in urls: if(index<start_chapter_num): index = index + 1 continue index = index + 1 chapter_url = url[0] #Hyperlink to Chapter chapter_url = "https://www.luoqiuzw.com/book/94819/" + chapter_url #print(chapter_url) chapter_title = url[1] #Chapter name chapter_html = urllib.request.urlopen(chapter_url,timeout=40).read() #Body content source code chapter_html = chapter_html.decode("utf-8") #print(chapter_html) chapter_reg = r'<div id="content" deep="3"><p>(.*?)</p><br></div><div class="bottem2">' chapter_reg = re.compile(chapter_reg,re.S) chapter_content = re.findall(chapter_reg,chapter_html) #print(chapter_content) for content in chapter_content: content = content.replace(" ","") content = content.replace("One second to remember the address:[Fall Chinese] https://www.luoqiuzw.com/ fastest update! No ads! ","") content = content.replace("<br>","\n") content = content.replace("content_detail","") content = content.replace("<p>","") content = content.replace("</p>","") content = content.replace("\r\n\t\t","") #print(content) f = open('124.txt','a',encoding='utf-8') f.write("\n"+chapter_title+"\n\n") f.write(content+"\n") f.close() print(chapter_url)#This chapter has been written to the file if __name__ == '__main__': getNovelContent()

/* Internet Novels:[secret] */ #coding:utf-8 import re import sys from bs4 import BeautifulSoup import urllib.request from io import BytesIO #for gzip decode import gzip #for gzip decode import time import random headers = ('User-Agent', 'Mozilla/5.0 (iPhone; CPU iPhone OS 9_1 like Mac OS X) AppleWebKit/601.1.46 (KHTML, like Gecko) Version/9.0 Mobile/13B143 Safari/601.1') opener = urllib.request.build_opener() opener.addheaders = {headers} urllib.request.install_opener(opener) #Define a function of crawling network novel def getNovelContent(): html = urllib.request.urlopen('http://www.vipzw.com/90_90334/',timeout=60).read() #gzip decode start buff = BytesIO(html) fr = gzip.GzipFile(fileobj=buff) html = fr.read().decode('utf-8') #gzip decode end #print(html) data = BeautifulSoup(html , "html.parser") #print(data) reg = r'<dd><a href="/90_90334/(.*?)">(.*?)</a></dd>' #Matching of regular expressions reg = re.compile(reg) #Can be added or not, increase efficiency urls = re.findall(reg,str(data)) #print(urls) index = 1 start_chapter_num = 9 + 20 #Add 9 to the last chapter, and press F5 directly (13 corresponds to the first chapter) for url in urls: if(index<start_chapter_num): index = index + 1 continue index = index + 1 chapter_url = url[0] #Hyperlink to Chapter chapter_url = "http://www.vipzw.com/90_90334/" + chapter_url chapter_title = url[1] #Chapter name chapter_html = urllib.request.urlopen(chapter_url,timeout=60).read() #Body content source code #gzip decode start try: buff2 = BytesIO(chapter_html) fr2 = gzip.GzipFile(fileobj=buff2) chapter_html = fr2.read().decode('utf-8') except: chapter_html = chapter_html.decode("utf-8") #gzip decode end #print(chapter_html) chapter_reg = r'<div id="content">(.*?)</div>' chapter_reg = re.compile(chapter_reg,re.S) chapter_content = re.findall(chapter_reg,chapter_html) #print(chapter_content) for content in chapter_content: #content = content.replace("\r","") content = content.replace(" "," ") content = content.replace("<br />","") content = content.replace("Please remember the first domain name of this book: www.vipzw.com. VIP Chinese_Mobile version of biquge website: m.vipzw.com","") content = content.replace("\u3000\u3000","") #print(content) f = open('126.txt','a',encoding='utf-8') f.write("\n"+chapter_title+"\n\n") f.write(content+"\n") f.close() fr2.close() print(chapter_url)#This chapter has been written to the file fr.close() if __name__ == '__main__': getNovelContent()

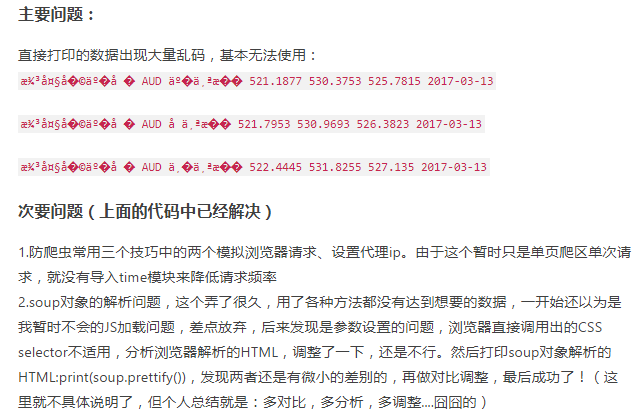

The above online novel crawlers are all crawled for others, and the following problems are found in the process of writing code:

1, Proxy IP (random IP crawling content)

2, gzip grabs web pages

3, utf-8 encoding

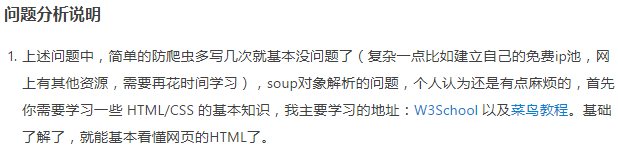

1, Proxy IP problem (random IP crawling content)

Reference link: python crawler - multiple ip address accesshttps://blog.csdn.net/qq_43709494/article/details/93937821

Overall idea: we can create a list of ip addresses, including multiple available ip addresses (you can search free ip addresses online), and then call an ip address randomly every time to establish an http connection. This avoids the risk that multiple accesses to the same ip address are blocked.

from urllib import request import random proxy_list = [ {"http":"124.88.67.54:80"}, {"http":"61.135.217.7:80"}, {"http":"42.231.165.132:8118"} ] proxy = random.choice(proxy_list) #Randomly select an ip address httpproxy_handler = request.ProxyHandler(proxy) opener = request.build_opener(httpproxy_handler) request = request.Request("http://www.baidu.com/") response =opener.open(request) print(response.read())

2, gzip grabs web pages

WARNING:root:Some characters could not be decoded, and were replaced with REPLACEMENT CHARACTER.

Reference link: How to read web pages in gzip format and normal format by pythonhttps://blog.csdn.net/HelloHaibo/article/details/77624416

In general, when we read web page analysis to return content, it looks like this:

#!/usr/bin/python #coding:utf-8 import urllib2 headers = {"User-Agent": 'Opera/9.25 (Windows NT 5.1; U; en)'} request = urllib2.Request(url='http://www.baidu.com', headers=headers) response = urllib2.urlopen(request).read()

In general, you can see the source code of the returned web page:

<html> <head> <meta http-equiv="content-type" content="text/html;charset=utf-8"> <meta http-equiv="X-UA-Compatible" content="IE=Edge"> <meta content="always" name="referrer"> <meta name="theme-color" content="#2932e1"> <link rel="shortcut icon" href="/favicon.ico" type="image/x-icon" /> <link rel="search" type="application/opensearchdescription+xml" href="/content-search.xml" title="Baidu search" /> <link rel="icon" sizes="any" mask href="//www.baidu.com/img/baidu.svg"> <link rel="dns-prefetch" href="//s1.bdstatic.com"/> <link rel="dns-prefetch" href="//t1.baidu.com"/> <link rel="dns-prefetch" href="//t2.baidu.com"/> <link rel="dns-prefetch" href="//t3.baidu.com"/> <link rel="dns-prefetch" href="//t10.baidu.com"/> <link rel="dns-prefetch" href="//t11.baidu.com"/> <link rel="dns-prefetch" href="//t12.baidu.com"/> <link rel="dns-prefetch" href="//b1.bdstatic.com"/> <title>Baidu once, you will know</title> <style id="css_index" index="index" type="text/css">html,body{height:100%} html{overflow-y:auto}

But sometimes when you visit some web pages, you will return the random code. ok, you will first consider the coding problem (this is not the focus of this article, but you will take it with you), check the coding of the web page (refer to my article python for the method of page coding), and then use its coding method to decode the content, which will solve some web page random code, but sometimes you can It's definitely not about coding. Why is it still garbled?

Of course, there is another situation that we haven't considered, and it's also the most easily overlooked one, the format of the returned web page. Generally, the format of data returned by web pages is text/html and gzip. Data in text/html format can be read directly, while data in gzip format cannot be read directly. Special gzip module is needed for reading. There's no need to talk about much nonsense, and the code is bright:

from StringIO import StringIO import gzip import urllib2 headers = {"User-Agent": 'Opera/9.25 (Windows NT 5.1; U; en)'} request = urllib2.Request(url='gzip Web page in format', headers=headers) response = urllib2.urlopen(request) buf = StringIO( response.read()) f = gzip.GzipFile(fileobj=buf) data = f.read() #Handle ......... f.close()

/*Possible errors*/ OSError: Not a gzipped file (b'<!') #f.close() is not added or gzip operation is not required for the web page

Note: F must be closed, i.e. f.close() must be available, especially for crawler and multithreading.

Of course, this is not perfect. Some servers will take the initiative to change the format of the returned web page (if you interpret the data in text/html format with gzip module, the output is also a pile of random code), which may be test/html in the daytime, gzip in the evening, or rotation every day (my own experience, a website is in text/html format after 12:00 noon, gzip in the morning). Therefore, we have to It's very natural that we should think of checking the content format (through the content encoding item in info()) after reading the web page, and processing accordingly. Final code:

#coding:utf-8 from StringIO import StringIO import gzip import urllib2 headers = {"User-Agent": 'Opera/9.25 (Windows NT 5.1; U; en)'} request = urllib2.Request(url='Website', headers=headers) response = urllib2.urlopen(request) if response.info().get('Content-Encoding') == 'gzip': buf = StringIO( response.read()) f = gzip.GzipFile(fileobj=buf) data = f.read() #Handle f.close() else: data = response .read()

3, utf-8 encoding

Reference link 1: The coding problem of using Requests and beautiful soup in Python 3https://www.jianshu.com/p/664483569101

Words written in the front:

I've been learning Python for a while, but there hasn't been much practice. In the early stage, I mainly read the e-book Python learning manual I bought (well, I just saw that the e-book of this book has been taken off the shelves, but I'm sure that's what I bought. The e-book has the same characteristics as the entity: it's almost the most expensive of the same kind of books, and it was really painful to buy at that time! ), less than 30% of them. I found that this book is still a little threshold, so I watched some zero based videos, as well as the classic Python 3 tutorial of Liao Xuefeng, and the corresponding video tutorial (key: remember to appreciate the author Liao Xuefeng!). In a word: I found a lot of information and read some books. However, with the egg, the heart is bottomless, then fight it! This paper is the first difficult problem in practice, as well as the solution.

Source code:

#!/usr/bin/env python3 # It is useless for Windows and can run directly on UNIX kernel system (Mac OS, Linux...) # -*- coding: utf-8 -*- # Tell the calling object that the program is encoded in UTF-8 import requests # Import third party Library from bs4 import BeautifulSoup def get_BOC_data(url): # Encapsulate as function headers = {"User-Agent": "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)", "Referer": "http://www.boc.cn/"} # Simulate browser request header information proxies = {"http": "218.76.106.78:3128"} # Setting proxy IP is unstable and may fail at any time response = requests.get(url, headers=headers, proxies=proxies) #Call requsts.get() method print(response.status_code, "\n", rsponse.url) # Print status code and requested URL soup = BeautifulSoup(response.text, "lxml") # Call beautifulsop to parse web page data print(soup.prettify()) # Format print soup object table_data = soup.select("body div.publish table")[0] #Call the soap object to support CSS selectors for row in table_data.find_all("tr"): # Print table data directly for col in row.find_all("td"): # Use the find all() method most commonly used in soup print(col.string, end="\t") print("\n") if __name__ == "__main__": # It seems to be for testing. I haven't figured out the specific principle yet start_url = "http://www.bankofchina.com/sourcedb/ffx/index.html" #Initial target URL get_BOC_data(start_url) # Calling function

Problem Description:

Code background description

The company I stayed in used to do financial data. Generally, the way to get data is: crawler (large amount of regular data), manual collection (many data collectors, collect irregular or small amount or very important data), and purchase. I started as a data collector, which was very boring, but I kept learning. Later, I did quality inspection, and finally left as a data planning analyst. Because of that experience, I want to learn reptiles and improve efficiency. The data captured this time is the data that I manually copied and pasted at that time: the quotation of forward settlement and sale of foreign exchange of Bank of China.

The first code is the verb encode, The second encoding name is the noun encoding, and the code is str.encode(encoding =), The second sentence is also the reverse process of the first sentence. The code represents bytes. Decode (encoding =); Where str represents string object and bytes represents binary object

Intercept the above code

response = requests.get(url, headers=headers, proxies=proxies) soup = BeautifulSoup(response.text, "lxml") print(soup.prettify())

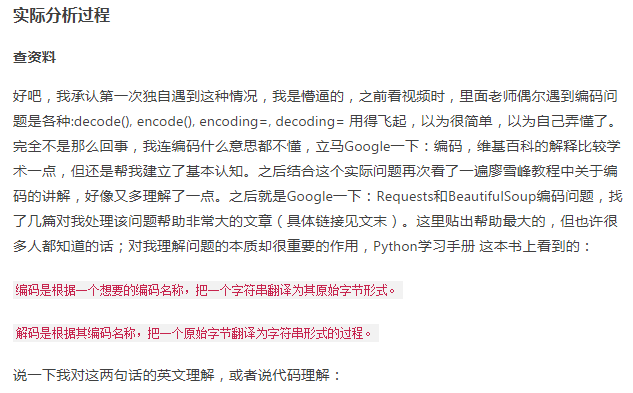

Principles of coding and decoding:

How to encode the data will eventually need to be decoded in the same (or compatible) way. Why in the end? The final meaning is that the encoding used in the first decode may be inconsistent with the encoding used in the encode due to errors or other reasons, resulting in the occurrence of garbled code. If the same encoding is completely inverted once again, after decoding once, it can be restored. for instance:

>>> s = "SacrÃ" # Define the variable s and assign the string "Sacr Ã" to s (Note: at this time, the symbol "Ã" is not a random code, but a letter in Portuguese) >>> s.encode("utf-8") # Code as "utf-8" b'Sacr\xc3\x83' # Returns binary (hexadecimal, letter or Ascii) >>> s.encode("utf-8").decode("Latin-1") # Then "Latin-1" is used for decoding ("coding of Western European languages") 'SacrÃ\x83' # Due to the inconsistency of encoding and decoding methods, the code is disordered >>> s.encode("utf-8").decode("Latin-1").encode("Latin-1") # Code with "Latin-1" b'Sacr\xc3\x83' # Return binary >>> s.encode("utf-8").decode("Latin-1").encode("Latin-1").decode("utf-8") # Finally, "utf-8" is used for decoding 'SacrÃ' # to return a thing intact to its owner!

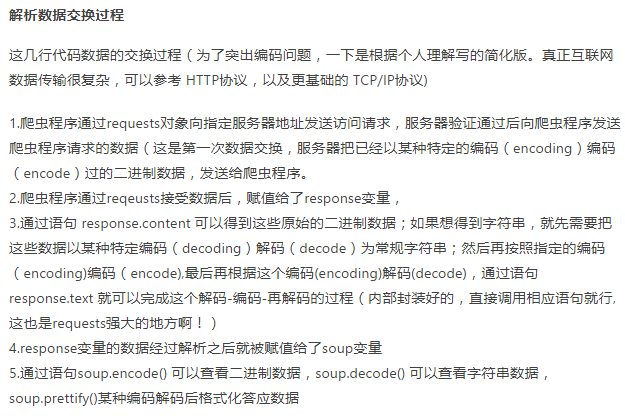

After understanding the coding principles, and then analyzing the data exchange process, we can find problems:

1. First, the crawler needs to know how to encode the binary data sent by the server, so as to effectively decode it

1.1. The content type field in the headers returned by the server generally contains the encoding method of data, for example, content type: text / HTML; charset = utf-8, which indicates that the data sent by the server has been utf-8 encoded.

1.2. But now the problem is: at present, some non-standard websites return header files that do not contain encoding information. At this time, requests cannot parse out the encoding, and then call the default encoding method of the program: "Latin-1". Why is it? According to some transmission protocol, the programmers who develop the requests library just wrote it. That's right, but it's not practical in China. This is what caused the coding problem

2. Solutions

2.1 after finding out the garbled code, first check the decoding method of requests through print(response.encoding). After calling response.content, find a string similar to this through regular way: \ n, where charset=utf-8 indicates the encoding method of data. After knowing the encoding method, add the statement: response.encoding = "utf-8" (specify encoding method). After the correct analysis, the data exchange process will not be a problem. Why? Because the default output mode of requests is unicode (of course, you can also modify it by coding first and then decoding, such as response.text.encode("gbk"). Decode ("gbk"), but it is not necessary in general), In general, beautifulsop can effectively parse the encoding method passed in (if the incoming data itself is a string, there is no need to decode it; if it is a binary encoding, if it is a encoding method that you knew by birth, you can parse the original encoding method passed in, such as: soup = beautifulsop (B '\ x34\xa4\x3f', from_encoding = "utf-8"), "lxml") and the default output is utf-8 encoding format (unicode and utf-8 are compatible), (the default output is utf-8, but if you want to output other formats, you can also directly soup.decode(), which is unnecessary). The key is the first step! After that, it defaults directly.

2.2 or directly view the source code of the webpage through the browser, and view the header of the HTML webpage < meta >, you can see the above information. The content of the label is not much, so you can see it soon.

3. Discuss other situations (also common problems in China)

3.1. It is found that the original code sent by the server is a non unicode system code such as gbk or gb2321. After setting, the requests can correctly parse the code, but in the end, the output format of the soup is still garbled. I think it shouldn't be. I tried it myself, and there is no garbled code. (it may be related to the version of related programs, I use win10-32bit, python 3)

3.2. The domestic situation is more complex, so there is a more exotic situation: the header returned by the server or the HTML header has the named data encoding form, but: all are wrong! , it's terrible to think about, but there is such a situation. For example, the header returned by the server does not indicate the code, but you find that charset=gb2312 in the HTML header is very happy at this time, and then set the code response.encoding = "gb2312", But the random code still arrived as promised, and I began to doubt myself. So I checked the data and found that the coding provided by it might be incorrect. The more I tried to set 'response. Encoding = "utf-8", the miracle happened, and the random code disappeared! , but the problem is that I also took a chance. Maybe there are methods that can be detected, so I checked the data and found that there are two ways to determine the original binary encoding format

# The module in the third-party library bs4 can detect binary encoding mode without calling beatifulsop. from bs4 import UnicodeDammit dammit = UnicodeDammit(r.content) #Note: this needs to pass in binary raw data, not string print(dammit.original_encoding) //The output is: Some characters could not be decoded, and were replaced with REPLACEMENT CHARACTER. gb2312 #Correct recognition

# The special code detection library chardet looks like a cow. Let's try it: import chardet print(chardet.detect(r.content)) # Note: the incoming string will report an error: ValueError: Expected a bytes object, not a unicode object. That is to say, like the above Unicode damit, only bytes data can be passed in. //The output result is:{'encoding': 'GB2312', 'confidence': 0.99} # Really great.

Summary (thanks again This big guy!)

#!/usr/bin/env python3 # -*- coding: utf-8 -*- import pymysql import requests from bs4 import BeautifulSoup def conn_to_mysql(): # Establish database connection through function connection = pymysql.connect(host="localhost", user="root", passwd="521513", db="spider_data", port=3306, charset="UTF8") cursor = connection.cursor() return connection, cursor def get_boc_data(url): # Grab single page data and output to MySQL headers = {"User-Agent": "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)", "Referer": "http://www.boc.cn/"} proxies = {"http": "218.76.106.78:3128"} r = requests.get(url, headers=headers) r.encoding = "utf-8" print(r.status_code, "\n", r.url) soup = BeautifulSoup(r.text, "lxml") table_data = soup.select("body div.publish table")[0] conn, cur = conn_to_mysql() for row in table_data.find_all("tr"): boc_data = [] for col in row.find_all("td"): boc_data.append(col.text) print(tuple(boc_data)) if len(boc_data) == 7: sql = "INSERT INTO boc_data_2(cur_name, cur_id, tra_date, bid_price, off_price,mid_price, date) " \ "values('%s', '%s', '%s', '%s', '%s', '%s', '%s')" % tuple(boc_data) cur.execute(sql) else: pass conn.commit() conn.close() def get_all_page_data(pages): # Download multi page data start_url = "http://www.bankofchina.com/sourcedb/ffx/index.html" get_boc_data(start_url) for page in range(1, pages): new_url = "http://www.bankofchina.com/sourcedb/ffx/index_" + str(page) + ".html" get_boc_data(new_url) if __name__ == "__main__": get_all_page_data(4)

Reference link 2:

Solutions to the problems of Utf-8 decoding error and gzip compression in Python crawling web pages

https://zhuanlan.zhihu.com/p/25095566

When we use python3 to crawl some websites, we get the url of the webpage and parse it. When we use decode('utf-8 ') to decode, sometimes UTF-8 cannot decode. For example, the result will prompt:

Unicode Decode Error: 'utf8' codec can't decode byte 0xb2 in position 0: invalid start byte 'utf-8' codec can't decode byte 0x8b in position 1: invalid start byte

This is because some websites have gzip compression, the most typical is sina. This problem often occurs in web crawlers, so why compress? Sogou encyclopedia explains as follows:

GZIP coding over HTTP protocol is a technology used to improve the performance of WEB applications. WEB sites with large traffic often use GZIP compression technology to make users feel faster. This generally refers to a function installed in the WWW server. When someone comes to visit the website in this server, this function in the server compresses the WEB content and transmits it to the visiting computer browser for display. Generally, the plain text content can be compressed to 40% of the original size. In this way, the transmission will be fast, and the effect is that you will soon display it after clicking the website. Of course, this It will also increase the load of the server. Generally, this function module is installed in the server.

When we open a Sina Web page, right-click to select "check", and then the word "Request Headers" under "Network" All "Headers will be found:

Accept-Encoding:gzip, deflate, sdch

Some suggestions for this problem: "see if there is' accept encoding ':' gzip, deflate 'in the set header. If there is, delete it." , but sometimes the header does not exist. How to delete it? Here is an example of opening a Sina News page:

import urllib.request from bs4 import BeautifulSoup url='http://news.sina.com.cn/c/nd/2017-02-05/doc-ifyafcyw0237672.shtml' req = urllib.request.Request(url) req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.22 Safari/537.36 SE 2.X MetaSr 1.0') page = urllib.request.urlopen(req) # Log in as a browser txt = page.read().decode('utf-8') soup = BeautifulSoup(txt, 'lxml') title =soup.select('#artibodyTitle')[0].text print(title)

There will still be problems after run. After decode('utf-8 ') is removed, the resulting page is garbled. Therefore, the solution is not so.

There are two solutions: (1) decompress and decode the web page with gzip library; (2) parse the web page with requests library instead of urllib.

(1) The solution is: after the "txt = page.read()" page is read, add the following command:

txt=gzip.decompress(txt).decode('utf-8')

(2) The solution is:

import requests import gzip url="http://news.sina.com.cn/c/nd/2017-02-05/doc-ifyafcyw0237672.shtml" req = requests.get(url) req.encoding= 'utf-8'

This is the format set to "utf-8" for web pages, but here the simulated browser login needs to use this method:

headers = { 'Host': 'blog.csdn.net', 'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:43.0) Gecko/20100101 Firefox/43.0', 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8', 'Accept-Language': 'zh-CN,zh;q=0.8,en-US;q=0.5,en;q=0.3', .... }

Reference link 3: UnicodeDecodeError: 'utf-8' codec can't decode byte 0x8b in position 1: invalid start byte

https://blog.csdn.net/zhang_cl_cn/article/details/94575568

from urllib import request class Spilder(): #Fighting fish url url='https://www.douyu.com/' def __fetch_content(self): r = request.urlopen(Spilder.url) htmls = r.read() #Get bytecode (html) print(htmls) htmls = str(htmls, encoding='utf-8') print(htmls) def go(self): self.__fetch_content() spilder=Spilder() spilder.go()

First of all, we can see that the byte code output by the first print starts with "B '\ x1f\x8b\x08", indicating that it is gzip compressed data, which is also the cause of error reporting, so we need to decode the byte code we received. Amend to read:

url='https://www.douyu.com/' def __fetch_content(self): r = request.urlopen(Spilder.url) htmls = r.read() buff = BytesIO(htmls) f = gzip.GzipFile(fileobj=buff) htmls = f.read().decode('utf-8') print(htmls)