.

Chinese Email Classification Based on Naive Bayes algorithm

1. Principle of naive Bayesian algorithm

Bayesian Theory: calculate the probability of another event according to the probability of one occurrence.

.

simple logic: when using this algorithm for classification, calculate the probability that the unknown sample belongs to the known class, and then select the sample with the largest probability as the classification result,

Brief introduction: naive Bayesian classifier originated from classical mathematics theory, has a solid mathematical foundation and stable classification efficiency. Bayesian method is based on Bayesian theory, using the knowledge of probability and statistics to classify the sample data set, and the rate of misjudgment is very low. The characteristic of Bayesian method is to combine the prior probability and the posterior probability, which avoids the subjective bias of using only the prior probability and the over fitting phenomenon of using the sample information alone. When the data set is large, it shows high accuracy.

The naive Bayes method is based on the Bayes algorithm, which assumes that the attributes are independent of each other when the target value is given. The proportion of attribute variables is almost the same, which greatly simplifies the complexity of Bayesian method, but reduces the classification effect.

2. Project introduction

.

3. Project steps

(1) Collect enough spam and non spam content from email as training set.

(2) Read all training sets and delete interference characters, such as [] *. ,, and so on, then segmentation, and then delete the single word with the length of 1, such a single word has no contribution to text classification, the rest of the vocabulary is considered as effective vocabulary.

(3) Count the occurrence times of each effective vocabulary in all training sets, and intercept the first N (which can be adjusted according to the actual situation) with the most occurrence times.

(4) According to each spam and non spam content preprocessed in step 2, feature vectors are generated, and the frequency of N words in step 3 is counted. Each email corresponds to a feature vector, the length of which is N, and the value of each component represents the number of times the corresponding words appear in this email. For example, the feature vector [3, 0, 0, 5] indicates that the first word appears three times in this email, the second and third words do not appear, and the fourth word appears five times.

(5) According to the feature vector obtained in step 4 and the known e-mail classification, a naive Bayesian model is created and trained.

(6) Read the test mail, refer to step 2, preprocess the mail text, extract the feature vector.

(7) The trained model in step 5 is used to classify the mails according to the feature vectors extracted in step 6.

4. code

Import various libraries:

from re import sub from os import listdir from collections import Counter from itertools import chain from numpy import array from jieba import cut from sklearn.naive_bayes import MultinomialNB

get all valid words in each message:

def getWordsFromFile(txtFile):

# Get all words in each message

words = []

# All Notepad files that store the text content of messages are encoded in UTF8

with open(txtFile, encoding='utf8') as fp:

for line in fp:

# Traverses each line, removing white space characters at both ends

line = line.strip()

# Filter for interfering or invalid characters

line = sub(r'[.[]0-9,—. ,!~\*]', '', line)

# participle

line = cut(line)

# Filter words of length 1

line = filter(lambda word: len(word)>1, line)

# Add words from text preprocessing of this line to the words list

words.extend(line)

# Returns a list of all valid words in the current message text

return words

train and save results

# Store words in all documents

# Each element is a sublist that holds all the words in a file

allWords = []

def getTopNWords(topN):

# Process all Notepad documents in the current folder in the order of document number

# 151 emails in the training set, 0.txt to 126.txt are spam

# 127.txt to 150.txt are normal message contents

txtFiles = [str(i)+'.txt' for i in range(151)]

# Get all words in all messages in the training set

for txtFile in txtFiles:

allWords.append(getWordsFromFile(txtFile))

# Get and return the top n words with the most occurrences

freq = Counter(chain(*allWords))

return [w[0] for w in freq.most_common(topN)]

# The first 600 words with the largest number of times in all training sets

topWords = getTopNWords(600)

# Get the feature vector, how often each word of the first 600 words appears in each message

vectors = []

for words in allWords:

temp = list(map(lambda x: words.count(x), topWords))

vectors.append(temp)

vectors = array(vectors)

# Label of each message in training set, 1 for spam, 0 for normal message

labels = array([1]*127 + [0]*24)

# Create models and train with known training sets

model = MultinomialNB()

model.fit(vectors, labels)

# Here is the save result

joblib.dump(model, "Spam classifier.pkl")

print('Save the model and training results successfully.')

with open('topWords.txt', 'w', encoding='utf8') as fp:

fp.write(','.join(topWords))

print('Preservation topWords Success.')

load and use training results

model = joblib.load("Spam classifier.pkl")

print('Loading the model and training results were successful.')

with open('topWords.txt', encoding='utf8') as fp:

topWords = fp.read().split(',')

def predict(txtFile):

# Get the content of the specified mail file and return the classification result

words = getWordsFromFile(txtFile)

currentVector = array(tuple(map(lambda x: words.count(x),

topWords)))

result = model.predict(currentVector.reshape(1, -1))[0]

return 'Junk mail' if result==1 else 'Normal mail'

# 151.txt to 155.txt are the contents of the test message

for mail in ('%d.txt'%i for i in range(151, 156)):

print(mail, predict(mail), sep=':')

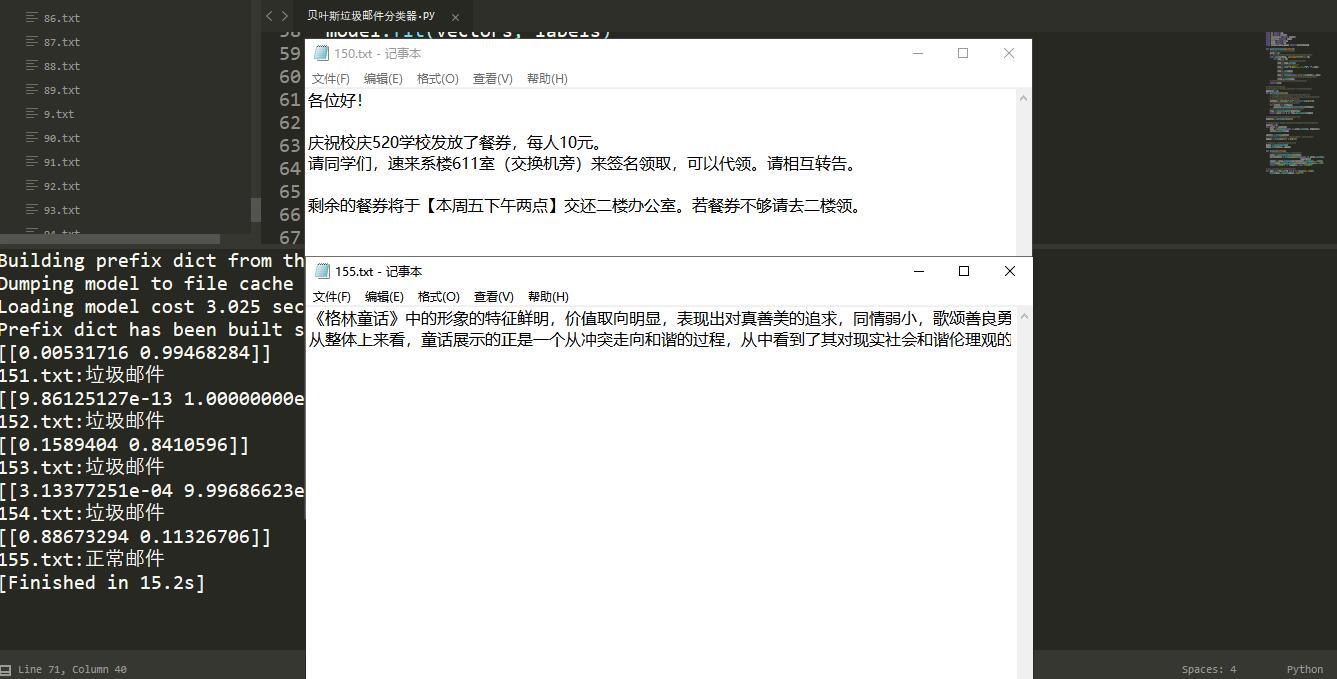

5. results