Python deep learning (2) -- RNN and its advanced model implementation

0.Training and prediction function

    import math
    import time

    import torch
    from torch import nn
    import d2lzh_pytorch as d2l  # assumed import name of the d2lzh pytorch package

    # to_onehot, grad_clipping and predict_rnn are assumed to be defined earlier.

    def train_and_predict_rnn(rnn, get_params, init_rnn_state, num_hiddens,
                              vocab_size, device, corpus_indices, idx_to_char,
                              char_to_idx, is_random_iter, num_epochs, num_steps,
                              lr, clipping_theta, batch_size, pred_period,
                              pred_len, prefixes):
        if is_random_iter:
            data_iter_fn = d2l.data_iter_random
        else:
            data_iter_fn = d2l.data_iter_consecutive
        params = get_params()
        loss = nn.CrossEntropyLoss()

        for epoch in range(num_epochs):
            if not is_random_iter:
                # With consecutive sampling, initialize the hidden state at the start of each epoch
                state = init_rnn_state(batch_size, num_hiddens, device)
            l_sum, n, start = 0.0, 0, time.time()
            data_iter = data_iter_fn(corpus_indices, batch_size, num_steps, device)
            for X, Y in data_iter:
                if is_random_iter:
                    # With random sampling, initialize the hidden state before each mini-batch update
                    state = init_rnn_state(batch_size, num_hiddens, device)
                else:
                    # Otherwise, detach the hidden state from the computation graph so that
                    # the gradient only depends on the mini-batch sequence read in this
                    # iteration (this keeps the cost of the gradient computation bounded)
                    for s in state:
                        s.detach_()

                inputs = to_onehot(X, vocab_size)
                # outputs is a list of num_steps matrices of shape (batch_size, vocab_size)
                (outputs, state) = rnn(inputs, state, params)
                # After concatenation, the shape is (num_steps * batch_size, vocab_size)
                outputs = torch.cat(outputs, dim=0)
                # Y has shape (batch_size, num_steps). Transposing and flattening it gives
                # a vector of length batch_size * num_steps whose entries line up
                # one-to-one with the rows of outputs
                y = torch.transpose(Y, 0, 1).contiguous().view(-1)
                # Use the cross-entropy loss to compute the average classification error
                l = loss(outputs, y.long())

                # Zero the gradients
                if params[0].grad is not None:
                    for param in params:
                        param.grad.data.zero_()
                l.backward()
                grad_clipping(params, clipping_theta, device)  # clip the gradients
                d2l.sgd(params, lr, 1)  # the loss is already averaged, so the gradient is not averaged again
                l_sum += l.item() * y.shape[0]
                n += y.shape[0]

            if (epoch + 1) % pred_period == 0:
                print('epoch %d, perplexity %f, time %.2f sec' % (
                    epoch + 1, math.exp(l_sum / n), time.time() - start))
                for prefix in prefixes:
                    print(' -', predict_rnn(prefix, pred_len, rnn, params, init_rnn_state,
                                            num_hiddens, vocab_size, device, idx_to_char, char_to_idx))
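The training loop calls grad_clipping without showing its body. A common way to clip gradients is to rescale all of them together so that their global L2 norm does not exceed a threshold. The sketch below illustrates that idea on plain Python lists so it can run without PyTorch. The name clip_by_global_norm and the list-based interface are assumptions for illustration only; the actual helper works on parameter tensors and may differ in detail.

```python
import math

def clip_by_global_norm(grads, theta):
    """Return gradients rescaled so their global L2 norm is at most theta.

    grads is a list of gradient vectors (one list of floats per parameter).
    Hypothetical helper, not the grad_clipping used in the training loop.
    """
    norm = math.sqrt(sum(g * g for grad in grads for g in grad))
    if norm <= theta:
        return [list(grad) for grad in grads]  # already small enough
    scale = theta / norm
    return [[g * scale for g in grad] for grad in grads]

# Two "parameters" whose gradients have global norm sqrt(3^2 + 4^2) = 5.
grads = [[3.0], [4.0]]
clipped = clip_by_global_norm(grads, theta=1.0)
clipped_norm = math.sqrt(sum(g * g for grad in clipped for g in grad))
print(clipped, clipped_norm)
```

Because every gradient is multiplied by the same factor, the update direction is preserved and only its length is capped.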

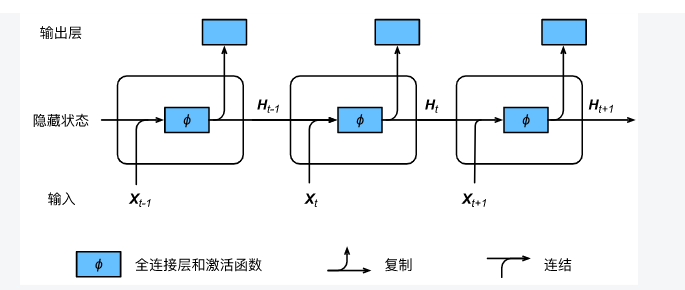

1.RNN implementation

The nn module in PyTorch provides implementations of recurrent neural networks. Next, we construct an RNN layer with a single hidden layer and 256 hidden units.

    num_hiddens = 256
    # rnn_layer = nn.LSTM(input_size=vocab_size, hidden_size=num_hiddens)  # tested
    rnn_layer = nn.RNN(input_size=vocab_size, hidden_size=num_hiddens)

Next, we subclass nn.Module to define a complete recurrent neural network. It first represents the input data as one-hot vectors and feeds them into the RNN layer, then uses a fully connected output layer to produce the output. The number of outputs equals the vocabulary size, vocab_size.

    # This class is saved in the d2lzh_pytorch package for later use
    class RNNModel(nn.Module):
        def __init__(self, rnn_layer, vocab_size):
            super(RNNModel, self).__init__()
            self.rnn = rnn_layer
            self.hidden_size = rnn_layer.hidden_size * (2 if rnn_layer.bidirectional else 1)
            self.vocab_size = vocab_size
            self.dense = nn.Linear(self.hidden_size, vocab_size)
            self.state = None

        def forward(self, inputs, state):  # inputs: (batch, seq_len)
            # Get the one-hot vector representation
            X = d2l.to_onehot(inputs, self.vocab_size)  # X is a list
            Y, self.state = self.rnn(torch.stack(X), state)
            # The fully connected layer first reshapes Y to (num_steps * batch_size, num_hiddens);
            # its output has shape (num_steps * batch_size, vocab_size)
            output = self.dense(Y.view(-1, Y.shape[-1]))
            return output, self.state

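    # The original snippet uses `model` without defining it; this line is an
    # assumption that mirrors the construction used in the later sections.
    model = RNNModel(rnn_layer, vocab_size).to(device)

    # num_steps is assumed to be defined earlier (the later sections use 35).
    num_epochs, batch_size, lr, clipping_theta = 250, 32, 1e-3, 1e-2  # pay attention to the learning rate here
    pred_period, pred_len, prefixes = 50, 50, ['Separate', 'No separation']
    train_and_predict_rnn_pytorch(model, num_hiddens, vocab_size, device,
                                  corpus_indices, idx_to_char, char_to_idx,
                                  num_epochs, num_steps, lr, clipping_theta,
                                  batch_size, pred_period, pred_len, prefixes)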

Output:

    epoch 50, perplexity 10.658418, time 0.05 sec
     - From the beginning, my mother wants you. I don't want to let me know. My mother, I can't think anymore. I don't want to think anymore. I don't want to think anymore. I don't want to think anymore
     - No, I want you no, I don't want you, I don't want you to let my heart know that my mother is lovely, and that bad woman makes me crazy, and that bad woman makes me crazy
    epoch 100, perplexity 1.308539, time 0.05 sec
     - It won't hurt to be separated. Don't you start with black humor. The dream of beautiful whole face flickers into memories of hurting beauty. Your perfectionism makes me completely
     - It's not that I don't want to think about you anymore. I can't hold your hand like this. I can't let go of love. It's just that I don't hurt you. You're on my shoulder
    epoch 150, perplexity 1.070370, time 0.05 sec
     - You can't go to Song Mountain in Henan Province to learn Shaolin and Wudang. Use the double staff to hum and ha. Use the double staff to hum and ha. Remember that the benevolent is invincible
     - I'll figure out if it's all started, if it's not, if it's not, if it's not, everyone's watching me today, a girl in autumn soft language, walking slowly through the old Bund and disappearing
    epoch 200, perplexity 1.034663, time 0.05 sec
     - Can't you leave? Jay Chou wants you to break up in a tragedy. My perfectionism is too complete. It's like verbal violence. I can't help it
     - Don't separate, let me face you. Love comes too fast. It's like a tornado. It can't escape without a storm. I can't think anymore. I can't think anymore. No, I don't. no, I don't
    epoch 250, perplexity 1.021437, time 0.05 sec
     - Apart from my home, you know what I love and don't look at. I want to come back again. I don't want to be obsessed with what I've written. I only have memories to tell you to take you and you
     - I don't want to be separated. I want to be with you. No, I can't help it. I don't want to know that you're gone. I don't know that I'm following the rhythm
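The perplexity values in the log come from math.exp(l_sum / n) in the training function, that is, the exponential of the average per-character cross-entropy. The small sketch below, using hypothetical numbers rather than values from this experiment, shows the two reference points that make the log easier to read: a model that guesses uniformly has a perplexity equal to the vocabulary size, and a model that always predicts the correct character has a perplexity of 1.

```python
import math

def perplexity(loss_sum, num_tokens):
    """Exponential of the average per-token cross-entropy (natural log)."""
    return math.exp(loss_sum / num_tokens)

vocab_size = 1000  # hypothetical vocabulary size
num_tokens = 50    # hypothetical number of predicted characters

# A uniform guess assigns probability 1/vocab_size, so each token costs log(vocab_size).
uniform = perplexity(num_tokens * math.log(vocab_size), num_tokens)
# A perfect prediction assigns probability 1, so each token costs 0.
perfect = perplexity(0.0, num_tokens)

print(round(uniform), perfect)
```

Read this way, a perplexity close to 1 on the training text means the model has nearly memorized it.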

Summary of RNN

- The nn module of PyTorch provides implementations of recurrent neural network layers.

- After the forward computation, an nn.RNN instance returns both the output and the hidden state. This forward computation does not include the output layer.
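The points above can be checked with a small shape experiment. The sketch below uses toy sizes and random indices (all assumptions for illustration, not the lyrics data set) and uses torch.nn.functional.one_hot as a stand-in for the one-hot helper. It shows that nn.RNN returns hidden-layer outputs for every time step plus the final hidden state, and that a separate nn.Linear is needed to produce scores over the vocabulary.

```python
import torch
from torch import nn
import torch.nn.functional as F

# Toy sizes, chosen only for illustration.
vocab_size, num_hiddens = 10, 16
num_steps, batch_size = 5, 2

rnn_layer = nn.RNN(input_size=vocab_size, hidden_size=num_hiddens)
dense = nn.Linear(num_hiddens, vocab_size)  # the output layer is separate

# Random character indices with shape (batch_size, num_steps).
inputs = torch.randint(0, vocab_size, (batch_size, num_steps))
# One-hot encode with time on the first axis: (num_steps, batch_size, vocab_size).
X = F.one_hot(inputs.t(), vocab_size).float()

Y, state = rnn_layer(X, None)               # hidden outputs and final hidden state
logits = dense(Y.reshape(-1, Y.shape[-1]))  # (num_steps * batch_size, vocab_size)

print(Y.shape, state.shape, logits.shape)
```

Y holds one hidden vector per time step and sample, while state holds only the last time step for each layer.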

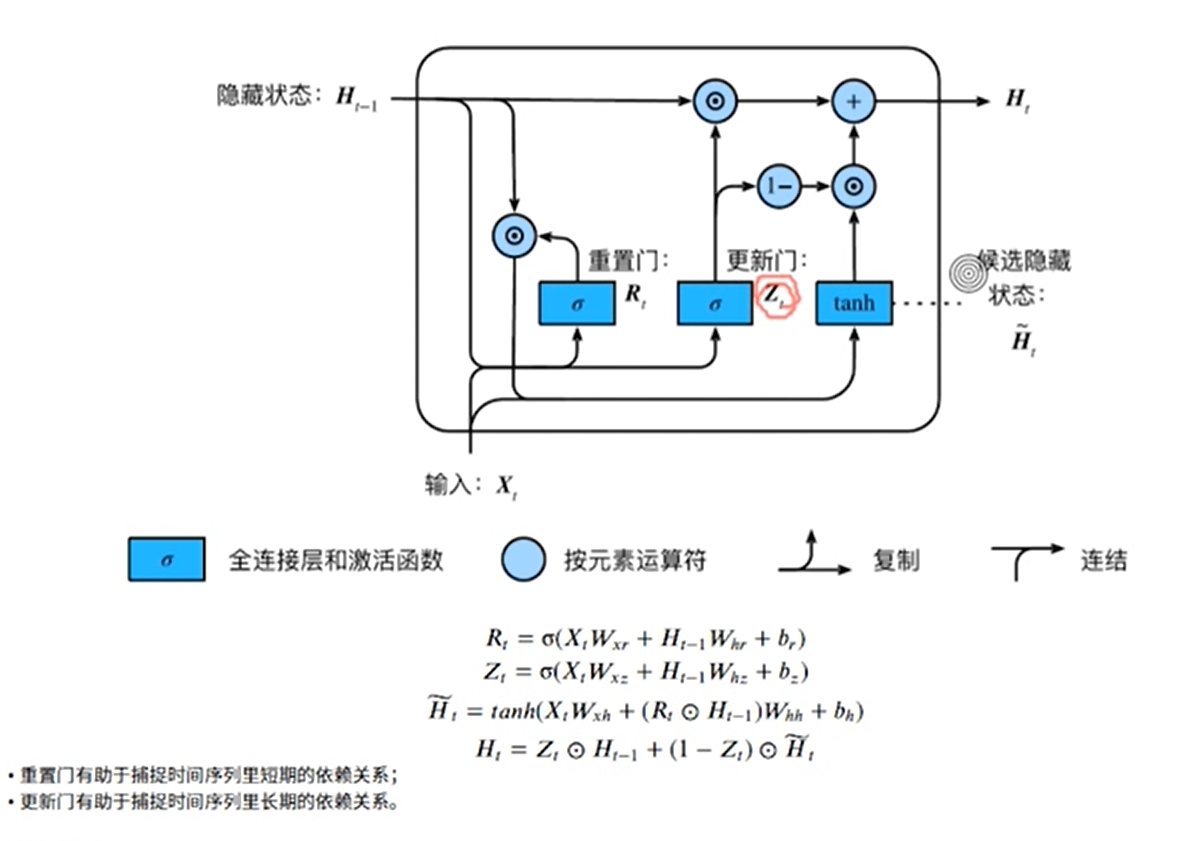

2.GRU implementation

In PyTorch, we can directly use the GRU class in the nn module.

    num_hiddens = 256
    num_epochs, num_steps, batch_size, lr, clipping_theta = 160, 35, 32, 1e2, 1e-2
    pred_period, pred_len, prefixes = 40, 50, ['Separate', 'No separation']

    lr = 1e-2  # pay attention to adjusting the learning rate (overrides the 1e2 above)
    gru_layer = nn.GRU(input_size=vocab_size, hidden_size=num_hiddens)
    model = d2l.RNNModel(gru_layer, vocab_size).to(device)
    d2l.train_and_predict_rnn_pytorch(model, num_hiddens, vocab_size, device,
                                      corpus_indices, idx_to_char, char_to_idx,
                                      num_epochs, num_steps, lr, clipping_theta,
                                      batch_size, pred_period, pred_len, prefixes)

Output:

    epoch 40, perplexity 1.022157, time 1.02 sec
     - Hand in hand, step by step, step by step, step by step, look at the sky, see the stars, one by one, two, three, four in a line, back to back, silently promise to see if the stars in the distance are listening
     - I can't think about it anymore. I can't think about it anymore. I can't think about it anymore. No, I can't love it. It's like a tornado can't bear it. I have nowhere to go
    epoch 80, perplexity 1.014535, time 1.04 sec
     - I began to imagine my father and mother talking about Wunong's soft words as they walked slowly through the memory road of 1943 when the old time disappeared on the Bund
     - It's like you're gone before you know it. I'm following this rhythm. I'll be fine after another autumn
    epoch 120, perplexity 1.147843, time 1.04 sec
     - It's all up to me. You're holding the ball, you're not shooting, and you're not covering. I'm blind to choose your teammates. I'm telling you how to keep the score. All the memories are attacking me
     - No matter how annoying I am, no matter how dangerous the grass is. A dream. I'll face it. I'm full of anger. I want to beat you for a long time. Don't try to hide from you
    epoch 160, perplexity 1.018370, time 1.05 sec
     - The tragedy of falling in love with you is the perfect performance for you. I would rather cry with heartbreak and forget that you loved me. The evidence makes crystal tears flash
     - I start to worry about your life today. The whole picture is that you can't sleep as you want. It's cute and fragrant on you

Summary of GRU

- Gated recurrent neural networks can better capture dependencies that span large time-step distances in time series.

- The gated recurrent unit (GRU) introduces gates that change how the hidden state of a recurrent neural network is computed. It consists of a reset gate, an update gate, a candidate hidden state, and a hidden state.

- Reset gates help to capture short-term dependencies in time series.

- Update gates help to capture long-term dependencies in time series.
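For reference, the four quantities named in the summary are commonly written as follows. This is a sketch in one widely used notation, and every symbol here is an assumption rather than something defined in this article: $X_t$ is the mini-batch input at time step $t$, $H_{t-1}$ the previous hidden state, $\sigma$ the sigmoid function, and $\odot$ element-wise multiplication. Library implementations such as nn.GRU may arrange the terms slightly differently.

```latex
\begin{aligned}
R_t &= \sigma(X_t W_{xr} + H_{t-1} W_{hr} + b_r) && \text{(reset gate)} \\
Z_t &= \sigma(X_t W_{xz} + H_{t-1} W_{hz} + b_z) && \text{(update gate)} \\
\tilde{H}_t &= \tanh\big(X_t W_{xh} + (R_t \odot H_{t-1}) W_{hh} + b_h\big) && \text{(candidate hidden state)} \\
H_t &= Z_t \odot H_{t-1} + (1 - Z_t) \odot \tilde{H}_t && \text{(hidden state)}
\end{aligned}
```

When $R_t$ is close to 0, the candidate state ignores the old hidden state, which suits short-term dependencies. When $Z_t$ is close to 1, the old hidden state is carried forward almost unchanged, which suits long-term dependencies.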

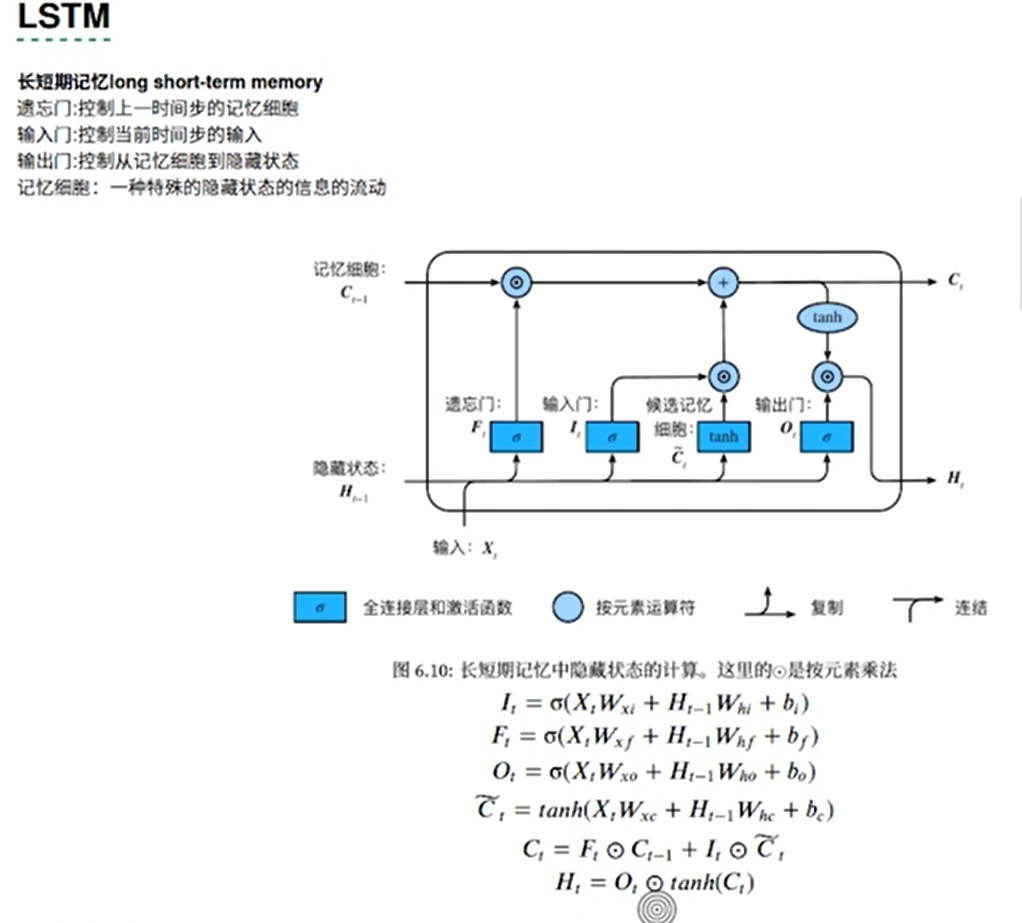

3.LSTM implementation

In PyTorch, we can directly use the LSTM class in the nn module.

    num_hiddens = 256
    num_epochs, num_steps, batch_size, lr, clipping_theta = 160, 35, 32, 1e2, 1e-2
    pred_period, pred_len, prefixes = 40, 50, ['Separate', 'No separation']

    lr = 1e-2  # pay attention to adjusting the learning rate (overrides the 1e2 above)
    lstm_layer = nn.LSTM(input_size=vocab_size, hidden_size=num_hiddens)
    model = d2l.RNNModel(lstm_layer, vocab_size)
    d2l.train_and_predict_rnn_pytorch(model, num_hiddens, vocab_size, device,
                                      corpus_indices, idx_to_char, char_to_idx,
                                      num_epochs, num_steps, lr, clipping_theta,
                                      batch_size, pred_period, pred_len, prefixes)

Output:

    epoch 40, perplexity 1.020401, time 1.54 sec
     - I started to think that mom and I must be my mom. Because before the break-up, I was so sorry. The clock moved back from the opposite direction
     - Start to imagine mom and me. I'll close my loneliness. I'll stay here for no date. Then I'll review the past and let me fall in love with your tragedy
    epoch 80, perplexity 1.011164, time 1.34 sec
     - From now on, I want to take care of the lovely women who used to love me tenderly. The lovely women who transparently moved me. The lovely women who are bad make me crazy
     - I'm full of lovely women who make me crazy, beautiful, blushing and tender, lovely women who make me sad, transparent and touching
    epoch 120, perplexity 1.025348, time 1.39 sec
     - Start to cross every day, hand in hand, step by step, step by step, step by step, look at the stars, one star, two stars, three stars, four stars in a line, back to back, silent, tacit wish to see
     - I don't know how his smile is different. In your heart, I'm no longer favored. Is my sky rain, wind or rainbow? Are you manipulating
    epoch 160, perplexity 1.017492, time 1.42 sec
     - Apart from Shixiang, I believe in fate. Thanks to the gravity, I can meet you. You are beautiful. You make me blush. You are lovely. You are tender. You are lovely. You are transparent
     - I can't think about it anymore. No, I can't love. It's like a tornado can't bear it. I have nowhere to hide. I don't want to think about it anymore

Summary of LSTM

- The hidden-layer output of a long short-term memory (LSTM) network includes the hidden state and the memory cell. Only the hidden state is passed to the output layer.

- The input gate, forget gate, and output gate of an LSTM control the flow of information.

- LSTM can mitigate the vanishing-gradient problem in recurrent neural networks and better capture dependencies that span large time-step distances in time series.
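The gates and the memory cell in the summary are commonly written as follows. As with any such sketch, the notation is an assumption and not taken from this article: $X_t$ is the mini-batch input at time step $t$, $H_{t-1}$ and $C_{t-1}$ are the previous hidden state and memory cell, $\sigma$ is the sigmoid function, and $\odot$ is element-wise multiplication.

```latex
\begin{aligned}
I_t &= \sigma(X_t W_{xi} + H_{t-1} W_{hi} + b_i) && \text{(input gate)} \\
F_t &= \sigma(X_t W_{xf} + H_{t-1} W_{hf} + b_f) && \text{(forget gate)} \\
O_t &= \sigma(X_t W_{xo} + H_{t-1} W_{ho} + b_o) && \text{(output gate)} \\
\tilde{C}_t &= \tanh(X_t W_{xc} + H_{t-1} W_{hc} + b_c) && \text{(candidate memory cell)} \\
C_t &= F_t \odot C_{t-1} + I_t \odot \tilde{C}_t && \text{(memory cell)} \\
H_t &= O_t \odot \tanh(C_t) && \text{(hidden state)}
\end{aligned}
```

The additive update of $C_t$ is what lets information, and gradients, pass across many time steps when the forget gate stays close to 1.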

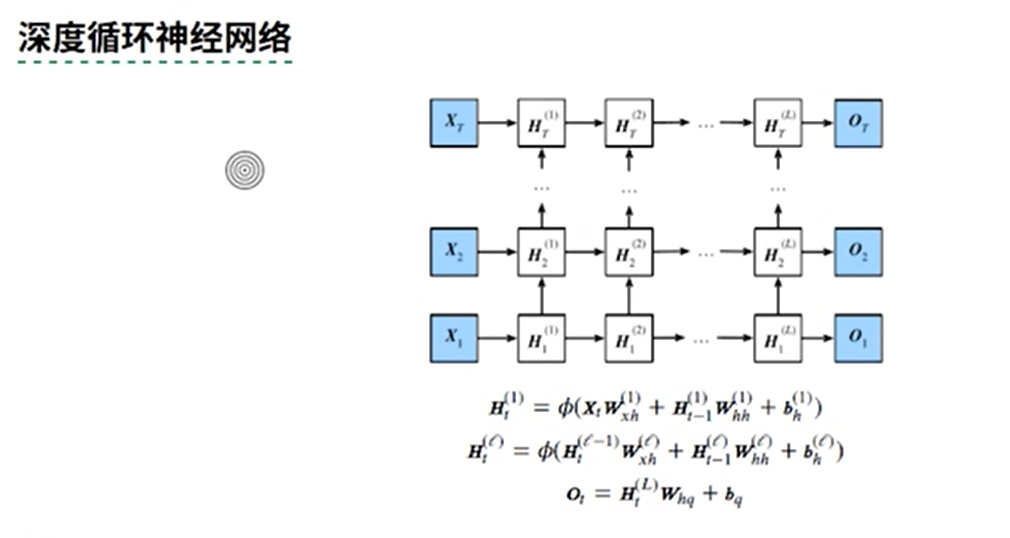

4.Deep-RNN

Add the parameter num_layers=2.

    num_hiddens = 256
    num_epochs, num_steps, batch_size, lr, clipping_theta = 160, 35, 32, 1e2, 1e-2
    pred_period, pred_len, prefixes = 40, 50, ['Separate', 'No separation']

    lr = 1e-2  # pay attention to adjusting the learning rate (overrides the 1e2 above)
    # Renamed from gru_layer: the layer created here is an LSTM with two stacked layers
    lstm_layer = nn.LSTM(input_size=vocab_size, hidden_size=num_hiddens, num_layers=2)
    model = d2l.RNNModel(lstm_layer, vocab_size).to(device)
    d2l.train_and_predict_rnn_pytorch(model, num_hiddens, vocab_size, device,
                                      corpus_indices, idx_to_char, char_to_idx,
                                      num_epochs, num_steps, lr, clipping_theta,
                                      batch_size, pred_period, pred_len, prefixes)

Output:

    epoch 40, perplexity 12.840496, time 1.52 sec
     - If I miss you, I'm thinking about how you hurt my daughter. I think you and I have to miss you. You want me to sink my daughter. I think you want me to sink my daughter
     - Not separated by the love of women bad bad let me crazy lovely women bad let me crazy lovely women bad bad let me crazy lovely women bad bad bad let me
    epoch 80, perplexity 1.247634, time 1.52 sec
     - There's a hot rattlesnake lying powerless in the dry river waiting for the rainy season to come. The swamp turns grey wolf gnaws at the bone of the water deer. The vulture hovers and stares at it
     - I will not separate from you. I will let you fly. I will walk around the nature and meet the wind. I will hold hands every day
    epoch 120, perplexity 1.021974, time 1.56 sec
     - How important I am to separate my mother. I regret that I didn't let you know. Listen to you quietly. Watch you sleep until you are too old to open your mouth. Let her know that's it
     - I don't want to be part of the club. I don't want to be part of it. I don't want to be part of it. I don't want to be part of it
    epoch 160, perplexity 1.016324, time 1.59 sec
     - No one left the old time in 1943. Time became better on the way of memory. The old neighborhood and the small alley belong to the white wall and black of that era
     - I don't want to separate. I want to hit my mother like this again. Won't your hand hurt? Don't hit my mother like this again? Won't your hand hurt? Don't do it again
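To see what num_layers=2 changes, it can help to look at the shapes a stacked layer returns. The sketch below uses toy sizes and random inputs in place of one-hot vectors (assumptions for illustration only). The per-step output still has one hidden vector per time step, taken from the top layer, while the returned state now carries one slice per layer.

```python
import torch
from torch import nn

# Toy sizes, chosen only for illustration.
vocab_size, num_hiddens, num_steps, batch_size = 10, 16, 5, 2

deep_lstm = nn.LSTM(input_size=vocab_size, hidden_size=num_hiddens, num_layers=2)
X = torch.randn(num_steps, batch_size, vocab_size)  # stand-in for one-hot inputs

Y, (h_n, c_n) = deep_lstm(X)

print(Y.shape)    # outputs of the top layer for every time step
print(h_n.shape)  # final hidden state, one slice per layer
print(c_n.shape)  # final memory cell, one slice per layer
```

Since the last dimension of Y is unchanged, the same fully connected output layer can be reused for a deeper network.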

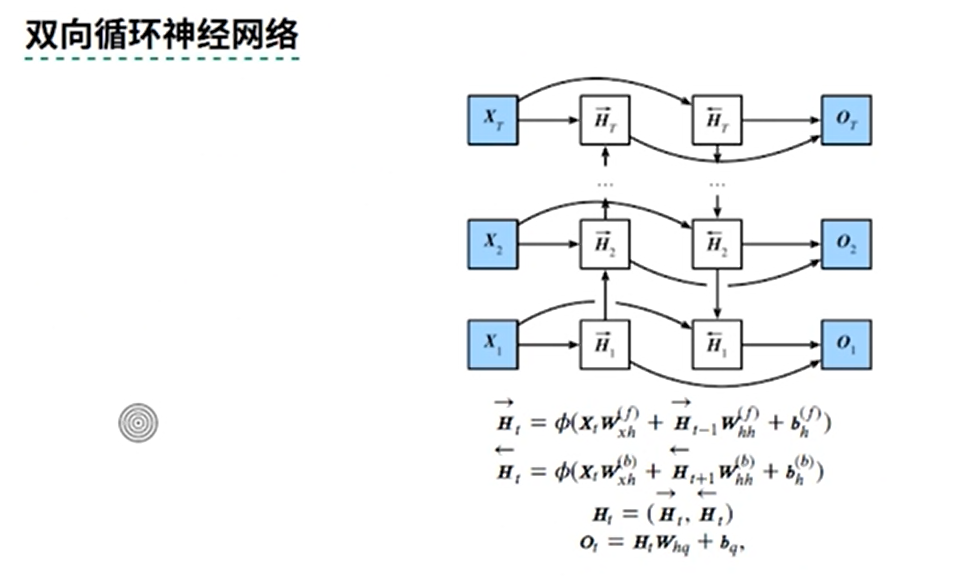

5.Bi-RNN implementation

Add the parameter bidirectional=True.

    num_hiddens = 128
    num_epochs, num_steps, batch_size, lr, clipping_theta = 160, 35, 32, 1e-2, 1e-2
    pred_period, pred_len, prefixes = 40, 50, ['Separate', 'No separation']

    lr = 1e-2  # pay attention to adjusting the learning rate
    gru_layer = nn.GRU(input_size=vocab_size, hidden_size=num_hiddens, bidirectional=True)
    model = d2l.RNNModel(gru_layer, vocab_size).to(device)
    d2l.train_and_predict_rnn_pytorch(model, num_hiddens, vocab_size, device,
                                      corpus_indices, idx_to_char, char_to_idx,
                                      num_epochs, num_steps, lr, clipping_theta,
                                      batch_size, pred_period, pred_len, prefixes)

Output:

    epoch 40, perplexity 1.001741, time 0.91 sec
     - Minute start start start start start start start start start start start start start start start start start start start start start start start
     - Start start start start start start start start start start start start start start start start start start start start start start
    epoch 80, perplexity 1.000520, time 0.91 sec
     - Minute start start start start start start start start start start start start start start start start start start start start start start start
     - Start start start start start start start start start start start start start start start start start start start start start start
    epoch 120, perplexity 1.000255, time 0.99 sec
     - Minute start start start start start start start start start start start start start start start start start start start start start start start
     - No kickoff, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball
    epoch 160, perplexity 1.000151, time 0.92 sec
     - Minute start start start start start start start start start start start start start start start start start start start start start start start
     - No kickoff, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball, I play my ball
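With bidirectional=True, the layer concatenates the forward and backward hidden vectors at each time step, which is why RNNModel doubles hidden_size for bidirectional layers. The sketch below checks this with toy sizes and random inputs (assumptions for illustration only).

```python
import torch
from torch import nn

# Toy sizes, chosen only for illustration.
vocab_size, num_hiddens, num_steps, batch_size = 10, 16, 5, 2

bi_gru = nn.GRU(input_size=vocab_size, hidden_size=num_hiddens, bidirectional=True)
X = torch.randn(num_steps, batch_size, vocab_size)  # stand-in for one-hot inputs

Y, h_n = bi_gru(X)

# Same rule as in RNNModel: the output layer must accept both directions.
out_features = bi_gru.hidden_size * (2 if bi_gru.bidirectional else 1)
dense = nn.Linear(out_features, vocab_size)
logits = dense(Y.reshape(-1, Y.shape[-1]))

print(Y.shape, h_n.shape, logits.shape)
```

Each row of Y combines a summary of the past (forward direction) with a summary of the future (backward direction) of the same sequence.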