Brief introduction

The wallpaper you choose says a lot about the inner world of the person at the computer. Some people like landscapes, some like celebrities, some like beautiful women, and others like animals. Sooner or later, though, aesthetic fatigue sets in, and when you decide to change your wallpaper you find that the images on the Internet are either low-resolution or watermarked.

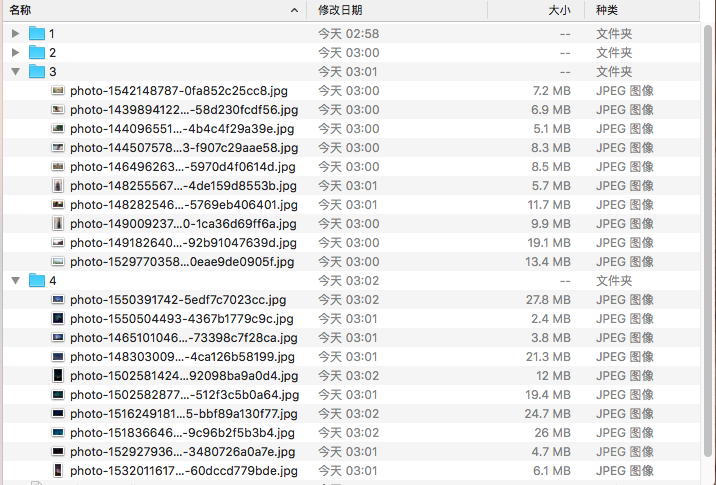

Demo pictures

Complete source code

```python
# -*- coding: utf-8 -*-

from contextlib import closing
from os import makedirs, rename
from os.path import join
from urllib.parse import urlparse

from filetype import guess  # third-party: pip install filetype
from requests import get    # third-party: pip install requests

# Browser-like User-Agent so the API treats the script like a normal client
HEADERS = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36"}

# Column IDs of the four wallpaper categories: Latest 1, Hottest 2, Girls 3, Stars (celebrities) 4
COLUMN_IDS = {
    1: '5c68ffb9463b7fbfe72b0db0',
    2: '5c69251c9b1c011c41bb97be',
    3: '5c81087e6aee28c541eefc26',
    4: '5c81f64c96fad8fe211f5367',
}


# File downloader: streams one image to disk and prints a progress bar
def Down_load(file_url, file_full_name, now_photo_count, all_photo_count):
    with closing(get(file_url, headers=HEADERS, stream=True)) as response:
        response.raise_for_status()
        chunk_size = 1024  # Bytes read per iteration
        content_size = int(response.headers.get('content-length', 0))  # Total size (0 if the server omits it)
        data_count = 0  # Bytes written so far
        with open(file_full_name, "wb") as file:
            for data in response.iter_content(chunk_size=chunk_size):
                file.write(data)
                data_count += len(data)  # Update the counter before drawing the bar
                if content_size:
                    done_block = int(data_count / content_size * 50)  # Filled cells of a 50-cell bar
                    now_jd = data_count / content_size * 100          # Percentage done
                    print("\r %s: [%s%s] %d%% %d/%d" % (file_full_name, done_block * '█', ' ' * (50 - done_block), now_jd, now_photo_count, all_photo_count), end=" ")
                else:
                    # Size unknown: show only how many bytes have arrived
                    print("\r %s: %d bytes %d/%d" % (file_full_name, data_count, now_photo_count, all_photo_count), end=" ")

    # The file was saved without an extension: detect the real type from its contents and append it
    file_type = guess(file_full_name)
    if file_type is not None:
        rename(file_full_name, file_full_name + '.' + file_type.extension)


# Crawl pictures of one category
def crawler_photo(type_id, photo_count):
    # Build the API URL for the chosen category
    url = ('https://service.paper.meiyuan.in/api/v2/columns/flow/'
           + COLUMN_IDS[type_id] + '?page=1&per_page=' + str(photo_count))

    # Fetch the picture list (the code expects a JSON array of photo objects)
    respond = get(url, headers=HEADERS)
    respond.raise_for_status()
    photo_data = respond.json()

    # Number of photos downloaded so far, and number of photos in total
    now_photo_count = 1
    all_photo_count = len(photo_data)

    # Create a folder named after the category to store the downloads
    folder = './' + str(type_id)
    makedirs(folder, exist_ok=True)

    # Download and save each 5K wallpaper
    for photo in photo_data:
        # Link to the full-resolution ("raw") image
        file_url = photo['urls']['raw']

        # Image name without extension: last path segment, ignoring any query string
        file_name_only = urlparse(file_url).path.rsplit('/', 1)[-1]

        # Complete local path
        file_full_name = join(folder, file_name_only)

        Down_load(file_url, file_full_name, now_photo_count, all_photo_count)
        now_photo_count += 1


if __name__ == '__main__':
    prompt_type = ("Wallpaper types: Latest 1, Hottest 2, Girls 3, Stars 4\n"
                   "Enter a number to choose the 5K ultra-HD wallpaper type: ")
    prompt_count = "Enter the number of 5K ultra-HD wallpapers to download: "

    while True:
        print('\n\n')

        # Choose the wallpaper type; ask again until the input is 1, 2, 3 or 4
        wall_paper_id = input(prompt_type).strip()
        while wall_paper_id not in ('1', '2', '3', '4'):
            wall_paper_id = input(prompt_type).strip()

        # Choose how many wallpapers to download; ask again until it is a positive integer
        wall_paper_count = input(prompt_count).strip()
        while not wall_paper_count.isdecimal() or int(wall_paper_count) <= 0:
            wall_paper_count = input(prompt_count).strip()

        # Start crawling
        print("Downloading 5K ultra-HD wallpapers, please wait...")
        crawler_photo(int(wall_paper_id), int(wall_paper_count))
        print('\nDownload of 5K HD wallpapers complete!')
```
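The progress line printed inside `Down_load` comes down to a little arithmetic on the bytes received versus the `Content-Length` header. A minimal sketch of that calculation as a standalone, easily testable function (`render_progress` is a hypothetical helper, not part of the script above); it uses integer division to avoid floating-point rounding and clamps in case the server under-reports the size:

```python
def render_progress(received: int, total: int, width: int = 50) -> str:
    """Return a text progress bar such as '[#####     ] 50%', drawn with '█'.

    received: bytes written so far
    total:    expected size from the Content-Length header (0 if unknown)
    width:    number of cells between the brackets
    """
    if total <= 0:
        # Unknown size: no fraction can be computed, so show only the byte count.
        return f"[{'?' * width}] {received} bytes"
    received = min(received, total)        # never draw more than a full bar
    filled = received * width // total    # number of filled cells
    percent = received * 100 // total     # whole-number percentage
    return f"[{'█' * filled}{' ' * (width - filled)}] {percent}%"
```

Inside the download loop it could replace the inline formatting with something like `print("\r" + render_progress(data_count, content_size), end="")`, called after `data_count` has been updated.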
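Because each image is first saved without an extension, `Down_load` relies on `filetype.guess`, which identifies a file from the "magic number" signature at the start of its contents rather than from its name. A simplified, standard-library-only sketch of the same idea for a few common image formats; the function names `sniff_image_extension` and `extension_of_file` and the short format list are illustrative assumptions, and the real `filetype` package recognizes many more types:

```python
from typing import Optional

# Leading byte signatures ("magic numbers") of a few common image formats.
SIGNATURES = [
    (b"\xff\xd8\xff", "jpg"),
    (b"\x89PNG\r\n\x1a\n", "png"),
    (b"GIF87a", "gif"),
    (b"GIF89a", "gif"),
]


def sniff_image_extension(header: bytes) -> Optional[str]:
    """Guess an image extension from the first bytes of a file; None if unrecognized."""
    for magic, ext in SIGNATURES:
        if header.startswith(magic):
            return ext
    # WebP is a RIFF container whose format tag at byte offset 8 is "WEBP".
    if header[:4] == b"RIFF" and header[8:12] == b"WEBP":
        return "webp"
    return None


def extension_of_file(path: str) -> Optional[str]:
    """Read only the first 16 bytes of the file, enough for the signatures above."""
    with open(path, "rb") as f:
        return sniff_image_extension(f.read(16))
```

Checking content instead of trusting a URL or file name is what lets the script name files correctly even when the download link carries no extension at all.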