I. Crawling Contents and Websites

1. We crawled the disease information on the 99 Health Network, including each disease's name, its treating department, and its physical signs and symptoms.

2. The website offers two ways to look up a specific disease: by department and by body part (location).

(1) Search by department: Department - Sub-department - Diseases

(2) Search by body part: Body part - Diseases

The path when searching by body part is shorter, so this time we follow that path to crawl the disease information.

3. Overall crawling approach: crawl the list of body parts, then the disease names and disease links listed under each part, then the details of each disease, and finally write everything to a spreadsheet and save it.

4. Website Characteristics

(1) The index page for body parts is jbk.99.com.cn/buwei/

(2) Crawl the index page to get all body parts: ['head',... ]

(3) The diseases of a given body part are listed at jbk.99.com.cn/buwei/toubu-n.html, where n is the page number. The URL only needs the part's pinyin name plus the uniform suffix .html. Crawl all diseases on these pages, and also save the link prefix of each disease found in the page source.

(4) The detail page of a disease is https: + the disease link crawled in the previous step + zhengzhuang.html
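The two URL patterns above can be sketched as small helper functions. This is only an illustration: the function names are made up here, the `example` slug is hypothetical, and the protocol-relative form of the disease link (`//host/slug/`) is an assumption inferred from how the crawler concatenates `'https:'`, the link, and `zhengzhuang.html`.

```python
BASE_URL = "https://jbk.99.com.cn/"


def part_page_url(part_pinyin: str, page: int) -> str:
    """Build the listing URL for one page (1-based) of a body part.

    part_pinyin is the pinyin name of the body part, e.g. "toubu".
    """
    return f"{BASE_URL}buwei/{part_pinyin}-{page}.html"


def symptom_page_url(disease_href: str) -> str:
    """Build the symptom page URL from a disease link scraped from a listing.

    Assumes the scraped link is protocol-relative and ends with a slash.
    """
    return "https:" + disease_href + "zhengzhuang.html"


print(part_page_url("toubu", 1))
print(symptom_page_url("//jbk.99.com.cn/example/"))  # hypothetical slug
```

Keeping URL construction in one place makes it easier to adjust if the site changes its path layout.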

II. Crawling Process (Source Code)

1. Crawl the body-part names.

```python
import re
import time
import urllib.request

import xlwt

# Website
buwei_url1 = "https://jbk.99.com.cn/"
buwei_url2 = ".html"

# Fetch the body-part index page: https://jbk.99.com.cn/buwei/
buwei_url = buwei_url1 + 'buwei/'
try:
    buwei_urlop = urllib.request.urlopen(buwei_url, timeout=100)
except Exception:
    print("overtime")
    raise  # nothing can be crawled without the index page
data_buwei = buwei_urlop.read().decode('GBK')  # !!! the page is encoded as GBK

# Get the body-part names and save them in the list ls_buwei
link_buwei = "<div class=\"part-txt\">\\s*<h3>\\s*<span>\\s*(.*?)</span>"
patten = re.compile(link_buwei)
ls_buwei = patten.findall(data_buwei)
```

2. Crawl the disease names and links for each body part, then follow each link to get the specific information of the disease.

```python
# Create the workbook
book = xlwt.Workbook(encoding='utf-8')
heads = ['Name of disease', 'link', 'Location of onset',
         'Consulting Departments', 'Somatotype Characteristics', 'Symptom']

# NOTE: hp() is not shown in this post; it is assumed to convert a body-part
# name to the pinyin used in the URL.
# NOTE: the label text in the detail-page patterns below appears in English
# translation; the literals must match the labels in the actual page source.

# Get the names of diseases for each body part
for i, bw in enumerate(ls_buwei):
    catch = 1  # switch: set to 0 to move on to the next body part
    ii = 0     # page number
    # Diseases of the same body part go into one sheet
    sheet = book.add_sheet(bw, cell_overwrite_ok=True)
    for j, head in enumerate(heads):
        sheet.write(0, j, head)
    while catch == 1:
        ii += 1
        # Listing page URL for this body part
        buwei_url_s = buwei_url + hp(bw) + '-' + str(ii) + buwei_url2
        print(buwei_url_s)
        try:
            # Fetch the page
            buwei_urlop_s = urllib.request.urlopen(buwei_url_s, timeout=100)
        except Exception:
            print('overtime')
            continue
        try:
            data_buwei = buwei_urlop_s.read().decode('GBK')  # page source
        except Exception:
            continue
        # Check whether we have gone past the last page: such a page has an
        # empty listing container.
        link_is_msg = "<div class=\"part-cont3\">\\s*(.*?)<div class=\"digg\">"
        patten_is_msg = re.compile(link_is_msg)
        is_msg = patten_is_msg.findall(data_buwei)
        # If so, switch to the next body part
        if is_msg == ['']:
            catch = 0
            continue
        # Get all disease links and names on this page into the list ls_des
        link_buwei = "<dd>\\s*<h3>\\s*<span>\\s*</span>\\s*<a href=\"(.*?)\"\\s*title=\".+\" target=\"_blank\">(.*?)</a></h3>"
        patten_buwei = re.compile(link_buwei)
        ls_des = patten_buwei.findall(data_buwei)
        # Fetch the specific information of each disease in ls_des
        for iii, x in enumerate(ls_des, start=1):
            t1 = time.perf_counter()  # time.clock() was removed in Python 3.8
            row = (ii - 1) * 10 + iii  # assumes 10 diseases per listing page
            print(row)
            # Write the disease name and link to the sheet
            sheet.write(row, 0, x[1])
            sheet.write(row, 1, x[0])
            # Disease detail link
            jibing_url = 'https:' + x[0] + 'zhengzhuang.html'
            print(jibing_url)
            try:
                jibing_urlop = urllib.request.urlopen(jibing_url, timeout=100)
            except Exception:
                print('overtime')
                continue
            try:
                data_jibing = jibing_urlop.read().decode('GBK')  # page source
            except Exception:
                continue
            # Location of onset
            link_fbbw = "<li><font>Location of onset:(.*?)</a></li>"
            st_abbw = re.compile(link_fbbw).findall(data_jibing)
            if st_abbw == []:
                continue
            hz_fbbw = re.findall('[\u4e00-\u9fa5]+', st_abbw[0])  # Chinese extraction
            sheet.write(row, 2, ','.join(hz_fbbw))
            # Treating departments (guarded so a missing field does not crash)
            link_jzks = "<li><font>Visiting Departments:(.*?)</a></li>"
            st_jzks = re.compile(link_jzks).findall(data_jibing)
            if st_jzks:
                jzks = ','.join(re.findall('[\u4e00-\u9fa5]+', st_jzks[0]))
                sheet.write(row, 3, jzks)
            # Physical signs and symptoms
            link_tztz = "<dt><a>Symptoms and signs:</a></dt>\\s*<dd>\\s*<ul>(.*?)</a></li></ul>"
            st_tztz = re.compile(link_tztz).findall(data_jibing)
            tztz = ','.join(re.findall('[\u4e00-\u9fa5]+', st_tztz[0])) if st_tztz != [] else 0
            sheet.write(row, 4, tztz)
            t2 = time.perf_counter()
            print(t2 - t1)

book.save("jibing.xls")  # Save the workbook
```
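The disease-link pattern used in the crawler can be tried offline before running it against the site. The sketch below applies it to a made-up HTML fragment; the fragment, the `example-a` and `example-b` slugs, and the function name are all hypothetical, and the real page markup may differ. One deliberate change from the crawler's pattern: the `title` attribute is matched lazily (`.+?`) so that a match cannot run across several entries on the same line.

```python
import re

# Hypothetical listing-page fragment, for illustration only.
SAMPLE_HTML = """
<dd><h3><span></span><a href="//jbk.99.com.cn/example-a/" title="示例甲" target="_blank">示例甲</a></h3></dd>
<dd><h3><span></span><a href="//jbk.99.com.cn/example-b/" title="示例乙" target="_blank">示例乙</a></h3></dd>
"""

# Same structure as the crawler's pattern, with a lazy title match.
DISEASE_LINK = re.compile(
    r'<dd>\s*<h3>\s*<span>\s*</span>\s*'
    r'<a href="(.*?)"\s*title=".+?" target="_blank">(.*?)</a></h3>'
)


def extract_diseases(html: str) -> list:
    """Return (name, link) pairs for every disease entry found in html."""
    return [(name, href) for href, name in DISEASE_LINK.findall(html)]


diseases = extract_diseases(SAMPLE_HTML)
for name, href in diseases:
    print(name, href)
```

Regular expressions are brittle against markup changes, so checking the pattern on a saved copy of a real page is a cheap way to catch a silent mismatch.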

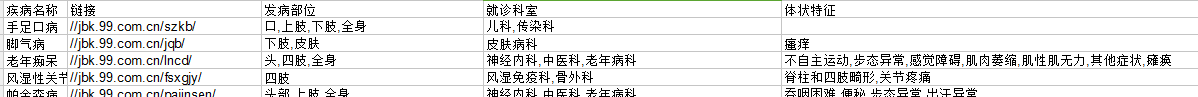

Result presentation

Notes

1. Encoding Conversion

Check the encoding of the original page. If it is UTF-8, the content can be used directly; if not, call decode('original page encoding') on the downloaded bytes to convert them to text (for this site, decode('GBK')).
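One way to check the page encoding programmatically is to look for a `charset=` declaration near the top of the raw bytes and decode with that. This is a minimal sketch, not what the crawler above does (it hard-codes GBK); the function names and the sample page are made up for illustration.

```python
import re


def sniff_charset(raw: bytes, default: str = "utf-8") -> str:
    """Return the charset declared in the first 2 KB of raw, or default."""
    match = re.search(rb'charset=["\']?([A-Za-z0-9_-]+)', raw[:2048])
    return match.group(1).decode("ascii").lower() if match else default


def decode_page(raw: bytes) -> str:
    """Decode raw page bytes using the declared charset.

    Undecodable bytes are replaced rather than raising an error.
    """
    return raw.decode(sniff_charset(raw), errors="replace")


# Made-up page encoded as GBK, standing in for a downloaded response body.
page_text = '<meta charset="gbk"><p>头部</p>'
raw_page = page_text.encode("gbk")
print(sniff_charset(raw_page))
print(decode_page(raw_page))
```

A declared charset can be wrong or missing, so hard-coding the encoding for a single known site, as the crawler does, is also a reasonable choice.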

2. Chinese Extraction

The Unicode range used to match Chinese characters is \u4e00 to \u9fa5, written in a regular expression as [\u4e00-\u9fa5].
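As a small illustration of this range, the sketch below pulls the runs of Chinese characters out of an HTML fragment and joins them with commas, which is the same idea the crawler applies to each field. The fragment and the function name are made up for the example.

```python
import re

# Runs of one or more characters in the range U+4E00 to U+9FA5.
CHINESE_RUN = re.compile(r"[\u4e00-\u9fa5]+")


def extract_chinese(fragment: str) -> str:
    """Join every run of Chinese characters in fragment with commas."""
    return ",".join(CHINESE_RUN.findall(fragment))


# Hypothetical fragment: tags and ASCII text are dropped, Chinese is kept.
sample = '<a href="/x/">头部</a> <a href="/y/">颈部</a>'
print(extract_chinese(sample))
```

Because tags, punctuation, and Latin text fall outside the range, this doubles as a crude way to strip markup from short fields.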