ABSTRACT: Scrapy was used to crawl 70,000+App of pea pod net, and exploratory analysis was carried out.

Write on the front: If you are not interested in the data capture part, you can directly drop down to the data analysis part.

1 Analytical Background

Before, we used Scrapy to crawl and analyze Kuan 6000+ App. Why is this article about app?

Because I like tossing App, haha. Of course, it is mainly because of the following points:

First, the web pages captured before are very simple.

In the process of crawling Kuan'an Web, we use for loop, traverse hundreds of pages to complete the crawling of all the content, which is very simple, but the reality is often not so easy. Sometimes the content we want to grasp is relatively large, such as crawling the data of the entire website. In order to enhance the crawling skills, this paper chooses "pea pod". This website.

The goal is to crawl all the app information classified on the site and download the App icon, which is about 70,000, an order of magnitude higher than that of Kuan'an.

Second, practice using a powerful Scrapy framework again

Previously, Scrapy was only used for grabbing preliminarily, but not fully understood how powerful Scrapy is, so this paper attempts to use Scrapy in depth, adding random User Agent, proxy IP and image download settings.

Third, compare the two websites of Kuan and Peapod

I believe many people are using pea pods to download App, but I use Kuan more, so I also want to compare the similarities and differences between the two websites.

Not much to say, the next step is to start the grabbing process.

_Analysis objectives

First of all, let's see what kind of target page we want to crawl.

You can see that App on the website is divided into many categories, including "Application Play" and "System Tools". There are 14 major categories, each of which is subdivided into several sub-categories, such as "Video" and "Live Broadcast".

Click on "Video" to enter the second level subcategory page, you can see some information of each App, including icon, name, installation number, volume, comments and so on.

Next, we can go to the third level page, that is, the details page of each App. We can see that there are many parameters such as download number, rating rate and comment number. The idea of crawling is similar to that of the second level page. At the same time, in order to reduce the pressure of the website, the details page of App will not be crawled.

Therefore, this is a problem of crawling classified multi-level pages, crawling all subclasses of data under each large class in turn.

Learned to grasp this idea, many websites we can grasp, such as many people like to climb the "Douban Film" is the same structure.

_Analysis Content

After data capture is completed, this paper mainly carries on the simple exploratory analysis to the categorized data, including the following aspects:

-

Total ranking of App s with the most/least Downloads

-

App Classification/Sub-Classification Ranking with the Most/Least Downloads

-

Interval Distribution of App Downloads

-

How many names do App have?

- Contrast with Kuan App

_analysis tools

-

Python

-

Scrapy

-

MongoDB

-

Pyecharts

- Matplotlib

2 Data grabbing

_Website Analysis

We have just made a preliminary analysis of the website. The general idea can be divided into two steps. First, extract the URL links of all subclasses, and then grab the App information under each URL separately.

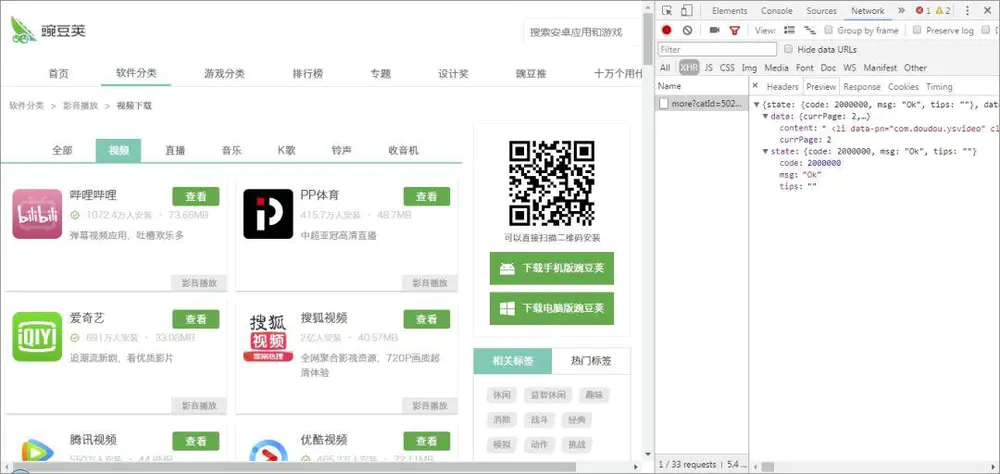

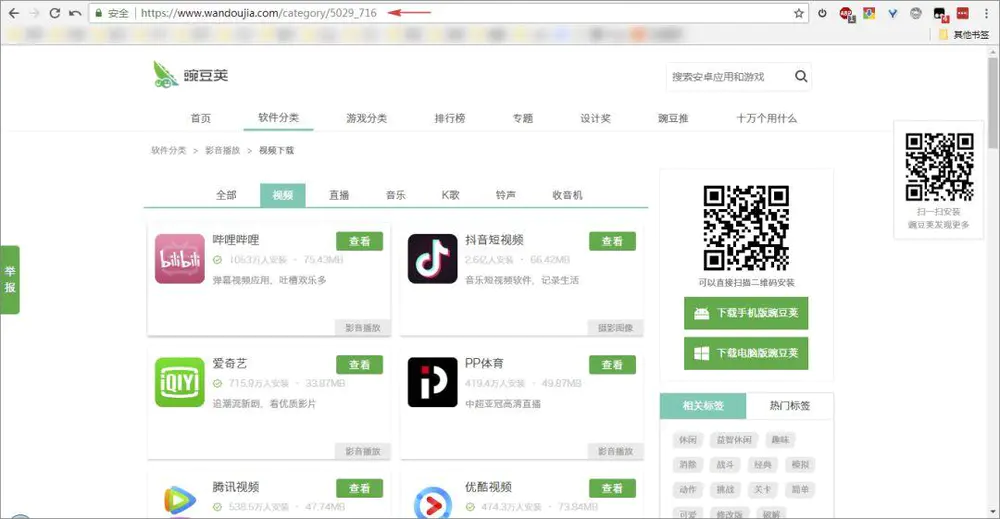

As you can see, the URL s of subclasses are made up of two numbers. The number in front represents the classification number, and the number in the back represents the subclassification number. If we get these two numbers, we can grab all the App information under the classification. How can we get these two numerical codes?

Returning to the classification page and locating the information, we can see that the classification information is wrapped in each li node, and the sub-classification URL is in the href attribute of sub-node a. There are 14 big classifications and 88 sub-classifications.

At this point, the idea is very clear, we can use CSS to extract all sub-categorized URL s, and then grab the required information separately.

In addition, it should be noted that the homepage information of the website is loaded statically, and Ajax dynamic loading is adopted from page 2. Different URL s need to be parsed and extracted separately.

_Scrapy Grab

We need to crawl two parts of content, one is the data information of APP, including the name, installation quantity, volume, comments and so on. The other is to download the icons of each App and store them in folders.

Because the website has some anti-crawling measures, we need to add random UA and proxy IP.

Here random UA uses scrapy-fake-useragent library, one line of code can be done, proxy IP directly on Abu cloud payment agent, a few dollars to make simple and easy.

Next, go straight to the code.

items.py

1 import scrapy 23 class WandoujiaItem (scrapy.Item): 4 cate_name = scrapy.Field()# Category name 5 child_cate_name = scrapy.Field()# Category number 6 app_name = scrapy.Field()# Subcategory name 7 install = scrapy.Field()# Subcategory number 8 = scrapy.Field()# Volume 9 commen T = scrapy. Field ()# Comment 10 icon_url = scrapy. Field ()# icon URL Python resource sharing qun 784758214, including installation packages, PDF, learning videos, here is the gathering place of Python learners, zero foundation, advanced, are welcome.

middles.py

Middleware is mainly used to set up proxy IP.

1import base64 2proxyServer = "http://Http-dyn.abuyun.com:9020 "3proxyUser = your information" 4proxyPass = your information "56 proxyAuth = Basic" +base64.urlsafe_b64 encode (bytes ((proxyUser +): "+proxyPass",""iii"). decode ("utf8") 7class Abyun Proxy (8 def_process, request, self):9.request. Meta ["proxy"] = proxyServer10 request. headers ["Proxy-Authorization"] = proxyAuth11 logging. debug ('Using Proxy:% s'% proxyServer)

pipelines.py

This file is used to store data to MongoDB and download icons to classified folders.

Store in MongoDB:

1MongoDB Storage 2 class MongoPipeline(object): 3 def __init__(self,mongo_url,mongo_db): 4 self.mongo_url = mongo_url 5 self.mongo_db = mongo_db 6 7 @classmethod 8 def from_crawler(cls,crawler): 9 return cls(10 mongo_url = crawler.settings.get('MONGO_URL'),11 mongo_db = crawler.settings.get('MONGO_DB')12 )1314 def open_spider(self,spider):15 self.client = pymongo.MongoClient(self.mongo_url)16 self.db = self.client[self.mongo_db]1718 def process_item(self,item,spider):19 name = item.__class__.__name__20 # self.db[name].insert(dict(item))21 self.db[name].update_one(item, {'$set': item}, upsert=True)22 return item2324 def close_spider(self,spider):25 self.client.close()

Download icons by folder:

1# Subfolder Download 2class Image download Pipeline (Images Pipeline): 3def get_media_requests (self, item, info): 4 if item ['icon_url']: 5 yield scrapy. Request (item ['icon_url'], meta={'item': item}) 67 def file_path (self, request, response = e, info = None):8 = name T. meta ['item'] ['app_name'] 9 cate_name = request. meta ['item'] ['cate_name'] 10 child_cate_name = request. meta ['item'] ['child_cate_name'] 1112 path1 = r'/ wandoujia/% s'% (cate_name, child_cate_name) 13 = path'{} {} {format 1,'jpg') 14 = path 1516. Def item_completed (self, results, item, info): 17 image_path = [x['path'] for ok, X in results if ok] 18 if not image_path: 19 raise DropItem ('Item contains no images') 20 return item

settings.py

1BOT_NAME='wandoujia'2SPIDER_MODULES= ['wandoujia. spiders'] 3NEWSPIDER_MODULE='wandoujia. spiders' 4 5MONGO_URL='localhost'6MONGO_DB='wandoujia' 7 8# Does the Robot Rule 9ROBOTSTXT_OBEY = False10 Set the delay of 0.2 s to 11 DOWNLOAD_DELAY = 0.21213 DOWNLOADER_MIDDLEWARES = {14'scrapy.downloader middlewares.useragent.User Agent Middleware': None, 15'scrapy_fake_useragent.middleware.Random User Agent Middleware': 100, Random UA16'wanjia.middleware.Abuyunware': 200_ Abu Middleware Reason 17) 1819 ITEM_PIPELINES = {20'wandoujia. pipelines. Mongo Pipeline': 300, 21'wandoujia. pipelines. Image download Pipeline': 400, 22} 2324# URL does not duplicate 25DUPEFILTER_CLASS ='scrapy. dupefilters.BaseDupeFilter'

wandou.py

The key parts of the main program are listed here:

1def __init__(self): 2 self.cate_url = 'https://Www.wandoujia.com/category/app'3 Subcategory Home Page URL 4 self.url ='https://www.wandoujia.com/category/'5 Subcategory Ajax Request Page URL 6 self.ajax_url ='https://www.wandoujia.com/wdjweb/api/category/more?'7 Instantiated Classification Label 8.wandou_url = Get_category() 9def start_requests (self): 10 yield scrapy. Request (self. cate_url, callback = self. get_category) 1112 def get_category (self, response): 13 cate_content = self. wandou_category. parse_category (response) 14

Here, we first define several URLs, including: Classification Page, Subclassification Home Page, Subclassification AJAX Page, that is, the URLs at the beginning of page 2. Then we define a class Get_category() which is used to extract all subclassified URLs. We will expand the code of this class later.

The program starts with start_requests, parses the home page to get a response, calls the get_category() method, and then extracts all URL s using the parse_category() method in the Get_category() class. The code is as follows:

1class Get_category(): 2 def parse_category(self, response): 3 category = response.css('.parent-cate') 4 data = [{ 5 'cate_name': item.css('.cate-link::text').extract_first(), 6 'cate_code': self.get_category_code(item), 7 'child_cate_codes': self.get_child_category(item), 8 } for item in category] 9 return data1011 # Get the numeric code 12 for all main classification labels def get_category_code(self, item):13 cate_url = item.css('.cate-link::attr("href")').extract_first()14 pattern = re.compile(r'.*/(\d+)') # Extract the main class label code 15 cate_code = re.search(pattern, cate_url)16 return cate_code.group(1)1718 # Get all subcategory names and codes 19 def get_child_category(self, item):20 child_cate = item.css('.child-cate a')21 child_cate_url = [{22 'child_cate_name': child.css('::text').extract_first(),23 'child_cate_code': self.get_child_category_code(child)24 } for child in child_cate]25 return child_cate_url2627 # Regular Extraction Subclassification Code 28 def get_child_category_code(self, child):29 child_cate_url = child.css('::attr("href")').extract_first()30 pattern = re.compile(r'.*_(\d+)') # Extract label number 31 child_cate_code = re. search (pattern, child_cate_url) 32 return child_cate_code. group (1)

Python Resource sharing qun 784758214 ,Installation packages are included. PDF,Learning videos, here is Python The gathering place of learners, zero foundation and advanced level are all welcomed.Here, in addition to cate_name, which can be easily extracted directly, we use get_category_code() and other three methods to extract the names and codes of the sub-categories of classification coding and sub-categories. The extraction method uses CSS and regular expressions, which is relatively simple.

The final extracted classification names and encoding results are as follows. With these encodings, we can construct a URL request to start extracting App information under each subcategory.

1{'cate_name': 'Video and audio broadcasting', 'cate_code': '5029', 'child_cate_codes': [ 2 {'child_cate_name': 'video', 'child_cate_code': '716'}, 3 {'child_cate_name': 'Live broadcast', 'child_cate_code': '1006'}, 4 ... 5 ]}, 6{'cate_name': 'System tools', 'cate_code': '5018', 'child_cate_codes': [ 7 {'child_cate_name': 'WiFi', 'child_cate_code': '895'}, 8 {'child_cate_name': 'Browser', 'child_cate_code': '599'}, 9 ...10 ]}, 11...

Then the previous get_category() continues to write down to extract App's information:

1def get_category(self,response): 2 cate_content = self.wandou_category.parse_category(response) 3 # ... 4 for item in cate_content: 5 child_cate = item['child_cate_codes'] 6 for cate in child_cate: 7 cate_code = item['cate_code'] 8 cate_name = item['cate_name'] 9 child_cate_code = cate['child_cate_code']10 child_cate_name = cate['child_cate_name']1112 page = 1 # Set crawl start page number 13 if page == 1:14 # Constructing Home Page url15 category_url = '{}{}_{}' .format(self.url, cate_code, child_cate_code)16 else:17 params = {18 'catId': cate_code, # Category 19 'subCatId': child_cate_code, # Subcategory 20'page': page, 21} 22 category_url = self.ajax_url + URLEncode (params) 23 dict = {page': page,'cate_name': cate_name,'cate_code': cate_code,'child_cate_name': child_cate_name,'child_cate_code': child_cate_name,'child_cate_code': child_cate_code} 24 Yield scrapy. Request (category_url, callback = self. parse, meta = dict)

Here, all the classification names and codes are extracted in turn to construct the URL of the request.

Because the form of the URL on the home page is different from that at the beginning of the second page, if statements are used to construct them respectively. Next, request the URL and call the self.parse() method for parsing, where meta parameters are used to pass the relevant parameters.

1def parse(self, response): 2 if len(response.body) >= 100: # To determine whether the page has been crawled, the value is set at 100 because the length is 873 when there is no content. page = response.meta['page'] 4 cate_name = response.meta['cate_name'] 5 cate_code = response.meta['cate_code'] 6 child_cate_name = response.meta['child_cate_name'] 7 child_cate_code = response.meta['child_cate_code'] 8 9 if page == 1:10 contents = response11 else:12 jsonresponse = json.loads(response.body_as_unicode())13 contents = jsonresponse['data']['content']14 # response yes json,json Content html,html Cannot be used directly for text.css To extract, first convert 15 contents = scrapy.Selector(text=contents, type="html")1617 contents = contents.css('.card')18 for content in contents:19 # num += 120 item = WandoujiaItem()21 item['cate_name'] = cate_name22 item['child_cate_name'] = child_cate_name23 item['app_name'] = self.clean_name(content.css('.name::text').extract_first()) 24 item['install'] = content.css('.install-count::text').extract_first()25 item['volume'] = content.css('.meta span:last-child::text').extract_first()26 item['comment'] = content.css('.comment::text').extract_first().strip()27 item['icon_url'] = self.get_icon_url(content.css('.icon-wrap a img'),page)28 yield item2930 # Climb the next page recursively 31 page += 132 params = {33 'catId': cate_code, # Large Category 34 'subCatId': child_cate_code, # Small category 35'page': page, 36} 37 ajax_url = self.ajax_url + URLEncode (params) 38 dict = {page': page,'cate_name': cate_name,'cate_code': cate_code,'child_cate_name': child_cate_name,'child_cate_code': child_cate_name,'child_cate_code': child_cate_code} 39 yield scrapy.R. Equest (ajax_url, callback = self. parse, meta = dict)

Finally, the parse() method is used to parse and extract the final information such as the name of App and the amount of installation we need. After parsing completes one page, the page is incremented, and then the parse() method is repeated to parse until the last page of the complete class is parsed.

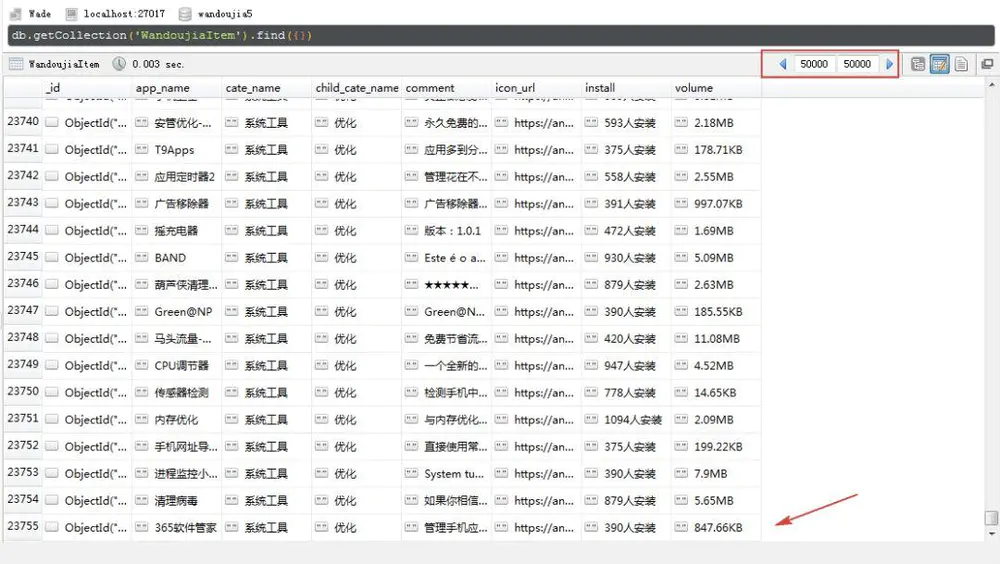

Ultimately, in a few hours, we can grab all the App information. I get 73,755 messages and 72,150 icons. The two values are different because some Apps only have information but no icons.

Icon Download:

The following is a simple exploratory analysis of the extracted information.

3 Data analysis

_Overall situation

First, let's look at the installation of App. After all, more than 70,000 apps are naturally interested in which apps are most used and which are least used.

The code is implemented as follows:

1plt.style.use('ggplot') 2colors = '#6D6D6D' #Font color 3 colorline = '#63AB47' #gules CC2824 #Pea pod green 4 fontsize_title = 20 5fontsize_text = 10 6 7# Total number of downloads ranked 8 def analysis_maxmin(data): 9 data_max = (data[:10]).sort_values(by='install_count')10 data_max['install_count'] = (data_max['install_count'] / 100000000).round(1)11 data_max.plot.barh(x='app_name',y='install_count',color=colorline)12 for y, x in enumerate(list((data_max['install_count']))):13 plt.text(x + 0.1, y - 0.08, '%s' %14 round(x, 1), ha='center', color=colors)1516 plt.title('Top 10 installations App ?',color=colors)17 plt.xlabel('Download volume(Billion times)')18 plt.ylabel('App')19 plt.tight_layout()20 # plt.savefig('App. png', DPI = 200) 21 plt. show ()

Looking at the picture above, there are two "unexpected":

-

No. 1 is a mobile phone management software.

Surprised by the number one on Pea Pod. com, first, curious that everyone loves mobile phone cleaning or is afraid of poisoning? After all, my own mobile phones have been running naked for years; second, the number one is not the goose factory's other products, such as Wechat or QQ.

-

Looking at the list, I thought what would happen didn't appear, but what I didn't expect appeared.

Among the top ten, there are even fewer names that have been heard from books, banner novels, and print people. Even the national App WeChat, Alipay and so on have not even appeared in this list.

With doubts and curiosity, we found the home pages of two App s, Tencent Mobile Housekeeper and Weixin.

Tencent Mobile Housekeeper Download and Installation Volume:

Wechat download and installation volume:

What is this situation???

Tencent housekeeper's 300 million downloads are equivalent to installation, while Weixin's 2 billion downloads are only 10 million installations. The comparison of the two sets of data roughly reflects two problems:

-

Or Tencent housekeepers don't actually download as much.

- Or Wechat has less Downloads

Whatever the problem, it reflects a problem: the website is not careless enough.

To prove this point, by comparing the installation and download volumes of the top ten apps, we find that many apps have the same installation and download volumes. That is to say, the actual installation volumes of these apps are not that large, and if so, the list will be very watery.

Did you get such a result after so long hard climbing?

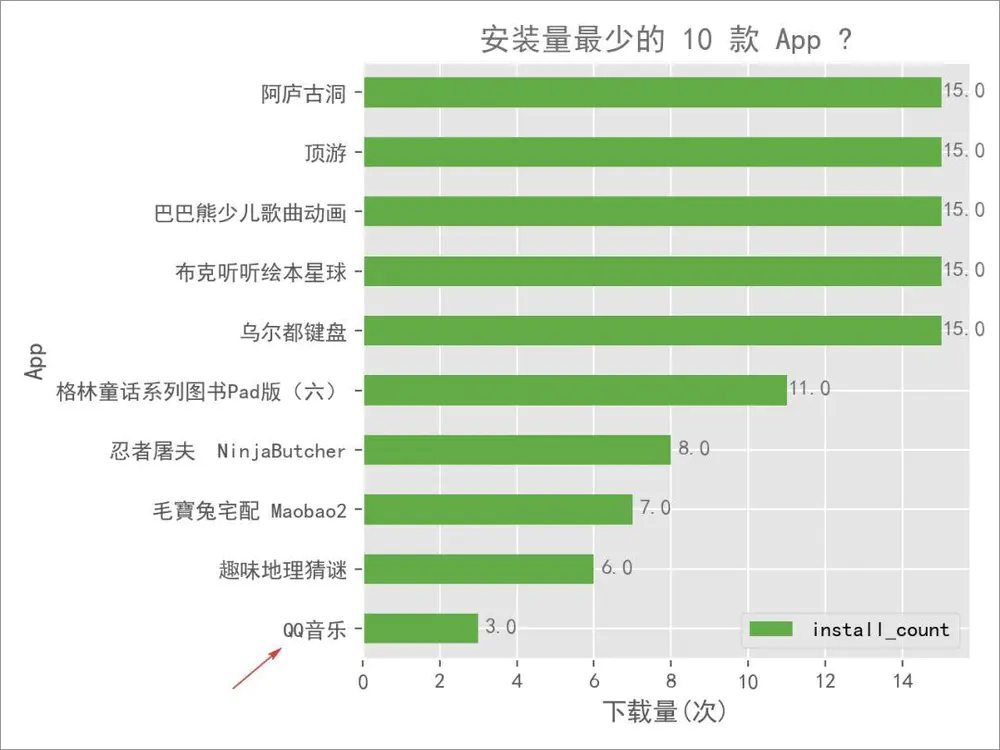

Don't die, and then look at the smallest number of apps installed, here to find out the smallest 10:

Glancing at it, I was even more surprised:

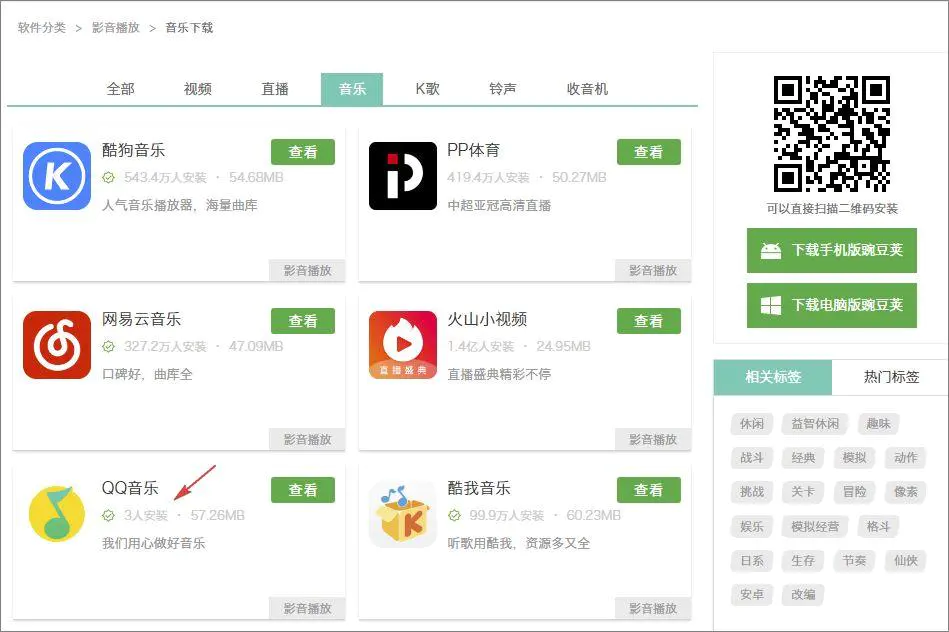

"QQ Music" is the penultimate, only three installations!

Is this the same product as QQ music, which just went on sale and has a market value of 100 billion yuan?

Check again:

No mistake. It says three people to install it!

To what extent has it stopped wandering? This installation quantity, goose factory can also "make music with heart"?

To tell you the truth, I don't want to go on with my analysis here anymore. I'm afraid I'll scratch out more unexpected things. But after all this hard climbing, I'd better look down again.

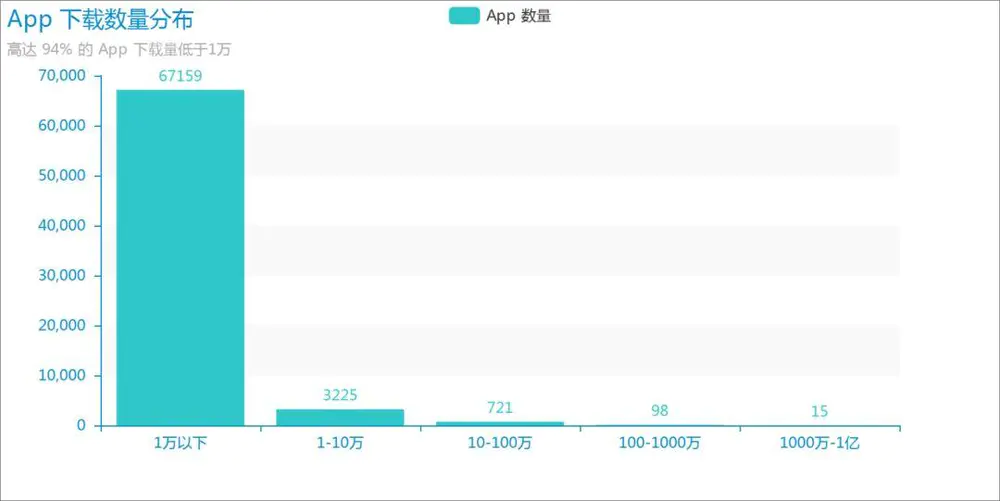

Looking at the beginning and the end, let's take a look at the whole, understand the distribution of the number of installations of all Apps, and remove the top ten Apps with a lot of water.

It's amazing to find that there are 67,195 apps, accounting for 94% of the total, installed less than 10,000!

If all the data on this website is true, then the number one mobile stewardship on this site is almost equal to the installation of more than 60,000 App s!

For most app developers, it can only be said that the reality is very cruel, hard-working development of App, users do not exceed 10,000 people as high as nearly 95%.

The code is implemented as follows:

1def analysis_distribution(data): 2 data = data.loc[10:,:] 3 data['install_count'] = data['install_count'].apply(lambda x:x/10000) 4 bins = [0,1,10,100,1000,10000] 5 group_names = ['1 Less than 10,000','1-10 ten thousand','10-100 ten thousand','100-1000 ten thousand','1000 ten thousand-1 Billion'] 6 cats = pd.cut(data['install_count'],bins,labels=group_names) 7 cats = pd.value_counts(cats) 8 bar = Bar('App Download Quantity Distribution','Up to 94% Of App Download less than 10,000') 9 bar.use_theme('macarons')10 bar.add(11 'App Number',12 list(cats.index),13 list(cats.values),14 is_label_show = True,15 xaxis_interval = 0,16 is_splitline_show = 0,17 )18 bar.render(path='App Download Quantity Distribution.png',pixel_ration=1)

_Classification

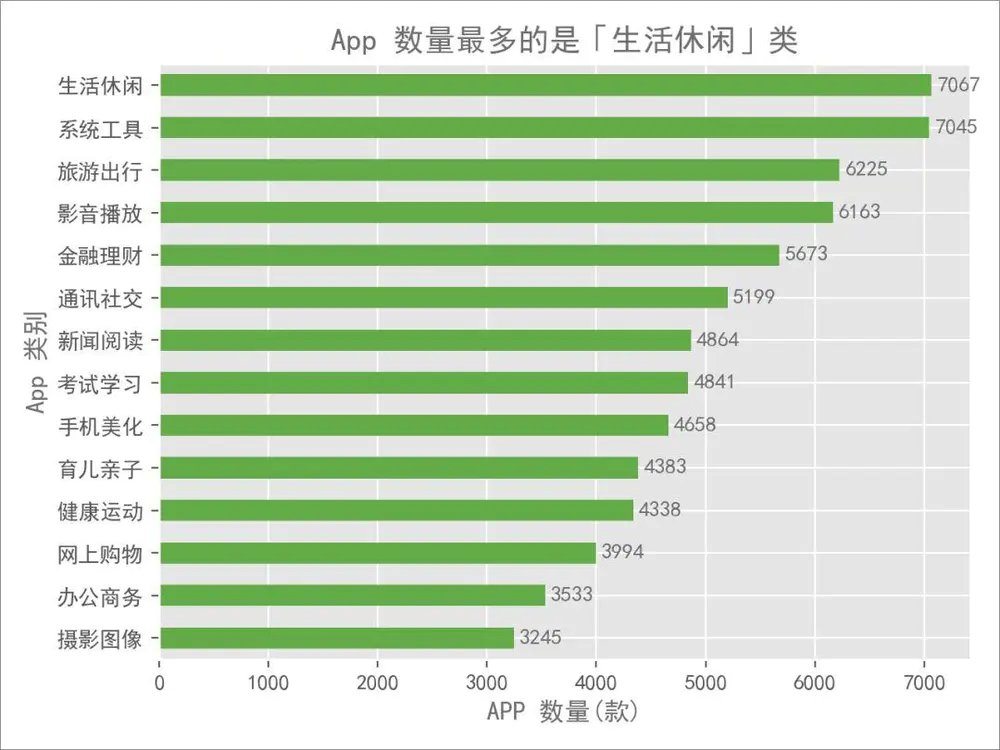

Next, let's take a look at App under each category. Instead of looking at the number of installations, let's look at the number to eliminate the interference.

It can be seen that the number of App s in each of the 14 major categories is not much different, and the largest number of "life and leisure" is more than twice the number of "photographic images".

Next, we further look at the number of App s in 88 sub-classifications and select the 10 sub-classifications with the largest number and the smallest number.

Two interesting phenomena can be found:

-

The "Radio" category has the largest number of App s, reaching more than 1,300.

This is very surprising. At present, the radio is totally an antique, but there are still people to develop it.

-

App subclasses are quite different in number

The most "radio" is the least "dynamic wallpaper" nearly 20 times. If I am an App developer, I would like to try to develop a small group of Apps, less competitive, such as "memorizing words" and "children's encyclopedia".

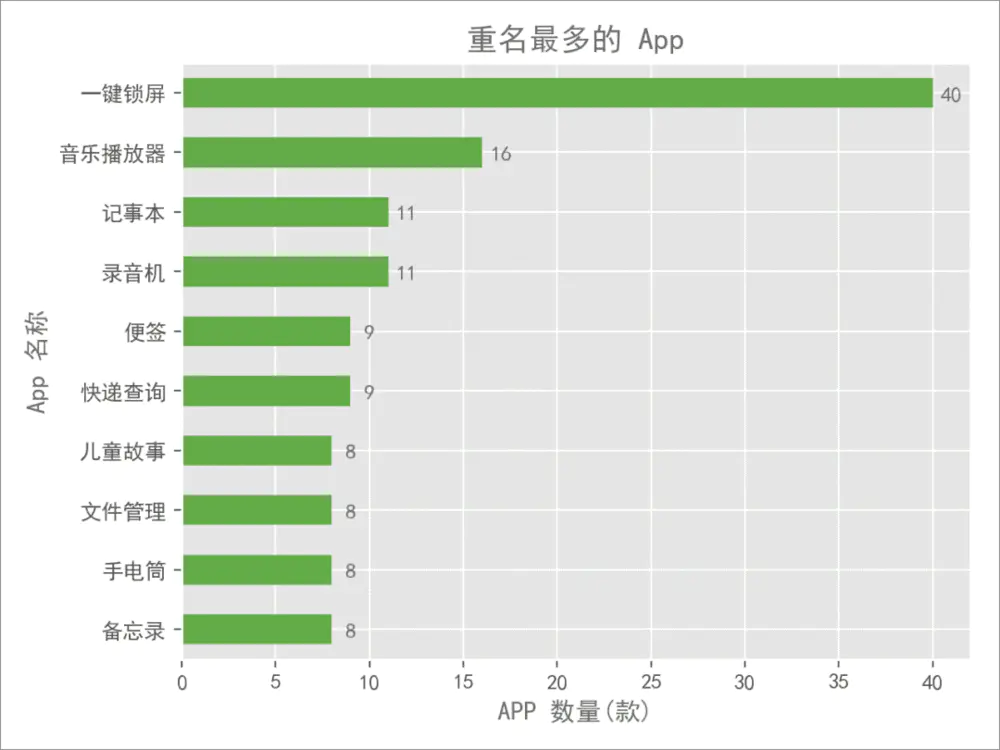

After looking at the general situation and classification, I suddenly thought of a question: so many App s, do you have a rename?

Amazingly, there are as many as 40 apps called "One-click Lock Screen". Is it hard for App to come up with another name for this feature? Nowadays, many mobile phones support touch lock screen, which is more convenient to operate than one-button lock screen.

Next, let's briefly compare App between Peapod and Kuan.

_Contrast Kuan'an

One of the most intuitive differences between the two is that pea pods have absolute advantages in the number of App s, reaching 10 times as many as Kuan'an, so we are naturally interested in:

Does the pea pod include all App s on Kuan'an?

If it is, "I have what you have, and I have what you don't have", then Kuan has no advantage. After statistics, it was found that pea pods included only 3,018 items, or about half of them, while the remaining half did not.

Although there is a discrepancy between App names on the two platforms, there is more reason to believe that many of the niche products of App in Kuan are unique, not in the pea pod.

The code is implemented as follows:

1 include = data3. shape [0] 2notinclude = data2. shape [0] - data3. shape [0] 3sizes = [include, notinclude] 4labels = [u'contains', U' does not contain'] 5explode = [0, 0.05] 6plt. pie (7 sizes, 8 autopct ='%%. 1f%', 9 = labels, 10 colors = [line,'#7FC161',\ pea pod green 11 = 12 shadow, False Startangle = 90,13 explode = explode, 14 textprops = {fontsize': 14,'color': colors} 15) 16plt. Title ('pea pod only contains half of the App number of Kuan', color = colorline, fontsize = 16) 17plt. axis ('equal') 18plt. axis ('off') 19plt. tight_layout () 20T. savefig ('contains no contrast. png',dpi=200)21plt.show()

Python resource sharing qun 784758214, including installation packages, PDF, learning videos, here is the gathering place of Python learners, zero foundation, advanced, are welcome.Next, let's look at the download volume of the included App s on both platforms.

As you can see, the difference between the number of App downloads on the two platforms is still obvious.

Finally, let me look at the apples that are not included in the pea pods.

It was found that many artifacts were not included, such as RE, Green Guardian, a wooden letter and so on. The comparison between pea pods and Kuan'an is here. If I sum it up in one sentence, I may say:

Pea pods are too powerful. App s are ten times as many as Kuan's, so I chose Kuan.