Yesterday I spent an afternoon writing a small crawler to analyze my own Zhihu fan data. It was fun! Today I helped a lot of the big V accounts in the group crawl their data too. Running speed: more than 5,000 fans per minute. I have to prepare for a make-up exam over the next two days, so for now I have no time to keep playing with it.

Planned improvements: 1. Multithreading 2. Scrapy 3. Deeper data 4. Distributed crawling
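
As a rough idea of what the multithreading item could look like, here is a minimal sketch using a thread pool from the standard library. It is not part of the tested script: `crawl_pages` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for a real page request so the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor


def crawl_pages(fetch_page, pages, max_workers=8):
    """Fetch pages 1..pages concurrently and return all rows in page order.

    fetch_page is a hypothetical callable: page number -> list of rows.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order, even if pages finish out of order
        per_page = pool.map(fetch_page, range(1, pages + 1))
        return [row for rows in per_page for row in rows]


def fake_fetch(page):
    # Stand-in for a real network request: two fake rows per page
    return [(page, 0), (page, 1)]


rows = crawl_pages(fake_fetch, pages=3)
print(rows)
```

In a real crawler the callable would wrap the page download and parsing. Whether one `requests` session can safely be shared across threads is something to verify before relying on it; one session per worker is the cautious choice.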

Features I hope to add:

- 1. Region, education level, registration time, fake-fan detection and color detection

- 2. Export a polished H5 page and clean xlsx data

- 3. Content suggestions

- 4. Automated integration with the WeChat backend

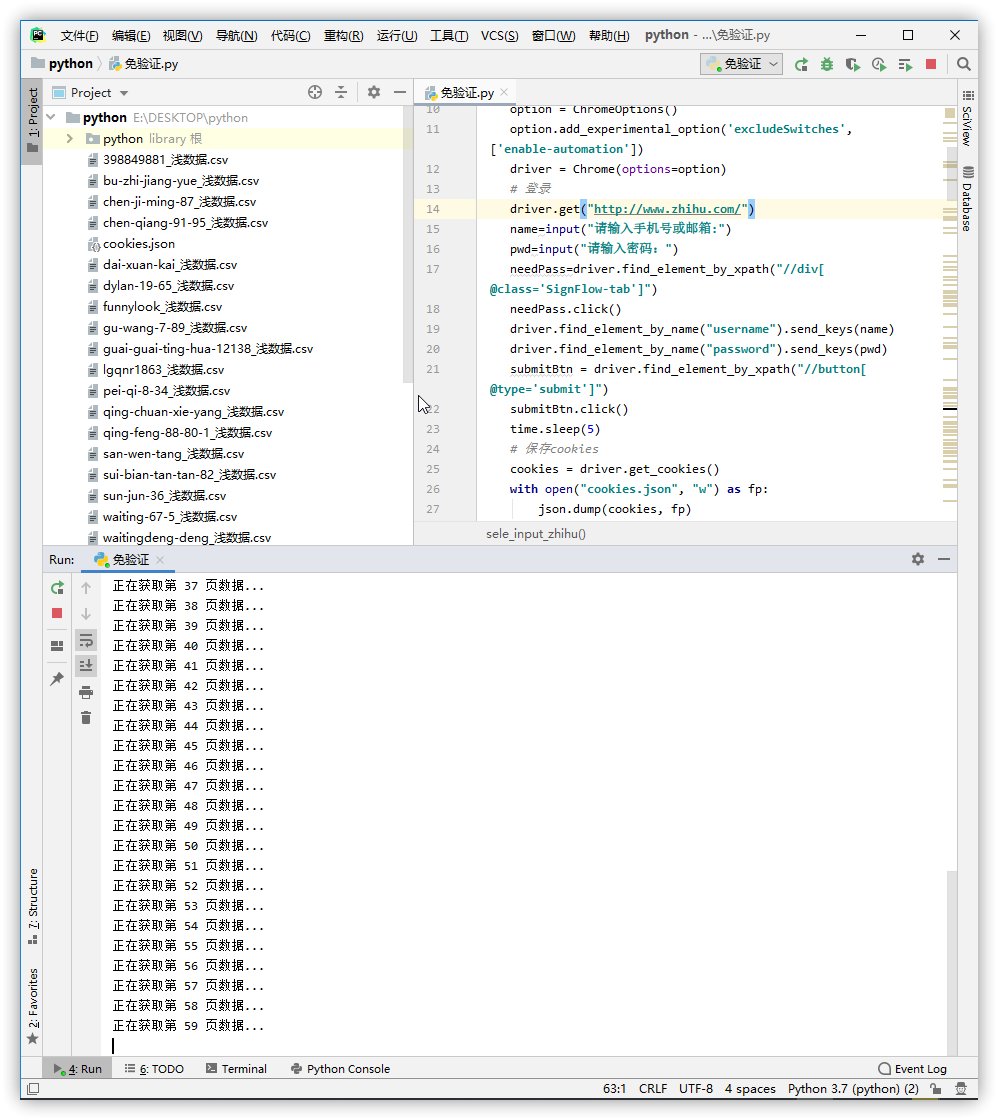

The following is the source code, which was tested on August 21, 2019.

from selenium.webdriver import Chrome, ChromeOptions
from selenium.webdriver.common.by import By
from requests.cookies import RequestsCookieJar
from lxml import etree
from pandas import DataFrame
import json, time, requests, re, os, clipboard

def sele_input_zhihu():
    """First login: sign in with account and password through Selenium, then save the cookies."""
    # Drop the "enable-automation" switch to reduce the chance of bot detection
    option = ChromeOptions()
    option.add_experimental_option('excludeSwitches', ['enable-automation'])
    driver = Chrome(options=option)
    # Sign in
    driver.get("http://www.zhihu.com/")
    name = input("Please enter your phone number or email: ")
    pwd = input("Please enter your password: ")
    # Click the sign-in tab before filling in the form
    need_pass = driver.find_element(By.XPATH, "//div[@class='SignFlow-tab']")
    need_pass.click()
    # find_element(By..., ...) replaces the find_element_by_* helpers removed in Selenium 4.3
    driver.find_element(By.NAME, "username").send_keys(name)
    driver.find_element(By.NAME, "password").send_keys(pwd)
    submit_btn = driver.find_element(By.XPATH, "//button[@type='submit']")
    submit_btn.click()
    time.sleep(5)  # Give the login request time to finish
    # Save cookies
    cookies = driver.get_cookies()
    with open("cookies.json", "w") as fp:
        json.dump(cookies, fp)
    print("Cookies saved successfully!")
    driver.close()

def login_zhihu(s):
    """Log in to Zhihu by loading the saved cookies into the session `s`."""
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.100 Safari/537.36'
    }
    s.headers.update(headers)
    cookies_jar = RequestsCookieJar()
    with open("cookies.json", "r") as fp:
        cookies = json.load(fp)
    # Copy every saved browser cookie into the requests session
    for cookie in cookies:
        cookies_jar.set(cookie['name'], cookie['value'])
    s.cookies.update(cookies_jar)
    print("Logged in successfully!")

def parse_infos(session, url, user_id):
    """Shallow data processing: extract the fan profiles embedded in one followers page.

    The page source embeds a JSON object of the form "users":{...},"questions":{}.
    """
    content = session.get(url).text
    match = re.search(r'"users":(.*\}\}),"questions":\{\}', content)
    if match is None:
        # No embedded user JSON found on this page, so there is nothing to parse
        return []
    json_dict = json.loads(match.group(1))
    items = []
    for key, item in json_dict.items():
        # Skip incomplete entries and the profile owner's own entry
        if "name" in item and key != user_id:
            custom = "no" if item["useDefaultAvatar"] else "yes"
            # "Institution number" marks organization accounts
            thetype = "Institution number" if item["isOrg"] else "Ordinary users"
            gender = "female" if item["gender"] == 0 else ("male" if item["gender"] == 1 else "Unknown")
            vip = "yes" if item["vipInfo"]["isVip"] else "no"
            items.append([item["urlToken"], item["name"], custom, item["avatarUrl"], item["url"], thetype, item["headline"], gender, vip, item["followerCount"], item["answerCount"], item["articlesCount"]])
    return items

def main():

# Sign in

if not os.path.exists("cookies.json"):

print("Unlogged account, please login!")

sele_input_zhihu()

session=requests.session()

login_zhihu(session)

# Required data

zhuye_url="https://www.zhihu.com/people/you-yi-shi-de-hu-xi/activities"# This place is used to enter home page links

zhuye=re.match(r"(.*)/activities$",zhuye_url).group(1)

id = re.match(r".*/(.*)$",zhuye).group(1)

followers_url=zhuye+r"/followers?page={}"

# Analysis of the number and page number of fans

html = etree.HTML(session.get(zhuye_url).text)

text=html.xpath("//div[@class='NumberBoard FollowshipCard-counts NumberBoard--divider']//strong/text()")[1]

follows = int("".join(text.split(",")))

pages = follows//20+1

print("Focusers "+str(follows)+"Man, altogether "+str(pages)+"Page data!")

# Getting Exported Shallow Data

all_info = []

for i in range(1,pages+1):

infos_url = followers_url.format(i)

print("Getting the "+str(i)+" Page data...")

array = parse_infos(session,infos_url,id)

all_info+=array

many=len(all_info)

print("Data acquisition completed, total"+ str(many)+" Bar data!")

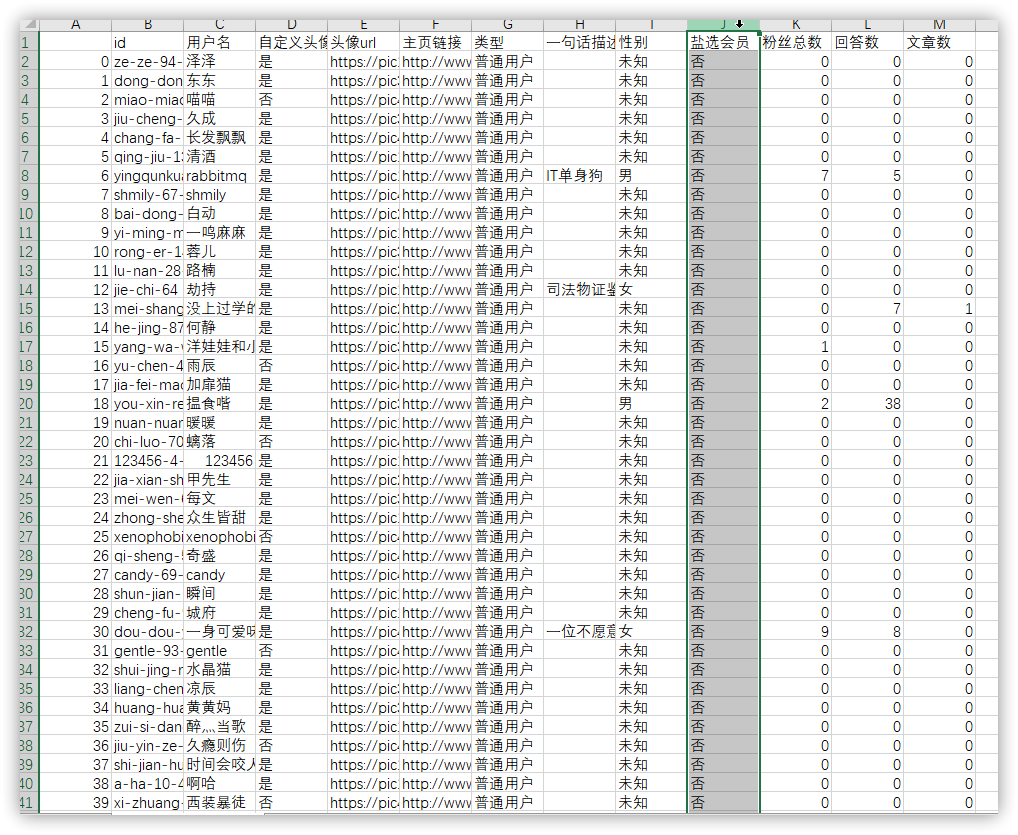

data = DataFrame(data=all_info,columns=["id", "User name", "Custom Avatar", "Head portrait url", "Home page links", "type", "One sentence description", "Gender", "Salt Selected Members", "Number of fans", "Number of answers", "Number of articles"])

data.to_csv(id+"_Shallow data.csv",encoding="utf-8-sig")

print("Data has been exported to"+ id+"_Shallow data.csv!")

# Generating fan data reports

wood=org=female=money=male=f2k=f5k=f10k=gfemale=gmale=0

for info in all_info:

if info[2]=="no" and info[9]==0 and info[10]<=2 and info[11]<=2: wood+=1

if info[5]=="Institution number": org+=1

if info[7]=="female":

female+=1

if info[9]>=20: gfemale+=1

else:

if info[9]>=50: gmale+=1

if info[7]=="male": male+=1

if info[8]=="yes": money+=1

if info[9]>=10000: f10k+=1

elif info[9]>=5000: f5k+=1

elif info[9]>=2000: f2k+=1

report="*"*40+"\n Shallow Fan Data Snapshot: In All You Have "+str(many)+" Among the fans:\n"+"Shared zombie powder "+str(wood)+" Percentage "+"{:.4%}".format(wood/many)+" ,That's quite enough."+(" low " if (wood/many)<0.2 else " high ")+"Proportion.\n"+"In addition, the ratio of male to female fans is 1. : "+"{:.3}".format(female/male)+" ,It seems that you are well received."+(" female " if (female>=male) else " male ")+"Sex compatriots love!\n"+"Pretty girl"+str(gfemale)+"people,handsome young man"+str(gmale)+"Man\n"+"Among all your fans, krypton learners have "+str(money)+" individual,Proportion "+"{:.3%}".format(money/many)+",It seems that most of your fans are"+("high"if(money/many>0.045) else" low ")+"Income users!\n"+" ◉ Fan 10 K+Yes "+str(f10k)+" People;\n"+" ◉ Fan 5 K-10K Yes "+str(f5k)+" People;\n"+" ◉ Fan 2 K-5K Yes "+str(f2k)+" People;\n"

if org>=1:

report+="Besides, there are other fans among you. "+str(org)+" Location number! Detailed report quickly shallow data.csv Look inside!\n"

clipboard.copy(report)

print(report)

if __name__=="__main__":

main()Operating screenshots:
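
Two small steps of the script are easy to try in isolation: pulling the embedded user JSON out of a page's source, and turning a fan count into a page count. The sketch below is illustrative only. `extract_users`, `page_count` and the `sample` string are hypothetical; the regular expression and the page size of 20 are taken from the script above, while the sample page fragment is made up to match the shape that expression expects.

```python
import json
import re

# Same pattern as the script, with the braces escaped for readability
USERS_RE = re.compile(r'"users":(.*\}\}),"questions":\{\}')


def extract_users(page_source):
    """Return the embedded "users" dict, or {} when the pattern is absent."""
    match = USERS_RE.search(page_source)
    return json.loads(match.group(1)) if match else {}


def page_count(followers, per_page=20):
    """Number of pages needed for `followers` entries (ceiling division)."""
    return (followers + per_page - 1) // per_page


# Made-up page fragment, not real Zhihu output
sample = '{"entities":{"users":{"alice":{"name":"Alice","gender":0}},"questions":{}}}'
users = extract_users(sample)
print(users["alice"]["name"])  # Alice
print(page_count(9934))        # 497
print(page_count(40))          # 2
```

Ceiling division avoids requesting one extra, empty page when the fan count is an exact multiple of the page size.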

This is my fan report for today:

Shallow fan data snapshot: among all your 9934 fans:

There are 2091 zombie fans, accounting for 21.0489%, which is quite a high proportion.

In addition, the male-to-female ratio of your fans is 1 : 0.352; it seems you are well loved by your male compatriots!

There are 123 pretty girls and 354 handsome guys.

Among all your fans, 477 are paying members, accounting for 4.802%; it seems most of your fans are high-income users!

◉ Fans with 10K+ followers: 11;

◉ Fans with 5K-10K followers: 7;

◉ Fans with 2K-5K followers: 17;

Besides, there is an organization account among your fans! See the shallow data CSV for the detailed report!
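
The counting rules behind a report like this can be sketched as a small pure function that is easy to test without crawling anything. This is an assumption-laden illustration, not the script's own code: `summarize` and the dict keys (`custom_avatar`, `followers`, `answers`, `articles`) are hypothetical names, while the thresholds (zombie fan: default avatar, 0 followers, at most 2 answers and 2 articles; buckets at 2K, 5K and 10K followers) follow the source code above.

```python
def summarize(fans):
    """Count zombie fans and follower buckets for a list of fan dicts."""
    total = len(fans)
    zombies = sum(
        1 for f in fans
        if not f["custom_avatar"] and f["followers"] == 0
        and f["answers"] <= 2 and f["articles"] <= 2
    )
    buckets = {"10K+": 0, "5K-10K": 0, "2K-5K": 0}
    for f in fans:
        if f["followers"] >= 10000:
            buckets["10K+"] += 1
        elif f["followers"] >= 5000:
            buckets["5K-10K"] += 1
        elif f["followers"] >= 2000:
            buckets["2K-5K"] += 1
    # Guard against an empty list so the share never divides by zero
    share = zombies / total if total else 0.0
    return {"total": total, "zombies": zombies, "zombie_share": share, "buckets": buckets}


# Made-up sample data for illustration
fans = [
    {"custom_avatar": False, "followers": 0, "answers": 0, "articles": 0},
    {"custom_avatar": True, "followers": 12000, "answers": 30, "articles": 5},
    {"custom_avatar": True, "followers": 2500, "answers": 3, "articles": 0},
    {"custom_avatar": False, "followers": 7, "answers": 0, "articles": 0},
]
summary = summarize(fans)
print("{:.2%}".format(summary["zombie_share"]))  # 25.00%
print(summary["buckets"])
```

Keeping the rules in one function like this makes the thresholds easy to adjust and check against a handful of hand-written cases.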