1. zlib GNUzlib compression

Zlib module provides the underlying interface for many functions in the zlib compression library of GNU Project.

1.1 processing data in memory

The simplest way to use zlib requires that all data to be compressed or decompressed be stored in memory.

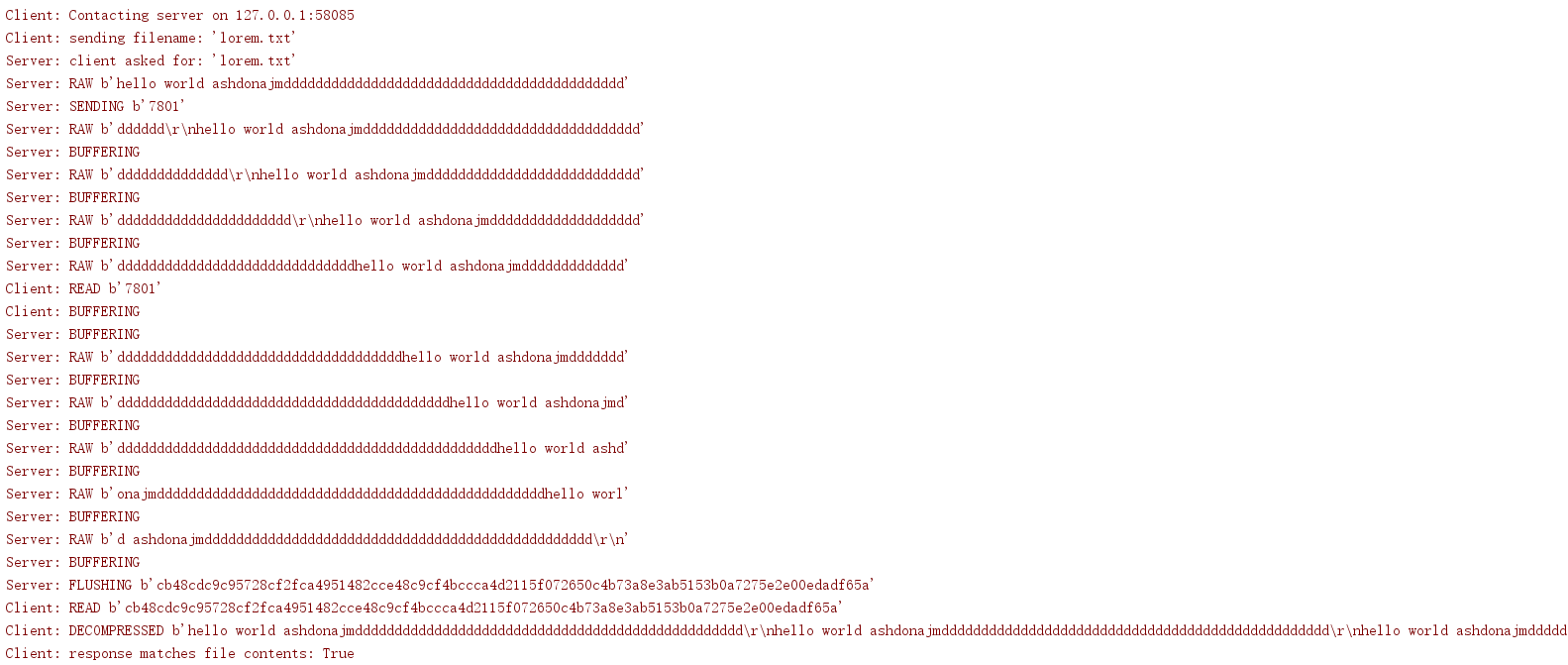

import zlib import binascii original_data = b'This is the original text.' print('Original :', len(original_data), original_data) compressed = zlib.compress(original_data) print('Compressed :', len(compressed), binascii.hexlify(compressed)) decompressed = zlib.decompress(compressed) print('Decompressed :', len(decompressed), decompressed)

The compress() and decompress() functions take a byte sequence parameter and return a byte sequence.

As you can see from the previous example, the compressed version of a small amount of data may be larger than the uncompressed version. The results depend on the input data, but it's interesting to observe the compression overhead of small datasets.

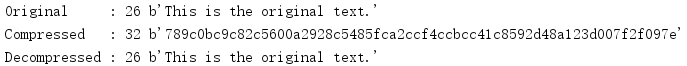

import zlib original_data = b'This is the original text.' template = '{:>15} {:>15}' print(template.format('len(data)', 'len(compressed)')) print(template.format('-' * 15, '-' * 15)) for i in range(5): data = original_data * i compressed = zlib.compress(data) highlight = '*' if len(data) < len(compressed) else '' print(template.format(len(data), len(compressed)), highlight)

The * in the output highlights which rows of compressed data take up more memory than the uncompressed version.

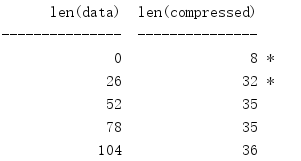

Zlib supports different levels of compression, allowing for a balance between computing costs and space reduction. The default compression level, zlib.Z DEFAULT COMPRESSION, is -1, which corresponds to a hard coded value representing a trade-off between performance and compression results. Currently this corresponds to level 6.

import zlib input_data = b'Some repeated text.\n' * 1024 template = '{:>5} {:>5}' print(template.format('Level', 'Size')) print(template.format('-----', '----')) for i in range(0, 10): data = zlib.compress(input_data, i) print(template.format(i, len(data)))

A compression level of 0 means no compression at all. Level 9 requires the most computation and produces the smallest output. As the following example, for a given input, the amount of space reduction that can be obtained by multiple compression levels is the same.

1.2 incremental compression and decompression

This compression method in memory has some disadvantages. The main reason is that the system needs enough memory, which can hold both uncompressed and compressed versions in memory. Therefore, this method is not practical for real-world use cases. Another way is to use the Compress and Decompress objects to incrementally process the data, so you don't need to put the entire dataset in memory.

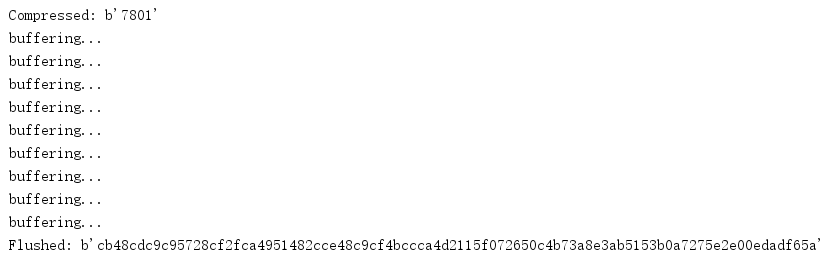

import zlibimport binascii compressor = zlib.compressobj(1) with open('lorem.txt','rb') as input: while True: block = input.read(64) if not block: break compressed = compressor.compress(block) if compressed: print('Compressed: {}'.format( binascii.hexlify(compressed))) else: print('buffering...') remaining = compressor.flush() print('Flushed: {}'.format(binascii.hexlify(remaining)))

This example reads a small block of data from a plain text file and passes the data set to compress(). The compressor maintains a memory buffer for compressed data. Because compression algorithms rely on checksums and the smallest block size, the compressor may not be ready to return data each time it receives more input. If it is not ready for a full compressed block, an empty byte string is returned. When all

1.3 mixed content flow

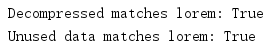

In the case of mixed compressed and uncompressed data, you can also use the Decompress class returned by decompressobj().

import zlib lorem = open('lorem.txt','rb').read() compressed = zlib.compress(lorem) combined = compressed +lorem decompressor = zlib.decompressobj() decompressed = decompressor.decompress(combined) decompressed_matches = decompressed == lorem print('Decompressed matches lorem:',decompressed_matches) unused_matches = decompressor.unused_data == lorem print('Unused data matches lorem:',unused_matches)

After decompressing all the data, the unused data property contains all the unused data.

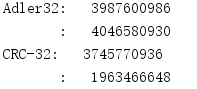

1.4 checksums

In addition to the compression and decompression functions, zlib also includes two functions for calculating the checksum of data, adler32() and crc32(). The checksums calculated by these two functions cannot be considered as password safe, they are only used for data integrity verification.

import zlib data = open('lorem.txt','rb').read() cksum = zlib.adler32(data) print('Adler32: {:12d}'.format(cksum)) print(' : {:12d}'.format(zlib.adler32(data,cksum))) cksum = zlib.crc32(data) print('CRC-32: {:12d}'.format(cksum)) print(' : {:12d}'.format(zlib.crc32(data,cksum)))

These two functions take the same parameters, including a byte string containing data and an optional value, which can be used as the starting point of the checksum. These functions return a 32-bit signed integer value that can be passed back as a new starting parameter to subsequent calls to generate a dynamically changing checksum.

1.5 compress network data

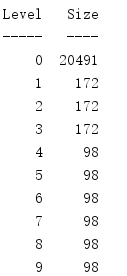

The server in the next code listing uses a stream compressor in response to a filename request, which writes a compressed version of the file to a socket that communicates with the client.

import zlib import logging import socketserver import binascii BLOCK_SIZE = 64 class ZlibRequestHandler(socketserver.BaseRequestHandler): logger = logging.getLogger('Server') def handle(self): compressor = zlib.compressobj(1) # Find out what file the client wants filename = self.request.recv(1024).decode('utf-8') self.logger.debug('client asked for: %r', filename) # Send chunks of the file as they are compressed with open(filename, 'rb') as input: while True: block = input.read(BLOCK_SIZE) if not block: break self.logger.debug('RAW %r', block) compressed = compressor.compress(block) if compressed: self.logger.debug( 'SENDING %r', binascii.hexlify(compressed)) self.request.send(compressed) else: self.logger.debug('BUFFERING') # Send any data being buffered by the compressor remaining = compressor.flush() while remaining: to_send = remaining[:BLOCK_SIZE] remaining = remaining[BLOCK_SIZE:] self.logger.debug('FLUSHING %r', binascii.hexlify(to_send)) self.request.send(to_send) return if __name__ == '__main__': import socket import threading from io import BytesIO logging.basicConfig( level=logging.DEBUG, format='%(name)s: %(message)s', ) logger = logging.getLogger('Client') # Set up a server, running in a separate thread address = ('localhost', 0) # let the kernel assign a port server = socketserver.TCPServer(address, ZlibRequestHandler) ip, port = server.server_address # what port was assigned? t = threading.Thread(target=server.serve_forever) t.setDaemon(True) t.start() # Connect to the server as a client logger.info('Contacting server on %s:%s', ip, port) s = socket.socket(socket.AF_INET, socket.SOCK_STREAM) s.connect((ip, port)) # Ask for a file requested_file = 'lorem.txt' logger.debug('sending filename: %r', requested_file) len_sent = s.send(requested_file.encode('utf-8')) # Receive a response buffer = BytesIO() decompressor = zlib.decompressobj() while True: response = s.recv(BLOCK_SIZE) if not response: break logger.debug('READ %r', binascii.hexlify(response)) # Include any unconsumed data when # feeding the decompressor. to_decompress = decompressor.unconsumed_tail + response while to_decompress: decompressed = decompressor.decompress(to_decompress) if decompressed: logger.debug('DECOMPRESSED %r', decompressed) buffer.write(decompressed) # Look for unconsumed data due to buffer overflow to_decompress = decompressor.unconsumed_tail else: logger.debug('BUFFERING') to_decompress = None # deal with data reamining inside the decompressor buffer remainder = decompressor.flush() if remainder: logger.debug('FLUSHED %r', remainder) buffer.write(remainder) full_response = buffer.getvalue() lorem = open('lorem.txt', 'rb').read() logger.debug('response matches file contents: %s', full_response == lorem) # Clean up s.close() server.socket.close()

We artificially partition the code list to show the buffering behavior. If the data is passed to compress() or decompress(), but the complete compressed or uncompressed output block is not obtained, the buffering will be performed.

The client connects to the socket and requests a file. Then loop and receive the compressed data block. Since a block may not contain enough information to fully decompress, the remaining data previously received will be combined with the new data and passed to the decompressor. When the data is decompressed, it is appended to a buffer and compared with the contents of the file at the end of the processing cycle.