Yesterday I wrote a small crawler. When I used axios.all to request several pages at the same time, requests often timed out and were rejected because of the domestic network. Just shrugging off the failures and carrying on is not a good option, so I decided to implement a "resend failed requests" feature.

Promise.all(requestPromises).then(...).catch(...) enters the then method once every promise in requestPromises has resolved, and passes all the results as an array. If any one of them rejects, it enters the catch instead. However, if a single request defines its own catch, its rejection is handled there and the catch of Promise.all is not entered. So each request can record its failure in a list inside its own catch, and the failed requests can be sent again after the round of requests finishes.
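
To make that behavior concrete, here is a minimal sketch. The names fakeRequest, guarded and failedIds are made up for this illustration and are not part of the crawler; the point is only that a request with its own catch always fulfills, so Promise.all sees undefined in the failed slot.

```javascript
// Hypothetical helper: settles after `ms` milliseconds and rejects when `ok` is false.
function fakeRequest (id, ok, ms = 10) {
  return new Promise((resolve, reject) => {
    setTimeout(() => (ok ? resolve({ id, msg: 'ok' }) : reject({ id, msg: 'error' })), ms)
  })
}

const failedIds = []

// The request handles its own rejection, so the promise handed to Promise.all
// always fulfills (with undefined when the request failed).
function guarded (id, ok) {
  return fakeRequest(id, ok).catch(() => { failedIds.push(id) })
}

const demo = Promise.all([guarded(1, true), guarded(2, false), guarded(3, true)])
  .then(results => {
    console.log(results)   // [ { id: 1, msg: 'ok' }, undefined, { id: 3, msg: 'ok' } ]
    console.log(failedIds) // [ 2 ]
    return results
  })
```

Without the catch inside guarded, the rejection of request 2 would reject the whole Promise.all and the two successful results would be lost.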

let failedList = []

function getDataById (id) { // This is a single request

return new Promise(function (resolve, reject) {

getResponse(id, resolve, reject)

}).catch(e => {

failedList.push(getDataById(id)) // On failure, reissue the request and put its promise in failedList for later processing (arguments.callee is deprecated and throws in strict mode, so the function is called by name)

})

}

function getResponse (id, resolve, reject) { // Simulates a response: succeeds about 20% of the time

setTimeout(() => {

if (Math.random() > 0.8) resolve({id, msg: 'ok'})

else reject({id, msg: 'error'})

}, 1000)

}

const RequestList = [getDataById(1), getDataById(2), getDataById(3)]

fetchData(RequestList)

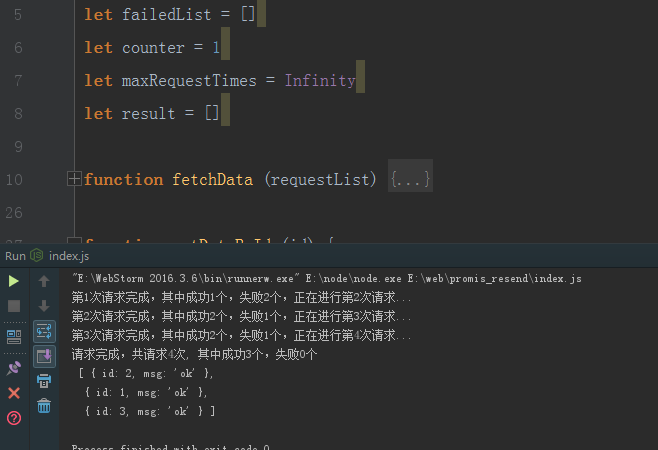

let counter = 1 // Current round of requests

let maxRequestTimes = 5 // Maximum number of rounds; some pages may simply be unreachable, and retrying them any more would be pointless

let result = [] // The final result

function fetchData (requestList) { // Processes the results of one round of requests

Promise.all(requestList).then(responses => {

result = result.concat(responses.filter(i => i)) // filter keeps the items for which the callback returns a truthy value. The catch in getDataById returns nothing (it is not needed here), so failed requests show up as undefined in responses and have to be filtered out

let failedLength = failedList.length

if (failedLength > 0 && counter < maxRequestTimes) { // If the failure list is not empty and the maximum number of rounds has not been reached, log the progress and start the next round

console.log(`Round ${counter} finished: ${RequestList.length - failedLength} succeeded, ${failedLength} failed. Starting round ${++counter}...`)

fetchData(failedList)

failedList = [] // failedList has to be cleared here, otherwise the same requests would be retried over and over. Requests that fail in the next round are pushed into the new failedList by getDataById.

} else { // Either every request succeeded or the maximum number of rounds has been reached. Further processing of result can be done here.

console.log(`Requests finished after ${counter} round(s): ${RequestList.length - failedLength} succeeded, ${failedLength} failed\n`, result)

counter = 1

}

}).catch(e => {

console.log(e)

})

}
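
One thing to keep in mind with the version above: getDataById reissues the request inside its own catch, so a request that keeps failing keeps retrying in the background even after maxRequestTimes rounds have been logged. A possible variant, sketched here under my own assumptions, stores the failed ids instead of in-flight promises and uses Promise.allSettled to split each round into successes and failures. fetchAllWithRetry and flakyRequest are hypothetical names for this example only.

```javascript
// Sketch: retry in rounds, carrying failed ids (not promises) from one round to the next.
// `request` is any function id => Promise; `maxRounds` plays the role of maxRequestTimes.
async function fetchAllWithRetry (ids, request, maxRounds = 5) {
  const results = []
  let pending = ids
  let round = 0
  while (pending.length > 0 && round < maxRounds) {
    round++
    const settled = await Promise.allSettled(pending.map(id => request(id)))
    const failed = []
    settled.forEach((outcome, i) => {
      if (outcome.status === 'fulfilled') results.push(outcome.value)
      else failed.push(pending[i]) // only remember the id, nothing is reissued yet
    })
    pending = failed
  }
  return { results, failedIds: pending, rounds: round }
}

// Hypothetical stand-in for a real request: id 2 fails twice before it succeeds.
const attempts = {}
function flakyRequest (id) {
  attempts[id] = (attempts[id] || 0) + 1
  const ok = id !== 2 || attempts[id] > 2
  return new Promise((resolve, reject) => {
    setTimeout(() => (ok ? resolve({ id, msg: 'ok' }) : reject({ id, msg: 'error' })), 10)
  })
}

const run = fetchAllWithRetry([1, 2, 3], flakyRequest, 5)
run.then(({ results, failedIds, rounds }) => {
  console.log(rounds, results.map(r => r.id), failedIds) // 3 [ 1, 3, 2 ] []
})
```

Because a new request is only created at the start of a round, nothing is sent once maxRounds is reached, and whatever is left in failedIds can be logged or handled separately.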