I expect this article to take about a week to finish. The goal is to study every part of the getting-started material in the Flink documentation, in preparation for Alibaba's Flink programming competition:

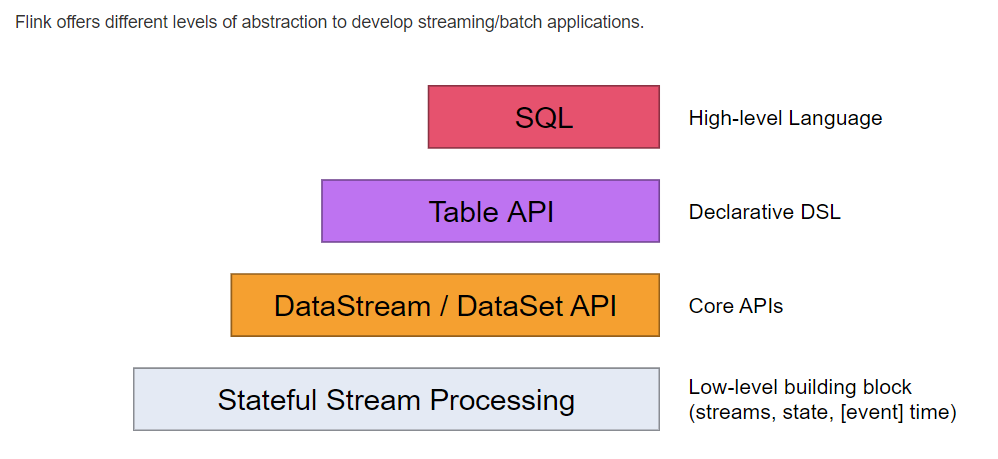

As the figure shows, Flink offers several levels of abstraction for programming. The lowest level is stateful stream processing, which is embedded in the DataStream API through the ProcessFunction. (Reportedly, the DataSet API will be removed in a future Flink release. In my current understanding, DataStream is for unbounded datasets and DataSet is for bounded datasets.) Let's start by exploring ProcessFunction.

ProcessFunction

ProcessFunction is a low-level stream processing operation that gives access to the basic building blocks of streaming applications. These building blocks correspond to the following three concepts. (Note, however, that later parts of the documentation describe the basic building blocks of a Flink program as streams and transformations.)

(1) Events (stream elements, i.e., the data flowing through the stream)

(2) State (fault-tolerant and consistent; only available on keyed streams. I don't fully understand this yet and will come back to it later.)

(3) Timers (based on event time or processing time; like state, only available on keyed streams; covered later).

A ProcessFunction is invoked for every event received on the input stream, and it can access keyed state and timers.

The state mechanism provides a context for tracking and processing state as each element arrives. On a keyed stream, this state is scoped to the key: every key has its own copy.
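To make "every key has its own copy" concrete, here is a minimal plain-Java model. It is not Flink's API: the class `KeyedStateModel` and its methods are hypothetical, and a `HashMap` stands in for Flink's keyed state. Each call plays the role of one `processElement` invocation and only touches the state slot for its element's key.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical plain-Java model of keyed state (not Flink's API).
// The "runtime" keeps one state slot per key; each processElement call
// reads and writes only the slot of the current element's key.
class KeyedStateModel {
    // Stand-in for a keyed ValueState<Long>: key -> that key's count.
    private final Map<String, Long> stateByKey = new HashMap<>();

    // Invoked once per incoming event, like ProcessFunction.processElement.
    long processElement(String key) {
        Long current = stateByKey.get(key);                  // like state.value(); null the first time
        long updated = (current == null) ? 1L : current + 1L;
        stateByKey.put(key, updated);                        // like state.update(...)
        return updated;
    }

    public static void main(String[] args) {
        KeyedStateModel fn = new KeyedStateModel();
        for (String key : new String[] {"a", "b", "a", "a", "b"}) {
            System.out.println(key + " -> " + fn.processElement(key));
        }
    }
}
```

Running `main` prints `a -> 1`, `b -> 1`, `a -> 2`, `a -> 3`, `b -> 2`: the counts for `a` and `b` never interfere with each other.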

Low-Level Joins

To perform low-level operations on two inputs, applications can use CoProcessFunction. This function is bound to two different inputs and receives records from each of them through separate calls to processElement1(...) and processElement2(...), respectively.

Implementing a low-level join typically follows this pattern:

1. Create a state object for one input (or for both)

2. Update the state when an element arrives on its input

3. When an element arrives on the other input, probe the state and produce the joined result
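The three steps can be sketched in plain Java. This is a hypothetical model, not Flink's `CoProcessFunction`: `LowLevelJoinModel` and its methods only mimic `processElement1(...)` and `processElement2(...)`, and two maps stand in for keyed state on both inputs (customers on input 1, transactions on input 2, both keyed by a customer id). Buffering transactions that arrive before their customer is one possible design choice, not the only one.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical plain-Java model of the low-level join pattern (not Flink's API).
// Both inputs are keyed by customer id, so each map stands in for per-key state.
class LowLevelJoinModel {
    // Step 1: state for input 1 (latest customer name per id) ...
    private final Map<String, String> customerState = new HashMap<>();
    // ... and state for input 2 (transactions that arrived before their customer).
    private final Map<String, List<Double>> pendingTxState = new HashMap<>();

    // Like processElement1: update own state (step 2), then probe the other side (step 3).
    List<String> processElement1(String customerId, String name) {
        customerState.put(customerId, name);
        List<String> out = new ArrayList<>();
        List<Double> pending = pendingTxState.remove(customerId);
        if (pending != null) {
            for (double amount : pending) {
                out.add(name + ":" + amount);
            }
        }
        return out;
    }

    // Like processElement2: probe the customer state; buffer if there is no match yet.
    List<String> processElement2(String customerId, double amount) {
        List<String> out = new ArrayList<>();
        String name = customerState.get(customerId);
        if (name != null) {
            out.add(name + ":" + amount);
        } else {
            pendingTxState.computeIfAbsent(customerId, k -> new ArrayList<>()).add(amount);
        }
        return out;
    }

    public static void main(String[] args) {
        LowLevelJoinModel join = new LowLevelJoinModel();
        System.out.println(join.processElement2("c1", 30.0));    // [] (customer unknown, buffered)
        System.out.println(join.processElement1("c1", "alice")); // [alice:30.0]
        System.out.println(join.processElement2("c1", 12.5));    // [alice:12.5]
    }
}
```

In `main`, the transaction for `c1` arrives before the customer record, so it is buffered and only emitted once the customer shows up; the later transaction joins immediately.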

For example, you might join customer data with financial transactions while keeping the customer data in state. If you need complete and deterministic joins even when events arrive out of order, you can use a timer to evaluate and emit the join for a transaction once the watermark of the customer data stream has passed the time of that transaction.
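Here is a rough plain-Java model of that timer idea for a single key. It is a sketch under my own assumptions, not Flink code: `WatermarkJoinModel`, `advanceWatermark`, and the rule "join with the customer version valid at the transaction's timestamp" are all hypothetical choices. A transaction is not joined when it arrives; it waits for a "timer" at its own timestamp, which fires only once the customer stream's watermark reaches that time.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical plain-Java model (not Flink's API) of one key's state in a
// timer-based join: a transaction is joined only after the customer stream's
// watermark has passed the transaction's timestamp.
class WatermarkJoinModel {
    // Customer state: event timestamp -> customer name (all versions seen so far).
    private final TreeMap<Long, String> customerVersions = new TreeMap<>();
    // Pending "timers": transaction timestamp -> amounts waiting to be joined.
    private final TreeMap<Long, List<Double>> pendingTx = new TreeMap<>();

    void processCustomer(long timestamp, String name) {
        customerVersions.put(timestamp, name);
    }

    // Instead of joining now, "register a timer" at the transaction's timestamp.
    void processTransaction(long timestamp, double amount) {
        pendingTx.computeIfAbsent(timestamp, t -> new ArrayList<>()).add(amount);
    }

    // Watermark advances: every timer at or below it fires, in timestamp order.
    List<String> advanceWatermark(long watermark) {
        List<String> out = new ArrayList<>();
        while (!pendingTx.isEmpty() && pendingTx.firstKey() <= watermark) {
            Map.Entry<Long, List<Double>> timer = pendingTx.pollFirstEntry();
            // Customer version valid at the transaction's time, if any.
            Map.Entry<Long, String> version = customerVersions.floorEntry(timer.getKey());
            String name = (version == null) ? "unknown" : version.getValue();
            for (double amount : timer.getValue()) {
                out.add(name + ":" + amount);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        WatermarkJoinModel join = new WatermarkJoinModel();
        join.processCustomer(100L, "alice-v1");
        join.processTransaction(250L, 40.0);
        join.processCustomer(200L, "alice-v2");          // arrives late, but is older than the transaction
        System.out.println(join.advanceWatermark(150L)); // [] (timer at 250 not due yet)
        System.out.println(join.advanceWatermark(300L)); // [alice-v2:40.0]
    }
}
```

Joining on arrival would have paired the transaction with `alice-v1`; waiting for the watermark yields `alice-v2:40.0` no matter in which order the two customer updates arrived.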

```java
import org.apache.flink.api.common.state.ValueState;
import org.apache.flink.api.common.state.ValueStateDescriptor;
import org.apache.flink.api.java.tuple.Tuple;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.functions.KeyedProcessFunction;
import org.apache.flink.util.Collector;

// the source stream
DataStream<Tuple2<String, String>> stream = ...;

// apply the process function onto a keyed stream
// keyBy(0) keys the stream by field 0 of the tuple, i.e. the String in f0.
// (keyBy(int) is deprecated in newer Flink releases in favor of a KeySelector such as
// keyBy(value -> value.f0); the key type of the KeyedProcessFunction then becomes String.)
// The interesting logic is in the function passed to process(): it emits a (key, count)
// pair whenever a key has received no new element for 60 seconds of event time.
DataStream<Tuple2<String, Long>> result = stream
    .keyBy(0)
    .process(new CountWithTimeoutFunction());

/**
 * The data type stored in the state.
 * It holds three fields: the key, the count for that key, and the time the key was
 * last updated. Each key of the keyed stream has its own instance.
 */
public class CountWithTimestamp {
    public String key;
    public long count;
    public long lastModified;
}

/**
 * The implementation of the ProcessFunction that maintains the count and timeouts.
 */
public class CountWithTimeoutFunction
        extends KeyedProcessFunction<Tuple, Tuple2<String, String>, Tuple2<String, Long>> {

    /** The state that is maintained by this process function (a ValueState member). */
    private ValueState<CountWithTimestamp> state;

    @Override
    public void open(Configuration parameters) throws Exception {
        state = getRuntimeContext().getState(new ValueStateDescriptor<>("myState", CountWithTimestamp.class));
    }

    @Override
    public void processElement(
            Tuple2<String, String> value,
            Context ctx,
            Collector<Tuple2<String, Long>> out) throws Exception {

        // retrieve the current count
        CountWithTimestamp current = state.value();
        if (current == null) {
            current = new CountWithTimestamp();
            current.key = value.f0;
        }

        // update the state's count
        current.count++;

        // set the state's timestamp to the record's assigned event time timestamp
        // (ctx.timestamp() returns null if no event-time timestamp was assigned)
        current.lastModified = ctx.timestamp();

        // write the state back
        state.update(current);

        // schedule the next timer 60 seconds from the current event time
        ctx.timerService().registerEventTimeTimer(current.lastModified + 60000);
    }

    // onTimer() is the callback for timers registered with registerEventTimeTimer() above:
    // register first, get called back later. Timers can also be based on processing time
    // (registerProcessingTimeTimer()).
    // out.collect() emits records into the result DataStream defined at the top.
    @Override
    public void onTimer(
            long timestamp,
            OnTimerContext ctx,
            Collector<Tuple2<String, Long>> out) throws Exception {

        // get the state for the key that scheduled the timer
        CountWithTimestamp result = state.value();

        // check if this is an outdated timer or the latest timer
        if (timestamp == result.lastModified + 60000) {
            // emit the state on timeout
            out.collect(new Tuple2<String, Long>(result.key, result.count));
        }
    }
}
```
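To check my understanding of the example without a Flink cluster, here is a plain-Java model of the same logic. It is a hypothetical sketch, not Flink's API: `CountWithTimeoutModel` keeps per-key state in a `HashMap` and timers in a `TreeMap`, and `advanceWatermark` stands in for Flink's event-time progress (a timer fires once the watermark reaches its timestamp). As in `onTimer`, a timer only emits if it is still the latest one for its key.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical plain-Java model (not Flink's API) of CountWithTimeoutFunction:
// per-key count state plus event-time timers that fire as the watermark advances.
class CountWithTimeoutModel {
    static final long TIMEOUT_MS = 60000L;

    // Mirrors the CountWithTimestamp state class (the key lives in the map).
    static final class CountWithTimestamp {
        long count;
        long lastModified;
    }

    private final Map<String, CountWithTimestamp> state = new HashMap<>();
    // Registered timers: timestamp -> keys with a timer at that timestamp.
    private final TreeMap<Long, TreeSet<String>> timers = new TreeMap<>();

    // Like processElement: bump the count, record the event time, register a timer.
    void processElement(String key, long eventTime) {
        CountWithTimestamp current = state.computeIfAbsent(key, k -> new CountWithTimestamp());
        current.count++;
        current.lastModified = eventTime;
        timers.computeIfAbsent(eventTime + TIMEOUT_MS, t -> new TreeSet<>()).add(key);
    }

    // Fires every timer whose timestamp is <= watermark, in timestamp order.
    List<String> advanceWatermark(long watermark) {
        List<String> out = new ArrayList<>();
        while (!timers.isEmpty() && timers.firstKey() <= watermark) {
            Map.Entry<Long, TreeSet<String>> due = timers.pollFirstEntry();
            for (String key : due.getValue()) {
                onTimer(key, due.getKey(), out);
            }
        }
        return out;
    }

    // Like onTimer: only the newest timer for a key matches; older ones are stale.
    private void onTimer(String key, long timestamp, List<String> out) {
        CountWithTimestamp result = state.get(key);
        if (timestamp == result.lastModified + TIMEOUT_MS) {
            out.add("(" + key + "," + result.count + ")");
        }
    }

    public static void main(String[] args) {
        CountWithTimeoutModel m = new CountWithTimeoutModel();
        m.processElement("a", 0L);
        m.processElement("a", 10000L); // makes the timer for "a" at 60000 stale
        m.processElement("b", 5000L);
        System.out.println(m.advanceWatermark(65000L)); // [(b,1)]
        System.out.println(m.advanceWatermark(70000L)); // [(a,2)]
    }
}
```

The second event for `a` pushes its timeout from 60000 to 70000, so the timer at 60000 is recognized as outdated and emits nothing; `a` is only reported once it has been quiet for a full 60 seconds.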

Timers:

The TimerService deduplicates timers per key and timestamp: if multiple timers are registered for the same timestamp, the onTimer() method is called only once.

Flink synchronizes calls to onTimer() and processElement(), so you don't have to worry about concurrent state modifications.
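The deduplication rule can be illustrated with another hypothetical plain-Java model (not Flink's `TimerService`). Timers are stored as a set of keys per timestamp, so registering the same (key, timestamp) pair several times still yields a single `onTimer` call. Everything runs on one thread, which mirrors the guarantee that `onTimer()` and `processElement()` never run concurrently.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical plain-Java model (not Flink's API) of a timer service that keeps
// at most one timer per (key, timestamp) pair.
class DedupTimerServiceModel {
    // timestamp -> keys with a timer at that timestamp; the set drops duplicate registrations.
    private final TreeMap<Long, Set<String>> timers = new TreeMap<>();
    private final List<String> onTimerCalls = new ArrayList<>();

    void registerEventTimeTimer(String key, long timestamp) {
        timers.computeIfAbsent(timestamp, t -> new TreeSet<>()).add(key);
    }

    // Fires due timers one at a time on the calling thread, so callbacks never overlap.
    void advanceWatermark(long watermark) {
        while (!timers.isEmpty() && timers.firstKey() <= watermark) {
            Map.Entry<Long, Set<String>> due = timers.pollFirstEntry();
            for (String key : due.getValue()) {
                onTimer(key, due.getKey());
            }
        }
    }

    // Stand-in for onTimer(): just records which (key, timestamp) fired.
    private void onTimer(String key, long timestamp) {
        onTimerCalls.add(key + "@" + timestamp);
    }

    List<String> calls() {
        return onTimerCalls;
    }

    public static void main(String[] args) {
        DedupTimerServiceModel service = new DedupTimerServiceModel();
        service.registerEventTimeTimer("a", 1000L);
        service.registerEventTimeTimer("a", 1000L);
        service.registerEventTimeTimer("a", 1000L);
        service.registerEventTimeTimer("b", 1000L);
        service.advanceWatermark(1000L);
        System.out.println(service.calls()); // [a@1000, b@1000]
    }
}
```

Three registrations for `a` at 1000 collapse into one callback, while `b` at the same timestamp still gets its own, since deduplication is per key.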

(Compared with Spark's documentation, Flink's documentation feels confusing and poorly organized here. I really don't like it.)

Timers are mainly explained in a later section. Since the documentation here is muddled, I'll set them aside for now.

https://cloud.tencent.com/developer/article/1172583