1, Foreword

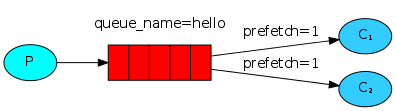

As mentioned earlier, when several consumers subscribe to the same queue, RabbitMQ distributes the queue's messages evenly among them in round-robin fashion, regardless of how long each message takes to process. If processing times differ, some consumers can end up busy all the time while others finish their work quickly and sit idle. We can set prefetchCount to limit how many unacknowledged messages the broker delivers to each consumer at once. For example, with prefetchCount=1 each consumer receives only one message at a time, and the broker sends it the next message only after it has acknowledged the current one.
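
The effect can be illustrated without a broker. The following is a minimal sketch, not RabbitMQ's actual scheduler: it assumes every message is already in the queue and that delivery is instantaneous. The function names and the sample durations are made up for illustration. It compares how long each of two consumers stays busy under plain round-robin dispatch and under a prefetch limit of 1.

```python
import heapq


def round_robin_finish_times(durations, n_consumers):
    """Assign messages in turn up front, ignoring how busy each consumer is."""
    busy_until = [0] * n_consumers
    for i, duration in enumerate(durations):
        busy_until[i % n_consumers] += duration
    return busy_until


def prefetch_one_finish_times(durations, n_consumers):
    """Give the next message to whichever consumer acknowledges first."""
    # Heap of (time at which the consumer becomes free, consumer index)
    free_at = [(0, c) for c in range(n_consumers)]
    heapq.heapify(free_at)
    busy_until = [0] * n_consumers
    for duration in durations:
        t, c = heapq.heappop(free_at)
        busy_until[c] = t + duration
        heapq.heappush(free_at, (t + duration, c))
    return busy_until


# Hypothetical processing times in seconds: slow and fast messages alternate
durations = [10, 1, 10, 1, 10, 1]
print(round_robin_finish_times(durations, 2))   # [30, 3]
print(prefetch_one_finish_times(durations, 2))  # [21, 12]
```

With round-robin dispatch one consumer gets every slow message and works for 30 seconds while the other is done after 3. With a prefetch limit of 1 the same work finishes after 21 seconds, because the idle consumer picks up the next message as soon as it is free.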

2, Examples

Producer:

    # -*- coding: UTF-8 -*-
    import pika

    connection = pika.BlockingConnection(
        pika.ConnectionParameters(host='localhost'))
    channel = connection.channel()

    # Declare a durable queue so that it survives a broker restart
    channel.queue_declare(queue='task_queue', durable=True)

    # Message body
    message = "Hello,World!"

    channel.basic_publish(
        exchange='',
        routing_key='task_queue',
        body=message,
        properties=pika.BasicProperties(
            delivery_mode=2,  # Mark the message as persistent
        ))
    print('[x] Sent %r' % message)

    connection.close()

Consumer:

    # -*- coding: UTF-8 -*-
    import pika
    import time
    import random

    connection = pika.BlockingConnection(
        pika.ConnectionParameters(host='localhost'))
    channel = connection.channel()

    # Declare a durable queue (same declaration as in the producer)
    channel.queue_declare(queue='task_queue', durable=True)
    print(' [*] Waiting for messages. To exit press CTRL+C')


    def callback(ch, method, properties, body):
        print('[x] Received %r' % body)
        # Simulate work that takes a different amount of time per message
        time.sleep(random.randint(1, 20))
        print('[x] Done')
        # Acknowledge the message once processing is complete; RabbitMQ
        # then removes it from the queue
        ch.basic_ack(delivery_tag=method.delivery_tag)


    # prefetch_count=1: while this consumer still has an unacknowledged
    # message, RabbitMQ will not send it another one
    channel.basic_qos(prefetch_count=1)

    # pika 1.0+ signature; pika 0.x used basic_consume(callback, queue='task_queue')
    channel.basic_consume(queue='task_queue', on_message_callback=callback)

    # Block here and keep consuming messages until interrupted
    channel.start_consuming()

You can run several consumers at once so that messages are received continuously. You will see that each consumer receives its next message only after it has finished processing and acknowledged the current one.