PoolArena is a core container in Netty's memory pool. Its main job is to manage the PoolChunks and PoolSubpages that have been created, and to delegate each allocation to one of these two kinds of sub-container according to the size of the request. This article first describes the overall structure of PoolArena, then its main attributes, and finally walks through the source code that PoolArena uses to allocate and release memory.

1. Overall structure

At a high level, PoolArena is an abstraction over memory allocation and release. It has two subclasses, structured as follows:

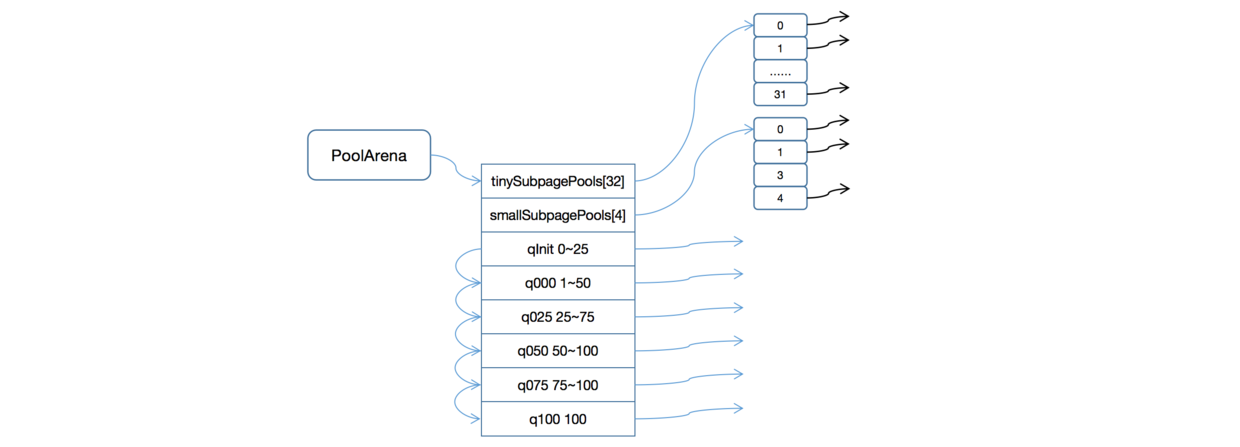

DirectArena and HeapArena are the two concrete implementations of PoolArena, each managing allocation and release for a different type of memory; the bulk of the memory-handling logic lives in PoolArena itself. Structurally, PoolArena consists of three kinds of sub-pool: tinySubpagePools, smallSubpagePools and a series of PoolChunkLists. tinySubpagePools and smallSubpagePools are both arrays of PoolSubpage, with lengths 32 and 4 respectively. A PoolChunkList is essentially a container that holds a series of PoolChunk objects, and Netty groups the PoolChunkLists into levels according to memory usage. The following is the structure diagram of PoolArena in its initial state:

A few points about the structure of PoolArena need explaining:

- In the initial state, tinySubpagePools is an array of length 32 and smallSubpagePools is an array of length 4. The remaining objects are all PoolChunkLists, which PoolArena distinguishes by memory usage: qInit -> 0-25%, q000 -> 1-50%, q025 -> 25-75%, q050 -> 50-100%, q075 -> 75-100%, q100 -> 100%.

- Initially, every element of the tinySubpagePools and smallSubpagePools arrays is empty, and no PoolChunkList contains a PoolChunk. As the figure shows, a PoolChunkList not only holds PoolChunk objects but also has a pointer to the PoolChunkList of the next higher usage level. PoolArena is designed this way so that a newly created PoolChunk only has to be added to qInit; it is then passed along the chain according to its current usage until it reaches a PoolChunkList whose range contains that usage.

- tinySubpagePools serves memory blocks of at most 496 bytes. It divides the range up to 496 bytes into 31 levels in steps of 16 bytes and stores them at indexes 1-31 of tinySubpagePools. Note that each element of tinySubpagePools holds a linked list of PoolSubpages. In other words, the PoolSubpages at index 1 maintain 16-byte blocks, those at index 2 maintain 32-byte blocks, those at index 3 maintain 48-byte blocks, and so on up to index 31, which maintains 496-byte blocks. For how PoolSubpage works, see my earlier article, PoolSubpage of Netty Memory Pool.

- The smallSubpagePools array has length 4 and serves block sizes from 512 bytes up to, but not including, 8 KB. The sizes are powers of 2: the PoolSubpages at index 0 maintain 512-byte blocks, index 1 maintains 1024 bytes, index 2 maintains 2048 bytes, and index 3 maintains 4096 bytes. Note that the size maintained by an element is the maximum request size it serves. If a request is for 5000 bytes, which is greater than 4096, PoolArena rounds it up to 8192 and hands it to a PoolChunk.

- qInit, q000, q025, q050, q075 and q100 in the figure are each a PoolChunkList. Their main job is to hold the PoolChunks whose usage falls within their range. The following points about PoolChunkList are worth noting:

- A PoolChunkList keeps a head pointer to a PoolChunk, and the PoolChunks themselves are linked together as a list. When a new PoolChunk is added to a PoolChunkList, it is inserted at the head of that list.

- The PoolChunkLists are also linked to one another: as the figure shows, each one holds a pointer to the PoolChunkList of the next usage level, in the order drawn. The benefit is that adding a PoolChunk only requires calling the add() method of qInit. Each PoolChunkList checks whether the PoolChunk's usage falls within its own range; if so, the PoolChunk is added to the list it maintains, otherwise it is handed to the next PoolChunkList.

- Each PoolChunkList in the figure is followed by a numeric range, which is the usage range it maintains: 0-25% for qInit, 1-50% for q000, and so on.

- A PoolChunkList also moves the PoolChunks it maintains. Suppose a PoolChunk with 23% usage currently sits in qInit, and an allocation takes another 5% of its memory. Usage is now 28%, which no longer fits the range of qInit (0-25%). qInit therefore hands the PoolChunk to its next PoolChunkList, q000, which finds that 28% falls within its own range and adds the PoolChunk to its list.

- To illustrate the allocation process, take a request for 30 bytes as an example:

- From the partitioning above, the size ranges in PoolArena are: tinySubpagePools -> up to 496 bytes, smallSubpagePools -> 512-4096 bytes, PoolChunkList -> 8 KB-16 MB. PoolArena first determines which range the request falls in; since 30 < 496, the request goes to tinySubpagePools.

- Because the levels in tinySubpagePools are 16 bytes apart, PoolArena rounds the request up to the next multiple of 16, here 32. (For requests in the smallSubpagePools or PoolChunkList range, the size is instead rounded up to the next power of 2.) 32 corresponds to index 2, so PoolArena takes the PoolSubpage list at tinySubpagePools[2] and, starting from the node after the head node (the head node itself holds no memory blocks), checks whether a block is available. If so, the block is wrapped in a ByteBuf object and returned.

- In the initial state the tinySubpagePools elements are empty, so the steps above find no block and the request is passed on to the PoolChunkLists. PoolArena tries the PoolChunkLists one after another (the order appears in allocateNormal() in section 3.1); if one of them can serve the request, the block is wrapped in a ByteBuf object and returned.

- Initially no PoolChunkList holds a PoolChunk. PoolArena therefore creates a new PoolChunk, each of which maintains 16 MB of memory, and hands the allocation to it. Once the PoolChunk has allocated the memory, PoolArena adds it to qInit as described earlier, and qInit moves it to the PoolChunkList that matches its usage.

- A note on how the PoolChunk allocates: we asked for 30 bytes, but the smallest unit a PoolChunk allocates is 8 KB. So after allocating 8 KB, the PoolChunk hands that memory to a PoolSubpage and sets the PoolSubpage's block size to 32 bytes. The PoolSubpage is then placed at the position in tinySubpagePools that matches its block size, that is, in the PoolSubpage list at tinySubpagePools[2]. Finally, a block of the rounded-up size, 32 bytes, is allocated from the PoolSubpage, wrapped in a ByteBuf object and returned.

- As you can see, PoolArena maintains memory blocks dynamically, handing each request to a different object depending on the size of the requested block. Because allocation is a high-frequency operation shared by many threads, partitioning memory this way reduces lock contention under concurrency. The following figure shows the structure of a PoolArena after several allocations:
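
To make the size-based routing in the walkthrough concrete, here is a minimal sketch of how an already rounded-up size could be mapped to a container and an array index. The class and method names are hypothetical and the code is not taken from Netty; it only assumes the 512-byte and 8 KB boundaries and the index layout described in this section.

```java
// Illustrative sketch only: names are hypothetical, not Netty's API.
final class SizeRoutingSketch {
    static final int PAGE_SIZE = 8192;  // 8 KB, the smallest unit a PoolChunk hands out
    static final int TINY_LIMIT = 512;  // sizes below this belong to tinySubpagePools

    /** Index into a 32-slot tiny table: one slot per 16-byte step (16 -> 1, 32 -> 2, ...). */
    static int tinyIdx(int normCapacity) {
        return normCapacity >>> 4;
    }

    /** Index into a 4-slot small table: 512 -> 0, 1024 -> 1, 2048 -> 2, 4096 -> 3. */
    static int smallIdx(int normCapacity) {
        // Assumes normCapacity is a power of two; log2(512) = 9.
        return Integer.numberOfTrailingZeros(normCapacity) - 9;
    }

    /** Names the container that would serve an already rounded-up size. */
    static String route(int normCapacity) {
        if (normCapacity < TINY_LIMIT) {
            return "tinySubpagePools[" + tinyIdx(normCapacity) + "]";
        }
        if (normCapacity < PAGE_SIZE) {
            return "smallSubpagePools[" + smallIdx(normCapacity) + "]";
        }
        return "PoolChunkList";
    }

    public static void main(String[] args) {
        System.out.println(route(32));    // the 30-byte request after rounding up to 32
        System.out.println(route(1024));
        System.out.println(route(8192));  // the 5000-byte request after rounding up to 8192
    }
}
```

The sketch deliberately takes the rounded-up size as input, so the rounding rules and the index computation stay separate concerns.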

2. PoolArena's main attributes

PoolArena has many fields that control PoolSubpage, PoolChunk and PoolChunkList. Understanding what these fields do makes the Netty memory pool source much easier to read. The main fields of PoolArena are:

    // Length of the tinySubpagePools array. Adjacent elements of tinySubpagePools differ by
    // 16 bytes, so the length is 512 / 16, written here as 512 >>> 4.
    static final int numTinySubpagePools = 512 >>> 4;
    // Reference to the owning PooledByteBufAllocator.
    final PooledByteBufAllocator parent;
    // A PoolChunk is backed by a balanced binary tree; this field is the depth of that tree.
    private final int maxOrder;
    // Size of the memory block represented by each leaf node of a PoolChunk.
    final int pageSize;
    // log2 of the leaf node size. The default leaf is 8 KB = 2^13, so the default is 13.
    // It is used to compute quickly which level of the binary tree a requested size belongs to,
    // from the difference between log2 of that size and pageShifts.
    final int pageShifts;
    // Initial size of a PoolChunk, 16 MB by default.
    final int chunkSize;
    // Because the size of a PoolSubpage is 8 KB = 8192 bytes, the value of this field is
    // -8192 => 1111 1111 1111 1111 1110 0000 0000 0000
    // To test whether a requested size is less than 8 KB, AND it with this mask. A result of 0
    // means the size is less than 8 KB, so the request should first be tried in
    // tinySubpagePools or smallSubpagePools.
    final int subpageOverflowMask;
    // Length of the smallSubpagePools array, 4 by default.
    final int numSmallSubpagePools;
    // Alignment value for the direct memory cache.
    final int directMemoryCacheAlignment;
    // Mask used when checking against the direct memory cache alignment value.
    final int directMemoryCacheAlignmentMask;
    // Array of PoolSubpages for blocks smaller than 512 bytes. It is layered: layer 1 serves
    // only 16-byte blocks, layer 2 only 32-byte blocks, ..., layer 31 only 496-byte blocks.
    private final PoolSubpage<T>[] tinySubpagePools;
    // For requests from 512 bytes up to 8 KB. The array length is 4 and the block sizes are
    // 512, 1024, 2048 and 4096 bytes.
    private final PoolSubpage<T>[] smallSubpagePools;
    // Holds PoolChunks with 50-100% usage.
    private final PoolChunkList<T> q050;
    // Holds PoolChunks with 25-75% usage.
    private final PoolChunkList<T> q025;
    // Holds PoolChunks with 1-50% usage.
    private final PoolChunkList<T> q000;
    // Holds PoolChunks with 0-25% usage.
    private final PoolChunkList<T> qInit;
    // Holds PoolChunks with 75-100% usage.
    private final PoolChunkList<T> q075;
    // Holds PoolChunks with 100% usage.
    private final PoolChunkList<T> q100;
    // Records how many threads are currently using this PoolArena. When a thread requests new
    // memory, it finds the least-used PoolArena and allocates from it, which reduces
    // contention between threads.
    final AtomicInteger numThreadCaches = new AtomicInteger();
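
As a rough illustration of how several of these fields relate to one another, the sketch below derives them from the 8 KB page size and 16 MB chunk size quoted above. The class name is hypothetical, and the tree depth of 11 is an assumption inferred from 16 MB / 8 KB = 2^11 rather than a value stated in this article.

```java
// Illustrative sketch only: the class name is hypothetical and this is not Netty's code.
final class ArenaConstantsSketch {
    static final int PAGE_SIZE = 8192;   // 8 KB leaf size, from the text
    static final int MAX_ORDER = 11;     // assumed: 16 MB / 8 KB = 2^11 leaves
    static final int PAGE_SHIFTS = Integer.numberOfTrailingZeros(PAGE_SIZE); // log2(8192) = 13
    static final int CHUNK_SIZE = PAGE_SIZE << MAX_ORDER;                    // 16 MB
    static final int SUBPAGE_OVERFLOW_MASK = ~(PAGE_SIZE - 1);               // same bits as -8192

    /** True when no bit at or above the page-size bit is set, i.e. the size is below 8 KB. */
    static boolean isTinyOrSmall(int normCapacity) {
        return (normCapacity & SUBPAGE_OVERFLOW_MASK) == 0;
    }

    public static void main(String[] args) {
        System.out.println("pageShifts = " + PAGE_SHIFTS);
        System.out.println("chunkSize  = " + CHUNK_SIZE);
        System.out.println("mask       = " + Integer.toBinaryString(SUBPAGE_OVERFLOW_MASK));
        System.out.println("4096 below a page? " + isTinyOrSmall(4096));
        System.out.println("8192 below a page? " + isTinyOrSmall(8192));
    }
}
```

The mask test replaces a comparison with a single AND, which is why the field is precomputed once per arena.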

3. Source Code Walkthrough

3.1 Memory Allocation

PoolArena allocates memory largely as described above. For how PoolChunk and PoolSubpage handle allocation and release, see my earlier articles: PoolChunk Principle of Netty Memory Pool and PoolSubpage of Netty Memory Pool. Here we focus on allocation at the PoolArena level. The following is the source code of its allocate() method:

    PooledByteBuf<T> allocate(PoolThreadCache cache, int reqCapacity, int maxCapacity) {
        // newByteBuf() creates a PooledByteBuf object, but the object is not yet initialized:
        // its internal ByteBuffer, readerIndex, writerIndex and other fields hold default values.
        PooledByteBuf<T> buf = newByteBuf(maxCapacity);
        // Initialize the memory-related data of the ByteBuf just created. We use DirectArena
        // for the explanation, so the memory is requested through its allocate() method.
        allocate(cache, buf, reqCapacity);
        return buf;
    }

This method is only an entry point: it creates a ByteBuf object whose attributes all have default values, and then delegates the real allocation to the allocate() overload that follows.

    private void allocate(PoolThreadCache cache, PooledByteBuf<T> buf, final int reqCapacity) {
        // normalizeCapacity() normalizes the requested capacity. The main rules are:
        // 1. If the capacity is less than 16 bytes, 16 is returned.
        // 2. If the capacity is greater than 16 bytes and less than 512 bytes, it is rounded up
        //    to the next multiple of 16. For example, a 100-byte request becomes 112 bytes.
        // 3. If the capacity is greater than 512 bytes, it is rounded up to the next power of 2.
        //    For example, a 1000-byte request becomes 1024 bytes.
        final int normCapacity = normalizeCapacity(reqCapacity);
        // Is the capacity less than 8 KB? If so, allocate in tiny or small mode.
        if (isTinyOrSmall(normCapacity)) {
            int tableIdx;
            PoolSubpage<T>[] table;
            // Is the capacity less than 512 bytes? If so, it is of type tiny.
            boolean tiny = isTiny(normCapacity);
            if (tiny) {
                // First try the cache of the current thread. On success, return directly;
                // the method has already initialized the ByteBuf with the allocated memory.
                if (cache.allocateTiny(this, buf, reqCapacity, normCapacity)) {
                    return;
                }
                // The thread cache could not serve the request, so try tinySubpagePools.
                // tinyIdx() computes which element of the tinySubpagePools array the size maps to.
                tableIdx = tinyIdx(normCapacity);
                table = tinySubpagePools;
            } else {
                // The size is between 512 bytes and 8 KB, so allocate from smallSubpagePools.
                // First try the small-level cache of the current thread; on success, return directly.
                if (cache.allocateSmall(this, buf, reqCapacity, normCapacity)) {
                    return;
                }
                // The thread cache could not serve the request, so try smallSubpagePools.
                // smallIdx() computes which element of smallSubpagePools the size maps to.
                tableIdx = smallIdx(normCapacity);
                table = smallSubpagePools;
            }

            // Get the head node of the target element.
            final PoolSubpage<T> head = table[tableIdx];

            // head is locked here, and s != head is checked inside the synchronized block.
            // s != head means the list contains a PoolSubpage with free space, because an
            // exhausted PoolSubpage is removed from the list. So whenever s != head, the
            // allocate() call is guaranteed to obtain the requested block.
            synchronized (head) {
                final PoolSubpage<T> s = head.next;
                if (s != head) {
                    // Allocate from the PoolSubpage.
                    long handle = s.allocate();
                    // Initialize the ByteBuf with the allocated memory.
                    s.chunk.initBufWithSubpage(buf, null, handle, reqCapacity);
                    // Update the tiny or small allocation counter.
                    incTinySmallAllocation(tiny);
                    return;
                }
            }
            synchronized (this) {
                // Reaching this point means the target PoolSubpage list could not serve the
                // request, so try to allocate from a PoolChunk.
                allocateNormal(buf, reqCapacity, normCapacity);
            }

            // Update the tiny or small allocation counter.
            incTinySmallAllocation(tiny);
            return;
        }
        // The size is at least 8 KB. If it is larger than 16 MB it is not managed by the
        // memory pool; otherwise it is allocated from the PoolChunkLists.
        if (normCapacity <= chunkSize) {
            // At most 16 MB: first try the cache of the current thread and return directly on success.
            if (cache.allocateNormal(this, buf, reqCapacity, normCapacity)) {
                return;
            }
            synchronized (this) {
                // The thread cache could not serve the request, so allocate from the PoolChunkLists.
                allocateNormal(buf, reqCapacity, normCapacity);
                ++allocationsNormal;
            }
        } else {
            // Netty does not pool memory larger than 16 MB; it allocates it directly and
            // returns it to the caller.
            allocateHuge(buf, reqCapacity);
        }
    }
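
The three rounding rules listed in the comment on normalizeCapacity() can be sketched as follows. This is a simplified stand-in written from those rules, not Netty's implementation: the class and method names are hypothetical, and edge cases such as zero-length requests, alignment and very large sizes are handled only as far as the assumptions in the comments state.

```java
// Illustrative sketch only: follows the three rules in the comment, not Netty's source.
final class NormalizeSketch {
    /** Rounds a requested capacity up to the size that would actually be allocated. */
    static int normalize(int reqCapacity) {
        // Assumption: requests above 2^30 are out of scope, so the shift below cannot overflow.
        if (reqCapacity < 0 || reqCapacity > (1 << 30)) {
            throw new IllegalArgumentException("reqCapacity out of range: " + reqCapacity);
        }
        if (reqCapacity >= 512) {
            // Rule 3: round up to the next power of two (powers of two are kept as they are).
            int highest = Integer.highestOneBit(reqCapacity);
            return highest == reqCapacity ? reqCapacity : highest << 1;
        }
        if (reqCapacity <= 16) {
            // Rule 1: anything up to 16 bytes becomes 16.
            return 16;
        }
        // Rule 2: round up to the next multiple of 16.
        return (reqCapacity + 15) & ~15;
    }

    public static void main(String[] args) {
        int[] samples = {10, 30, 100, 496, 1000, 5000};
        for (int size : samples) {
            System.out.println(size + " -> " + normalize(size));
        }
    }
}
```

With these rules, the 30-byte request from section 1 becomes 32 bytes and the 5000-byte request becomes 8192 bytes.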

The allocate() method above is PoolArena's main allocation flow. It first rounds the request up according to the rules for its size level and determines which level the request belongs to: tiny, small or normal. It then tries to allocate from the cache of the current thread; on success it returns directly, otherwise it allocates at that level. For tiny and small requests that the subpage pools cannot serve, the request is handed to the PoolChunkLists. Next we look at how PoolArena allocates from the PoolChunkLists. Here is the source code of allocateNormal():

    private void allocateNormal(PooledByteBuf<T> buf, int reqCapacity, int normCapacity) {
        // Hand the request to each PoolChunkList in the order q050 -> q025 -> q000 -> qInit -> q075.
        // If one of them can allocate the memory, return directly.
        if (q050.allocate(buf, reqCapacity, normCapacity)
                || q025.allocate(buf, reqCapacity, normCapacity)
                || q000.allocate(buf, reqCapacity, normCapacity)
                || qInit.allocate(buf, reqCapacity, normCapacity)
                || q075.allocate(buf, reqCapacity, normCapacity)) {
            return;
        }

        // None of the PoolChunkLists could serve the request, so create a new PoolChunk,
        // allocate the memory from it, and finally add the PoolChunk to qInit.
        PoolChunk<T> c = newChunk(pageSize, maxOrder, pageShifts, chunkSize);
        boolean success = c.allocate(buf, reqCapacity, normCapacity);
        qInit.add(c);
    }

The flow here is simple: try each PoolChunkList in a fixed order, return as soon as one of them succeeds, and otherwise create a new PoolChunk and allocate from it. Note that a PoolChunkList serves an allocation by delegating to the PoolChunks it holds. After a successful allocation it also checks whether the PoolChunk's usage now exceeds the threshold of the current PoolChunkList; if so, the PoolChunk is moved to the next PoolChunkList.
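
The hand-off between PoolChunkLists can be modeled with a small chain in which each list passes a chunk on once its usage reaches the list's upper bound. The sketch below is a simplified assumption-based model: the class is hypothetical, a chunk is reduced to its usage percentage, the thresholds follow the ranges listed in section 1, and only the forward hand-off on add() is modeled.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch only: a chunk is represented by its usage percentage.
final class ChunkListSketch {
    final String name;
    final int maxUsage;            // a chunk at or above this usage is passed to the next list
    final ChunkListSketch next;    // list for the next usage level, or null for the last one
    final List<Integer> chunks = new ArrayList<>();

    ChunkListSketch(String name, int maxUsage, ChunkListSketch next) {
        this.name = name;
        this.maxUsage = maxUsage;
        this.next = next;
    }

    /** Adds a chunk, passing it along the chain if needed; returns the name of the accepting list. */
    String add(int usagePercent) {
        if (usagePercent >= maxUsage && next != null) {
            return next.add(usagePercent);
        }
        chunks.add(0, usagePercent);   // new chunks go to the head of the list
        return name;
    }

    /** Builds qInit -> q000 -> q025 -> q050 -> q075 -> q100 and returns qInit. */
    static ChunkListSketch newChain() {
        ChunkListSketch q100 = new ChunkListSketch("q100", Integer.MAX_VALUE, null);
        ChunkListSketch q075 = new ChunkListSketch("q075", 100, q100);
        ChunkListSketch q050 = new ChunkListSketch("q050", 100, q075);
        ChunkListSketch q025 = new ChunkListSketch("q025", 75, q050);
        ChunkListSketch q000 = new ChunkListSketch("q000", 50, q025);
        return new ChunkListSketch("qInit", 25, q000);
    }

    public static void main(String[] args) {
        ChunkListSketch qInit = newChain();
        System.out.println(qInit.add(23));   // stays in qInit
        System.out.println(qInit.add(28));   // handed on to q000
        System.out.println(qInit.add(100));  // travels to the end of the chain
    }
}
```

Because the usage ranges overlap, the list a chunk lands in depends on where it enters the chain, which is why new chunks always enter at qInit.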

3.2 Memory Release

PoolArena releases memory in one of two ways, depending on whether the block is pooled. An unpooled block is destroyed directly. A pooled block is first offered to the cache of the current thread and, if the cache does not accept it, returned to the shared pool. The following is the source code of the free() method:

    void free(PoolChunk<T> chunk, ByteBuffer nioBuffer, long handle, int normCapacity, PoolThreadCache cache) {
        // Unpooled: destroy the memory block directly and update the related counters.
        if (chunk.unpooled) {
            int size = chunk.chunkSize();
            destroyChunk(chunk);
            activeBytesHuge.add(-size);
            deallocationsHuge.increment();
        } else {
            // Pooled: first determine the size class (tiny, small or normal), then hand the
            // block to the cache of the current thread. If it is added successfully, return.
            SizeClass sizeClass = sizeClass(normCapacity);
            if (cache != null && cache.add(this, chunk, nioBuffer, handle, normCapacity, sizeClass)) {
                return;
            }

            // If the cache of the current thread is full, return the block to the shared pool.
            freeChunk(chunk, handle, sizeClass, nioBuffer);
        }
    }
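
The pooled branch follows a "try the thread cache first, fall back to the shared pool" pattern. The sketch below shows that pattern in isolation, under stated assumptions: all names are hypothetical, the cache is a plain bounded stack of handles, and the size-class boundaries are the 512-byte and 8 KB limits used throughout this article.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Illustrative sketch only: models the release decision, not Netty's data structures.
final class FreePathSketch {
    enum SizeClass { TINY, SMALL, NORMAL }

    /** Classifies an already rounded-up size using the 512-byte and 8 KB boundaries. */
    static SizeClass sizeClass(int normCapacity) {
        if (normCapacity >= 8192) {
            return SizeClass.NORMAL;
        }
        return normCapacity < 512 ? SizeClass.TINY : SizeClass.SMALL;
    }

    /** Stand-in for a per-thread cache that accepts handles until it is full. */
    static final class BoundedCache {
        private final Deque<Long> handles = new ArrayDeque<>();
        private final int capacity;

        BoundedCache(int capacity) {
            this.capacity = capacity;
        }

        boolean add(long handle) {
            if (handles.size() >= capacity) {
                return false;   // cache is full, caller must fall back
            }
            handles.push(handle);
            return true;
        }
    }

    /** Returns where the released block ended up. */
    static String free(long handle, BoundedCache cache) {
        if (cache != null && cache.add(handle)) {
            return "cached";
        }
        return "returned to arena";
    }

    public static void main(String[] args) {
        BoundedCache cache = new BoundedCache(1);
        System.out.println(sizeClass(32) + ": " + free(1L, cache));
        System.out.println(sizeClass(1024) + ": " + free(2L, cache));
        System.out.println(sizeClass(8192) + ": " + free(3L, null));
    }
}
```

Keeping recently freed blocks in a per-thread cache means the next allocation of the same size class by that thread can often be served without taking the arena lock.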

4. Summary

This article first described the overall structure of PoolArena and how it routes allocation requests, then introduced the role of its main fields, and finally walked through the allocation and release flow from the point of view of the source code.