1. What is pkuseg

pkuseg is a Chinese word segmentation toolkit developed by the Language Computing and Machine Learning research group at Peking University. GitHub address: https://github.com/lancopku/pkuseg-python

2. Characteristics

- Multi-domain word segmentation. Unlike earlier general-purpose Chinese word segmentation tools, this toolkit also provides pre-trained models tailored to different domains. Users can choose a model according to the domain of the text to be segmented. Pre-trained models are currently available for the news, web, medicine, tourism, and mixed domains. If the domain of the text is known, load the corresponding model. If the domain cannot be determined, the general model trained on the mixed domain is recommended. See example.txt for segmentation examples in each domain.

- High segmentation accuracy. Compared with other word segmentation toolkits, pkuseg greatly improves segmentation accuracy across domains. According to the authors' test results, pkuseg reduces the word segmentation error rate by 79.33% and 63.67% on the sample datasets MSRA and CTB8, respectively.

- User-trained models. Users can train new models on their own annotated data.

- Part-of-speech tagging.
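The error-rate figures quoted above are relative reductions, which are easy to misread as absolute accuracy gains. The sketch below shows the arithmetic with made-up scores (they are not the published MSRA or CTB8 results), and it assumes that the error rate is taken as 100 minus the F-score; the source does not state how its figures were derived.

```python
def relative_error_reduction(baseline_score, new_score):
    """Return the relative error reduction in percent.

    Assumption: error rate = 100 - score, with scores given in percent.
    """
    baseline_error = 100.0 - baseline_score
    new_error = 100.0 - new_score
    if baseline_error <= 0:
        raise ValueError("baseline score must be below 100")
    return (baseline_error - new_error) / baseline_error * 100.0


# Hypothetical scores: a baseline at 90.0 and a new system at 95.0.
# The error drops from 10.0 to 5.0, which is a 50% relative reduction.
reduction = relative_error_reduction(90.0, 95.0)
print(f"{reduction:.2f}%")
```

A large relative reduction can therefore correspond to a gain of only a few points in the score itself.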

3. Compilation and installation

pip install pkuseg

Then load the package with import pkuseg.

4. Mode of use

4.1 Word segmentation with the default configuration

(If the domain of the text cannot be determined, segmentation with the default model is recommended.)

import pkuseg

seg = pkuseg.pkuseg() # Load the model with the default configuration

text = seg.cut('我爱北京天安门') # Perform word segmentation

print(text)

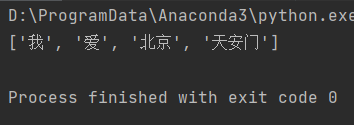

Output:

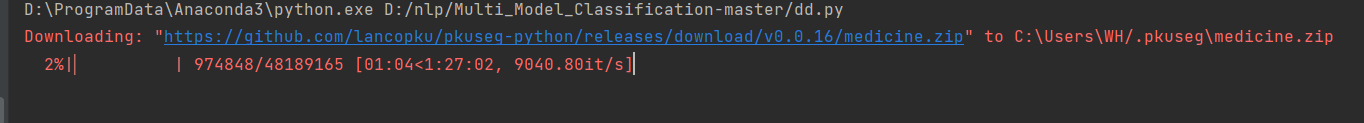

4.2 Domain-specific word segmentation

(If the domain of the text is known, segmentation with the corresponding domain model is recommended.)

import pkuseg

seg = pkuseg.pkuseg(model_name='medicine') # The corresponding domain model is downloaded automatically

text = seg.cut('我爱北京天安门') # Perform word segmentation

print(text)
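The rule described above (use a domain model when the domain is known, otherwise fall back to the mixed-domain default) can be wrapped in a small helper. This is a minimal sketch: the helper name choose_model_name is hypothetical, and the domain names are the ones listed in the parameter description of this article.

```python
# Domain models named in this article; "default" is the mixed-domain model.
KNOWN_DOMAINS = {"news", "web", "medicine", "tourism"}


def choose_model_name(domain=None):
    """Return a value suitable for model_name, falling back to "default"."""
    if domain is None:
        return "default"
    name = domain.strip().lower()
    return name if name in KNOWN_DOMAINS else "default"


print(choose_model_name("Medicine"))  # known domain
print(choose_model_name("finance"))   # unknown domain, falls back to the default
```

The result can then be passed on as pkuseg.pkuseg(model_name=choose_model_name(domain)).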

4.3 Part-of-speech tagging

See tags.txt for the meaning of each part-of-speech tag.

import pkuseg

seg = pkuseg.pkuseg(postag=True) # Enable part of speech tagging

text = seg.cut('我爱北京天安门') # Perform word segmentation and part-of-speech tagging

print(text)
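With part-of-speech tagging enabled, the result is assumed here to be a list of (word, tag) pairs; this article does not show the return value, so treat that shape as an assumption. The sketch below formats such pairs as word/tag text. The sample list is written by hand for illustration and is not actual pkuseg output, and format_tagged is a hypothetical helper.

```python
def format_tagged(pairs, sep="/"):
    """Join (word, tag) pairs into a single 'word/tag word/tag' string."""
    return " ".join(f"{word}{sep}{tag}" for word, tag in pairs)


# Hand-written sample in the assumed output shape (not real pkuseg output).
sample = [("我", "r"), ("爱", "v"), ("北京", "ns"), ("天安门", "ns")]
print(format_tagged(sample))
```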

4.4 Word segmentation of files

import pkuseg

# Segment the file input.txt and write the result to output.txt

# Use 10 processes

pkuseg.test('input.txt', 'output.txt', nthread=10)

4.5 Using an additional user-defined dictionary

import pkuseg

seg = pkuseg.pkuseg(user_dict='my_dict.txt') # Use the user dictionary "my_dict.txt" in the current directory

text = seg.cut('我爱北京天安门') # Perform word segmentation

print(text)
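The dictionary file format is described in the parameter section of this article: one word per line, optionally followed by a tab character and the word's part of speech. The sketch below writes such a file. The helper write_user_dict and the sample entries are hypothetical, and the file goes to a temporary directory so that nothing in the working directory is overwritten.

```python
import os
import tempfile


def write_user_dict(path, entries):
    """Write (word, tag) entries, one per line; tag may be None."""
    with open(path, "w", encoding="utf-8") as f:
        for word, tag in entries:
            # A known part of speech follows the word after a tab character.
            line = word if tag is None else f"{word}\t{tag}"
            f.write(line + "\n")


# Sample entries for illustration only.
entries = [("天安门", "ns"), ("北京大学", None)]
dict_path = os.path.join(tempfile.mkdtemp(), "my_dict.txt")
write_user_dict(dict_path, entries)

with open(dict_path, encoding="utf-8") as f:
    dict_text = f.read()
print(dict_text)
```

The resulting path can be passed as user_dict when creating the segmenter.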

4.6 Word segmentation with a self-trained model (using the CTB8 model as an example)

import pkuseg

seg = pkuseg.pkuseg(model_name='./ctb8') # Assumes the ctb8 model has been downloaded and placed in the './ctb8' directory. Load it by setting model_name

text = seg.cut('我爱北京天安门') # Perform word segmentation

print(text)

4.7 Training a new model (randomly initialized)

import pkuseg

# The training file is 'msr_training.utf8'

# The test file is 'msr_test_gold.utf8'

# The trained model is saved to the './models' directory

# In training mode, the model from the last round is saved as the final model

# Only UTF-8 encoding is currently supported. In the training and test sets, all words must be separated by one or more spaces

pkuseg.train('msr_training.utf8', 'msr_test_gold.utf8', './models')
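The training and test files must be UTF-8 text with words separated by spaces. The sketch below writes already-segmented sentences in that form. Putting one sentence on each line is an assumption, since the article only specifies the encoding and the space separation; write_training_file and the sample sentences are hypothetical.

```python
import os
import tempfile


def write_training_file(path, sentences):
    """Write segmented sentences as UTF-8 text, words separated by one space."""
    with open(path, "w", encoding="utf-8") as f:
        for words in sentences:
            # Drop empty tokens so that no double spaces or blank lines appear.
            tokens = [w.strip() for w in words if w.strip()]
            if tokens:
                f.write(" ".join(tokens) + "\n")


# Hand-segmented sample sentences for illustration only.
sentences = [["我", "爱", "北京", "天安门"], ["今天", "天气", "很", "好"], []]
train_path = os.path.join(tempfile.mkdtemp(), "train.txt")
write_training_file(train_path, sentences)

with open(train_path, encoding="utf-8") as f:
    train_text = f.read()
print(train_text)
```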

4.8 Fine-tuning (continuing training from a preloaded model)

import pkuseg

# The training file is 'train.txt'

# The test file is 'test.txt'

# Load the model in the './pretrained' directory, save the trained model to './models', and train for 10 rounds

pkuseg.train('train.txt', 'test.txt', './models', train_iter=10, init_model='./pretrained')

4.9 Parameter description

1) Model configuration

pkuseg.pkuseg(model_name="default", user_dict="default", postag=False)

model_name: model path.
- "default": the default value. Uses the pre-trained mixed-domain model (only for users who installed via pip).
- "news": use the news domain model.
- "web": use the web domain model.
- "medicine": use the medicine domain model.
- "tourism": use the tourism domain model.
- model_path: load the model from a user-specified path.

user_dict: user dictionary setting.
- "default": the default value. Uses the dictionary provided with pkuseg.
- None: do not use a dictionary.
- dict_path: in addition to the default dictionary, use the user-defined dictionary at this path. The dictionary format is one word per line. If part-of-speech tagging is used and the word's part of speech is known, write the word and its part of speech on the same line, separated by a tab character.

postag: whether to perform part-of-speech tagging.
- False: the default value. Word segmentation only, without part-of-speech tagging.
- True: part-of-speech tagging is performed together with word segmentation.

2) Word segmentation of files

pkuseg.test(readFile, outputFile, model_name="default", user_dict="default", postag=False, nthread=10)

- readFile: input file path.
- outputFile: output file path.
- model_name: model path. Same as in pkuseg.pkuseg.
- user_dict: user dictionary setting. Same as in pkuseg.pkuseg.
- postag: whether part-of-speech tagging is enabled. Same as in pkuseg.pkuseg.
- nthread: number of processes used during the test.

3) Model training

pkuseg.train(trainFile, testFile, savedir, train_iter=20, init_model=None)

- trainFile: training file path.
- testFile: test file path.
- savedir: directory in which the trained model is saved.
- train_iter: number of training rounds.
- init_model: model used for initialization. The default, None, means the default initialization is used. Users can give the path of the model to initialize from, such as init_model='./models/'.

4.10 Multi-process word segmentation

When the code examples above are run from a file and use multi-process functionality, be sure to protect the top-level statements with if __name__ == '__main__'. See the project's notes on multi-process word segmentation for details.
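The guard matters because, on platforms where Python starts worker processes by re-importing the main script, unguarded top-level statements would run again in every worker. Below is a minimal sketch of a guarded script. It uses pkuseg.test with the nthread parameter as described in this article; the wrapper segment_file, the lazy import, and the check that the input file exists are additions made for illustration.

```python
import os


def segment_file(src, dst, workers=10):
    """Segment the file src into dst using several processes."""
    # Imported here so that merely loading this module has no side effects.
    import pkuseg

    pkuseg.test(src, dst, nthread=workers)


if __name__ == '__main__':
    # Only the main process reaches this point; workers skip it on re-import.
    if os.path.exists('input.txt'):
        segment_file('input.txt', 'output.txt')
    else:
        print('input.txt not found, nothing to segment')
```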

5. Pre-trained models

Users who installed pkuseg with pip only need to set the model_name field to the desired domain when using domain-specific segmentation. The corresponding domain model is then downloaded automatically.

Users who downloaded pkuseg from GitHub need to download the corresponding pre-trained model themselves and set the model_name field to the path of that model. The pre-trained models can be downloaded from the release section of the repository. The models are:

news: a model trained on MSRA (news corpus).

web: a model trained on Weibo (microblog, web text corpus).

medicine: a model trained on medical-domain data.

tourism: a model trained on tourism-domain data.

mixed: a general model trained on a mixed dataset. This model comes with the pip package.

Reference:

https://blog.csdn.net/TFATS/article/details/108851344