testing environment

Configuration directly affects the execution speed. First, go up to the tester configuration.

- cpu i7 5500U

- Memory 8G ddr3 1600

- php 7.1

- mysql 5.5.40

- CodeIgniter 3.1.2

What are the factors that affect writing efficiency?

-

database engine

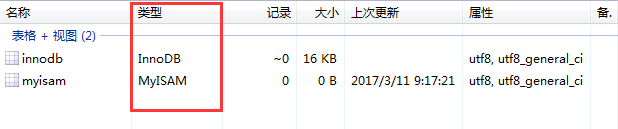

MyISAM, InnoDB, two common database engines in development, and other database engines I haven't used in development, so I won't test them here.

Look at the library table structure first.

There are two tables under the test library: the two engines mentioned above:

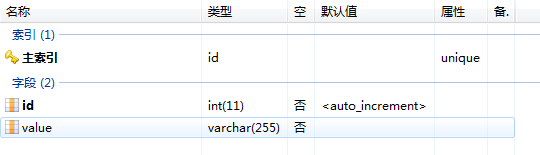

Each table is structured as follows (a self-increasing id, a varchar type to insert fields):

Insert 20w data into two tables to see the efficiency by default:

The PHP code is as follows:

/** * * Test insertion efficiency * * @return void * */ public function insertTest(){ set_time_limit(0); //Prevent over 300 s 500 errors $t1 = microtime(true); //Random insertion of num bar for ($i=1; $i<=200000; $i++){ $result = $this->db->insert('myisam', ['value' => uniqid().$i]); } //Program run time $t2 = microtime(true); echo 'Time consuming:'.round($t2-$t1,3).'second<br>'; echo 'Memory consumption:'.round(memory_get_usage()/1048576,2)." M<br/>"; }

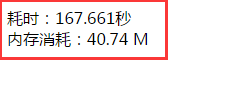

Implementation results:

Myisam is about three minutes away from the 20w data.

Look at the default execution time of InnoDb:

It took 6 minutes and 49 seconds to insert 1w pieces of data. There was no way to wait. According to this amount of data, it was estimated that 6 minutes and 49* 20 =???

Later, due to the increase of data volume, it will also affect the insertion performance, so the default state of InnoDb to insert 20w single field data takes more than two hours, which is unbearable.

-

Business logic

Obviously, the above business logic is problematic. Each data insertion once increases the cost of mysql, and every data insertion needs to be reconnected. It must be a waste of resources to connect mysql. So CI provides insert_batch(), which writes data in batches. Thinkphp 3.2 is also supported by addAll(). There should be other frameworks!

The principle is simple: splice two-dimensional arrays into sql

A single Sql will be as follows:

$sql = "INSERT INTO TEST (value) VALUES ('helloworld1')";

$sql = "INSERT INTO TEST (value) VALUES ('helloworld2')";

The stitching is as follows:

$sql = "INSERT INTO TEST (value) VALUES ('helloworld1'), ('helloworld2')";

It's obvious that batch insertion is much faster.

Or 20w data, how fast is batch query under myisam? (The existing data will affect the insertion efficiency, and the myisam table has been cleared)

Or code it

/** * * Test batch insertion efficiency * * @return void * @author lidalin.se@gmail.com * */ public function insertTest(){ set_time_limit(0); //Prevent over 300 s 500 errors $t1 = microtime(true); //Random insertion of num bar for ($i=1; $i<=200000; $i++){ $data[$i] = ['value' => uniqid().$i]; } //Program run time $t2 = microtime(true); echo 'Cycle time:'.round($t2-$t1,3).'second<br>'; $this->db->insert_batch('myisam', $data); //Batch insertion $t3 = microtime(true); echo 'Insertion time-consuming:'.round($t3-$t2,3).'second<br>'; echo 'Memory consumption:'.round(memory_get_usage()/1048576,2)." M<br/>"; }

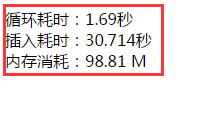

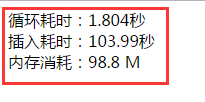

Implementation results:

Five times faster than the previous 167 seconds of single insertion. Memory consumption increased by about 1.5 times, memory exchange time is desirable~~~

What speed will InnoDB have?

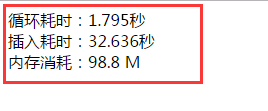

Implementation results:

At last, the execution was completed, and it was very fast. Compared with the previous two hours, the efficiency also increased by N times.

-

Modify configuration parameters to improve performance:

InnoDB engine is really so slow? So low?

The obvious answer is: NO

One reason InnoDB writes so slowly is that:

innodb_flush_log_at_trx_commit

Parametric configuration issues

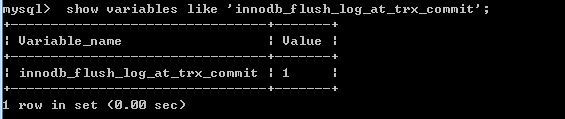

The default values are as follows:

Refer to the mysql documentation for values:

https://dev.mysql.com/doc/refman/5.7/en/innodb-parameters.html

When innodb_flush_log_at_trx_commit=0, log buffer will write to log file once every second, and the flush (refresh) to disk operation of log file is carried out simultaneously. At this time, transaction commit will not actively trigger the operation of writing to disk.

When innodb_flush_log_at_trx_commit=1 (default), MySQL writes log buffer data to log f ile and flush log f ile to disk each time a transaction is committed.

When innodb_flush_log_at_trx_commit=2, MySQL writes log buffer data to log file each time a transaction is committed, but does not actively trigger flush (refresh to disk) operations to occur simultaneously. However, MySQL performs flush (refresh to disk) operations once a second.

Set the value to 2 and try again:

3 times faster, almost the same as MyISAM.

Later on, InnoDB writes slowly, which may be a configuration problem.

-

Can it be optimized?

What else can be optimized?

Since we use insert_batch of the framework, take a look at the CI source code:

/** * The "set_insert_batch" function. Allows key/value pairs to be set for batch inserts * * @param mixed * @param string * @param bool * @return CI_DB_query_builder */ public function set_insert_batch($key, $value = '', $escape = NULL) { $key = $this->_object_to_array_batch($key); if ( ! is_array($key)) { $key = array($key => $value); } is_bool($escape) OR $escape = $this->_protect_identifiers; $keys = array_keys($this->_object_to_array(current($key))); sort($keys); foreach ($key as $row) { $row = $this->_object_to_array($row); if (count(array_diff($keys, array_keys($row))) > 0 OR count(array_diff(array_keys($row), $keys)) > 0) { // batch function above returns an error on an empty array $this->qb_set[] = array(); return; } ksort($row); // puts $row in the same order as our keys if ($escape !== FALSE) { $clean = array(); foreach ($row as $value) { $clean[] = $this->escape($value); } $row = $clean; } $this->qb_set[] = '('.implode(',', $row).')'; } foreach ($keys as $k) { $this->qb_keys[] = $this->protect_identifiers($k, FALSE, $escape); } return $this; }

The data we pass in will be recycled and judged. So the suggestion statement splices itself

The code was modified as follows:

/** * * Test insertion efficiency * * @return void * @author lidalin.se@gmail.com * */ public function insertTest(){ set_time_limit(0); //Prevent over 300 s 500 errors $t1 = microtime(true); $sql = "insert into innodb (value) VALUES"; //Random insertion of num bar for ($i=1; $i<=200000; $i++){ $val = uniqid().$i; $sql .= "('{$val}'),"; } $sql = substr($sql,0,-1); //Program run time $t2 = microtime(true); echo 'Cycle time:'.round($t2-$t1,3).'second<br>'; $this->db->query($sql); //Batch insertion $t3 = microtime(true); echo 'Insertion time-consuming:'.round($t3-$t2,3).'second<br>'; echo 'Memory consumption:'.round(memory_get_usage()/1048576,2)." M<br/>"; }

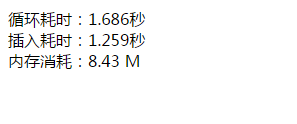

Implementation results:

20W data InnoDB loops for 1.6 seconds and inserts for 1.2 seconds. Is the speed cool?

Splicing statements may report errors

Set it up.

max_allowed_packet = 500M

Allow mysql to accept packet sizes.

Welcome to reprint:

For reprint, please note: https://my.oschina.net/famoustone/blog/856736