After selecting the architecture and setting the super parameters, we enter the training stage. Our goal is to find the parameter value that minimizes the loss function. After training, we need to use these parameters to make future predictions. Sometimes we need to extract parameters to reuse them in other environments and save the model to disk so that it can be executed in other software

This section describes:

1. Access parameters for tuning, diagnostics, and visualization

2. Parameter initialization

3. Sharing parameters between different model components

#We first focus on multi-layer perceptron with single hidden layer

example:

import torch from torch import nn net = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1)) X = torch.rand(size=(2, 4))

Parameter access

You can access any layer of the model through an index. This is like the model is a list. Check the parameters of the second fully connected layer

print(net[2].state_dict())

Target parameters

The bias is extracted from the second neural network layer. After extraction, a parameter class instance is returned, and the value of the parameter is further accessed.

# print(type(net[2].bias)) # print(net[2].bias) # print(net[2].bias.data)

Parameters are composite objects that contain values, gradients, and additional information. This is why we need to explicitly request values. In addition to values, we can also access the gradient of each parameter. Since we have not called the back propagation of this network, the gradient of the parameters is in the initial state

net[2].weight.grad == None

Access all parameters at once

Access the parameters of the first full connection layer

print(*[(name, param.shape) for name, param in net[0].named_parameters()])

Access parameters for all layers

print(*[(name, param.shape) for name, param in net.named_parameters()])

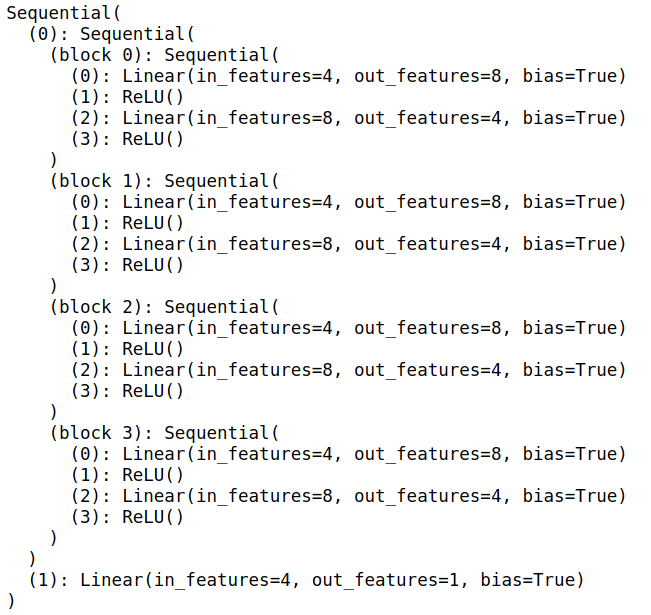

Collect parameters from nested blocks

First define a function that generates blocks, and then combine these blocks into larger blocks

def block1():

return nn.Sequential(nn.Linear(4,8),nn.ReLU(),nn.Linear(8,4),nn.ReLU())

def block2():

net = nn.Sequential()

for i in range(4):

net.add_module(f'block {i}',block1())

return net

regnet = nn.Sequential(block2(),nn.Linear(4,1))

X = torch.rand(size=(2,4))

regnet(X)View the organization of the network

print(rgnet)

You can access all layers of the network like an access list

rgnet[0][1][0].bias.data

Parameter initialization

PyTorch's nn.init module provides a variety of preset initialization methods

Built in initialization

Call the built-in initializer, initialize all weight parameters to Gaussian random variables with standard deviation of 0.01, and set the offset parameters to 0

def init_normal(m):

if type(m) == nn.Linear:

nn.init.normal_(m.weight, mean=0, std=0.01)

nn.init.zeros_(m.bias)

net.apply(init_normal)

net[0].weight.data[0], net[0].bias.data[0]All parameters are initialized to the given constant

def init_constant(m):

if type(m) == nn.Linear:

nn.init.constant_(m.weight, 1)

nn.init.zeros_(m.bias)

net.apply(init_constant)

net[0].weight.data[0], net[0].bias.data[0]Apply different initialization methods to some blocks

def xavier(m):

if type(m) == nn.Linear:

nn.init.xavier_uniform_(m.weight)

def init_42(m):

if type(m) == nn.Linear:

nn.init.constant_(m.weight, 42)

net[0].apply(xavier)

net[2].apply(init_42)

print(net[0].weight.data[0])

print(net[2].weight.data)Custom initialization

def my_init(m):

if type(m) == nn.Linear:

print("Init", *[(name, param.shape)

for name, param in m.named_parameters()][0])

nn.init.uniform_(m.weight, -10, 10)

m.weight.data *= m.weight.data.abs() >= 5

net.apply(my_init)

net[0].weight[:2]Parameter binding

# We need to give the shared layer a name so that its parameters can be referenced.

shared = nn.Linear(8, 8)

net = nn.Sequential(nn.Linear(4, 8), nn.ReLU(),

shared, nn.ReLU(),

shared, nn.ReLU(),

nn.Linear(8, 1))

net(X)

# Check whether the parameters are the same

print(net[2].weight.data[0] == net[4].weight.data[0])

net[2].weight.data[0, 0] = 100

# Make sure they are actually the same object, not just the same value.

print(net[2].weight.data[0] == net[4].weight.data[0])Summary

- There are several ways to access, initialize, and bind model parameters.

- We can use custom initialization methods.

Source: hands on learning and deep learning