[PaddleNLP] Qianyan Dataset: Sentiment Analysis - SKEP

This project uses the pre-trained model SKEP to complete the Qianyan Dataset: Sentiment Analysis competition.

It covers three subtasks: sentence-level sentiment classification, aspect-level sentiment classification, and opinion target extraction.

Likes, forks, and follows are welcome!

Major update!!!

2021/6/24 V1.5 NEW 👉 Ensemble of ERNIE-Gram and SKEP prediction results (opinion target extraction task) 👈 with remarkable results!!!

For the ERNIE-Gram opinion extraction tutorial, please refer to Jiang Liu's project: [PaddleNLP] Qianyan Dataset: Sentiment Analysis

Don't forget to give his project a star and a fork too!!!

Preface

Hello everyone!

This competition is the final project of the NLP check-in camp. The code below implements the sentiment analysis tasks using what was taught in the course.

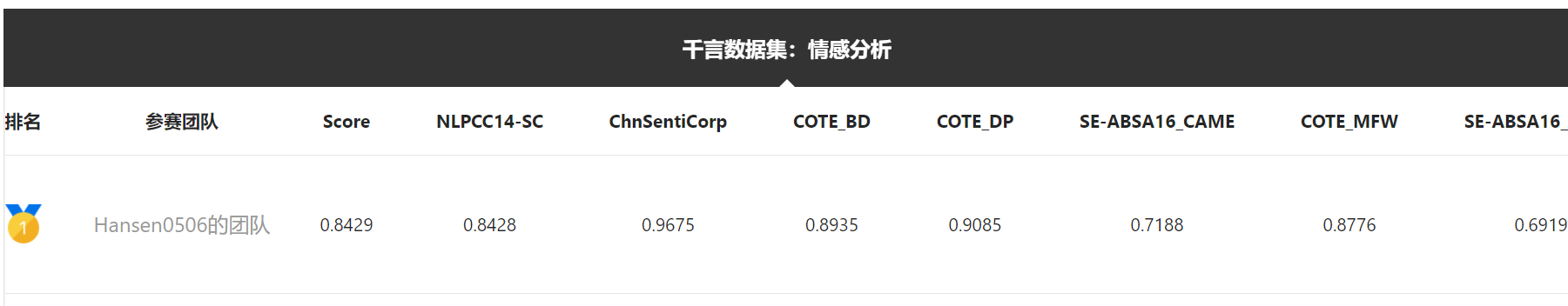

Previous results:

I am just getting started and have no machine learning background, so there is still plenty of room for tuning and data processing.

We strongly recommend referring to the following source code:

- The SKEP example (very important!!!)

- load_dataset

- Feel free to give PaddleNLP a star via the link above!

Something useful I found but have not used yet: VisualDL 2.2, which supports hyperparameter visualization.

Dataset preparation

Put all the dataset zip archives in the work folder.

# Unzip the datasets into the data folder. This must be re-run every time the project environment is started; restarting the kernel does not require it.
!unzip -q work/ChnSentiCorp.zip -d data
!unzip -q work/NLPCC14-SC.zip -d data
!unzip -q work/SE-ABSA16_CAME.zip -d data
!unzip -q work/SE-ABSA16_PHNS.zip -d data
!unzip -q work/COTE-BD.zip -d data
!unzip -q work/COTE-DP.zip -d data
!unzip -q work/COTE-MFW.zip -d data

# Update paddlenlp
!pip install --upgrade paddlenlp -i https://pypi.org/simple

1, Sentence-level sentiment analysis

Sentence-level sentiment analysis classifies the sentiment polarity of a given text. It is often used for movie review analysis, public opinion analysis on online forums, and similar scenarios.

Data loading: sentence-level datasets

This includes:

- load_ds: loads the train and dev sets; a dev (validation) set can optionally be split off the training set

- load_test: loads the test set

import os
import random

from paddlenlp.datasets import MapDataset


# for train and dev sets
def load_ds(datafiles, split_train=False, dev_size=0):
    '''
    input:
        datafiles -- str or list[str] -- the path of train or dev sets
        split_train -- Boolean -- split from train or not
        dev_size -- int -- split how much data from train
    output:
        MapDataset
    '''
    datas = []

    def read(ds_file):
        with open(ds_file, 'r', encoding='utf-8') as fp:
            next(fp)  # Skip header
            for line in fp:
                data = line.rstrip('\r\n').split('\t')
                if len(data) == 2:    # label, text
                    yield ({'text': data[1], 'label': int(data[0])})
                elif len(data) == 3:  # qid, label, text
                    yield ({'text': data[2], 'label': int(data[1])})

    def write_tsv(tsv, datas):
        with open(tsv, mode='w', encoding='UTF-8') as f:
            for line in datas:
                f.write(line)

    # Cut a part from the train to dev
    def split_train4dev(train_ds, dev_size):
        with open(train_ds, 'r', encoding='UTF-8') as f:
            for line in f:
                datas.append(line)
        datas_tmp = datas[1:]  # title line should not shuffle
        random.shuffle(datas_tmp)
        tem_dir = os.path.join(os.path.dirname(train_ds), 'tem')
        os.makedirs(tem_dir, exist_ok=True)
        # remember the title line
        write_tsv(os.path.join(tem_dir, 'train.tsv'), datas[0:1] + datas_tmp[:-dev_size])
        write_tsv(os.path.join(tem_dir, 'dev.tsv'), datas[0:1] + datas_tmp[-dev_size:])

    if split_train:
        if not isinstance(datafiles, str):
            print("If you want to split the train, make sure that 'datafiles' is a train set path str.")
            return None
        if dev_size == 0:
            print("Please set size of dev set, as dev_size=...")
            return None
        split_train4dev(datafiles, dev_size)
        tem_dir = os.path.join(os.path.dirname(datafiles), 'tem')
        datafiles = [os.path.join(tem_dir, 'train.tsv'), os.path.join(tem_dir, 'dev.tsv')]

    if isinstance(datafiles, str):
        return MapDataset(list(read(datafiles)))
    elif isinstance(datafiles, (list, tuple)):
        return [MapDataset(list(read(datafile))) for datafile in datafiles]


def load_test(datafile):
    '''
    input:
        datafile -- str -- the path of test set
    output:
        MapDataset
    '''
    def read(test_file):
        with open(test_file, 'r', encoding='UTF-8') as f:
            for i, line in enumerate(f):
                if i == 0:
                    continue  # skip header
                data = line.rstrip('\r\n').split('\t')
                yield {'text': data[1], 'label': '', 'qid': data[0]}

    return MapDataset(list(read(datafile)))
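To check the split logic of load_ds without touching the file system, here is a minimal in-memory sketch of the same idea: keep the header line, shuffle the remaining rows, and cut the last dev_size rows off as the dev set. split_train_dev is a hypothetical helper, not part of PaddleNLP; unlike load_ds it takes a seed, so the split is reproducible across runs.

```python
import random


def split_train_dev(lines, dev_size, seed=None):
    """Split TSV lines (header first) into (train_lines, dev_lines).

    The header line is copied to the top of both outputs and never shuffled.
    """
    header, rows = lines[:1], list(lines[1:])
    if not 0 < dev_size < len(rows):
        raise ValueError("dev_size must be between 1 and the number of data rows - 1")
    random.Random(seed).shuffle(rows)  # local RNG: reproducible, leaves the global seed alone
    return header + rows[:-dev_size], header + rows[-dev_size:]


lines = ["label\ttext_a\n"] + ["%d\tsample %d\n" % (i % 2, i) for i in range(10)]
train, dev = split_train_dev(lines, dev_size=3, seed=0)
print(len(train), len(dev))  # 8 4
```

Since load_ds calls random.shuffle without a seed, its train/dev split changes on every run; fixing a seed is one way to make dev scores comparable between runs.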

1. ChnSentiCorp

Run only one dataset at a time!!!

32 256 2e-5 6

# Using the official load_dataset directly is more convenient.
# Here, however, part of train is split off as dev for some datasets, so to keep a unified format we use our own load functions.
# from paddlenlp.datasets import load_dataset
# train_ds, dev_ds, test_ds = load_dataset("chnsenticorp", splits=["train", "dev", "test"])

train_ds, dev_ds = load_ds(datafiles=['./data/ChnSentiCorp/train.tsv', './data/ChnSentiCorp/dev.tsv'])
print(train_ds[0])
print(dev_ds[0])
print(type(train_ds[0]))

test_ds = load_test(datafile='./data/ChnSentiCorp/test.tsv')
print(test_ds[0])

2. NLPCC14-SC

train_ds, dev_ds = load_ds(datafiles='./data/NLPCC14-SC/train.tsv', split_train=True, dev_size=1000)
print(train_ds[0])
print(dev_ds[0])

test_ds = load_test(datafile='./data/NLPCC14-SC/test.tsv')
print(test_ds[0])

SKEP model construction

PaddleNLP already implements the SKEP pre-trained model, so SKEP can be loaded with a single line of code.

The sentence-level sentiment analysis model is the usual way of fine-tuning SKEP for text classification: SKEP first extracts the semantic features of the sentence, and those features are then classified.
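A framework-free sketch of the second step, classifying the extracted features, is shown below: a linear layer turns a pooled sentence vector into one logit per class, and softmax turns the logits into probabilities. The feature vector, the weights, and the classify helper are made-up values for illustration; in SkepForSequenceClassification the corresponding layer is learned during fine-tuning.

```python
import math


def classify(cls_vector, weights, bias):
    """Score a pooled sentence vector with a linear layer; return (probs, label).

    weights has one row per class (0 = negative, 1 = positive here).
    """
    logits = [sum(w * x for w, x in zip(row, cls_vector)) + b
              for row, b in zip(weights, bias)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    label = max(range(len(probs)), key=probs.__getitem__)
    return probs, label


# Toy 4-dim "sentence feature" and a 2-class head
feature = [0.2, -0.1, 0.7, 0.4]
W = [[0.5, 0.1, -0.6, 0.0],
     [-0.3, 0.2, 0.8, 0.5]]
b = [0.0, 0.1]
probs, label = classify(feature, W, b)
print(label)  # 1
```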

from paddlenlp.transformers import SkepForSequenceClassification, SkepTokenizer

# num_classes: two categories, 0 and 1, indicate negative and positive
model = SkepForSequenceClassification.from_pretrained(pretrained_model_name_or_path="skep_ernie_1.0_large_ch", num_classes=2)
tokenizer = SkepTokenizer.from_pretrained(pretrained_model_name_or_path="skep_ernie_1.0_large_ch")

SkepForSequenceClassification can be used for both sentence-level and aspect-level sentiment analysis. It obtains a representation of the input text through the pre-trained SKEP model and then classifies that representation.

- pretrained_model_name_or_path: the model name. "skep_ernie_1.0_large_ch" and "skep_ernie_2.0_large_en" are supported.

  - "skep_ernie_1.0_large_ch": the Chinese pre-trained SKEP model, obtained by continued pre-training of ernie_1.0_large_ch on massive Chinese data;

  - "skep_ernie_2.0_large_en": the English pre-trained SKEP model, obtained by continued pre-training of ernie_2.0_large_en on massive English data;

- num_classes: the number of classes in the dataset.

For details about SKEP model implementation, refer to: https://github.com/PaddlePaddle/PaddleNLP/tree/develop/paddlenlp/transformers/skep

import os
from functools import partial

import numpy as np
import paddle
import paddle.nn.functional as F
from paddlenlp.data import Stack, Tuple, Pad

from utils import create_dataloader


def convert_example(example,
                    tokenizer,
                    max_seq_length=512,
                    is_test=False):
    """
    Builds model inputs from a sequence or a pair of sequence for sequence classification tasks
    by concatenating and adding special tokens. And creates a mask from the two sequences passed
    to be used in a sequence-pair classification task.

    A skep_ernie_1.0_large_ch/skep_ernie_2.0_large_en sequence has the following format:
    ::
        - single sequence: ``[CLS] X [SEP]``
        - pair of sequences: ``[CLS] A [SEP] B [SEP]``

    A skep_ernie_1.0_large_ch/skep_ernie_2.0_large_en sequence pair mask has the following format:
    ::
        0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1
        | first sequence    | second sequence |

    If `token_ids_1` is `None`, this method only returns the first portion of the mask (0s).

    Args:
        example(obj:`dict`): One input example, containing text and label if it has a label.
        tokenizer(obj:`PretrainedTokenizer`): This tokenizer inherits from :class:`~paddlenlp.transformers.PretrainedTokenizer`
            which contains most of the methods. Users should refer to the superclass for more information regarding methods.
        max_seq_length(obj:`int`): The maximum total input sequence length after tokenization.
            Sequences longer than this will be truncated, sequences shorter will be padded.
        is_test(obj:`bool`, defaults to `False`): Whether the example contains label or not.

    Returns:
        input_ids(obj:`list[int]`): The list of token ids.
        token_type_ids(obj: `list[int]`): List of sequence pair mask.
        label(obj:`numpy.array`, optional): The input label if not is_test; otherwise the qid.
    """
    encoded_inputs = tokenizer(
        text=example["text"], max_seq_len=max_seq_length)
    input_ids = encoded_inputs["input_ids"]
    token_type_ids = encoded_inputs["token_type_ids"]

    if not is_test:
        label = np.array([example["label"]], dtype="int64")
        return input_ids, token_type_ids, label
    else:
        qid = np.array([example["qid"]], dtype="int64")
        return input_ids, token_type_ids, qid


batch_size = 32
max_seq_length = 256

trans_func = partial(
    convert_example,
    tokenizer=tokenizer,
    max_seq_length=max_seq_length)

batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.pad_token_id),       # input_ids
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # token_type_ids
    Stack()                                            # labels
): [data for data in fn(samples)]

train_data_loader = create_dataloader(
    train_ds,
    mode='train',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)

dev_data_loader = create_dataloader(
    dev_ds,
    mode='dev',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)

Model training and evaluation (save the results for each dataset separately!!!)

After defining the loss function, optimizer, and evaluation metric, training can begin.

Recommended hyperparameter settings:

- max_seq_length=256

- batch_size=32

- learning_rate=2e-5

- epochs=10

In practice, batch_size and max_seq_length can be adjusted according to the available GPU memory.
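For intuition about what CrossEntropyLoss and Accuracy measure on each batch of the training loop, here is a plain-Python sketch of both quantities computed from raw logits. cross_entropy_and_accuracy is a hypothetical helper for illustration; the Paddle classes are the ones actually used in training.

```python
import math


def cross_entropy_and_accuracy(batch_logits, labels):
    """Mean softmax cross-entropy and accuracy for one batch.

    batch_logits: list of per-example logit lists; labels: list of int class ids.
    """
    total_loss, correct = 0.0, 0
    for logits, y in zip(batch_logits, labels):
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total_loss += log_sum_exp - logits[y]  # equals -log(softmax(logits)[y])
        pred = max(range(len(logits)), key=logits.__getitem__)
        correct += int(pred == y)
    n = len(labels)
    return total_loss / n, correct / n


loss, acc = cross_entropy_and_accuracy([[2.0, 0.0], [0.0, 1.0], [1.0, 3.0]], [0, 0, 1])
print(round(loss, 4), round(acc, 4))  # 0.5224 0.6667
```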

import time

from utils import evaluate

epochs = 10
ckpt_dir = "skep_sentence"
num_training_steps = len(train_data_loader) * epochs

# optimizer = paddle.optimizer.AdamW(
#     learning_rate=2e-5,
#     parameters=model.parameters())

# Apply weight decay to all parameters except biases and normalization-layer parameters
decay_params = [
    p.name for n, p in model.named_parameters()
    if not any(nd in n for nd in ["bias", "norm"])
]
optimizer = paddle.optimizer.AdamW(
    learning_rate=2e-6,  # the value used in this run; the recommended setting above is 2e-5
    parameters=model.parameters(),
    weight_decay=0.01,  # test weight_decay
    apply_decay_param_fun=lambda x: x in decay_params  # test weight_decay
)

criterion = paddle.nn.loss.CrossEntropyLoss()
metric = paddle.metric.Accuracy()

global_step = 0
tic_train = time.time()
for epoch in range(1, epochs + 1):
    for step, batch in enumerate(train_data_loader, start=1):
        input_ids, token_type_ids, labels = batch
        logits = model(input_ids, token_type_ids)
        loss = criterion(logits, labels)
        probs = F.softmax(logits, axis=1)
        correct = metric.compute(probs, labels)
        metric.update(correct)
        acc = metric.accumulate()

        global_step += 1
        if global_step % 10 == 0:
            print(
                "global step %d, epoch: %d, batch: %d, loss: %.5f, accu: %.5f, speed: %.2f step/s"
                % (global_step, epoch, step, loss, acc,
                   10 / (time.time() - tic_train)))
            tic_train = time.time()
        loss.backward()
        optimizer.step()
        optimizer.clear_grad()
        if global_step % 100 == 0:
            save_dir = os.path.join(ckpt_dir, "model_%d" % global_step)
            if not os.path.exists(save_dir):
                os.makedirs(save_dir)
            evaluate(model, criterion, metric, dev_data_loader)
            model.save_pretrained(save_dir)
            tokenizer.save_pretrained(save_dir)

Prediction results (remember to change the model path)

The trained model can then predict the sentiment of the test texts.
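The post-processing step of prediction (argmax over the model outputs, map ids through label_map, write an index/prediction TSV) can be sketched without Paddle as follows. write_predictions is a hypothetical helper; taking the argmax over raw logits gives the same result as taking it over softmax probabilities, because softmax preserves the ordering of the logits.

```python
import io


def write_predictions(qids, batch_logits, label_map, out):
    """Write a header, then one 'qid<TAB>label' row per example (argmax of the logits)."""
    out.write("index\tprediction\n")
    for qid, logits in zip(qids, batch_logits):
        pred = max(range(len(logits)), key=logits.__getitem__)
        out.write("%s\t%s\n" % (qid, label_map[pred]))


buf = io.StringIO()
write_predictions([0, 1, 2], [[0.3, 1.2], [2.0, -1.0], [0.1, 0.4]], {0: '0', 1: '1'}, buf)
print(buf.getvalue())
```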

import numpy as np
import paddle

batch_size = 24

trans_func = partial(
    convert_example,
    tokenizer=tokenizer,
    max_seq_length=max_seq_length,
    is_test=True)

batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.pad_token_id),       # input
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # segment
    Stack()                                            # qid
): [data for data in fn(samples)]

test_data_loader = create_dataloader(
    test_ds,
    mode='test',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)

# Change the loaded parameter path according to your actual run
params_path = 'skep_sentence2t_weight_2e-6/model_1800_83700/model_state.pdparams'
if params_path and os.path.isfile(params_path):
    state_dict = paddle.load(params_path)
    model.set_dict(state_dict)
    print("Loaded parameters from %s" % params_path)
else:
    print("Warning: %s not found, predicting with the current weights" % params_path)

label_map = {0: '0', 1: '1'}
results = []
model.eval()
with paddle.no_grad():  # inference only: no gradients needed
    for batch in test_data_loader:
        input_ids, token_type_ids, qids = batch
        logits = model(input_ids, token_type_ids)
        probs = F.softmax(logits, axis=-1)
        idx = paddle.argmax(probs, axis=1).numpy()
        idx = idx.tolist()
        labels = [label_map[i] for i in idx]
        qids = qids.numpy().tolist()
        results.extend(zip(qids, labels))

res_dir = "./results/2_weight_2e-6/1800_83700"
if not os.path.exists(res_dir):
    os.makedirs(res_dir)

with open(os.path.join(res_dir, "NLPCC14-SC.tsv"), 'w', encoding="utf8") as f:
    f.write("index\tprediction\n")
    for qid, label in results:
        f.write(str(qid[0]) + "\t" + label + "\n")

2, Aspect-level sentiment analysis

In e-commerce product analysis, besides analyzing the sentiment polarity toward a product as a whole, the analysis can be refined to treat specific "aspects" of the product as the subjects of sentiment analysis (aspect level), for example:

- The flavor of these potato chips is a bit salty and too spicy, but the texture is very crisp.

  This expresses a negative opinion about the flavor (salty, too spicy) but a positive opinion about the texture (very crisp).

- I like Hawaii very much, but the seafood here is too expensive.

  This expresses a positive opinion about Hawaii (likes it) but a negative opinion about Hawaiian seafood (too expensive).

Data loading: aspect-level datasets

Similar to the sentence-level loaders, except that each example is a sentence pair (text, text_pair).

import os
import random

from paddlenlp.datasets import MapDataset


def load_ds(datafiles, split_train=False, dev_size=0):
    datas = []

    def read(ds_file):
        with open(ds_file, 'r', encoding='utf-8') as fp:
            # if fp.readline().split('\t')[0] == 'label':
            next(fp)  # Skip header
            for line in fp:
                data = line.rstrip('\r\n').split('\t')  # columns: label, text_a, text_b
                yield ({'text': data[1], 'text_pair': data[2], 'label': int(data[0])})

    def write_tsv(tsv, datas):
        with open(tsv, mode='w', encoding='UTF-8') as f:
            for line in datas:
                f.write(line)

    def split_train4dev(train_ds, dev_size):
        with open(train_ds, 'r', encoding='UTF-8') as f:
            for line in f:
                datas.append(line)
        datas_tmp = datas[1:]  # title line should not shuffle
        random.shuffle(datas_tmp)
        tem_dir = os.path.join(os.path.dirname(train_ds), 'tem')
        os.makedirs(tem_dir, exist_ok=True)
        # remember the title line
        write_tsv(os.path.join(tem_dir, 'train.tsv'), datas[0:1] + datas_tmp[:-dev_size])
        write_tsv(os.path.join(tem_dir, 'dev.tsv'), datas[0:1] + datas_tmp[-dev_size:])

    if split_train:
        if not isinstance(datafiles, str):
            print("If you want to split the train, make sure that 'datafiles' is a train set path str.")
            return None
        if dev_size == 0:
            print("Please set size of dev set, as dev_size=...")
            return None
        split_train4dev(datafiles, dev_size)
        tem_dir = os.path.join(os.path.dirname(datafiles), 'tem')
        datafiles = [os.path.join(tem_dir, 'train.tsv'), os.path.join(tem_dir, 'dev.tsv')]

    if isinstance(datafiles, str):
        return MapDataset(list(read(datafiles)))
    elif isinstance(datafiles, (list, tuple)):
        return [MapDataset(list(read(datafile))) for datafile in datafiles]


def load_test(datafile):
    def read(test_file):
        with open(test_file, 'r', encoding='UTF-8') as f:
            for i, line in enumerate(f):
                if i == 0:
                    continue  # skip header
                data = line.rstrip('\r\n').split('\t')  # columns: qid, text_a, text_b
                yield {'text': data[1], 'text_pair': data[2]}

    return MapDataset(list(read(datafile)))

3. SE-ABSA16_PHNS

Run only one dataset at a time!!!

train_ds, dev_ds = load_ds(datafiles='./data/SE-ABSA16_PHNS/train.tsv', split_train=True, dev_size=100)
print(train_ds[0])
print(dev_ds[0])

test_ds = load_test(datafile='./data/SE-ABSA16_PHNS/test.tsv')
print(test_ds[0])

4. SE-ABSA16_CAME

train_ds, dev_ds = load_ds(datafiles='./data/SE-ABSA16_CAME/train.tsv', split_train=True, dev_size=100)
print(train_ds[0])
print(dev_ds[0])

test_ds = load_test(datafile='./data/SE-ABSA16_CAME/test.tsv')
print(test_ds[0])

SKEP model construction

The aspect-level sentiment analysis model also uses SkepForSequenceClassification, but its input is a sentence pair rather than a single sentence: one sentence describes the aspect being evaluated, and the other is the comment on that aspect, as shown in the figure below.
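To make the sentence-pair input concrete, the sketch below builds the [CLS] A [SEP] B [SEP] layout described in the convert_example docstring, with token_type_ids of 0 for the first segment (including [CLS] and its [SEP]) and 1 for the second. It works on already-split word lists, and the longest-first truncation rule is an assumption for illustration; SkepTokenizer does the real work on subword ids.

```python
def build_pair_inputs(tokens_a, tokens_b, max_len):
    """Return (tokens, token_type_ids) for [CLS] A [SEP] B [SEP], truncated to max_len."""
    if max_len < 3:
        raise ValueError("max_len must leave room for [CLS] and two [SEP]")
    tokens_a, tokens_b = list(tokens_a), list(tokens_b)
    # Reserve 3 positions for the special tokens; trim the longer side first
    while len(tokens_a) + len(tokens_b) > max_len - 3:
        if len(tokens_a) >= len(tokens_b):
            tokens_a.pop()
        else:
            tokens_b.pop()
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    token_type_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, token_type_ids


aspect = ["screen"]
review = ["the", "screen", "is", "very", "clear"]
tokens, type_ids = build_pair_inputs(aspect, review, max_len=16)
print(tokens)
print(type_ids)  # [0, 0, 0, 1, 1, 1, 1, 1, 1]
```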

from paddlenlp.transformers import SkepForSequenceClassification, SkepTokenizer

# num_classes: two categories, 0 and 1, indicating negative and positive
model = SkepForSequenceClassification.from_pretrained('skep_ernie_1.0_large_ch', num_classes=2)
tokenizer = SkepTokenizer.from_pretrained('skep_ernie_1.0_large_ch')

from functools import partial
import os
import time

import numpy as np
import paddle
import paddle.nn.functional as F
from paddlenlp.data import Stack, Tuple, Pad

from utils import create_dataloader


def convert_example(example,
                    tokenizer,
                    max_seq_length=512,
                    is_test=False,
                    dataset_name="chnsenticorp"):
    """
    Builds model inputs from a sequence or a pair of sequence for sequence classification tasks
    by concatenating and adding special tokens. And creates a mask from the two sequences passed
    to be used in a sequence-pair classification task.

    A skep_ernie_1.0_large_ch/skep_ernie_2.0_large_en sequence has the following format:
    ::
        - single sequence: ``[CLS] X [SEP]``
        - pair of sequences: ``[CLS] A [SEP] B [SEP]``

    A skep_ernie_1.0_large_ch/skep_ernie_2.0_large_en sequence pair mask has the following format:
    ::
        0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1
        | first sequence    | second sequence |

    If `token_ids_1` is `None`, this method only returns the first portion of the mask (0s).

    Note: the skep_roberta_large_ch model does not need token type ids.

    Args:
        example(obj:`dict`): One input example, containing text, text_pair and label if it has a label.
        tokenizer(obj:`PretrainedTokenizer`): This tokenizer inherits from :class:`~paddlenlp.transformers.PretrainedTokenizer`
            which contains most of the methods. Users should refer to the superclass for more information regarding methods.
        max_seq_length(obj:`int`): The maximum total input sequence length after tokenization.
            Sequences longer than this will be truncated, sequences shorter will be padded.
        is_test(obj:`bool`, defaults to `False`): Whether the example contains label or not.
        dataset_name(obj:`str`, defaults to "chnsenticorp"): The dataset name, "chnsenticorp" or "sst-2" (unused here).

    Returns:
        input_ids(obj:`list[int]`): The list of token ids.
        token_type_ids(obj: `list[int]`): List of sequence pair mask.
        label(obj:`numpy.array`, data type of int64, optional): The input label if not is_test.
    """
    encoded_inputs = tokenizer(
        text=example["text"],
        text_pair=example["text_pair"],
        max_seq_len=max_seq_length)
    input_ids = encoded_inputs["input_ids"]
    token_type_ids = encoded_inputs["token_type_ids"]

    if not is_test:
        label = np.array([example["label"]], dtype="int64")
        return input_ids, token_type_ids, label
    else:
        return input_ids, token_type_ids


max_seq_length = 512
batch_size = 16

trans_func = partial(
    convert_example,
    tokenizer=tokenizer,
    max_seq_length=max_seq_length)

batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.pad_token_id),       # input_ids
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # token_type_ids
    Stack(dtype="int64")                               # labels
): [data for data in fn(samples)]

train_data_loader = create_dataloader(
    train_ds,
    mode='train',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)

dev_data_loader = create_dataloader(
    dev_ds,
    mode='dev',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)

Model training and evaluation

After defining the loss function, optimizer, and evaluation metric, training can begin.

from utils import evaluate

epochs = 12
num_training_steps = len(train_data_loader) * epochs

# optimizer = paddle.optimizer.AdamW(
#     learning_rate=2e-6,
#     parameters=model.parameters())

# Apply weight decay to all parameters except biases and normalization-layer parameters
decay_params = [
    p.name for n, p in model.named_parameters()
    if not any(nd in n for nd in ["bias", "norm"])
]
optimizer = paddle.optimizer.AdamW(
    learning_rate=1e-6,
    parameters=model.parameters(),
    weight_decay=0.01,  # test weight_decay
    apply_decay_param_fun=lambda x: x in decay_params  # test weight_decay
)

criterion = paddle.nn.loss.CrossEntropyLoss()
metric = paddle.metric.Accuracy()

ckpt_dir = "skep_aspect"
global_step = 0
tic_train = time.time()
for epoch in range(1, epochs + 1):
    for step, batch in enumerate(train_data_loader, start=1):
        input_ids, token_type_ids, labels = batch
        logits = model(input_ids, token_type_ids)
        loss = criterion(logits, labels)
        probs = F.softmax(logits, axis=1)
        correct = metric.compute(probs, labels)
        metric.update(correct)
        acc = metric.accumulate()

        global_step += 1
        if global_step % 10 == 0:
            print(
                "global step %d, epoch: %d, batch: %d, loss: %.5f, accu: %.5f, speed: %.2f step/s"
                % (global_step, epoch, step, loss, acc,
                   10 / (time.time() - tic_train)))
            tic_train = time.time()
        loss.backward()
        optimizer.step()
        optimizer.clear_grad()
        if global_step % 50 == 0:
            save_dir = os.path.join(ckpt_dir, "model_%d" % global_step)
            if not os.path.exists(save_dir):
                os.makedirs(save_dir)
            # evaluate() is reused from the sentence-level section; it expects batches of
            # (input_ids, token_type_ids, labels), which dev_data_loader also yields here
            evaluate(model, criterion, metric, dev_data_loader)
            model.save_pretrained(save_dir)
            tokenizer.save_pretrained(save_dir)

Prediction results

The trained model can then predict the sentiment toward each aspect in the test set.

@paddle.no_grad()
def predict(model, data_loader, label_map):
    """
    Given a prediction dataset, it gives the prediction results.

    Args:
        model(obj:`paddle.nn.Layer`): A model to classify texts.
        data_loader(obj:`paddle.io.DataLoader`): The dataset loader which generates batches.
        label_map(obj:`dict`): The label id (key) to label str (value) map.
    """
    model.eval()
    results = []
    for batch in data_loader:
        input_ids, token_type_ids = batch
        logits = model(input_ids, token_type_ids)
        probs = F.softmax(logits, axis=1)
        idx = paddle.argmax(probs, axis=1).numpy()
        idx = idx.tolist()
        labels = [label_map[i] for i in idx]
        results.extend(labels)
    return results


label_map = {0: '0', 1: '1'}

trans_func = partial(
    convert_example,
    tokenizer=tokenizer,
    max_seq_length=max_seq_length,
    is_test=True)

batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.pad_token_id),       # input_ids
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # token_type_ids
): [data for data in fn(samples)]

batch_size = 16

test_data_loader = create_dataloader(
    test_ds,
    mode='test',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)

# Change the loaded parameter path according to your actual run
params_path = 'skep_aspect4_weight_1e-6/model_500_73000/model_state.pdparams'
if params_path and os.path.isfile(params_path):
    state_dict = paddle.load(params_path)
    model.set_dict(state_dict)
    print("Loaded parameters from %s" % params_path)
else:
    print("Warning: %s not found, predicting with the current weights" % params_path)

results = predict(model, test_data_loader, label_map)

res_dir = "./results/4_weight_1e-6/500_73000"
if not os.path.exists(res_dir):
    os.makedirs(res_dir)

with open(os.path.join(res_dir, "SE-ABSA16_CAME.tsv"), 'w', encoding="utf8") as f:
    f.write("index\tprediction\n")
    for idx, label in enumerate(results):
        f.write(str(idx) + "\t" + label + "\n")

3, Opinion target extraction

Data loading: opinion target datasets

Object = []

😦

import os
import random

from paddlenlp.datasets import MapDataset


def load_ds(datafiles, split_train=False, dev_size=0):
    datas = []

    def read(ds_file):
        with open(ds_file, 'r', encoding='utf-8') as fp:
            # if fp.readline().split('\t')[0] == 'label':
            next(fp)  # Skip header
            for line in fp:
                if not line.strip():
                    continue  # skip empty lines
                line_stripped = line.strip().split('\t')
                # Some lines in the dataset are malformed (entity and text not on the same line)
                try:
                    entity, text = line_stripped[0], line_stripped[1]
                    start_idx = text.index(entity)
                except (IndexError, ValueError):
                    # drop the dirty data
                    continue
                # Character-level BIO labels: B = 0, I = 1, O = 2
                labels = [2] * len(text)
                labels[start_idx] = 0
                for idx in range(start_idx + 1, start_idx + len(entity)):
                    labels[idx] = 1
                yield {
                    "tokens": list(text),
                    "labels": labels,
                    "entity": entity
                }

    def write_tsv(tsv, datas):
        with open(tsv, mode='w', encoding='UTF-8') as f:
            for line in datas:
                f.write(line)

    def split_train4dev(train_ds, dev_size):
        with open(train_ds, 'r', encoding='UTF-8') as f:
            for line in f:
                datas.append(line)
        datas_tmp = datas[1:]  # title line should not shuffle
        random.shuffle(datas_tmp)
        tem_dir = os.path.join(os.path.dirname(train_ds), 'tem')
        os.makedirs(tem_dir, exist_ok=True)
        # remember the title line
        write_tsv(os.path.join(tem_dir, 'train.tsv'), datas[0:1] + datas_tmp[:-dev_size])
        write_tsv(os.path.join(tem_dir, 'dev.tsv'), datas[0:1] + datas_tmp[-dev_size:])

    if split_train:
        if not isinstance(datafiles, str):
            print("If you want to split the train, make sure that 'datafiles' is a train set path str.")
            return None
        if dev_size == 0:
            print("Please set size of dev set, as dev_size=...")
            return None
        split_train4dev(datafiles, dev_size)
        tem_dir = os.path.join(os.path.dirname(datafiles), 'tem')
        datafiles = [os.path.join(tem_dir, 'train.tsv'), os.path.join(tem_dir, 'dev.tsv')]

    if isinstance(datafiles, str):
        return MapDataset(list(read(datafiles)))
    elif isinstance(datafiles, (list, tuple)):
        return [MapDataset(list(read(datafile))) for datafile in datafiles]


def load_test(datafile):
    def read(test_file):
        with open(test_file, 'r', encoding='UTF-8') as fp:
            # if fp.readline().split('\t')[0] == 'label':
            next(fp)  # Skip header
            for line in fp:
                if not line.strip():
                    continue  # skip empty lines
                line_stripped = line.strip().split('\t')
                # columns: qid, text; malformed lines are dropped
                try:
                    text = line_stripped[1]
                except IndexError:
                    # drop the dirty data
                    continue
                yield {"tokens": list(text)}

    return MapDataset(list(read(datafile)))
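read() in load_ds turns each (entity, text) pair into character-level labels with B = 0, I = 1, O = 2. The sketch below reproduces that encoding and adds the inverse step one would need to turn predicted label ids back into target strings. decode_bio is a hypothetical helper written for illustration; the project's own decoding code is not part of this section.

```python
def encode_bio(text, entity, b=0, i=1, o=2):
    """Label each character: first char of entity -> B, rest of entity -> I, others -> O."""
    start = text.index(entity)  # raises ValueError if the entity is absent
    labels = [o] * len(text)
    labels[start] = b
    for k in range(start + 1, start + len(entity)):
        labels[k] = i
    return labels


def decode_bio(text, labels, b=0, i=1):
    """Recover entity strings from a B/I/O id sequence over the characters of text."""
    spans, current = [], []
    for ch, lab in zip(text, labels):
        if lab == b:
            if current:
                spans.append("".join(current))
            current = [ch]
        elif lab == i and current:
            current.append(ch)
        else:  # O, or an I without a preceding B
            if current:
                spans.append("".join(current))
            current = []
    if current:
        spans.append("".join(current))
    return spans


text = "这家酒店的早餐很好吃"  # "The breakfast at this hotel is delicious"
labels = encode_bio(text, "早餐")
print(labels)                     # [2, 2, 2, 2, 2, 0, 1, 2, 2, 2]
print(decode_bio(text, labels))   # ['早餐']
```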

5. COTE-DP

train_ds = load_ds(datafiles='./data/COTE-DP/train.tsv')
print(train_ds[0])

test_ds = load_test(datafile='./data/COTE-DP/test.tsv')
print(test_ds[0])

6. COTE-BD

train_ds = load_ds(datafiles='./data/COTE-BD/train.tsv')
print(train_ds[0])

test_ds = load_test(datafile='./data/COTE-BD/test.tsv')
print(test_ds[0])

7. COTE-MFW

train_ds = load_ds(datafiles='./data/COTE-MFW/train.tsv')
print(train_ds[1])

test_ds = load_test(datafile='./data/COTE-MFW/test.tsv')
print(test_ds[0])

SKEP model construction

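This model is slightly different from the previous ones: it is a token classification model (SkepCrfForTokenClassification) that adds a CRF layer on top of SKEP and labels each character with B/I/O.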
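At inference time, a linear-chain CRF picks the highest-scoring tag sequence as a whole instead of the best tag per position, which is done with the Viterbi algorithm. The sketch below is a generic Viterbi decoder, not PaddleNLP's implementation: the emission scores and the hand-set transition penalty for O→I are made up (a real CRF learns its transitions, and may also use start/stop scores, omitted here).

```python
def viterbi_decode(emissions, transitions):
    """Return the highest-scoring tag sequence for one sentence.

    emissions[t][j]: score of tag j at position t (from the encoder).
    transitions[i][j]: score of moving from tag i to tag j.
    """
    num_tags = len(emissions[0])
    score = list(emissions[0])  # best score of any path ending in each tag so far
    backpointers = []
    for t in range(1, len(emissions)):
        new_score, pointers = [], []
        for j in range(num_tags):
            best_prev = max(range(num_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_prev] + transitions[best_prev][j] + emissions[t][j])
            pointers.append(best_prev)
        score = new_score
        backpointers.append(pointers)
    # Follow the back-pointers from the best final tag
    path = [max(range(num_tags), key=score.__getitem__)]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path


B, I, O = 0, 1, 2
emissions = [[0.0, 0.0, 2.0], [1.0, 1.5, 0.0], [0.0, 0.0, 2.0]]
transitions = [[0.0] * 3 for _ in range(3)]
transitions[O][I] = -10.0  # an I directly after O is an invalid BIO sequence
greedy = [max(range(3), key=row.__getitem__) for row in emissions]
print(greedy)                                   # [2, 1, 2] (O I O, invalid)
print(viterbi_decode(emissions, transitions))   # [2, 0, 2] (O B O)
```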

from paddlenlp.transformers import SkepCrfForTokenClassification, SkepTokenizer, SkepModel

# num_classes: 3 classes, B/I/O
skep = SkepModel.from_pretrained('skep_ernie_1.0_large_ch')
model = SkepCrfForTokenClassification(skep, num_classes=3)
tokenizer = SkepTokenizer.from_pretrained(pretrained_model_name_or_path="skep_ernie_1.0_large_ch")

# # The COTE_DP dataset labels with "BIO" schema.
# label_map = {label: idx for idx, label in enumerate(train_ds.label_list)}
# # `no_entity_label` represents that the token isn't an entity.
# # print(type(no_entity_label_idx))
no_entity_label_idx = 2  # label id of "O" (B = 0, I = 1, O = 2)

from functools import partial
import os
import time

import numpy as np
import paddle
import paddle.nn.functional as F
from paddlenlp.data import Stack, Tuple, Pad

from utils import create_dataloader


def convert_example_to_feature(example,
                               tokenizer,
                               max_seq_len=512,
                               no_entity_label=2,
                               is_test=False):
    """
    Builds model inputs from a sequence or a pair of sequence for sequence classification tasks
    by concatenating and adding special tokens. And creates a mask from the two sequences passed
    to be used in a sequence-pair classification task.

    A skep_ernie_1.0_large_ch/skep_ernie_2.0_large_en sequence has the following format:
    ::
        - single sequence: ``[CLS] X [SEP]``
        - pair of sequences: ``[CLS] A [SEP] B [SEP]``

    A skep_ernie_1.0_large_ch/skep_ernie_2.0_large_en sequence pair mask has the following format:
    ::
        0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1
        | first sequence    | second sequence |

    If `token_ids_1` is `None`, this method only returns the first portion of the mask (0s).

    Args:
        example(obj:`dict`): One input example, containing tokens and labels if it has labels.
        tokenizer(obj:`PretrainedTokenizer`): This tokenizer inherits from :class:`~paddlenlp.transformers.PretrainedTokenizer`
            which contains most of the methods. Users should refer to the superclass for more information regarding methods.
        max_seq_len(obj:`int`): The maximum total input sequence length after tokenization.
            Sequences longer than this will be truncated, sequences shorter will be padded.
        no_entity_label(obj:`int`, defaults to 2): The label id that represents a non-entity token ("O").
        is_test(obj:`bool`, defaults to `False`): Whether the example contains label or not.

    Returns:
        input_ids(obj:`list[int]`): The list of token ids.
        token_type_ids(obj: `list[int]`): List of sequence pair mask.
        label(obj:`numpy.array`, optional): The input label if not test data.
    """
    tokens = example['tokens']
    tokenized_input = tokenizer(
        tokens,
        return_length=True,
        is_split_into_words=True,
        max_seq_len=max_seq_len)

    input_ids = tokenized_input['input_ids']
    token_type_ids = tokenized_input['token_type_ids']
    seq_len = tokenized_input['seq_len']

    if is_test:
        return input_ids, token_type_ids, seq_len
    else:
        # Test examples have no 'labels' key, so labels are only read here
        labels = example['labels']
        # Leave room for [CLS] and [SEP], which both get the "O" label
        labels = labels[:(max_seq_len - 2)]
        encoded_label = np.array([no_entity_label] + labels + [no_entity_label], dtype="int64")
        return input_ids, token_type_ids, seq_len, encoded_label


max_seq_length = 256
batch_size = 40

trans_func = partial(
    convert_example_to_feature,
    tokenizer=tokenizer,
    max_seq_len=max_seq_length,
    no_entity_label=no_entity_label_idx,
    is_test=False)

batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.vocab[tokenizer.pad_token]),  # input ids
    Pad(axis=0, pad_val=tokenizer.vocab[tokenizer.pad_token]),  # token type ids
    Stack(dtype='int64'),                                       # sequence lens
    Pad(axis=0, pad_val=no_entity_label_idx)                    # labels
): [data for data in fn(samples)]

train_data_loader = create_dataloader(
    train_ds,
    mode='train',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)

# dev_data_loader = create_dataloader(
#     dev_ds,
#     mode='dev',
#     batch_size=batch_size,
#     batchify_fn=batchify_fn,
#     trans_fn=trans_func)

Model training

```python
import os
import time

import paddle
from paddlenlp.metrics import ChunkEvaluator
from utils import evaluate

epochs = 10
num_training_steps = len(train_data_loader) * epochs

# # test weight_decay
# decay_params = [
#     p.name for n, p in model.named_parameters()
#     if not any(nd in n for nd in ["bias", "norm"])
# ]
# optimizer = paddle.optimizer.AdamW(
#     learning_rate=1e-5,
#     parameters=model.parameters(),
#     weight_decay=0.01,  # test weight_decay
#     apply_decay_param_fun=lambda x: x in decay_params  # test weight_decay
# )

optimizer = paddle.optimizer.AdamW(
    learning_rate=1e-6,
    parameters=model.parameters(),
)

# metric = ChunkEvaluator(label_list=train_ds.label_list, suffix=True)
metric = ChunkEvaluator(label_list=['B', 'I', 'O'], suffix=True)

ckpt_dir = "skep_opinion7_1e-6"
global_step = 0
tic_train = time.time()
for epoch in range(1, epochs + 1):
    for step, batch in enumerate(train_data_loader, start=1):
        input_ids, token_type_ids, seq_lens, labels = batch
        # With labels passed in, the model returns the training loss
        loss = model(
            input_ids, token_type_ids, seq_lens=seq_lens, labels=labels)
        avg_loss = paddle.mean(loss)
        global_step += 1
        if global_step % 10 == 0:
            print(
                "global step %d, epoch: %d, batch: %d, loss: %.5f, speed: %.2f step/s"
                % (global_step, epoch, step, avg_loss,
                   10 / (time.time() - tic_train)))
            tic_train = time.time()
        # Back-propagate the batch-averaged loss (the same value that is logged)
        avg_loss.backward()
        optimizer.step()
        optimizer.clear_grad()
        # Save a checkpoint every 200 steps
        if global_step % 200 == 0:
            save_dir = os.path.join(ckpt_dir, "model_%d" % global_step)
            if not os.path.exists(save_dir):
                os.makedirs(save_dir)
            file_name = os.path.join(save_dir, "model_state.pdparam")
            # Need better way to get inner model of DataParallel
            paddle.save(model.state_dict(), file_name)
```
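The commented-out optimizer above applies weight decay only to parameters whose structured name contains neither `bias` nor `norm`. As documented for Paddle's `AdamW`, `apply_decay_param_fun` receives each parameter's internal `name` and returns whether to decay it. The sketch below reproduces just that filtering logic, so no model is needed. The `(structured name, param.name)` pairs are made up and will differ from the real SKEP parameter names:

```python
# Hypothetical (structured name, internal param.name) pairs standing in for
# model.named_parameters(); real SKEP names will differ.
named_params = [
    ("skep.encoder.layers.0.linear1.weight", "linear_0.w_0"),
    ("skep.encoder.layers.0.linear1.bias", "linear_0.b_0"),
    ("skep.encoder.layers.0.norm1.weight", "layer_norm_0.w_0"),
    ("classifier.weight", "linear_1.w_0"),
]

# Same filter as the commented-out code: skip biases and normalization layers
decay_params = [
    pname for n, pname in named_params
    if not any(nd in n for nd in ["bias", "norm"])
]


# AdamW calls this with each parameter's internal name
def apply_decay_param_fun(name):
    return name in decay_params


print(decay_params)  # ['linear_0.w_0', 'linear_1.w_0']
```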

Prediction results

```python
import re

import paddle


def convert_example_to_feature(example, tokenizer, max_seq_length=512, is_test=False):
    """
    Builds model inputs for prediction (no labels) by tokenizing the
    pre-split tokens and adding special tokens.

    A skep_ernie_1.0_large_ch/skep_ernie_2.0_large_en sequence has the following format:
        - single sequence: ``[CLS] X [SEP]``
        - pair of sequences: ``[CLS] A [SEP] B [SEP]``

    Args:
        example(obj:`dict`): One test example; its "tokens" field holds the list of input tokens.
        tokenizer(obj:`PretrainedTokenizer`): This tokenizer inherits from :class:`~paddlenlp.transformers.PretrainedTokenizer`
            which contains most of the methods. Users should refer to the superclass for more information regarding methods.
        max_seq_length(obj:`int`): The maximum total input sequence length after tokenization.
            Longer sequences are truncated; padding is done later by `batchify_fn`.
        is_test(obj:`bool`): Not used by this function.

    Returns:
        input_ids(obj:`list[int]`): The list of token ids.
        token_type_ids(obj:`list[int]`): List of sequence pair mask.
        seq_len(obj:`int`): The input length, including [CLS] and [SEP].
    """
    tokens = example["tokens"]
    encoded_inputs = tokenizer(
        tokens,
        return_length=True,
        is_split_into_words=True,
        max_seq_len=max_seq_length)
    input_ids = encoded_inputs["input_ids"]
    token_type_ids = encoded_inputs["token_type_ids"]
    seq_len = encoded_inputs["seq_len"]
    return input_ids, token_type_ids, seq_len


def parse_predict_result(predictions, seq_lens, label_map):
    """
    Parses the prediction results to the label tag.
    """
    pred_tag = []
    for idx, pred in enumerate(predictions):
        seq_len = seq_lens[idx]
        # drop the "[CLS]" and "[SEP]" token
        tag = [label_map[i] for i in pred[1:seq_len - 1]]
        pred_tag.append(tag)
    return pred_tag


@paddle.no_grad()
def predict(model, data_loader, label_map):
    """
    Given a prediction dataset, it gives the prediction results.

    Args:
        model(obj:`paddle.nn.Layer`): The sequence labeling model.
        data_loader(obj:`paddle.io.DataLoader`): The dataset loader which generates batches.
        label_map(obj:`dict`): The label id (key) to label str (value) map.
    """
    model.eval()
    results = []
    for input_ids, token_type_ids, seq_lens in data_loader:
        preds = model(input_ids, token_type_ids, seq_lens=seq_lens)
        tags = parse_predict_result(preds.numpy(), seq_lens.numpy(), label_map)
        results.extend(tags)
    return results
```
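`parse_predict_result` keeps only the tags between `[CLS]` and `[SEP]`. For a sample of length `seq_len` (special tokens included), positions 1 through `seq_len - 2` are the real tokens, and anything past `seq_len` is batch padding. Here is a small worked example on made-up predictions. The function body is copied from above so the example runs without Paddle:

```python
import numpy as np

label_map = {0: "B", 1: "I", 2: "O"}


def parse_predict_result(predictions, seq_lens, label_map):
    """Same logic as above: map ids to tags, dropping [CLS], [SEP] and padding."""
    pred_tag = []
    for idx, pred in enumerate(predictions):
        seq_len = seq_lens[idx]
        tag = [label_map[i] for i in pred[1:seq_len - 1]]
        pred_tag.append(tag)
    return pred_tag


# Made-up padded batch: sample 0 has 4 real tokens, sample 1 has 2 (+ padding)
preds = np.array([[2, 0, 1, 2, 2, 2],
                  [2, 0, 2, 2, 2, 2]])
seq_lens = np.array([6, 4])
print(parse_predict_result(preds, seq_lens, label_map))
# [['B', 'I', 'O', 'O'], ['B', 'O']]
```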

```python
import os
from functools import partial

import paddle
from paddlenlp.data import Pad, Stack, Tuple

# The COTE datasets are labeled with the "BIO" schema.
label_map = {0: "B", 1: "I", 2: "O"}
# `no_entity_label` represents that the token isn't an entity.
no_entity_label_idx = 2

batch_size = 96
trans_func = partial(
    convert_example_to_feature,
    tokenizer=tokenizer,
    max_seq_length=max_seq_length)
batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.vocab[tokenizer.pad_token]),  # input ids
    Pad(axis=0, pad_val=tokenizer.vocab[tokenizer.pad_token]),  # token type ids
    Stack(dtype='int64'),  # sequence lens
): [data for data in fn(samples)]
test_data_loader = create_dataloader(
    test_ds,
    mode='test',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)

# Change the parameter path to match your own run, and check that loading actually succeeds!
params_path = 'skep_opinion7_1e-6/model_10200_10/model_state.pdparam'
if params_path and os.path.isfile(params_path):
    state_dict = paddle.load(params_path)
    model.set_dict(state_dict)
    print("Loaded parameters from %s" % params_path)
else:
    # A bare `exit` does nothing; stop here instead of predicting with untrained weights
    raise FileNotFoundError("MODEL LOAD FAILURE: %s" % params_path)
```

```python
import os
import re

results = predict(model, test_data_loader, label_map)

# Punctuation to strip from the extracted entities
punc = '~`!#$%^&*()_+-=|\';":/.,?><~·!@#¥%......&*()-+-=": \';,. ,?><{}'
# re.escape keeps "-" literal; unescaped, "+-=" forms a character range that also matches the digits 0-9
punc_pattern = re.compile(r"[%s]+" % re.escape(punc))

res_dir = "./results/7_1e-6/10200_10"
if not os.path.exists(res_dir):
    os.makedirs(res_dir)

with open(os.path.join(res_dir, "COTE_MFW.tsv"), 'w', encoding="utf8") as f:
    f.write("index\tprediction\n")
    for idx, example in enumerate(test_ds.data):
        tag = []
        pred = results[idx]
        # Find every "B...I...I" run: a B followed by any number of I tags
        for idx1, letter in enumerate(pred):
            if letter == 'B':
                i = idx1
                while i + 1 < len(pred) and pred[i + 1] == 'I':
                    i += 1
                tag.append(punc_pattern.sub("", "".join(example['tokens'][idx1:i + 1])))
        if not tag:
            # If no entity is found, write a placeholder; otherwise the competition's scoring fails on the empty line
            tag.append('nothing')
        f.write(str(idx) + "\t" + "\x01".join(tag) + "\n")
```
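The loop above turns each tag sequence into entity strings. Every `B` starts a span that extends over the following `I` tags. The span's tokens are then joined and punctuation is stripped. The self-contained sketch below packages that logic as a hypothetical `extract_entities` function, using a shortened punctuation set, so it can be checked on a toy example. It also shows why the punctuation string should go through `re.escape`: unescaped, `+-=` inside a character class is a range from `+` to `=`, and that range includes the digits.

```python
import re

# Shortened, illustrative punctuation set; the project's own `punc` string is longer
PUNC = "~!@#$%^&*()_+-=,.?:;'\""


def extract_entities(tokens, tags, punc=PUNC, empty="nothing"):
    """Hypothetical helper: collect every 'B I I ...' span from BIO tags."""
    # re.escape keeps "-" literal inside the character class
    pattern = re.compile(r"[%s]+" % re.escape(punc))
    entities = []
    for start, tag in enumerate(tags):
        if tag != "B":
            continue
        end = start
        # Extend the span over consecutive I tags without running past the end
        while end + 1 < len(tags) and tags[end + 1] == "I":
            end += 1
        entities.append(pattern.sub("", "".join(tokens[start:end + 1])))
    # Fall back to a placeholder when nothing was found, as the loop above does
    return entities or [empty]


tokens = ["the", "X", "1", "2", "!", "is", "fine"]
tags = ["O", "B", "I", "I", "I", "O", "B"]
print(extract_entities(tokens, tags))  # ['X12', 'fine']
print(extract_entities(["a"], ["O"]))  # ['nothing']

# Without re.escape, "+-=" is a range from "+" to "=" that also covers 0-9
print(re.sub(r"[%s]+" % "+-=", "", "X12!"))             # X!
print(re.sub(r"[%s]+" % re.escape("+-="), "", "X12!"))  # X12!
```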

Submit

Compress the prediction results into a zip file and submit it on the Thousand Words competition website.
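Below is a minimal sketch of the packaging step using Python's standard `zipfile` module. It assumes the `.tsv` prediction files sit flat in one results directory, such as `./results/7_1e-6/10200_10` above, and that the platform expects them at the root of the archive. Check the competition page for the exact file names it requires. `zip_results` is a hypothetical helper name.

```python
import os
import zipfile


def zip_results(res_dir, zip_path):
    """Hypothetical helper: put every .tsv file in res_dir at the root of a zip archive."""
    names = sorted(f for f in os.listdir(res_dir) if f.endswith(".tsv"))
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name in names:
            # arcname drops the directory prefix so the files land at the archive root
            zf.write(os.path.join(res_dir, name), arcname=name)
    return names
```

For example, `zip_results("./results/7_1e-6/10200_10", "submission.zip")` would pack `COTE_MFW.tsv` and any other `.tsv` files written to that directory.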

Summary

This project is the final assignment of the PaddleNLP punch-in camp. It has the following shortcomings:

- No cross-validation: part of the training set is simply held out as the validation set.

- The data were not analyzed or preprocessed.

- The hyperparameters were not tuned systematically (for lack of experience).

This is my first project built with Paddle, and also my first deep learning project.

I learned a lot through hands-on practice and realized there is still much more to learn. Please point out any shortcomings in the comments.

You are also welcome to like, fork and follow!

I have reached Silver level on AI Studio and lit up 4 badges. Follow me and I will follow you back~ https://aistudio.baidu.com/aistudio/personalcenter/thirdview/815060