Background

- Read the f**king source code! --By Lu Xun

- A picture is worth a thousand words. --By Gorky

Notes:

- Kernel version: 4.14

- ARM64 processor, Cortex-A53, dual core

- Tools used: Source Insight 3.5, Visio

1. Overview

In this article, let's look at the address space of user-space processes, focusing on the following:

- vma;

- malloc;

- mmap;

In a process address space, the familiar code segment, data segment, bss segment and so on are each a region of the address space. Linux calls such a region a Virtual Memory Area, VMA for short, and describes it with struct vm_area_struct.

Allocating or mapping memory reserves a virtual address region in the address space, and this operation is closely tied to VMAs, so this article analyzes VMA, malloc and mmap together.
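VMAs can be observed from user space. Each line of /proc/<pid>/maps describes one mapping, which is essentially one VMA. A line shows vm_start-vm_end, the permissions, the file offset, the device, the inode and the path. The sketch below simply counts those lines for the calling process. It assumes a Linux system with procfs mounted, and count_vmas is a hypothetical helper name.

```c
#include <assert.h>
#include <stdio.h>

/*
 * Hypothetical helper: count the mappings (VMAs) of the calling process by
 * counting the lines of /proc/self/maps. Returns -1 if the file cannot be opened.
 */
int count_vmas(void)
{
    FILE *fp = fopen("/proc/self/maps", "r");
    if (fp == NULL)
        return -1;

    int lines = 0;
    int c;
    while ((c = fgetc(fp)) != EOF) {
        if (c == '\n')
            lines++;            /* one line per mapping */
    }
    fclose(fp);
    return lines;
}
```

Printing the file instead of counting its lines (for example `cat /proc/self/maps`) shows the code, data, heap, stack and shared-library VMAs discussed below.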

Start exploring.

2. Data structures

Two structures are mainly involved: struct mm_struct and struct vm_area_struct.

- struct mm_struct

Describes everything related to a process address space. The process descriptor (struct task_struct) points to it through its mm field. Key fields are explained in the comments.

```c
struct mm_struct {
    struct vm_area_struct *mmap; /* list of VMAs */ // Head of the VMA linked list
    struct rb_root mm_rb; // Root of the red-black tree of VMAs
    u64 vmacache_seqnum; /* per-thread vmacache */
#ifdef CONFIG_MMU
    unsigned long (*get_unmapped_area) (struct file *filp,
                unsigned long addr, unsigned long len,
                unsigned long pgoff, unsigned long flags); // Finds a free linear address range in the address space
#endif
    unsigned long mmap_base; /* base of mmap area */
    unsigned long mmap_legacy_base; /* base of mmap area in bottom-up allocations */
#ifdef CONFIG_HAVE_ARCH_COMPAT_MMAP_BASES
    /* Base adresses for compatible mmap() */
    unsigned long mmap_compat_base;
    unsigned long mmap_compat_legacy_base;
#endif
    unsigned long task_size; /* size of task vm space */
    unsigned long highest_vm_end; /* highest vma end address */
    pgd_t * pgd; // Points to the page global directory (PGD)

    /**
     * @mm_users: The number of users including userspace.
     *
     * Use mmget()/mmget_not_zero()/mmput() to modify. When this drops
     * to 0 (i.e. when the task exits and there are no other temporary
     * reference holders), we also release a reference on @mm_count
     * (which may then free the &struct mm_struct if @mm_count also
     * drops to 0).
     */
    atomic_t mm_users; // Number of users of the address space

    /**
     * @mm_count: The number of references to &struct mm_struct
     * (@mm_users count as 1).
     *
     * Use mmgrab()/mmdrop() to modify. When this drops to 0, the
     * &struct mm_struct is freed.
     */
    atomic_t mm_count; // Number of references to the mm_struct itself

    atomic_long_t nr_ptes; /* PTE page table pages */ // Number of PTE page-table pages
#if CONFIG_PGTABLE_LEVELS > 2
    atomic_long_t nr_pmds; /* PMD page table pages */
#endif
    int map_count; /* number of VMAs */ // Number of VMAs

    spinlock_t page_table_lock; /* Protects page tables and some counters */
    struct rw_semaphore mmap_sem;

    struct list_head mmlist; /* List of maybe swapped mm's. These are globally strung
                              * together off init_mm.mmlist, and are protected
                              * by mmlist_lock
                              */

    unsigned long hiwater_rss; /* High-watermark of RSS usage */
    unsigned long hiwater_vm; /* High-water virtual memory usage */

    unsigned long total_vm; /* Total pages mapped */ // Number of pages mapped in the address space
    unsigned long locked_vm; /* Pages that have PG_mlocked set */ // Locked pages, cannot be swapped out
    unsigned long pinned_vm; /* Refcount permanently increased */
    unsigned long data_vm; /* VM_WRITE & ~VM_SHARED & ~VM_STACK */ // Pages in private writable (data) mappings
    unsigned long exec_vm; /* VM_EXEC & ~VM_WRITE & ~VM_STACK */ // Pages in executable mappings
    unsigned long stack_vm; /* VM_STACK */ // Pages in the user-mode stack
    unsigned long def_flags;
    unsigned long start_code, end_code, start_data, end_data; // Bounds of the code and data segments
    unsigned long start_brk, brk, start_stack; // Heap start, current heap end (brk), and start of the user-mode stack
    unsigned long arg_start, arg_end, env_start, env_end; // Bounds of the command-line arguments and environment variables

    unsigned long saved_auxv[AT_VECTOR_SIZE]; /* for /proc/PID/auxv */

    /*
     * Special counters, in some configurations protected by the
     * page_table_lock, in other configurations by being atomic.
     */
    struct mm_rss_stat rss_stat;

    struct linux_binfmt *binfmt;

    cpumask_var_t cpu_vm_mask_var;

    /* Architecture-specific MM context */
    mm_context_t context;

    unsigned long flags; /* Must use atomic bitops to access the bits */

    struct core_state *core_state; /* coredumping support */
#ifdef CONFIG_MEMBARRIER
    atomic_t membarrier_state;
#endif
#ifdef CONFIG_AIO
    spinlock_t ioctx_lock;
    struct kioctx_table __rcu *ioctx_table;
#endif
#ifdef CONFIG_MEMCG
    /*
     * "owner" points to a task that is regarded as the canonical
     * user/owner of this mm. All of the following must be true in
     * order for it to be changed:
     *
     * current == mm->owner
     * current->mm != mm
     * new_owner->mm == mm
     * new_owner->alloc_lock is held
     */
    struct task_struct __rcu *owner;
#endif
    struct user_namespace *user_ns;

    /* store ref to file /proc/<pid>/exe symlink points to */
    struct file __rcu *exe_file;
#ifdef CONFIG_MMU_NOTIFIER
    struct mmu_notifier_mm *mmu_notifier_mm;
#endif
#if defined(CONFIG_TRANSPARENT_HUGEPAGE) && !USE_SPLIT_PMD_PTLOCKS
    pgtable_t pmd_huge_pte; /* protected by page_table_lock */
#endif
#ifdef CONFIG_CPUMASK_OFFSTACK
    struct cpumask cpumask_allocation;
#endif
#ifdef CONFIG_NUMA_BALANCING
    /*
     * numa_next_scan is the next time that the PTEs will be marked
     * pte_numa. NUMA hinting faults will gather statistics and migrate
     * pages to new nodes if necessary.
     */
    unsigned long numa_next_scan;

    /* Restart point for scanning and setting pte_numa */
    unsigned long numa_scan_offset;

    /* numa_scan_seq prevents two threads setting pte_numa */
    int numa_scan_seq;
#endif
    /*
     * An operation with batched TLB flushing is going on. Anything that
     * can move process memory needs to flush the TLB when moving a
     * PROT_NONE or PROT_NUMA mapped page.
     */
    atomic_t tlb_flush_pending;
#ifdef CONFIG_ARCH_WANT_BATCHED_UNMAP_TLB_FLUSH
    /* See flush_tlb_batched_pending() */
    bool tlb_flush_batched;
#endif
    struct uprobes_state uprobes_state;
#ifdef CONFIG_HUGETLB_PAGE
    atomic_long_t hugetlb_usage;
#endif
    struct work_struct async_put_work;

#if IS_ENABLED(CONFIG_HMM)
    /* HMM needs to track a few things per mm */
    struct hmm *hmm;
#endif
} __randomize_layout;
```

struct vm_area_struct

It is used to describe a virtual area in the process address space. Each VMA corresponds to a struct VM area struct.

/*

* This struct defines a memory VMM memory area. There is one of these

* per VM-area/task. A VM area is any part of the process virtual memory

* space that has a special rule for the page-fault handlers (ie a shared

* library, the executable area etc).

*/

struct vm_area_struct {

/* The first cache line has the info for VMA tree walking. */

unsigned long vm_start; /* Our start address within vm_mm. */ //Initial address

unsigned long vm_end; /* The first byte after our end address

within vm_mm. */ //The end address is not included in the interval

/* linked list of VM areas per task, sorted by address */ //Linked list sorted by starting address

struct vm_area_struct *vm_next, *vm_prev;

struct rb_node vm_rb; //Red black tree node

/*

* Largest free memory gap in bytes to the left of this VMA.

* Either between this VMA and vma->vm_prev, or between one of the

* VMAs below us in the VMA rbtree and its ->vm_prev. This helps

* get_unmapped_area find a free area of the right size.

*/

unsigned long rb_subtree_gap;

/* Second cache line starts here. */

struct mm_struct *vm_mm; /* The address space we belong to. */

pgprot_t vm_page_prot; /* Access permissions of this VMA. */

unsigned long vm_flags; /* Flags, see mm.h. */

/*

* For areas with an address space and backing store,

* linkage into the address_space->i_mmap interval tree.

*/

struct {

struct rb_node rb;

unsigned long rb_subtree_last;

} shared;

/*

* A file's MAP_PRIVATE vma can be in both i_mmap tree and anon_vma

* list, after a COW of one of the file pages. A MAP_SHARED vma

* can only be in the i_mmap tree. An anonymous MAP_PRIVATE, stack

* or brk vma (with NULL file) can only be in an anon_vma list.

*/

struct list_head anon_vma_chain; /* Serialized by mmap_sem &

* page_table_lock */

struct anon_vma *anon_vma; /* Serialized by page_table_lock */

/* Function pointers to deal with this struct. */

const struct vm_operations_struct *vm_ops;

/* Information about our backing store: */

unsigned long vm_pgoff; /* Offset (within vm_file) in PAGE_SIZE

units */

struct file * vm_file; /* File we map to (can be NULL). */ //Point to an open instance of a file

void * vm_private_data; /* was vm_pte (shared mem) */

atomic_long_t swap_readahead_info;

#ifndef CONFIG_MMU

struct vm_region *vm_region; /* NOMMU mapping region */

#endif

#ifdef CONFIG_NUMA

struct mempolicy *vm_policy; /* NUMA policy for the VMA */

#endif

struct vm_userfaultfd_ctx vm_userfaultfd_ctx;

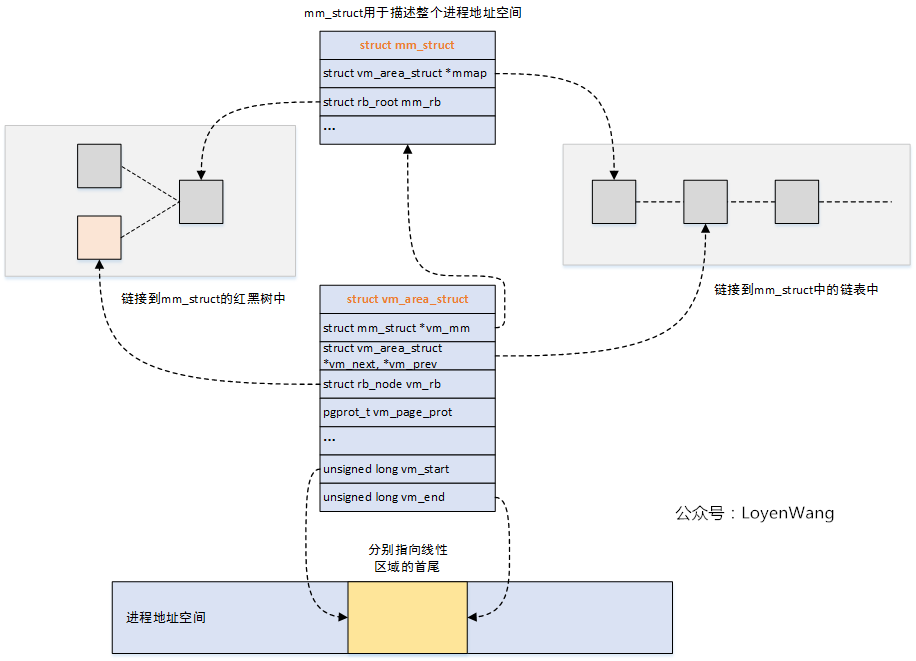

} __randomize_layout;Here comes the diagram:

Is it familiar? This is similar to the vmap mechanism in the kernel.

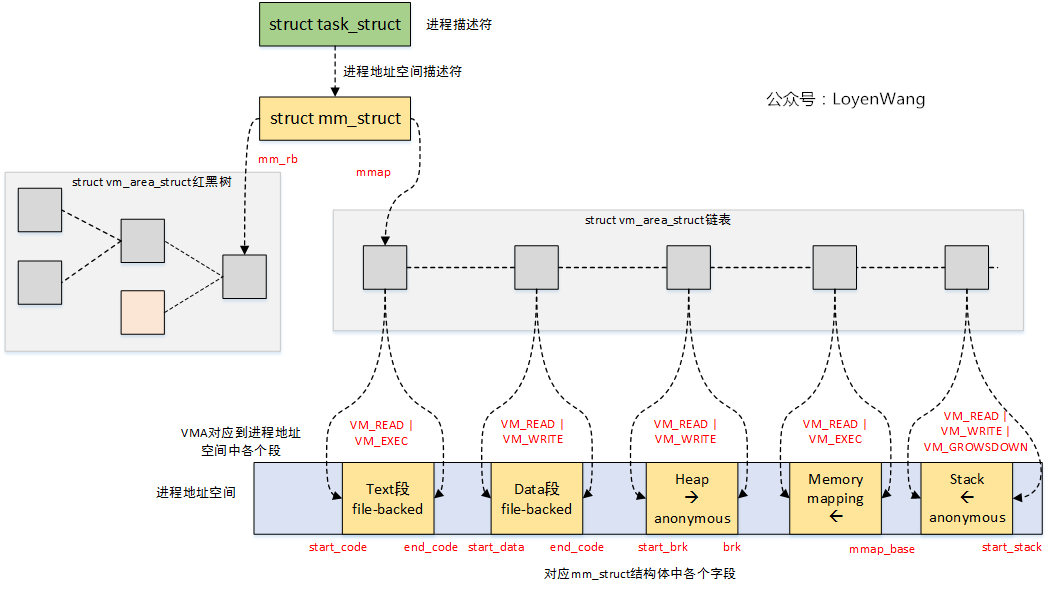

Take a macro look at the Vmas in the process address space:

The following interfaces operate on VMAs:

```c
/* VMA lookup */
/* Look up the first VMA which satisfies addr < vm_end, NULL if none. */
extern struct vm_area_struct * find_vma(struct mm_struct * mm, unsigned long addr); // Finds the first VMA with addr < vm_end
extern struct vm_area_struct * find_vma_prev(struct mm_struct * mm, unsigned long addr,
                                             struct vm_area_struct **pprev); // Like find_vma, but also returns the preceding VMA in the list through pprev
static inline struct vm_area_struct * find_vma_intersection(struct mm_struct * mm, unsigned long start_addr, unsigned long end_addr); // Finds a VMA that intersects [start_addr, end_addr)

/* VMA insertion */
extern int insert_vm_struct(struct mm_struct *, struct vm_area_struct *); // Inserts a VMA into the red-black tree and the linked list

/* VMA merging */
extern struct vm_area_struct *vma_merge(struct mm_struct *,
    struct vm_area_struct *prev, unsigned long addr, unsigned long end,
    unsigned long vm_flags, struct anon_vma *, struct file *, pgoff_t,
    struct mempolicy *, struct vm_userfaultfd_ctx); // Merges a VMA with adjacent VMAs

/* VMA splitting */
extern int split_vma(struct mm_struct *, struct vm_area_struct *,
    unsigned long addr, int new_below); // Splits a VMA into two at addr
```

Most of these operations come down to manipulating the red-black tree.
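The toy user-space model below makes the semantics of find_vma(), find_vma_intersection(), insert_vm_struct() and split_vma() concrete. It is a sketch under simplifying assumptions. All names (toy_vma, toy_mm, toy_*) are hypothetical. Only a sorted singly linked list is kept, whereas the kernel also maintains the red-black tree, caches lookups and handles locking, merging and page tables. The toy split always gives the new VMA the upper half, roughly like split_vma() with new_below == 0.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, heavily simplified stand-ins for vm_area_struct / mm_struct. */
struct toy_vma {
    unsigned long vm_start;   /* first address of the area */
    unsigned long vm_end;     /* first address after the area: [vm_start, vm_end) */
    struct toy_vma *vm_next;  /* next VMA, list sorted by address */
};

struct toy_mm {
    struct toy_vma *mmap;     /* head of the sorted VMA list */
    int map_count;            /* number of VMAs */
};

/* Insert vma into the sorted list; returns -1 if it overlaps an existing VMA. */
int toy_insert_vma(struct toy_mm *mm, struct toy_vma *vma)
{
    struct toy_vma **link = &mm->mmap;

    assert(vma->vm_start < vma->vm_end);
    while (*link != NULL && (*link)->vm_end <= vma->vm_start)
        link = &(*link)->vm_next;           /* skip VMAs entirely below vma */
    if (*link != NULL && (*link)->vm_start < vma->vm_end)
        return -1;                          /* overlap */
    vma->vm_next = *link;
    *link = vma;
    mm->map_count++;
    return 0;
}

/* First VMA with addr < vm_end, NULL if none (addr may lie in a gap below it). */
struct toy_vma *toy_find_vma(struct toy_mm *mm, unsigned long addr)
{
    for (struct toy_vma *vma = mm->mmap; vma != NULL; vma = vma->vm_next)
        if (addr < vma->vm_end)
            return vma;
    return NULL;
}

/* A VMA intersecting [start_addr, end_addr), NULL if none. */
struct toy_vma *toy_find_vma_intersection(struct toy_mm *mm,
                                          unsigned long start_addr,
                                          unsigned long end_addr)
{
    struct toy_vma *vma = toy_find_vma(mm, start_addr);

    if (vma != NULL && end_addr <= vma->vm_start)
        vma = NULL;                         /* first candidate starts after the range */
    return vma;
}

/* Split vma at addr: vma keeps [vm_start, addr), new_vma gets [addr, vm_end). */
int toy_split_vma(struct toy_mm *mm, struct toy_vma *vma,
                  struct toy_vma *new_vma, unsigned long addr)
{
    if (addr <= vma->vm_start || addr >= vma->vm_end)
        return -1;
    new_vma->vm_start = addr;
    new_vma->vm_end = vma->vm_end;
    vma->vm_end = addr;
    new_vma->vm_next = vma->vm_next;
    vma->vm_next = new_vma;
    mm->map_count++;
    return 0;
}
```

Walking a sorted list costs O(n) per lookup. The kernel therefore keeps the red-black tree, plus the per-thread vmacache, alongside the list.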

3. malloc

Everyone is familiar with malloc, so how does it interact with the underlying layer and apply to memory?

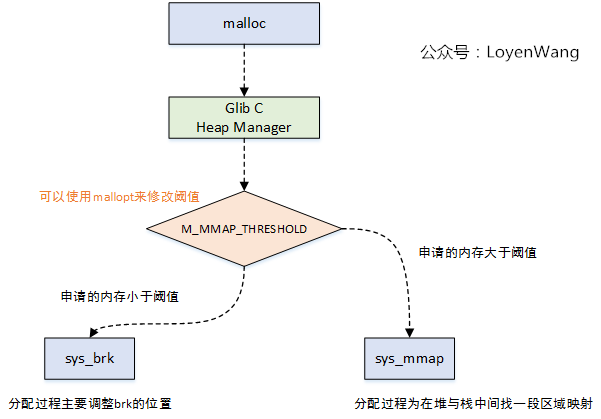

Here's the picture:

As shown in the figure, malloc ultimately relies on two system calls, sys_brk and sys_mmap. For small allocations it calls sys_brk, which dynamically moves the brk position (the end of the heap) in the process address space. For large allocations it calls sys_mmap, which finds a free region between the heap and the stack and maps it.
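The brk path can be observed directly from user space through sbrk(), the libc wrapper that ends up in the brk system call. This minimal sketch assumes Linux with glibc, where sbrk() needs _DEFAULT_SOURCE. brk_moved_by is a hypothetical helper name. Real programs should not mix sbrk() with malloc(), because malloc manages the break itself.

```c
#define _DEFAULT_SOURCE            /* for sbrk() in glibc */
#include <assert.h>
#include <stdint.h>
#include <unistd.h>

/*
 * Move the program break up by `increment` bytes, measure how far it moved,
 * then move it back. Returns the distance moved, or -1 on failure.
 */
long brk_moved_by(intptr_t increment)
{
    char *before = sbrk(0);                 /* current program break */
    if (sbrk(increment) == (void *)-1)      /* grow: kernel extends the heap VMA */
        return -1;
    char *after = sbrk(0);
    long moved = (long)(after - before);
    sbrk(-increment);                       /* shrink back: kernel unmaps the tail */
    return moved;
}
```

In glibc, small and large requests are separated by the mmap threshold (M_MMAP_THRESHOLD). It is 128 KiB by default, adjusts dynamically, and can be tuned with mallopt().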

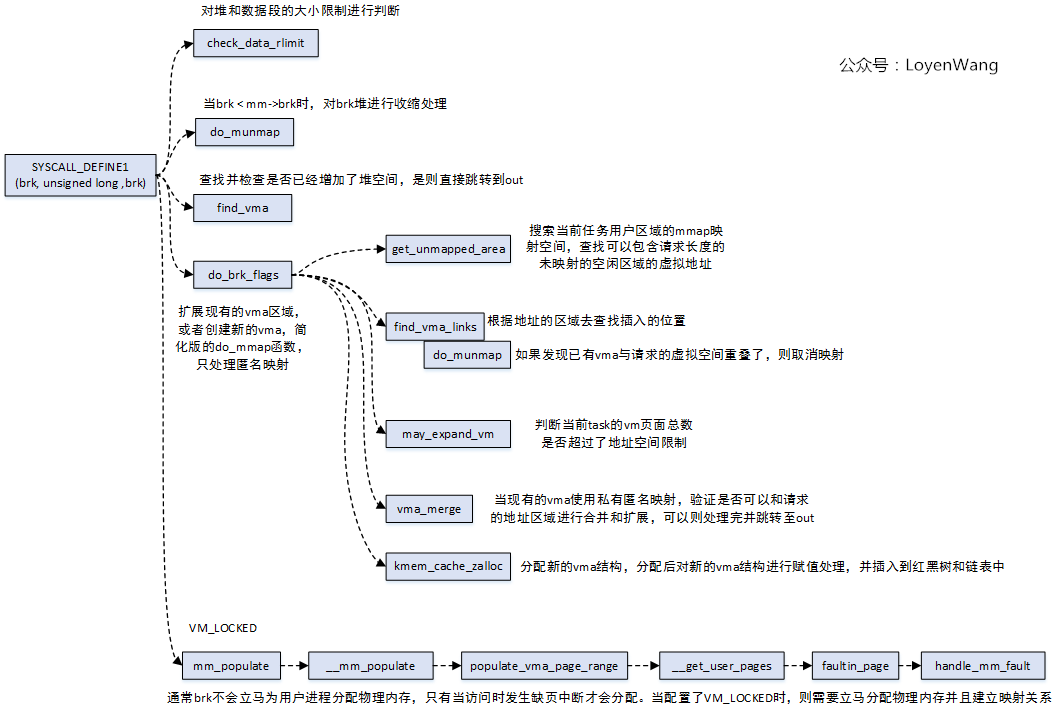

First, let's look at the sys_brk function, which is defined by SYSCALL_DEFINE1. The overall function call process is as follows:

The call flow contains many operations on VMAs; putting them together, the overall effect is shown as follows:

The whole process is clear and simple. Each process describes its address space with a struct mm_struct, and that address space consists of VMAs managed by both a red-black tree and a linked list. For malloc, the kernel dynamically moves brk, and the extent of the heap is given by vm_start ~ vm_end in the heap's struct vm_area_struct. The kernel handles the request according to whether the requested range overlaps existing VMAs. It either extends an existing VMA or allocates a new VMA to describe the range, and it finally inserts that VMA into the red-black tree and the linked list.
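The decision logic of sys_brk can be summarized in a toy model. This is a simplified sketch that loosely follows sys_brk() in mm/mmap.c of 4.14, under these assumptions:

- toy_heap, toy_brk, TOY_PAGE_SIZE and TOY_PAGE_ALIGN are hypothetical names.
- Pages are 4 KiB.
- do_munmap()/do_brk() are reduced to updating vma_end. The real code also checks rlimits, merges VMAs and handles mlock and userfaultfd.

```c
#include <assert.h>

#define TOY_PAGE_SIZE 4096UL
#define TOY_PAGE_ALIGN(x) (((x) + TOY_PAGE_SIZE - 1) & ~(TOY_PAGE_SIZE - 1))

/* Hypothetical state: the heap VMA and the start of the next VMA above it. */
struct toy_heap {
    unsigned long start_brk;   /* heap start (page aligned) */
    unsigned long brk;         /* current break, may be unaligned */
    unsigned long vma_end;     /* vm_end of the heap VMA; == start_brk means empty heap */
    unsigned long next_start;  /* vm_start of the next VMA above, 0 if none */
};

/* Like sys_brk, returns the resulting break; on failure the old break is kept. */
unsigned long toy_brk(struct toy_heap *h, unsigned long brk)
{
    unsigned long newbrk = TOY_PAGE_ALIGN(brk);
    unsigned long oldbrk = TOY_PAGE_ALIGN(h->brk);

    assert(h->start_brk % TOY_PAGE_SIZE == 0);
    if (brk < h->start_brk)
        return h->brk;                      /* below the heap start: refuse */
    if (newbrk == oldbrk) {
        h->brk = brk;                       /* same last page: no VMA change */
        return h->brk;
    }
    if (brk <= h->brk) {
        h->vma_end = newbrk;                /* shrink: unmap [newbrk, oldbrk) */
        h->brk = brk;
        return h->brk;
    }
    /* grow: keep at least one page between the heap and the next VMA */
    if (h->next_start != 0 && newbrk + TOY_PAGE_SIZE > h->next_start)
        return h->brk;
    h->vma_end = newbrk;                    /* heap VMA becomes [start_brk, newbrk) */
    h->brk = brk;
    return h->brk;
}
```

The VMA only changes when the page-aligned break crosses a page boundary. Moving brk within the same page just updates mm->brk.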

Once this call completes, only a virtual address range has been reserved; physical memory is generally not allocated right away. Physical pages are allocated later by the page-fault handler, when the range is first accessed. This will be analyzed further in later articles.
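This lazy allocation can be observed with mincore(), which reports which pages of a range are resident in RAM. For convenience, the sketch uses an anonymous mmap() region rather than the heap; both are anonymous VMAs populated on page fault. It assumes Linux with glibc, where mincore() and MAP_ANONYMOUS need _DEFAULT_SOURCE. resident_pages and lazy_alloc_demo are hypothetical helper names.

```c
#define _DEFAULT_SOURCE            /* for mincore() and MAP_ANONYMOUS in glibc */
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/* Count resident pages in [addr, addr + npages * page); (size_t)-1 on error. */
static size_t resident_pages(void *addr, size_t npages, size_t page)
{
    unsigned char vec[64];
    size_t count = 0;

    assert(npages <= sizeof(vec));
    if (mincore(addr, npages * page, vec) != 0)
        return (size_t)-1;
    for (size_t i = 0; i < npages; i++)
        count += vec[i] & 1;               /* bit 0: page is resident */
    return count;
}

/* Map npages, write to the first `touch` pages, report residency before/after. */
int lazy_alloc_demo(size_t npages, size_t touch, size_t *before, size_t *after)
{
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    char *p = mmap(NULL, npages * page, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    *before = resident_pages(p, npages, page); /* VMA exists, no pages yet */
    for (size_t i = 0; i < touch; i++)
        p[i * page] = 1;                       /* write fault allocates a page */
    *after = resident_pages(p, npages, page);

    munmap(p, npages * page);
    return 0;
}
```

A write is used instead of a read on purpose. A read fault on untouched anonymous memory maps the shared zero page rather than allocating a new one.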

4. mmap

mmap performs memory mapping, that is, it maps a region into the process's own address space. There are two kinds:

- File mapping: maps a region of a file into the process address space; the data lives in the file on a storage device;

- Anonymous mapping: not backed by any file; the contents live in physical memory;

Mappings can also be divided into two kinds according to whether changes are visible to other processes:

- Private mapping: writes go to a private copy of the data (copy-on-write) and do not affect other processes;

- Shared mapping: writes are visible to every process sharing the mapping;
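The private/shared difference is easy to demonstrate across fork(). A child writes into an anonymous mapping, and the parent checks whether it sees the write. This minimal sketch assumes Linux with glibc, where MAP_ANONYMOUS needs _DEFAULT_SOURCE. value_seen_by_parent is a hypothetical helper name.

```c
#define _DEFAULT_SOURCE            /* for MAP_ANONYMOUS in glibc */
#include <assert.h>
#include <sys/mman.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Create an anonymous mapping with `visibility` (MAP_SHARED or MAP_PRIVATE),
 * let a child write 42 into it, and return what the parent sees (-1 on error).
 */
int value_seen_by_parent(int visibility)
{
    int *p = mmap(NULL, sizeof(int), PROT_READ | PROT_WRITE,
                  visibility | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;
    *p = 0;

    pid_t pid = fork();
    if (pid < 0) {
        munmap(p, sizeof(int));
        return -1;
    }
    if (pid == 0) {                /* child */
        *p = 42;                   /* MAP_PRIVATE: COW copy; MAP_SHARED: same page */
        _exit(0);
    }
    int status;
    waitpid(pid, &status, 0);      /* make sure the child's write has happened */
    int seen = *p;
    munmap(p, sizeof(int));
    return seen;
}
```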

Combining the two dimensions gives four cases:

- Private anonymous mapping: commonly used for memory allocation, e.g. large malloc requests, the heap, the stack and the bss segment;

- Shared anonymous mapping: commonly used for communication between parent and child processes; the kernel backs it with a "/dev/zero" file created in its in-memory filesystem;

- Private file mapping: commonly used for loading dynamic libraries, code segments, data segments, etc;

- Shared file mapping: commonly used for inter-process communication, file reading and writing, etc;
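For file mappings, the same distinction decides whether a store through the mapping reaches the file. The sketch maps a one-byte temporary file, stores 'B' through the mapping, and reads the byte back with pread(). It assumes Linux with glibc, a writable /tmp, and _DEFAULT_SOURCE for mkstemp(). byte_in_file_after_store is a hypothetical helper name.

```c
#define _DEFAULT_SOURCE            /* for mkstemp() and pread() in glibc */
#include <assert.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Map a one-byte temporary file containing 'A' with `visibility`
 * (MAP_SHARED or MAP_PRIVATE), store 'B' through the mapping, and return the
 * byte the file then contains. Returns -1 on error.
 */
int byte_in_file_after_store(int visibility)
{
    char path[] = "/tmp/mmap-demo-XXXXXX";
    int fd = mkstemp(path);            /* opened O_RDWR */
    if (fd < 0)
        return -1;
    unlink(path);                      /* file lives until fd is closed */

    char c = 'A';
    if (write(fd, &c, 1) != 1) {
        close(fd);
        return -1;
    }

    char *p = mmap(NULL, 1, PROT_READ | PROT_WRITE, visibility, fd, 0);
    if (p == MAP_FAILED) {
        close(fd);
        return -1;
    }
    p[0] = 'B';                        /* shared: page cache; private: COW copy */
    munmap(p, 1);

    if (pread(fd, &c, 1, 0) != 1) {    /* read through the page cache */
        close(fd);
        return -1;
    }
    close(fd);
    return c;
}
```

No msync() is needed here. pread() reads the same page cache that a MAP_SHARED mapping writes into. msync() only matters for getting the data onto the storage device.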

The common prot permissions and flags are as follows:

```c
#define PROT_READ       0x1         /* page can be read */
#define PROT_WRITE      0x2         /* page can be written */
#define PROT_EXEC       0x4         /* page can be executed */
#define PROT_SEM        0x8         /* page may be used for atomic ops */
#define PROT_NONE       0x0         /* page can not be accessed */
#define PROT_GROWSDOWN  0x01000000  /* mprotect flag: extend change to start of growsdown vma */
#define PROT_GROWSUP    0x02000000  /* mprotect flag: extend change to end of growsup vma */

#define MAP_SHARED      0x01        /* Share changes */
#define MAP_PRIVATE     0x02        /* Changes are private */
#define MAP_TYPE        0x0f        /* Mask for type of mapping */
#define MAP_FIXED       0x10        /* Interpret addr exactly */
#define MAP_ANONYMOUS   0x20        /* don't use a file */

#define MAP_GROWSDOWN   0x0100      /* stack-like segment */
#define MAP_DENYWRITE   0x0800      /* ETXTBSY */
#define MAP_EXECUTABLE  0x1000      /* mark it as an executable */
#define MAP_LOCKED      0x2000      /* pages are locked */
#define MAP_NORESERVE   0x4000      /* don't check for reservations */
#define MAP_POPULATE    0x8000      /* populate (prefault) pagetables */
#define MAP_NONBLOCK    0x10000     /* do not block on IO */
#define MAP_STACK       0x20000     /* give out an address that is best suited for process/thread stacks */
#define MAP_HUGETLB     0x40000     /* create a huge page mapping */
```
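MAP_TYPE and MAP_ANONYMOUS together pick out the four combinations listed earlier. The classifier below is a sketch that mirrors the switch on (flags & MAP_TYPE) in the 4.14 mmap path, where any type other than MAP_SHARED or MAP_PRIVATE is rejected. Newer kernels also accept MAP_SHARED_VALIDATE. It assumes glibc's <sys/mman.h>, which exposes MAP_TYPE and MAP_ANONYMOUS under _DEFAULT_SOURCE. mapping_kind is a hypothetical helper name.

```c
#define _DEFAULT_SOURCE            /* for MAP_TYPE, MAP_ANONYMOUS, MAP_POPULATE */
#include <assert.h>
#include <string.h>
#include <sys/mman.h>

/* Classify mmap() flags into private/shared x file/anonymous. */
const char *mapping_kind(int flags)
{
    int anon = (flags & MAP_ANONYMOUS) != 0;   /* no backing file */

    switch (flags & MAP_TYPE) {                /* sharing type only */
    case MAP_PRIVATE:
        return anon ? "private anonymous" : "private file";
    case MAP_SHARED:
        return anon ? "shared anonymous" : "shared file";
    default:
        return "invalid";                      /* neither shared nor private */
    }
}
```

Flags outside MAP_TYPE, such as MAP_POPULATE or MAP_LOCKED, do not change the classification. They only modify how the mapping is set up.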

Every mmap operation eventually calls the do_mmap function. Finally, here is the call graph: