Contents

- Preface

- 1: The theoretical basis of Ceph

- 2: Deploying the Ceph cluster

- 3: Integrating Ceph with OpenStack

Preface

1: The theoretical basis of Ceph

1.1: Overview

- Ceph is a reliable, self-balancing, self-healing distributed storage system. Its main strength is its distributed design: whenever data is stored, its location is computed so that data is spread across the cluster as evenly as possible. There is no traditional single point of failure, and the cluster can scale out horizontally.

- Ceph provides object storage (RADOSGW), block storage (RBD), and a file system (CephFS). The object storage can back network-drive applications such as ownCloud, and the block storage can be integrated with mainstream IaaS platforms such as OpenStack, CloudStack, ZStack, and Eucalyptus, as well as with KVM.

- A cluster needs at least one monitor and two OSD daemons; running Ceph file system clients additionally requires an MDS (Metadata Server).

1.2: Related concepts

- What is an OSD?

- An OSD (Object Storage Daemon) stores data and handles data replication, recovery, backfilling, and rebalancing. It also checks the heartbeats of other OSD daemons and reports this monitoring information to the monitors.

- What is a Monitor?

- The monitor tracks the state of the entire cluster and maintains the cluster maps (the OSD map, PG map, CRUSH map, and so on). When the cluster keeps two copies of each object, at least two OSDs are required for it to reach a healthy state.

- ceph-mgr

- The Ceph Manager daemon (ceph-mgr) takes over and extends some of the monitor's functions. This reduces the load on the monitors and makes the Ceph storage system easier to manage.

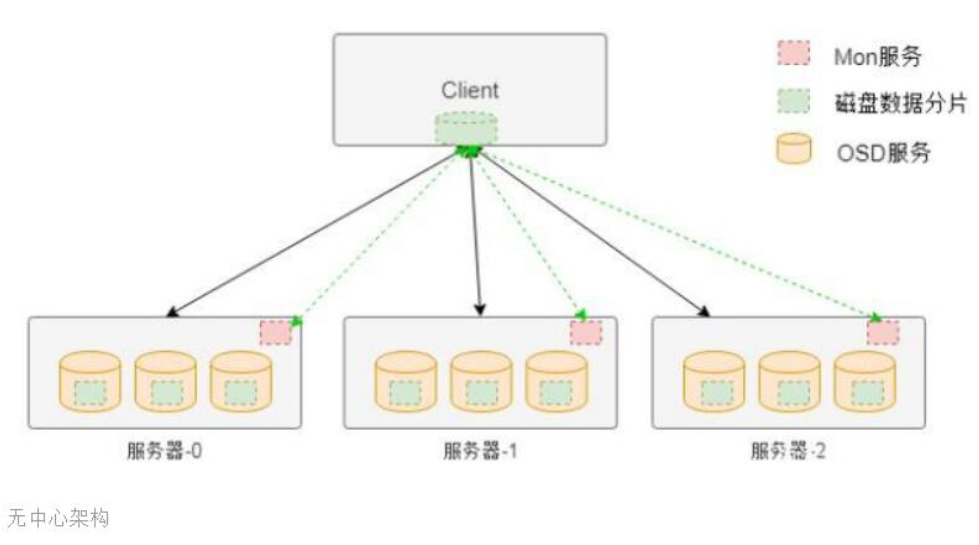

1.3: A completely decentralized architecture

- Completely decentralized architecture, approach 1: computation (Ceph)

- The diagram shows the architecture of the Ceph storage system. Unlike HDFS, it has no central node. Clients compute where to write data from a device-mapping relationship, so they can communicate directly with the storage nodes, which avoids the performance bottleneck of a central node.

- The core components of the Ceph architecture are the Mon, OSD, and MDS services. Block storage needs only the Mon service, the OSD service, and the client software. The Mon service maintains the logical map of the storage hardware, mainly which servers and disks are online, and is itself run as a cluster to keep it available. The OSD service manages the disks and performs the actual reads and writes; usually each disk corresponds to one OSD service.

- In general, a client accesses storage as follows: after startup, it first pulls the storage layout from the Mon service; it then computes the location of the data (the specific physical server and disk) from that layout and the name of the data being written; finally, it communicates with that location directly to read or write the data.
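The "compute the location instead of asking a central node" idea can be illustrated with a toy sketch. This is not Ceph's real algorithm: Ceph hashes object names into placement groups with its own hash and maps placement groups to OSDs through CRUSH rules and weights. Here `cksum` and plain modulo arithmetic stand in for both, and the function names `object_to_pg` and `pg_to_osds` are hypothetical. What it does show is the key property: any client that knows the same layout (pg_num and the OSD count) computes the same answer without a lookup.

```bash
#!/usr/bin/env bash
# Toy illustration of computed (lookup-free) data placement.
# NOT real CRUSH: cksum and modulo arithmetic stand in for Ceph's hash and CRUSH map.

# object_to_pg NAME PG_NUM -> placement-group number in [0, PG_NUM)
object_to_pg() {
  local name=$1 pg_num=$2 hash
  hash=$(printf '%s' "$name" | cksum | cut -d' ' -f1)
  echo $(( hash % pg_num ))
}

# pg_to_osds PG OSD_COUNT REPLICAS -> space-separated OSD ids holding the PG's replicas
# (the ids are distinct only when REPLICAS <= OSD_COUNT)
pg_to_osds() {
  local pg=$1 osd_count=$2 replicas=$3 i=0 out=""
  while [ "$i" -lt "$replicas" ]; do
    out="$out${out:+ }$(( (pg + i) % osd_count ))"
    i=$(( i + 1 ))
  done
  echo "$out"
}

# Every client with the same pg_num and OSD count computes the same placement.
pg=$(object_to_pg "rbd_data.example.0000000000000000" 64)
echo "pg=$pg osds=$(pg_to_osds "$pg" 3 3)"
```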

2: Deploying the Ceph cluster

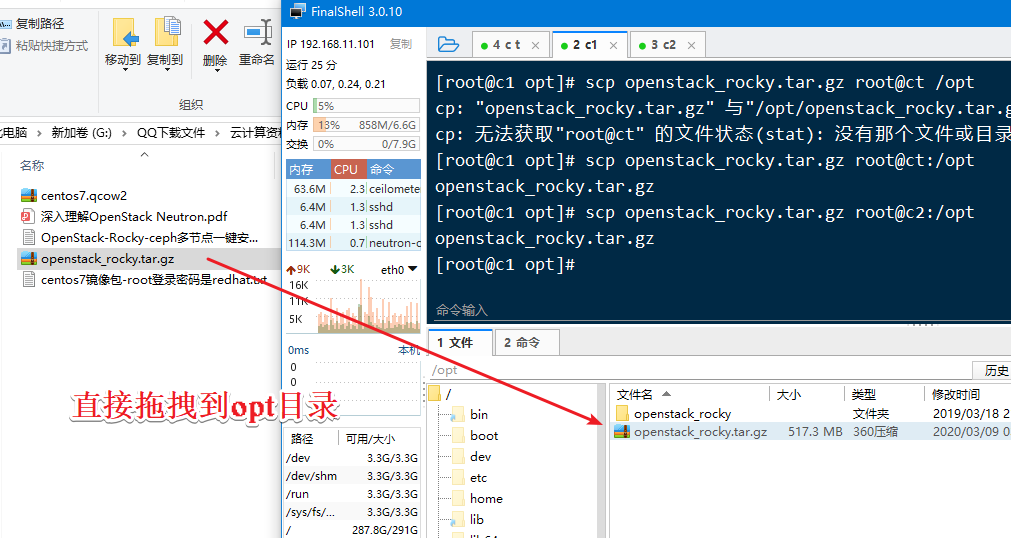

- This lab reuses the multi-node OpenStack environment from my previous post; for details, see https://blog.csdn.net/CN_TangZheng/article/details/104543185

- A local yum repository is used. Because the lab hardware is limited, the three nodes are given 7 GB of memory each (7/7/7); on a host with 32 GB of RAM, 8 GB per node (8/8/8) is recommended.

2.1: Preparing the Ceph environment

- Before deploying Ceph, clean up any data OpenStack has already stored:

- 1. Delete any instances created in OpenStack (in the dashboard).

- 2. Delete any images uploaded to OpenStack (in the dashboard).

- 3. Delete any Cinder volumes created in OpenStack (in the dashboard).

- Turn off the iptables firewall on all three nodes

```
# The three nodes are configured the same way; only the control node is shown
[root@ct ~]# systemctl stop iptables
[root@ct ~]# systemctl disable iptables
Removed symlink /etc/systemd/system/basic.target.wants/iptables.service.
```

- On every node, check that passwordless SSH, hostnames, /etc/hosts entries, and the firewall shutdown are all in place.
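Of these checks, name resolution is the easiest to script. A minimal sketch, assuming the hostnames ct, c1, and c2 used in this lab; the function `check_hosts` is hypothetical and only inspects a hosts file, not SSH keys or the firewall.

```bash
#!/usr/bin/env bash
# check_hosts FILE NAME... -> exit 0 if every NAME appears as a hostname in FILE.
# Comment lines are ignored; hostnames are the 2nd and later fields of an entry.
check_hosts() {
  local file=$1 name missing=0
  shift
  for name in "$@"; do
    if ! awk -v n="$name" '
        !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit (found ? 0 : 1) }' "$file"; then
      echo "missing host entry: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on each node:
#   check_hosts /etc/hosts ct c1 c2 && echo "hosts OK"
```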

- Configure the local yum repositories on all three nodes (only the control node is shown)

```
[root@ct opt]# tar zxvf openstack_rocky.tar.gz        # unpack the uploaded package
[root@ct opt]# ls
openstack_rocky  openstack_rocky.tar.gz
[root@ct opt]# cd /etc/yum.repos.d
[root@ct yum.repos.d]# vi local.repo
[openstack]
name=openstack
# The unpacked directory has the same name as before, so baseurl needs no change
baseurl=file:///opt/openstack_rocky
gpgcheck=0
enabled=1
[mnt]
name=mnt
baseurl=file:///mnt
gpgcheck=0
enabled=1
[root@ct yum.repos.d]# yum clean all                  # clear the cache
Loaded plugins: fastestmirror
Cleaning repos: mnt openstack
Cleaning up list of fastest mirrors
[root@ct yum.repos.d]# yum makecache                  # rebuild the cache
```
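Instead of typing the repository file in vi on each node, the same file can be written non-interactively. A sketch assuming the two paths used above (/opt/openstack_rocky and /mnt); the function name `write_local_repo` is hypothetical.

```bash
#!/usr/bin/env bash
# write_local_repo FILE -> writes the two local yum repositories used in this lab
write_local_repo() {
  cat > "$1" <<'EOF'
[openstack]
name=openstack
baseurl=file:///opt/openstack_rocky
gpgcheck=0
enabled=1

[mnt]
name=mnt
baseurl=file:///mnt
gpgcheck=0
enabled=1
EOF
}

# On each node (as root):
#   write_local_repo /etc/yum.repos.d/local.repo && yum clean all && yum makecache
```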

2.2: Building the Ceph cluster

- Install python-setuptools and ceph on all three nodes

```
[root@ct yum.repos.d]# yum -y install python-setuptools
[root@ct yum.repos.d]# yum -y install ceph
```

- On the control node, create the Ceph configuration directory and install ceph-deploy

```
[root@ct yum.repos.d]# mkdir -p /etc/ceph
[root@ct yum.repos.d]# yum -y install ceph-deploy
```

- On the control node, create a new cluster with three initial monitors (ct, c1, c2)

```
[root@ct yum.repos.d]# cd /etc/ceph
[root@ct ceph]# ceph-deploy new ct c1 c2
[root@ct ceph]# more /etc/ceph/ceph.conf
[global]
fsid = 8c9d2d27-492b-48a4-beb6-7de453cf45d6
mon_initial_members = ct, c1, c2
mon_host = 192.168.11.100,192.168.11.101,192.168.11.102
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
```
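Before going further, it can help to sanity-check the generated ceph.conf: mon_initial_members and mon_host should list the same number of monitors (three here). A sketch; the helper names are hypothetical, and it only understands the underscore spelling and the simple comma-separated values shown above (Ceph also accepts spellings such as `mon host`).

```bash
#!/usr/bin/env bash
# conf_list_len KEY FILE -> number of comma-separated entries in KEY's value
conf_list_len() {
  local key=$1 file=$2
  awk -F'=' -v k="$key" '
    { name = $1; gsub(/^[ \t]+|[ \t]+$/, "", name) }
    name == k { n = split($2, parts, ","); print n; exit }
  ' "$file"
}

# check_mon_lists [FILE] -> prints "N monitors" if both lists agree, fails otherwise
check_mon_lists() {
  local file=${1:-/etc/ceph/ceph.conf} members hosts
  members=$(conf_list_len mon_initial_members "$file")
  hosts=$(conf_list_len mon_host "$file")
  if [ -n "$members" ] && [ "$members" = "$hosts" ]; then
    echo "$members monitors"
  else
    echo "mismatch: mon_initial_members=$members mon_host=$hosts" >&2
    return 1
  fi
}
```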

- On the control node, initialize the monitors and gather the keys (for all three nodes)

```
[root@ct ceph]# ceph-deploy mon create-initial
[root@ct ceph]# ls
ceph.bootstrap-mds.keyring  ceph.bootstrap-rgw.keyring  ceph-deploy-ceph.log
ceph.bootstrap-mgr.keyring  ceph.client.admin.keyring   ceph.mon.keyring
ceph.bootstrap-osd.keyring  ceph.conf                   rbdmap
```

- On the control node, create one OSD on /dev/sdb of each node

```
[root@ct ceph]# ceph-deploy osd create --data /dev/sdb ct
[root@ct ceph]# ceph-deploy osd create --data /dev/sdb c1
[root@ct ceph]# ceph-deploy osd create --data /dev/sdb c2
```

- Use ceph-deploy to push the configuration file and the admin key to ct, c1, and c2

```
[root@ct ceph]# ceph-deploy admin ct c1 c2
```

- Make the admin keyring readable on each node (ct, c1, c2)

```
[root@ct ceph]# chmod +r /etc/ceph/ceph.client.admin.keyring
[root@c1 ceph]# chmod +r /etc/ceph/ceph.client.admin.keyring
[root@c2 ceph]# chmod +r /etc/ceph/ceph.client.admin.keyring
```

- Create the mgr (manager) service

```
[root@ct ceph]# ceph-deploy mgr create ct c1 c2
```

-

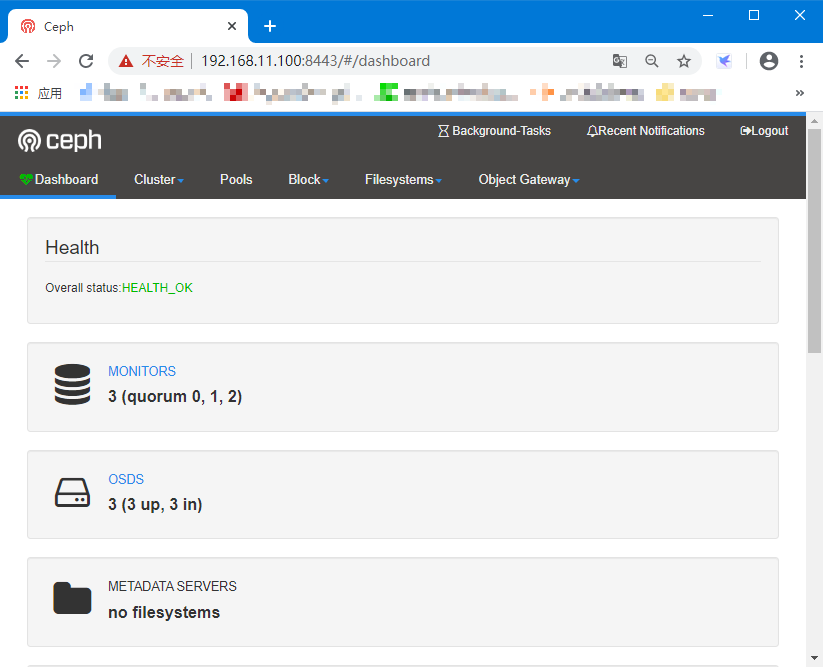

View ceph cluster status

[root@ct ceph]# ceph -s cluster: id: 8c9d2d27-492b-48a4-beb6-7de453cf45d6 health: HEALTH_OK '//Health OK' services: mon: 3 daemons, quorum ct,c1,c2 mgr: ct(active), standbys: c1, c2 osd: 3 osds: 3 up, 3 in data: pools: 0 pools, 0 pgs objects: 0 objects, 0 B usage: 3.0 GiB used, 3.0 TiB / 3.0 TiB avail pgs:
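When scripting the later steps, it is convenient to stop if the cluster is not healthy. A sketch that reads `ceph -s` output from standard input, so it can be tested without a cluster; `ceph_health_ok` is a hypothetical name. (`ceph health` prints only the status and can be compared directly as a simpler alternative.)

```bash
#!/usr/bin/env bash
# ceph_health_ok -> reads `ceph -s` output on stdin; succeeds only for HEALTH_OK
ceph_health_ok() {
  awk '$1 == "health:" { status = $2 } END { exit (status != "HEALTH_OK") }'
}

# Usage:
#   ceph -s | ceph_health_ok && echo "cluster healthy"
```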

- Create the three pools used by OpenStack (volumes, vms, images), each with 64 PGs

```
[root@ct ceph]# ceph osd pool create volumes 64
pool 'volumes' created
[root@ct ceph]# ceph osd pool create vms 64
pool 'vms' created
[root@ct ceph]# ceph osd pool create images 64
pool 'images' created
```
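One way to arrive at 64 PGs per pool is the classic rule of thumb from the Ceph placement-group documentation: about 100 PGs per OSD in total, divided by the replica count, rounded up to a power of two. The sketch below additionally assumes the total is split evenly across the pools, with 3 OSDs, the default pool size of 3, and 3 pools; the helper names are hypothetical, and newer Ceph releases can size PGs automatically with the PG autoscaler.

```bash
#!/usr/bin/env bash
# next_pow2 N -> smallest power of two >= N
next_pow2() {
  local n=$1 p=1
  while [ "$p" -lt "$n" ]; do p=$(( p * 2 )); done
  echo "$p"
}

# pgs_per_pool OSDS REPLICAS POOLS -> rule-of-thumb pg_num for each pool
pgs_per_pool() {
  local osds=$1 size=$2 pools=$3
  next_pow2 $(( osds * 100 / size / pools ))
}

pgs_per_pool 3 3 3   # 3 OSDs, 3 replicas, 3 pools: prints 64
```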

- View the Ceph status

```
[root@ct ceph]# ceph mon stat          # monitor status
e1: 3 mons at {c1=192.168.11.101:6789/0,c2=192.168.11.102:6789/0,ct=192.168.11.100:6789/0}, election epoch 4, leader 0 ct, quorum 0,1,2 ct,c1,c2
[root@ct ceph]# ceph osd status        # OSD status
+----+------+-------+-------+--------+---------+--------+---------+-----------+
| id | host |  used | avail | wr ops | wr data | rd ops | rd data |   state   |
+----+------+-------+-------+--------+---------+--------+---------+-----------+
| 0  |  ct  | 1026M | 1022G |    0   |     0   |    0   |     0   | exists,up |
| 1  |  c1  | 1026M | 1022G |    0   |     0   |    0   |     0   | exists,up |
| 2  |  c2  | 1026M | 1022G |    0   |     0   |    0   |     0   | exists,up |
+----+------+-------+-------+--------+---------+--------+---------+-----------+
[root@ct ceph]# ceph osd lspools       # list the pools
1 volumes
2 vms
3 images
```

2.3: Installing the Ceph dashboard

- Check that the Ceph status shows no errors

- Enable the dashboard module

```
[root@ct ceph]# ceph mgr module enable dashboard
```

-

Create https certificate

[root@ct ceph]# ceph dashboard create-self-signed-cert Self-signed certificate created

-

View mgr services

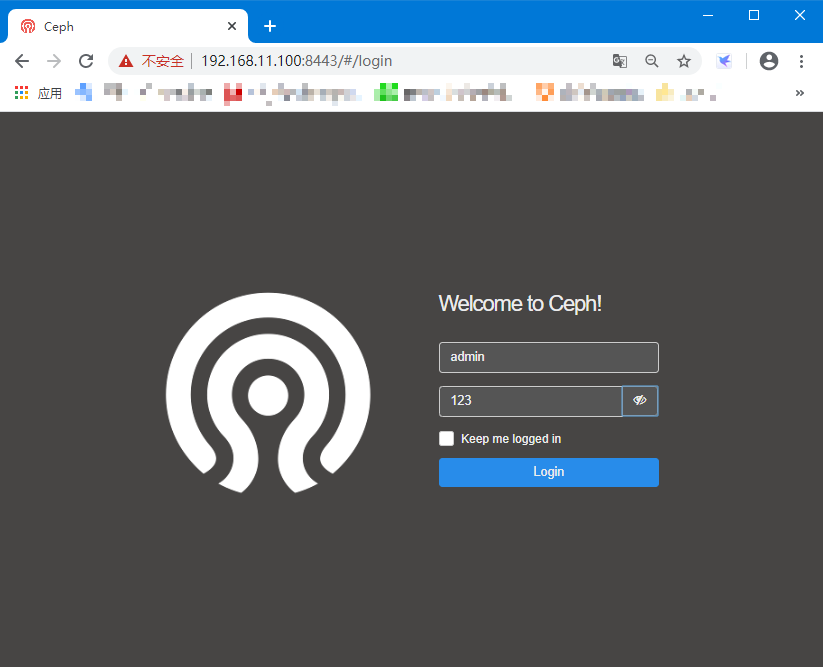

[root@ct ceph]# ceph mgr services { "dashboard": "https://ct:8443/" }

-

Set Account and Password

[root@ct ceph]# ceph dashboard set-login-credentials admin 123 '//admin is account 123 is password this can be changed' Username and password updated -

Open the ceph page in your browser at https://192.168.11.100:8443
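`ceph mgr services` reports the dashboard URL with the node's hostname (ct); from a machine that cannot resolve that name, use the control node's IP address as above. To pick the URL out in a script, a sketch like the following works on both the one-line and the pretty-printed output; `dashboard_url` is a hypothetical helper that uses a simple sed match rather than a JSON parser.

```bash
#!/usr/bin/env bash
# dashboard_url -> reads `ceph mgr services` JSON on stdin, prints the dashboard URL
dashboard_url() {
  sed -n 's/.*"dashboard"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Usage:
#   ceph mgr services | dashboard_url
```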

3: Integrating Ceph with OpenStack

3.1: Preparing Ceph and OpenStack for integration

- On the control node, create the client.cinder user and set its capabilities

```
[root@ct ceph]# ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children,allow rwx pool=volumes,allow rwx pool=vms,allow rx pool=images'
[client.cinder]
        key = AQBQ7GRett8kAhAA4Q2fFNQybe0RJaEubK8eFQ==
```

- On the control node, create the client.glance user and set its capabilities

```
[root@ct ceph]# ceph auth get-or-create client.glance mon 'allow r' osd 'allow class-read object_prefix rbd_children,allow rwx pool=images'
[client.glance]
        key = AQB37GRe64mXGxAApD5AVQ4Js7++tZQvVz1RgA==
```

- Write the client.glance keyring and set its ownership. Glance runs on the control node, so this keyring does not need to be sent to other nodes.

```
[root@ct ceph]# ceph auth get-or-create client.glance | tee /etc/ceph/ceph.client.glance.keyring
[client.glance]
        key = AQB37GRe64mXGxAApD5AVQ4Js7++tZQvVz1RgA==
[root@ct ceph]# chown glance.glance /etc/ceph/ceph.client.glance.keyring    # set owner and group
```

- Write the client.cinder keyring on the control node as well, since Cinder is also installed on the controller by default

```
[root@ct ceph]# ceph auth get-or-create client.cinder | tee /etc/ceph/ceph.client.cinder.keyring
[client.cinder]
        key = AQBQ7GRett8kAhAA4Q2fFNQybe0RJaEubK8eFQ==
[root@ct ceph]# chown cinder.cinder /etc/ceph/ceph.client.cinder.keyring
```

- The compute nodes also need the client.cinder key, because they must store it in libvirt; copy it to c1 and c2 first

```
[root@ct ceph]# ceph auth get-key client.cinder | ssh c1 tee client.cinder.key
AQBQ7GRett8kAhAA4Q2fFNQybe0RJaEubK8eFQ==
[root@ct ceph]# ceph auth get-key client.cinder | ssh c2 tee client.cinder.key
AQBQ7GRett8kAhAA4Q2fFNQybe0RJaEubK8eFQ==
[root@c1 ceph]# ls ~        # check on c1
anaconda-ks.cfg  client.cinder.key
[root@c2 ceph]# ls ~        # check on c2
anaconda-ks.cfg  client.cinder.key
```
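To confirm that a keyring file written or copied in these steps holds the same key that `ceph auth get-key client.cinder` prints, the key can be pulled out of the file and compared. A sketch; `keyring_key` is a hypothetical helper that assumes the `key = ...` layout shown above.

```bash
#!/usr/bin/env bash
# keyring_key FILE -> prints the base64 key stored in a Ceph keyring file.
# Splits on " = " so the trailing "==" of the key itself is kept intact.
keyring_key() {
  awk -F' = ' '$1 ~ /^[ \t]*key$/ { print $2; exit }' "$1"
}

# Usage on the control node:
#   [ "$(keyring_key /etc/ceph/ceph.client.cinder.keyring)" = "$(ceph auth get-key client.cinder)" ] && echo "keys match"
```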

3.2: On the compute nodes running nova-compute, add the temporary key file to libvirt, then delete it

- Configure the libvirt secret

- KVM virtual machines access the Ceph cluster through librbd

- librbd must authenticate with a Ceph account to access the cluster

- So the account information has to be set up in libvirt

3.2.1: On node c1

- Generate a UUID and copy it; it is used in the following steps

```
[root@c1 ceph]# uuidgen
f4cd0fff-b0e4-4699-88a8-72149e9865d7
```

- Create the secret definition file, using the UUID generated in the previous step

```
[root@c1 ceph]# cd /root
[root@c1 ~]# cat >secret.xml <<EOF
> <secret ephemeral='no' private='no'>
>   <uuid>f4cd0fff-b0e4-4699-88a8-72149e9865d7</uuid>
>   <usage type='ceph'>
>     <name>client.cinder secret</name>
>   </usage>
> </secret>
> EOF
[root@c1 ~]# ls
anaconda-ks.cfg  client.cinder.key  secret.xml
```

- Define and save the secret; it is used in the following steps

```
[root@c1 ~]# virsh secret-define --file secret.xml
Secret f4cd0fff-b0e4-4699-88a8-72149e9865d7 created
```

- Set the secret value and delete the temporary files (deleting them is optional and only keeps the system clean)

```
[root@c1 ~]# virsh secret-set-value --secret f4cd0fff-b0e4-4699-88a8-72149e9865d7 --base64 $(cat client.cinder.key) && rm -rf client.cinder.key secret.xml
Secret value set
```
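The same secret.xml is created on c1 and c2, and both must carry the same UUID, because cinder.conf and nova.conf each reference a single rbd_secret_uuid. A sketch that generates the file from a UUID and refuses malformed input; `make_secret_xml` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# make_secret_xml UUID -> prints a libvirt secret definition for the client.cinder key
make_secret_xml() {
  local uuid=$1
  # Accept only the canonical 8-4-4-4-12 hexadecimal UUID form.
  if ! printf '%s\n' "$uuid" | grep -Eqx '[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}'; then
    echo "invalid UUID: $uuid" >&2
    return 1
  fi
  cat <<EOF
<secret ephemeral='no' private='no'>
  <uuid>$uuid</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF
}

# Usage on c1 and c2, with the same UUID on both:
#   make_secret_xml f4cd0fff-b0e4-4699-88a8-72149e9865d7 > /root/secret.xml
```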

3.2.2: On node c2

- Create the secret definition file, using the same UUID as on c1

```
[root@c2 ceph]# cd /root
[root@c2 ~]# cat >secret.xml <<EOF
> <secret ephemeral='no' private='no'>
>   <uuid>f4cd0fff-b0e4-4699-88a8-72149e9865d7</uuid>
>   <usage type='ceph'>
>     <name>client.cinder secret</name>
>   </usage>
> </secret>
> EOF
[root@c2 ~]# ls
anaconda-ks.cfg  client.cinder.key  secret.xml
```

- Define and save the secret

```
[root@c2 ~]# virsh secret-define --file secret.xml
Secret f4cd0fff-b0e4-4699-88a8-72149e9865d7 created
```

- Set the secret value and delete the temporary files

```
[root@c2 ~]# virsh secret-set-value --secret f4cd0fff-b0e4-4699-88a8-72149e9865d7 --base64 $(cat client.cinder.key) && rm -rf client.cinder.key secret.xml
Secret value set
```

- On the control node, enable an application on each pool (otherwise Ceph raises the POOL_APP_NOT_ENABLED health warning)

```
[root@ct ceph]# ceph osd pool application enable vms mon
enabled application 'mon' on pool 'vms'
[root@ct ceph]# ceph osd pool application enable images mon
enabled application 'mon' on pool 'images'
[root@ct ceph]# ceph osd pool application enable volumes mon
enabled application 'mon' on pool 'volumes'
```
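The application name passed to `ceph osd pool application enable` is a free-form tag, so `mon` clears the warning. Pools that hold RBD images are conventionally tagged `rbd`, which is what `rbd pool init <pool>` sets. A sketch that tags the three pools in a loop; use it instead of the commands above, on pools that have no application yet. `tag_rbd_pools` is hypothetical, and the `CEPH` variable is a hypothetical hook so the loop can be dry-run with `CEPH=echo`.

```bash
#!/usr/bin/env bash
# tag_rbd_pools POOL... -> tag each pool with the 'rbd' application.
# CEPH defaults to the real ceph CLI; set CEPH=echo to only print the commands.
tag_rbd_pools() {
  local pool
  for pool in "$@"; do
    "${CEPH:-ceph}" osd pool application enable "$pool" rbd || return 1
  done
}

# Dry run:   CEPH=echo tag_rbd_pools volumes vms images
# Real run:  tag_rbd_pools volumes vms images
```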

3.3: Integrating Ceph with Glance

- Log in to the Glance node (the control node) and make the following changes

- Back up the configuration file

```
[root@ct ceph]# cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.bak
```

- Modify the integration settings (all in the [glance_store] section; the line numbers refer to the author's copy of the file)

```
[root@ct ceph]# vi /etc/glance/glance-api.conf
# line 2054: store type
stores = rbd
# line 2108: default store
default_store = rbd
# line 2442: comment out local image storage
#filesystem_store_datadir = /var/lib/glance/images/
# line 2605: uncomment
rbd_store_chunk_size = 8
# line 2626: uncomment
rbd_store_pool = images
# line 2645: uncomment; set the Ceph user
rbd_store_user = glance
# line 2664: uncomment; set the Ceph configuration path
rbd_store_ceph_conf = /etc/ceph/ceph.conf
```
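Editing by line number in vi is fragile, because the numbers differ between package versions. The same settings can be applied with a small INI helper. A minimal sketch; `ini_set` is a hypothetical function that replaces the first active or commented-out `KEY =` line in the section (check the result, since long sample files may also mention a key in help text), appends the key if none exists, and rewrites the file in place. Back up the file first, as the step above does.

```bash
#!/usr/bin/env bash
# ini_set FILE SECTION KEY VALUE
# Sets "KEY = VALUE" inside [SECTION]: replaces the first active or commented-out
# "KEY =" line there, else appends it at the end of the section (creating the
# section if missing). Writes via a temp file, then copies back to keep ownership.
ini_set() {
  local file=$1 section=$2 key=$3 value=$4 tmp
  tmp=$(mktemp) || return 1
  awk -v sec="[$section]" -v key="$key" -v val="$value" '
    function emit() { print key " = " val; done = 1 }
    /^[ \t]*\[/ {
      if (insec && !done) emit()   # leaving the target section without a match
      insec = ($0 == sec)
    }
    insec && !done && $0 ~ ("^[ \t]*#?[ \t]*" key "[ \t]*=") { emit(); next }
    { print }
    END {
      if (!done) {
        if (!insec) print sec
        emit()
      }
    }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example:
#   ini_set /etc/glance/glance-api.conf glance_store default_store rbd
#   ini_set /etc/glance/glance-api.conf glance_store rbd_store_pool images
```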

- Look up the glance user referenced above

```
[root@ct ceph]# source /root/keystonerc_admin
[root@ct ceph(keystone_admin)]# openstack user list | grep glance
| e2ee9c40f2f646d19041c28b031449d8 | glance |
```

- Restart the openstack-glance-api service

```
[root@ct ceph(keystone_admin)]# systemctl restart openstack-glance-api
```

-

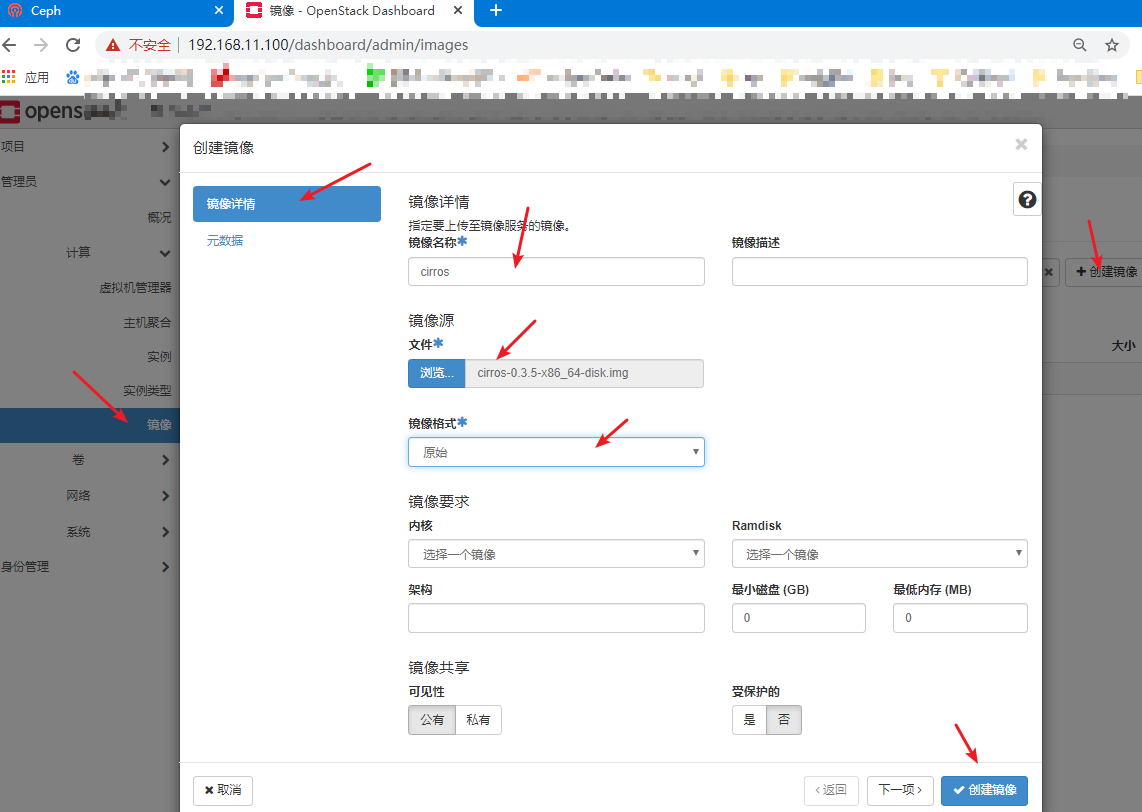

Upload mirror for testing

[root@ct ceph(keystone_admin)]# ceph df '//View Mirror Size' GLOBAL: SIZE AVAIL RAW USED %RAW USED 3.0 TiB 3.0 TiB 3.0 GiB 0.10 POOLS: NAME ID USED %USED MAX AVAIL OBJECTS volumes 1 0 B 0 972 GiB 0 vms 2 0 B 0 972 GiB 0 images 3 13 MiB '//Upload 13M Successful' 0 972 GiB 8 [root@ct ceph(keystone_admin)]# rbd ls images '//View Mirror' 0ab61b48-4e57-4df3-a029-af6843ff4fa5

- Confirm that the image is no longer stored locally

```
[root@ct ceph(keystone_admin)]# ls /var/lib/glance/images    # empty, as expected: the image is now in Ceph
```

3.4: Integrating Ceph with Cinder

- Back up the cinder.conf configuration file, then modify it

```
[root@ct ceph(keystone_admin)]# cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak
[root@ct ceph(keystone_admin)]# vi /etc/cinder/cinder.conf
# line 409 ([DEFAULT] section): use the ceph backend
enabled_backends=ceph
# lines 5261-5267: comment out the old lvm backend
#[lvm]
#volume_backend_name=lvm
#volume_driver=cinder.volume.drivers.lvm.LVMVolumeDriver
#iscsi_ip_address=192.168.11.100
#iscsi_helper=lioadm
#volume_group=cinder-volumes
#volumes_dir=/var/lib/cinder/volumes

# lines 5269-5281: add a [ceph] section at the end of the file
[ceph]
default_volume_type = ceph
glance_api_version = 2
volume_driver = cinder.volume.drivers.rbd.RBDDriver
volume_backend_name = ceph
rbd_pool = volumes
rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_flatten_volume_from_snapshot = false
rbd_max_clone_depth = 5
rbd_store_chunk_size = 4
rados_connect_timeout = -1
rbd_user = cinder
# use the UUID generated earlier
rbd_secret_uuid = f4cd0fff-b0e4-4699-88a8-72149e9865d7
```

- Restart the Cinder volume service

```
[root@ct ceph(keystone_admin)]# systemctl restart openstack-cinder-volume
```

- Check which Cinder volume types exist

```
[root@ct ceph(keystone_admin)]# cinder type-list
+--------------------------------------+-------+-------------+-----------+
| ID                                   | Name  | Description | Is_Public |
+--------------------------------------+-------+-------------+-----------+
| c33f5b0a-b74e-47ab-92f0-162be0bafa1f | iscsi | -           | True      |
+--------------------------------------+-------+-------------+-----------+
```

-

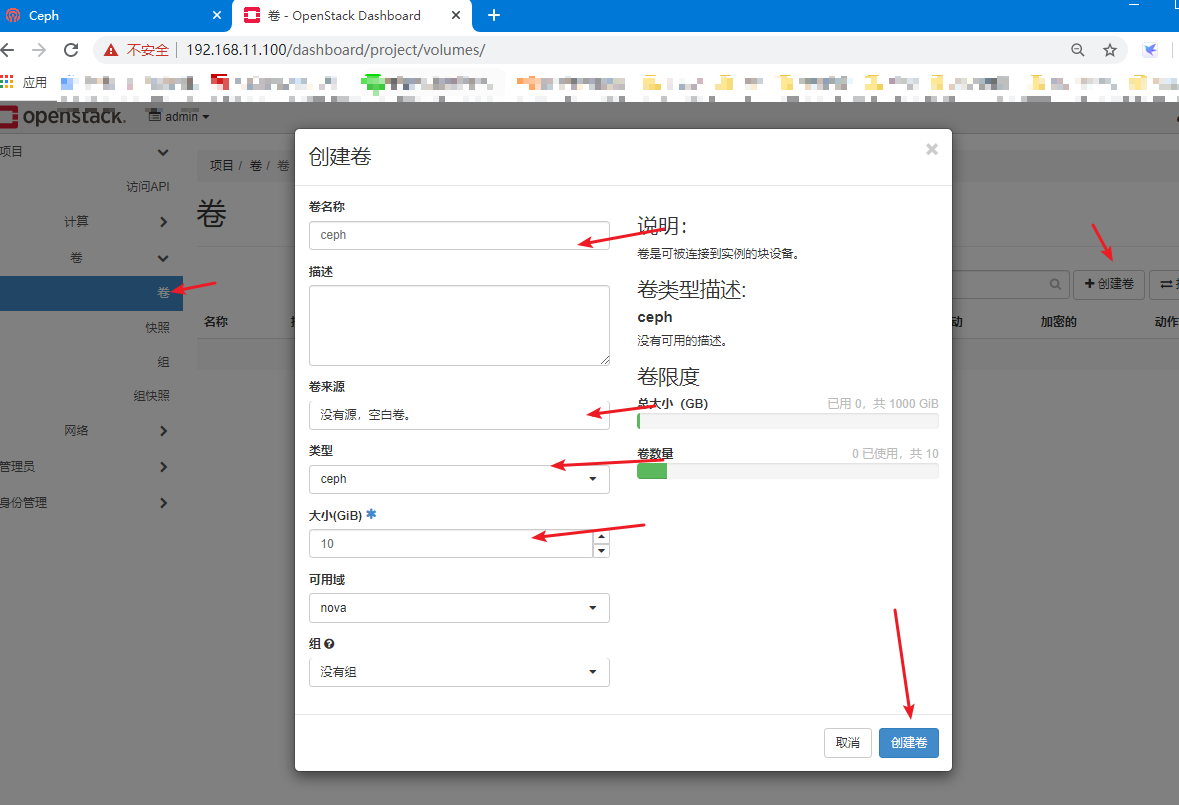

The command line creates the corresponding type for the ceph storage backend of the cinder

[root@ct ceph(keystone_admin)]# cinder type-create ceph '//Create ceph type' +--------------------------------------+------+-------------+-----------+ | ID | Name | Description | Is_Public | +--------------------------------------+------+-------------+-----------+ | e1137ee4-0a45-4a21-9c16-55dfb7e3d737 | ceph | - | True | +--------------------------------------+------+-------------+-----------+ [root@ct ceph(keystone_admin)]# cinder type-list '//View Type' +--------------------------------------+-------+-------------+-----------+ | ID | Name | Description | Is_Public | +--------------------------------------+-------+-------------+-----------+ | c33f5b0a-b74e-47ab-92f0-162be0bafa1f | iscsi | - | True | | e1137ee4-0a45-4a21-9c16-55dfb7e3d737 | ceph | - | True | +--------------------------------------+-------+-------------+-----------+ [root@ct ceph(keystone_admin)]# cinder type-key ceph set volume_backend_name=ceph '//Set back-end storage type volume_backend_name=ceph Make sure the top space is not empty'
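To check from a script that the ceph type now exists, the Name column can be pulled out of the `cinder type-list` table. A sketch that relies only on the table layout shown above; `volume_type_names` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# volume_type_names -> reads `cinder type-list` table output on stdin,
# prints the Name column (one per line), skipping borders and the header row
volume_type_names() {
  awk -F'|' '
    /^\|/ {
      name = $3
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      if (name != "" && name != "Name") print name
    }'
}

# Usage:
#   cinder type-list | volume_type_names | grep -qx ceph && echo "ceph type present"
```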

- Create a volume

- View the created volume

```
[root@ct ceph(keystone_admin)]# ceph osd lspools
1 volumes
2 vms
3 images
[root@ct ceph(keystone_admin)]# rbd ls volumes
volume-47728c44-85db-4257-a723-2ab8c5249f5d
```
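Cinder's RBD driver names each volume's image from the volume ID using the `volume_name_template` option (default `volume-%s`), which is why the image above is called `volume-<id>`. A sketch for mapping a Cinder volume ID to its RBD image name; `rbd_image_for_volume` is a hypothetical helper, and the optional second argument stands in for a non-default template.

```bash
#!/usr/bin/env bash
# rbd_image_for_volume VOLUME_ID [TEMPLATE] -> RBD image name Cinder uses for the volume.
# TEMPLATE mirrors cinder's volume_name_template option (default "volume-%s").
rbd_image_for_volume() {
  local template=${2:-volume-%s}
  # The template is used as a printf format on purpose, like Cinder's "%s" template.
  printf "$template\n" "$1"
}

# Usage:
#   rbd ls volumes | grep -qx "$(rbd_image_for_volume 47728c44-85db-4257-a723-2ab8c5249f5d)"
```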

- Start the Cinder API and scheduler services and enable them at boot

```
[root@ct ceph(keystone_admin)]# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service
[root@ct ceph(keystone_admin)]# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service
```

3.5: Integrating Ceph with Nova

- Back up the configuration file (c1 and c2)

```
[root@c1 ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak
[root@c2 ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak
```

- Modify /etc/nova/nova.conf on c1 and c2 (only c1 is shown); all of these options are in the [libvirt] section

```
[root@c1 ~]# vi /etc/nova/nova.conf
# line 7072: uncomment; store instance disks in RBD
images_type=rbd
# line 7096: uncomment; use the vms pool created in Ceph
images_rbd_pool=vms
# line 7099: uncomment; path to the Ceph configuration file
images_rbd_ceph_conf=/etc/ceph/ceph.conf
# line 7256: uncomment; Ceph user cinder
rbd_user=cinder
# line 7261: uncomment; the libvirt secret UUID
rbd_secret_uuid=f4cd0fff-b0e4-4699-88a8-72149e9865d7
# line 6932: uncomment; use the "network=writeback" disk cache mode
disk_cachemodes="network=writeback"
# add this whole line near the live_migration options to enable live migration
live_migration_flag="VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE,VIR_MIGRATE_PERSIST_DEST,VIR_MIGRATE_TUNNELLED"
# uncomment and set to unmap
hw_disk_discard=unmap
```
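Cinder on ct and Nova on c1/c2 must both reference the libvirt secret defined with virsh, so rbd_secret_uuid should be identical in cinder.conf ([ceph] section) and nova.conf ([libvirt] section). A sketch of that check; `ini_get` and `check_secret_uuid` are hypothetical helpers that read only active (uncommented) `KEY = VALUE` lines.

```bash
#!/usr/bin/env bash
# ini_get FILE SECTION KEY -> prints the value of the first active KEY in [SECTION]
ini_get() {
  awk -v sec="[$2]" -v key="$3" '
    /^[ \t]*\[/ { insec = ($0 == sec); next }
    insec && $0 ~ ("^[ \t]*" key "[ \t]*=") {
      sub(/^[^=]*=[ \t]*/, ""); sub(/[ \t]+$/, ""); print; exit
    }' "$1"
}

# check_secret_uuid CINDER_CONF NOVA_CONF -> succeed if both name the same secret
check_secret_uuid() {
  local c n
  c=$(ini_get "$1" ceph rbd_secret_uuid)
  n=$(ini_get "$2" libvirt rbd_secret_uuid)
  [ -n "$c" ] && [ "$c" = "$n" ]
}

# Usage on c1/c2 after copying cinder.conf from ct:
#   check_secret_uuid ./cinder.conf /etc/nova/nova.conf && echo "UUIDs match"
```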

- Install libvirt (c1 and c2)

```
[root@c1 ~]# yum -y install libvirt
[root@c2 ~]# yum -y install libvirt
```

- On the compute nodes c1 and c2, add a [client] section at the end of /etc/ceph/ceph.conf (only c1 is shown)

```
[root@c1 ~]# vi /etc/ceph/ceph.conf
[client]
rbd cache = true
rbd cache writethrough until flush = true
admin socket = /var/run/ceph/guests/$cluster-$type.$id.$pid.$cctid.asok
log file = /var/log/qemu/qemu-guest-$pid.log
rbd concurrent management ops = 20
```

- Create the socket and log directories referenced above and open up their permissions

```
[root@c1 ~]# mkdir -p /var/run/ceph/guests/ /var/log/qemu/
[root@c1 ~]# chmod -R 777 /var/run/ceph/guests/ /var/log/qemu/
```

- Copy the client.cinder keyring from /etc/ceph/ on the control node to both compute nodes

```
[root@ct ceph(keystone_admin)]# cd /etc/ceph
[root@ct ceph(keystone_admin)]# scp ceph.client.cinder.keyring root@c1:/etc/ceph
ceph.client.cinder.keyring                    100%   64    83.5KB/s   00:00
[root@ct ceph(keystone_admin)]# scp ceph.client.cinder.keyring root@c2:/etc/ceph
ceph.client.cinder.keyring                    100%   64    52.2KB/s   00:00
```

- Restart the services on the compute nodes

```
[root@c1 ~]# systemctl restart libvirtd
[root@c1 ~]# systemctl enable libvirtd
[root@c1 ~]# systemctl restart openstack-nova-compute    # repeat the same steps on c2
```

- The experiment succeeded. Thanks for reading, and try creating your own instances to test it!