About OpenResty

Link: https://www.zhihu.com/question/266535644/answer/705067582

Source: Zhihu

The copyright belongs to the author. For commercial reprint, please contact the author for authorization, and for non-commercial reprint, please indicate the source.

OpenResty addresses the pain point of high concurrency. Most back-end services today are written in Java, but writing a stable, highly concurrent service in Java is complicated. The first problem is choosing a server. There are several kinds of web servers, including Tomcat, Apache, WebLogic, and the commercial WebSphere.

1. Tomcat officially claims a concurrency of about 1,000. To serve a million concurrent connections you would need 1,000,000 / 1,000 = 1,000 servers, which is not realistic.

2. Apache's concurrency is worse than Tomcat's, at around 200-300.

3. WebLogic is slightly better, averaging about 3,000, but that is still not an order of magnitude more.
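The server-count arithmetic above can be sketched in a few lines of shell. This is only an illustration: the function name `servers_needed` is hypothetical, the per-server figures are the rough numbers quoted in the text rather than benchmarks, and Apache is taken at the upper end (300) of its quoted range.

```shell
#!/bin/sh
# servers_needed TOTAL PER_SERVER
# Prints how many servers are needed to carry TOTAL concurrent connections
# when each server handles PER_SERVER of them (integer ceiling division).
servers_needed() {
    total=$1
    per_server=$2
    echo $(( (total + per_server - 1) / per_server ))
}

# Per-server figures are the rough numbers quoted in the text (assumptions).
echo "Tomcat   (1000 per server): $(servers_needed 1000000 1000)"
echo "Apache   (300 per server):  $(servers_needed 1000000 300)"
echo "WebLogic (3000 per server): $(servers_needed 1000000 3000)"
```

Even at WebLogic's quoted figure, a million concurrent connections would still need hundreds of machines, which is the gap the next paragraph argues nginx closes.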

nginx, however, is different. It easily handles tens of thousands of requests, and its memory consumption is low. Previously we used it only for load balancing and did not think of it as a web server. The obstacle to exploiting nginx's high concurrency was that nginx uses an asynchronous event model, which differs from the traditional programming style. OpenResty removes that obstacle by embedding Lua, a scripting language whose interpreter is written in C (so it executes efficiently). With Lua you can use the traditional synchronous programming style to handle moderately complex logic once nginx has received a request.

Highly concurrent systems are all memory-based or cache-based. It is unrealistic to rely on MySQL to support high concurrency: MySQL handles roughly 4,000-8,000 concurrent requests, and beyond that its performance declines sharply. A memory read takes tens of nanoseconds, while a cache read takes a few milliseconds. If nanoseconds are unfamiliar: one nanosecond is one billionth of a second, and one millisecond is one thousandth of a second. When a request arrives, you can read directly from memory; when you need to interact with the database, write the data to memory first and persist it in batches later, so that the response stays fast.
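To put the two time units side by side, here is a small worked conversion in shell. The concrete values (50 ns for a memory read, 2 ms for a cache read) are illustrative picks inside the ranges quoted above, not measurements, and the helper name `ms_to_ns` is hypothetical.

```shell
#!/bin/sh
NS_PER_MS=1000000   # 1 millisecond = 1,000,000 nanoseconds

# ms_to_ns MS: convert whole milliseconds to nanoseconds
ms_to_ns() {
    echo $(( $1 * NS_PER_MS ))
}

# Illustrative picks within the quoted ranges (assumptions, not measurements):
memory_read_ns=50   # "tens of nanoseconds"
cache_read_ms=2     # "a few milliseconds"

cache_read_ns=$(ms_to_ns "$cache_read_ms")
echo "cache read:  ${cache_read_ns} ns"
echo "memory read: ${memory_read_ns} ns"
echo "ratio:       $(( cache_read_ns / memory_read_ns ))x"
```

With these picks the two differ by a factor in the tens of thousands, which is why the text recommends answering from memory wherever possible.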

Web traffic also follows the 80/20 rule: 80% of the traffic is concentrated on 20% of the pages, such as an e-commerce site's home page and product detail pages. OpenResty can serve the high-concurrency traffic to the product detail pages, while other high-concurrency frameworks, such as Netty (Java's NIO network framework), handle purchase orders, shopping carts, and similar steps.

Java's Netty is also a powerful tool for high concurrency. In my tests, however, its overall performance reached about 80% of nginx's. So let nginx do all the dirty work, and use Netty for the key business logic.

Current server

CentOS 8.2 64 bit

Install OpenResty by following the officially recommended installation process:

http://openresty.org/cn/installation.html

CentOS

You can add the openresty repository to your CentOS system so that you can easily install or update the packages in the future (through the yum check-update command). Run the following commands to add the repository (on CentOS 8 or above, replace every yum below with dnf):

    # add the yum repo:
    wget https://openresty.org/package/centos/openresty.repo
    sudo mv openresty.repo /etc/yum.repos.d/

    # update the yum index:
    sudo yum check-update

Then you can install a package, such as openresty, as follows:

    sudo yum install -y openresty

Getting started: Hello World

http://openresty.org/cn/getting-started.html

1. Create the directories

    mkdir ~/work
    cd ~/work
    mkdir logs/ conf/

This gives the following layout:

    work/conf
    work/logs

2. Create a conf/nginx.conf file that outputs hello world

    worker_processes 1;
    error_log logs/error.log;

    events {
        worker_connections 1024;
    }

    http {
        server {
            listen 8080;

            location / {
                default_type text/html;
                content_by_lua_block {
                    ngx.say("<p>hello, world</p>")
                }
            }
        }
    }

3. Configure environment variables

    PATH=/usr/local/openresty/nginx/sbin:$PATH
    export PATH

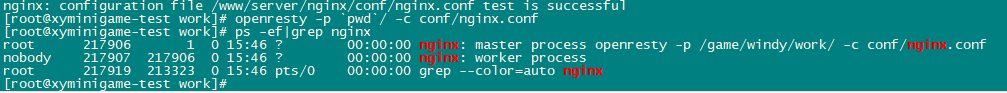

Start nginx

    openresty -p `pwd`/ -c conf/nginx.conf

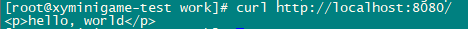

4. Access the service locally over HTTP

    curl http://localhost:8080/

On success, the response is <p>hello, world</p>.

Supplement: openresty command-line options

Help output (openresty -h):

    nginx version: openresty/1.17.8.2
    Usage: nginx [-?hvVtTq] [-s signal] [-c filename] [-p prefix] [-g directives]

    Options:
      -?,-h         : this help
      -v            : show version and exit
      -V            : show version and configure options then exit
      -t            : test configuration and exit
      -T            : test configuration, dump it and exit
      -q            : suppress non-error messages during configuration testing
      -s signal     : send signal to a master process: stop, quit, reopen, reload
      -p prefix     : set prefix path (default: /usr/local/openresty/nginx/)
      -c filename   : set configuration file (default: conf/nginx.conf)
      -g directives : set global directives out of configuration file
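The -t, -s, -p and -c options from the help text cover the usual lifecycle of the Hello World service: test the configuration, start, reload after an edit, and shut down. The sketch below only prints the command lines so they can be reviewed before running them. The helper name `resty_cmd` and the `PREFIX` and `CONF` variables are hypothetical and not part of OpenResty; the default prefix assumes the ~/work directory created earlier.

```shell
#!/bin/sh
# Hypothetical helper, not part of OpenResty: prints the openresty command
# line for a lifecycle action, using only options from the help text.
PREFIX="${PREFIX:-$HOME/work}"   # assumed project directory (the -p prefix)
CONF="conf/nginx.conf"           # config path relative to the prefix (-c)

resty_cmd() {
    case "$1" in
        test)
            # -t: test configuration and exit
            echo "openresty -p $PREFIX/ -c $CONF -t" ;;
        start)
            echo "openresty -p $PREFIX/ -c $CONF" ;;
        stop|quit|reopen|reload)
            # -s signal: send a signal to the running master process
            echo "openresty -p $PREFIX/ -c $CONF -s $1" ;;
        *)
            echo "usage: resty_cmd test|start|stop|quit|reopen|reload" >&2
            return 1 ;;
    esac
}

resty_cmd test
resty_cmd reload
```

To execute a command instead of printing it, the output can be handed to the shell, for example `sh -c "$(resty_cmd reload)"`. Passing the same -p prefix for every action matters because the running instance is located relative to that prefix.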

lua-resty-http

https://github.com/ledgetech/lua-resty-http

lua-resty-http is an HTTP client for OpenResty/ngx_lua. Among other things, it supports uploading data with the POST method.

The basic usage is to invoke a Lua script from the nginx conf file.

Reference article: https://blog.csdn.net/panguangyuu/article/details/88892330

1. Install the resty.http library

Clone the git project:

    git clone https://github.com/ledgetech/lua-resty-http.git

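Copy the files directly into the lualib directory of openresty:

    cd lua-resty-http/lib/resty
    cp ./http_headers.lua /usr/local/openresty/lualib/resty/
    cp ./http.lua /usr/local/openresty/lualib/resty/

If the checkout contains further http_*.lua files (for example http_connect.lua in newer releases), copy them as well.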
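After copying, a quick check can confirm that the files landed where they were copied to. The following is a minimal sketch: the function name `check_resty_http` is hypothetical, the default directory is the target used in the copy commands, and only the two files named there are checked.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when http.lua and http_headers.lua both
# exist in DIR (default: the lualib/resty directory used for the copy).
check_resty_http() {
    dir="${1:-/usr/local/openresty/lualib/resty}"
    for f in http.lua http_headers.lua; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f"
            return 1
        fi
    done
    echo "resty.http files present in $dir"
}
```

Calling `check_resty_http` without arguments checks the default location; passing a directory checks that directory instead, which is handy if OpenResty is installed under a different prefix.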

2. Use resty.http

GET request

    local http = require("resty.http")
    local httpc = http.new()

    -- send a GET request (the default method)
    local res, err = httpc:request_uri("http://localhost/hello", {
        keepalive_timeout = 2000 -- milliseconds
    })

    -- res is nil when the request failed; err then holds the reason
    if not res then
        ngx.log(ngx.ERR, "request error#", err)
        return
    end

    ngx.log(ngx.ERR, "request status#", res.status)
    ngx.say(res.body)

POST request

    local http = require("resty.http")
    local httpc = http.new()

    -- send a form-encoded POST body
    local res, err = httpc:request_uri("http://localhost/hello", {
        method = "POST",
        body = "a=1&b=2",
        headers = {
            ["Content-Type"] = "application/x-www-form-urlencoded",
        },
        keepalive_timeout = 60, -- milliseconds
        keepalive_pool = 10
    })

    -- res is nil when the request failed; err then holds the reason
    if not res then
        ngx.log(ngx.ERR, "request error#", err)
        return
    end

    ngx.log(ngx.ERR, "request status#", res.status)
    ngx.say(res.body)