1. Introduction

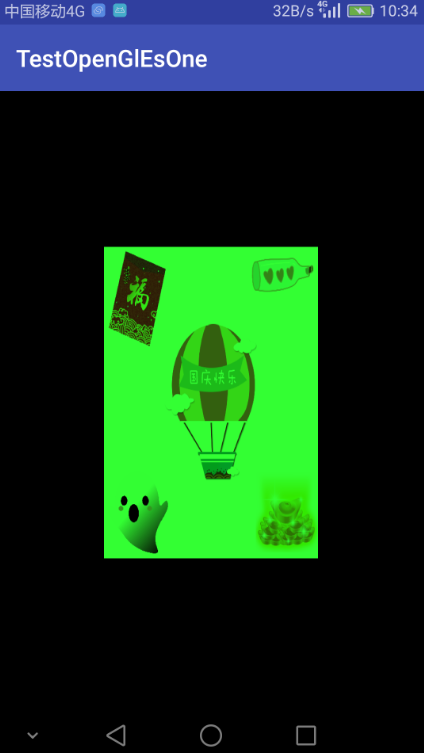

In the previous article, we drew a plane figure. This time, we will map a texture onto the surface of that figure.

Picture preparation: the image's width and height must each be a power of two (2^n), for example 256 × 128.
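A quick way to check the power-of-two rule before uploading a picture is a bit test: a positive power of two has exactly one bit set. The sketch below is a hypothetical helper (`TextureSizeCheck` is not part of the original project). In the renderer you would pass it `bitmap.getWidth()` and `bitmap.getHeight()`. Each dimension is checked on its own, because width and height do not have to be equal.

```java
// Hypothetical helper (not part of the original project): checks whether
// a picture's size satisfies the power-of-two rule before it is uploaded.
final class TextureSizeCheck {

    // A positive power of two has a single bit set, so clearing its
    // lowest set bit with n & (n - 1) leaves zero.
    static boolean isPowerOfTwo(int n) {
        return n > 0 && (n & (n - 1)) == 0;
    }

    // Width and height are checked independently; they need not be equal.
    static boolean isValidTextureSize(int width, int height) {
        return isPowerOfTwo(width) && isPowerOfTwo(height);
    }

    public static void main(String[] args) {
        System.out.println(isValidTextureSize(256, 128)); // true
        System.out.println(isValidTextureSize(300, 256)); // false
    }
}
```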

The image is loaded as a Bitmap. How do we map this image onto the plane we are drawing?

For that we need another float[] array that holds the texture coordinates. Texture coordinates are normalized to the picture: the upper-left corner is (0, 0) and the lower-right corner is (1, 1).
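Because the coordinates are normalized, a pixel position converts to a texture coordinate by dividing by the image size. The helper below is hypothetical and assumes the top-left (0, 0) convention used in this article.

```java
// Hypothetical helper: converts a pixel position in the picture into
// normalized texture coordinates (u, v), where (0, 0) is the top-left
// corner and (1, 1) is the bottom-right corner.
final class PixelToTexCoord {

    static float[] toTexCoord(float x, float y, int width, int height) {
        // u runs left to right, v runs top to bottom
        return new float[]{ x / width, y / height };
    }

    public static void main(String[] args) {
        float[] uv = toTexCoord(128, 64, 256, 256);
        System.out.println(uv[0] + ", " + uv[1]); // 0.5, 0.25
    }
}
```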

As shown in the previous article, the plane is drawn from the index array {0,1,2,3,4,5}, so we need one texture coordinate for each of those vertices, in the same order:

```java
float[] text = new float[]{
    0f, 0f,  0f, 1f,  1f, 1f,   // three vertices of the first triangle
    0f, 0f,  1f, 1f,  1f, 0f    // three vertices of the second triangle
};
```

This maps the whole image onto the plane.
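The numbers in that array follow directly from the vertex positions. For a flat plane lying in the xy plane and facing the viewer, each vertex's (u, v) can be computed from its (x, y) position, with v flipped because v grows downward while y grows upward. This is a minimal sketch under that planar-projection assumption; `PlanarTexCoords.fromVertices` is a hypothetical helper. Fed the `vertex` array from the code section, it reproduces the `text` array exactly.

```java
import java.util.Arrays;

// Hypothetical helper: derives one texture coordinate per vertex from the
// vertex's x/y position, so the whole picture covers the plane.
final class PlanarTexCoords {

    // vertex holds (x, y, z) triples; the result holds one (u, v) pair per vertex.
    static float[] fromVertices(float[] vertex) {
        float minX = Float.MAX_VALUE, maxX = -Float.MAX_VALUE;
        float minY = Float.MAX_VALUE, maxY = -Float.MAX_VALUE;
        for (int i = 0; i < vertex.length; i += 3) {
            minX = Math.min(minX, vertex[i]);
            maxX = Math.max(maxX, vertex[i]);
            minY = Math.min(minY, vertex[i + 1]);
            maxY = Math.max(maxY, vertex[i + 1]);
        }
        float[] uv = new float[vertex.length / 3 * 2];
        for (int i = 0, j = 0; i < vertex.length; i += 3, j += 2) {
            // u grows from left (minX) to right (maxX)
            uv[j] = (vertex[i] - minX) / (maxX - minX);
            // v grows from top (maxY) to bottom (minY), opposite to the y axis
            uv[j + 1] = (maxY - vertex[i + 1]) / (maxY - minY);
        }
        return uv;
    }

    public static void main(String[] args) {
        float[] vertex = {
            -0.5f,  0.5f, 0f,
            -0.5f, -0.5f, 0f,
             0.5f, -0.5f, 0f,
            -0.5f,  0.5f, 0f,
             0.5f, -0.5f, 0f,
             0.5f,  0.5f, 0f
        };
        // prints [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0]
        System.out.println(Arrays.toString(fromVertices(vertex)));
    }
}
```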

2. Code

```java
import android.content.Context;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.opengl.GLSurfaceView;
import android.opengl.GLUtils;

import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;
import java.nio.IntBuffer;

import javax.microedition.khronos.egl.EGLConfig;
import javax.microedition.khronos.opengles.GL10;

// R.drawable.bj refers to the app's own generated R class.
public class OtherShader implements GLSurfaceView.Renderer {

    // Vertex position data
    FloatBuffer vertexBuffer;
    // Texture coordinate data
    FloatBuffer textureBuffer;
    // Vertex indices of the two triangles
    ByteBuffer faceBuffer;

    private float rotate;

    // Name (ID) of the texture used
    int texture;

    Context context;

    float[] vertex = new float[]{
            -0.5f,  0.5f, 0f,
            -0.5f, -0.5f, 0f,
             0.5f, -0.5f, 0f,
            -0.5f,  0.5f, 0f,
             0.5f, -0.5f, 0f,
             0.5f,  0.5f, 0f
    };

    byte[] face = new byte[]{
            0, 1, 2,
            3, 4, 5
    };

    // Texture coordinate data: one (u, v) pair per vertex
    float[] text = {
            0f, 0f,  0f, 1f,  1f, 1f,
            0f, 0f,  1f, 1f,  1f, 0f
    };

    public OtherShader(Context context) {
        this.context = context;
        vertexBuffer = floatArray2Buffer(vertex);
        faceBuffer = ByteBuffer.wrap(face);
        textureBuffer = floatArray2Buffer(text);
    }

    // Converts an int array (for example vertex colors) to the IntBuffer OpenGL ES expects.
    // Not used in this example, because no per-vertex colors are set.
    private IntBuffer intArray2Buffer(int[] rect1color) {
        // Do not use IntBuffer.wrap(rect1color): on Android the buffer must be a direct
        // (native) buffer, so it is created with allocateDirect(), and its byte order
        // must match the platform's, hence order(ByteOrder.nativeOrder()).
        // An int is 4 bytes.
        ByteBuffer bb = ByteBuffer.allocateDirect(rect1color.length * 4);
        bb.order(ByteOrder.nativeOrder());
        IntBuffer intBuffer = bb.asIntBuffer();
        intBuffer.put(rect1color);
        intBuffer.position(0); // Rewind to the first element
        return intBuffer;
    }

    // Converts the vertex position array to the FloatBuffer OpenGL ES expects
    private FloatBuffer floatArray2Buffer(float[] rect1) {
        // Do not use FloatBuffer.wrap(rect1): on Android the buffer must be a direct
        // (native) buffer, so it is created with allocateDirect(), and its byte order
        // must match the platform's, hence order(ByteOrder.nativeOrder()).
        // A float is 4 bytes.
        ByteBuffer bb = ByteBuffer.allocateDirect(rect1.length * 4);
        bb.order(ByteOrder.nativeOrder());
        FloatBuffer floatBuffer = bb.asFloatBuffer();
        floatBuffer.put(rect1);
        floatBuffer.position(0); // Rewind to the first element
        return floatBuffer;
    }

    @Override
    public void onSurfaceCreated(GL10 gl, EGLConfig config) {
        // Disable dithering
        gl.glDisable(GL10.GL_DITHER);
        // Perspective-correction hint: GL_FASTEST favors speed over quality
        gl.glHint(GL10.GL_PERSPECTIVE_CORRECTION_HINT, GL10.GL_FASTEST);
        // Use smooth shading
        gl.glShadeModel(GL10.GL_SMOOTH);
        // Enable the depth test so that Z-axis depth is recorded
        gl.glEnable(GL10.GL_DEPTH_TEST);
        // Pass fragments whose depth is less than or equal to the stored depth
        gl.glDepthFunc(GL10.GL_LEQUAL);
        // ******* Enable 2D textures *******
        gl.glEnable(GL10.GL_TEXTURE_2D);
        // Load the texture
        loadTexture(gl);
    }

    private void loadTexture(GL10 gl) {
        // Decode without density scaling, so the bitmap keeps its power-of-two size
        BitmapFactory.Options options = new BitmapFactory.Options();
        options.inScaled = false;
        Bitmap bitmap = BitmapFactory.decodeResource(context.getResources(), R.drawable.bj, options);
        if (bitmap == null) {
            return; // Decoding failed; nothing to upload
        }
        // Generate one texture name and bind it as the current 2D texture
        int[] textures = new int[1];
        gl.glGenTextures(1, textures, 0);
        texture = textures[0];
        gl.glBindTexture(GL10.GL_TEXTURE_2D, texture);
        // Filtering used when the texture is shrunk (MIN) or enlarged (MAG)
        gl.glTexParameterf(GL10.GL_TEXTURE_2D, GL10.GL_TEXTURE_MIN_FILTER, GL10.GL_NEAREST);
        gl.glTexParameterf(GL10.GL_TEXTURE_2D, GL10.GL_TEXTURE_MAG_FILTER, GL10.GL_LINEAR);
        // Repeat the texture for coordinates outside 0..1
        gl.glTexParameterf(GL10.GL_TEXTURE_2D, GL10.GL_TEXTURE_WRAP_S, GL10.GL_REPEAT);
        gl.glTexParameterf(GL10.GL_TEXTURE_2D, GL10.GL_TEXTURE_WRAP_T, GL10.GL_REPEAT);
        // Upload the bitmap as mipmap level 0 of the bound texture
        GLUtils.texImage2D(GL10.GL_TEXTURE_2D, 0, bitmap, 0);
        // The pixels have been copied to GL, so the Bitmap can be released
        bitmap.recycle();
    }

    @Override
    public void onSurfaceChanged(GL10 gl, int width, int height) {
        // Set the size and position of the viewport
        gl.glViewport(0, 0, width, height);
        // Make the projection matrix stack current
        gl.glMatrixMode(GL10.GL_PROJECTION);
        // Load the identity matrix
        gl.glLoadIdentity();
        // Aspect ratio of the viewport
        float ratio = (float) width / height;
        // While glFrustumf stays commented out, the projection is the identity,
        // so the visible space is -1..1 on x, y and z
        //gl.glFrustumf(-ratio, ratio, -1, 1, 1, 10);
    }

    @Override
    public void onDrawFrame(GL10 gl) {
        // Clear the color buffer and the depth buffer
        gl.glClear(GL10.GL_COLOR_BUFFER_BIT | GL10.GL_DEPTH_BUFFER_BIT);
        // Enable the vertex position array
        gl.glEnableClientState(GL10.GL_VERTEX_ARRAY);
        // Make the model-view matrix stack current
        gl.glMatrixMode(GL10.GL_MODELVIEW);
        // Enable the texture coordinate array
        gl.glEnableClientState(GL10.GL_TEXTURE_COORD_ARRAY);
        // Reset the current model-view matrix
        gl.glLoadIdentity();
        // Use one current color instead of per-vertex colors, so the color array stays disabled.
        // With the default GL_MODULATE texture environment this color is multiplied
        // with the texture color, tinting the picture green.
        gl.glColor4f(0.2f, 1.0f, 0.2f, 0.0f);
        gl.glDisableClientState(GL10.GL_COLOR_ARRAY);
        // Rotate around the y axis (uncomment to animate)
        //gl.glRotatef(rotate, 0f, 1f, 0f);
        // Set the vertex position data: 3 floats per vertex
        gl.glVertexPointer(3, GL10.GL_FLOAT, 0, vertexBuffer);
        // Set the texture coordinate data: 2 floats per vertex
        gl.glTexCoordPointer(2, GL10.GL_FLOAT, 0, textureBuffer);
        // Bind the texture, then draw the plane from the index array
        gl.glBindTexture(GL10.GL_TEXTURE_2D, texture);
        gl.glDrawElements(GL10.GL_TRIANGLES, faceBuffer.remaining(), GL10.GL_UNSIGNED_BYTE, faceBuffer);
        // Block until all submitted GL commands have completed
        gl.glFinish();
        // Disable the vertex position array
        gl.glDisableClientState(GL10.GL_VERTEX_ARRAY);
        // Disable the texture coordinate array
        gl.glDisableClientState(GL10.GL_TEXTURE_COORD_ARRAY);
        // Advance the rotation angle (only has an effect when glRotatef above is enabled)
        rotate += 1;
        if (rotate >= 360) {
            rotate = 0;
        }
    }
}
```
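The comments in floatArray2Buffer() say the buffers must be direct and in native byte order. The same pattern can be tried on a plain JVM without Android. In this sketch `DirectBufferDemo` is a hypothetical name and `toDirectBuffer` mirrors floatArray2Buffer(); it shows that FloatBuffer.wrap() returns a heap (non-direct) buffer, which is why the renderer avoids it for glVertexPointer() and glTexCoordPointer().

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

// Standalone sketch of the floatArray2Buffer() pattern, runnable without Android.
final class DirectBufferDemo {

    static final int BYTES_PER_FLOAT = 4;

    static FloatBuffer toDirectBuffer(float[] data) {
        // Direct memory outside the Java heap, in the platform's byte order
        FloatBuffer fb = ByteBuffer.allocateDirect(data.length * BYTES_PER_FLOAT)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        fb.put(data);
        fb.position(0); // Rewind so GL reads from the first element
        return fb;
    }

    public static void main(String[] args) {
        float[] text = {0f, 0f, 0f, 1f, 1f, 1f, 0f, 0f, 1f, 1f, 1f, 0f};
        FloatBuffer direct = toDirectBuffer(text);
        FloatBuffer wrapped = FloatBuffer.wrap(text);
        System.out.println(direct.isDirect());                         // true
        System.out.println(direct.order() == ByteOrder.nativeOrder()); // true
        System.out.println(direct.remaining());                        // 12
        System.out.println(wrapped.isDirect());                        // false
    }
}
```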