Background: determine whether there is a pig in the vehicle, based on the video returned by the on-board monitoring camera

Solution: run object detection on the returned video. If there is a pig, draw a box around it and output a value that represents the result

day1 2021-10-27

Plan: detect the object and draw a box around it in the image

1. Find positive samples

At first I had no idea what positive and negative samples were, so I just fumbled around

I searched CSDN for a pig dataset and found one with 1447 images plus annotation files. I had never done any CV work before, so this felt awesome. I downloaded it right away.

After downloading, it looks like this. All the images are cleanly cropped, and each one has a matching xml file that describes the pig

(Later I found the xml files useless, because I didn't know how to process xml)
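For what it's worth, xml annotations like these can be read with Python's standard library. The sketch below assumes the files follow the Pascal VOC layout (an `object` element holding `name` and a `bndbox` with `xmin`, `ymin`, `xmax`, `ymax`). The dataset's real layout was never checked, so the tag names and the sample string are assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in Pascal VOC style; the real files may differ.
SAMPLE_XML = """
<annotation>
  <filename>1.jpg</filename>
  <object>
    <name>pig</name>
    <bndbox><xmin>12</xmin><ymin>30</ymin><xmax>212</xmax><ymax>180</ymax></bndbox>
  </object>
</annotation>
"""

def read_boxes(xml_text):
    """Return (filename, [(label, x, y, w, h), ...]) from one annotation."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        xmin, ymin = int(bb.findtext("xmin")), int(bb.findtext("ymin"))
        xmax, ymax = int(bb.findtext("xmax")), int(bb.findtext("ymax"))
        # Convert corner coordinates to x, y, width, height
        boxes.append((obj.findtext("name"), xmin, ymin, xmax - xmin, ymax - ymin))
    return root.findtext("filename"), boxes

print(read_boxes(SAMPLE_XML))
```

The x, y, w, h form is the same order that pos.txt uses in step 6, so boxes read this way could replace the fixed `0 0 100 100` there.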

2. Find negative samples

I searched CSDN for a pile of cat and dog images to use as negative samples. The dataset comes with neg.txt

3. Convert the positive samples to grayscale and shrink them
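If a negative set does not ship with neg.txt, the file is easy to generate: it is just one image path per line. A minimal sketch, assuming the images sit in a folder called neg next to neg.txt; the function name, the extension filter, and the demo file names are made up for illustration.

```python
import os
import tempfile

def write_neg_list(neg_dir, out_file, prefix="neg"):
    """Write one image path per line, e.g. 'neg/cat1.jpg'. Returns the count."""
    names = sorted(n for n in os.listdir(neg_dir)
                   if n.lower().endswith((".jpg", ".jpeg", ".png", ".bmp")))
    with open(out_file, "w") as f:
        for name in names:
            f.write(prefix + "/" + name + "\n")
    return len(names)

# Demo on a throwaway folder with empty placeholder files.
with tempfile.TemporaryDirectory() as tmp:
    neg_dir = os.path.join(tmp, "neg")
    os.mkdir(neg_dir)
    for name in ("cat1.jpg", "dog1.jpg", "notes.txt"):
        open(os.path.join(neg_dir, name), "w").close()
    out_file = os.path.join(tmp, "neg.txt")
    count = write_neg_list(neg_dir, out_file)
    with open(out_file) as f:
        listing = f.read()
    print(count)
    print(listing)
```

The paths are written relative to the folder that holds neg.txt, which matches running the training command from that folder.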

4. Install opencv

pip install opencv_python

5. Shrink the samples and convert them to grayscale

    import cv2

    path = "D:/train/new/pos/"  # write your own image folder here

    # The upper bound is exclusive, so 1448 covers 1.jpg to 1447.jpg
    # (assuming the images are numbered that way)
    for i in range(1, 1448):
        print(path + str(i) + '.jpg')  # path of the current image
        img = cv2.imread(path + str(i) + '.jpg', cv2.IMREAD_GRAYSCALE)
        if img is None:  # skip files that are missing or unreadable
            continue
        resized = cv2.resize(img, (100, 100))  # shrink to 100x100
        cv2.imshow("img", resized)
        cv2.waitKey(20)
        cv2.imwrite('D:/train/new/pos/pos/' + str(i) + '.jpg', resized)  # where the processed image is saved

6. Describe the positive samples by generating a pos.txt file

    import os

    def create_pos_n_neg():
        # Opened once in 'w' mode so that rerunning does not append duplicate lines
        with open('D:/train/test2/pos.txt', 'w') as f:  # the file to write
            for img in os.listdir('D:/train/test2/pos'):  # folder of positive images
                # Description: 1 object, box at (0, 0), 100 wide and 100 high
                line = 'pos' + '/' + img + ' 1 0 0 100 100\n'
                print(line)
                f.write(line)

    if __name__ == '__main__':
        create_pos_n_neg()

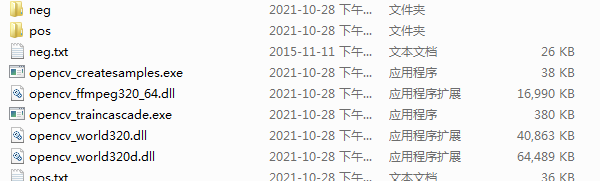
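Each pos.txt line has the shape `path count x y w h`, with one `x y w h` group per object in the image. The helpers below are a small sketch for building and checking such lines; the function names are made up here and are not part of OpenCV.

```python
def info_line(path, boxes):
    """Format one description line: path, object count, then x y w h per box."""
    parts = [path, str(len(boxes))]
    for x, y, w, h in boxes:
        parts += [str(x), str(y), str(w), str(h)]
    return " ".join(parts)

def parse_info_line(line):
    """Inverse of info_line; raises ValueError if count and boxes disagree."""
    fields = line.split()
    path, count = fields[0], int(fields[1])
    nums = [int(v) for v in fields[2:]]
    if len(nums) != 4 * count:
        raise ValueError("expected %d numbers, got %d" % (4 * count, len(nums)))
    boxes = [tuple(nums[i:i + 4]) for i in range(0, len(nums), 4)]
    return path, boxes

line = info_line("pos/1.jpg", [(0, 0, 100, 100)])
print(line)
print(parse_info_line(line))
```

Because the fields are split on whitespace, this sketch does not handle image paths that contain spaces.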

7. The file layout should be as follows: the neg folder holds the negative sample images, the pos folder holds the positive sample images, neg.txt describes the negative samples, and pos.txt describes the positive samples

The five OpenCV files that are needed can be downloaded here: https://pan.baidu.com/s/14plhrufj2hQR3Es3aYqoIg

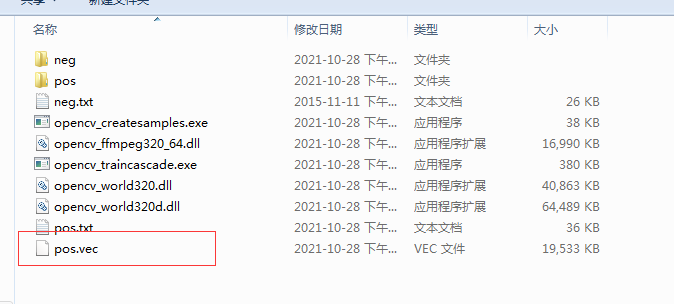

8. Use opencv_createsamples to generate pos.vec

Open cmd, switch to the folder where all of the above is stored, and enter the command below

-vec is the name of the vec file to produce, -info is the description file that tells it where the samples come from, -num is the number of samples, and -w and -h are the width and height

opencv_createsamples.exe -vec pos.vec -info pos.txt -num 1447 -w 100 -h 100

9. This little thing appears

10. Train the classifier with opencv_traincascade. First, create a folder named xml

As above, open cmd and enter the following command

-numPos is the number of positive samples, -numNeg is the number of negative samples, -vec is the positive sample vec file, -bg is the negative sample description file, -data xml is the folder that receives the generated xml files, and -w and -h are the width and height (they must match the values used for pos.vec). If LBP is used, the model is cut to 24*24. -numStages is the number of stages, which can be chosen freely

opencv_traincascade.exe -data xml -vec pos.vec -bg neg.txt -numPos 1447 -numNeg 2000 -numStages 20 -w 100 -h 100 -minHitRate 0.9999 -maxFalseAlarmRate 0.5 -mode ALL
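Since -w and -h have to agree between the two commands, one way to avoid typing them twice is to build both command lines from the same variables. This is only a sketch: the helper names are made up, and the flags and values are simply the ones used in steps 8 and 10.

```python
def createsamples_cmd(vec, info, num, w, h):
    """Argument list for step 8."""
    return ["opencv_createsamples.exe", "-vec", vec, "-info", info,
            "-num", str(num), "-w", str(w), "-h", str(h)]

def traincascade_cmd(data, vec, bg, num_pos, num_neg, num_stages, w, h):
    """Argument list for step 10, with the same fixed rates as above."""
    return ["opencv_traincascade.exe", "-data", data, "-vec", vec, "-bg", bg,
            "-numPos", str(num_pos), "-numNeg", str(num_neg),
            "-numStages", str(num_stages), "-w", str(w), "-h", str(h),
            "-minHitRate", "0.9999", "-maxFalseAlarmRate", "0.5", "-mode", "ALL"]

W, H = 100, 100  # shared by both commands so they cannot drift apart
step8 = createsamples_cmd("pos.vec", "pos.txt", 1447, W, H)
step10 = traincascade_cmd("xml", "pos.vec", "neg.txt", 1447, 2000, 20, W, H)
print(" ".join(step8))
print(" ".join(step10))
```

To actually run them from Python, each list could be passed to `subprocess.run(..., check=True)` from the folder that holds the exe files.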

Pitfalls I ran into here:

1. I typed numPos without the capital P (the flag is case-sensitive, so it must be -numPos)

2. The samples were too large to fit in memory, so it crashed as soon as training started (fix: shrink the images, for example to 20*20)

3. Too many stages also ran out of memory
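A rough way to see why the sample size matters so much: the number of candidate Haar-like features grows very quickly with the window size. The sketch below counts the placements of the five classic Viola-Jones shapes in a window. That is an assumption made for illustration, since the feature set opencv_traincascade uses with -mode ALL is larger, so treat the numbers as a lower bound on the trend rather than OpenCV's real counts.

```python
def count_haar_features(width, height):
    """Count placements of the five classic Haar-like shapes in a window."""
    # Base shapes as (width, height) of the smallest version of each feature
    base_shapes = [(2, 1), (1, 2), (3, 1), (1, 3), (2, 2)]
    total = 0
    for bw, bh in base_shapes:
        # Every integer multiple of the base shape that fits in the window...
        for w in range(bw, width + 1, bw):
            for h in range(bh, height + 1, bh):
                # ...can be placed at this many positions
                total += (width - w + 1) * (height - h + 1)
    return total

for size in (20, 24, 100):
    print(size, count_haar_features(size, size))
```

By this count a 100*100 window has several hundred times as many candidate features as a 20*20 one, which fits the crash described in pitfall 2.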

11. Training completed

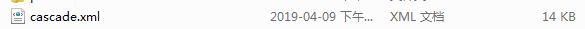

If this appears in the xml folder, the training worked

12. The full code: load the cascade and use it to detect the pig in the video

    from PIL import Image, ImageDraw, ImageFont
    import cv2
    import numpy as np

    # Drawing Chinese text directly with OpenCV comes out garbled, so first convert
    # the image to PIL format, write the text with PIL, then convert back to OpenCV format
    def change_cv2_draw(image, strs, local, sizes, colour):
        cv2img = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        pilimg = Image.fromarray(cv2img)
        draw = ImageDraw.Draw(pilimg)
        font = ImageFont.truetype("SIMYOU.TTF", sizes, encoding="utf-8")  # SIMYOU.TTF is a font file
        # PIL expects RGB while OpenCV colours are BGR, so reverse the tuple
        draw.text(local, strs, colour[::-1], font=font)
        image = cv2.cvtColor(np.array(pilimg), cv2.COLOR_RGB2BGR)
        return image

    # src is the input image
    # classifier is the cascade classifier for the object to detect
    # strs is the text label for the detected object
    # colors is the color of the box
    # minSize is the minimum size of a detected object; anything smaller is not detected
    # maxSize is the maximum size of a detected object; anything larger is not detected
    def myClassifier(src, classifier, strs, colors, minSize, maxSize):
        gray = cv2.cvtColor(src, cv2.COLOR_BGR2GRAY)
        # The parameters of detectMultiScale() are explained in detail in another blog: https://blog.csdn.net/GottaYiWanLiu/article/details/90442274
        obj = classifier.detectMultiScale(gray, scaleFactor=1.15, minNeighbors=5, minSize=minSize, maxSize=maxSize)
        for (x, y, w, h) in obj:
            # Draw the box: (x, y) is the top-left corner, (w, h) is the width and height
            cv2.rectangle(src, (x, y), (x + w, y + h), colors, 2)
            src = change_cv2_draw(src, strs, (x, y - 20), 20, colors)  # draw the text label
        return src
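To get a feel for how scaleFactor, minSize and maxSize interact, the sketch below lists the window sizes a detector would try if it simply grew the training window by scaleFactor at each step and skipped sizes outside the limits. This is a simplified model written for illustration, not OpenCV's actual implementation, and the function name is made up.

```python
def candidate_window_sizes(train_size, scale_factor, min_size, max_size):
    """Window sizes from train_size upward, kept if within [min_size, max_size]."""
    sizes = []
    factor = 1.0
    while True:
        size = int(round(train_size * factor))
        if size > max_size:
            break
        if size >= min_size:
            sizes.append(size)
        factor *= scale_factor  # each step enlarges the window by scaleFactor
    return sizes

# Values from the code above: 100x100 training window, scaleFactor=1.15,
# minSize=(100, 100), maxSize=(800, 800)
sizes = candidate_window_sizes(100, 1.15, 100, 800)
print(len(sizes), sizes)
```

In this model a smaller scaleFactor gives more sizes (slower, finer search), and a pig that appears smaller than 100 pixels in the frame is never tested at all.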

    # cap = cv2.VideoCapture(0)
    cap = cv2.VideoCapture("C:/train/pigtest.mp4")  # the video file to check
    pig = cv2.CascadeClassifier("C:/train/pig/xml/cascade.xml")  # the trained cascade

    while True:
        ok, frame = cap.read()
        if not ok:  # end of the video or a read error
            break
        # "pig" here is the label shown on the box
        frame = myClassifier(frame, pig, "pig", (0, 0, 255), (100, 100), (800, 800))
        cv2.imshow("frame", frame)
        cv2.waitKey(30)

    cap.release()
    cv2.destroyAllWindows()
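The background asks for a per-video answer plus a value, while the loop above only draws boxes frame by frame. One possible way to get there, which is not part of the original code, is to record how many boxes each frame produced and report the fraction of frames with at least one. The function name, the 0.2 threshold, and the sample counts below are all made up.

```python
def pig_present(per_frame_counts, min_fraction=0.2):
    """Return (decision, fraction of frames with at least one detection)."""
    if not per_frame_counts:
        return False, 0.0
    hits = sum(1 for n in per_frame_counts if n > 0)
    fraction = hits / len(per_frame_counts)
    return fraction >= min_fraction, fraction

# Made-up numbers of detected boxes for eight consecutive frames
counts = [0, 1, 1, 0, 2, 0, 0, 1]
print(pig_present(counts))
```

In the real loop the counts would come from the length of the detectMultiScale result for each frame. Requiring a minimum fraction rather than a single hit makes the decision less sensitive to one-off false detections.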

13. Check the result

Give me my money back! Garbage, garbage, garbage, garbage