Contents

Principle and application process

Creating the matcher: BFMatcher() and its parameters

Two matching methods of the BFMatcher object: bf.match() and bf.knnMatch()

The functions drawMatches() and drawMatchesKnn() for drawing matches

An example with SIFT and ORB (different matching methods)

FLANN-based nearest-neighbor matcher

Creating the matcher: FlannBasedMatcher() and its two input parameters

The first specifies the nearest neighbor algorithm to use

In previous articles, we have discussed many feature detection algorithms. This time, we will discuss how to use relevant methods for feature matching.

Brute force matcher:

Principle and application process

The workflow is as follows: detect keypoints in both images and compute their descriptors with a feature algorithm such as ORB, SIFT or SURF. The brute-force matcher then takes each descriptor from the first image, computes its distance to every descriptor in the second image, and returns the closest one.
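The principle can be sketched in a few lines of plain Python. This is only an illustration of the idea, not how OpenCV implements it: the function names `l2` and `brute_force_match` and the toy two-dimensional descriptors are assumptions made for the example.

```python
import math

def l2(a, b):
    """Euclidean distance between two equal-length descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def brute_force_match(query, train):
    """For every query descriptor, compare it with ALL train descriptors
    and keep (query_idx, train_idx, distance) of the nearest one."""
    matches = []
    for qi, q in enumerate(query):
        candidates = ((ti, l2(q, t)) for ti, t in enumerate(train))
        ti, dist = min(candidates, key=lambda pair: pair[1])
        matches.append((qi, ti, dist))
    return matches

# Toy descriptors (real SIFT descriptors have 128 dimensions)
query = [[0.0, 0.0], [5.0, 5.0]]
train = [[5.0, 4.0], [0.0, 1.0], [9.0, 9.0]]
print(brute_force_match(query, train))
```

Every query descriptor is compared against every train descriptor, so the cost grows with the product of the two set sizes. This is why the approach is called brute force.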

Related functions:

Creating the matcher: BFMatcher() and its parameters

BFMatcher() creates a BFMatcher object with two optional parameters:

- normType

The normType parameter specifies the distance measure to use. The default is cv.NORM_L2, which suits SIFT and SURF (cv.NORM_L1 also works). For algorithms that produce binary descriptors, such as ORB, BRIEF and BRISK, use cv.NORM_HAMMING, which measures the Hamming distance between two descriptors. If ORB is created with WTA_K == 3 or 4, normType should be set to cv.NORM_HAMMING2.

- crossCheck

crossCheck is a Boolean that defaults to False. If it is set to True, matching becomes stricter: a match (i, j) is returned only when the i-th descriptor in set A has the j-th descriptor in set B as its closest match, and the j-th descriptor in B also has the i-th descriptor in A as its closest match (no other descriptor in A is closer to j). In other words, the two features must match each other. This gives consistent results and is a good alternative to the ratio test proposed by D. Lowe in the SIFT paper.
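Both parameters can be illustrated together with a small pure-Python sketch: Hamming distance as the measure for binary descriptors, and a mutual nearest-neighbor check in the spirit of crossCheck=True. The helper names (`hamming`, `nearest`, `cross_check_matches`) and the one-byte descriptors are assumptions made for the example, not OpenCV API.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))

def nearest(desc, others):
    """Index of the descriptor in `others` with the smallest Hamming distance to `desc`."""
    return min(range(len(others)), key=lambda j: hamming(desc, others[j]))

def cross_check_matches(a, b):
    """Keep (i, j) only if b[j] is nearest to a[i] AND a[i] is nearest to b[j]."""
    a_to_b = [nearest(d, b) for d in a]
    b_to_a = [nearest(d, a) for d in b]
    return [(i, j) for i, j in enumerate(a_to_b) if b_to_a[j] == i]

# Toy 1-byte descriptors (real ORB descriptors are 32 bytes long)
A = [bytes([0b11110000]), bytes([0b11100000]), bytes([0b00001111])]
B = [bytes([0b11110001]), bytes([0b00000111])]

print(hamming(A[0], B[0]))        # the two bytes differ in one bit
print(cross_check_matches(A, B))  # A[1] also prefers B[0], but B[0] prefers A[0]
```

In this toy data, A[0] and A[1] both pick B[0] as their nearest neighbor, but B[0] picks only A[0], so the pair (1, 0) is discarded. That is the kind of one-sided match the cross check is meant to remove.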

Two matching methods of the BFMatcher object: bf.match() and bf.knnMatch()

bf.match() returns the single best match for each descriptor, while bf.knnMatch() returns the k best matches for each descriptor, sorted by ascending distance, where k is set by the user.
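The difference between the two methods can be sketched without OpenCV. The function `knn_match` below is a hypothetical stand-in that uses L1 distance for brevity; with k=1 it behaves like a plain best-match search, with k=2 it returns the pair of candidates that a ratio test needs.

```python
def knn_match(query, train, k):
    """For each query descriptor, return its k nearest train descriptors
    as (train_idx, distance) pairs, nearest first (L1 distance)."""
    result = []
    for q in query:
        dists = [(ti, sum(abs(x - y) for x, y in zip(q, t)))
                 for ti, t in enumerate(train)]
        dists.sort(key=lambda pair: pair[1])  # ascending distance
        result.append(dists[:k])
    return result

query = [[1, 1]]
train = [[4, 4], [1, 2], [2, 2]]
print(knn_match(query, train, k=1))  # best match only
print(knn_match(query, train, k=2))  # best and second-best match
```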

The functions drawMatches() and drawMatchesKnn() for drawing matches

drawMatches() places the two images side by side and draws a straight line from each keypoint in the first (query) image to its best match in the second (train) image.

drawMatchesKnn() is used when the matches come from bf.knnMatch(): it draws a line from each keypoint to each of its k best matches. If k equals 2, two match lines are drawn for each keypoint.

An example with SIFT and ORB (different matching methods)

    import cv2 as cv

    # Load both images as grayscale (flag 0)
    img1 = cv.imread('img/box.png', 0)
    img2 = cv.imread('img/box_in_scene.png', 0)

    def orb_bf_demo():
        # Create the ORB object
        orb = cv.ORB_create()
        kp1, des1 = orb.detectAndCompute(img1, None)
        kp2, des2 = orb.detectAndCompute(img2, None)
        # Create the BFMatcher; ORB descriptors are binary, so use Hamming distance
        bf = cv.BFMatcher(cv.NORM_HAMMING, crossCheck=True)
        matches = bf.match(des1, des2)
        # Sort the matches by distance in ascending order (best matches first)
        matches = sorted(matches, key=lambda x: x.distance)
        # Draw the 10 best matches; flags=2 skips keypoints that have no match
        img3 = cv.drawMatches(img1, kp1, img2, kp2, matches[:10], None, flags=2)
        cv.namedWindow('orb_img', cv.WINDOW_AUTOSIZE)
        cv.imshow('orb_img', img3)

    def sift_bf_demo():
        # Create the SIFT object (OpenCV >= 4.4; older contrib builds use cv.xfeatures2d.SIFT_create())
        sift = cv.SIFT_create()
        kp1, des1 = sift.detectAndCompute(img1, None)
        kp2, des2 = sift.detectAndCompute(img2, None)
        # Use the default parameters: cv.NORM_L2, crossCheck=False
        bf = cv.BFMatcher()
        matches = bf.knnMatch(des1, des2, k=2)
        # Ratio test: for each descriptor A, take its closest match B and second-closest match C;
        # accept B only if dist(A, B) / dist(A, C) is below the threshold (0.75).
        # The match is assumed to be one-to-one, so a true match should have a distance near 0
        # and be clearly better than the runner-up.
        good = []
        for m, n in matches:
            if m.distance < 0.75 * n.distance:
                good.append([m])
        img3 = cv.drawMatchesKnn(img1, kp1, img2, kp2, good, None, flags=2)
        cv.namedWindow('sift_img', cv.WINDOW_AUTOSIZE)
        cv.imshow('sift_img', img3)

    orb_bf_demo()
    sift_bf_demo()
    cv.waitKey(0)
    cv.destroyAllWindows()

Result:

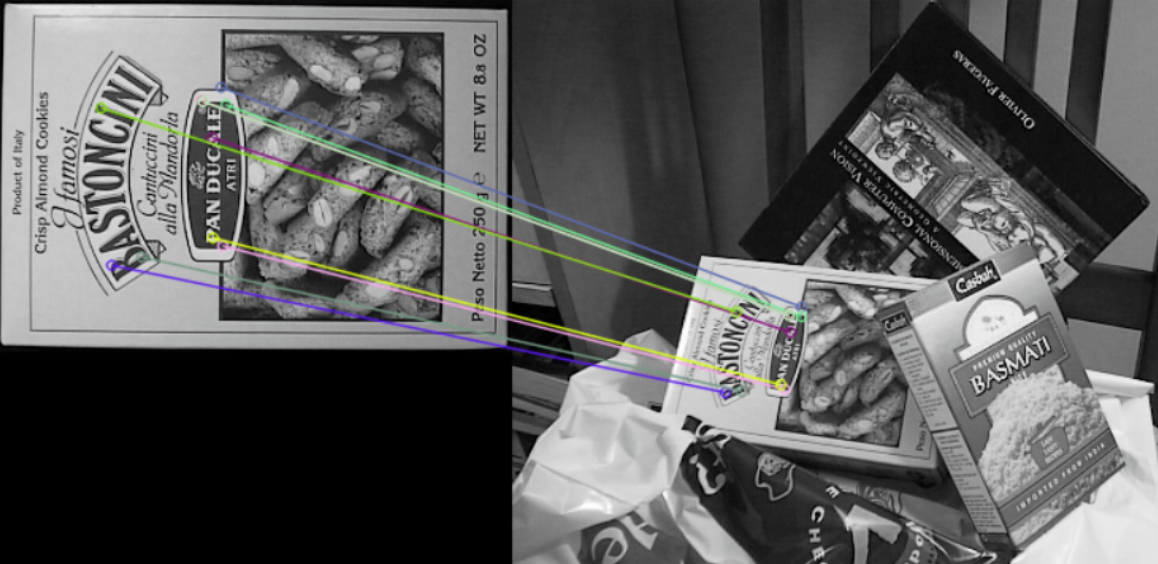

Effect of ORB

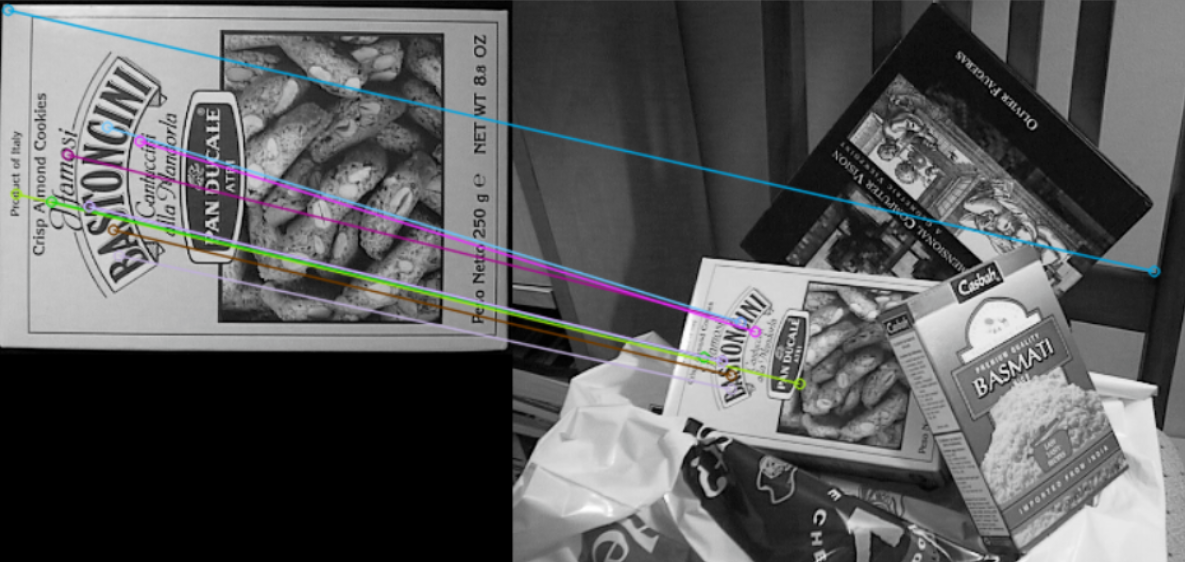

SIFT effect

FLANN-based nearest-neighbor matcher

Advantages:

FLANN stands for Fast Library for Approximate Nearest Neighbors. It contains a collection of algorithms optimized for fast nearest-neighbor search in large data sets and for high-dimensional features. On large data sets it is faster than BFMatcher.

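FLANN matching is often combined with homography estimation. A homography is the projective transformation that relates two views of the same planar object; once it has been estimated from the matched points, the object can still be located with good accuracy after perspective distortion, and the effects of rotation and scaling are largely avoided.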

Creating the matcher: FlannBasedMatcher() and its two input parameters

To create a FLANN-based matcher with FlannBasedMatcher(), we need to pass two parameters, each of them a dictionary.

The first specifies the nearest neighbor algorithm to use

Three algorithms are available: randomized k-d trees, the priority-search k-means tree and the hierarchical clustering tree.

For the SIFT and SURF feature description algorithms, the first parameter can be prepared as follows:

    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)

For ORB, the first parameter can be prepared as follows:

    index_params = dict(algorithm=FLANN_INDEX_LSH, table_number=6, key_size=12, multi_probe_level=1)

The second specifies the number of times the trees in the index should be traversed recursively. Higher values lead to higher accuracy, but they also take more time. To change the value, pass it as checks:

    search_params = dict(checks=100)
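The choice between the two index configurations can be wrapped in a small helper. This is a sketch under stated assumptions: `flann_params` is a hypothetical function, not part of OpenCV, and the constants are defined by hand with the values from FLANN's algorithm enum (1 for the k-d tree index, 6 for LSH).

```python
# Values from FLANN's algorithm enum, defined by hand for this sketch
FLANN_INDEX_KDTREE = 1
FLANN_INDEX_LSH = 6

def flann_params(binary_descriptors, checks=50):
    """Hypothetical helper: return (index_params, search_params).

    binary_descriptors=True  -> LSH index (e.g. ORB)
    binary_descriptors=False -> k-d tree index (e.g. SIFT, SURF)
    """
    if binary_descriptors:
        index_params = dict(algorithm=FLANN_INDEX_LSH,
                            table_number=6, key_size=12, multi_probe_level=1)
    else:
        index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict(checks=checks)
    return index_params, search_params

index_params, search_params = flann_params(binary_descriptors=False, checks=100)
print(index_params, search_params)
```

The two returned dictionaries are what would be passed to FlannBasedMatcher(index_params, search_params).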

Putting it all together:

    import cv2
    from matplotlib import pyplot as plt

    img1 = cv2.imread('box.png', 0)           # queryImage
    img2 = cv2.imread('box_in_scene.png', 0)  # trainImage

    # Initiate SIFT detector (OpenCV >= 4.4)
    sift = cv2.SIFT_create()

    # Find the keypoints and descriptors with SIFT
    kp1, des1 = sift.detectAndCompute(img1, None)
    kp2, des2 = sift.detectAndCompute(img2, None)

    # FLANN parameters (1 selects the k-d tree index; 0 would select a linear search)
    FLANN_INDEX_KDTREE = 1
    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict(checks=50)  # or pass an empty dictionary

    flann = cv2.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(des1, des2, k=2)

    # Only the good matches should be drawn, so create a mask
    matchesMask = [[0, 0] for _ in range(len(matches))]

    # Ratio test as per Lowe's paper
    for i, (m, n) in enumerate(matches):
        if m.distance < 0.7 * n.distance:
            matchesMask[i] = [1, 0]

    draw_params = dict(matchColor=(0, 255, 0),
                       singlePointColor=(255, 0, 0),
                       matchesMask=matchesMask,
                       flags=0)

    img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, matches, None, **draw_params)

    plt.imshow(img3)
    plt.show()
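The ratio test appears in both the brute-force and the FLANN example, so it can be factored into a small function. The sketch below works on plain distance tuples instead of DMatch objects, so it runs without OpenCV. The name `ratio_test` and the guard for entries with fewer than two neighbors are assumptions added for the example; with real knnMatch() output the distances would be read from m.distance and n.distance.

```python
def ratio_test(knn_distances, ratio=0.75):
    """Return the indices of queries whose best distance is smaller than
    ratio * second-best distance (Lowe's ratio test)."""
    good = []
    for i, pair in enumerate(knn_distances):
        if len(pair) < 2:
            continue  # fewer than two neighbors: the test cannot be applied
        best, second = pair[0], pair[1]
        if best < ratio * second:
            good.append(i)
    return good

# (best, second-best) distances for four hypothetical keypoints
knn_distances = [(10.0, 40.0), (30.0, 32.0), (5.0,), (7.0, 10.0)]
print(ratio_test(knn_distances))             # threshold 0.75
print(ratio_test(knn_distances, ratio=0.7))  # stricter threshold
```

A lower ratio keeps fewer but more distinctive matches. The last keypoint passes at 0.75 and is rejected at 0.7, which is the difference between the thresholds used in the two examples above.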