This post records some problems I ran into while learning about the camera response function (CRF).

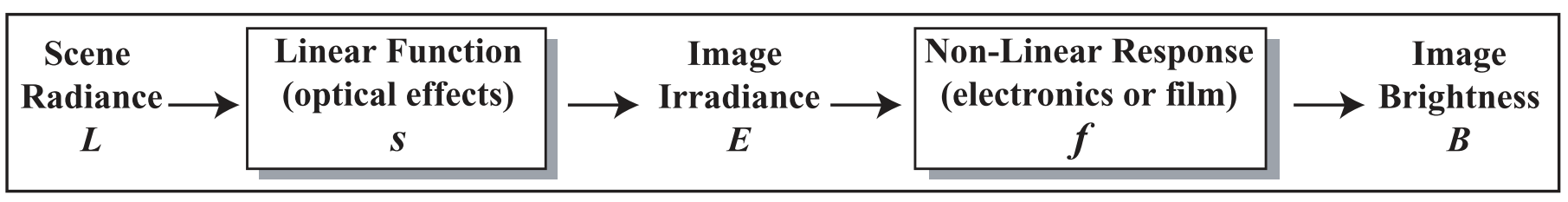

CRF model

$B = f(V(t \cdot L))$, where $B$ is the gray value (brightness) recorded by the camera, $L$ is the scene radiance, $t$ is the exposure time, $V$ is the lens term (e.g. vignetting), and $f$ is the camera response function (CRF).
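To make the model concrete, here is a minimal C++ sketch of $B = f(V(t \cdot L))$ for a single pixel. The gamma-curve CRF `gammaCRF`, the radial falloff `vignette` with coefficient `k`, and all numeric defaults are hypothetical choices for illustration, not calibrated parameters of any real camera.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical CRF f: gamma curve from normalized exposure to an 8-bit gray
// value; exposures above 1 saturate at 255.
double gammaCRF(double exposure, double gamma = 2.2) {
    const double x = std::min(std::max(exposure, 0.0), 1.0);
    return 255.0 * std::pow(x, 1.0 / gamma);
}

// Hypothetical lens term V: radial attenuation (vignetting). r is the
// normalized distance from the image center (0 = center, 1 = corner).
double vignette(double exposure, double r, double k = 0.3) {
    return exposure * (1.0 - k * r * r);
}

// Image formation B = f(V(t * L)) for one pixel at radius r.
double pixelValue(double radiance, double t, double r) {
    return gammaCRF(vignette(t * radiance, r));
}
```

With these choices the same radiance gives a darker value in the corner than in the center, and anything with $t \cdot L \ge 1$ at the center saturates at 255.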

Some papers assume the lens term $V$ is linear and ignore it, while others model its attenuation, i.e. the image gets darker toward the edges. However, I have not seen both estimated at the same time. I suspect that estimating two nonlinear functions jointly may be ambiguous, meaning the solution is not unique. Comments from readers on this are welcome.
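One simple instance of such an ambiguity can be checked numerically. If $V$ is modeled as a per-pixel multiplicative gain (vignetting), then scaling $L$ by $\alpha$ and the gain by $1/\alpha$ leaves every pixel value unchanged, so radiance and attenuation can only be recovered up to a common factor unless an extra constraint is added (for example, fixing the gain at the image center to 1). The gamma CRF and the function names below are hypothetical.

```cpp
#include <cmath>

// Hypothetical CRF: gamma curve on normalized exposure (no clipping here).
double crf(double x) { return 255.0 * std::pow(x, 1.0 / 2.2); }

// Model with a multiplicative lens gain: B = f(gain * t * L).
double observe(double radiance, double gain, double t) {
    return crf(gain * t * radiance);
}

// True if two (radiance, gain) explanations produce the same pixel value.
bool sameObservation(double L1, double g1, double L2, double g2, double t) {
    return std::fabs(observe(L1, g1, t) - observe(L2, g2, t)) < 1e-9;
}
```

For example, $(L, g) = (0.1, 0.8)$ and $(0.4, 0.2)$ give the same pixel value at any exposure time, so the image alone cannot tell them apart.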

Control camera exposure

First, the exposure time $t$ must be controlled. Because I use a USB camera through V4L2, I ran into some problems while adjusting it; the details are recorded in a separate post: V4L camera usage record.
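For the exposure bookkeeping, note that the V4L2 control `V4L2_CID_EXPOSURE_ABSOLUTE` is specified in units of 100 µs (1 = 1/10000 s), and the exposure is limited by the frame interval. The helper below is a minimal sketch assuming the driver follows that specification; `minVal`/`maxVal` stand for the range the driver reports and are hypothetical arguments. Some UVC cameras accept only a limited range, and OpenCV's `CAP_PROP_EXPOSURE` may use different, backend-specific units, so reading back the value actually applied is a good idea.

```cpp
#include <cmath>

// V4L2_CID_EXPOSURE_ABSOLUTE is specified in units of 100 microseconds.
constexpr double kV4l2ExposureUnitSec = 100e-6;

// Convert an exposure time in seconds to the integer control value,
// clamped to the driver's reported range [minVal, maxVal].
int secondsToV4l2Exposure(double seconds, int minVal, int maxVal) {
    const int v = static_cast<int>(std::lround(seconds / kV4l2ExposureUnitSec));
    return v < minVal ? minVal : (v > maxVal ? maxVal : v);
}

// Convert a control value back to seconds, e.g. to build the exposure-time
// list from the values the driver actually accepted.
double v4l2ExposureToSeconds(int value) {
    return value * kV4l2ExposureUnitSec;
}
```

For instance, 1/30 s becomes 333, and a request of 15 s is clamped to `maxVal`; in that case the real exposure no longer matches the nominal one.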

Using OpenCV to obtain an HDR image

First, I learned how to merge several differently exposed images into one HDR image. Main reference: the LearnOpenCV website.

Pseudo code:

```cpp
#include <opencv2/opencv.hpp>
#include <vector>
using namespace cv;
using namespace std;

// load images & exposure times: one 8-bit BGR frame (CV_8UC3) per exposure
vector<Mat> images;
images.push_back(src);  // repeat for every captured frame
const vector<float> times = {1 / 30.0f, 0.25f, 2.5f, 15.0f};  // seconds, same order as images

// Calculate CRF
Mat responseDebevec;  // the response is a 256x1 CV_32FC3 matrix (one curve per channel)
Ptr<CalibrateDebevec> calibrateDebevec = createCalibrateDebevec();
calibrateDebevec->process(images, responseDebevec, times);

// HDR is calculated by the Debevec method
Mat hdr;  // CV_32FC3 radiance map
Ptr<MergeDebevec> mergeDebevec = createMergeDebevec();
mergeDebevec->process(images, hdr, times, responseDebevec);

// map to LDR for drawing/showing: the Debevec result needs a tone-mapping step
Mat ldr;
Ptr<Tonemap> tonemap = createTonemap(2.2f);  // gamma = 2.2
tonemap->process(hdr, ldr);

// Fuse with the Mertens method: no exposure times or CRF needed,
// and the result is a displayable image rather than a radiance map
Ptr<MergeMertens> mergeMertens = createMergeMertens();
Mat mm_img;
mergeMertens->process(images, mm_img);
```

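Note: the exposure times must be `float` (`CV_32FC1` if passed as a `Mat`), and the outputs are floating point (the response and the HDR image are `CV_32FC3`); only the input images are 8-bit (`CV_8UC3`). Getting these types right took me half a day.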
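To see what the merge step does with the response, here is a minimal per-pixel sketch of the Debevec and Malik merge formula $\ln E = \sum_j w(Z_j)\,(g(Z_j) - \ln t_j) / \sum_j w(Z_j)$, where $g = \ln f^{-1}$ is the log of the response curve and $w$ is a triangle ("hat") weight that trusts mid-range pixels most. It is written from the published formula, not copied from OpenCV, so details such as the exact weights or the handling of fully saturated pixels may differ from `MergeDebevec`; the function names are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Triangle weight from the Debevec and Malik paper: 0 at 0 and 255, largest at mid-gray.
double hatWeight(int z) {
    return z <= 127 ? z : 255 - z;
}

// Merge one pixel seen in several exposures.
// g[z] : log inverse response ln f^{-1}(z), 256 entries
// z[j] : gray value of this pixel in image j
// t[j] : exposure time of image j in seconds
// Returns ln E, or NaN if every sample is fully under- or over-exposed.
double mergeLogIrradiance(const std::vector<double>& g,
                          const std::vector<int>& z,
                          const std::vector<double>& t) {
    double num = 0.0, den = 0.0;
    for (std::size_t j = 0; j < z.size(); ++j) {
        const double w = hatWeight(z[j]);
        num += w * (g[z[j]] - std::log(t[j]));
        den += w;
    }
    return den > 0.0 ? num / den : std::nan("");
}
```

Each exposure gives its own estimate $g(Z_j) - \ln t_j$ of $\ln E$; the weights make saturated or very dark samples count little or not at all.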

Calculate CRF

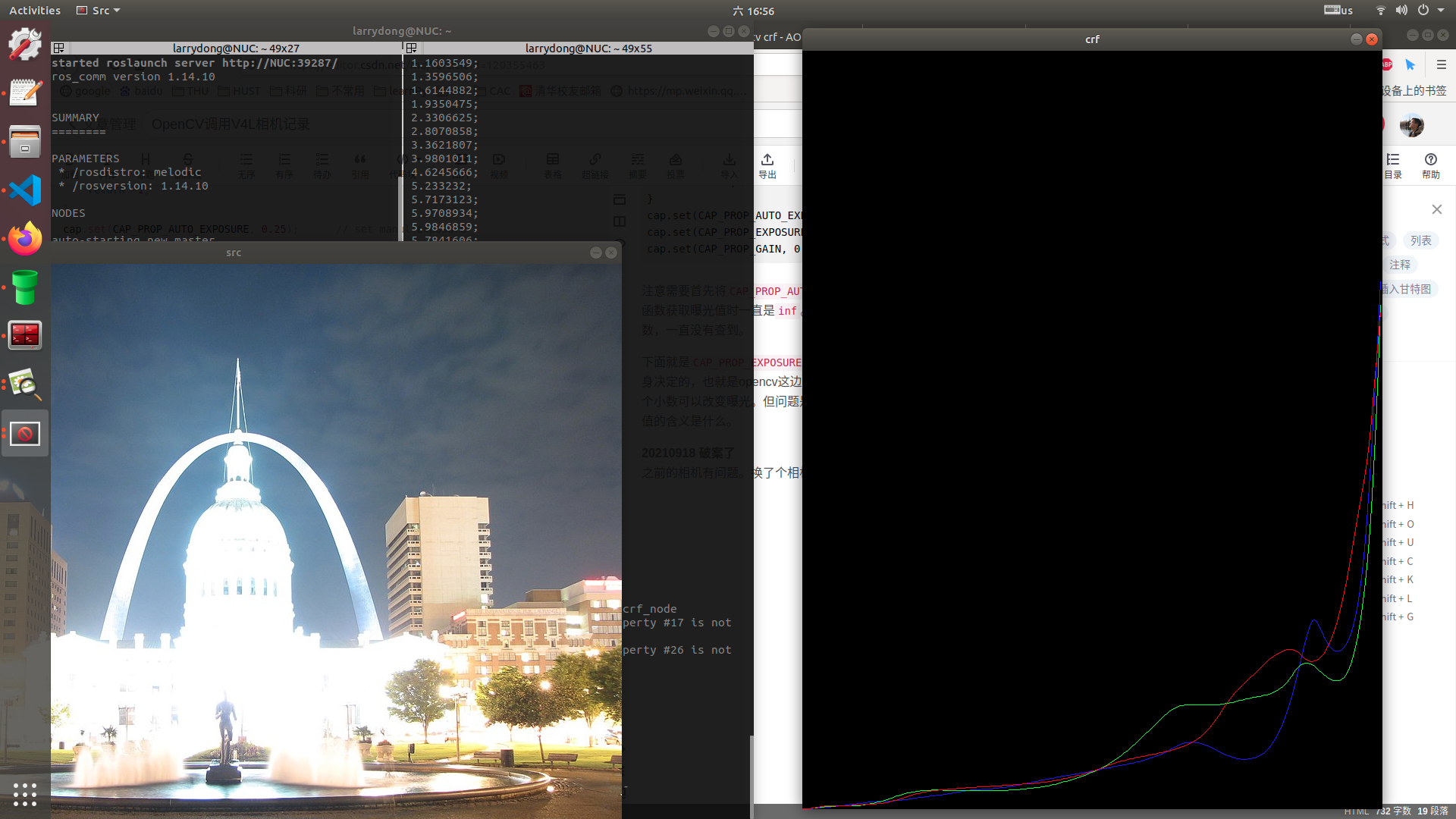

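The `responseDebevec` shown above is the CRF. (Strictly speaking, it maps each gray value from 0 to 255 to a relative exposure, so it is the inverse of $f$.) Here is how I plot it: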

```cpp
// Plot the B, G and R response curves stored in responseDebevec (256x1, CV_32FC3)
const int width_scale = 3, height_scale = 20;
const int width = 256 * width_scale, height = 40 * height_scale;
Mat img = Mat::zeros(Size(width, height), CV_8UC3);
const Scalar colors[3] = {Scalar(255, 0, 0), Scalar(0, 255, 0), Scalar(0, 0, 255)};  // B, G, R
Point prev[3];

for (int i = 0; i < responseDebevec.rows; ++i) {  // all 256 gray values, 0..255
    Vec3f v3f = responseDebevec.at<Vec3f>(i, 0);
    for (int c = 0; c < 3; ++c) {
        // y grows downward in image coordinates, so flip the curve
        Point cur(i * width_scale, height - cvRound(v3f[c] * height_scale));
        if (i > 0) line(img, prev[c], cur, colors[c]);  // connect to previous sample
        prev[c] = cur;
    }
}

imshow("crf", img);
waitKey(0);
```

But there is a problem: when I capture different image sets with the camera, the computed CRF is different each time. The overall trend is similar, but the values vary widely, and I don't know why. My guess is that the exposure time set through OpenCV/V4L is not what the camera actually uses. Multiplying all exposure times by a common factor would not change the curve, but wrong ratios between them would. Still, all the curves have a roughly exponential shape, so I don't think they are entirely wrong.
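To put a number on "the values vary widely", one option is to compare two runs in log space after normalizing each curve at mid-gray, skipping the extremes where the estimate is least constrained. The sketch below does this for one channel; the function name and the default range `lo = 16`, `hi = 239` are arbitrary, hypothetical choices, and the curves are assumed to be positive linear values as returned by `calibrateDebevec`.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Compare two response curves (256 positive entries each, one channel).
// Each curve is normalized by its value at z = 128, then the curves are
// compared as logarithms over [lo, hi]. Returns the maximum absolute
// difference; 0 means the two runs have the same shape.
double maxLogResponseDiff(const std::vector<double>& r1,
                          const std::vector<double>& r2,
                          int lo = 16, int hi = 239) {
    const double n1 = r1[128], n2 = r2[128];
    double worst = 0.0;
    for (int z = lo; z <= hi; ++z) {
        const double d = std::log(r1[z] / n1) - std::log(r2[z] / n2);
        worst = std::max(worst, std::fabs(d));
    }
    return worst;
}
```

A difference of 0.1 in log space corresponds to roughly a 10% disagreement in relative exposure at that gray value, which is easier to interpret than raw differences on the plot.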

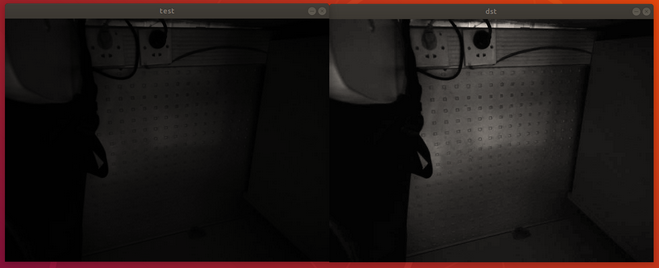

Calculate irradiance from the CRF

Once the CRF and the exposure time are known, the scene irradiance can be computed from the image gray value by inverting the CRF (ignoring the lens term $V$): $L = f^{-1}(B)/t$.

Since the CRF I measure is different every time but basically has an exponential form, I made up my own model. The curve returned by OpenCV maps a gray value $B$ to the exposure $X = L \cdot t$, so I write the model in that direction: $X = a \cdot e^{bB} + c$. Using the two boundary conditions $B = 0 \Rightarrow X = 0$ and $B = 255 \Rightarrow X = 1$ (normalized) and choosing $a = 1$ gives $c = -1$ and fixes $b$; the code below computes the inverse CRF and applies it to one of the images.
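Working the boundary conditions through shows where the constant `b` in the code comes from ($X_{\max}$ is `maxIrradiance`):

$$
\begin{aligned}
X(0) &= a + c = 0 &&\Rightarrow\ c = -a = -1 \quad (a = 1)\\
X(255) &= a\,e^{255b} - 1 = X_{\max} &&\Rightarrow\ b = \frac{\ln(X_{\max} + 1) - \ln a}{255} = \frac{\ln 2}{255} \approx 2.72 \times 10^{-3}
\end{aligned}
$$

Dividing by the exposure time then gives the radiance, $L = \left(e^{bB} - 1\right)/t$.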

```cpp
#include <cmath>

// Hand-made inverse CRF model: exposure = a * exp(b * intensity) - 1
// With a = 1, intensity 0 maps to 0 and intensity 255 maps to maxIrradiance.
inline float calInverseCRF(uchar intensity) {
    const float maxIrradiance = 1.0f;  // normalized to 1
    const float a = 1.0f;              // chosen value
    const float b = (std::log(maxIrradiance + 1.0f) - std::log(a)) / 255.0f;
    return a * std::exp(b * intensity) - 1.0f;
}

Mat testImg;
cvtColor(images[1], testImg, COLOR_BGR2GRAY);
CV_Assert(testImg.type() == CV_8UC1);
imshow("test", testImg);

// Apply the inverse CRF pixel by pixel to get the (relative) exposure L * t
Mat irradiance = Mat::zeros(testImg.size(), CV_32FC1);
for (int i = 0; i < testImg.rows; ++i) {
    for (int j = 0; j < testImg.cols; ++j) {
        irradiance.at<float>(i, j) = calInverseCRF(testImg.at<uchar>(i, j));
    }
}

// Divide by the exposure time of images[1] to get L. The constant factor
// cancels out in the min-max normalization below, so it does not change the display.
const double exposureTime = times[1];
Mat intensity = irradiance / exposureTime;
normalize(intensity, intensity, 1.0, 0.0, NORM_MINMAX);
imshow("dst", intensity);
```
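Because the inverse CRF depends only on the 8-bit gray value, it can be evaluated once for all 256 values and applied as a lookup table instead of calling `exp` for every pixel. The sketch below builds and checks such a table in plain C++; `buildInverseCrfTable` is a hypothetical name and uses the same `a`, `b` and `maxIrradiance` as `calInverseCRF`.

```cpp
#include <array>
#include <cmath>

// Precompute the hand-made inverse CRF, exposure = a * exp(b * z) - 1,
// for every 8-bit gray value z.
std::array<float, 256> buildInverseCrfTable(float maxIrradiance = 1.0f, float a = 1.0f) {
    const float b = (std::log(maxIrradiance + 1.0f) - std::log(a)) / 255.0f;
    std::array<float, 256> table{};
    for (int z = 0; z < 256; ++z) {
        table[z] = a * std::exp(b * static_cast<float>(z)) - 1.0f;
    }
    return table;
}
```

In OpenCV, the table could be copied into a 1x256 `CV_32F` `Mat` and applied with `cv::LUT(testImg, lut, irradiance)`; since `LUT` outputs the depth of the table, the result is already `CV_32F`, which replaces the double loop above.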


Summary of problems encountered

- The CRF has three channels (one curve each for B, G and R). I tried converting the images to grayscale to compute a single CRF, but the result was always wrong.
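If a single gray-level curve is needed, one possible workaround (not the method used in this post, and only an approximation, because the gray conversion mixes channels before the nonlinearity) is to combine the three per-channel curves that `calibrateDebevec` already returns. The sketch below normalizes each curve at mid-gray and takes a weighted average of their logarithms, i.e. a weighted geometric mean. Equal weights are the default; the BT.601 luma weights that `COLOR_BGR2GRAY` uses (0.114, 0.587, 0.299 for B, G, R) would be another hypothetical choice.

```cpp
#include <array>
#include <cmath>
#include <vector>

// Combine per-channel response curves (B, G, R; 256 positive linear values
// each) into one curve by a weighted average of their logarithms.
std::vector<double> combineChannelResponses(
        const std::array<std::vector<double>, 3>& bgr,
        const std::array<double, 3>& w = {1.0 / 3, 1.0 / 3, 1.0 / 3}) {
    std::vector<double> gray(256, 0.0);
    const double wsum = w[0] + w[1] + w[2];
    for (int z = 0; z < 256; ++z) {
        double logSum = 0.0;
        for (int c = 0; c < 3; ++c) {
            // normalize each channel so that its value at mid-gray (z = 128) is 1
            logSum += w[c] * std::log(bgr[c][z] / bgr[c][128]);
        }
        gray[z] = std::exp(logSum / wsum);
    }
    return gray;
}
```

Averaging in log space keeps the result positive and ignores the per-channel scale, so curves that differ only by a constant factor combine into the same shape.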