Template matching (TM) finds the region of an image that is most similar to a template image. The method is simple and fast, and it is used in many fields such as object recognition and object tracking. The corresponding OpenCV function is matchTemplate (C++) or cvMatchTemplate (C API); see the OpenCV documentation. A brief introduction follows.

1. Function prototype

C++: void matchTemplate(InputArray image, InputArray templ, OutputArray result, int method);

C: void cvMatchTemplate(const CvArr* image, const CvArr* templ, CvArr* result, int method);

2. Parameters

-

Image: Input image. Must be 8-bit or 32-bit floating-point type.

-

templ: Template images for search. It must be less than the input image and the same data type.

-

Result: Match the result image. It must be a single channel 32-bit floating-point type, and its size is (W-w+1)* (H-h+1), where W, H are the width and height of the input image, W and H are the width and height of the template image respectively.

-

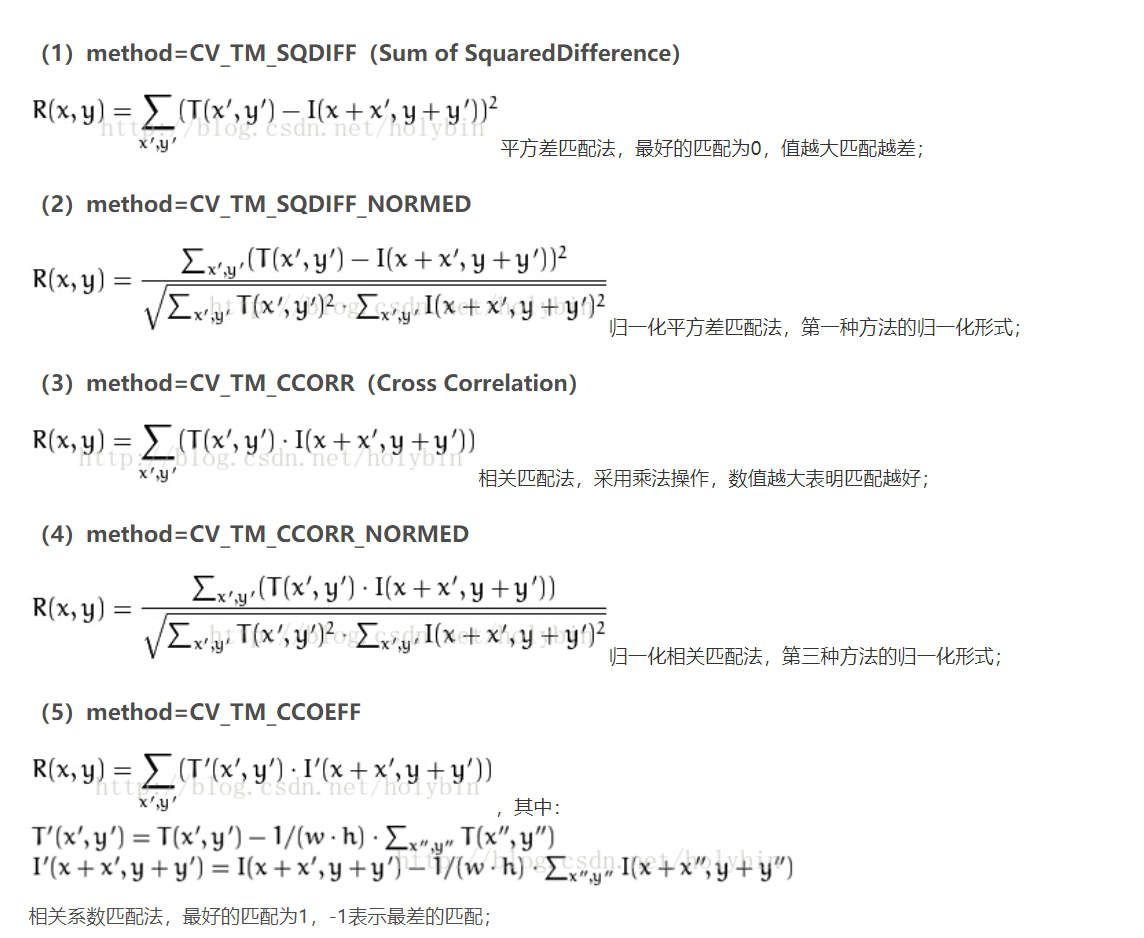

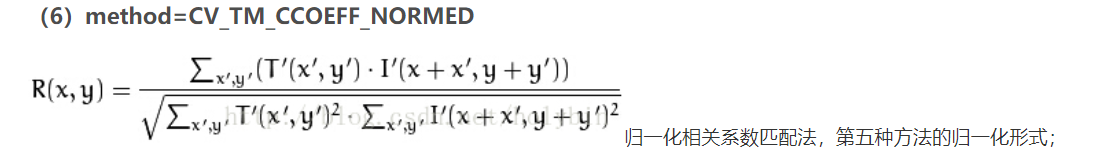

Method: The method of similarity measurement. Specifically as follows (here T is templ, I is image, R is result, x'is from 0 to w-1, y' is from 0 to h-1):
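For reference, the OpenCV documentation for matchTemplate defines the six measures as follows (indices 0 to 5, the same order as the slider in the code example), where the sums run over x' = 0..w-1 and y' = 0..h-1:

```latex
\[
\begin{aligned}
\text{CV\_TM\_SQDIFF:}\quad & R(x,y)=\sum_{x',y'}\bigl(T(x',y')-I(x+x',y+y')\bigr)^2\\
\text{CV\_TM\_SQDIFF\_NORMED:}\quad & R(x,y)=\frac{\sum_{x',y'}\bigl(T(x',y')-I(x+x',y+y')\bigr)^2}{\sqrt{\sum_{x',y'}T(x',y')^2\cdot\sum_{x',y'}I(x+x',y+y')^2}}\\
\text{CV\_TM\_CCORR:}\quad & R(x,y)=\sum_{x',y'}T(x',y')\,I(x+x',y+y')\\
\text{CV\_TM\_CCORR\_NORMED:}\quad & R(x,y)=\frac{\sum_{x',y'}T(x',y')\,I(x+x',y+y')}{\sqrt{\sum_{x',y'}T(x',y')^2\cdot\sum_{x',y'}I(x+x',y+y')^2}}\\
\text{CV\_TM\_CCOEFF:}\quad & R(x,y)=\sum_{x',y'}T'(x',y')\,I'(x+x',y+y')\\
\text{CV\_TM\_CCOEFF\_NORMED:}\quad & R(x,y)=\frac{\sum_{x',y'}T'(x',y')\,I'(x+x',y+y')}{\sqrt{\sum_{x',y'}T'(x',y')^2\cdot\sum_{x',y'}I'(x+x',y+y')^2}}
\end{aligned}
\]
\[
T'(x',y') = T(x',y') - \frac{1}{w\,h}\sum_{x'',y''}T(x'',y''),\qquad
I'(x+x',y+y') = I(x+x',y+y') - \frac{1}{w\,h}\sum_{x'',y''}I(x+x'',y+y'')
\]
```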

Finally, it should be noted that:

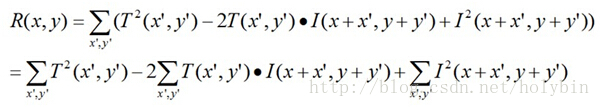

(1) The smaller the value of the former two methods is, the more matching the latter four methods are. The reason is that we can get the formula of the first method CV_TM_SQDIFF by expanding it:
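Written out with the notation from the method definitions, the expansion of CV_TM_SQDIFF is:

```latex
\[
R_{\mathrm{SQDIFF}}(x,y)
= \sum_{x',y'} T(x',y')^2
\;-\; 2\sum_{x',y'} T(x',y')\,I(x+x',y+y')
\;+\; \sum_{x',y'} I(x+x',y+y')^2
\]
```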

The first term (the energy of the template T) is constant, and the third term (the local energy of image I under the search window) can be treated as approximately constant, so the result is governed by the second term, which is exactly the formula of the third method, CV_TM_CCORR. A larger cross-correlation means greater similarity, and because this term enters with a minus sign, a smaller CV_TM_SQDIFF value also means greater similarity. The second method relates to the fourth in the same way. The fifth and sixth methods are also correlations (computed after subtracting the means), so, as with the third and fourth methods, a larger value means a better match.
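A small numeric sketch of the expansion, in plain C++ without OpenCV. It assumes a grayscale image and template stored row-major in std::vector<double>; the struct and function names are hypothetical. It computes the three terms at one window position and a direct CV_TM_SQDIFF value, so the identity SQDIFF = ΣT² - 2·CCORR + ΣI² can be checked.

```cpp
#include <cstddef>
#include <vector>

// Terms of the expanded CV_TM_SQDIFF formula at one window position.
struct SqdiffTerms {
    double templEnergy;  // sum of T^2 (first term, constant for a given template)
    double ccorr;        // sum of T*I (the CV_TM_CCORR value)
    double localEnergy;  // sum of I^2 under the window (third term)
    double sqdiff;       // sum of (T - I)^2, computed directly
};

// img is W pixels wide; templ is w x h; (x, y) is the window's top-left corner.
// Assumes the window lies fully inside the image (no bounds checks).
SqdiffTerms sqdiffTerms(const std::vector<double>& img, int W,
                        const std::vector<double>& templ, int w, int h,
                        int x, int y) {
    SqdiffTerms t{0.0, 0.0, 0.0, 0.0};
    for (int yp = 0; yp < h; ++yp) {
        for (int xp = 0; xp < w; ++xp) {
            double T = templ[static_cast<std::size_t>(yp) * w + xp];
            double I = img[static_cast<std::size_t>(y + yp) * W + (x + xp)];
            t.templEnergy += T * T;
            t.ccorr += T * I;
            t.localEnergy += I * I;
            t.sqdiff += (T - I) * (T - I);
        }
    }
    return t;
}
```

With the 3×2 image {1, 2, 3; 4, 5, 6} and the 2×1 template {2, 3}, the exact match at (1, 0) gives SQDIFF 0 and CCORR 13, yet the brighter window at (1, 1) gives a larger CCORR of 28. This is the practical caveat behind "the local energy is approximately constant": raw CCORR favors bright regions, which the normalized variants divide out.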

If both the input image and the template are color images, the sums in the formulas run over all three channels (for the CCOEFF methods, each channel uses its own mean), so the result is still a single-channel image.
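To make the color case concrete, here is a minimal sketch for CV_TM_SQDIFF only, in plain C++ without OpenCV. It assumes the channels are stored interleaved (B, G, R, B, G, R, ...), as in a cv::Mat of type CV_8UC3; the function name is hypothetical. The squared differences of every channel go into one sum, which is why result has a single channel.

```cpp
#include <cstddef>
#include <vector>

// CV_TM_SQDIFF score at one position (x, y) for interleaved multi-channel data.
// img is W pixels wide, templ is w x h pixels, both with `channels` values per pixel.
// Assumes the window lies fully inside the image (no bounds checks).
double sqdiffMultiChannel(const std::vector<double>& img, int W,
                          const std::vector<double>& templ, int w, int h,
                          int channels, int x, int y) {
    double sum = 0.0;
    for (int yp = 0; yp < h; ++yp)
        for (int xp = 0; xp < w; ++xp)
            for (int c = 0; c < channels; ++c) {
                std::size_t ti = (static_cast<std::size_t>(yp) * w + xp) * channels + c;
                std::size_t ii = (static_cast<std::size_t>(y + yp) * W + (x + xp)) * channels + c;
                double d = templ[ti] - img[ii];
                sum += d * d;  // all channels accumulate into the same score
            }
    return sum;
}
```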

3. Function description

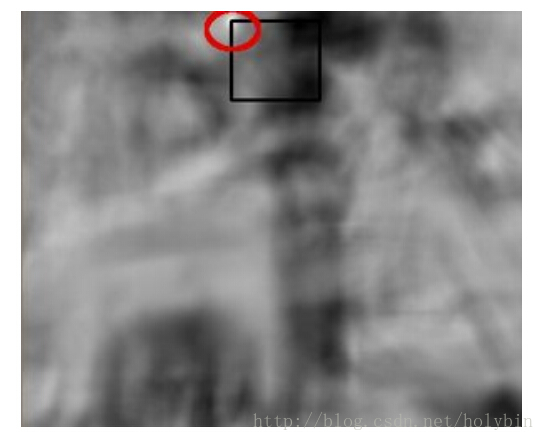

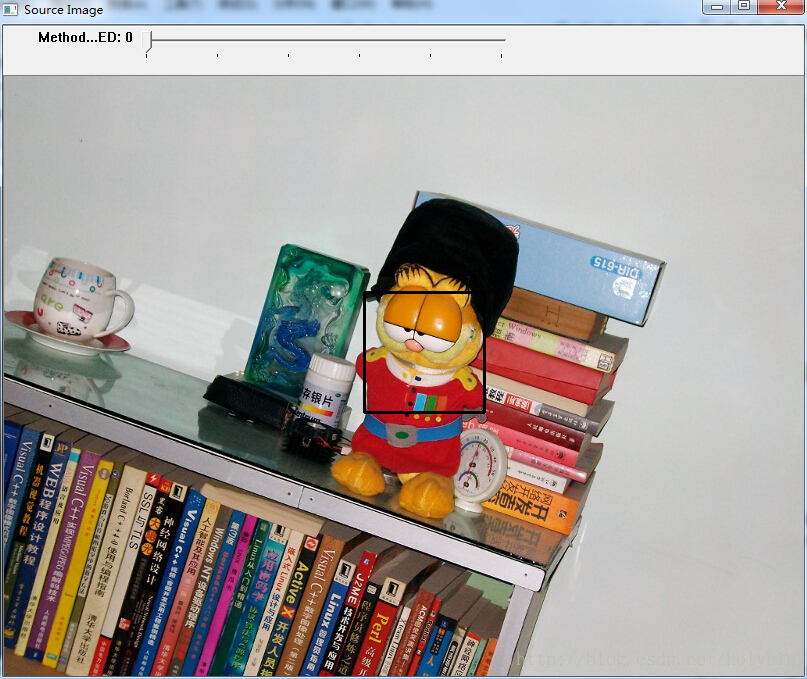

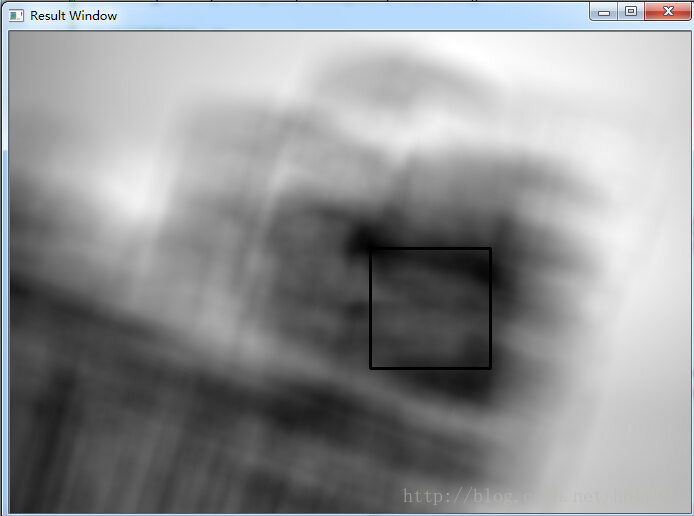

Function by sliding in the input image image (from left to right, from top to bottom), to find the similarity between each location block (search window) and templ template image, and save the results in the result image result. The brightness of each point in the image indicates the matching degree between the input image and the template image. Then the maximum or minimum matching points in the result can be located by some method (minMaxLoc function is commonly used). Finally, the matching area is marked according to the matching points and the rectangular frame of the template image (as follows). The highlight of the red circle is the best matching point, and the black frame is the rectangular frame of the template image.
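The sliding-window procedure and the minMaxLoc step can be sketched in plain C++ for CV_TM_SQDIFF on a grayscale image. This is a naive, unoptimized illustration, not OpenCV's implementation (which is heavily optimized); it assumes row-major std::vector<double> storage, and the names matchSqdiff, findMinLoc and MatchResult are hypothetical.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

struct MatchResult {
    int cols, rows;             // (W - w + 1) x (H - h + 1)
    std::vector<double> score;  // row-major CV_TM_SQDIFF scores
};

// Slide the w x h template over the W x H image (left to right, top to bottom)
// and store the CV_TM_SQDIFF score of every position. Assumes w <= W and h <= H.
MatchResult matchSqdiff(const std::vector<double>& img, int W, int H,
                        const std::vector<double>& templ, int w, int h) {
    MatchResult r{W - w + 1, H - h + 1, {}};
    r.score.resize(static_cast<std::size_t>(r.cols) * r.rows);
    for (int y = 0; y < r.rows; ++y)
        for (int x = 0; x < r.cols; ++x) {
            double s = 0.0;
            for (int yp = 0; yp < h; ++yp)
                for (int xp = 0; xp < w; ++xp) {
                    double d = templ[static_cast<std::size_t>(yp) * w + xp] -
                               img[static_cast<std::size_t>(y + yp) * W + (x + xp)];
                    s += d * d;
                }
            r.score[static_cast<std::size_t>(y) * r.cols + x] = s;
        }
    return r;
}

// Position of the smallest score (the best match for SQDIFF),
// playing the role of minLoc from minMaxLoc.
void findMinLoc(const MatchResult& r, int& bestX, int& bestY) {
    double best = std::numeric_limits<double>::infinity();
    bestX = bestY = 0;
    for (int y = 0; y < r.rows; ++y)
        for (int x = 0; x < r.cols; ++x) {
            double v = r.score[static_cast<std::size_t>(y) * r.cols + x];
            if (v < best) { best = v; bestX = x; bestY = y; }
        }
}
```

Given the best point (bestX, bestY), the matched region is the rectangle from (bestX, bestY) to (bestX + w, bestY + h), which mirrors how the code example draws its box from matchLoc.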

4. Code example

```cpp
/**
 * Object matching using the function matchTemplate
 */
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include <iostream>

using namespace std;
using namespace cv;

/// Global variables ///
Mat srcImg;      // original image
Mat templImg;    // template image
Mat resultImg;   // matching result image
const char* imageWindow = "Source Image";   // window showing the original image
const char* resultWindow = "Result Window"; // window showing the matching result
int matchMethod = 0;  // index of the matching method
int maxTrackbar = 5;  // slider range (one position per matching method)

/// Function declaration ///
void MatchingMethod( int, void* );  // matching callback

int main( int argc, char** argv )
{
    // Load the original image and the template image as 3-channel color images
    srcImg = imread( "D:\\opencv_pic\\cat0.jpg", IMREAD_COLOR );
    templImg = imread( "D:\\opencv_pic\\cat3d120.jpg", IMREAD_COLOR );
    if( srcImg.empty() || templImg.empty() )
    {
        cout << "Could not load the source or template image" << endl;
        return -1;
    }

    // Create the display windows
    namedWindow( imageWindow, WINDOW_AUTOSIZE );
    namedWindow( resultWindow, WINDOW_AUTOSIZE );

    // Create the slider; its label lists the six methods
    const char* trackbarLabel =
        "Method: \n 0: SQDIFF \n 1: SQDIFF NORMED \n 2: TM CCORR \n"
        " 3: TM CCORR NORMED \n 4: TM COEFF \n 5: TM COEFF NORMED";
    // Parameters: slider name, window name, address of the method index, slider range, callback
    createTrackbar( trackbarLabel, imageWindow, &matchMethod, maxTrackbar, MatchingMethod );

    MatchingMethod( 0, 0 );
    waitKey( 0 );
    return 0;
}

/// Function definition ///
void MatchingMethod( int, void* )
{
    // Deep copy of the source image, used for display
    Mat displayImg;
    srcImg.copyTo( displayImg );

    // The result holds one score per template position; its size is (W-w+1) x (H-h+1)
    int result_cols = srcImg.cols - templImg.cols + 1;
    int result_rows = srcImg.rows - templImg.rows + 1;
    resultImg.create( result_rows, result_cols, CV_32FC1 );  // Mat::create takes (rows, cols, type)

    // Match, then normalize the scores to [0, 1] for display
    matchTemplate( srcImg, templImg, resultImg, matchMethod );
    normalize( resultImg, resultImg, 0, 1, NORM_MINMAX, -1, Mat() );

    // Locate the extreme values with minMaxLoc
    double minVal, maxVal;
    Point minLoc, maxLoc, matchLoc;
    minMaxLoc( resultImg, &minVal, &maxVal, &minLoc, &maxLoc, Mat() );

    // For TM_SQDIFF and TM_SQDIFF_NORMED the minimum is the best match;
    // for the other methods the maximum is the best match.
    if( matchMethod == TM_SQDIFF || matchMethod == TM_SQDIFF_NORMED )
        matchLoc = minLoc;
    else
        matchLoc = maxLoc;

    // Draw a template-sized box whose top-left corner is the best-matching point,
    // on both the original image and the result image
    rectangle( displayImg, matchLoc, Point( matchLoc.x + templImg.cols, matchLoc.y + templImg.rows ), Scalar::all(0), 2, 8, 0 );
    rectangle( resultImg, matchLoc, Point( matchLoc.x + templImg.cols, matchLoc.y + templImg.rows ), Scalar::all(0), 2, 8, 0 );

    imshow( imageWindow, displayImg );
    imshow( resultWindow, resultImg );
}
```

Author: holybin

Source: CSDN

Original: https://blog.csdn.net/holybin/article/details/40541933

Copyright statement: This is an original article by the blogger. Please include a link to the blog article when reposting.