Introduction: Google's products are blocked by the firewall (the "wall") in China. As a cloud-native machine learning suite, Kubeflow helps teams greatly, but many of its images are hosted on Google's gcr.io. For teams that cannot get past the wall, installing Kubeflow from domestic image mirrors solves many of these problems. Here, we provide a Kubeflow 0.6 installation scheme based on domestic Alibaba Cloud image mirrors.

Environment preparation

Kubeflow has high resource requirements. According to the official requirements, you need at least one worker node with a minimum of:

- 4 CPU

- 50 GB storage

- 12 GB memory

It can still be installed on a node that falls short of these requirements, but you will run into resource shortages later, because this scheme installs the whole suite of components.
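To check a cluster against these minimums before installing, a minimal Python sketch like the following reads node capacity from `kubectl get nodes -o json`. It assumes "GB" means decimal gigabytes, compares raw capacity (allocatable is somewhat lower), and parses only the common Kubernetes quantity suffixes; the function names are hypothetical.

```python
from __future__ import annotations

import json
import subprocess

BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL_SUFFIXES = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}
GB = 10**9  # assumption: "GB" in the requirements means decimal gigabytes


def parse_quantity(q: str) -> float:
    """Parse common quantity forms such as '4', '3500m', '16305956Ki', '50G'."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if q.endswith(suffix):
            return float(q[: -len(suffix)]) * factor
    if q.endswith("m"):  # milli-units, e.g. CPU '3500m'
        return float(q[:-1]) / 1000
    if q[-1] in DECIMAL_SUFFIXES:
        return float(q[:-1]) * DECIMAL_SUFFIXES[q[-1]]
    return float(q)


def meets_minimum(node: dict) -> bool:
    """True if the node's capacity reaches 4 CPU, 12 GB memory and 50 GB storage."""
    cap = node["status"]["capacity"]
    return (
        parse_quantity(cap["cpu"]) >= 4
        and parse_quantity(cap["memory"]) >= 12 * GB
        and parse_quantity(cap.get("ephemeral-storage", "0")) >= 50 * GB
    )


def qualifying_nodes() -> list[str]:
    """Names of the nodes in the current kubectl context that meet the minimum."""
    out = subprocess.run(["kubectl", "get", "nodes", "-o", "json"],
                         check=True, capture_output=True, text=True).stdout
    return [n["metadata"]["name"] for n in json.loads(out)["items"] if meets_minimum(n)]
```

An empty result from `qualifying_nodes()` means no node meets all three minimums.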

You also need an installed Kubernetes cluster. Here I use a cluster installed with Rancher, whose server is started with:

    sudo docker run -d --restart=unless-stopped -p 80:80 -p 443:443 rancher/rancher

Here I chose Kubernetes 1.14. The version compatibility between Kubeflow and Kubernetes is described on the official website; my Kubeflow version is 0.6.

If you just want to install it directly, skip ahead to the one-click installation section.

kustomize

Download the kustomize files

The official tutorial installs with kfctl. Under the hood kfctl uses kustomize, so here I download the kustomize files directly and install them after replacing the images.

The files can be generated from the official kubeflow/manifests repository:

    git clone https://github.com/kubeflow/manifests
    cd manifests
    git checkout v0.6-branch
    cd <target>/base
    kubectl kustomize . | tee <output file>

There are many components, so you can export them with a script, or generate them all with the kfctl command `kfctl generate all -V`. The exported files:

    kustomize/
    ├── ambassador.yaml
    ├── api-service.yaml
    ├── argo.yaml
    ├── centraldashboard.yaml
    ├── jupyter-web-app.yaml
    ├── katib.yaml
    ├── metacontroller.yaml
    ├── minio.yaml
    ├── mysql.yaml
    ├── notebook-controller.yaml
    ├── persistent-agent.yaml
    ├── pipelines-runner.yaml
    ├── pipelines-ui.yaml
    ├── pipelines-viewer.yaml
    ├── pytorch-operator.yaml
    ├── scheduledworkflow.yaml
    ├── tensorboard.yaml
    └── tf-job-operator.yaml
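The export script itself is not shown. A minimal Python sketch of one, assuming the `v0.6-branch` checkout of kubeflow/manifests and a kubectl new enough to provide `kubectl kustomize` (1.14+): it renders every `base` directory that contains a `kustomization.yaml`. The naming scheme (parent path joined with `-`) and helper names are hypothetical, so the output names will not necessarily match the listing above exactly.

```python
from __future__ import annotations

import subprocess
from pathlib import Path


def find_base_dirs(manifests_root: Path) -> list[Path]:
    """Every directory named 'base' that contains a kustomization.yaml."""
    return sorted(
        p.parent
        for p in manifests_root.rglob("kustomization.yaml")
        if p.parent.name == "base"
    )


def output_name(base_dir: Path, manifests_root: Path) -> str:
    """Hypothetical naming: 'jupyter/jupyter-web-app/base' -> 'jupyter-jupyter-web-app.yaml'."""
    parts = base_dir.relative_to(manifests_root).parts[:-1]  # drop trailing 'base'
    return "-".join(parts) + ".yaml"


def export_all(manifests_root: Path, out_dir: Path) -> None:
    """Render each base with `kubectl kustomize` and write it into out_dir."""
    out_dir.mkdir(parents=True, exist_ok=True)
    for base_dir in find_base_dirs(manifests_root):
        # Same as: cd <target>/base && kubectl kustomize . > <output file>
        rendered = subprocess.run(
            ["kubectl", "kustomize", str(base_dir)],
            check=True, capture_output=True, text=True,
        ).stdout
        (out_dir / output_name(base_dir, manifests_root)).write_text(rendered)


# Usage: export_all(Path("manifests"), Path("kustomize"))
```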

Some of the main components:

- ambassador: microservice gateway

- argo: workflow orchestration for tasks

- centraldashboard: Kubeflow's central dashboard page

- tf-job-operator: engine for the TensorFlow deep learning framework, a CRD whose resource kind is TFJob

- katib: hyperparameter tuning server

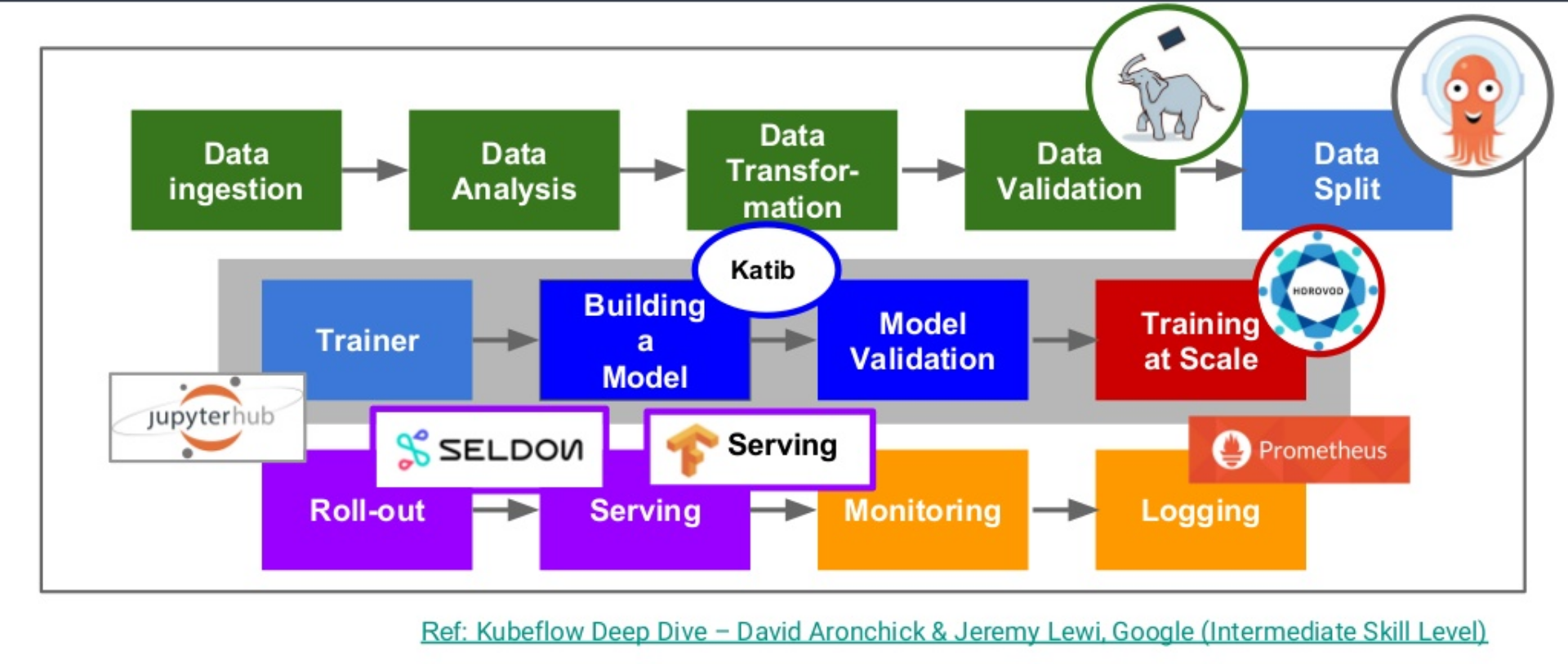

Workflow of the machine learning suite

Modify the kustomize files

Replace the images

Replace each gcr.io image in `grc_image` with the Alibaba Cloud mirror at the same position in `doc_image`:

    grc_image = [
        "gcr.io/kubeflow-images-public/ingress-setup:latest",
        "gcr.io/kubeflow-images-public/admission-webhook:v20190520-v0-139-gcee39dbc-dirty-0d8f4c",
        "gcr.io/kubeflow-images-public/kubernetes-sigs/application:1.0-beta",
        "gcr.io/kubeflow-images-public/centraldashboard:v20190823-v0.6.0-rc.0-69-gcb7dab59",
        "gcr.io/kubeflow-images-public/jupyter-web-app:9419d4d",
        "gcr.io/kubeflow-images-public/katib/v1alpha2/katib-controller:v0.6.0-rc.0",
        "gcr.io/kubeflow-images-public/katib/v1alpha2/katib-manager:v0.6.0-rc.0",
        "gcr.io/kubeflow-images-public/katib/v1alpha2/katib-manager-rest:v0.6.0-rc.0",
        "gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-bayesianoptimization:v0.6.0-rc.0",
        "gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-grid:v0.6.0-rc.0",
        "gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-hyperband:v0.6.0-rc.0",
        "gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-nasrl:v0.6.0-rc.0",
        "gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-random:v0.6.0-rc.0",
        "gcr.io/kubeflow-images-public/katib/v1alpha2/katib-ui:v0.6.0-rc.0",
        "gcr.io/kubeflow-images-public/metadata:v0.1.8",
        "gcr.io/kubeflow-images-public/metadata-frontend:v0.1.8",
        "gcr.io/ml-pipeline/api-server:0.1.23",
        "gcr.io/ml-pipeline/persistenceagent:0.1.23",
        "gcr.io/ml-pipeline/scheduledworkflow:0.1.23",
        "gcr.io/ml-pipeline/frontend:0.1.23",
        "gcr.io/ml-pipeline/viewer-crd-controller:0.1.23",
        "gcr.io/kubeflow-images-public/notebook-controller:v20190603-v0-175-geeca4530-e3b0c4",
        "gcr.io/kubeflow-images-public/profile-controller:v20190619-v0-219-gbd3daa8c-dirty-1ced0e",
        "gcr.io/kubeflow-images-public/kfam:v20190612-v0-170-ga06cdb79-dirty-a33ee4",
        "gcr.io/kubeflow-images-public/pytorch-operator:v1.0.0-rc.0",
        "gcr.io/google_containers/spartakus-amd64:v1.1.0",
        "gcr.io/kubeflow-images-public/tf_operator:v0.6.0.rc0",
        "gcr.io/arrikto/kubeflow/oidc-authservice:v0.2",
    ]
    doc_image = [
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.ingress-setup:latest",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.admission-webhook:v20190520-v0-139-gcee39dbc-dirty-0d8f4c",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.kubernetes-sigs.application:1.0-beta",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.centraldashboard:v20190823-v0.6.0-rc.0-69-gcb7dab59",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.jupyter-web-app:9419d4d",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-controller:v0.6.0-rc.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-manager:v0.6.0-rc.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-manager-rest:v0.6.0-rc.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-bayesianoptimization:v0.6.0-rc.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-grid:v0.6.0-rc.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-hyperband:v0.6.0-rc.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-nasrl:v0.6.0-rc.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-random:v0.6.0-rc.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-ui:v0.6.0-rc.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.metadata:v0.1.8",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.metadata-frontend:v0.1.8",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.api-server:0.1.23",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.persistenceagent:0.1.23",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.scheduledworkflow:0.1.23",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.frontend:0.1.23",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.viewer-crd-controller:0.1.23",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.notebook-controller:v20190603-v0-175-geeca4530-e3b0c4",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.profile-controller:v20190619-v0-219-gbd3daa8c-dirty-1ced0e",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.kfam:v20190612-v0-170-ga06cdb79-dirty-a33ee4",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.pytorch-operator:v1.0.0-rc.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/google_containers.spartakus-amd64:v1.1.0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.tf_operator:v0.6.0.rc0",
        "registry.cn-shenzhen.aliyuncs.com/shikanon/arrikto.kubeflow.oidc-authservice:v0.2",
    ]
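How the replacement is applied is not shown. Judging from the two lists, each mirror name is the gcr.io path with `gcr.io/` removed, the remaining `/` separators turned into `.`, and `registry.cn-shenzhen.aliyuncs.com/shikanon/` prepended. A minimal Python sketch, assuming that naming rule holds and that the manifests are the files in the `kustomize/` directory listed above; the helper names are hypothetical.

```python
from __future__ import annotations

from pathlib import Path

MIRROR_PREFIX = "registry.cn-shenzhen.aliyuncs.com/shikanon/"


def to_mirror(image: str) -> str:
    """Map a gcr.io image to its mirror name (rule inferred from grc_image/doc_image)."""
    if not image.startswith("gcr.io/"):
        raise ValueError(f"not a gcr.io image: {image}")
    repo, sep, tag = image[len("gcr.io/"):].partition(":")
    return MIRROR_PREFIX + repo.replace("/", ".") + sep + tag


def rewrite_file(path: Path, images: list[str]) -> int:
    """Replace every listed gcr.io image in one manifest; return the replacement count."""
    text = path.read_text()
    count = 0
    for image in images:
        count += text.count(image)
        text = text.replace(image, to_mirror(image))
    if count:
        path.write_text(text)
    return count


# Usage, with grc_image defined as above:
# for f in Path("kustomize").glob("*.yaml"):
#     rewrite_file(f, grc_image)
```

As a sanity check, `all(to_mirror(g) == d for g, d in zip(grc_image, doc_image))` should hold for the two lists above if the inferred rule is right.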

Modify the PVCs to use dynamic storage

Use Rancher's local-path-provisioner to provision PVs dynamically for the PVCs.

Install local-path-provisioner:

    kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

For Kubeflow's PVCs to use it without further changes, you also need to make it the default StorageClass by adding the annotation below to the `local-path` StorageClass:

    ...
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: local-path
      annotations:
        # Add as default StorageClass
        storageclass.beta.kubernetes.io/is-default-class: "true"
    provisioner: rancher.io/local-path
    volumeBindingMode: WaitForFirstConsumer
    reclaimPolicy: Delete
    ...
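Instead of editing the manifest, an already-installed StorageClass can also be marked as default with `kubectl patch`, as in the Kubernetes documentation on changing the default StorageClass. A minimal Python sketch that builds and runs that command; it keeps the beta annotation used above (newer Kubernetes releases use `storageclass.kubernetes.io/is-default-class`), and the function names are hypothetical. Only one StorageClass should be marked default at a time.

```python
from __future__ import annotations

import json
import subprocess

# Same annotation as in the StorageClass above; newer clusters use
# "storageclass.kubernetes.io/is-default-class".
DEFAULT_CLASS_ANNOTATION = "storageclass.beta.kubernetes.io/is-default-class"


def default_class_patch_command(name: str = "local-path",
                                annotation: str = DEFAULT_CLASS_ANNOTATION) -> list[str]:
    """Build `kubectl patch storageclass <name> -p <json>` marking <name> as default."""
    patch = {"metadata": {"annotations": {annotation: "true"}}}
    return ["kubectl", "patch", "storageclass", name, "-p", json.dumps(patch)]


def make_default(name: str = "local-path") -> None:
    """Apply the patch against the current kubectl context."""
    subprocess.run(default_class_patch_command(name), check=True)
```

Afterwards, `kubectl get storageclass` should list `local-path` with `(default)` next to its name.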

Once that is done, you can create a PVC to test it:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: local-path-pvc
      namespace: default
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 2Gi

Note: if local-path is not set as the default StorageClass, you need to add `storageClassName: local-path` to the PVC spec so that it binds.
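Because the StorageClass above uses `volumeBindingMode: WaitForFirstConsumer`, the test PVC stays `Pending` until a pod that uses it is scheduled; that is expected, not a failure. A minimal Python sketch that builds such a pod as a JSON manifest (`kubectl apply -f` accepts JSON as well as YAML); the pod name `volume-test`, the `busybox` image and the mount path are assumptions for illustration.

```python
import json


def pvc_test_pod(claim: str = "local-path-pvc", namespace: str = "default") -> dict:
    """Pod that mounts the PVC so WaitForFirstConsumer binds it (names are hypothetical)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "volume-test", "namespace": namespace},
        "spec": {
            "containers": [{
                "name": "volume-test",
                "image": "busybox",
                # Write a file to prove the volume is writable, then stay alive.
                "command": ["sh", "-c", "echo ok > /data/test && sleep 3600"],
                "volumeMounts": [{"name": "data", "mountPath": "/data"}],
            }],
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim}}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(pvc_test_pod(), indent=2))
```

Piping the output into `kubectl apply -f -` creates the pod; once it is scheduled, `kubectl get pvc local-path-pvc` should show `Bound`.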

One-click installation

I have packaged all of this into a one-click Kubeflow installation project that uses domestic images: https://github.com/shikanon/kubeflow-manifests

Pitfalls along the way

CoreDNS CrashLoopBackOff problem

Logs:

    kubectl -n kube-system logs coredns-6998d84bf5-r4dbk
    E1028 06:36:35.489403 1 reflector.go:134] github.com/coredns/coredns/plugin/kubernetes/controller.go:322: Failed to list *v1.Namespace: Get https://10.96.0.1:443/api/v1/namespaces?limit=500&resourceVersion=0: dial tcp 10.96.0.1:443: connect: no route to host
    E1028 06:36:35.489403 1 reflector.go:134] github.com/coredns/coredns/plugin/kubernetes/controller.go:322: Failed to list *v1.Namespace: Get https://10.96.0.1:443/api/v1/namespaces?limit=500&resourceVersion=0: dial tcp 10.96.0.1:443: connect: no route to host
    log: exiting because of error: log: cannot create log: open /tmp/coredns.coredns-8686dcc4fd-7fwcz.unknownuser.log.ERROR.20191028-063635.1: no such file or directory

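CoreDNS cannot reach the Kubernetes API service at 10.96.0.1 (`no route to host`). This is caused by disordered or stale (cached) iptables rules, and the fix is to flush them:

    iptables --flush
    iptables -t nat --flush

Note that this deletes every rule in the filter and nat tables, including any firewall rules you have configured.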
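Since the flush is destructive, it can help to save the current rules first with `iptables-save` (they can be restored later with `iptables-restore < <file>`). A minimal Python sketch, assuming it runs as root on the affected node; the backup path and function name are hypothetical, and with the default `dry_run=True` it only returns the commands it would run.

```python
from __future__ import annotations

import subprocess
from pathlib import Path

FLUSH_COMMANDS = [
    ["iptables", "--flush"],               # filter table (the default table)
    ["iptables", "-t", "nat", "--flush"],  # nat table
]


def flush_with_backup(backup: Path = Path("/root/iptables.backup"),
                      dry_run: bool = True) -> list[list[str]]:
    """Save current rules with iptables-save, then flush the filter and nat tables."""
    plan = [["iptables-save"]] + FLUSH_COMMANDS
    if dry_run:
        return plan
    saved = subprocess.run(["iptables-save"], check=True,
                           capture_output=True, text=True).stdout
    backup.write_text(saved)  # restore later with: iptables-restore < backup
    for cmd in FLUSH_COMMANDS:
        subprocess.run(cmd, check=True)
    return plan
```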