// If the cache candidate does not meet the requirements, close it.

if (cacheCandidate != null && cacheResponse == null) {

closeQuietly(cacheCandidate.body()); // The cache candidate wasn't applicable. Close it.

}

// If the network cannot be used and there is no usable cached response, return a 504 response directly.

if (networkRequest == null && cacheResponse == null) {

return new Response.Builder()

.request(chain.request())

.protocol(Protocol.HTTP_1_1)

.code(504)

.message("Unsatisfiable Request (only-if-cached)")

.body(Util.EMPTY_RESPONSE)

.sentRequestAtMillis(-1L)

.receivedResponseAtMillis(System.currentTimeMillis())

.build();

}

// If there is a usable cached response and the network is not needed, return the cached response directly.

if (networkRequest == null) {

return cacheResponse.newBuilder()

.cacheResponse(stripBody(cacheResponse))

.build();

}

// Try to get a response from the network.

Response networkResponse = null;

try {

networkResponse = chain.proceed(networkRequest);

} finally {

// If we're crashing on I/O or otherwise, don't leak the cache body.

if (networkResponse == null && cacheCandidate != null) {

closeQuietly(cacheCandidate.body());

}

}

// If there is a cached response and a network request was also made, this is a conditional GET.

if (cacheResponse != null) {

// If the server returns 304 Not Modified, the cache is still valid: combine the cached response with the network response headers.

if (networkResponse.code() == HTTP_NOT_MODIFIED) {

Response response = cacheResponse.newBuilder()

.headers(combine(cacheResponse.headers(), networkResponse.headers()))

.sentRequestAtMillis(networkResponse.sentRequestAtMillis())

.receivedResponseAtMillis(networkResponse.receivedResponseAtMillis())

.cacheResponse(stripBody(cacheResponse))

.networkResponse(stripBody(networkResponse))

.build();

networkResponse.body().close();

// Update the cache after combining headers but before stripping the

// Content-Encoding header (as performed by initContentStream()).

cache.trackConditionalCacheHit();

cache.update(cacheResponse, response);

return response;

} else { // The resource has changed on the server, so close the stale cached response body.

closeQuietly(cacheResponse.body());

}

}

Response response = networkResponse.newBuilder()

.cacheResponse(stripBody(cacheResponse))

.networkResponse(stripBody(networkResponse))

.build();

if (cache != null) {

if (HttpHeaders.hasBody(response) && CacheStrategy.isCacheable(response, networkRequest)) {

// Write network response to cache

CacheRequest cacheRequest = cache.put(response);

return cacheWritingResponse(cacheRequest, response);

}

if (HttpMethod.invalidatesCache(networkRequest.method())) {

try {

cache.remove(networkRequest);

} catch (IOException ignored) {

// The cache cannot be written.

}

}

}

return response;

}

```

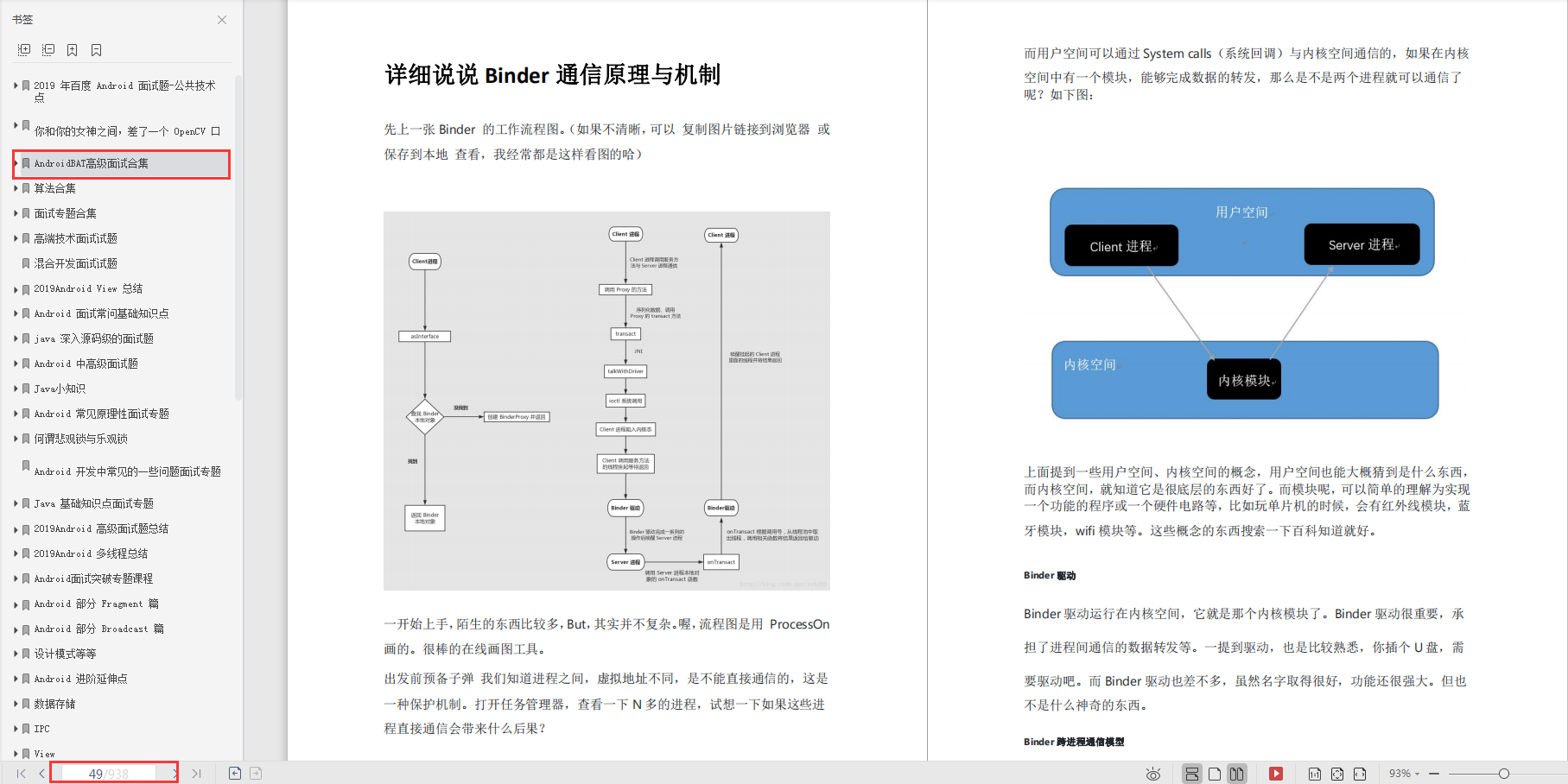
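The branch order in the interceptor code above can be summarized in a small decision function. This is only a sketch: the CacheDecision class and its Outcome names are hypothetical and not part of OkHttp; the two booleans stand for whether networkRequest and cacheResponse are non-null.

```java
// Hypothetical helper (not an OkHttp class) that mirrors the branch order shown above.
final class CacheDecision {
  enum Outcome { GATEWAY_TIMEOUT_504, SERVE_FROM_CACHE, CONDITIONAL_GET, NETWORK_ONLY }

  static Outcome decide(boolean hasNetworkRequest, boolean hasCacheResponse) {
    // Neither the network nor the cache may be used: synthesize a 504 response.
    if (!hasNetworkRequest && !hasCacheResponse) return Outcome.GATEWAY_TIMEOUT_504;
    // The network is not needed: the cached response is returned directly.
    if (!hasNetworkRequest) return Outcome.SERVE_FROM_CACHE;
    // Both are present: the cached response is validated with a conditional GET.
    if (hasCacheResponse) return Outcome.CONDITIONAL_GET;
    // Only the network request is present: a plain network call.
    return Outcome.NETWORK_ONLY;
  }
}
```

For example, CacheDecision.decide(true, true) yields CONDITIONAL_GET, which is the path that later checks for HTTP_NOT_MODIFIED.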

The core logic is annotated with comments in the code above. As the code shows, almost every action is driven by CacheStrategy. Let's look at how this cache strategy is generated; the relevant code is in the CacheStrategy$Factory.get() method:

\[CacheStrategy$Factory\]

```
/**
 * Returns a strategy to satisfy {@code request} using a cached response {@code response}.
 */
public CacheStrategy get() {
  CacheStrategy candidate = getCandidate();

  if (candidate.networkRequest != null && request.cacheControl().onlyIfCached()) {
    // We're forbidden from using the network and the cache is insufficient.
    return new CacheStrategy(null, null);
  }

  return candidate;
}

/** Returns a strategy to use assuming the request can use the network. */
private CacheStrategy getCandidate() {
  // If there is no cached response, make a network request.
  if (cacheResponse == null) {
    return new CacheStrategy(request, null);
  }

  // If the request is HTTPS and the cached response has no TLS handshake, make a network request.
  if (request.isHttps() && cacheResponse.handshake() == null) {
    return new CacheStrategy(request, null);
  }

  // If this response shouldn't have been stored, it should never be used
  // as a response source. This check should be redundant as long as the
  // persistence store is well-behaved and the rules are constant.
  if (!isCacheable(cacheResponse, request)) {
    return new CacheStrategy(request, null);
  }

  // If the request says no-cache or already carries its own conditions, make a network request.
  CacheControl requestCaching = request.cacheControl();
  if (requestCaching.noCache() || hasConditions(request)) {
    return new CacheStrategy(request, null);
  }

  // ageMillis: the current age of the cached response.
  long ageMillis = cacheResponseAge();
  // freshMillis: how long the cached response stays fresh.
  long freshMillis = computeFreshnessLifetime();

  if (requestCaching.maxAgeSeconds() != -1) {
    freshMillis = Math.min(freshMillis, SECONDS.toMillis(requestCaching.maxAgeSeconds()));
  }

  long minFreshMillis = 0;
  if (requestCaching.minFreshSeconds() != -1) {
    minFreshMillis = SECONDS.toMillis(requestCaching.minFreshSeconds());
  }

  long maxStaleMillis = 0;
  CacheControl responseCaching = cacheResponse.cacheControl();
  if (!responseCaching.mustRevalidate() && requestCaching.maxStaleSeconds() != -1) {
    maxStaleMillis = SECONDS.toMillis(requestCaching.maxStaleSeconds());
  }

  // If age + min-fresh < max-age + max-stale, the cached response can be used without the network.
  // If in addition age + min-fresh >= max-age, the response has expired but is still within the
  // max-stale allowance, so a 110 warning is added to its headers.
  if (!responseCaching.noCache() && ageMillis + minFreshMillis < freshMillis + maxStaleMillis) {
    Response.Builder builder = cacheResponse.newBuilder();
    if (ageMillis + minFreshMillis >= freshMillis) {
      builder.addHeader("Warning", "110 HttpURLConnection \"Response is stale\"");
    }
    long oneDayMillis = 24 * 60 * 60 * 1000L;
    if (ageMillis > oneDayMillis && isFreshnessLifetimeHeuristic()) {
      builder.addHeader("Warning", "113 HttpURLConnection \"Heuristic expiration\"");
    }
    return new CacheStrategy(null, builder.build());
  }

  // Otherwise, make a conditional GET request.
  String conditionName;
  String conditionValue;
  if (etag != null) {
    conditionName = "If-None-Match";
    conditionValue = etag;
  } else if (lastModified != null) {
    conditionName = "If-Modified-Since";
    conditionValue = lastModifiedString;
  } else if (servedDate != null) {
    conditionName = "If-Modified-Since";
    conditionValue = servedDateString;
  } else {
    return new CacheStrategy(request, null); // No condition! Make a regular request.
  }

  Headers.Builder conditionalRequestHeaders = request.headers().newBuilder();
  Internal.instance.addLenient(conditionalRequestHeaders, conditionName, conditionValue);

  Request conditionalRequest = request.newBuilder()
      .headers(conditionalRequestHeaders.build())
      .build();
  return new CacheStrategy(conditionalRequest, cacheResponse);
}
```
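The last branch of getCandidate() picks which validator to send with the conditional GET. The following is a minimal sketch of that priority order only; the ConditionalHeader class is a hypothetical stand-in and takes plain strings instead of the parsed header fields used by the real code.

```java
// Hypothetical helper (not an OkHttp class): picks the conditional header in the
// same priority order as the code above: ETag, then Last-Modified, then Date.
final class ConditionalHeader {
  /** Returns {name, value}, or null when the cached response offers no validator. */
  static String[] choose(String etag, String lastModified, String servedDate) {
    if (etag != null) return new String[] {"If-None-Match", etag};
    if (lastModified != null) return new String[] {"If-Modified-Since", lastModified};
    if (servedDate != null) return new String[] {"If-Modified-Since", servedDate};
    return null; // No condition available: a regular request is made instead.
  }
}
```

An ETag wins whenever it is present, because it identifies the exact representation rather than relying on timestamps.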

The core logic is in the getCandidate() method, which is essentially an implementation of the HTTP caching protocol. The key steps are explained by the comments in the code.
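The freshness comparison in getCandidate() is easier to follow with concrete numbers. The sketch below isolates just that arithmetic; the Freshness class and its Verdict names are hypothetical, and the response-side no-cache check from the real code is deliberately left out.

```java
// Hypothetical helper (not an OkHttp class) isolating the age comparison.
final class Freshness {
  enum Verdict { FRESH, STALE_BUT_USABLE, NEEDS_NETWORK }

  static Verdict classify(long ageMillis, long freshMillis,
                          long minFreshMillis, long maxStaleMillis) {
    if (ageMillis + minFreshMillis < freshMillis + maxStaleMillis) {
      // Usable without the network. If it is already past its freshness lifetime,
      // the real code adds the 110 "Response is stale" warning.
      return (ageMillis + minFreshMillis >= freshMillis)
          ? Verdict.STALE_BUT_USABLE
          : Verdict.FRESH;
    }
    // Too old even with the max-stale allowance: go to the network.
    return Verdict.NEEDS_NETWORK;
  }
}
```

With a freshness lifetime of 60 s, a 30 s old response is FRESH; a 90 s old response is STALE_BUT_USABLE when the request allows max-stale of 60 s, and NEEDS_NETWORK when it allows none.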

3. DiskLruCache

Internally, Cache uses DiskLruCache to manage the creation, reading, and cleanup of cache entries at the file-system level. Next, let's look at the main logic of DiskLruCache:

public final class DiskLruCache implements Closeable, Flushable {

final FileSystem fileSystem;

final File directory;

private final File journalFile;

private final File journalFileTmp;

private final File journalFileBackup;

private final int appVersion;

private long maxSize;

final int valueCount;

private long size = 0;

BufferedSink journalWriter;

final LinkedHashMap<String, Entry> lruEntries = new LinkedHashMap<>(0, 0.75f, true);

// Must be read and written when synchronized on 'this'.

boolean initialized;

boolean closed;

boolean mostRecentTrimFailed;

boolean mostRecentRebuildFailed;

/\*\*

* To differentiate between old and current snapshots, each entry is given a sequence number each

* time an edit is committed. A snapshot is stale if its sequence number is not equal to its

* entry's sequence number.

\*/

private long nextSequenceNumber = 0;

/\*\* Used to run 'cleanupRunnable' for journal rebuilds. \*/

private final Executor executor;

private final Runnable cleanupRunnable = new Runnable() {

public void run() {

...

}

};

...

}

3.1 journalFile

journalFile is the internal log file of DiskLruCache. Every read and write of the cache produces one log record, and DiskLruCache rebuilds its in-memory cache state by parsing this log. The log file format is as follows:

```
libcore.io.DiskLruCache
1
100
2

CLEAN 3400330d1dfc7f3f7f4b8d4d803dfcf6 832 21054
DIRTY 335c4c6028171cfddfbaae1a9c313c52
CLEAN 335c4c6028171cfddfbaae1a9c313c52 3934 2342
REMOVE 335c4c6028171cfddfbaae1a9c313c52
DIRTY 1ab96a171faeeee38496d8b330771a7a
CLEAN 1ab96a171faeeee38496d8b330771a7a 1600 234
READ 335c4c6028171cfddfbaae1a9c313c52
READ 3400330d1dfc7f3f7f4b8d4d803dfcf6
```

The first 5 lines are a fixed header: the constant string libcore.io.DiskLruCache, the disk cache version, the application version, valueCount (introduced later), and a blank line.

Each following line records one state change of a cache entry, in the format [state (DIRTY, CLEAN, READ, REMOVE), key, state-specific values (optional)]:

- DIRTY: a cache entry is being created or updated. Every successful DIRTY record should be followed by a matching CLEAN or REMOVE record. If a DIRTY record has no matching CLEAN/REMOVE, the operation on that entry failed and the entry must be removed from lruEntries.

- CLEAN: the cache entry was written successfully and can be read normally. Each CLEAN line also records the length of each value.

- READ: records one cache read.

- REMOVE: records one cache removal.

The journal file is used in four main scenarios:

1. During initialization, DiskLruCache builds its cache container, lruEntries, by reading the journal, and filters out cache entries whose operations did not succeed. The related logic is in DiskLruCache.readJournalLine and DiskLruCache.processJournal.

2. After initialization, the journal is rebuilt in compact form so that it does not keep growing. The logic is in DiskLruCache.rebuildJournal.

3. Every cache operation is recorded in the journal for use at the next initialization.

4. When the journal holds too many redundant records, the cleanupRunnable task is scheduled to rebuild it.
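The replay performed during initialization can be sketched as follows. This is a simplified illustration, not the DiskLruCache implementation: the JournalReplay class is hypothetical, it handles only the body lines after the 5-line header, and it keeps value lengths in a map instead of creating Entry objects or deleting files.

```java
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical helper: rebuilds "key -> value lengths" from journal body lines.
final class JournalReplay {
  static Map<String, long[]> replay(List<String> lines) {
    // Access-ordered map, like lruEntries, so READ records refresh the LRU order.
    Map<String, long[]> entries = new LinkedHashMap<>(0, 0.75f, true);
    Set<String> dirty = new HashSet<>(); // keys whose latest edit has not completed
    for (String line : lines) {
      String[] parts = line.split(" ");
      String key = parts[1];
      switch (parts[0]) {
        case "DIRTY":
          dirty.add(key);
          break;
        case "CLEAN":
          long[] lengths = new long[parts.length - 2];
          for (int i = 0; i < lengths.length; i++) {
            lengths[i] = Long.parseLong(parts[i + 2]);
          }
          entries.put(key, lengths);
          dirty.remove(key);
          break;
        case "REMOVE":
          entries.remove(key);
          dirty.remove(key);
          break;
        case "READ":
          entries.get(key); // touch the entry; a no-op if it was removed
          break;
        default:
          throw new IllegalArgumentException("unexpected journal line: " + line);
      }
    }
    // A DIRTY record without a matching CLEAN/REMOVE means the edit failed.
    for (String key : dirty) {
      entries.remove(key);
    }
    return entries;
  }
}
```

Replaying the sample journal above leaves two readable entries, 1ab96a17... and 3400330d..., with the latter most recently used because of the final READ record.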

3.2 DiskLruCache.Entry

Each DiskLruCache.Entry corresponds to one cache record:

```
private final class Entry {
  final String key;

  /** Lengths of this entry's files. */
  final long[] lengths;
  final File[] cleanFiles;
  final File[] dirtyFiles;

  /** True if this entry has ever been published. */
  boolean readable;

  /** The ongoing edit or null if this entry is not being edited. */
  Editor currentEditor;

  /** The sequence number of the most recently committed edit to this entry. */
  long sequenceNumber;

  Entry(String key) {
    this.key = key;

    lengths = new long[valueCount];
    cleanFiles = new File[valueCount];
    dirtyFiles = new File[valueCount];

    // The names are repetitive so re-use the same builder to avoid allocations.
    StringBuilder fileBuilder = new StringBuilder(key).append('.');
    int truncateTo = fileBuilder.length();
    for (int i = 0; i < valueCount; i++) {
      fileBuilder.append(i);
      cleanFiles[i] = new File(directory, fileBuilder.toString());
      fileBuilder.append(".tmp");
      dirtyFiles[i] = new File(directory, fileBuilder.toString());
      fileBuilder.setLength(truncateTo);
    }
  }

  ...

  /**
   * Returns a snapshot of this entry. This opens all streams eagerly to guarantee that we see a
   * single published snapshot. If we opened streams lazily then the streams could come from
   * different edits.
   */
  Snapshot snapshot() {
    if (!Thread.holdsLock(DiskLruCache.this)) throw new AssertionError();

    Source[] sources = new Source[valueCount];
    long[] lengths = this.lengths.clone(); // Defensive copy since these can be zeroed out.
    try {
      for (int i = 0; i < valueCount; i++) {
        sources[i] = fileSystem.source(cleanFiles[i]);
      }
      return new Snapshot(key, sequenceNumber, sources, lengths);
    } catch (FileNotFoundException e) {
      // A file must have been deleted manually!
      for (int i = 0; i < valueCount; i++) {
        if (sources[i] != null) {
          Util.closeQuietly(sources[i]);
        } else {
          break;
        }
      }
      // Since the entry is no longer valid, remove it so the metadata is accurate (i.e. the cache
      // size.)
      try {
        removeEntry(this);
      } catch (IOException ignored) {
      }
      return null;
    }
  }
}
```

An Entry mainly consists of the following parts:

- key: every cache entry has a key as its identifier. Currently the key is the MD5 string of the entry's URL.

- cleanFiles/dirtyFiles: each Entry corresponds to several files, and the number of files is set by DiskLruCache.valueCount. In OkHttp, valueCount is currently 2, so each cache entry has 2 cleanFiles and 2 dirtyFiles. The first file of each pair stores the entry's metadata (for example the URL, creation time, and SSL handshake record), and the second stores the actual content. cleanFiles hold the stable cache result; dirtyFiles hold an entry that is being created or updated.

- currentEditor: the entry's editor. All operations on an entry go through its editor, which uses a synchronization lock internally.
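To make the file layout concrete, the naming scheme from the Entry constructor can be reproduced on plain strings. The EntryFiles class below is a hypothetical illustration that returns file names only; the real constructor creates File objects inside the cache directory.

```java
// Hypothetical helper: reproduces the file names that the Entry constructor builds.
final class EntryFiles {
  /** Names of the published files: key.0, key.1, ... */
  static String[] cleanNames(String key, int valueCount) {
    String[] names = new String[valueCount];
    for (int i = 0; i < valueCount; i++) {
      names[i] = key + "." + i;
    }
    return names;
  }

  /** Names of the in-progress files: key.0.tmp, key.1.tmp, ... */
  static String[] dirtyNames(String key, int valueCount) {
    String[] names = new String[valueCount];
    for (int i = 0; i < valueCount; i++) {
      names[i] = key + "." + i + ".tmp";
    }
    return names;
  }
}
```

With valueCount equal to 2, a key abc maps to abc.0 and abc.1 for the clean files, and abc.0.tmp and abc.1.tmp for the dirty files.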

3.3 cleanupRunnable

The cleanup task, which trims the cache and rebuilds a compact journal:

```
private final Runnable cleanupRunnable = new Runnable() {
  public void run() {
    synchronized (DiskLruCache.this) {
      if (!initialized | closed) {
        return; // Nothing to do
      }

      try {
        trimToSize();
      } catch (IOException ignored) {
        mostRecentTrimFailed = true;
      }

      try {
        if (journalRebuildRequired()) {
          rebuildJournal();
          redundantOpCount = 0;
        }
      } catch (IOException e) {
        mostRecentRebuildFailed = true;
        journalWriter = Okio.buffer(Okio.blackhole());
      }
    }
  }
};
```

The trigger condition is in the journalRebuildRequired() method:

/\*\*

* We only rebuild the journal when it will halve the size of the journal and eliminate at least

* 2000 ops.

\*/

boolean journalRebuildRequired() {

final int redundantOpCompactThreshold = 2000;

return redundantOpCount >= redundantOpCompactThreshold

&& redundantOpCount >= lruEntries.size();

}

The rebuild runs only when the redundant records make up at least half of the journal (that is, there are at least as many redundant records as live entries) and there are at least 2000 redundant records.
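A few sample values make the two-part condition clearer. The JournalPolicy class below is a hypothetical standalone copy of the check, with the counters passed in as parameters rather than read from DiskLruCache fields.

```java
// Hypothetical standalone version of the journalRebuildRequired() check.
final class JournalPolicy {
  static final int REDUNDANT_OP_COMPACT_THRESHOLD = 2000;

  static boolean rebuildRequired(int redundantOpCount, int liveEntryCount) {
    return redundantOpCount >= REDUNDANT_OP_COMPACT_THRESHOLD
        && redundantOpCount >= liveEntryCount;
  }
}
```

For instance, 2500 redundant records with 1000 live entries trigger a rebuild, while 2500 redundant records with 3000 live entries do not, and neither do 1999 redundant records with 10 live entries.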

3.4 Snapshot

A Snapshot records the content of a specific cache entry at a particular point in time. Every request to DiskLruCache returns a snapshot of the target cache entry. The related logic is in DiskLruCache.get:

\[DiskLruCache.java\]

```
/**
 * Returns a snapshot of the entry named {@code key}, or null if it doesn't exist or is not
 * currently readable. If a value is returned, it is moved to the head of the LRU queue.
 */
public synchronized Snapshot get(String key) throws IOException {
  initialize();

  checkNotClosed();
  validateKey(key);
  Entry entry = lruEntries.get(key);
  if (entry == null || !entry.readable) return null;

  Snapshot snapshot = entry.snapshot();
  if (snapshot == null) return null;

  redundantOpCount++;
  // Record the read in the journal.
  journalWriter.writeUtf8(READ).writeByte(' ').writeUtf8(key).writeByte('\n');
  if (journalRebuildRequired()) {
    executor.execute(cleanupRunnable);
  }

  return snapshot;
}
```

3.5 lruEntries

The container that manages cache entries is a LinkedHashMap. The access-ordered mode of LinkedHashMap is what gives the cache its LRU replacement behavior.
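The effect of the access-ordered constructor, the same new LinkedHashMap<>(0, 0.75f, true) call used for lruEntries, can be seen in a small standalone demo. The LruOrderDemo class is hypothetical and only illustrates the JDK behavior.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;

// Hypothetical demo of java.util.LinkedHashMap in access-order mode.
final class LruOrderDemo {
  static List<String> keysAfterAccess() {
    // The third constructor argument (true) selects access order over insertion order.
    LinkedHashMap<String, Integer> map = new LinkedHashMap<>(0, 0.75f, true);
    map.put("a", 1);
    map.put("b", 2);
    map.put("c", 3);
    map.get("a"); // reading "a" moves it to the most-recently-used end
    return new ArrayList<>(map.keySet()); // iteration runs from eldest to newest
  }
}
```

After the read, iteration order is b, c, a, so the first element of the map is always the least recently used entry and the natural candidate for eviction.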

3.6 FileSystem

FileSystem wraps File operations using Okio, which simplifies I/O.

3.7 DiskLruCache.edit

DiskLruCache can be seen as the concrete implementation of Cache at the file-system level, so their basic operations map onto each other one to one:

Cache.get() -> DiskLruCache.get()

Cache.put() -> DiskLruCache.edit() // cache insert

Cache.remove() -> DiskLruCache.remove()

Cache.update() -> DiskLruCache.edit() // cache update

Of these, get was covered in 3.4 and remove is relatively simple. put and update follow roughly the same logic, so for reasons of space only Cache.put is covered here; for the other operations, read the code directly:

\[okhttp3.Cache.java\]

```
CacheRequest put(Response response) {
  String requestMethod = response.request().method();

  if (HttpMethod.invalidatesCache(response.request().method())) {
    try {
      remove(response.request());
    } catch (IOException ignored) {
      // The cache cannot be written.
    }
    return null;
  }
  if (!requestMethod.equals("GET")) {
    // Don't cache non-GET responses. We're technically allowed to cache
    // HEAD requests and some POST requests, but the complexity of doing
    // so is high and the benefit is low.
    return null;
  }

  if (HttpHeaders.hasVaryAll(response)) {
    return null;
  }

  Entry entry = new Entry(response);
  DiskLruCache.Editor editor = null;
  try {
    editor = cache.edit(key(response.request().url()));
    if (editor == null) {
      return null;
    }
    entry.writeTo(editor);
    return new CacheRequestImpl(editor);
  } catch (IOException e) {
    abortQuietly(editor);
    return null;
  }
}
```
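The key(...) call in put derives the entry key from the request URL; section 3.2 describes this key as the MD5 string of the URL. A minimal JDK-only sketch of such a derivation follows. The CacheKey class is hypothetical, and hashing the UTF-8 bytes of the URL and hex-encoding the digest is an assumption about the details, not a copy of the OkHttp code.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper: lowercase hex MD5 of a URL string, using only the JDK.
final class CacheKey {
  static String md5Hex(String url) {
    try {
      MessageDigest md5 = MessageDigest.getInstance("MD5");
      byte[] digest = md5.digest(url.getBytes(StandardCharsets.UTF_8));
      StringBuilder hex = new StringBuilder(digest.length * 2);
      for (byte b : digest) {
        hex.append(String.format("%02x", b)); // two hex digits per byte
      }
      return hex.toString();
    } catch (NoSuchAlgorithmException e) {
      // MD5 is one of the algorithms every Java platform must provide.
      throw new AssertionError("MD5 not available", e);
    }
  }
}
```

The result is always 32 hexadecimal characters, which matches the shape of the keys in the sample journal in 3.1.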

As you can see, the core of this method is editor = cache.edit(key(response.request().url())); the relevant code is in DiskLruCache.edit:

\[okhttp3.internal.cache.DiskLruCache.java\]

```
synchronized Editor edit(String key, long expectedSequenceNumber) throws IOException {
  initialize();

  checkNotClosed();
  validateKey(key);
  Entry entry = lruEntries.get(key);
  if (expectedSequenceNumber != ANY_SEQUENCE_NUMBER && (entry == null
      || entry.sequenceNumber != expectedSequenceNumber)) {
    return null; // Snapshot is stale.
  }
  if (entry != null && entry.currentEditor != null) {
    return null; // Another edit of this cache entry is already in progress.
  }
  if (mostRecentTrimFailed || mostRecentRebuildFailed) {
    // The OS has become our enemy! If the trim job failed, it means we are storing more data than
    // requested by the user. Do not allow edits so we do not go over that limit any further. If
    // the journal rebuild failed, the journal writer will not be active, meaning we will not be
```

last

Promise everyone to prepare for the golden three silver four, the real question of the big factory interview is coming!

This information will be published on blogs and forums since the spring recruit. Collect high-quality interview questions in Android Development on the website, and then find the best solution throughout the network. Each interview question is a 100% real question + the best answer. Package knowledge context + many details.

You can save the time of searching information on the Internet to learn, and you can also share it with your friends to learn together.

CodeChina open source project: Android learning notes summary + mobile architecture Video + big factory interview real questions + project actual combat source code

"960 most complete Android Development Notes in the whole network"

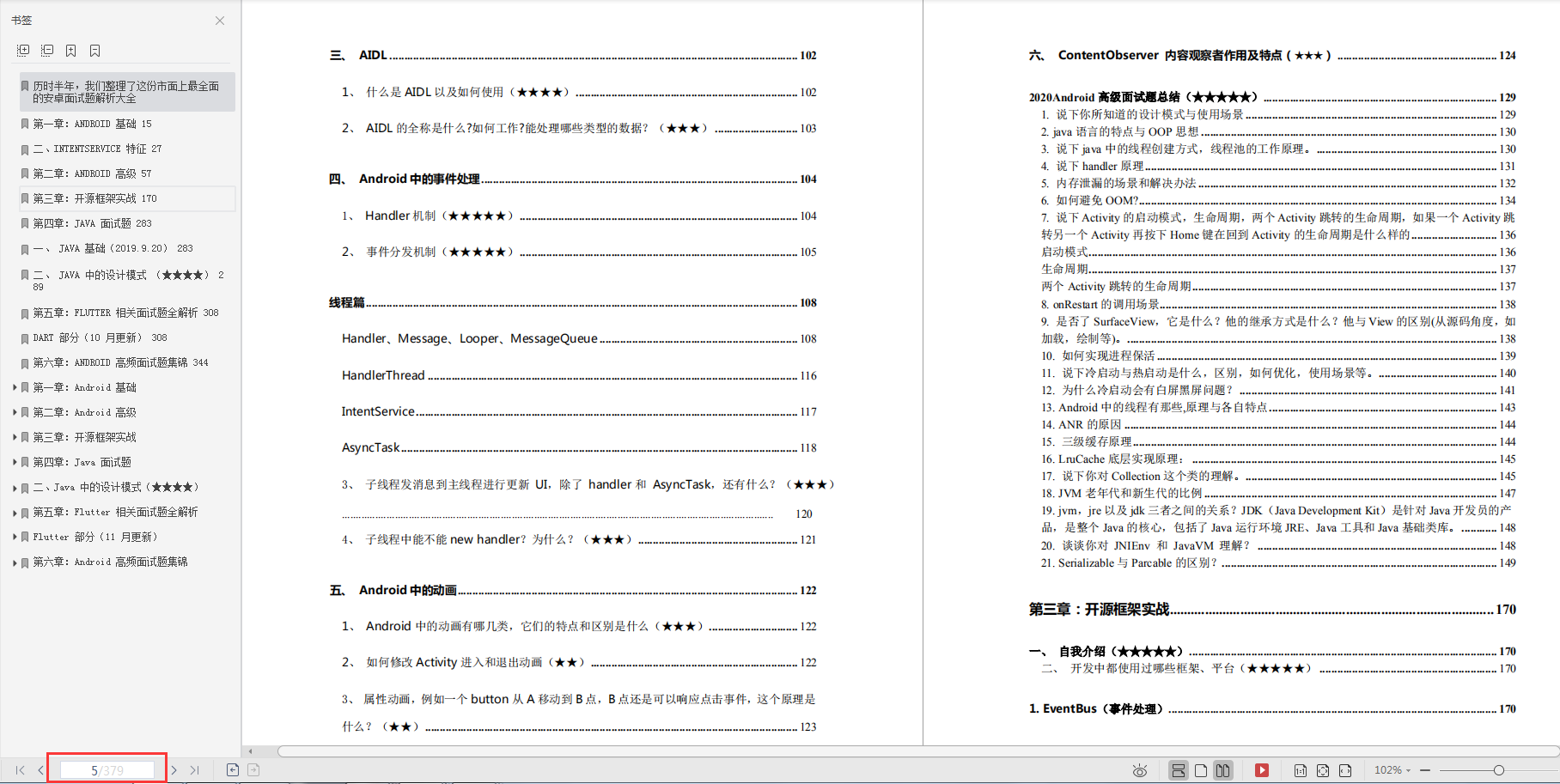

379 pages of Android development interview

It includes the questions asked in the interview of front-line Internet companies such as Tencent, Baidu, Xiaomi, Ali, LETV, meituan, 58, cheetah, 360, Sina and Sohu. Familiarity with the knowledge points listed in this article will greatly increase the probability of passing the first two rounds of technical interviews.

How to use it?

1. You can directly look through the required knowledge points through the directory index to find out omissions and fill vacancies.

2. The number of five pointed stars indicates the frequency of interview questions and represents the important recommendation index

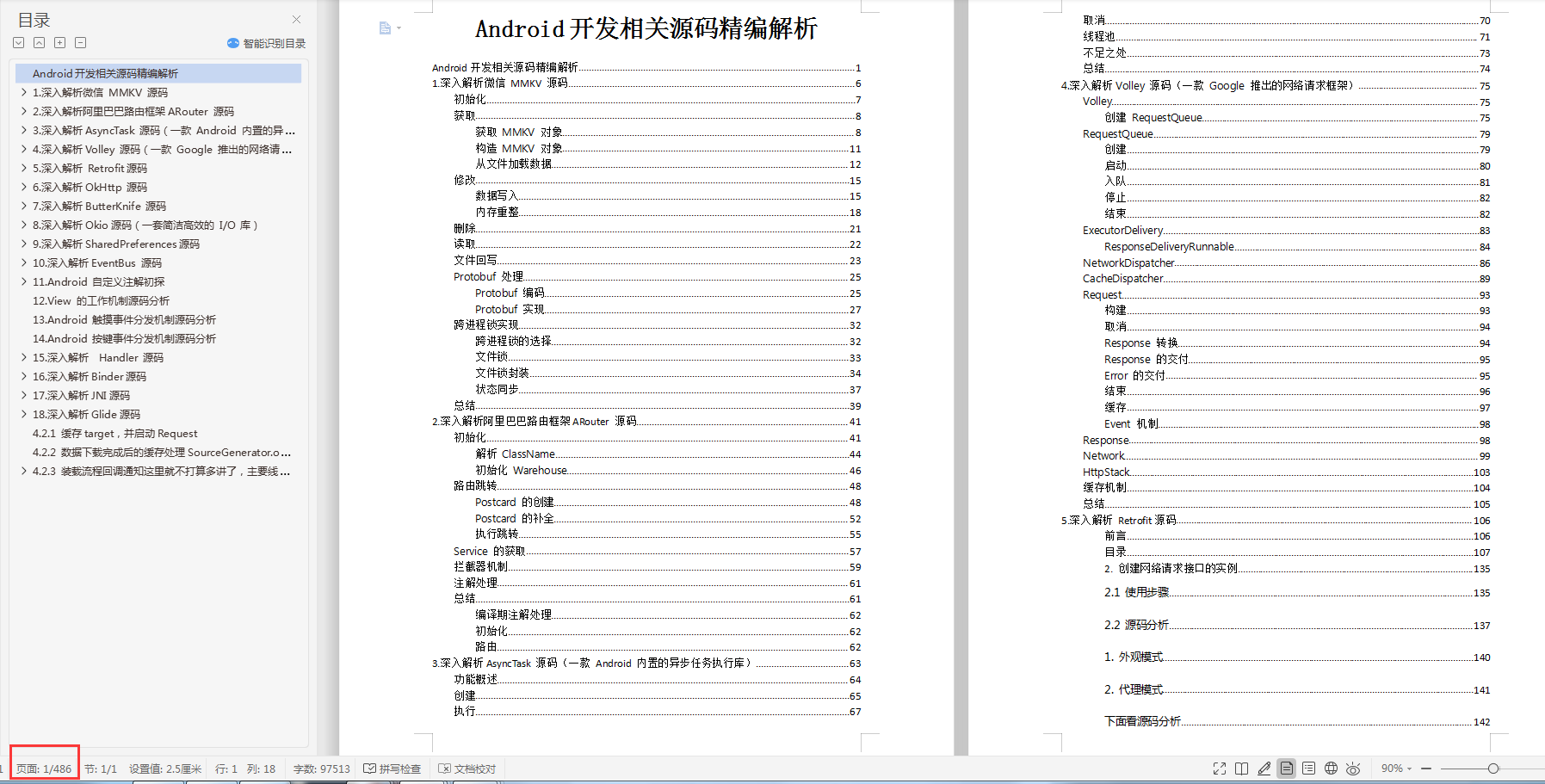

507 page Android development related source code analysis

As long as programmers, whether Java or Android, don't read the source code and only look at the API documents, they will just stay superficial, which is unfavorable to the establishment and completion of our knowledge system and the improvement of practical technology.

What can really exercise your ability is to read the source code directly, not only limited to reading the source code of major systems, but also various excellent open source libraries.

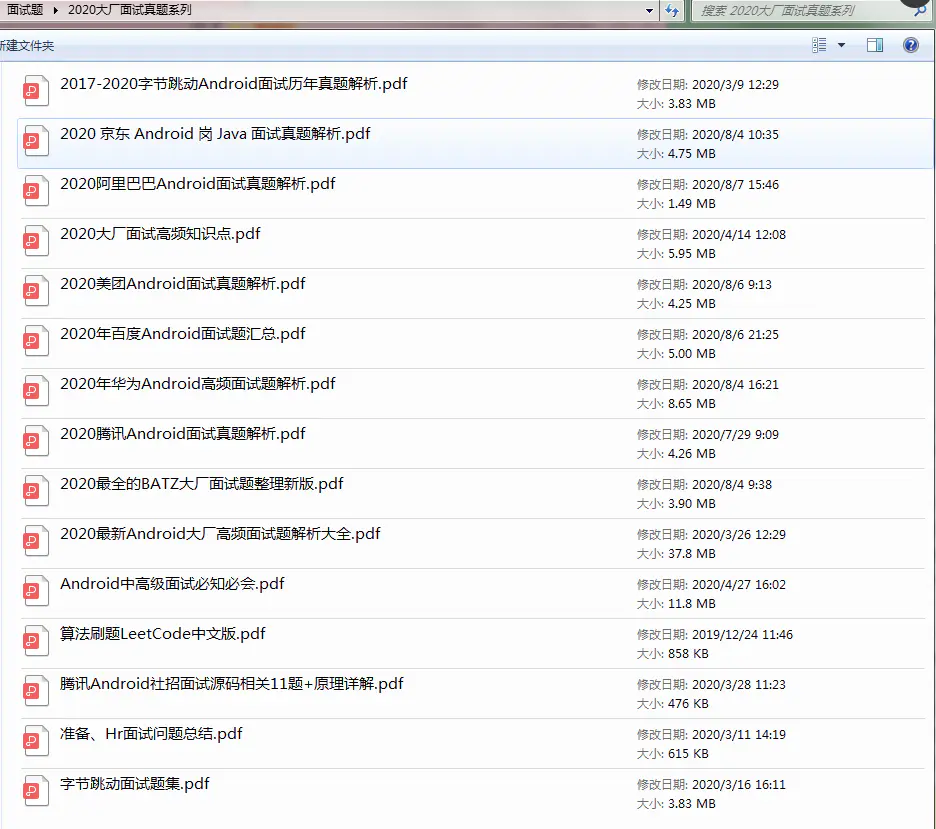

Analysis of real interview questions in 2020-2021 for big BAT companies such as Tencent, byte beating, Alibaba and Baidu

Data collection is not easy. If you like this article or it is helpful to you, you might as well like it and forward it. The article will be updated continuously. Absolutely dry!!!

rnal rebuild failed, the journal writer will not be active, meaning we will not be

last

Promise everyone to prepare for the golden three silver four, the real question of the big factory interview is coming!

This information will be published on blogs and forums since the spring recruit. Collect high-quality interview questions in Android Development on the website, and then find the best solution throughout the network. Each interview question is a 100% real question + the best answer. Package knowledge context + many details.

You can save the time of searching information on the Internet to learn, and you can also share it with your friends to learn together.

CodeChina open source project: Android learning notes summary + mobile architecture Video + big factory interview real questions + project actual combat source code

"960 most complete Android Development Notes in the whole network"

[external chain picture transferring... (img-dFEM1yMr-1630937247575)]

379 pages of Android development interview

It includes the questions asked in the interview of front-line Internet companies such as Tencent, Baidu, Xiaomi, Ali, LETV, meituan, 58, cheetah, 360, Sina and Sohu. Familiarity with the knowledge points listed in this article will greatly increase the probability of passing the first two rounds of technical interviews.

How to use it?

1. You can directly look through the required knowledge points through the directory index to find out omissions and fill vacancies.

2. The number of five pointed stars indicates the frequency of interview questions and represents the important recommendation index

[external chain picture transferring... (img-8bZ9AaYi-1630937247577)]

507 page Android development related source code analysis

As long as programmers, whether Java or Android, don't read the source code and only look at the API documents, they will just stay superficial, which is unfavorable to the establishment and completion of our knowledge system and the improvement of practical technology.

What can really exercise your ability is to read the source code directly, not only limited to reading the source code of major systems, but also various excellent open source libraries.

[external chain picture transferring... (img-PtK5Axnq-1630937247579)]

Analysis of real interview questions in 2020-2021 for big BAT companies such as Tencent, byte beating, Alibaba and Baidu

[external chain picture transferring... (img-VkHITIw1-1630937247581)]

Data collection is not easy. If you like this article or it is helpful to you, you might as well like it and forward it. The article will be updated continuously. Absolutely dry!!!