V. text preprocessing

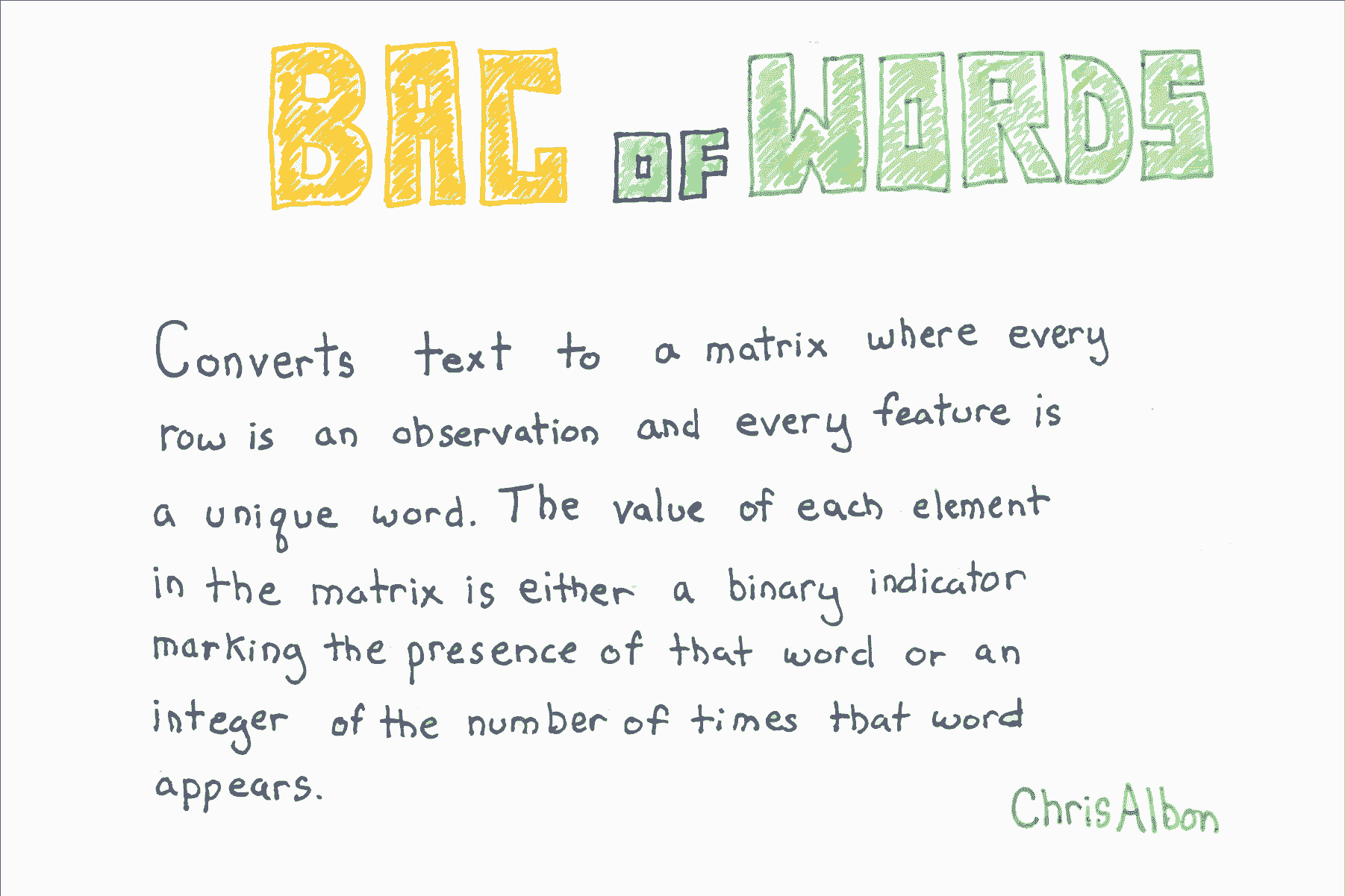

Word bag

image

# Loading Library import numpy as np from sklearn.feature_extraction.text import CountVectorizer import pandas as pd # Create text text_data = np.array(['I love Brazil. Brazil!', 'Sweden is best', 'Germany beats both']) # Creating feature matrix of word bag count = CountVectorizer() bag_of_words = count.fit_transform(text_data) # Show feature matrix bag_of_words.toarray() ''' array([[0, 0, 0, 2, 0, 0, 1, 0], [0, 1, 0, 0, 0, 1, 0, 1], [1, 0, 1, 0, 1, 0, 0, 0]], dtype=int64) ''' # Get feature name feature_names = count.get_feature_names() # View feature names feature_names # ['beats', 'best', 'both', 'brazil', 'germany', 'is', 'love', 'sweden'] # Create data frame pd.DataFrame(bag_of_words.toarray(), columns=feature_names)

| beats | best | both | brazil | germany | is | love | sweden | |

|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 0 |

| 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 |

| 2 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 0 |

Parsing HTML

# Loading Library from bs4 import BeautifulSoup # Create some HTML code html = "<div class='full_name'><span style='font-weight:bold'>Masego</span> Azra</div>" # Parsing html soup = BeautifulSoup(html, "lxml") # Find the < div > with the "full_name" class to display the text soup.find("div", { "class" : "full_name" }).text # 'Masego Azra'

Remove punctuation

# Loading Library import string import numpy as np # Create text text_data = ['Hi!!!! I. Love. This. Song....', '10000% Agree!!!! #LoveIT', 'Right?!?!'] # Create a function and remove all punctuation using string.punctuation def remove_punctuation(sentence: str) -> str: return sentence.translate(str.maketrans('', '', string.punctuation)) # Application function [remove_punctuation(sentence) for sentence in text_data] # ['Hi I Love This Song', '10000 Agree LoveIT', 'Right']

Remove stop word

# Loading Library from nltk.corpus import stopwords # The first time you need to download a collection of stop words import nltk nltk.download('stopwords') ''' [nltk_data] Downloading package stopwords to [nltk_data] /Users/chrisalbon/nltk_data... [nltk_data] Package stopwords is already up-to-date! True ''' # Create word Tags tokenized_words = ['i', 'am', 'going', 'to', 'go', 'to', 'the', 'store', 'and', 'park'] # Load stop words stop_words = stopwords.words('english') # Show stop words stop_words[:5] # ['i', 'me', 'my', 'myself', 'we'] # Remove stop word [word for word in tokenized_words if word not in stop_words] # ['going', 'go', 'store', 'park']

Replacement character

# Import library import re # Create text text_data = ['Interrobang. By Aishwarya Henriette', 'Parking And Going. By Karl Gautier', 'Today Is The night. By Jarek Prakash'] # Remove period remove_periods = [string.replace('.', '') for string in text_data] # Display text remove_periods ''' ['Interrobang By Aishwarya Henriette', 'Parking And Going By Karl Gautier', 'Today Is The night By Jarek Prakash'] ''' # Create function def replace_letters_with_X(string: str) -> str: return re.sub(r'[a-zA-Z]', 'X', string) # Application function [replace_letters_with_X(string) for string in remove_periods] ''' ['XXXXXXXXXXX XX XXXXXXXXX XXXXXXXXX', 'XXXXXXX XXX XXXXX XX XXXX XXXXXXX', 'XXXXX XX XXX XXXXX XX XXXXX XXXXXXX'] '''

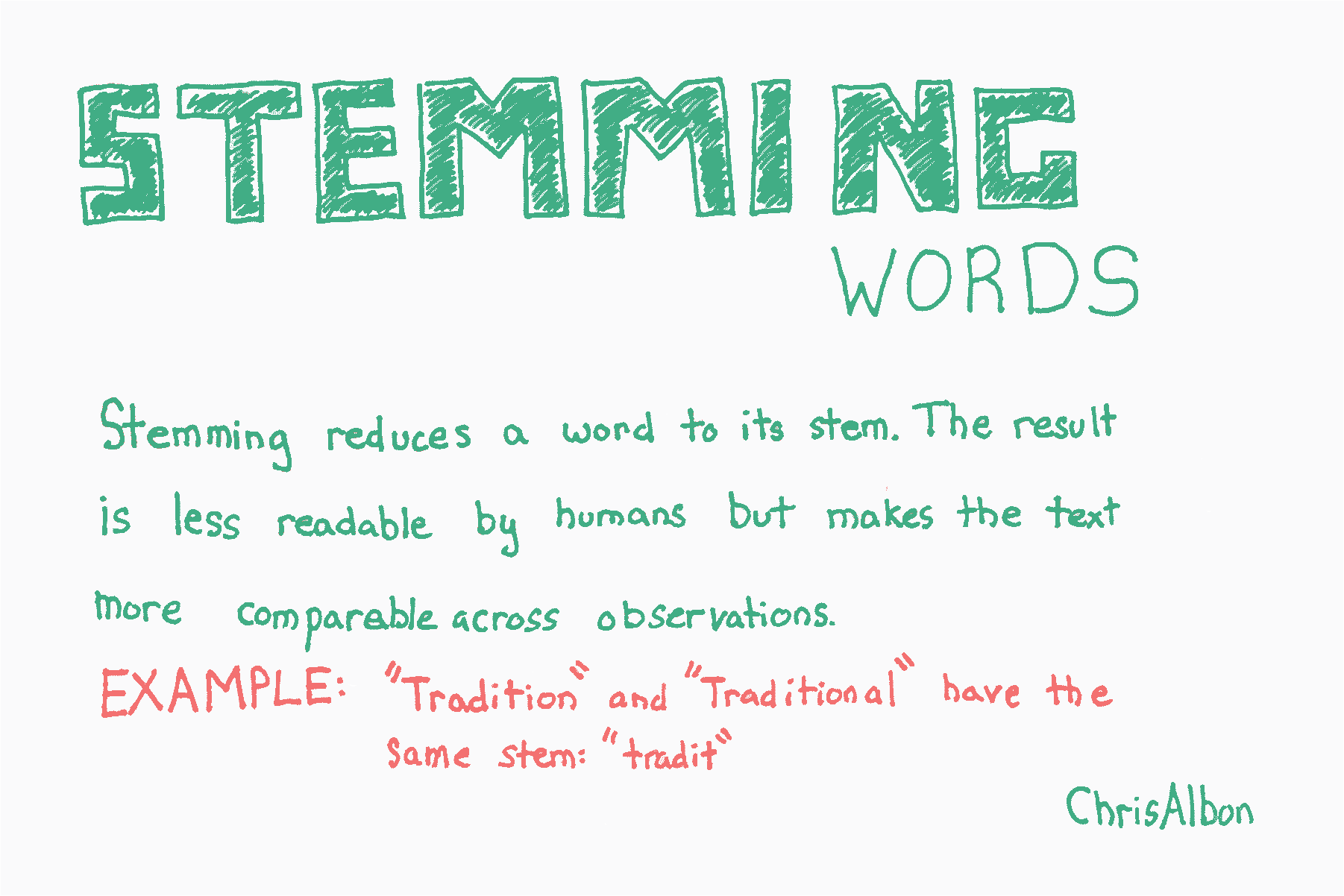

Stem extraction

image

# Loading Library from nltk.stem.porter import PorterStemmer # Create word Tags tokenized_words = ['i', 'am', 'humbled', 'by', 'this', 'traditional', 'meeting']

Stem extraction simplifies words into stem by recognizing and deleting affixes (e.g. gerunds) while maintaining the basic meaning of words. The porter Stemmer of NLTK implements the widely used Porter Stemmer algorithm.

# Create extractor porter = PorterStemmer() # Application extractor [porter.stem(word) for word in tokenized_words] # ['i', 'am', 'humbl', 'by', 'thi', 'tradit', 'meet']

Remove blank

# Create text text_data = [' Interrobang. By Aishwarya Henriette ', 'Parking And Going. By Karl Gautier', ' Today Is The night. By Jarek Prakash '] # Remove blank strip_whitespace = [string.strip() for string in text_data] # Display text strip_whitespace ''' ['Interrobang. By Aishwarya Henriette', 'Parking And Going. By Karl Gautier', 'Today Is The night. By Jarek Prakash'] '''

Part of speech tagging

# Loading Library from nltk import pos_tag from nltk import word_tokenize # Create text text_data = "Chris loved outdoor running" # Part of speech tagger with pre training text_tagged = pos_tag(word_tokenize(text_data)) # Display part of speech text_tagged # [('Chris', 'NNP'), ('loved', 'VBD'), ('outdoor', 'RP'), ('running', 'VBG')]

The output is a list of tuples that contain tags for words and parts of speech. NLTK uses Penn Treebank part of speech tags.

| Label | Part of speech |

|---|---|

| NNP | Proper noun, singular |

| NN | Noun, singular or collective |

| RB | adverb |

| VBD | Verb, past tense |

| VBG | Verb, gerund or present participle |

| JJ | Adjective |

| PRP | Personal pronoun |

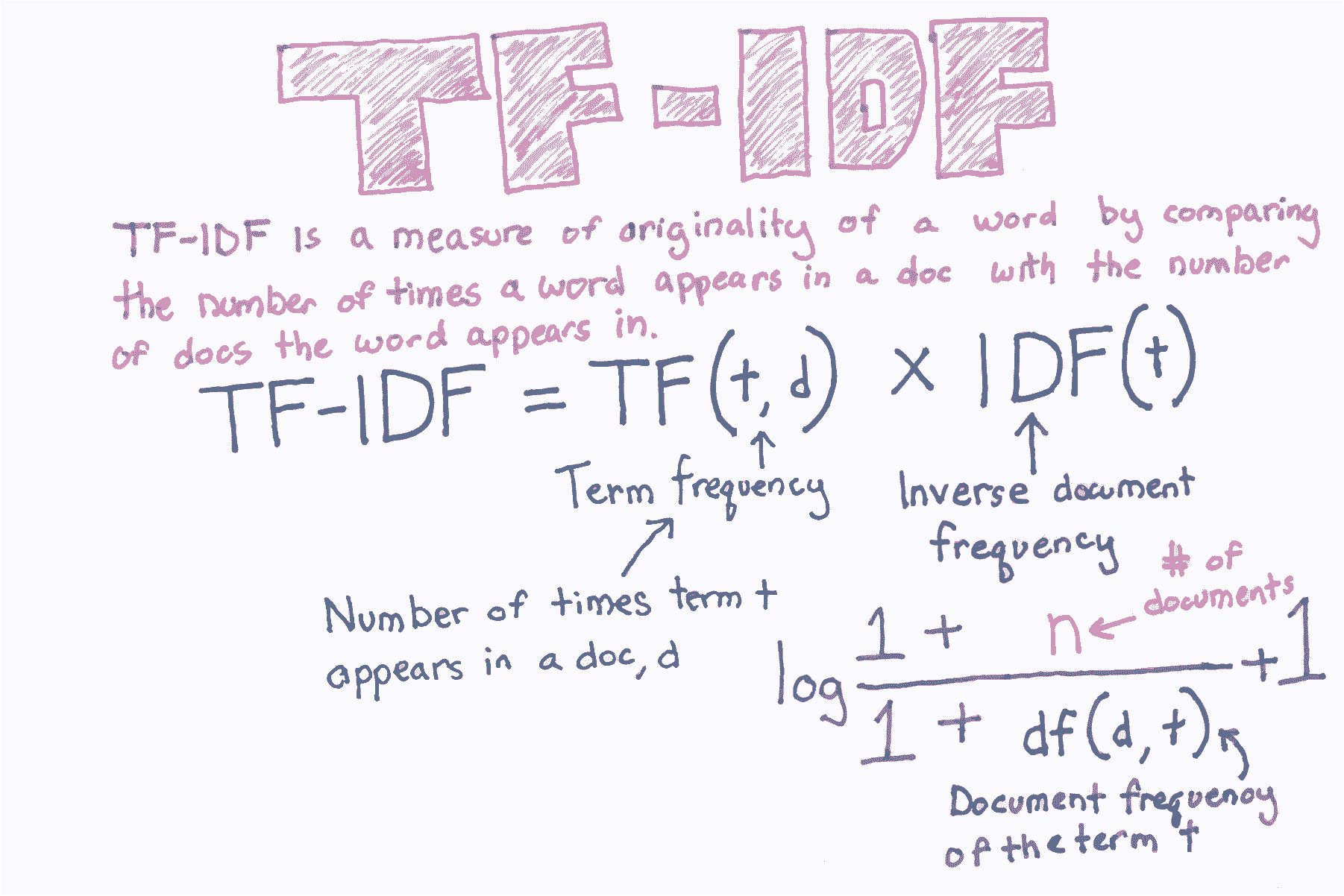

TF-IDF

image

# Loading Library import numpy as np from sklearn.feature_extraction.text import TfidfVectorizer import pandas as pd # Create text text_data = np.array(['I love Brazil. Brazil!', 'Sweden is best', 'Germany beats both']) # Creating TF IDF feature matrix tfidf = TfidfVectorizer() feature_matrix = tfidf.fit_transform(text_data) # Show TF IDF characteristic matrix feature_matrix.toarray() ''' array([[ 0. , 0. , 0. , 0.89442719, 0. , 0. , 0.4472136 , 0. ], [ 0. , 0.57735027, 0. , 0. , 0. , 0.57735027, 0. , 0.57735027], [ 0.57735027, 0. , 0.57735027, 0. , 0.57735027, 0. , 0. , 0. ]]) ''' # Show TF IDF characteristic matrix tfidf.get_feature_names() # ['beats', 'best', 'both', 'brazil', 'germany', 'is', 'love', 'sweden'] # Create data frame pd.DataFrame(feature_matrix.toarray(), columns=tfidf.get_feature_names())

| beats | best | both | brazil | germany | is | love | sweden | |

|---|---|---|---|---|---|---|---|---|

| 0 | 0.00000 | 0.00000 | 0.00000 | 0.894427 | 0.00000 | 0.00000 | 0.447214 | 0.00000 |

| 1 | 0.00000 | 0.57735 | 0.00000 | 0.000000 | 0.00000 | 0.57735 | 0.000000 | 0.57735 |

| 2 | 0.57735 | 0.00000 | 0.57735 | 0.000000 | 0.57735 | 0.00000 | 0.000000 | 0.00000 |

Text segmentation

# Loading Library from nltk.tokenize import word_tokenize, sent_tokenize # Create text string = "The science of today is the technology of tomorrow. Tomorrow is today." # Segmentation of text word_tokenize(string) ''' ['The', 'science', 'of', 'today', 'is', 'the', 'technology', 'of', 'tomorrow', '.', 'Tomorrow', 'is', 'today', '.'] ''' # Participle sentences sent_tokenize(string) # ['The science of today is the technology of tomorrow.', 'Tomorrow is today.']