Previously I wrote about a classification problem solved with a Bayesian classifier. This time I use logistic regression (LR) to solve a classification problem, calling an existing library rather than implementing the model by hand. The first step is to collect data. Here I used the data set from someone else's small project (you can also collect your own data set to classify). The format is as follows:

1	Take the students to buy 300223 Beijing Junzheng at about 15 yuan this morning. It is expected to rise sharply tomorrow. Please pay attention not to buy it! Gold stock hotline: 400-6289775 modern investment [Guofu consulting]

0	At 14:26 on the 13th, your card ending in 7544 had an online banking expenditure (consumption) of 158 yuan. Industrial and Commercial Bank of China

Each line holds a label (1 for spam SMS, 0 for normal SMS), a tab character, and the message text.
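As a minimal sketch of how one such line can be parsed, assuming the label and the text are separated by a single tab (which is what the `split('\t', 1)` call later in the code expects). The helper name `parse_line` and the sample string are made up for illustration:

```python
def parse_line(line):
    """Split one 'label<TAB>text' line into (int label, text)."""
    # maxsplit=1 keeps any further tabs inside the message text
    label, text = line.rstrip("\n").split("\t", 1)
    return int(label), text


# Hypothetical sample line in the same format as the data set
sample = "0\tYour card ending in 7544 was charged 158 yuan.\n"
label, text = parse_line(sample)
print(label)  # 0
print(text)   # Your card ending in 7544 was charged 158 yuan.
```

Using `rstrip("\n")` instead of `line[:-1]` avoids cutting off a real character when the last line of the file has no trailing line break.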

The steps are as follows: data preprocessing (this can be done in code or by hand in advance; the data set used here already has the required format, and invalid and garbled records have been cleaned up) -> split the data into a training set, a test set, and a prediction set -> build the word-frequency dictionary -> extract features and convert the text features to numbers -> train the LR model -> evaluate the model -> make predictions

Here is a brief introduction to one-hot encoding: assuming the vocabulary is I / love / you / China, each encoded vector has 4 digits. "I love you" is encoded as 1110 and "I love China" as 1101 (1 means the word is present, 0 means it is absent, so 1110 stands for I, love, and you).
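The encoding described above can be sketched in a few lines of plain Python. The function name `encode` and the English tokens are illustrative assumptions; the real code further down works on jieba tokens and a dictionary built from the training set:

```python
# Vocabulary from the example above, mapped to vector positions
vocabulary = ["I", "love", "you", "China"]
word2id = {word: index for index, word in enumerate(vocabulary)}


def encode(tokens, word2id):
    """Return a 0/1 vector marking which vocabulary words occur in tokens."""
    vector = [0] * len(word2id)
    for token in tokens:
        # Words outside the vocabulary are simply ignored
        if token in word2id:
            vector[word2id[token]] = 1
    return vector


print(encode(["I", "love", "you"], word2id))    # [1, 1, 1, 0]
print(encode(["I", "love", "China"], word2id))  # [1, 1, 0, 1]
```

Note that the vector records only whether a word is present, not how many times it occurs or in what order.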

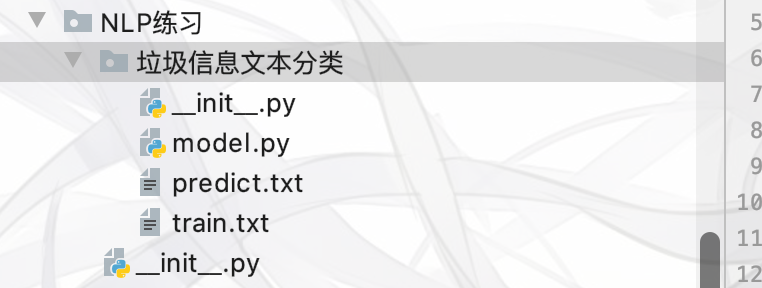

The file structure is as follows: the data files are train.txt and predict.txt, and the code file is model.py

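1. First, import the required libraries

import collections
import itertools
import operator
import array

import jieba
import sklearn
import sklearn.linear_model as linear_model
# The submodules used below must be imported explicitly
import sklearn.metrics
import sklearn.model_selection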

2. Write a data-processing function that separates the labels from the text (the test_size parameter of this function also acts as a flag: if it is 0 the data is not split, otherwise it is split into a training set and a test set)

# Separate labels from text, and split into training and test sets
def data_xy(data_path, test_size):
    assert 0 <= test_size < 1.0, '0 <= test_size < 1'
    # y holds the labels, x holds the segmented texts
    y = list()
    x = list()
    # Assumes the file is UTF-8 encoded; change the encoding if yours differs
    with open(data_path, "r", encoding="utf-8") as f:
        for line in f:
            # Strip the trailing line break, then split on the first tab;
            # see the data set for details
            label, text = line.rstrip('\n').split('\t', 1)
            # Segment the text with the jieba word segmentation tool
            x.append(list(jieba.cut(text)))
            y.append(int(label))
    # test_size == 0 means no split
    if test_size == 0:
        return x, y
    # When 0 < test_size < 1, use sklearn to split the data set
    # For details, please refer to https://blog.csdn.net/zhuqiang9607/article/details/83686308
    return sklearn.model_selection.train_test_split(
        x, y, test_size=test_size, random_state=1028)

3. Write a function that builds the word-frequency dictionary and filters out low-frequency words
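To see what this step produces, here is a toy run on made-up token lists. The data, the threshold, and the variable names are illustrative only and do not come from the data set:

```python
import collections
import itertools

# Three already-segmented "messages" (hypothetical data)
docs = [["free", "prize", "now"], ["free", "call", "now"], ["free", "lunch"]]

# Flatten the token lists and count how often each word occurs
counts = collections.Counter(itertools.chain.from_iterable(docs))

# Keep only words that occur at least min_freq times, most frequent first
min_freq = 2
kept = [word for word, count in counts.most_common() if count >= min_freq]

# Give each kept word its position in the feature vector
word2id = {word: index for index, word in enumerate(kept)}
print(word2id)  # {'free': 0, 'now': 1}
```

The function below applies the same idea to the real token lists.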

# Build the word-frequency dictionary and filter out low-frequency words
def build_dict(text_list, min_freq):
    # From the incoming list of segmented texts, keep the words that occur at
    # least min_freq times and return the dictionary word -> id
    assert 0 <= min_freq < 100, 'Please input a reasonable minimum word frequency'
    # itertools.chain flattens the texts into one sequence of words, and
    # collections.Counter counts how often each word occurs
    freq_dict = collections.Counter(itertools.chain(*text_list))
    # Sort by word frequency, highest first
    freq_list = sorted(freq_dict.items(), key=operator.itemgetter(1), reverse=True)
    # Filter out low-frequency words
    words, _ = zip(*filter(lambda wc: wc[1] >= min_freq, freq_list))
    return dict(zip(words, range(len(words))))

4. Write a function that converts text to one-hot vectors

# Convert incoming text to vectors (one-hot encoding)
def text2vect(text_list, word2id):
    # The returned result size is [n_samples, dict_size]
    X = list()
    for text in text_list:
        # Create a zero vector whose length equals the dictionary size
        vect = array.array('l', [0] * len(word2id))
        for word in text:
            # Skip words that are not in the dictionary
            if word not in word2id:
                continue
            vect[word2id[word]] = 1
        X.append(vect)
    return X

5. Write a function that returns the model's evaluation results

def evaluate(model, X, y):
    # Evaluate the model on a data set and return the accuracy and the AUC value
    accuracy = model.score(X, y)
    fpr, tpr, thresholds = sklearn.metrics.roc_curve(y, model.predict_proba(X)[:, 1], pos_label=1)
    return accuracy, sklearn.metrics.auc(fpr, tpr)
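For readers who want to see what the two numbers mean, here is a small hand-rolled sketch in plain Python. It is not how sklearn computes them internally; it uses the equivalent definition of AUC as the fraction of (positive, negative) pairs in which the positive sample gets the higher score, with ties counted as half. The function names and the toy data are assumptions for illustration:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def auc(y_true, scores):
    """Pairwise AUC: share of (positive, negative) pairs ranked correctly."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Toy labels and predicted probabilities of class 1 (hypothetical values)
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

print(accuracy(y_true, y_pred))  # 0.75
print(auc(y_true, scores))       # 0.75
```

Accuracy depends on the 0.5 decision threshold, while AUC depends only on how the scores rank the samples, which is why the two are reported separately.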

6. Main function

# Main function
if __name__ == "__main__":
    # Supervised model: label 0 --> normal SMS, label 1 --> spam SMS
    data = "train.txt"
    predict = "predict.txt"

    # step 1: segment the text and split it into training, test, and prediction sets
    X_train, X_test, Y_train, Y_test = data_xy(data, 0.2)
    X_predict, Y_predict = data_xy(predict, 0)

    # step 2: create the dictionary
    word2id = build_dict(X_train, min_freq=5)

    # step 3: extract features and convert the text features to numbers.
    # The bag-of-words model is used here; alternatives include TF-IDF, word2vec, etc.
    X_train = text2vect(X_train, word2id)
    X_test = text2vect(X_test, word2id)
    X_predict = text2vect(X_predict, word2id)

    # step 4: train the model. Logistic regression is used for this binary
    # classification problem, calling the LR model provided by sklearn directly.
    # For a detailed parameter description, see the API reference on sklearn's official website
    lr = linear_model.LogisticRegression(C=1)
    lr.fit(X_train, Y_train)

    # step 5: evaluate the model on the training set
    accuracy, auc = evaluate(lr, X_train, Y_train)
    print("Training set accuracy:", accuracy * 100)
    print("Training set AUC Value:", auc)

    # step 6: evaluate the model on the test set
    accuracy, auc = evaluate(lr, X_test, Y_test)
    print("Test set accuracy:", accuracy * 100)
    print("test AUC Value:", auc)

    # step 7: make predictions and print the predicted and actual labels
    label_predict = lr.predict(X_predict)
    # label_predict and Y_predict have different types, so their printed
    # formats differ. Check the types and then unify them
    # print(type(label_predict),type(Y_predict))
    # <class 'numpy.ndarray'> <class 'list'>
    print("Model forecast label:", label_predict.tolist())
    print("Data actual label:", Y_predict)

The complete code is as follows:

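import collections
import itertools
import operator
import array

import jieba
import sklearn
import sklearn.linear_model as linear_model
# The submodules used below must be imported explicitly
import sklearn.metrics
import sklearn.model_selection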

# Separate labels from text, and split into training and test sets
def data_xy(data_path, test_size):
    assert 0 <= test_size < 1.0, '0 <= test_size < 1'
    # y holds the labels, x holds the segmented texts
    y = list()
    x = list()
    # Assumes the file is UTF-8 encoded; change the encoding if yours differs
    with open(data_path, "r", encoding="utf-8") as f:
        for line in f:
            # Strip the trailing line break, then split on the first tab;
            # see the data set for details
            label, text = line.rstrip('\n').split('\t', 1)
            # Segment the text with the jieba word segmentation tool
            x.append(list(jieba.cut(text)))
            y.append(int(label))
    # test_size == 0 means no split
    if test_size == 0:
        return x, y
    # When 0 < test_size < 1, use sklearn to split the data set
    # For details, please refer to https://blog.csdn.net/zhuqiang9607/article/details/83686308
    return sklearn.model_selection.train_test_split(
        x, y, test_size=test_size, random_state=1028)

# Build the word-frequency dictionary and filter out low-frequency words
def build_dict(text_list, min_freq):
    # From the incoming list of segmented texts, keep the words that occur at
    # least min_freq times and return the dictionary word -> id
    assert 0 <= min_freq < 100, 'Please input a reasonable minimum word frequency'
    # itertools.chain flattens the texts into one sequence of words, and
    # collections.Counter counts how often each word occurs
    freq_dict = collections.Counter(itertools.chain(*text_list))
    # Sort by word frequency, highest first
    freq_list = sorted(freq_dict.items(), key=operator.itemgetter(1), reverse=True)
    # Filter out low-frequency words
    words, _ = zip(*filter(lambda wc: wc[1] >= min_freq, freq_list))
    return dict(zip(words, range(len(words))))

# Convert incoming text to vectors (one-hot encoding)
def text2vect(text_list, word2id):
    # The returned result size is [n_samples, dict_size]
    X = list()
    for text in text_list:
        # Create a zero vector whose length equals the dictionary size
        vect = array.array('l', [0] * len(word2id))
        for word in text:
            # Skip words that are not in the dictionary
            if word not in word2id:
                continue
            vect[word2id[word]] = 1
        X.append(vect)
    return X

def evaluate(model, X, y):
    # Evaluate the model on a data set and return the accuracy and the AUC value
    accuracy = model.score(X, y)
    fpr, tpr, thresholds = sklearn.metrics.roc_curve(y, model.predict_proba(X)[:, 1], pos_label=1)
    return accuracy, sklearn.metrics.auc(fpr, tpr)

# Main function
if __name__ == "__main__":
    # Supervised model: label 0 --> normal SMS, label 1 --> spam SMS
    data = "train.txt"
    predict = "predict.txt"

    # step 1: segment the text and split it into training, test, and prediction sets
    X_train, X_test, Y_train, Y_test = data_xy(data, 0.2)
    X_predict, Y_predict = data_xy(predict, 0)

    # step 2: create the dictionary
    word2id = build_dict(X_train, min_freq=5)

    # step 3: extract features and convert the text features to numbers.
    # The bag-of-words model is used here; alternatives include TF-IDF, word2vec, etc.
    X_train = text2vect(X_train, word2id)
    X_test = text2vect(X_test, word2id)
    X_predict = text2vect(X_predict, word2id)

    # step 4: train the model. Logistic regression is used for this binary
    # classification problem, calling the LR model provided by sklearn directly.
    # For a detailed parameter description, see the API reference on sklearn's official website
    lr = linear_model.LogisticRegression(C=1)
    lr.fit(X_train, Y_train)

    # step 5: evaluate the model on the training set
    accuracy, auc = evaluate(lr, X_train, Y_train)
    print("Training set accuracy:", accuracy * 100)
    print("Training set AUC Value:", auc)

    # step 6: evaluate the model on the test set
    accuracy, auc = evaluate(lr, X_test, Y_test)
    print("Test set accuracy:", accuracy * 100)
    print("test AUC Value:", auc)

    # step 7: make predictions and print the predicted and actual labels
    label_predict = lr.predict(X_predict)
    # label_predict and Y_predict have different types, so their printed
    # formats differ. Check the types and then unify them
    # print(type(label_predict),type(Y_predict))
    # <class 'numpy.ndarray'> <class 'list'>
    print("Model forecast label:", label_predict.tolist())
    print("Data actual label:", Y_predict)

Result of running the code:

Training set accuracy: 99.40760993392573
AUC value of training set: 0.99955892953795253
Test set accuracy: 96.81093394077449
Test AUC value: 0.9925394550652651
Model forecast label: [0, 1, 0, 0, 1, 0, 1, 1, 0, 0]
Data actual label: [0, 1, 0, 0, 1, 0, 1, 1, 0]

(The code above can be run directly. You can crawl suitable data yourself, as long as it follows the format shown in the example.)