Shared memory is one of the main reasons nginx achieves high performance, and it is used chiefly for file caching. This article first shows how shared memory is configured, then explains how nginx manages it.

1. Use examples

In nginx, shared memory for the file cache is declared with the following directive:

proxy_cache_path /Users/Mike/nginx-cache levels=1:2 keys_zone=one:10m max_size=10g inactive=60m use_temp_path=off;

This declares a single shared memory zone named one, 10 MB in size, for a cache that may occupy up to 10 GB on disk. The parameters have the following meanings:

- /Users/Mike/nginx-cache: the path parameter, which specifies the directory where cached files are stored. Files are created here because nginx can save the response of an upstream service to a file. When the same request arrives again, nginx finds the file through the key held in shared memory and answers the client from it directly.

- levels: if every cached file were placed in a single directory, file access would slow down as the number of files grows. Spreading the files over several levels of subdirectories avoids this, and the levels parameter specifies how those subdirectories are named. Suppose nginx generates a file named e0bd866067979426a92306b1b98ad9 for some response from the upstream service. With levels=1:2 as above, nginx works from the end of the file name, taking one character (9) as the first-level subdirectory name and then the next two characters (ad) as the second-level subdirectory name.

- keys_zone: specifies the name of the shared memory zone, one here. The 10m that follows means the shared memory used to store keys is 10 MB in size.

- max_size: specifies the maximum total size of the cached files on disk. This is a limit on the cache as a whole, not on the shared memory zone.

- inactive: specifies how long cached data may go without being accessed. Data that no request touches during this period is evicted by the LRU algorithm.

- use_temp_path: specifies whether generated files are first written to a temporary directory and then moved to the cache directory.
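The levels rule described above can be sketched as a small standalone function. This is only an illustration under stated assumptions: `cache_file_path` and its parameters are hypothetical names, not nginx APIs, and the function simply takes directory names from the end of the file name as the list describes.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: build "<root>/<dir1>/<dir2>/.../<name>".
 * levels[i] is the number of characters used for the i-th subdirectory;
 * the characters are taken from the END of name, moving backwards.
 * Returns 0 on success, -1 if name is too short or out is too small. */
int cache_file_path(const char *root, const char *name,
                    const int *levels, int nlevels,
                    char *out, size_t outsz)
{
    size_t pos = strlen(name);   /* one past the last unused character */
    size_t used;
    int    n, i;

    n = snprintf(out, outsz, "%s", root);
    if (n < 0 || (size_t) n >= outsz) {
        return -1;
    }
    used = (size_t) n;

    for (i = 0; i < nlevels; i++) {
        if (levels[i] <= 0 || (size_t) levels[i] > pos) {
            return -1;
        }
        pos -= (size_t) levels[i];

        /* append "/" plus levels[i] characters starting at name[pos] */
        n = snprintf(out + used, outsz - used, "/%.*s", levels[i], name + pos);
        if (n < 0 || (size_t) n >= outsz - used) {
            return -1;
        }
        used += (size_t) n;
    }

    n = snprintf(out + used, outsz - used, "/%s", name);
    if (n < 0 || (size_t) n >= outsz - used) {
        return -1;
    }
    return 0;
}
```

Called with the root /Users/Mike/nginx-cache, the file name from the example, and levels {1, 2}, the function produces /Users/Mike/nginx-cache/9/ad/e0bd866067979426a92306b1b98ad9.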

2. Working principle

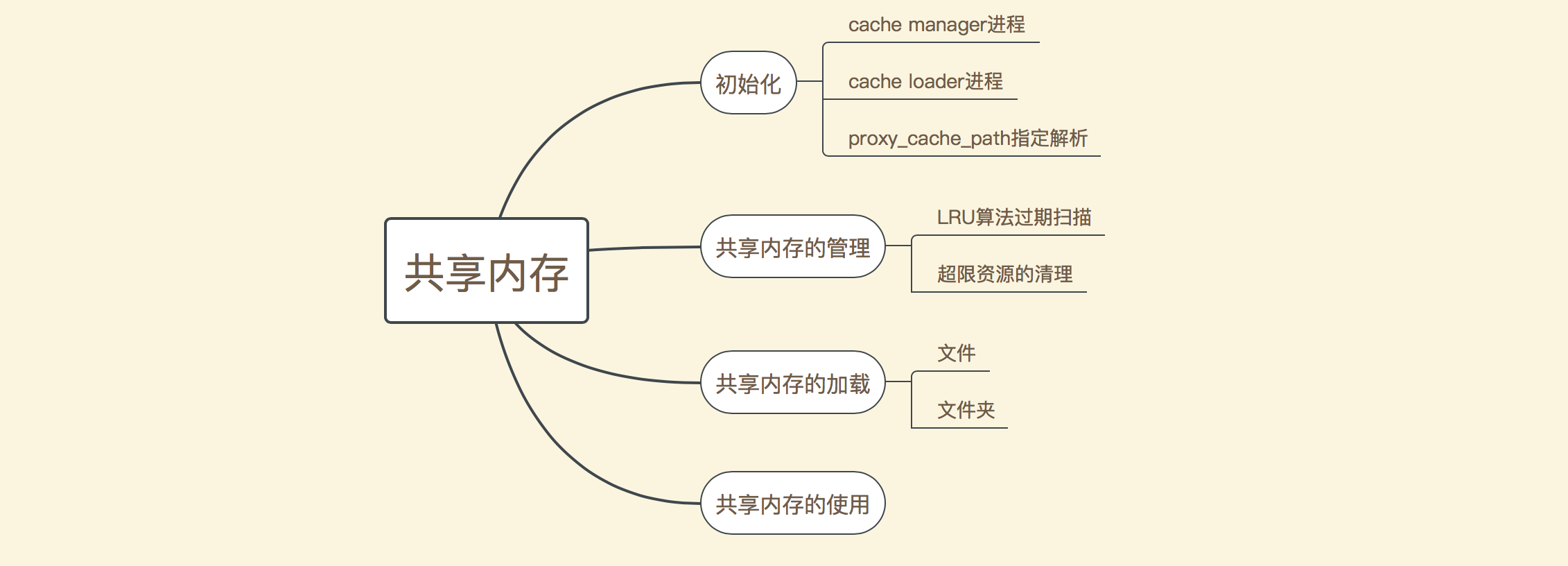

As image 1 shows, the work on shared memory is divided into four parts: initialization, management of shared memory, loading of shared memory, and use of shared memory.

During initialization, the proxy_cache_path directive is parsed first, and then the cache manager and cache loader processes are started. The cache manager process manages shared memory: it either cleans up expired data through the LRU algorithm or, when resources are tight, forcibly deletes entries that are not referenced. The main task of the cache loader process is to read the files that already exist in the cache directory after nginx starts and load them into shared memory. Shared memory is then used mainly to cache response data after requests are processed; that part will be explained in a later article. This article explains how the first three parts work.

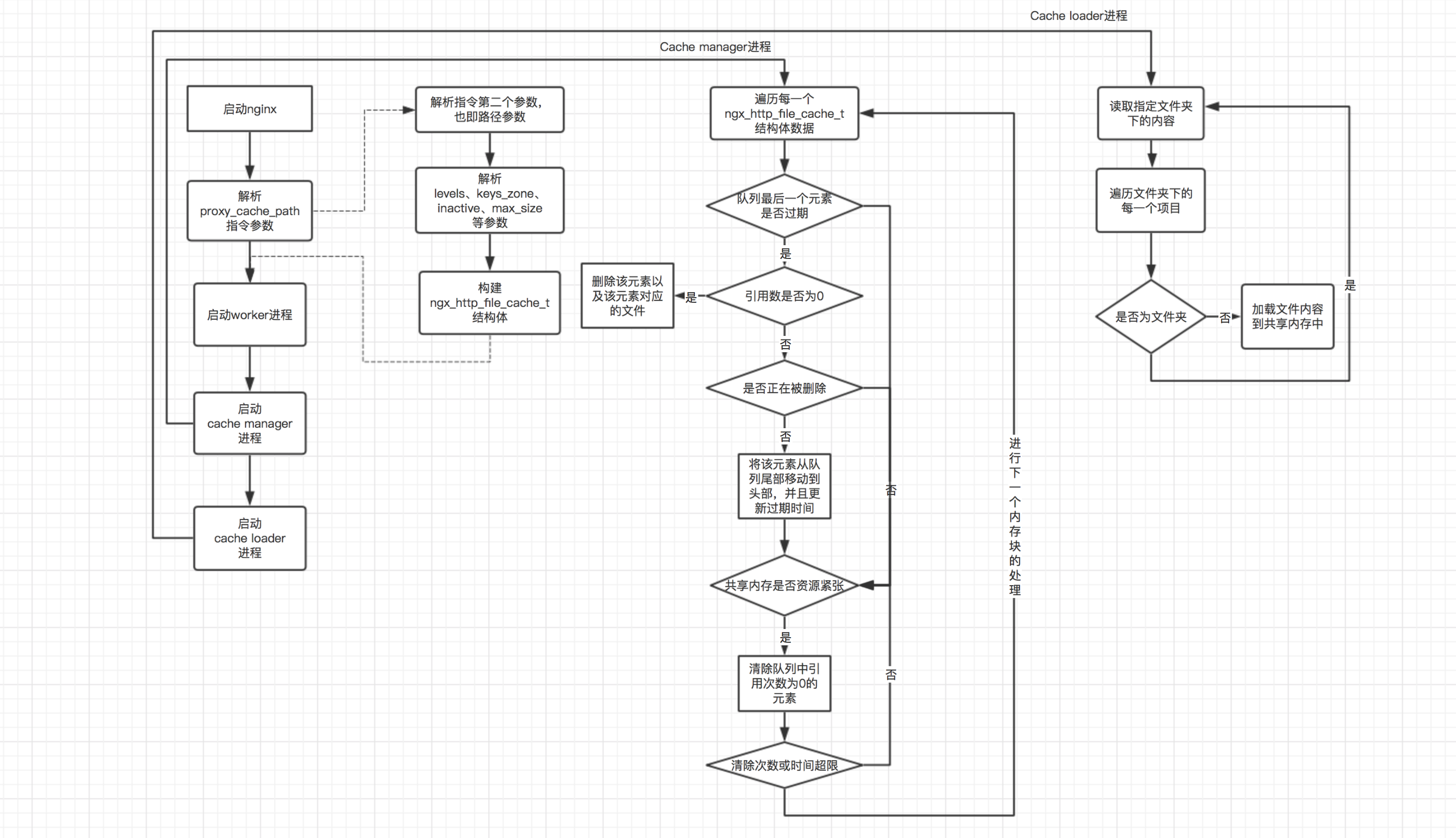

Shared memory management can be divided into three main parts as described above (the use of shared memory will be explained later). Image 2 shows the processing flow of these three parts.

As the flowchart shows, the main process parses the proxy_cache_path directive, starts the cache manager process, and starts the cache loader process. The work of the cache manager process has two parts: 1. check whether the element at the end of the queue has expired, and if it has expired and its reference count is 0, delete the element and its file; 2. check whether shared memory resources are tight, and if so, delete elements whose reference count is 0 together with their files, whether or not they have expired. The cache loader process recursively walks the cache directory and its subdirectories and loads the files it finds into shared memory. Note that the cache manager process starts its next cycle after each pass over all shared memory blocks, whereas the cache loader process runs once, 60 seconds after nginx starts, and then exits.
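The first of the two cache manager tasks comes down to a small decision about the entry at the tail of the queue. The sketch below follows the branches of ngx_http_file_cache_expire() shown in section 3.3, but the type and function names (`entry_t`, `expire_decision`, and the enum values) are hypothetical and chosen only for illustration.

```c
/* What to do with the entry at the tail of the LRU queue. */
typedef enum {
    KEEP_WAITING,        /* not expired yet: stop scanning */
    DELETE_ENTRY,        /* expired and unreferenced: delete entry and file */
    SKIP_BEING_DELETED,  /* expired, referenced, another process is deleting it */
    REQUEUE_AT_HEAD      /* expired but still referenced: renew and move to head */
} expire_action_t;

/* Hypothetical, simplified stand-in for a cache node. */
typedef struct {
    long     expire;     /* absolute expiration time, in seconds */
    unsigned count;      /* number of requests currently referencing the entry */
    int      deleting;   /* non-zero while some process is deleting the entry */
} entry_t;

expire_action_t expire_decision(const entry_t *e, long now)
{
    if (e->expire - now > 0) {
        return KEEP_WAITING;
    }
    if (e->count == 0) {
        return DELETE_ENTRY;
    }
    if (e->deleting) {
        return SKIP_BEING_DELETED;
    }
    return REQUEUE_AT_HEAD;
}
```

Because the queue is ordered by access time, the scan can stop as soon as the tail entry has not expired: every entry in front of it is newer.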

3. Source Code Interpretation

3.1 proxy_cache_path directive parsing

To parse a directive, an nginx module defines an ngx_command_t structure whose set field names the method used to parse that directive. The following is the ngx_command_t definition for proxy_cache_path:

    static ngx_command_t  ngx_http_proxy_commands[] = {

        { ngx_string("proxy_cache_path"),   // name of the directive
          // Where the directive may appear (the http main configuration) and
          // how many arguments it takes (two or more)
          NGX_HTTP_MAIN_CONF|NGX_CONF_2MORE,
          // The set() method that parses the directive
          ngx_http_file_cache_set_slot,
          NGX_HTTP_MAIN_CONF_OFFSET,
          offsetof(ngx_http_proxy_main_conf_t, caches),
          &ngx_http_proxy_module }
    };

You can see that this directive is parsed by ngx_http_file_cache_set_slot(). Its source code is as follows:

    char *
    ngx_http_file_cache_set_slot(ngx_conf_t *cf, ngx_command_t *cmd, void *conf)
    {
        char  *confp = conf;

        off_t                   max_size;
        u_char                 *last, *p;
        time_t                  inactive;
        ssize_t                 size;
        ngx_str_t               s, name, *value;
        ngx_int_t               loader_files, manager_files;
        ngx_msec_t              loader_sleep, manager_sleep, loader_threshold,
                                manager_threshold;
        ngx_uint_t              i, n, use_temp_path;
        ngx_array_t            *caches;
        ngx_http_file_cache_t  *cache, **ce;

        cache = ngx_pcalloc(cf->pool, sizeof(ngx_http_file_cache_t));
        if (cache == NULL) {
            return NGX_CONF_ERROR;
        }

        cache->path = ngx_pcalloc(cf->pool, sizeof(ngx_path_t));
        if (cache->path == NULL) {
            return NGX_CONF_ERROR;
        }

        // Initialize the default value of each property
        use_temp_path = 1;

        inactive = 600;

        loader_files = 100;
        loader_sleep = 50;
        loader_threshold = 200;

        manager_files = 100;
        manager_sleep = 50;
        manager_threshold = 200;

        name.len = 0;
        size = 0;
        max_size = NGX_MAX_OFF_T_VALUE;

        // Example configuration:
        // proxy_cache_path /Users/Mike/nginx-cache levels=1:2 keys_zone=one:10m
        //                  max_size=10g inactive=60m use_temp_path=off;
        // cf->args->elts holds the tokens produced while parsing the
        // proxy_cache_path directive. A token is a space-delimited fragment
        value = cf->args->elts;

        // value[1] is the first parameter of the directive: the root path under
        // which cache files are saved
        cache->path->name = value[1];

        if (cache->path->name.data[cache->path->name.len - 1] == '/') {
            cache->path->name.len--;
        }

        if (ngx_conf_full_name(cf->cycle, &cache->path->name, 0) != NGX_OK) {
            return NGX_CONF_ERROR;
        }

        // Parse the remaining parameters, starting with the second one
        for (i = 2; i < cf->args->nelts; i++) {

            // Parse the levels parameter if the current token starts with "levels="
            if (ngx_strncmp(value[i].data, "levels=", 7) == 0) {

                p = value[i].data + 7;                 // where the value begins
                last = value[i].data + value[i].len;   // one past the last character

                // Parse a value such as 1:2
                for (n = 0; n < NGX_MAX_PATH_LEVEL && p < last; n++) {

                    if (*p > '0' && *p < '3') {

                        // Store the current level width, e.g. 1 and then 2
                        cache->path->level[n] = *p++ - '0';
                        cache->path->len += cache->path->level[n] + 1;

                        if (p == last) {
                            break;
                        }

                        // If the current character is a colon, go on to the next
                        // level. NGX_MAX_PATH_LEVEL is 3, so levels accepts at
                        // most three levels of subdirectories
                        if (*p++ == ':' && n < NGX_MAX_PATH_LEVEL - 1 && p < last) {
                            continue;
                        }

                        goto invalid_levels;
                    }

                    goto invalid_levels;
                }

                if (cache->path->len < 10 + NGX_MAX_PATH_LEVEL) {
                    continue;
                }

            invalid_levels:

                ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                                   "invalid \"levels\" \"%V\"", &value[i]);
                return NGX_CONF_ERROR;
            }

            // If the token starts with "use_temp_path=", parse use_temp_path, whose
            // value is on or off. It says whether a cache file is first written to
            // a temporary directory and then moved to the cache directory (on), or
            // written to the cache directory directly (off)
            if (ngx_strncmp(value[i].data, "use_temp_path=", 14) == 0) {

                if (ngx_strcmp(&value[i].data[14], "on") == 0) {
                    use_temp_path = 1;

                } else if (ngx_strcmp(&value[i].data[14], "off") == 0) {
                    use_temp_path = 0;

                // Any other value is a configuration error
                } else {
                    ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                                       "invalid use_temp_path value \"%V\", "
                                       "it must be \"on\" or \"off\"",
                                       &value[i]);
                    return NGX_CONF_ERROR;
                }

                continue;
            }

            // If the token starts with "keys_zone=", parse keys_zone, which has the
            // form keys_zone=one:10m. Here one is the name that location blocks
            // refer to later, and 10m is the size of the memory that stores keys
            if (ngx_strncmp(value[i].data, "keys_zone=", 10) == 0) {

                name.data = value[i].data + 10;

                p = (u_char *) ngx_strchr(name.data, ':');

                if (p) {
                    // Length of the zone name, which is "one" here
                    name.len = p - name.data;

                    p++;

                    // The size part of the token
                    s.len = value[i].data + value[i].len - p;
                    s.data = p;

                    // Convert the size to bytes; it must be greater than 8191
                    size = ngx_parse_size(&s);
                    if (size > 8191) {
                        continue;
                    }
                }

                ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                                   "invalid keys zone size \"%V\"", &value[i]);
                return NGX_CONF_ERROR;
            }

            // If the token starts with "inactive=", parse inactive, which has the
            // form inactive=60m. It is the time after which a cached file that has
            // not been accessed expires
            if (ngx_strncmp(value[i].data, "inactive=", 9) == 0) {

                s.len = value[i].len - 9;
                s.data = value[i].data + 9;

                // The result is a time in seconds
                inactive = ngx_parse_time(&s, 1);
                if (inactive == (time_t) NGX_ERROR) {
                    ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                                       "invalid inactive value \"%V\"", &value[i]);
                    return NGX_CONF_ERROR;
                }

                continue;
            }

            // If the token starts with "max_size=", parse max_size, which has the
            // form max_size=10g. It is the maximum disk space the cache may use
            if (ngx_strncmp(value[i].data, "max_size=", 9) == 0) {

                s.len = value[i].len - 9;
                s.data = value[i].data + 9;

                // The result is a size in bytes
                max_size = ngx_parse_offset(&s);
                if (max_size < 0) {
                    ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                                       "invalid max_size value \"%V\"", &value[i]);
                    return NGX_CONF_ERROR;
                }

                continue;
            }

            // If the token starts with "loader_files=", parse loader_files, which
            // has the form loader_files=100. It is the maximum number of files the
            // cache loader loads in one iteration
            if (ngx_strncmp(value[i].data, "loader_files=", 13) == 0) {

                loader_files = ngx_atoi(value[i].data + 13, value[i].len - 13);
                if (loader_files == NGX_ERROR) {
                    ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                               "invalid loader_files value \"%V\"", &value[i]);
                    return NGX_CONF_ERROR;
                }

                continue;
            }

            // If the token starts with "loader_sleep=", parse loader_sleep, which
            // has the form loader_sleep=10s. It is the pause between two loading
            // iterations
            if (ngx_strncmp(value[i].data, "loader_sleep=", 13) == 0) {

                s.len = value[i].len - 13;
                s.data = value[i].data + 13;

                // The result is a time in milliseconds
                loader_sleep = ngx_parse_time(&s, 0);
                if (loader_sleep == (ngx_msec_t) NGX_ERROR) {
                    ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                               "invalid loader_sleep value \"%V\"", &value[i]);
                    return NGX_CONF_ERROR;
                }

                continue;
            }

            // If the token starts with "loader_threshold=", parse loader_threshold,
            // which has the form loader_threshold=10s. It is the maximum duration
            // of one loading iteration
            if (ngx_strncmp(value[i].data, "loader_threshold=", 17) == 0) {

                s.len = value[i].len - 17;
                s.data = value[i].data + 17;

                // The result is a time in milliseconds
                loader_threshold = ngx_parse_time(&s, 0);
                if (loader_threshold == (ngx_msec_t) NGX_ERROR) {
                    ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                               "invalid loader_threshold value \"%V\"", &value[i]);
                    return NGX_CONF_ERROR;
                }

                continue;
            }

            // If the token starts with "manager_files=", parse manager_files, which
            // has the form manager_files=100. When cache space runs out, files are
            // deleted by the LRU algorithm, but at most manager_files files are
            // deleted per iteration
            if (ngx_strncmp(value[i].data, "manager_files=", 14) == 0) {

                manager_files = ngx_atoi(value[i].data + 14, value[i].len - 14);
                if (manager_files == NGX_ERROR) {
                    ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                               "invalid manager_files value \"%V\"", &value[i]);
                    return NGX_CONF_ERROR;
                }

                continue;
            }

            // If the token starts with "manager_sleep=", parse manager_sleep, which
            // has the form manager_sleep=1s. It is how long the cache manager
            // sleeps after each iteration
            if (ngx_strncmp(value[i].data, "manager_sleep=", 14) == 0) {

                s.len = value[i].len - 14;
                s.data = value[i].data + 14;

                // The result is a time in milliseconds
                manager_sleep = ngx_parse_time(&s, 0);
                if (manager_sleep == (ngx_msec_t) NGX_ERROR) {
                    ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                               "invalid manager_sleep value \"%V\"", &value[i]);
                    return NGX_CONF_ERROR;
                }

                continue;
            }

            // If the token starts with "manager_threshold=", parse
            // manager_threshold, which has the form manager_threshold=2s. It is the
            // maximum time one cleanup iteration may take
            if (ngx_strncmp(value[i].data, "manager_threshold=", 18) == 0) {

                s.len = value[i].len - 18;
                s.data = value[i].data + 18;

                // The result is a time in milliseconds
                manager_threshold = ngx_parse_time(&s, 0);
                if (manager_threshold == (ngx_msec_t) NGX_ERROR) {
                    ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                               "invalid manager_threshold value \"%V\"", &value[i]);
                    return NGX_CONF_ERROR;
                }

                continue;
            }

            ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                               "invalid parameter \"%V\"", &value[i]);
            return NGX_CONF_ERROR;
        }

        if (name.len == 0 || size == 0) {
            ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                               "\"%V\" must have \"keys_zone\" parameter",
                               &cmd->name);
            return NGX_CONF_ERROR;
        }

        // cache->path->manager and cache->path->loader are two functions. After
        // nginx starts, two separate processes are started, a cache manager and a
        // cache loader. The cache manager runs the method in cache->path->manager
        // for every shared memory zone, over and over in a loop, to clean up the
        // cache. The cache loader runs only once, 60 seconds after nginx starts,
        // and executes the method in cache->path->loader, whose main purpose is to
        // load the existing file data into the shared memory zone
        cache->path->manager = ngx_http_file_cache_manager;
        cache->path->loader = ngx_http_file_cache_loader;
        cache->path->data = cache;
        cache->path->conf_file = cf->conf_file->file.name.data;
        cache->path->line = cf->conf_file->line;
        cache->loader_files = loader_files;
        cache->loader_sleep = loader_sleep;
        cache->loader_threshold = loader_threshold;
        cache->manager_files = manager_files;
        cache->manager_sleep = manager_sleep;
        cache->manager_threshold = manager_threshold;

        // Add the path to the cycle; paths are checked later and created if they
        // do not exist
        if (ngx_add_path(cf, &cache->path) != NGX_OK) {
            return NGX_CONF_ERROR;
        }

        // Add the zone to the shared memory list in cf->cycle->shared_memory
        cache->shm_zone = ngx_shared_memory_add(cf, &name, size, cmd->post);
        if (cache->shm_zone == NULL) {
            return NGX_CONF_ERROR;
        }

        if (cache->shm_zone->data) {
            ngx_conf_log_error(NGX_LOG_EMERG, cf, 0,
                               "duplicate zone \"%V\"", &name);
            return NGX_CONF_ERROR;
        }

        // The initialization method of the zone, executed when the master
        // process starts
        cache->shm_zone->init = ngx_http_file_cache_init;
        cache->shm_zone->data = cache;

        cache->use_temp_path = use_temp_path;

        cache->inactive = inactive;
        cache->max_size = max_size;

        caches = (ngx_array_t *) (confp + cmd->offset);

        ce = ngx_array_push(caches);
        if (ce == NULL) {
            return NGX_CONF_ERROR;
        }

        *ce = cache;

        return NGX_CONF_OK;
    }

The code above shows that ngx_http_file_cache_set_slot() primarily initializes an ngx_http_file_cache_t structure, whose fields are filled in from the parsed parameters of proxy_cache_path.
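To make the prefix-matching style of the parser concrete, here is a standalone sketch of how a token such as keys_zone=one:10m can be split into a name and a size in bytes. It does not use nginx's own ngx_parse_size(); `parse_size` and `parse_keys_zone` are hypothetical helpers that accept only a k or m suffix, do not guard against integer overflow, and reject an empty name on the spot for simplicity.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: parse "<digits>[k|K|m|M]" into bytes, -1 on error. */
static long parse_size(const char *s, size_t len)
{
    long   value = 0, scale = 1;
    size_t i;

    if (len == 0) {
        return -1;
    }

    switch (s[len - 1]) {
    case 'k': case 'K': scale = 1024;        len--; break;
    case 'm': case 'M': scale = 1024 * 1024; len--; break;
    default: break;
    }

    if (len == 0) {
        return -1;
    }

    for (i = 0; i < len; i++) {
        if (s[i] < '0' || s[i] > '9') {
            return -1;
        }
        value = value * 10 + (s[i] - '0');
    }

    return value * scale;
}

/* Parse "keys_zone=<name>:<size>". On success returns 0 and stores a pointer
 * to the name (not NUL-terminated), its length, and the size in bytes. */
int parse_keys_zone(const char *arg, const char **name, size_t *name_len,
                    long *size)
{
    static const char  prefix[] = "keys_zone=";
    const size_t       plen = sizeof(prefix) - 1;
    const char        *colon;

    if (strncmp(arg, prefix, plen) != 0) {
        return -1;
    }

    *name = arg + plen;
    colon = strchr(*name, ':');
    if (colon == NULL || colon == *name) {
        return -1;
    }
    *name_len = (size_t) (colon - *name);

    *size = parse_size(colon + 1, strlen(colon + 1));

    /* same lower bound as in the source above: more than 8191 bytes */
    if (*size <= 8191) {
        return -1;
    }
    return 0;
}
```

For keys_zone=one:10m this yields the name one and a size of 10485760 bytes, while keys_zone=one:4k is rejected because 4096 does not exceed 8191.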

3.2 cache manager and cache loader process startup

The entry point of nginx is the main() method in nginx.c. If master-worker process mode is enabled, execution ends up in ngx_master_process_cycle(). This method first starts the worker processes, which receive requests from clients, then starts the cache manager and cache loader processes, and finally enters an infinite loop that handles the commands the user sends to nginx from the command line. The following source code starts the cache manager and cache loader processes:

    void
    ngx_master_process_cycle(ngx_cycle_t *cycle)
    {
        ...

        // Get the configuration of the core module
        ccf = (ngx_core_conf_t *) ngx_get_conf(cycle->conf_ctx, ngx_core_module);

        // Start the worker processes
        ngx_start_worker_processes(cycle, ccf->worker_processes,
                                   NGX_PROCESS_RESPAWN);
        // Start the cache manager and cache loader processes
        ngx_start_cache_manager_processes(cycle, 0);

        ...
    }

The cache manager and cache loader processes are started in the ngx_start_cache_manager_processes() method, whose source code is as follows:

    static void
    ngx_start_cache_manager_processes(ngx_cycle_t *cycle, ngx_uint_t respawn)
    {
        ngx_uint_t       i, manager, loader;
        ngx_path_t     **path;
        ngx_channel_t    ch;

        manager = 0;
        loader = 0;

        path = ngx_cycle->paths.elts;
        for (i = 0; i < ngx_cycle->paths.nelts; i++) {

            // Check whether any path has a manager method
            if (path[i]->manager) {
                manager = 1;
            }

            // Check whether any path has a loader method
            if (path[i]->loader) {
                loader = 1;
            }
        }

        // Return immediately if no path has a manager
        if (manager == 0) {
            return;
        }

        // Create a process that runs the loop in ngx_cache_manager_process_cycle().
        // Note that the second parameter passed to that method here is
        // ngx_cache_manager_ctx
        ngx_spawn_process(cycle, ngx_cache_manager_process_cycle,
                          &ngx_cache_manager_ctx, "cache manager process",
                          respawn ? NGX_PROCESS_JUST_RESPAWN : NGX_PROCESS_RESPAWN);

        ngx_memzero(&ch, sizeof(ngx_channel_t));

        // Fill in a channel message that announces the newly created process
        ch.command = NGX_CMD_OPEN_CHANNEL;
        ch.pid = ngx_processes[ngx_process_slot].pid;
        ch.slot = ngx_process_slot;
        ch.fd = ngx_processes[ngx_process_slot].channel[0];

        // Broadcast the creation of the cache manager process
        ngx_pass_open_channel(cycle, &ch);

        if (loader == 0) {
            return;
        }

        // Create a process that also runs ngx_cache_manager_process_cycle().
        // Note that the second parameter passed to that method here is
        // ngx_cache_loader_ctx
        ngx_spawn_process(cycle, ngx_cache_manager_process_cycle,
                          &ngx_cache_loader_ctx, "cache loader process",
                          respawn ? NGX_PROCESS_JUST_SPAWN : NGX_PROCESS_NORESPAWN);

        // Fill in a channel message that announces the newly created process
        ch.command = NGX_CMD_OPEN_CHANNEL;
        ch.pid = ngx_processes[ngx_process_slot].pid;
        ch.slot = ngx_process_slot;
        ch.fd = ngx_processes[ngx_process_slot].channel[0];

        // Broadcast the creation of the cache loader process
        ngx_pass_open_channel(cycle, &ch);
    }

The code above is fairly simple. It first checks whether any path uses a cache manager or a cache loader. If no path has a manager, neither process is created. Otherwise the cache manager process is started, and the cache loader process is started as well if any path has a loader. Both processes are started as follows:

    // Start the cache manager process
    ngx_spawn_process(cycle, ngx_cache_manager_process_cycle,
                      &ngx_cache_manager_ctx, "cache manager process",
                      respawn ? NGX_PROCESS_JUST_RESPAWN : NGX_PROCESS_RESPAWN);

    // Start the cache loader process
    ngx_spawn_process(cycle, ngx_cache_manager_process_cycle,
                      &ngx_cache_loader_ctx, "cache loader process",
                      respawn ? NGX_PROCESS_JUST_SPAWN : NGX_PROCESS_NORESPAWN);

The ngx_spawn_process() method creates a new process that executes the method given as the second parameter, and passes that method the structure given as the third parameter. Both processes above execute ngx_cache_manager_process_cycle() after they are created, but they receive different arguments: one gets ngx_cache_manager_ctx, the other ngx_cache_loader_ctx. Let's first look at the definitions of these two structures:

    // ngx_cache_manager_process_handler is the method the cache manager process
    // executes, "cache manager process" is the name of the process, and the final
    // 0 is the delay between process start and the first call of
    // ngx_cache_manager_process_handler(), so the call happens immediately
    static ngx_cache_manager_ctx_t  ngx_cache_manager_ctx = {
        ngx_cache_manager_process_handler, "cache manager process", 0
    };

    // ngx_cache_loader_process_handler is the method the cache loader process
    // executes. It is not called until 60 seconds after the cache loader
    // process starts
    static ngx_cache_manager_ctx_t  ngx_cache_loader_ctx = {
        ngx_cache_loader_process_handler, "cache loader process", 60000
    };

These two structures mainly define the different behaviors of the cache manager and cache loader processes. Let's see how the ngx_cache_manager_process_cycle() method calls the two handlers:

    static void
    ngx_cache_manager_process_cycle(ngx_cycle_t *cycle, void *data)
    {
        ngx_cache_manager_ctx_t *ctx = data;

        void         *ident[4];
        ngx_event_t   ev;

        ngx_process = NGX_PROCESS_HELPER;

        // This process only does cache manager or cache loader work and does not
        // need to listen on sockets, so the listening sockets are closed here
        ngx_close_listening_sockets(cycle);

        /* Set a moderate number of connections for a helper process. */
        cycle->connection_n = 512;

        // Initialize the process: set various properties and, finally, register
        // an event on the channel[1] handle of this process so that it receives
        // messages from the master process
        ngx_worker_process_init(cycle, -1);

        ngx_memzero(&ev, sizeof(ngx_event_t));
        // For the cache manager, the handler is ngx_cache_manager_process_handler().
        // For the cache loader, the handler is ngx_cache_loader_process_handler()
        ev.handler = ctx->handler;
        ev.data = ident;
        ev.log = cycle->log;
        ident[3] = (void *) -1;

        // The cache helper processes do not need the accept mutex
        ngx_use_accept_mutex = 0;

        ngx_setproctitle(ctx->name);

        // Add the event to the event queue with a delay of ctx->delay, which is 0
        // for the cache manager and 60s for the cache loader.
        // Note that ngx_cache_manager_process_handler() adds the event to the
        // event queue again once it has handled it, which gives periodic
        // processing. ngx_cache_loader_process_handler() does not add the event
        // again, so it runs only once and the cache loader process then exits
        ngx_add_timer(&ev, ctx->delay);

        for ( ;; ) {

            // Exit if the master has marked this process as terminate or quit
            if (ngx_terminate || ngx_quit) {
                ngx_log_error(NGX_LOG_NOTICE, cycle->log, 0, "exiting");
                exit(0);
            }

            // If the master process sent a reopen message, reopen the log files
            if (ngx_reopen) {
                ngx_reopen = 0;
                ngx_log_error(NGX_LOG_NOTICE, cycle->log, 0, "reopening logs");
                ngx_reopen_files(cycle, -1);
            }

            // Process the events in the event queue
            ngx_process_events_and_timers(cycle);
        }
    }

In the code above, an event object is created first, and ev.handler = ctx->handler; specifies the logic that handles the event, that is, the method given as the first field of the two structures above. The event is then added to the event queue with ngx_add_timer(&ev, ctx->delay);. Note that the second argument here is the third field of the two structures above, so the delay of the event controls when the handler() method runs. Finally, in an infinite for loop, ngx_process_events_and_timers() continuously checks the event queue and processes its events.
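The difference between a handler that re-arms its timer and one that runs once can be shown with a tiny simulation. This is not nginx code: the `sim_*` names, `helper_ctx_t`, and the 1000 ms re-arm interval of the manager are assumptions made for the illustration, and time is a simple counter that jumps to the next timer expiry.

```c
typedef struct sim_event_s  sim_event_t;

struct sim_event_s {
    void (*handler)(sim_event_t *ev);
    long   fire_at;   /* simulated time in ms when the timer expires, -1 = unarmed */
    int    runs;      /* how many times the handler has run */
    int    exiting;   /* set when the helper "process" decides to exit */
};

/* Mirrors the three fields described above: handler, name, initial delay. */
typedef struct {
    void       (*handler)(sim_event_t *ev);
    const char  *name;
    long         delay;
} helper_ctx_t;

static long  sim_now;

static void sim_add_timer(sim_event_t *ev, long delay)
{
    ev->fire_at = sim_now + delay;
}

/* Manager style: do the work, then re-arm the timer for the next cycle. */
static void manager_handler(sim_event_t *ev)
{
    ev->runs++;
    sim_add_timer(ev, 1000);   /* assumed interval, for illustration only */
}

/* Loader style: do the work once, never re-arm, then exit. */
static void loader_handler(sim_event_t *ev)
{
    ev->runs++;
    ev->exiting = 1;
}

const helper_ctx_t  manager_ctx = { manager_handler, "cache manager process", 0 };
const helper_ctx_t  loader_ctx  = { loader_handler,  "cache loader process",  60000 };

/* One shared cycle function for both helpers; returns the number of handler
 * runs that happen up to and including simulated time until_ms. */
int run_helper(const helper_ctx_t *ctx, long until_ms)
{
    sim_event_t  ev = { ctx->handler, -1, 0, 0 };

    sim_now = 0;
    sim_add_timer(&ev, ctx->delay);

    while (!ev.exiting && ev.fire_at >= 0 && ev.fire_at <= until_ms) {
        sim_now = ev.fire_at;   /* jump to the expiry time */
        ev.fire_at = -1;        /* the timer fired and is no longer armed */
        ev.handler(&ev);
    }

    return ev.runs;
}
```

With these contexts the manager runs at 0, 1000, 2000 ms and so on, while the loader runs exactly once at 60000 ms. Only the context passed in differs, which is the design choice the two structures above express.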

3.3 cache manager process processing logic

As explained above, the work of the cache manager process is done in the ngx_cache_manager_process_handler() method named in its ngx_cache_manager_ctx structure. The source code for this method is as follows:

    static void
    ngx_cache_manager_process_handler(ngx_event_t *ev)
    {
        ngx_uint_t    i;
        ngx_msec_t    next, n;
        ngx_path_t  **path;

        next = 60 * 60 * 1000;

        path = ngx_cycle->paths.elts;
        for (i = 0; i < ngx_cycle->paths.nelts; i++) {

            // The manager method here is ngx_http_file_cache_manager()
            if (path[i]->manager) {
                n = path[i]->manager(path[i]->data);

                next = (n <= next) ? n : next;

                ngx_time_update();
            }
        }

        if (next == 0) {
            next = 1;
        }

        // After one pass, add the event to the event queue again for the next pass
        ngx_add_timer(ev, next);
    }
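The scheduling arithmetic of the handler above can be isolated in a few lines. The sketch below is a simplified stand-in rather than nginx code: `cache_path_t`, `next_manager_delay`, and `fixed_delay_manager` are hypothetical names, and each manager is reduced to a callback that returns the delay it asks for.

```c
#include <stddef.h>

typedef unsigned long  msec_t;

/* Hypothetical stand-in for ngx_path_t: a manager callback plus its data. */
typedef struct {
    msec_t (*manager)(void *data);   /* NULL when the path has no manager */
    void    *data;
} cache_path_t;

/* Return the delay before the next run: the smallest value any manager asked
 * for, at most one hour, and never 0. */
msec_t next_manager_delay(cache_path_t *paths, size_t npaths)
{
    msec_t  next = 60 * 60 * 1000;
    size_t  i;

    for (i = 0; i < npaths; i++) {
        if (paths[i].manager) {
            msec_t n = paths[i].manager(paths[i].data);

            if (n <= next) {
                next = n;
            }
        }
    }

    if (next == 0) {
        next = 1;
    }

    return next;
}

/* Example manager for demonstrations: returns the delay stored in data. */
msec_t fixed_delay_manager(void *data)
{
    return *(msec_t *) data;
}
```

Taking the minimum means the cache that needs attention soonest decides when the handler runs again, and the floor of 1 ms keeps a manager that returns 0 from re-arming the timer with no delay at all.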

The method first obtains all path definitions, then checks whether each path's manager() method is set and calls it if so. The manager() method here is the one assigned in section 3.1 while parsing the proxy_cache_path directive, namely cache->path->manager = ngx_http_file_cache_manager;, which is the main method for managing the cache. After the management methods have been called, the current event is added to the event queue again for the next cache management cycle. The following is the source code for the ngx_http_file_cache_manager() method:

    static ngx_msec_t
    ngx_http_file_cache_manager(void *data)
    {
        // This ngx_http_file_cache_t structure was built while parsing the
        // proxy_cache_path directive
        ngx_http_file_cache_t  *cache = data;

        off_t       size;
        time_t      wait;
        ngx_msec_t  elapsed, next;
        ngx_uint_t  count, watermark;

        cache->last = ngx_current_msec;
        cache->files = 0;

        // ngx_http_file_cache_expire() loops over the tail of the cache queue
        // and, for every expired entry it finds, deletes the entry and its file
        next = (ngx_msec_t) ngx_http_file_cache_expire(cache) * 1000;

        // next is the return value of ngx_http_file_cache_expire(), which is 0
        // in only two cases:
        // 1. the number of files deleted has reached manager_files;
        // 2. the total time spent deleting files has reached manager_threshold.
        // If next is 0, one batch of cleanup has finished and the process must
        // sleep before the next batch. The sleep time is manager_sleep. In other
        // words, next is the time to wait before the next cache cleanup
        if (next == 0) {
            next = cache->manager_sleep;
            goto done;
        }

        for ( ;; ) {
            ngx_shmtx_lock(&cache->shpool->mutex);

            // size is the total size currently used by the cache,
            // count is the number of files currently in the cache,
            // watermark is the water level: 7/8 of the total number of files
            // that can be stored
            size = cache->sh->size;
            count = cache->sh->count;
            watermark = cache->sh->watermark;

            ngx_shmtx_unlock(&cache->shpool->mutex);

            ngx_log_debug3(NGX_LOG_DEBUG_HTTP, ngx_cycle->log, 0,
                           "http file cache size: %O c:%ui w:%i",
                           size, count, (ngx_int_t) watermark);

            // If the cache uses less than max_size and holds fewer files than the
            // water level, more files can still be stored, so leave the loop
            if (size < cache->max_size && count < watermark) {
                break;
            }

            // Reaching this point means resources are running low, so force the
            // deletion of an unreferenced file in the queue, expired or not
            wait = ngx_http_file_cache_forced_expire(cache);

            // Calculate the next execution time
            if (wait > 0) {
                next = (ngx_msec_t) wait * 1000;
                break;
            }

            // Leave the loop if nginx is quitting or terminating
            if (ngx_quit || ngx_terminate) {
                break;
            }

            // If the number of files deleted has reached manager_files, leave the
            // loop and sleep for manager_sleep before the next cleanup
            if (++cache->files >= cache->manager_files) {
                next = cache->manager_sleep;
                break;
            }

            ngx_time_update();

            elapsed = ngx_abs((ngx_msec_int_t) (ngx_current_msec - cache->last));

            // If deleting has taken at least manager_threshold, leave the loop
            // and sleep for manager_sleep before the next cleanup
            if (elapsed >= cache->manager_threshold) {
                next = cache->manager_sleep;
                break;
            }
        }

    done:

        elapsed = ngx_abs((ngx_msec_int_t) (ngx_current_msec - cache->last));

        ngx_log_debug3(NGX_LOG_DEBUG_HTTP, ngx_cycle->log, 0,
                       "http file cache manager: %ui e:%M n:%M",
                       cache->files, elapsed, next);

        return next;
    }
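The forced-cleanup loop in the method above amounts to a pressure check plus a batch limit. The following sketch models only that part, under simplifying assumptions: every entry is unreferenced and has the same size, and the time limit (manager_threshold) is left out. `cache_state_t`, `cache_under_pressure`, and `forced_expire_batch` are hypothetical names.

```c
/* Hypothetical, simplified view of the counters kept in shared memory. */
typedef struct {
    long long  size;        /* space currently used by cached files */
    long long  max_size;    /* limit configured with max_size= */
    unsigned   count;       /* number of cached entries */
    unsigned   watermark;   /* limit on the number of entries */
} cache_state_t;

/* Resources are tight unless BOTH counters are below their limits. */
int cache_under_pressure(const cache_state_t *c)
{
    return !(c->size < c->max_size && c->count < c->watermark);
}

/* Evict entries of entry_size each until the pressure is gone or
 * manager_files entries have been removed in this batch.
 * Returns the number of entries evicted. */
unsigned forced_expire_batch(cache_state_t *c, long long entry_size,
                             unsigned manager_files)
{
    unsigned  files = 0;

    while (cache_under_pressure(c) && c->count > 0) {
        c->size -= entry_size;
        c->count--;

        if (++files >= manager_files) {
            break;   /* batch limit reached: sleep before the next batch */
        }
    }

    return files;
}
```

With a size of 1000, a max_size of 700, and entries of 100 each, four evictions bring the size to 600 and end the pressure. With manager_files set to 2, the batch stops after two evictions even though the cache is still over its limit, which is how the real loop avoids holding up the process for too long.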

In the ngx_http_file_cache_manager() method, the ngx_http_file_cache_expire() method first enters. The main purpose of this method is to check if the element at the end of the current shared memory queue is out of date and, if so, to determine whether it needs to be deleted, based on the number of references and whether it is being deleted.After this check, an infinite for loop is entered, where the main purpose of the loop is to check if the current shared memory is resource intensive, that is, if the memory used exceeds the maximum memory defined by max_size, or if the total number of files currently cached exceeds 7/8 of the total number of files.If one of these two conditions is met, a forced purge of the cache file is attempted, which is to delete all referenced 0 elements in the current shared memory and their corresponding disk files.Here we first read the ngx_http_file_cache_expire() method:

    static time_t
    ngx_http_file_cache_expire(ngx_http_file_cache_t *cache)
    {
        u_char                      *name, *p;
        size_t                       len;
        time_t                       now, wait;
        ngx_path_t                  *path;
        ngx_msec_t                   elapsed;
        ngx_queue_t                 *q;
        ngx_http_file_cache_node_t  *fcn;
        u_char                       key[2 * NGX_HTTP_CACHE_KEY_LEN];

        ngx_log_debug0(NGX_LOG_DEBUG_HTTP, ngx_cycle->log, 0,
                       "http file cache expire");

        path = cache->path;
        len = path->name.len + 1 + path->len + 2 * NGX_HTTP_CACHE_KEY_LEN;

        name = ngx_alloc(len + 1, ngx_cycle->log);
        if (name == NULL) {
            return 10;
        }

        ngx_memcpy(name, path->name.data, path->name.len);

        now = ngx_time();

        ngx_shmtx_lock(&cache->shpool->mutex);

        for ( ;; ) {

            // Leave the loop if nginx is quitting or terminating
            if (ngx_quit || ngx_terminate) {
                wait = 1;
                break;
            }

            // Leave the loop if the shared memory queue is empty
            if (ngx_queue_empty(&cache->sh->queue)) {
                wait = 10;
                break;
            }

            // Get the last element of the queue
            q = ngx_queue_last(&cache->sh->queue);

            // Get the cache node that owns this queue element
            fcn = ngx_queue_data(q, ngx_http_file_cache_node_t, queue);

            // Time remaining until the node expires
            wait = fcn->expire - now;

            // Leave the loop if the node has not expired yet
            if (wait > 0) {
                wait = wait > 10 ? 10 : wait;
                break;
            }

            ngx_log_debug6(NGX_LOG_DEBUG_HTTP, ngx_cycle->log, 0,
                           "http file cache expire: #%d %d %02xd%02xd%02xd%02xd",
                           fcn->count, fcn->exists,
                           fcn->key[0], fcn->key[1], fcn->key[2], fcn->key[3]);

            // count is the number of current references to the node;
            // an expired node with no references is deleted directly
            if (fcn->count == 0) {
                // Remove the node from the queue and delete its file
                ngx_http_file_cache_delete(cache, q, name);
                goto next;
            }

            // If another process is already deleting the node, leave it alone
            if (fcn->deleting) {
                wait = 1;
                break;
            }

            // Reaching this point means the node has expired, is still
            // referenced, and is not being deleted by any process.
            // Build the hex form of the node's key for the log message below
            p = ngx_hex_dump(key, (u_char *) &fcn->node.key,
                             sizeof(ngx_rbtree_key_t));
            len = NGX_HTTP_CACHE_KEY_LEN - sizeof(ngx_rbtree_key_t);
            (void) ngx_hex_dump(p, fcn->key, len);

            // The node is still in use, so it is kept: remove it from the
            // tail of the queue, give it a new expiration time, and insert
            // it at the head of the queue
            ngx_queue_remove(q);
            fcn->expire = ngx_time() + cache->inactive;
            ngx_queue_insert_head(&cache->sh->queue, &fcn->queue);

            ngx_log_error(NGX_LOG_ALERT, ngx_cycle->log, 0,
                          "ignore long locked inactive cache entry %*s, count:%d",
                          (size_t) 2 * NGX_HTTP_CACHE_KEY_LEN, key, fcn->count);

    next:

            // Reached after the tail node was either deleted (goto above)
            // or moved to the head of the queue (fall-through).
            // cache->files counts the nodes processed in this run, and
            // manager_files is the maximum number of files handled in one
            // run, 100 by default. Leave the loop once the limit is reached
            if (++cache->files >= cache->manager_files) {
                wait = 0;
                break;
            }

            // Update nginx's cached time
            ngx_time_update();

            // elapsed is the total time spent in this run so far
            elapsed = ngx_abs((ngx_msec_int_t) (ngx_current_msec - cache->last));

            // Leave the loop if the run has taken longer than manager_threshold
            if (elapsed >= cache->manager_threshold) {
                wait = 0;
                break;
            }
        }

        // Release the lock
        ngx_shmtx_unlock(&cache->shpool->mutex);

        ngx_free(name);

        return wait;
    }

You can see that the main logic here examines only the element at the tail of the queue. Under the LRU algorithm, the tail element is the one most likely to have expired, so it is the only one that needs to be checked. If the element has not expired, the method returns. If it has expired and its reference count is 0, the element and its disk file are deleted directly. If the reference count is not 0, the method checks whether the element is being deleted. Note that a process deleting an element sets its reference count to 1 to prevent other processes from deleting it as well. If the element is being deleted, the current process leaves it alone. Otherwise, the element is moved from the tail of the queue to the head with a new expiration time: although it has expired, it is still referenced and no process is deleting it, so it is treated as an active element. The loop then repeats with the new tail until the per-run limits manager_files or manager_threshold are reached.
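The decision made for each tail element can be sketched in isolation. The following is a minimal illustration, not nginx code: node_t, queue_init(), queue_remove(), queue_insert_head() and expire_pass() are hypothetical names, and locking, file deletion and the manager_files and manager_threshold limits are left out.

```c
#include <assert.h>
#include <stddef.h>
#include <time.h>

/* Hypothetical, simplified stand-in for ngx_http_file_cache_node_t. */
typedef struct node_s {
    struct node_s *prev, *next;
    time_t         expire;    /* absolute expiration time */
    unsigned       count;     /* number of active references */
    unsigned       deleting;  /* set while another process removes the entry */
} node_t;

/* Circular doubly linked list with a sentinel, in the spirit of ngx_queue_t. */
static void queue_init(node_t *s) { s->prev = s; s->next = s; }

static void queue_remove(node_t *n)
{
    n->prev->next = n->next;
    n->next->prev = n->prev;
}

static void queue_insert_head(node_t *s, node_t *n)
{
    n->next = s->next;
    n->prev = s;
    s->next->prev = n;
    s->next = n;
}

/*
 * One expiry pass over the tail of the queue. Returns the number of seconds
 * to wait before the next pass and stores the number of removed nodes in
 * *deleted.
 */
static time_t expire_pass(node_t *s, time_t now, time_t inactive,
                          unsigned *deleted)
{
    assert(s != NULL && deleted != NULL);
    assert(inactive > 0);   /* otherwise a referenced node would never age out */

    *deleted = 0;

    for ( ;; ) {
        node_t *tail = s->prev;
        time_t  wait;

        if (tail == s) {
            return 10;                      /* empty queue */
        }

        wait = tail->expire - now;
        if (wait > 0) {
            return wait > 10 ? 10 : wait;   /* tail has not expired yet */
        }

        if (tail->count == 0) {
            queue_remove(tail);             /* expired and unreferenced */
            (*deleted)++;
            continue;
        }

        if (tail->deleting) {
            return 1;                       /* another process is removing it */
        }

        /* Expired but still referenced: move to the head with a new lease. */
        queue_remove(tail);
        tail->expire = now + inactive;
        queue_insert_head(s, tail);
    }
}
```

With a queue holding a fresh node, an expired referenced node and an expired unreferenced node (head to tail), one pass removes the unreferenced node, moves the referenced one to the head, and stops at the fresh node.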

Next is how the cache manager forces element cleanup when resources are tight, which is implemented in the ngx_http_file_cache_forced_expire() method:

    static time_t
    ngx_http_file_cache_forced_expire(ngx_http_file_cache_t *cache)
    {
        u_char                      *name;
        size_t                       len;
        time_t                       wait;
        ngx_uint_t                   tries;
        ngx_path_t                  *path;
        ngx_queue_t                 *q;
        ngx_http_file_cache_node_t  *fcn;

        ngx_log_debug0(NGX_LOG_DEBUG_HTTP, ngx_cycle->log, 0,
                       "http file cache forced expire");

        path = cache->path;
        len = path->name.len + 1 + path->len + 2 * NGX_HTTP_CACHE_KEY_LEN;

        name = ngx_alloc(len + 1, ngx_cycle->log);
        if (name == NULL) {
            return 10;
        }

        ngx_memcpy(name, path->name.data, path->name.len);

        wait = 10;
        tries = 20;

        ngx_shmtx_lock(&cache->shpool->mutex);

        // Walk the queue from the tail towards the head
        for (q = ngx_queue_last(&cache->sh->queue);
             q != ngx_queue_sentinel(&cache->sh->queue);
             q = ngx_queue_prev(q))
        {
            // Get the cache node for the current queue element
            fcn = ngx_queue_data(q, ngx_http_file_cache_node_t, queue);

            ngx_log_debug6(NGX_LOG_DEBUG_HTTP, ngx_cycle->log, 0,
                      "http file cache forced expire: #%d %d %02xd%02xd%02xd%02xd",
                      fcn->count, fcn->exists,
                      fcn->key[0], fcn->key[1], fcn->key[2], fcn->key[3]);

            // If the node has no references, delete it and stop
            if (fcn->count == 0) {
                ngx_http_file_cache_delete(cache, q, name);
                wait = 0;

            } else {
                // The node is referenced: try the next one, and give up
                // once 20 referenced nodes have been seen
                if (--tries) {
                    continue;
                }

                wait = 1;
            }

            break;
        }

        ngx_shmtx_unlock(&cache->shpool->mutex);

        ngx_free(name);

        return wait;
    }

You can see that the logic here is fairly simple: it checks the reference count of the elements in the queue, starting at the tail and moving towards the head. The first element whose reference count is 0 is deleted, and the method returns 0 so that the caller can check again whether resources are still tight. If an element's reference count is not 0, the next element is checked. Note that after 20 referenced elements have been seen, the method gives up and leaves the loop with a wait time of 1 second.
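The scan with a limited number of tries can be sketched on a plain array. This is only an illustration under simplifying assumptions: entry_t, forced_expire() and shrink_to() are hypothetical names, index n - 1 plays the role of the queue tail, and the caller loop stands in for the manager loop that repeats the purge while the cache is over its limit.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical entry: only the fields the scan needs. */
typedef struct {
    unsigned count;    /* active references */
    int      removed;  /* set once the entry has been evicted */
} entry_t;

/*
 * Scan entries from the tail (index n - 1) towards the head (index 0).
 * Evict the first unreferenced entry and return 0. Give up and return 1
 * after `tries` referenced entries. Return 10 if the scan reaches the
 * head without evicting anything.
 */
static int forced_expire(entry_t *e, size_t n, unsigned tries)
{
    assert(e != NULL || n == 0);
    assert(tries > 0);

    for (size_t i = n; i-- > 0; ) {
        if (e[i].removed) {
            continue;               /* already gone, not part of the queue */
        }

        if (e[i].count == 0) {
            e[i].removed = 1;       /* evict exactly one entry per call */
            return 0;
        }

        if (--tries == 0) {
            return 1;               /* too many busy entries, retry later */
        }
    }

    return 10;
}

/* Repeat the purge while more than `limit` entries are live. */
static size_t shrink_to(entry_t *e, size_t n, size_t live, size_t limit)
{
    while (live > limit && forced_expire(e, n, 20) == 0) {
        live--;
    }

    return live;
}
```

Evicting one entry per call keeps each pass under the lock short, and the caller decides after every eviction whether another one is still needed.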

3.4 Cache loader process logic

As mentioned earlier, the main processing flow of the cache loader is in the ngx_cache_loader_process_handler() method, which has the following main processing logic:

    static void
    ngx_cache_loader_process_handler(ngx_event_t *ev)
    {
        ngx_uint_t     i;
        ngx_path_t   **path;
        ngx_cycle_t   *cycle;

        cycle = (ngx_cycle_t *) ngx_cycle;

        path = cycle->paths.elts;
        for (i = 0; i < cycle->paths.nelts; i++) {

            if (ngx_terminate || ngx_quit) {
                break;
            }

            // The loader method here points to ngx_http_file_cache_loader()
            if (path[i]->loader) {
                path[i]->loader(path[i]->data);
                ngx_time_update();
            }
        }

        // Exit the current process after loading is complete
        exit(0);
    }

The main flow of the cache loader is very similar to that of the cache manager: it calls the loader() method of each path to load data. The concrete implementation of loader() is also set when the proxy_cache_path configuration item is parsed, as follows (see the last part of section 3.1):

cache->path->loader = ngx_http_file_cache_loader;

Here we continue reading the source code for the ngx_http_file_cache_loader() method:

    static void
    ngx_http_file_cache_loader(void *data)
    {
        ngx_http_file_cache_t  *cache = data;

        ngx_tree_ctx_t  tree;

        // Return directly if loading is already complete or in progress
        if (!cache->sh->cold || cache->sh->loading) {
            return;
        }

        // Try to take the loading lock
        if (!ngx_atomic_cmp_set(&cache->sh->loading, 0, ngx_pid)) {
            return;
        }

        ngx_log_debug0(NGX_LOG_DEBUG_HTTP, ngx_cycle->log, 0,
                       "http file cache loader");

        // tree is the context object that drives the recursive load
        tree.init_handler = NULL;
        // Handles the loading of a single file
        tree.file_handler = ngx_http_file_cache_manage_file;
        // Called before a directory is entered; mainly checks whether
        // the current directory has the required permissions
        tree.pre_tree_handler = ngx_http_file_cache_manage_directory;
        // Called after a directory has been loaded; this is an empty method
        tree.post_tree_handler = ngx_http_file_cache_noop;
        // Handles special entries that are neither regular files nor
        // directories; here they are deleted
        tree.spec_handler = ngx_http_file_cache_delete_file;
        tree.data = cache;
        tree.alloc = 0;
        tree.log = ngx_cycle->log;

        cache->last = ngx_current_msec;
        cache->files = 0;

        // Recursively walk all files under the cache directory and process
        // them with the handlers above, that is, load them into shared memory
        if (ngx_walk_tree(&tree, &cache->path->name) == NGX_ABORT) {
            cache->sh->loading = 0;
            return;
        }

        // Mark loading as finished
        cache->sh->cold = 0;
        cache->sh->loading = 0;

        ngx_log_error(NGX_LOG_NOTICE, ngx_cycle->log, 0,
                      "http file cache: %V %.3fM, bsize: %uz",
                      &cache->path->name,
                      ((double) cache->sh->size * cache->bsize) / (1024 * 1024),
                      cache->bsize);
    }

During loading, an ngx_tree_ctx_t structure is first filled in with the handlers used to load files, and it is then passed to ngx_walk_tree() together with the target directory. The actual loading logic is mainly in the ngx_walk_tree() method, and the entire loading process is carried out recursively. The following is the ngx_walk_tree() method:

    ngx_int_t
    ngx_walk_tree(ngx_tree_ctx_t *ctx, ngx_str_t *tree)
    {
        void       *data, *prev;
        u_char     *p, *name;
        size_t      len;
        ngx_int_t   rc;
        ngx_err_t   err;
        ngx_str_t   file, buf;
        ngx_dir_t   dir;

        ngx_str_null(&buf);

        ngx_log_debug1(NGX_LOG_DEBUG_CORE, ctx->log, 0,
                       "walk tree \"%V\"", tree);

        // Open the target directory
        if (ngx_open_dir(tree, &dir) == NGX_ERROR) {
            ngx_log_error(NGX_LOG_CRIT, ctx->log, ngx_errno,
                          ngx_open_dir_n " \"%s\" failed", tree->data);
            return NGX_ERROR;
        }

        prev = ctx->data;

        // The cache loader passes alloc = 0, so this branch is not taken
        if (ctx->alloc) {
            data = ngx_alloc(ctx->alloc, ctx->log);
            if (data == NULL) {
                goto failed;
            }

            if (ctx->init_handler(data, prev) == NGX_ABORT) {
                goto failed;
            }

            ctx->data = data;

        } else {
            data = NULL;
        }

        for ( ;; ) {

            ngx_set_errno(0);

            // Read the next entry of the current directory
            if (ngx_read_dir(&dir) == NGX_ERROR) {
                err = ngx_errno;

                if (err == NGX_ENOMOREFILES) {
                    rc = NGX_OK;

                } else {
                    ngx_log_error(NGX_LOG_CRIT, ctx->log, err,
                                  ngx_read_dir_n " \"%s\" failed", tree->data);
                    rc = NGX_ERROR;
                }

                goto done;
            }

            len = ngx_de_namelen(&dir);
            name = ngx_de_name(&dir);

            ngx_log_debug2(NGX_LOG_DEBUG_CORE, ctx->log, 0,
                          "tree name %uz:\"%s\"", len, name);

            // Skip ".", which refers to the current directory
            if (len == 1 && name[0] == '.') {
                continue;
            }

            // Skip "..", which refers to the parent directory
            if (len == 2 && name[0] == '.' && name[1] == '.') {
                continue;
            }

            file.len = tree->len + 1 + len;

            // Grow the path buffer if the full path does not fit
            if (file.len + NGX_DIR_MASK_LEN > buf.len) {

                if (buf.len) {
                    ngx_free(buf.data);
                }

                buf.len = tree->len + 1 + len + NGX_DIR_MASK_LEN;

                buf.data = ngx_alloc(buf.len + 1, ctx->log);
                if (buf.data == NULL) {
                    goto failed;
                }
            }

            p = ngx_cpymem(buf.data, tree->data, tree->len);
            *p++ = '/';
            ngx_memcpy(p, name, len + 1);

            file.data = buf.data;

            ngx_log_debug1(NGX_LOG_DEBUG_CORE, ctx->log, 0,
                           "tree path \"%s\"", file.data);

            if (!dir.valid_info) {
                if (ngx_de_info(file.data, &dir) == NGX_FILE_ERROR) {
                    ngx_log_error(NGX_LOG_CRIT, ctx->log, ngx_errno,
                                  ngx_de_info_n " \"%s\" failed", file.data);
                    continue;
                }
            }

            // For a regular file, call ctx->file_handler() to load it
            if (ngx_de_is_file(&dir)) {

                ngx_log_debug1(NGX_LOG_DEBUG_CORE, ctx->log, 0,
                               "tree file \"%s\"", file.data);

                // Set file properties
                ctx->size = ngx_de_size(&dir);
                ctx->fs_size = ngx_de_fs_size(&dir);
                ctx->access = ngx_de_access(&dir);
                ctx->mtime = ngx_de_mtime(&dir);

                if (ctx->file_handler(ctx, &file) == NGX_ABORT) {
                    goto failed;
                }

            // For a directory, call pre_tree_handler() first, then call
            // ngx_walk_tree() recursively to read the subdirectory, and
            // finally call post_tree_handler()
            } else if (ngx_de_is_dir(&dir)) {

                ngx_log_debug1(NGX_LOG_DEBUG_CORE, ctx->log, 0,
                               "tree enter dir \"%s\"", file.data);

                ctx->access = ngx_de_access(&dir);
                ctx->mtime = ngx_de_mtime(&dir);

                // Apply the pre-directory logic
                rc = ctx->pre_tree_handler(ctx, &file);

                if (rc == NGX_ABORT) {
                    goto failed;
                }

                if (rc == NGX_DECLINED) {
                    ngx_log_debug1(NGX_LOG_DEBUG_CORE, ctx->log, 0,
                                   "tree skip dir \"%s\"", file.data);
                    continue;
                }

                // Recursively read the subdirectory
                if (ngx_walk_tree(ctx, &file) == NGX_ABORT) {
                    goto failed;
                }

                ctx->access = ngx_de_access(&dir);
                ctx->mtime = ngx_de_mtime(&dir);

                // Apply the post-directory logic
                if (ctx->post_tree_handler(ctx, &file) == NGX_ABORT) {
                    goto failed;
                }

            } else {

                ngx_log_debug1(NGX_LOG_DEBUG_CORE, ctx->log, 0,
                               "tree special \"%s\"", file.data);

                if (ctx->spec_handler(ctx, &file) == NGX_ABORT) {
                    goto failed;
                }
            }
        }

    failed:

        rc = NGX_ABORT;

    done:

        if (buf.len) {
            ngx_free(buf.data);
        }

        if (data) {
            ngx_free(data);
            ctx->data = prev;
        }

        if (ngx_close_dir(&dir) == NGX_ERROR) {
            ngx_log_error(NGX_LOG_CRIT, ctx->log, ngx_errno,
                          ngx_close_dir_n " \"%s\" failed", tree->data);
        }

        return rc;
    }
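The callback-driven recursion of ngx_walk_tree() can be illustrated without touching the file system. The sketch below walks an in-memory tree instead of a real directory; every name in it (entry_t, walk_ctx_t, walk_tree(), the WALK_* codes and the example handlers) is hypothetical and only mirrors the roles of file_handler, pre_tree_handler, post_tree_handler and spec_handler.

```c
#include <assert.h>
#include <stddef.h>

enum { WALK_OK = 0, WALK_ABORT = -1, WALK_DECLINED = -2 };

typedef enum { ENTRY_FILE, ENTRY_DIR, ENTRY_SPECIAL } entry_kind_t;

/* In-memory stand-in for a directory entry. */
typedef struct entry_s {
    entry_kind_t          kind;
    const struct entry_s *children;    /* used only by ENTRY_DIR */
    size_t                nchildren;
} entry_t;

typedef struct walk_ctx_s walk_ctx_t;
typedef int (*walk_handler_pt)(walk_ctx_t *ctx, const entry_t *e);

struct walk_ctx_s {
    walk_handler_pt  file_handler;       /* regular file */
    walk_handler_pt  pre_tree_handler;   /* before entering a directory */
    walk_handler_pt  post_tree_handler;  /* after leaving a directory */
    walk_handler_pt  spec_handler;       /* anything else */
    void            *data;               /* user data shared by handlers */
};

/* Visit every child of dir, recursing into subdirectories. */
static int walk_tree(walk_ctx_t *ctx, const entry_t *dir)
{
    assert(ctx != NULL && dir != NULL && dir->kind == ENTRY_DIR);

    for (size_t i = 0; i < dir->nchildren; i++) {
        const entry_t *e = &dir->children[i];
        int            rc;

        switch (e->kind) {

        case ENTRY_FILE:
            if (ctx->file_handler(ctx, e) == WALK_ABORT) return WALK_ABORT;
            break;

        case ENTRY_DIR:
            rc = ctx->pre_tree_handler(ctx, e);
            if (rc == WALK_ABORT) return WALK_ABORT;
            if (rc == WALK_DECLINED) break;           /* skip this subtree */
            if (walk_tree(ctx, e) == WALK_ABORT) return WALK_ABORT;
            if (ctx->post_tree_handler(ctx, e) == WALK_ABORT) return WALK_ABORT;
            break;

        default:
            if (ctx->spec_handler(ctx, e) == WALK_ABORT) return WALK_ABORT;
            break;
        }
    }

    return WALK_OK;
}

/* Example handlers that only count what they see. */
typedef struct { size_t files, dirs, specials; } stats_t;

static int count_file(walk_ctx_t *ctx, const entry_t *e)
{ (void) e; ((stats_t *) ctx->data)->files++; return WALK_OK; }

static int enter_dir(walk_ctx_t *ctx, const entry_t *e)
{ (void) e; ((stats_t *) ctx->data)->dirs++; return WALK_OK; }

static int decline_dir(walk_ctx_t *ctx, const entry_t *e)
{ (void) ctx; (void) e; return WALK_DECLINED; }

static int noop(walk_ctx_t *ctx, const entry_t *e)
{ (void) ctx; (void) e; return WALK_OK; }

static int count_special(walk_ctx_t *ctx, const entry_t *e)
{ (void) e; ((stats_t *) ctx->data)->specials++; return WALK_OK; }
```

Keeping the traversal separate from the handlers means the same walker can serve different purposes; swapping enter_dir for decline_dir, for example, makes the walk skip every subdirectory without changing walk_tree() itself.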

From ngx_walk_tree() you can see that the actual logic for loading a file is in the file_handler callback, which here is the ngx_http_file_cache_manage_file() method. Its source code is as follows:

    static ngx_int_t
    ngx_http_file_cache_manage_file(ngx_tree_ctx_t *ctx, ngx_str_t *path)
    {
        ngx_msec_t              elapsed;
        ngx_http_file_cache_t  *cache;

        cache = ctx->data;

        // Add the file to shared memory; if that fails, delete the file
        if (ngx_http_file_cache_add_file(ctx, path) != NGX_OK) {
            (void) ngx_http_file_cache_delete_file(ctx, path);
        }

        // Sleep for a while once the number of files loaded reaches
        // the number specified by loader_files
        if (++cache->files >= cache->loader_files) {
            ngx_http_file_cache_loader_sleep(cache);

        } else {
            // Update nginx's cached time
            ngx_time_update();

            // Calculate the time spent loading so far
            elapsed = ngx_abs((ngx_msec_int_t) (ngx_current_msec - cache->last));

            ngx_log_debug1(NGX_LOG_DEBUG_HTTP, ngx_cycle->log, 0,
                           "http file cache loader time elapsed: %M", elapsed);

            // If loading has taken longer than loader_threshold,
            // sleep for the specified time
            if (elapsed >= cache->loader_threshold) {
                ngx_http_file_cache_loader_sleep(cache);
            }
        }

        return (ngx_quit || ngx_terminate) ? NGX_ABORT : NGX_OK;
    }

The loading logic here is relatively simple. The method loads the file into shared memory and then checks two limits. If the number of files loaded has reached loader_files, the loader sleeps for the configured time. Otherwise, if the total time spent loading has reached loader_threshold, the loader also sleeps for the configured time. Finally, the method returns NGX_ABORT if nginx is quitting or terminating, which stops the directory walk.

4. Summary

This article first explains how nginx shared memory is used and what each parameter means, then explains how shared memory is implemented, and finally focuses on the initialization of shared memory and on how the cache manager and the cache loader work.