Foreword: Nginx is commonly used as a reverse proxy or load-balancing web server. In a microservice architecture, it is also placed in front of the API gateways to load-balance the gateway cluster.

1. Introduction to Nginx

1.1, What is Nginx

Nginx is a high-performance HTTP and reverse proxy web server with a small memory footprint and strong concurrency. It performs well among web servers of its kind and is a good alternative to Apache under high connection concurrency.

Nginx was designed and developed by Igor Sysoev for rambler.ru, the second most visited site in Russia. It was released in 2004 and has matured steadily as an open-source project. Nginx was built with performance and efficiency as its primary requirements, and it has been reported to support up to 50,000 concurrent connections.

Nginx Knowledge Network Structure Diagram

1.2, Nginx Common Functions

(1) Reverse proxy

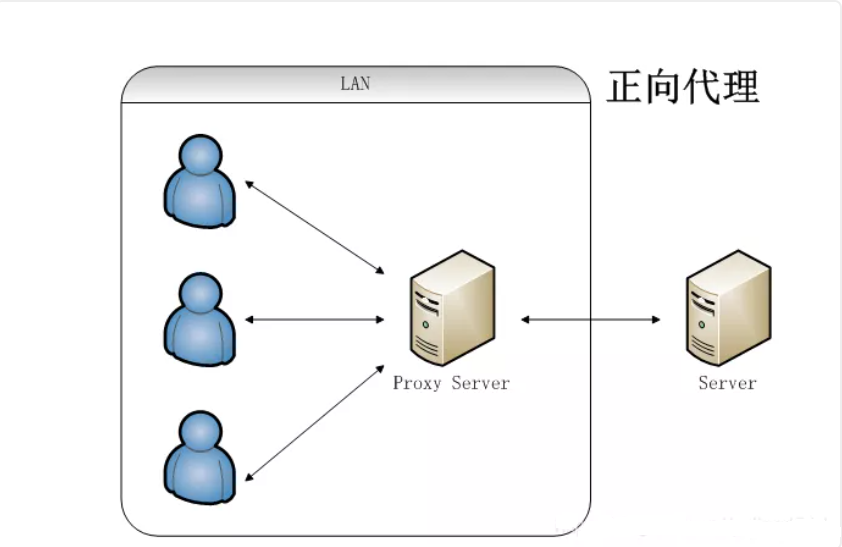

Forward proxy: Users on a local area network cannot reach the external network directly; they can only reach it through a proxy server that is configured on the client side. This kind of proxy is called a forward proxy.

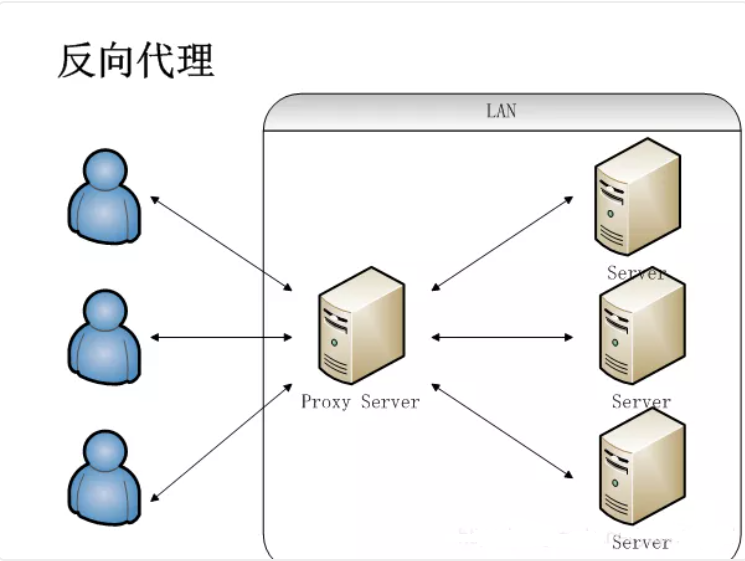

Reverse proxy: The client is unaware of the proxy and needs no special configuration. It simply sends its request to the reverse proxy server, which selects a target server, fetches the data, and returns it to the client. To the outside, the reverse proxy server and the target server appear as a single server: only the proxy server's address is exposed, and the real server's IP address is hidden.
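As a rough illustration of the reverse proxy described above, a minimal server block might look like the following sketch. The host name www.example.com and the backend address 127.0.0.1:8080 are assumptions made for this example, not values from the article.

```nginx
# Minimal reverse proxy sketch (goes inside the http block).
server {
    listen 80;
    server_name www.example.com;          # hypothetical public host name

    location / {
        # Forward every request to the hidden backend (assumed address).
        proxy_pass http://127.0.0.1:8080;

        # Pass the original host and client address on to the backend,
        # since the backend otherwise only sees the proxy's address.
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

Clients only ever talk to port 80 on the proxy; the backend address never appears in their requests or responses.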

(2) Load Balancing

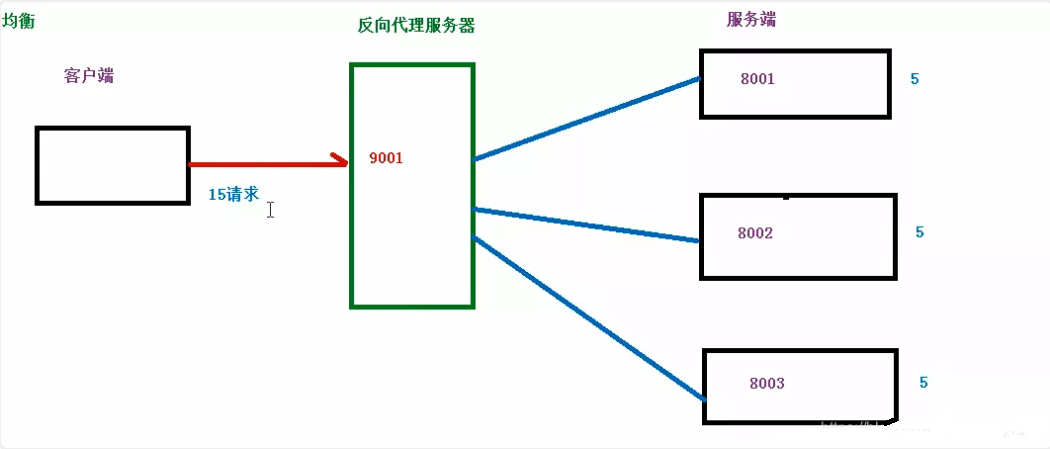

The usual request and response flow: clients send requests to the server, the server processes them (some requests involve the database), and then returns the results to the client. As traffic and data volume grow rapidly, this simple architecture can no longer keep up. The first idea is to upgrade the server hardware, but as Moore's Law increasingly breaks down, improving performance through hardware alone becomes less and less practical. How can we meet this need?

We can add servers and build a cluster, so that requests formerly concentrated on a single server are distributed across several. This is what we call load balancing. For example, if 15 requests reach the proxy server and there are three backend servers, the proxy distributes them evenly and each server handles five requests.

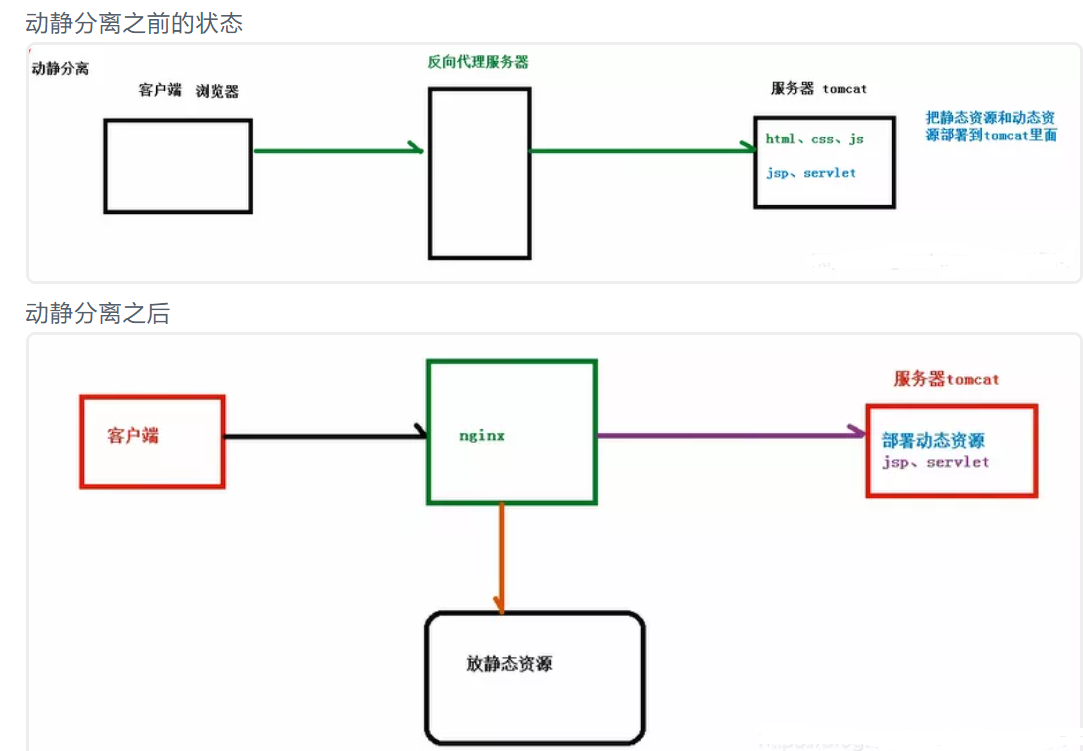
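The even distribution described above could be configured roughly as follows. This is a sketch under assumptions: the pool name backend_pool and the three backend addresses are invented for illustration.

```nginx
# Load balancing sketch (goes inside the http block).
# With no extra parameters, nginx uses round robin with equal weights,
# so 15 requests are spread as 5 per server while all three are healthy.
upstream backend_pool {                   # hypothetical pool name
    server 192.168.8.1:8080;              # assumed backend addresses
    server 192.168.8.2:8080;
    server 192.168.8.3:8080;
}

server {
    listen 80;

    location / {
        # Hand each request to the next server in the pool.
        proxy_pass http://backend_pool;
    }
}
```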

(3) Dynamic-static separation

To speed up page delivery, dynamic and static content can be handled by different servers. This speeds up responses and reduces the load on any single server.
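One possible way to express this separation is sketched below: nginx serves static files from disk itself and forwards everything else to an application server. The directory /var/www/static, the file extension list, and the backend address are assumptions for the example.

```nginx
# Dynamic-static separation sketch (goes inside the http block).
server {
    listen 80;
    server_name www.example.com;          # hypothetical host name

    # Static resources: served by nginx directly from disk.
    location ~* \.(html|css|js|png|jpg|gif|ico)$ {
        root /var/www/static;             # assumed static file directory
        expires 30d;                      # let browsers cache static files
    }

    # Everything else is treated as dynamic and sent to the application server.
    location / {
        proxy_pass http://127.0.0.1:8080; # assumed application server
    }
}
```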

2. Nginx Installation and Common Commands

2.1. Install Nginx on Linux

CentOS 7: installing and configuring nginx

2.2, Common Nginx commands

View Version

./nginx -v

Start

./nginx

Stop (there are two ways; ./nginx -s quit is recommended because it shuts down gracefully)

./nginx -s stop

./nginx -s quit

Check the nginx.conf configuration file for syntax errors:

./nginx -t

Reload nginx configuration

./nginx -s reload

3. Detailed description of Nginx configuration file

After installing Nginx, open the nginx.conf file in the conf folder, where the basic configuration of the Nginx server is stored by default.

The comment symbol in nginx.conf is: #

The default nginx configuration file nginx.conf is as follows:

#user nobody;

worker_processes 1;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

#gzip on;

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

root html;

index index.html index.htm;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ \.php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ \.php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /\.ht {

# deny all;

#}

}

# another virtual host using mix of IP-, name-, and port-based configuration

#

#server {

# listen 8000;

# listen somename:8080;

# server_name somename alias another.alias;

# location / {

# root html;

# index index.html index.htm;

# }

#}

# HTTPS server

#

#server {

# listen 443 ssl;

# server_name localhost;

# ssl_certificate cert.pem;

# ssl_certificate_key cert.key;

# ssl_session_cache shared:SSL:1m;

# ssl_session_timeout 5m;

# ssl_ciphers HIGH:!aNULL:!MD5;

# ssl_prefer_server_ciphers on;

# location / {

# root html;

# index index.html index.htm;

# }

#}

}

3.1. Configuration file structure

... #Global Block

events { #events block

...

}

http #http block

{

... #http global block

server #server block

{

... #server global block

location [PATTERN] #location block

{

...

}

location [PATTERN]

{

...

}

}

server

{

...

}

... #http global block

}

Global block: Everything from the start of the configuration file up to the events block. It holds directives that affect the nginx server as a whole, such as the user (and group) that runs nginx, the path of the nginx pid file, log paths, included configuration files, and the number of worker processes allowed.

events block: Directives that affect the network connections between the nginx server and its users, such as the maximum number of connections per worker process, which event-driven model handles connection requests, whether a process may accept multiple network connections at once, and whether connection acceptance is serialized across processes.

http block: Can contain multiple server blocks and holds proxy, cache, and log definitions as well as most other features and third-party module configuration, such as file includes, MIME type definitions, custom log formats, whether to use sendfile for file transfer, connection timeouts, and the number of requests per connection.

server block: Configures the parameters of a virtual host. One http block can contain multiple server blocks.

location block: Configures request routing and how each kind of page is handled.

3.2, http module description

The http module is the core module of nginx as a web server, and it has a large number of configuration items. Real projects cover many business scenarios, and the settings should be tuned to the hardware at hand. In most cases the default configuration is sufficient.

http {

##

# Basic Configuration

##

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

# server_tokens off;

# server_names_hash_bucket_size 64;

# server_name_in_redirect off;

include /etc/nginx/mime.types;

default_type application/octet-stream;

##

# SSL Certificate Configuration

##

ssl_protocols TLSv1 TLSv1.1 TLSv1.2; # Dropping SSLv3, ref: POODLE

ssl_prefer_server_ciphers on;

##

# Log Configuration

##

access_log /var/log/nginx/access.log;

error_log /var/log/nginx/error.log;

##

# Gzip Compression Configuration

##

gzip on;

gzip_disable "msie6";

# gzip_vary on;

# gzip_proxied any;

# gzip_comp_level 6;

# gzip_buffers 16 8k;

# gzip_http_version 1.1;

# gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;

##

# Virtual Host Configuration

##

include /etc/nginx/conf.d/*.conf;

include /etc/nginx/sites-enabled/*;

}

3.3, location directive description

The location directive matches the request uri. Its syntax is as follows:

location [ = | ~ | ~* | ^~ ] uri {

}

- =: Used before a uri without regular expressions. The request must match the uri string exactly; on a match, the search stops and the request is handled immediately.

- ~: Indicates that the uri contains a regular expression and is case-sensitive.

- ~*: Indicates that the uri contains a regular expression and is case-insensitive.

- ^~: Used before a uri without regular expressions. nginx finds the location whose prefix string best matches the request and uses it immediately, without going on to check the regular expression locations. For example, location ^~ /uri/ {...} matches any address that starts with /uri/.

- If the uri contains a regular expression, it must be marked with ~ or ~*.

- @: Defines a named location used for redirection inside nginx; it does not handle client requests directly. For example, location @error {...} can be the target of a redirect from another location, such as location ~ /test { error_page 404 @error; }.
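To make the modifiers above more concrete, here is a small sketch that labels each location with a fixed response, so the chosen block is visible in the reply. The paths and response texts are invented for illustration.

```nginx
# Location matching sketch (goes inside the http block).
server {
    listen 80;

    # Exact match: only the request "/" lands here.
    location = / {
        return 200 "exact match\n";
    }

    # Prefix match with ^~: wins for /static/... and skips all regex checks,
    # so /static/logo.png is answered here, not by the image regex below.
    location ^~ /static/ {
        return 200 "prefix match, regex skipped\n";
    }

    # Case-sensitive regex: matches /index.php but not /index.PHP.
    location ~ \.php$ {
        return 200 "case-sensitive regex\n";
    }

    # Case-insensitive regex: matches /img/a.png and /img/A.PNG.
    location ~* \.(gif|jpg|png)$ {
        return 200 "case-insensitive regex\n";
    }

    # Plain prefix: the fallback when nothing more specific matches.
    location / {
        return 200 "default prefix match\n";
    }
}
```

Requesting each path with a tool such as curl shows which block handled it.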

3.4. Below is a configuration file for your understanding

################ Each directive must end with a semicolon ##########################

#Running User

user admin;

#Number of worker processes to start, usually set equal to the number of CPU cores (here 1 process)

worker_processes 1;

#Global error log and PID file

error_log /var/log/nginx/error.log;

pid /var/run/nginx.pid;

#Operating mode and maximum number of connections

events {

use epoll; #epoll is an I/O multiplexing mechanism available only on Linux kernels 2.6 and later; it can greatly improve nginx performance

worker_connections 1024; #Maximum number of concurrent connections for a single worker process

# multi_accept on; #Sets whether a process accepts multiple network connections at the same time, defaulting to off

accept_mutex on; #Serialize connection acceptance to prevent the thundering herd problem (the default was on before nginx 1.11.3 and is off since)

}

#Set up an http server to leverage its reverse proxy capabilities to provide load balancing support

http {

#Set the MIME types, defined in the mime.types file

include /etc/nginx/mime.types;

default_type application/octet-stream;

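#Set the log format (the server block below refers to it by the name main)

log_format main '$remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"';

access_log /var/log/nginx/access.log main;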

#The sendfile directive specifies whether nginx calls the sendfile function (zero-copy mode) to output files. For normal applications

#it should be on; for disk-I/O-heavy applications such as downloads it can be set to off to balance disk and network I/O and reduce system load.

sendfile on;

#tcp_nopush on;

#Connection timeout

#keepalive_timeout 0;

keepalive_timeout 65;

tcp_nodelay on;

#Turn on gzip compression

gzip on;

gzip_disable "MSIE [1-6]\.(?!.*SV1)";

#Set Request Buffer

client_header_buffer_size 1k;

large_client_header_buffers 4 4k;

include /etc/nginx/conf.d/*.conf;

include /etc/nginx/sites-enabled/*;

#Set load balancing server list

upstream mysvr {

#The weight parameter sets the weight; the higher the weight, the larger the share of requests assigned to the server

#Squid on this machine listens on port 3128

server 192.168.8.1:3128 weight=5;

server 192.168.8.2:80 weight=1;

server 192.168.8.3:80 weight=6;

}

server {

#Listen on port 80

listen 80;

#Define access using www.xx.com

server_name www.xx.com;

#Set the access log for this virtual host

access_log logs/www.xx.com.access.log main;

#Default request for load balancing

location / {

root /root; #Define the default site root location for the server

index index.php index.html index.htm; #Define the name of the index file on the first page

proxy_pass http://mysvr; #Forward requests to the server list defined by the upstream mysvr

deny 127.0.0.2; #ip rejected

allow 172.18.5.54; #ip allowed

}

# Define error prompt page

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /root;

}

#Static file, nginx handles itself

location ~ ^/(images|javascript|js|css|flash|media|static)/ {

root /var/www/virtual/htdocs;

#Expire after 30 days. Static files seldom change, so the expiry can be long; shorten it if they are updated frequently.

expires 30d;

}

#Set an address to view Nginx status

location /NginxStatus {

stub_status on;

access_log on;

auth_basic "NginxStatus";

auth_basic_user_file conf/htpasswd;

}

#Prohibit access to .htxxx files

location ~ /\.ht {

deny all;

}

}

}

Upstream module: Mainly responsible for load balancing configuration. It defines the upstream server cluster that proxy_pass refers to in the reverse proxy, and distributes requests to the backend servers using the default round-robin schedule.
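Besides the default round-robin schedule, the upstream module offers other distribution methods. The sketch below shows three common ones side by side; the pool names are hypothetical, and the addresses reuse the example range from the configuration above.

```nginx
# Upstream scheduling sketches (each goes inside the http block).

# Weighted round robin: the first server receives about three of every four requests.
upstream pool_weighted {
    server 192.168.8.1:80 weight=3;
    server 192.168.8.2:80 weight=1;
}

# ip_hash: requests from the same client address go to the same server,
# which helps when sessions are stored on the backend.
upstream pool_ip_hash {
    ip_hash;
    server 192.168.8.1:80;
    server 192.168.8.2:80;
}

# least_conn: each request goes to the server with the fewest active connections.
upstream pool_least_conn {
    least_conn;
    server 192.168.8.1:80;
    # Mark this server unavailable for 30s after 3 failed attempts.
    server 192.168.8.2:80 max_fails=3 fail_timeout=30s;
    # Used only when the primary servers are unavailable.
    server 192.168.8.3:80 backup;
}
```

A location then selects a pool by name, for example proxy_pass http://pool_least_conn;.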

Thundering herd: When a network connection arrives, multiple sleeping processes are woken at the same time, but only one of them can accept the connection, which hurts system performance.
