Nginx

This project is a minimalist Nginx tutorial that aims to help beginners get started with Nginx quickly.

The examples in the demos directory simulate some common real-world scenarios. Each can be started with one click via a script, so you can quickly see it in action.

Summary

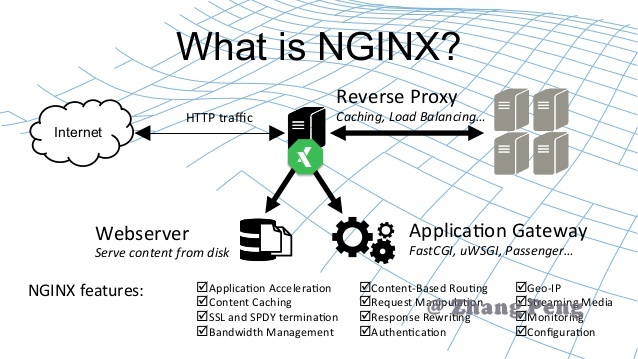

What is Nginx?

Nginx (engine x) is a lightweight web server, reverse proxy server and e-mail (IMAP/POP3) proxy server.

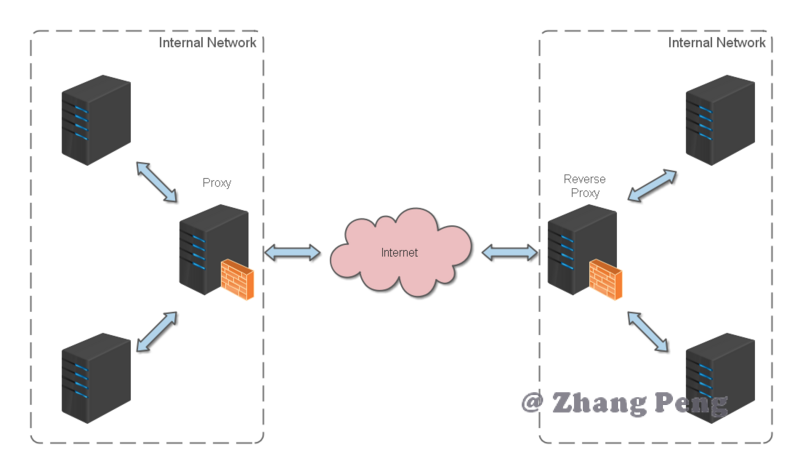

What is reverse proxy?

A reverse proxy is a proxy server that accepts connection requests from the internet, forwards them to a server on the internal network, and returns the result obtained from that server to the client on the internet that made the request. In this role, the proxy server acts as a reverse proxy server.
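To make this concrete, here is a toy reverse proxy written with Python's standard library. It is only a sketch of the idea, not how nginx is implemented: one http.server instance stands in for the server on the internal network, and a second one plays the reverse proxy that accepts the client's request, forwards it to that server and returns the result. The class and function names, the GET-only handling (with no error handling) and the use of OS-assigned ports are assumptions made for the demo.

```python
import http.client
import http.server
import threading


class BackendHandler(http.server.BaseHTTPRequestHandler):
    """Stands in for the web app on the internal network."""

    def do_GET(self):
        client = self.headers.get("X-Forwarded-For", "unknown")
        body = f"backend saw {self.path} for {client}".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # keep the demo output quiet


def make_proxy_handler(backend_host, backend_port):
    """Build a handler class that forwards every GET to the backend."""

    class ProxyHandler(http.server.BaseHTTPRequestHandler):
        def do_GET(self):
            # 1. Forward the client's request to the internal server
            upstream = http.client.HTTPConnection(backend_host, backend_port, timeout=5)
            upstream.request("GET", self.path, headers={
                "Host": self.headers.get("Host", backend_host),
                "X-Forwarded-For": self.client_address[0],  # tell the backend who the client is
            })
            resp = upstream.getresponse()
            body = resp.read()
            upstream.close()
            # 2. Return the backend's result to the client as the proxy's own response
            self.send_response(resp.status)
            self.send_header("Content-Type", resp.getheader("Content-Type", "application/octet-stream"))
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, fmt, *args):
            pass

    return ProxyHandler


def start(server):
    """Run a server in a background thread and return it."""
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(port, path):
    """Play the internet client: send a GET to the proxy only."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    conn.request("GET", path)
    resp = conn.getresponse()
    result = (resp.status, resp.read().decode())
    conn.close()
    return result


if __name__ == "__main__":
    # Port 0 lets the OS pick free ports for the demo
    backend = start(http.server.HTTPServer(("127.0.0.1", 0), BackendHandler))
    proxy = start(http.server.HTTPServer(
        ("127.0.0.1", 0), make_proxy_handler("127.0.0.1", backend.server_address[1])))
    print(fetch(proxy.server_address[1], "/hello"))
    proxy.shutdown()
    backend.shutdown()
```

Running it prints (200, 'backend saw /hello for 127.0.0.1'): the client only ever talks to the proxy, and the backend learns the client's address from the X-Forwarded-For header the proxy adds, which is the job of the proxy_set_header lines in the nginx configurations later in this tutorial.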

Installation and use

Install

For detailed installation instructions, see: Nginx installation

Usage

Nginx is easy to use: it takes just a few commands.

Common commands are as follows:

- nginx -s stop: fast shutdown. Nginx stops the web service immediately, without waiting for requests in progress to finish.
- nginx -s quit: graceful shutdown. Nginx finishes serving the current requests and then stops the web service.
- nginx -s reload: reload the configuration after you have changed Nginx's configuration files.
- nginx -s reopen: reopen the log files.
- nginx -c filename: use the specified configuration file instead of the default one.
- nginx -t: don't run, just test the configuration file. Nginx checks the syntax of the configuration file and tries to open the files it references.
- nginx -v: display the nginx version.
- nginx -V: display the nginx version, compiler version and configure parameters.

If you don't want to type the commands every time, you can create a batch file named startup.bat in the nginx installation directory and double-click it to run it. Its contents are as follows:

    @echo off
    rem If nginx was started before and recorded a pid file, stop that process
    nginx.exe -s stop

    rem Test the syntax of the configuration file
    nginx.exe -t -c conf/nginx.conf

    rem Display version information
    nginx.exe -v

    rem Start nginx with the specified configuration
    nginx.exe -c conf/nginx.conf

On Linux, you can write a shell script along the same lines.
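If you would rather keep one script for both Windows and Linux, the same steps as startup.bat can also be sketched in Python with the subprocess module. This is a hypothetical helper, assuming the nginx executable is on the PATH (pass a full path otherwise) and that conf/nginx.conf resolves the same way it does for the batch file.

```python
import subprocess
import sys


def build_startup_commands(nginx="nginx", conf="conf/nginx.conf"):
    """Return the steps of startup.bat as argument lists (hypothetical helper)."""
    return [
        [nginx, "-s", "stop"],      # stop an instance started earlier (fails harmlessly if none)
        [nginx, "-t", "-c", conf],  # test the configuration file syntax
        [nginx, "-v"],              # display version information
        [nginx, "-c", conf],        # start nginx with the specified configuration
    ]


def run_startup(nginx="nginx", conf="conf/nginx.conf"):
    """Run the steps in order, like double-clicking startup.bat."""
    stop, test, version, start = build_startup_commands(nginx, conf)
    subprocess.run(stop)  # a non-zero exit here just means nothing was running
    if subprocess.run(test).returncode != 0:
        sys.exit("configuration test failed; nginx was not started")
    subprocess.run(version)
    subprocess.run(start, check=True)
```

Call run_startup() to run the sequence. Unlike the batch file, this sketch stops at a failed nginx -t instead of going on to start nginx anyway.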

Nginx configuration in practice

I have always believed that the configuration of development tools is easier to understand when it is explained through real-world examples.

HTTP reverse proxy configuration

Let's start with a small goal: leaving complex configuration aside, we will just set up an HTTP reverse proxy.

The nginx.conf configuration file is as follows:

Note: conf/nginx.conf is the default configuration file of nginx. You can also use nginx -c to specify your own configuration file.

    #User to run the worker processes as
    #user somebody;

    #Number of worker processes, usually set equal to the number of CPUs
    worker_processes 1;

    #Global error logs
    error_log D:/Tools/nginx-1.10.1/logs/error.log;
    error_log D:/Tools/nginx-1.10.1/logs/notice.log notice;
    error_log D:/Tools/nginx-1.10.1/logs/info.log info;

    #PID file, which records the process ID of the currently running nginx
    pid D:/Tools/nginx-1.10.1/logs/nginx.pid;

    #Working mode and connection limit
    events {
        worker_connections 1024; #Maximum number of concurrent connections per worker process
    }

    #Set up the HTTP server and use its reverse proxy function to provide load balancing support
    http {
        #MIME types (mapping file extensions to Content-Type), defined in the mime.types file
        include D:/Tools/nginx-1.10.1/conf/mime.types;
        default_type application/octet-stream;

        #Log format
        log_format main '[$remote_addr] - [$remote_user] [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';

        access_log D:/Tools/nginx-1.10.1/logs/access.log main;
        rewrite_log on;

        #sendfile specifies whether nginx calls the sendfile function (zero-copy mode) to output files. For normal applications
        #it should be on. For download-heavy applications it can be set to off to balance disk and network I/O and reduce system load
        sendfile on;
        #tcp_nopush on;

        #Keep-alive connection timeout (seconds)
        keepalive_timeout 120;
        tcp_nodelay on;

        #gzip compression switch
        #gzip on;

        #List of actual backend servers
        upstream zp_server1 {
            server 127.0.0.1:8089;
        }

        #HTTP server
        server {
            #Listen on port 80, the well-known port for HTTP
            listen 80;

            #Domain name used to access this site
            server_name www.helloworld.com;

            #Home page
            index index.html;

            #Directory of the webapp
            root D:/01_Workspace/Project/github/zp/SpringNotes/spring-security/spring-shiro/src/main/webapp;

            #Character encoding
            charset utf-8;

            #Proxy parameters
            proxy_connect_timeout 180;
            proxy_send_timeout 180;
            proxy_read_timeout 180;
            proxy_set_header Host $host;
            proxy_set_header X-Forwarded-For $remote_addr;

            #Reverse proxy path (bound to the upstream); the path to map is given after location
            location / {
                proxy_pass http://zp_server1;
            }

            #Static files, served by nginx itself
            location ~ ^/(images|javascript|js|css|flash|media|static)/ {
                root D:/01_Workspace/Project/github/zp/SpringNotes/spring-security/spring-shiro/src/main/webapp/views;
                #Static files rarely change, so they expire after 30 days. Use a shorter value if they change often.
                expires 30d;
            }

            #Address for viewing the Nginx status
            location /NginxStatus {
                stub_status on;
                access_log off; #Don't log requests to the status page
                auth_basic "NginxStatus";
                auth_basic_user_file conf/htpasswd;
            }

            #Deny access to .ht* files (such as .htaccess)
            location ~ /\.ht {
                deny all;
            }

            #Error pages (optional)
            #error_page 404 /404.html;
            #error_page 500 502 503 504 /50x.html;
            #location = /50x.html {
            #    root html;
            #}
        }
    }

Now let's try it:

- When starting the webapp, make sure the port it binds to matches the port set in the nginx upstream.

- Edit the hosts file: add a DNS record to the hosts file in the C:\Windows\System32\drivers\etc directory:

127.0.0.1 www.helloworld.com

- Run the startup.bat script from the previous section.

- Visit www.helloworld.com in the browser. If everything is set up correctly, the site now loads.

Load balancing configuration

In the previous example, the proxy points to only one server.

In real-world operation, however, a website usually runs the same app on multiple servers, and load balancing is needed to spread the traffic across them.

Nginx can also provide simple load balancing.

Suppose the following scenario: the application is deployed on three Linux servers, 192.168.1.11:80, 192.168.1.12:80 and 192.168.1.13:80. The website's domain name is www.helloworld.com and its public IP is 192.168.1.11. Nginx is deployed on the server with the public IP and load balances all requests.

nginx.conf is configured as follows:

    http {
        #MIME types, defined in the mime.types file
        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        #Access log
        access_log /var/log/nginx/access.log;

        #List of servers to load balance across
        upstream load_balance_server {
            #weight sets the relative weight: a server with a higher weight receives proportionally more requests
            server 192.168.1.11:80 weight=5;
            server 192.168.1.12:80 weight=1;
            server 192.168.1.13:80 weight=6;
        }

        #HTTP server
        server {
            #Listen on port 80
            listen 80;

            #Domain name used to access this site
            server_name www.helloworld.com;

            #Load balance all requests
            location / {
                root /root; #Default site root directory for this server
                index index.html index.htm; #Names of the index (home page) files
                proxy_pass http://load_balance_server; #Send requests to the servers defined in load_balance_server

                #The following are some reverse proxy settings (optional)
                #proxy_redirect off;
                proxy_set_header Host $host;
                proxy_set_header X-Real-IP $remote_addr;
                #The backend web server can get the user's real IP from X-Forwarded-For
                proxy_set_header X-Forwarded-For $remote_addr;
                proxy_connect_timeout 90; #Timeout for nginx to connect to the backend server
                proxy_send_timeout 90; #Timeout for sending the request to the backend server
                proxy_read_timeout 90; #Timeout for reading the backend server's response
                proxy_buffer_size 4k; #Buffer for the first part of the backend response (usually the headers)
                proxy_buffers 4 32k; #Number and size of response buffers; suits pages averaging under 32k
                proxy_busy_buffers_size 64k; #Buffers that may be busy sending to the client under high load (proxy_buffers*2)
                proxy_temp_file_write_size 64k; #Data written to a temporary file at a time when a response doesn't fit in the buffers
                client_max_body_size 10m; #Maximum allowed size of a client request body
                client_body_buffer_size 128k; #Buffer size for reading the client request body
            }
        }
    }
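With weights 5, 1 and 6, the three servers receive 5/12, 1/12 and 6/12 of the requests. The Python sketch below models smooth weighted round-robin, the scheme nginx's default round-robin balancer is based on, using the weights from the configuration above. It is a simplified model: it ignores failed servers, max_fails and the effective-weight adjustments the real module makes, and the function name is illustrative.

```python
def smooth_weighted_round_robin(weights, n):
    """Return n picks from weights (server -> weight) using smooth weighted round-robin."""
    total = sum(weights.values())
    current = {server: 0 for server in weights}
    picks = []
    for _ in range(n):
        # Every server gains its weight...
        for server, weight in weights.items():
            current[server] += weight
        # ...the highest current value wins (the first one on ties)...
        best = max(current, key=current.get)
        # ...and the winner pays back the total, so it cannot win again right away
        current[best] -= total
        picks.append(best)
    return picks


weights = {"192.168.1.11:80": 5, "192.168.1.12:80": 1, "192.168.1.13:80": 6}
picks = smooth_weighted_round_robin(weights, 12)
print({server: picks.count(server) for server in weights})
# {'192.168.1.11:80': 5, '192.168.1.12:80': 1, '192.168.1.13:80': 6}
```

In every cycle of 12 requests each server is picked exactly as many times as its weight, and the picks are interleaved (.13, .11, .13, .11, .13, .11, .12, ...) rather than sent in bursts, so in this model the split is deterministic rather than random.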

A website with multiple webapps

As a website gains more and more features, it often becomes necessary to split off relatively independent modules and maintain them separately. In that case, there are usually multiple webapps.

For example, suppose the www.helloworld.com site has several webapps: finance, product and admin. They are accessed through different contexts:

www.helloworld.com/finance/

www.helloworld.com/product/

www.helloworld.com/admin/

As we know, the default HTTP port is 80. If these three webapps run on the same server at the same time, they cannot all use port 80, so each of them must bind a different port.

This raises a problem: users visiting www.helloworld.com will not add a port number to reach each webapp. So the reverse proxy is needed once again.

The configuration is not difficult. Let's see how to do it:

    http {
        #Some basic configuration is omitted here

        upstream product_server {
            server www.helloworld.com:8081;
        }

        upstream admin_server {
            server www.helloworld.com:8082;
        }

        upstream finance_server {
            server www.helloworld.com:8083;
        }

        server {
            #Some basic configuration is omitted here

            #By default, requests go to the product server
            location / {
                proxy_pass http://product_server;
            }

            location /product/ {
                proxy_pass http://product_server;
            }

            location /admin/ {
                proxy_pass http://admin_server;
            }

            location /finance/ {
                proxy_pass http://finance_server;
            }
        }
    }
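For prefix locations like these, nginx picks the location with the longest matching prefix, so / only receives requests that none of the other locations match. Here is a small Python model of that choice using the location table above; regex locations and nginx's other matching rules are left out, and the names are illustrative.

```python
LOCATIONS = {
    "/": "product_server",
    "/product/": "product_server",
    "/admin/": "admin_server",
    "/finance/": "finance_server",
}


def choose_upstream(uri, locations=LOCATIONS):
    """Return the upstream of the longest prefix location matching uri."""
    # "/" matches every URI, so there is always at least one candidate
    candidates = [prefix for prefix in locations if uri.startswith(prefix)]
    return locations[max(candidates, key=len)]


for uri in ("/admin/users", "/finance/report", "/product/list", "/about"):
    print(uri, "->", choose_upstream(uri))
# /admin/users -> admin_server
# /finance/report -> finance_server
# /product/list -> product_server
# /about -> product_server
```

Because these proxy_pass directives have no URI part, each backend receives the original path unchanged (for example /admin/users), so each webapp must be deployed under its own context path. Also, for prefix locations ending in a slash that use proxy_pass, nginx answers a request for /admin (without the slash) with a 301 redirect to /admin/, which this model does not cover.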

HTTPS reverse proxy configuration

Some sites with high security requirements use HTTPS (HTTP secured with the SSL/TLS communication standard).

The HTTP protocol and the SSL standard are not explained here. However, there are a few things to know when configuring HTTPS with nginx:

- The default port for HTTPS is 443, unlike port 80 for HTTP.

- SSL requires a security certificate, so you need to specify the certificate and its private key in nginx.conf.

Everything else is basically the same as the HTTP reverse proxy; only some settings in the server block differ.

    #HTTPS server
    server {
        #Listen on port 443, the well-known port mainly used for HTTPS
        listen 443 ssl;

        #Domain name used to access this site
        server_name www.helloworld.com;

        #Location of the SSL certificate file (common formats: crt/pem)
        ssl_certificate cert.pem;

        #Location of the SSL certificate's private key
        ssl_certificate_key cert.key;

        #SSL parameters (optional)
        ssl_session_cache shared:SSL:1m;
        ssl_session_timeout 5m;

        #Cipher suites: strong ciphers only, excluding anonymous (aNULL) and MD5-based suites
        ssl_ciphers HIGH:!aNULL:!MD5;
        ssl_prefer_server_ciphers on;

        location / {
            root /root;
            index index.html index.htm;
        }
    }

Static site configuration

Sometimes we need to configure a static site (that is, HTML files plus a bunch of static resources).

For example, if all static resources are in the /app/dist directory, we only need to specify the home page and the site's host name in nginx.conf.

The configuration is as follows:

    worker_processes 1;

    events {
        worker_connections 1024;
    }

    http {
        include mime.types;
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;

        gzip on;
        gzip_types text/plain application/x-javascript text/css application/xml text/javascript application/javascript image/jpeg image/gif image/png;
        gzip_vary on;

        server {
            listen 80;
            server_name static.zp.cn;

            location / {
                root /app/dist;
                index index.html;
                #Forward any request that doesn't match a file or directory to index.html
                try_files $uri $uri/ /index.html;
            }
        }
    }

Then add a hosts entry:

127.0.0.1 static.zp.cn

Now you can access the static site by visiting static.zp.cn in your local browser.
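The comment "Forward any requests to index.html" describes the fallback commonly used for single-page apps, which nginx expresses with try_files $uri $uri/ /index.html;. To show what that lookup order means, here is a simplified Python model. The function name is hypothetical, and it only approximates nginx: nginx normalizes the URI first, and for a directory it applies the index directive (answering 403 if no index file exists), which this model does not check.

```python
import os


def try_files(root, uri, fallback="/index.html"):
    """Simplified model of `try_files $uri $uri/ /index.html` under root."""
    path = os.path.join(root, uri.lstrip("/"))
    if os.path.isfile(path):  # $uri: an existing file is served as-is
        return path
    if os.path.isdir(path):   # $uri/: a directory is served via its index file
        return os.path.join(path, "index.html")
    # Anything else gets the single-page app's entry page
    return os.path.join(root, fallback.lstrip("/"))
```

For example, with root /app/dist, try_files("/app/dist", "/user/42") returns the path of /app/dist/index.html, so a client-side route such as /user/42 loads the app instead of producing a 404.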

Building a file server

Sometimes a team needs to archive data or documents, so a file server is essential. With Nginx, you can build a simple file service quickly and easily.

Key configuration points in Nginx:

- autoindex on enables directory listings. It is off by default.

- autoindex_exact_size controls how file sizes are shown: on (the default) shows exact sizes in bytes, off shows rounded sizes in KB, MB or GB.

- autoindex_localtime on shows file modification times in the server's local time zone instead of UTC.

- root sets the root path of the file service.

- Setting charset utf-8,gbk; avoids garbled Chinese file names (on a Windows server the names were still garbled after this setting, and I have not found a solution yet).

The most simplified configuration is as follows:

    autoindex on; #Show directory listings
    autoindex_exact_size on; #Show exact file sizes in bytes
    autoindex_localtime on; #Show file times in local time

    server {
        charset utf-8,gbk; #On a Windows server names are still garbled with this setting; no solution yet
        listen 9050 default_server;
        listen [::]:9050 default_server;
        server_name _;
        root /share/fs;
    }
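To see what autoindex_exact_size and autoindex_localtime change, here is a rough Python imitation of a directory listing. The column layout and the K/M/G rounding are assumptions for illustration, not nginx's exact output; only the two switches mirror the directives: exact sizes versus rounded ones, and local time versus UTC.

```python
import datetime
import os


def rounded_size(size):
    """Rounded size, in the spirit of autoindex_exact_size off (format assumed)."""
    if size < 1024:
        return str(size)
    value = float(size)
    for unit in ("K", "M", "G"):
        value /= 1024
        if value < 1024 or unit == "G":
            return f"{value:.1f}{unit}"


def list_directory(root, exact_size=True, localtime=False):
    """Return one line per entry: name, modification time, size."""
    tz = None if localtime else datetime.timezone.utc  # None means the local time zone
    lines = []
    for name in sorted(os.listdir(root)):
        path = os.path.join(root, name)
        info = os.stat(path)
        mtime = datetime.datetime.fromtimestamp(info.st_mtime, tz=tz)
        if os.path.isdir(path):
            name, size = name + "/", "-"  # directories get no size
        else:
            size = str(info.st_size) if exact_size else rounded_size(info.st_size)
        lines.append(f"{name:<30} {mtime:%d-%b-%Y %H:%M} {size:>10}")
    return lines


if __name__ == "__main__":
    print("\n".join(list_directory(".", exact_size=False, localtime=True)))
```

With exact_size=True a 1536-byte file is listed as 1536; with exact_size=False it becomes 1.5K.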

Cross-domain solutions

In web development, front-end/back-end separation is common. In this pattern, the front end and the back end are separate web applications; for example, the back end is a Java program and the front end is a React or Vue application.

When separate web apps access each other, cross-domain problems are bound to arise. There are generally two ways to solve them:

- CORS

Set HTTP response headers on the back-end server, adding the domains that are allowed to access it to the Access-Control-Allow-Origin header.

- jsonp

The back end wraps the JSON data in a call to a callback function named by the request and returns it as JavaScript; the front end loads it with a script tag, which is not subject to the cross-domain restriction.

This article does not discuss these two approaches further.

Note that nginx also offers a cross-domain solution based on the first approach.

For example, www.helloworld.com consists of a front-end app and a back-end app. The front end runs on port 9000 and the back end on port 8080.

Because different ports count as different origins, the browser blocks the front end's HTTP requests to the back end as cross-domain requests. Let's see how nginx solves this problem:

First, set up CORS in the enable-cors.conf file:

    # Start with CORS disabled for this request
    set $cors '';

    # Set the flag only for the allowed origin
    if ($http_origin ~* '(www\.helloworld\.com)$') {
        set $cors 'true';
    }

    # Append the request method to the flag
    if ($request_method = 'OPTIONS') {
        set $cors "${cors}options";
    }

    if ($request_method = 'GET') {
        set $cors "${cors}get";
    }

    if ($request_method = 'POST') {
        set $cors "${cors}post";
    }

    # Add the CORS headers to GET requests from the allowed origin
    # (this check must come after the flag has been built)
    if ($cors = 'trueget') {
        add_header 'Access-Control-Allow-Origin' "$http_origin";
        add_header 'Access-Control-Allow-Credentials' 'true';
        add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS';
        add_header 'Access-Control-Allow-Headers' 'DNT,X-Mx-ReqToken,Keep-Alive,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type';
    }
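The flag trick in enable-cors.conf can be hard to follow because nginx's if has no else and no boolean operators. The Python model below mirrors the intended logic: the flag starts with "true" only for an allowed origin, the request method is appended, and the CORS headers are added only when the result is exactly "trueget". The function and constant names are hypothetical; this is a reading aid, not something nginx runs.

```python
import re

# Same idea as the nginx if: origins ending in www.helloworld.com, case-insensitive (~*)
ALLOWED_ORIGIN = re.compile(r"(www\.helloworld\.com)$", re.IGNORECASE)


def cors_headers(origin, method):
    """Return the CORS headers enable-cors.conf is meant to add for this request."""
    flag = ""
    if origin and ALLOWED_ORIGIN.search(origin):
        flag = "true"
    if method in ("OPTIONS", "GET", "POST"):
        flag += method.lower()
    if flag != "trueget":
        return {}
    return {
        "Access-Control-Allow-Origin": origin,
        "Access-Control-Allow-Credentials": "true",
        "Access-Control-Allow-Methods": "GET, POST, OPTIONS",
        "Access-Control-Allow-Headers": "DNT,X-Mx-ReqToken,Keep-Alive,User-Agent,"
                                        "X-Requested-With,If-Modified-Since,Cache-Control,Content-Type",
    }
```

For example, cors_headers("http://www.helloworld.com", "GET") returns the four headers, while a POST or a preflight OPTIONS request gets none; to cover those, the final check would also have to accept values such as "truepost" or "trueoptions".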

Next, include enable-cors.conf in your server configuration to apply the cross-domain settings:

    # ----------------------------------------------------
    # This file is the nginx configuration fragment for the project
    # You can include it directly in your nginx config (recommended)
    # or copy it into an existing nginx configuration and adapt it yourself
    # The www.helloworld.com domain name must be mapped in the hosts file
    # The api location enables CORS, which requires the other configuration file in this directory (enable-cors.conf)
    # ----------------------------------------------------

    upstream front_server {
        server www.helloworld.com:9000;
    }

    upstream api_server {
        server www.helloworld.com:8080;
    }

    server {
        listen 80;
        server_name www.helloworld.com;

        location ~ ^/api/ {
            include enable-cors.conf;
            proxy_pass http://api_server;
            rewrite "^/api/(.*)$" /$1 break;
        }

        location ~ ^/ {
            proxy_pass http://front_server;
        }
    }
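Put together, this server block sends /api/ requests to api_server with the /api prefix removed by the rewrite, and everything else to front_server. Regex locations are tried in the order they appear, so ^/api/ must come before ^/, and because proxy_pass in the /api/ location has no URI part, the backend receives the rewritten path. A small Python model of that routing (the function name is hypothetical):

```python
import re

API_PREFIX = re.compile(r"^/api/(.*)$")


def route(uri):
    """Return (upstream, path the upstream receives) for a request URI."""
    match = API_PREFIX.match(uri)
    if match:
        # rewrite "^/api/(.*)$" /$1 break;  then  proxy_pass http://api_server;
        return "api_server", "/" + match.group(1)
    # location ~ ^/ catches everything else, with the path unchanged
    return "front_server", uri
```

For example, route("/api/users/1") gives ("api_server", "/users/1"), while route("/index.html") gives ("front_server", "/index.html").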

That's it.