Nginx and Tomcat deployment

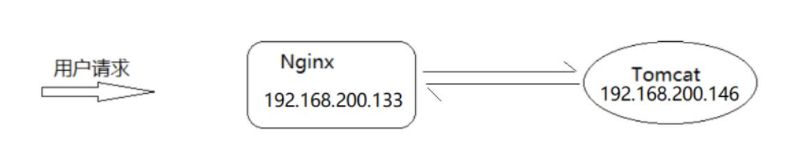

Nginx performs very well under high concurrency and when serving static resources, but real projects also contain back-end business code in addition to static resources. That business code is usually deployed on a web server such as Tomcat, WebLogic, or WebSphere. So how can Nginx receive user requests and forward them to the back-end web server?

Step analysis:

1. Prepare the Tomcat environment and deploy a web project on Tomcat.

2. Prepare the Nginx environment and use Nginx to receive requests and distribute them to Tomcat.

Environment preparation (Tomcat)

Browser access:

http://192.168.200.146:8080/demo/index.html

Get the link address of the dynamic resource:

http://192.168.200.146:8080/demo/getAddress

Tomcat will be used as the background web server this time

(1) Prepare a Tomcat on CentOS

1. Tomcat official website: https://tomcat.apache.org/

2. Download Tomcat. This course uses apache-tomcat-8.5.59.tar.gz.

3. Decompress Tomcat:

    mkdir web_tomcat
    tar -zxf apache-tomcat-8.5.59.tar.gz -C web_tomcat

(2) Prepare a web project and package it as war

1. Upload the demo.war provided in the course materials to the webapps directory of tomcat8.

2. Start Tomcat: enter the bin directory of tomcat8 and run ./startup.sh

(3) Start tomcat for access test.

Static resource: http://192.168.200.146:8080/demo/index.html

Dynamic resource: http://192.168.200.146:8080/demo/getAddress

Environment preparation (Nginx)

(1) Use the reverse proxy of Nginx to forward the request to Tomcat for processing.

    upstream webservice {
        server 192.168.200.146:8080;
    }

    server {
        listen 80;
        server_name localhost;

        location /demo {
            proxy_pass http://webservice;
        }
    }
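As a hedged illustration of how this proxy configuration behaves: because `proxy_pass` here has no URI part after the upstream name, Nginx passes the original request URI through unchanged, so `/demo/getAddress` on Nginx maps to `/demo/getAddress` on Tomcat. The sketch below repeats the same location and adds three `proxy_set_header` lines. Those header lines are an assumption, not part of the original setup, and are only useful if the application behind Tomcat needs to see the original host and client address.

```nginx
upstream webservice {
    server 192.168.200.146:8080;
}

server {
    listen 80;
    server_name localhost;

    location /demo {
        # No URI after the upstream name: /demo/... is forwarded as /demo/...
        proxy_pass http://webservice;

        # Assumption: optional headers, not in the original configuration.
        # Without them Tomcat sees "webservice" as the Host and the Nginx
        # address as the client address.
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

The configuration can be checked with `nginx -t` before Nginx is started or reloaded.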

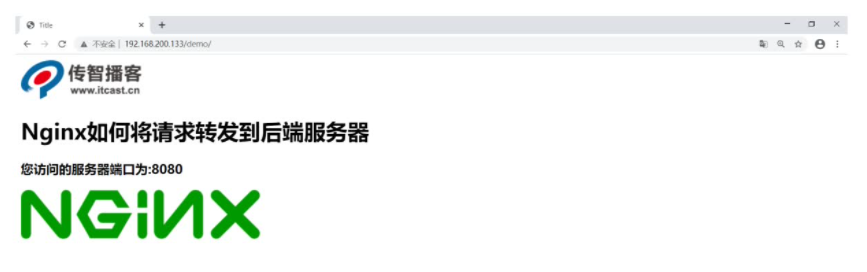

(2) Start Nginx and test access

Dynamic and static separation

What is dynamic and static separation?

Dynamic: business processing of back-end applications

Static: static resources of the website (HTML, JavaScript, CSS, images, and other files)

Separation: deploy the two separately and serve them to users separately. For example, everything related to static resources is handed over to Nginx for deployment and access, and non-static content is handed over to a server such as Tomcat.

Why separate dynamic and static resources?

As mentioned earlier, Nginx is very efficient at serving static resources and handles a high volume of concurrent access, while Tomcat is relatively weak in both respects. Handing static resources over to Nginx therefore reduces the access pressure on the Tomcat server and speeds up access to static resources.

Dynamic and static separation also reduces the coupling between dynamic and static resources. For example, if the dynamic resources go down, the static resources are still displayed.

How to realize dynamic and static separation?

There are many ways to realize dynamic and static separation. For example, static resources can be deployed to a CDN, Nginx, or similar servers, and dynamic resources can be deployed to Tomcat, WebLogic, or WebSphere. This course only uses Nginx + Tomcat.

Requirement analysis

Implementation steps of dynamic and static separation

1. Delete all static resources from the demo.war project and repackage it into a new war package (the package is provided in the course materials).

2. Deploy the new war package to Tomcat and delete the previously deployed content:

Enter the webapps directory of Tomcat and delete the previous contents.

Copy the new war package into webapps.

Start Tomcat.

3. Create the following directory on the server where Nginx is located, and put the corresponding static resources in the specified location

The contents of the index.html page are as follows:

    <!DOCTYPE html>
    <html lang="en">
    <head>
        <meta charset="UTF-8">
        <title>Title</title>
        <script src="js/jquery.min.js"></script>
        <script>
            $(function(){
                $.get('http://192.168.200.133/demo/getAddress',function(data){
                    $("#msg").html(data);
                });
            });
        </script>
    </head>
    <body>
        <img src="images/logo.png"/>
        <h1>How Nginx forwards requests to back-end servers</h1>
        <h3 id="msg"></h3>
        <img src="images/mv.png"/>
    </body>
    </html>

4. Configure the access of static and dynamic resources of Nginx

    upstream webservice {
        server 192.168.200.146:8080;
    }

    server {
        listen 80;
        server_name localhost;

        # Dynamic resources
        location /demo {
            proxy_pass http://webservice;
        }

        # Static resources
        location ~ /.*\.(png|jpg|gif|js) {
            root html/web;
            gzip on;
        }

        location / {
            root html/web;
            index index.html index.htm;
        }
    }
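One detail of the static location above is worth a sketch. Nginx checks regular-expression locations before falling back to the longest matching prefix location, and the pattern above is not anchored at the end, so a URI such as `/demo/page.jsp` or `/data.json` would also match it through the `.js` alternative and be looked up under `html/web` instead of being proxied. A stricter variant, offered as an assumption about the intent rather than as the course's configuration, anchors the extension with `$`. It is a fragment that would replace the static location inside the `server` block:

```nginx
# Sketch: serve only URIs that END in one of these extensions from Nginx.
# The trailing "$" keeps /demo/page.jsp and /data.json out of this location.
location ~ \.(png|jpg|gif|js)$ {
    root html/web;
    gzip on;
}
```

With this variant, `/images/logo.png` and `/js/jquery.min.js` are still served from `html/web`, while `/demo/getAddress` continues to reach the `/demo` proxy location.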

5. Start Nginx and visit http://192.168.200.133/index.html

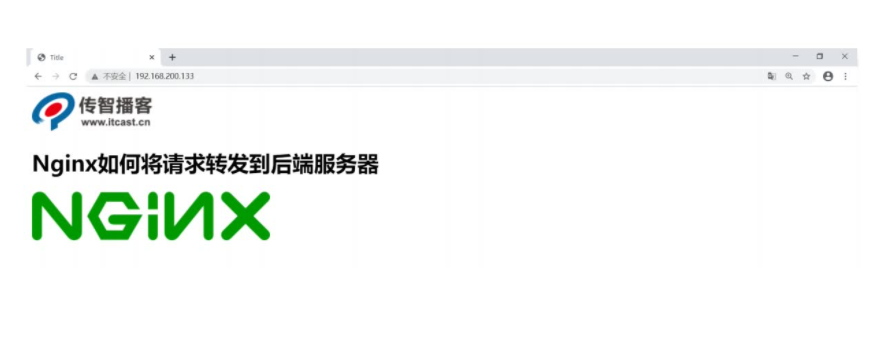

If the Tomcat server behind Nginx goes down at some point, visiting Nginx again gives the result below. The user can still see the page, but the access statistics are missing. This is the effect of reducing the coupling between the front end and the back end. In addition, the whole page load only interacts with the back-end server once; js and images are returned directly by Nginx, which improves efficiency and reduces the pressure on the back-end server.

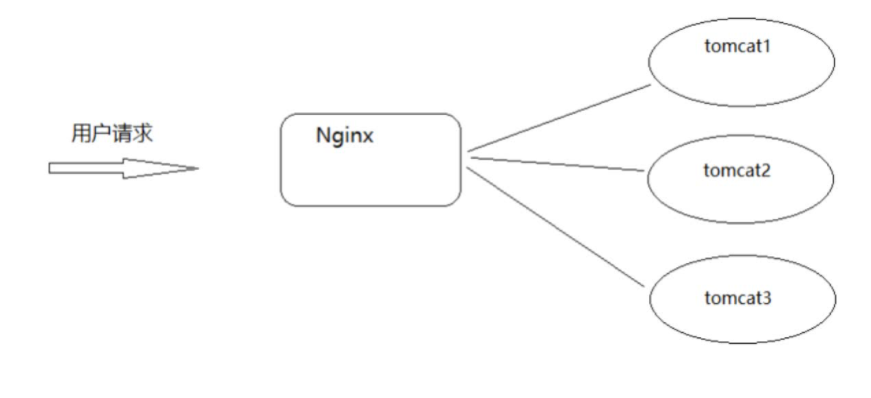

Nginx implements Tomcat cluster construction

When deploying the project with Nginx and Tomcat above, we used one Nginx server and one Tomcat server. The effect diagram is as follows:

This raises a problem: if Tomcat goes down, the whole system is incomplete. How can this be solved? A single server can easily go down, so we build several Tomcat servers to improve availability. This is what we usually call a cluster. Building a Tomcat cluster relies on Nginx's reverse proxy and load balancing. How is it implemented? Let's analyze the principle first.

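Environment preparation:

(1) Prepare three Tomcat instances and distinguish them by port [in a real environment these should be three servers]. Modify server.xml in each instance and set the HTTP ports to 8080, 8180, and 8280 respectively. When the instances share one host, any other port defined in server.xml (such as the shutdown port) must also be unique per instance.

(2) Start each Tomcat and run an access test.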

(3) Add the following content to the configuration file corresponding to Nginx:

    upstream webservice {
        server 192.168.200.146:8080;
        server 192.168.200.146:8180;
        server 192.168.200.146:8280;
    }
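To make the failover behaviour concrete, here is a minimal sketch of the same upstream with Nginx's passive health-check parameters written out. `max_fails=1` and `fail_timeout=10s` are the documented defaults of the upstream `server` directive, so this block should behave like the one above; the `server` block around it simply reuses the earlier `/demo` location. Requests are distributed round-robin by default, and a server that fails within the window is skipped for `fail_timeout` before Nginx tries it again.

```nginx
upstream webservice {
    # Round-robin is the default balancing method.
    # max_fails / fail_timeout are shown with their default values.
    server 192.168.200.146:8080 max_fails=1 fail_timeout=10s;
    server 192.168.200.146:8180 max_fails=1 fail_timeout=10s;
    server 192.168.200.146:8280 max_fails=1 fail_timeout=10s;
}

server {
    listen 80;
    server_name localhost;

    location /demo {
        proxy_pass http://webservice;
    }
}
```

Stopping one of the three Tomcat instances and requesting http://192.168.200.133/demo/getAddress repeatedly is a simple way to observe that the remaining two keep answering.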

After completing the deployment above, we have solved the high availability of Tomcat: if one server goes down, the other two continue to provide service. We can also update the back-end servers without interruption. However, a new problem arises: in the environment above, if Nginx goes down, the whole system can no longer provide services to the outside world. How can this be solved?