nginx load balancing

Built on top of an existing network structure, load balancing offers a cheap, effective, and transparent way to expand the bandwidth of network equipment and servers, increase throughput, strengthen data-processing capacity, and improve the flexibility and availability of the network.

As a website grows and the load on its server increases, a common first step is to move the database and static files onto separate servers. As traffic keeps growing, however, even a single API service can receive more requests than one server can handle. At that point, load balancing spreads the load that one server faced across several servers.

nginx is not only a powerful web server but also a reverse proxy server. It can separate dynamic and static content according to configured rules, and it can balance load across back-end servers.

nginx load balancing configuration

nginx load balancing is configured mainly through the `proxy_pass` and `upstream` directives.

First, we create a Spring Boot project that exposes an HTTP service standing in for a real application. Any other framework that can handle HTTP requests would work just as well; the back-end service itself has nothing to do with the nginx configuration.

Setting up the Spring Boot project is not covered here; only the main code is shown:

```java
import org.springframework.beans.factory.annotation.Value;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@SpringBootApplication
public class NginxtestApplication {

    // Port this instance listens on, injected from --server.port (empty if unset)
    @Value("${server.port:}")
    private String port;

    public static void main(String[] args) {
        SpringApplication.run(NginxtestApplication.class, args);
    }

    // Handles GET / and reports which instance served the request
    @GetMapping("/")
    public String hello() {
        System.out.println("call me " + port);
        return "i am " + port;
    }
}
```

After packaging the project, start two instances on different ports (for example, in two separate terminals):

```shell
java -jar test.jar --server.port=8001
java -jar test.jar --server.port=8002
```

Next, open the nginx configuration file (usually `nginx.conf`) and configure it as follows:

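```nginx
http {
    # Back-end servers that requests are distributed across
    upstream upstream_name {
        server 192.168.0.28:8001;
        server 192.168.0.28:8002;
    }

    server {
        listen 8080;
        server_name localhost;

        location / {
            # Forward every request to one of the servers in upstream_name
            proxy_pass http://upstream_name;
            # Pass the original Host header and client address to the back end
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }
}
```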

Default settings that are not relevant here are omitted.

First, add an `upstream upstream_name { ... }` block inside `http` to list the back-end servers that requests are mapped to.

`upstream_name` is a name of your choice; for example, you can use the service's domain name or the project's code name.

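The `location /` block inside `server` forwards all requests under `/` to `http://upstream_name`, that is, to one of the servers in the list configured above. nginx chooses the specific server according to the configured load balancing method. The `proxy_set_header` lines pass the original `Host` header and the client's address on to the back end, which would otherwise see only nginx as the client.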

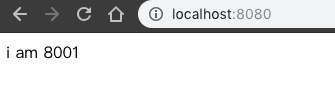

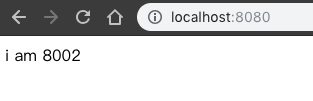

Open `localhost:8080` in a browser and refresh a few times. The page content changes between `i am 8001` and `i am 8002`.

nginx load balancing strategies

This section covers four commonly used nginx load balancing strategies:

Round robin (default)

This is the most basic method and the default for `upstream`. Requests are passed to the back-end servers one by one, in turn.
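To make "in turn" concrete, here is a minimal Java sketch of plain round-robin selection over a fixed list of back ends. The class name `RoundRobin` is hypothetical. nginx implements this in C and also handles weights and failures, which this sketch leaves out.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Minimal round-robin selector (illustrative sketch, not nginx's code)
class RoundRobin {
    private final List<String> servers;
    // Shared counter; AtomicInteger keeps selection safe across threads
    private final AtomicInteger next = new AtomicInteger();

    RoundRobin(List<String> servers) {
        this.servers = List.copyOf(servers);
    }

    String pick() {
        // floorMod keeps the index non-negative even after the counter overflows
        int i = Math.floorMod(next.getAndIncrement(), servers.size());
        return servers.get(i);
    }

    public static void main(String[] args) {
        RoundRobin rr = new RoundRobin(List.of("192.168.0.28:8001", "192.168.0.28:8002"));
        for (int n = 0; n < 4; n++) {
            System.out.println(rr.pick()); // 8001, 8002, 8001, 8002
        }
    }
}
```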

Related `server` parameters:

| Parameter | Description |
|---|---|
| `fail_timeout` | Sets both the period within which `max_fails` failed attempts must occur and the length of time the server is then considered unavailable. The default is 10s. |
| `max_fails` | Number of unsuccessful attempts to communicate with the server, within the period set by `fail_timeout`, after which the server is considered unavailable. The default is 1; 0 disables failure accounting. |
| `backup` | Marks the server as a backup server. It receives requests only when the primary servers are unavailable. |
| `down` | Marks the server as permanently unavailable. |

Notes:

- With round robin, a server that reaches `max_fails` failures is automatically taken out of rotation for `fail_timeout`, after which nginx tries it again.

- Round robin is used when no other method is configured.

- This strategy suits stateless, fast services running on servers with comparable hardware.
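The interplay of `max_fails` and `fail_timeout` can be modeled roughly as below. This is a simplified Java sketch under stated assumptions. Time is passed in explicitly as milliseconds so the logic is easy to test. The names `PeerHealth`, `recordFailure`, and `isAvailable` are hypothetical, and nginx's real bookkeeping differs in detail.

```java
// Simplified model of max_fails / fail_timeout accounting.
// PeerHealth and its methods are hypothetical; nginx's own bookkeeping differs in detail.
class PeerHealth {
    private final int maxFails;        // like max_fails; 0 disables failure accounting
    private final long failTimeoutMs;  // like fail_timeout, in milliseconds
    private int fails;                 // failures in the current window
    private long windowStartMs;        // when the current window began
    private long downUntilMs = Long.MIN_VALUE;

    PeerHealth(int maxFails, long failTimeoutMs) {
        this.maxFails = maxFails;
        this.failTimeoutMs = failTimeoutMs;
    }

    void recordFailure(long nowMs) {
        if (maxFails == 0) {
            return; // accounting disabled
        }
        // Start a fresh window on the first failure or once the old window has expired
        if (fails == 0 || nowMs - windowStartMs >= failTimeoutMs) {
            fails = 0;
            windowStartMs = nowMs;
        }
        fails++;
        if (fails >= maxFails) {
            downUntilMs = nowMs + failTimeoutMs; // unavailable for fail_timeout
            fails = 0;
        }
    }

    boolean isAvailable(long nowMs) {
        return nowMs >= downUntilMs;
    }

    public static void main(String[] args) {
        // Same values as "max_fails=3 fail_timeout=20s"
        PeerHealth peer = new PeerHealth(3, 20_000);
        peer.recordFailure(0);
        peer.recordFailure(1_000);
        peer.recordFailure(2_000);
        System.out.println("available at 10s? " + peer.isAvailable(10_000)); // false
        System.out.println("available at 22s? " + peer.isAvailable(22_000)); // true
    }
}
```

Failures that are spread out further than `fail_timeout` never add up, so an occasional error does not take a server out of rotation.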

weight

The `weight` parameter builds on round robin and sets the share of requests each server receives. For example:

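```nginx
upstream foo {
    server localhost:8001 weight=2;                     # twice the share of a default server
    server localhost:8002;                              # weight defaults to 1
    server localhost:8003 backup;                       # used only when the others are unavailable
    server localhost:8004 max_fails=3 fail_timeout=20s; # out for 20s after 3 failures within 20s
}
```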

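Here `weight` determines how often each server is chosen. The default weight is 1, and a server's share of requests is proportional to its weight. In this example, 8001 receives about half of the requests and 8002 and 8004 about a quarter each, while the backup server 8003 receives requests only when the others are unavailable.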
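One way to turn weights into an evenly interleaved sequence is the "smooth weighted round-robin" scheme, sketched below in Java. nginx's built-in round robin works along the same lines. This version is simplified: it ignores `backup`, `max_fails`, and dynamic weight adjustment. The class name `SmoothWeightedRoundRobin` is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Smooth weighted round robin (simplified sketch; ignores backup and failure handling)
class SmoothWeightedRoundRobin {
    private static final class Peer {
        final String name;
        final int weight;
        int current; // running score; the highest score is picked next

        Peer(String name, int weight) {
            this.name = name;
            this.weight = weight;
        }
    }

    private final List<Peer> peers = new ArrayList<>();
    private int totalWeight;

    void add(String name, int weight) {
        peers.add(new Peer(name, weight));
        totalWeight += weight;
    }

    String pick() {
        if (peers.isEmpty()) {
            throw new IllegalStateException("no peers");
        }
        Peer best = null;
        for (Peer p : peers) {
            p.current += p.weight;           // every peer gains its weight
            if (best == null || p.current > best.current) {
                best = p;                    // ties go to the earlier peer
            }
        }
        best.current -= totalWeight;         // the winner pays back the total
        return best.name;
    }

    public static void main(String[] args) {
        // Weights from the upstream foo example above (backup server left out)
        SmoothWeightedRoundRobin wrr = new SmoothWeightedRoundRobin();
        wrr.add("localhost:8001", 2);
        wrr.add("localhost:8002", 1);
        wrr.add("localhost:8004", 1);
        for (int i = 0; i < 8; i++) {
            System.out.println(wrr.pick());
        }
    }
}
```

The loop prints 8001, 8002, 8004, 8001 and then repeats. 8001 gets half of the requests, but its turns are spread out rather than sent back to back.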

Notes:

- The higher the weight, the more requests a server receives.

- Weights can also be used together with `least_conn` and `ip_hash`.

- This strategy suits servers whose hardware differs significantly.

ip_hash

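Requests are distributed according to the client's IP address, so requests from the same client always go to the same server as long as that server is available. Each visitor is thus pinned to one back-end server. For IPv4, nginx uses the first three octets of the address as the hash key, so clients in the same /24 network go to the same server.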
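Below is a minimal Java sketch of the idea, assuming IPv4 clients and equal weights. Following the nginx documentation, only the first three octets form the key. The hash function itself (`hash * 31 + octet`) is an arbitrary choice for illustration and is not nginx's. `IpHashPicker` is a hypothetical name.

```java
import java.util.List;

// Illustrative ip_hash-style selection (hypothetical helper, not nginx's hash function)
class IpHashPicker {
    private final List<String> servers;

    IpHashPicker(List<String> servers) {
        this.servers = List.copyOf(servers);
    }

    String pick(String clientIpv4) {
        String[] octets = clientIpv4.split("\\.");
        if (octets.length != 4) {
            throw new IllegalArgumentException("expected IPv4 address: " + clientIpv4);
        }
        int hash = 0;
        // Only the first three octets form the key, so a.b.c.1 and a.b.c.200 map together
        for (int i = 0; i < 3; i++) {
            hash = hash * 31 + Integer.parseInt(octets[i]);
        }
        return servers.get(Math.floorMod(hash, servers.size()));
    }

    public static void main(String[] args) {
        IpHashPicker picker = new IpHashPicker(List.of(
                "localhost:8001", "localhost:8002", "localhost:8003", "localhost:8004"));
        // Repeated requests from one client, plus a neighbour in the same /24
        System.out.println(picker.pick("203.0.113.7"));
        System.out.println(picker.pick("203.0.113.7"));
        System.out.println(picker.pick("203.0.113.250"));
    }
}
```

All three lines print the same server. No per-client state is stored; stickiness comes entirely from the hash being deterministic.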

```nginx
upstream foo {
    ip_hash;
    server localhost:8001 weight=2;
    server localhost:8002;
    server localhost:8003;
    server localhost:8004 max_fails=3 fail_timeout=20s;
}
```

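Notes:

- Before nginx 1.3.1 and 1.2.2, weights could not be specified for servers when using `ip_hash`.

- `ip_hash` cannot be used with `backup`.

- This strategy suits stateful services, for example applications that keep sessions in server memory.

- To take a server out temporarily, mark it with `down` instead of deleting it, so the existing mapping of client addresses to servers is preserved.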
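The note about `down` can be illustrated with the Java sketch below, which compares deleting a server with marking it down for a hypothetical four-server upstream (`s1` to `s4`). It uses an illustrative hash on the first three octets. When the hashed server is down, it simply tries the next server in the list; nginx's actual retry logic differs. `IpHashDownDemo` and its methods are hypothetical.

```java
import java.util.List;
import java.util.Set;

// Compares removing a server with marking it "down" under ip_hash-style hashing
class IpHashDownDemo {
    // Hash key from the first three IPv4 octets (arbitrary hash, not nginx's)
    static int key(String ipv4) {
        String[] octets = ipv4.split("\\.");
        int hash = 0;
        for (int i = 0; i < 3; i++) {
            hash = hash * 31 + Integer.parseInt(octets[i]);
        }
        return hash;
    }

    // Hashed pick; if that server is down, fall back to the next one in the list
    static String pick(List<String> servers, Set<String> down, String ip) {
        int start = Math.floorMod(key(ip), servers.size());
        for (int i = 0; i < servers.size(); i++) {
            String s = servers.get((start + i) % servers.size());
            if (!down.contains(s)) {
                return s;
            }
        }
        throw new IllegalStateException("all servers are down");
    }

    // Counts clients that were not on the retired server but are moved anyway
    static int movedBystanders(List<String> after, Set<String> downAfter, String retired) {
        List<String> before = List.of("s1", "s2", "s3", "s4");
        int moved = 0;
        for (int x = 0; x < 100; x++) {
            String ip = "10.0." + x + ".1";
            String was = pick(before, Set.of(), ip);
            if (!was.equals(retired) && !was.equals(pick(after, downAfter, ip))) {
                moved++;
            }
        }
        return moved;
    }

    public static void main(String[] args) {
        // Option 1: delete s3 from the list, so the modulus changes from 4 to 3
        int removed = movedBystanders(List.of("s1", "s2", "s4"), Set.of(), "s3");
        // Option 2: keep s3 in the list but mark it down
        int markedDown = movedBystanders(List.of("s1", "s2", "s3", "s4"), Set.of("s3"), "s3");
        System.out.println("bystanders moved when removed:     " + removed);
        System.out.println("bystanders moved when marked down: " + markedDown);
    }
}
```

Marking `s3` down moves only the clients that were on `s3`. Deleting it changes the modulus, so some clients of the other servers are remapped as well and would lose their sessions.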

least_conn (least connections)

Each request goes to the back-end server with the fewest active connections, taking server weights into account. Round robin spreads requests evenly so that each back end receives roughly the same number. When some requests take much longer than others, however, the servers handling them end up overloaded. In that case, `least_conn` gives a better balance.
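Below is a minimal Java sketch of least-connections selection, assuming the balancer itself tracks in-flight requests per server. Weights are taken into account by comparing `active / weight`, computed here by cross-multiplication. Ties go to the earlier server in the list, whereas nginx tries tied servers in weighted round-robin order. `LeastConn`, `acquire`, and `release` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Least-connections selection with weights (illustrative sketch)
class LeastConn {
    static final class Peer {
        final String name;
        final int weight;
        int active; // requests currently in flight

        Peer(String name, int weight) {
            this.name = name;
            this.weight = weight;
        }
    }

    private final List<Peer> peers = new ArrayList<>();

    Peer add(String name, int weight) {
        Peer p = new Peer(name, weight);
        peers.add(p);
        return p;
    }

    // Picks the peer with the lowest active/weight ratio and counts the new request
    Peer acquire() {
        Peer best = null;
        for (Peer p : peers) {
            // p.active / p.weight < best.active / best.weight, without division
            if (best == null || (long) p.active * best.weight < (long) best.active * p.weight) {
                best = p;
            }
        }
        if (best == null) {
            throw new IllegalStateException("no peers");
        }
        best.active++;
        return best;
    }

    // Call when the request finishes
    void release(Peer p) {
        p.active--;
    }

    public static void main(String[] args) {
        LeastConn lc = new LeastConn();
        lc.add("localhost:8001", 1);
        lc.add("localhost:8002", 1);
        Peer slow = lc.acquire();        // a long request stays open on 8001
        for (int i = 0; i < 3; i++) {
            Peer p = lc.acquire();       // short requests avoid the busy server
            System.out.println(p.name);
            lc.release(p);
        }
        lc.release(slow);
    }
}
```

All three short requests land on 8002 because 8001 is still busy with the slow one. Plain round robin would have sent the second short request to 8001.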

```nginx
upstream foo {
    least_conn;
    server localhost:8001 weight=2;
    server localhost:8002;
    server localhost:8003 backup;
    server localhost:8004 max_fails=3 fail_timeout=20s;
}
```

Notes:

- This strategy suits workloads where request processing times vary widely, which would otherwise overload some servers.

Besides the methods above, third-party modules can add other scheduling methods to nginx.

In practice, choose a strategy that fits the scenario. A method is usually combined with `server` parameters such as `weight`, `max_fails`, and `backup` to achieve the desired behavior.