1, Basic concept of Nginx

1. About Nginx

- What is Nginx

Nginx (pronounced "engine x") is a high-performance HTTP server and reverse proxy server, known for low memory consumption and strong concurrency. It is reported to support up to 50,000 concurrent connections.

2. Reverse proxy

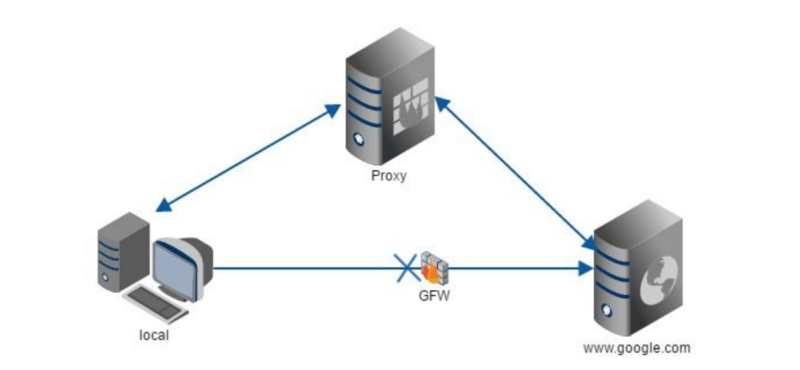

- Forward proxy

A forward proxy acts on behalf of the client. The client reaches the server through the proxy, so the server sees only the proxy and is not aware of the real client. A typical use is configuring a proxy server abroad in the browser in order to reach resources that are blocked on the local network (for example, by the GFW).

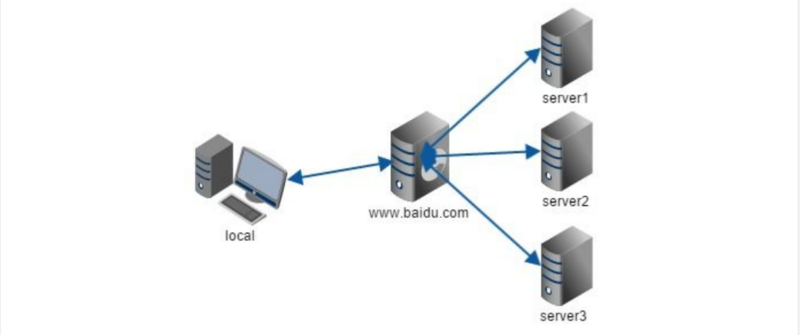

- Reverse proxy

A reverse proxy acts on behalf of the server. The client is not aware of the real servers: it sends its request to the reverse proxy, which selects a target server, obtains the data from it and returns the data to the client. Only the reverse proxy is exposed; the real servers stay hidden behind it.

- Load balancing

When a single server becomes a performance bottleneck, add servers horizontally and distribute requests among them. Requests that used to be concentrated on one server are spread across several servers according to a chosen strategy, so that the load is balanced.

- Dynamic and static separation

Dynamic pages and static resources are handled by different servers, which improves response times and reduces the load on each server. For example, JSP pages are processed by a Tomcat server, while images, JS files and static HTML files are served by a dedicated static resource server.

2, Nginx installation

1. Nginx dependencies

- pcre: regular expression library written in C

- openssl: SSL/TLS and cryptography library

- zlib: data compression library

2. Source code installation

- Download the source package from the official website (nginx.org)

- Install Nginx dependencies

yum -y install gcc zlib zlib-devel pcre-devel openssl openssl-devel

- Install Nginx from source

- Extract the nginx-xx.tar.gz package

- Enter the extracted directory and run ./configure

- Run make && make install

- View the open ports

firewall-cmd --list-all

- Open port 80

firewall-cmd --add-port=80/tcp --permanent

- Reload the firewall so that the new rule takes effect

firewall-cmd --reload

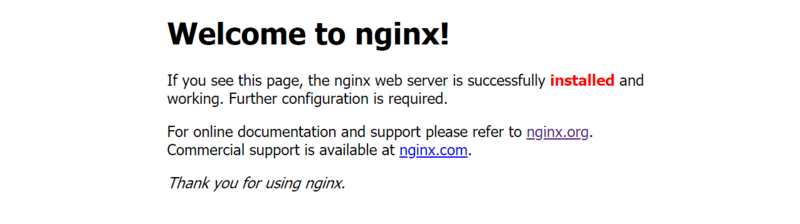

- Verify whether Nginx is installed successfully, and the browser accesses. The following page is returned successfully
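The source installation steps above could be collected into a small script. The following is a minimal sketch under stated assumptions, not a definitive installer: the function names `tarball_dir` and `build_nginx` are made up for illustration, the tarball path is supplied by the caller, the archive is assumed to unpack into a directory named after the file, and `--prefix=/usr/local/nginx` only spells out the default install location.

```bash
# Derive the directory name the archive is assumed to unpack to,
# e.g. nginx-xx.tar.gz -> nginx-xx.
tarball_dir() {
    basename "$1" .tar.gz
}

# Unpack, configure, compile and install Nginx from a source tarball.
# Usage: build_nginx /path/to/nginx-xx.tar.gz
build_nginx() {
    local tarball="$1"
    local workdir
    workdir=$(dirname "$tarball")

    tar -xzf "$tarball" -C "$workdir" || return 1

    # Run the build in a subshell so the caller's working directory is unchanged.
    (
        cd "$workdir/$(tarball_dir "$tarball")" &&
        ./configure --prefix=/usr/local/nginx &&
        make &&
        make install
    )
}
```

Nothing runs until `build_nginx` is called, and the `make install` step normally needs root privileges.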

3, Nginx common commands

When Nginx is installed from source with the default options, the nginx binary is in the /usr/local/nginx/sbin directory

- View the version number of Nginx

/usr/local/nginx/sbin/nginx -v

- Start Nginx

/usr/local/nginx/sbin/nginx

- View Nginx processes

ps -ef | grep nginx

- Stop Nginx

/usr/local/nginx/sbin/nginx -s stop

- Reload Nginx

After changing the Nginx configuration file, use the reload command to apply the new configuration without stopping the server

/usr/local/nginx/sbin/nginx -s reload
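A reload with a broken configuration file is rejected, so it can help to test the file first with `nginx -t`. The sketch below combines the two commands; the function name `safe_reload` and the `NGINX_BIN` variable are assumptions made for this example.

```bash
# Path of the nginx binary; defaults to the source-install location used above.
NGINX_BIN=${NGINX_BIN:-/usr/local/nginx/sbin/nginx}

# Reload only if the configuration passes the syntax test.
safe_reload() {
    if "$NGINX_BIN" -t; then
        "$NGINX_BIN" -s reload
    else
        echo "configuration test failed, not reloading" >&2
        return 1
    fi
}
```

Calling `safe_reload` after editing nginx.conf leaves the running server untouched when the test fails.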

4, Configuration file for Nginx

1. Location of Nginx configuration file

/usr/local/nginx/conf/nginx.conf

2. Composition of Nginx configuration file

1. Default nginx.conf with the comments removed

    #Global block
    worker_processes 1;

    #events block
    events {
        worker_connections 1024;
    }

    #http block
    http {
        include mime.types;
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;

        server {
            listen 80;
            server_name localhost;

            location / {
                root html;
                index index.html index.htm;
            }

            error_page 500 502 503 504 /50x.html;
            location = /50x.html {
                root html;
            }
        }
    }

2. Annotated Nginx configuration example

    #User and group that the nginx worker processes run as
    user www www;

    #Number of nginx worker processes. Setting it to the total number of CPU cores is recommended.
    worker_processes 8;

    #Bind each worker process to one CPU core (one bitmask per worker) to make full use of multi-core CPUs
    worker_cpu_affinity 00000001 00000010 00000100 00001000 00010000 00100000 01000000 10000000;

    #Global error log path and level: [debug | info | notice | warn | error | crit]
    error_log /var/log/nginx/error.log info;

    #File that stores the process ID
    pid /var/run/nginx.pid;

    #Maximum number of file descriptors opened by one nginx process. In theory this is the system limit
    #(ulimit -n) divided by the number of nginx processes, but nginx does not spread requests evenly,
    #so keeping the value equal to ulimit -n is recommended.
    worker_rlimit_nofile 65535;

    #Working mode and maximum number of connections
    events {
        #Event model: use [kqueue | rtsig | epoll | /dev/poll | select | poll];
        #epoll is the high-performance I/O multiplexing model of Linux kernel 2.6 and later.
        #On FreeBSD, use kqueue.
        use epoll;

        #Maximum number of simultaneous connections per worker process. The default is 1024.
        #The maximum number of clients is determined by worker_processes and worker_connections:
        #max_client = worker_processes * worker_connections
        #when acting as a reverse proxy: max_client = worker_processes * worker_connections / 4
        worker_connections 65535;
    }

    #HTTP server settings
    http {
        include mime.types;                     #Mapping of file extensions to MIME types
        default_type application/octet-stream;  #Default MIME type
        #charset utf-8;                         #Default encoding
        server_names_hash_bucket_size 128;      #Bucket size of the server name hash table
        client_header_buffer_size 32k;          #Buffer size for the client request header
        large_client_header_buffers 4 64k;      #Number and size of buffers for large request headers
        client_max_body_size 8m;                #Maximum size of the client request body (upload size limit)

        #Efficient file transfer mode: nginx calls the sendfile function to output files.
        #Set it to on for normal applications. For heavy disk I/O workloads such as downloads it can be
        #set to off to balance disk and network I/O and reduce system load.
        #Note: if images are displayed incorrectly, change this to off.
        sendfile on;

        autoindex on;    #Enable directory listings; suitable for download servers. Off by default.
        tcp_nopush on;   #With sendfile on, send the response header and the start of the file in one packet
        tcp_nodelay on;  #Send small packets without delay on keep-alive connections

        #Client connection timeouts
        keepalive_timeout 120;          #In seconds. An idle keep-alive connection with no new request is closed after this time
        client_header_timeout 10;       #Timeout for reading the client request header
        client_body_timeout 10;         #Timeout for reading the client request body
        reset_timedout_connection on;   #Reset timed-out client connections and free the memory they occupy
        send_timeout 10;                #Timeout between two write operations to the client. If the client reads nothing in this period, nginx closes the connection

        #FastCGI parameters, intended to improve site performance: lower resource usage and faster access.
        #The parameter names are self-explanatory.
        fastcgi_connect_timeout 300;
        fastcgi_send_timeout 300;
        fastcgi_read_timeout 300;
        fastcgi_buffer_size 64k;
        fastcgi_buffers 4 64k;
        fastcgi_busy_buffers_size 128k;
        fastcgi_temp_file_write_size 128k;

        #Proxy cache server settings
        #Responses are written to the temp path first and then moved to the cache
        #proxy_cache_path /var/tmp/nginx/proxy_cache levels=1:2 keys_zone=cache_one:512m inactive=10m max_size=64m;
        #proxy_temp_path and proxy_cache_path should be on the same partition
        #levels=1:2: the first-level cache directory name has 1 character, the second-level name has 2 characters
        #keys_zone=cache_one:512m: the cache zone is named cache_one and its shared memory zone is 512m
        #max_size=64m: upper limit of the cache size on disk; when it is exceeded, the least recently used data is removed
        #inactive=10m: cached data that is not accessed for 10 minutes is deleted

        #gzip module settings: compress transferred files
        gzip on;                #Enable gzip compression of responses
        gzip_min_length 1k;     #Minimum response size to compress
        gzip_buffers 4 16k;     #Compression buffers
        gzip_http_version 1.0;  #Minimum HTTP version for compression (default 1.1; use 1.0 if the front end is squid2.5)
        gzip_comp_level 2;      #Compression level: 1 gives the lowest ratio and fastest processing; 9 gives the highest ratio, slowest processing and highest CPU usage
        gzip_types text/plain application/x-javascript text/css application/xml;
        #text/html is always compressed, so it does not need to be listed; listing it works but produces a warning
        gzip_vary on;

        #Needed when limiting the number of connections per IP
        #limit_conn_zone $binary_remote_addr zone=crawler:10m;

        #Load balancing with upstream. weight can be set according to the machine configuration;
        #the higher the weight, the greater the share of requests assigned to the server.
        upstream blog.ha97.com {
            server 192.168.80.121:80 weight=3;
            server 192.168.80.122:80 weight=2;
            server 192.168.80.123:80 weight=3;
        }

        #Log format (log_format is only valid at the http level)
        log_format access '$remote_addr - $remote_user [$time_local] "$request" '
                          '$status $body_bytes_sent "$http_referer" '
                          '"$http_user_agent" $http_x_forwarded_for';

        #Virtual host configuration
        server {
            #Listening port
            listen 80;

            #HTTPS
            #listen 443 ssl;
            #ssl_certificate /opt/https/xxxxxx.crt;
            #ssl_certificate_key /opt/https/xxxxxx.key;
            #ssl_protocols TLSv1.2 TLSv1.3;
            #ssl_ciphers HIGH:!aNULL:!MD5;
            #ssl_prefer_server_ciphers on;
            #ssl_session_cache shared:SSL:2m;
            #ssl_session_timeout 5m;

            #There can be multiple domain names, separated by spaces
            server_name www.ha97.com ha97.com;
            index index.html index.htm index.php;
            root /data/www/ha97;

            location ~ \.(php|php5)$ {
                fastcgi_pass 127.0.0.1:9000;
                fastcgi_index index.php;
                include fastcgi.conf;
            }

            #Cache time for images
            location ~ \.(gif|jpg|jpeg|png|bmp|swf)$ {
                expires 10d;
            }

            #Cache time for JS and CSS
            location ~ \.(js|css)$ {
                expires 1h;
            }

            #Access log of this virtual host
            access_log /var/log/nginx/ha97access.log access;

            #Enable reverse proxy for '/'
            location / {
                proxy_pass http://127.0.0.1:88;
                proxy_redirect off;
                proxy_set_header X-Real-IP $remote_addr;
                #The back-end web server can obtain the real client IP through X-Forwarded-For
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

                #The following reverse proxy settings are optional
                proxy_set_header Host $host;
                client_max_body_size 10m;      #Maximum size of a single client request body
                client_body_buffer_size 128k;  #Buffer size for the client request body

                #Settings for communication between nginx and the back-end servers
                proxy_connect_timeout 90;          #Timeout for connecting to the back-end server
                proxy_send_timeout 90;             #Timeout for sending the request to the back-end server
                proxy_read_timeout 90;             #Timeout for reading the response from the back-end server after the connection is established
                proxy_buffering on;                #Buffer the back-end response; proxy_buffers and proxy_busy_buffers_size take effect only when this is on
                proxy_buffer_size 4k;              #Buffer for the first part of the back-end response (the response header)
                proxy_buffers 4 32k;               #Response buffers; suits pages that average less than 32k
                proxy_busy_buffers_size 64k;       #Buffer size under high load (proxy_buffers * 2)
                proxy_max_temp_file_size 2048m;    #Default 1024m. Maximum size of the temporary file used when the response does not fit in the proxy buffers. Larger responses are passed on synchronously from the upstream server instead of being buffered to disk
                proxy_temp_file_write_size 512k;   #Amount of data nginx writes to the temporary file at one time when the response is too large for the buffers
                proxy_temp_path /var/tmp/nginx/proxy_temp;  #The directory must be created manually before it is used
                proxy_headers_hash_max_size 51200;
                proxy_headers_hash_bucket_size 6400;
            }

            #Address for viewing the nginx status
            location /nginxStatus {
                stub_status on;
                access_log /var/log/nginx/ha97access.log access;
                auth_basic "nginxStatus";
                auth_basic_user_file conf/htpasswd;
                #The htpasswd file can be generated with the htpasswd tool provided by apache
            }

            #Local dynamic and static separation with reverse proxy
            #All jsp pages are handled by tomcat or resin
            location ~ \.(jsp|jspx|do)$ {
                proxy_set_header Host $host;
                proxy_set_header X-Real-IP $remote_addr;
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
                proxy_pass http://127.0.0.1:8080;
            }

            #All static files are read directly by nginx without tomcat or resin
            location ~ \.(htm|html|gif|jpg|jpeg|png|bmp|swf|ico|rar|zip|txt|flv|mid|doc|ppt|pdf|xls|mp3|wma)$ {
                expires 15d;
            }

            location ~ \.(js|css)$ {
                expires 1h;
            }
        }
    }
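The max_client formula quoted in the events block comments can be checked with a little shell arithmetic. This is only a sketch of that rule of thumb: the function name `max_clients` is hypothetical, and the divisor of 4 for the reverse proxy case is taken from the comment in the configuration, not measured.

```bash
# Upper bound on simultaneous clients, following the formula in the config comments.
# $1 = worker_processes, $2 = worker_connections,
# $3 = divisor (defaults to 1; the comments use 4 for the reverse proxy case).
max_clients() {
    echo $(( $1 * $2 / ${3:-1} ))
}

max_clients 8 65535      # prints 524280
max_clients 8 65535 4    # prints 131070
```

With the values from the example (8 workers, 65535 connections each) the bound is 524280 clients, or 131070 when Nginx acts as a reverse proxy. The real limit also depends on worker_rlimit_nofile and the system's ulimit -n.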

- Global block

The global block runs from the beginning of the configuration file to the events block. It holds directives that affect the Nginx server as a whole: the user (and group) the server runs as, the number of worker processes to start, the path of the PID file, the log path and level, included configuration files, and so on.

- events block

The directives in the events block mainly affect the network connections between the Nginx server and its clients. Common settings include whether to serialize the acceptance of new connections across worker processes (accept_mutex), whether a worker may accept several new connections at once (multi_accept), which event-driven model handles connections (use), and the maximum number of simultaneous connections per worker process (worker_connections). These settings have a large effect on Nginx performance and should be tuned to the actual workload.

- http block

This is the most frequently modified part of the Nginx configuration. Most features, such as proxying, caching, log definitions and the configuration of third-party modules, are set here. The http block consists of the http global block and one or more server blocks.

- http global block

Directives in the http global block cover file includes, MIME type definitions, log customization, connection timeouts, the maximum number of requests per connection, and so on.

- server block

Each server block is equivalent to a virtual host. A server block consists of its own global part and can contain multiple location blocks.

- Global server block

The most common settings are the listening port of the virtual host and its name or IP address.

- location block

A location block matches the request received by the Nginx server (for example http://server_name/uri-string) against the part of the string after the virtual host name (/uri-string) and decides how the matching requests are handled. Address redirection, data caching, response control and many third-party modules are also configured here.
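To see how the blocks described above nest inside each other, the sketch below prints a bare skeleton of nginx.conf with each part labelled. The function name `render_skeleton` and its two parameters are made up for this illustration; the directives and values are the ones from the default configuration shown earlier.

```bash
# Print a labelled nginx.conf skeleton.
# $1 = worker_processes (default 1), $2 = worker_connections (default 1024).
render_skeleton() {
    local workers="${1:-1}" conns="${2:-1024}"
    printf '%s\n' \
        "# global block: settings for the server as a whole" \
        "worker_processes ${workers};" \
        "" \
        "# events block: connection handling per worker process" \
        "events {" \
        "    worker_connections ${conns};" \
        "}" \
        "" \
        "http {" \
        "    # http global block: shared by all virtual hosts" \
        "    include       mime.types;" \
        "    default_type  application/octet-stream;" \
        "" \
        "    server {" \
        "        # server global block: port and name of this virtual host" \
        "        listen       80;" \
        "        server_name  localhost;" \
        "" \
        "        # location block: how requests for a URI pattern are handled" \
        "        location / {" \
        "            root   html;" \
        "            index  index.html index.htm;" \
        "        }" \
        "    }" \
        "}"
}
```

On Linux, `render_skeleton "$(nproc)"` would fill in one worker process per CPU core, in line with the recommendation in the annotated example.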

5, Nginx configuration examples

1. Reverse proxy example

- Goal: use Nginx as a reverse proxy that forwards requests to services on different ports according to the request path

- Configuration

    server {
        listen 9001;
        server_name localhost;

        location ~ /aaa/ {
            proxy_pass http://localhost:8001;
        }

        location ~ /bbb/ {
            proxy_pass http://localhost:8002;
        }
    }

- Description of the location modifiers

- =: used before a uri without regular expressions. The request string must match the uri exactly. If it does, Nginx stops searching and processes the request with this location immediately

- ~: the uri is a regular expression and matching is case sensitive

- ~*: the uri is a regular expression and matching is case insensitive

- ^~: used before a uri without regular expressions. If this location is the longest prefix match for the request string, Nginx uses it immediately and does not go on to check the regular expression locations
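The order in which these modifiers are evaluated is easier to follow with a small model. The sketch below is a simplified imitation of the selection rules in shell, not Nginx's actual implementation: the rule table, its labels and the function names `location_rules` and `match_location` are all hypothetical, and regular expressions are checked with `grep -E` rather than PCRE.

```bash
# Hypothetical rule table, one location per line: "<modifier> <uri> <label>".
# "-" stands for a plain prefix location written without a modifier.
location_rules() {
cat <<'EOF'
= / exact-root
- / prefix-root
- /images/ prefix-images
^~ /static/ static-no-regex
~ \.(gif|jpg)$ regex-image
~* \.php$ regex-php-nocase
EOF
}

# Print the label of the location that this simplified model selects for a URI.
match_location() {
    local uri="$1" rules mod pat label
    local best_len=-1 best_label=none best_mod=
    rules=$(location_rules)

    # Step 1: an exact (=) match wins at once; otherwise remember the longest prefix.
    while read -r mod pat label; do
        case $mod in
            '=')
                if [ "$uri" = "$pat" ]; then echo "$label"; return; fi ;;
            '-'|'^~')
                case $uri in
                    "$pat"*)
                        if [ "${#pat}" -gt "$best_len" ]; then
                            best_len=${#pat}; best_label=$label; best_mod=$mod
                        fi ;;
                esac ;;
        esac
    done <<EOF
$rules
EOF

    # Step 2: a longest prefix marked ^~ is used without looking at any regex.
    if [ "$best_mod" = '^~' ]; then echo "$best_label"; return; fi

    # Step 3: regex locations are tried in file order; the first match wins.
    while read -r mod pat label; do
        case $mod in
            '~')
                if printf '%s\n' "$uri" | grep -Eq -- "$pat"; then echo "$label"; return; fi ;;
            '~*')
                if printf '%s\n' "$uri" | grep -Eiq -- "$pat"; then echo "$label"; return; fi ;;
        esac
    done <<EOF
$rules
EOF

    # Step 4: no regex matched, so fall back to the longest prefix.
    echo "$best_label"
}
```

With this table, `match_location /images/a.gif` selects the regex location even though /images/ is a longer prefix than /, while `match_location /static/a.gif` stays with the ^~ prefix and never reaches the regex.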

2. Load balancing example

Nginx load balancing strategy

- Round robin (default): requests are assigned to the back-end servers one after another in turn. A back-end server that is down is removed from the rotation automatically

    upstream backserver {
        server 192.168.0.14;
        server 192.168.0.15;
    }

- Weight: sets the share of requests each server receives. The share is proportional to the weight, which helps when the back-end servers differ in performance

    upstream backserver {
        server 192.168.0.14 weight=8;
        server 192.168.0.15 weight=10;
    }

- ip_hash: each request is assigned according to a hash of the client IP address, so a given visitor always reaches the same back-end server. This solves the problem of sessions that are stored on one server

    upstream backserver {
        ip_hash;
        server 192.168.0.14:88;
        server 192.168.0.15:80;
    }

- fair (third party): requests are assigned according to the response time of the back-end servers; servers with shorter response times are preferred

    upstream backserver {
        server server1;
        server server2;
        fair;
    }

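- url_hash (third party): requests are assigned according to a hash of the requested URL, so each URL always goes to the same back-end server. This is most effective when the back-end servers are caches. The configuration below uses the syntax of the third-party module; nginx 1.7.2 and later also provide a built-in hash directive, which has no hash_method parameter

    upstream backserver {
        server server1:3128;
        server server2:3128;
        hash $request_uri;
        hash_method crc32;
    }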

Load balancing configuration example

    http {
        include mime.types;
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;

        upstream myserver {
            ip_hash;
            server 192.168.15.146:8080 weight=1;
            server 192.168.15.146:8081 weight=1;
        }

        server {
            listen 80;
            server_name localhost;

            location / {
                root html;
                index index.html index.htm;
            }

            location /app {
                proxy_pass http://myserver;
                proxy_connect_timeout 10;
            }

            error_page 500 502 503 504 /50x.html;
            location = /50x.html {
                root html;
            }
        }
    }
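One way to observe which strategy is in effect is to send a batch of requests and count the answers. The sketch below assumes that each back-end server answers with a body that identifies it, which the configuration above does not guarantee; the function name `tally_responses` and the `FETCH` override are hypothetical.

```bash
# Send N requests to URL and count how often each distinct response body occurs.
# FETCH is a hypothetical hook for swapping out curl, e.g. when no server is running.
tally_responses() {
    local url="$1" n="$2" i=0
    while [ "$i" -lt "$n" ]; do
        ${FETCH:-curl -s} "$url"
        echo
        i=$((i + 1))
    done | grep -v '^$' | sort | uniq -c
}

# Example, assuming each back end answers /app with its own name:
#   tally_responses http://localhost/app 20
```

With ip_hash enabled as in the example, all requests from one client should land on a single back end, so only one line is expected. Without ip_hash and with equal weights, the counts should come out roughly even.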

3. Examples of dynamic and static separation

    upstream static {
        server 192.168.69.113:80;
    }

    upstream java {
        server 192.168.69.113:8080;
    }

    server {
        listen 80;
        server_name 192.168.69.112;

        location / {
            root /soft/code;
            index index.html;
        }

        location ~ .*\.(png|jpg|gif)$ {
            proxy_pass http://static;
            include proxy_params;
        }

        location ~ .*\.jsp$ {
            proxy_pass http://java;
            include proxy_params;
        }
    }
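The `include proxy_params;` lines expect a file named proxy_params in the Nginx configuration directory. Some distribution packages ship such a file, but a source install as described earlier may not have one, in which case the configuration test fails. The sketch below writes a minimal version that reuses the proxy headers from the annotated configuration example; the function name `write_proxy_params` and the choice of headers are assumptions, not the contents of any packaged file.

```bash
# Write a minimal proxy_params file to the path given as $1.
write_proxy_params() {
    printf '%s\n' \
        'proxy_set_header Host $host;' \
        'proxy_set_header X-Real-IP $remote_addr;' \
        'proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;' \
        > "$1"
}

# Example for a source install (the path is an assumption):
#   write_proxy_params /usr/local/nginx/conf/proxy_params
```

The single quotes keep `$host` and the other Nginx variables from being expanded by the shell.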