NFS

brief introduction

- NFS (Network File System) is a file system supported by FreeBSD, Linux, and other Unix-like systems. It lets computers share resources over a TCP/IP network. Local NFS client applications can transparently read and write files on a remote NFS server as if they were local files.

- NFS is suited to file sharing between Linux and Unix hosts. Sharing between Linux and Windows is usually handled by other protocols such as SMB.

- NFS is an application-layer protocol. The server listens on 2049/tcp and 2049/udp.

- With the default sec=sys security flavor, the NFS service authenticates clients only by IP address (or host name).

- NFS runs over TCP/IP or UDP/IP and is implemented on top of the remote procedure call (RPC) mechanism. RPC provides a set of operations for accessing remote files that is independent of the machine, operating system, and lower-level transport protocol.

- NFS is generally used to store static data such as shared videos and pictures.

Application scenario

NFS has many practical application scenarios. Here are some common ones:

- Multiple machines share a CD-ROM or other device. This makes installing software on many machines cheaper and easier.

- In a large network, it can be convenient to configure a central NFS server that holds the home directories of all users. These directories are exported to the network, so users always get the same home directory no matter which workstation they log in on.

- Different clients can watch video files stored on NFS, which saves local disk space.

- Work completed on a client can be backed up to the user's own path on the NFS server.

Composition of nfs

An NFS deployment has at least two main parts:

- An NFS server

- Several clients

The clients remotely access the data stored on the NFS server over the TCP/IP network.

Before the NFS server is put into service, some NFS parameters need to be configured according to the actual environment and requirements.

nfs working mechanism

NFS relies on RPC to share file systems over the network, so let's look at RPC first.

RPC

RPC (Remote Procedure Call) is a protocol for requesting services from a program on a remote computer over the network, without the caller needing to understand the underlying network technology.

RPC assumes that a transport protocol such as TCP or UDP exists to carry data between the communicating programs. In the OSI network communication model, RPC spans the transport layer and the application layer.

RPC uses a client/server model. The requester is the client, and the service provider is the server.

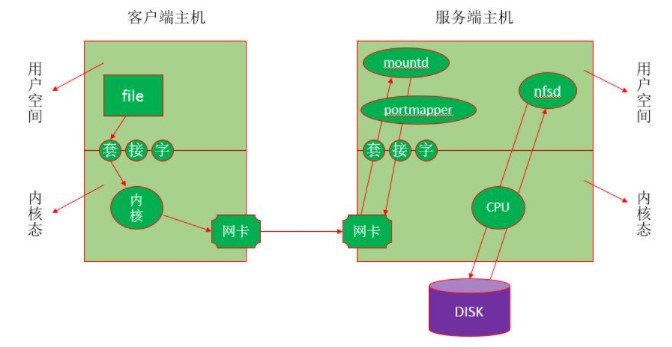

The working mechanism of RPC is shown in the figure above and works as follows:

- The client program issues an RPC call, which is sent to another host (the server) over TCP.

- The server listens on a socket. When it receives the request, it executes the requested procedure with the passed parameters through local system calls, and the result is returned to the local service process.

- The server's service process wraps the result in a response message and returns it to the client through the RPC protocol.

- The calling process on the client receives the reply, obtains the result, and continues execution.

nfs working mechanism

The NFS server side runs four processes:

- nfsd: the NFS daemon, which listens on 2049/tcp and 2049/udp. It does not store files itself (the local kernel of the NFS server handles storage). It interprets the RPC requests sent by clients and passes them to the local kernel, which carries them out on the specified file system.

- portmapper: the RPC service of the NFS server (provided by rpcbind on current systems). It listens on 111/tcp and 111/udp and manages remote procedure calls (RPCs) by keeping track of the port each RPC service is registered on.

- mountd: verifies whether the client is in the list of clients allowed to access this NFS file system. If so, access is allowed (mountd issues a token that the client presents to nfsd). Otherwise, access is denied. The mountd service port is random, and clients learn it from the RPC service (portmapper).

- idmapd: maps user and group names to local UIDs and GIDs (used by NFSv4). Accounts that cannot be mapped fall back to the anonymous user (nfsnobody).

nfs workflow:

- A user on the client issues a command to view file information (ls file). Through the NFS module, the client kernel knows that the file is not on the local file system but on the remote NFS host.

- The client kernel wraps the operation (system call) in an RPC request and sends it to the portmapper of the NFS server over TCP port 111.

- The portmapper (RPC service process) on the NFS server tells the client which port the server's mountd service is listening on, so that the client can go there for verification.

Because mountd must register its port number with portmapper when it starts providing service, portmapper knows which port it works on.

- Knowing the server's mountd port, the client sends its verification request to that port.

- mountd checks whether the requesting client is in the list of clients allowed to access the NFS file system. If it is, access is allowed (mountd issues a token that the client presents to nfsd). Otherwise, access is denied.

- After verification succeeds, the client takes the token issued by mountd to the server's nfsd process and asks to view the file.

- The nfsd process makes a local system call, asking the kernel for the information about the file the client wants to view.

- The server kernel executes the system call requested by nfsd and returns the result to nfsd.

- nfsd wraps the result in an RPC response message and returns it to the client over TCP/IP.

nfs configuration file

The main configuration file of NFS is /etc/exports. This file defines the exported directories (that is, the shared directories), their access permissions, and the hosts allowed to access them. The file is empty by default, so no directories are shared. This is a security measure: even if NFS is started, it does not export any resources until the file is configured.

Each line in the exports file describes one shared directory. The format is:

<exported directory> client1(option1,option2,...) client2(option1,option2,...)

Only the exported directory is required; the other parameters are optional. The exported directory and the clients are separated from each other by spaces, but there must be no space between a client and its options.
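The spacing rule is easy to get wrong by hand, so here is a minimal sketch that assembles an entry from its parts. The helper name export_line and its "client:options" argument syntax are assumptions made for this illustration; they are not part of nfs-utils, and the sketch does not handle clients that contain a colon.

```shell
#!/bin/sh
# export_line DIR [CLIENT:OPTIONS]...
# Hypothetical helper: prints one /etc/exports entry on stdout.
export_line() (
    dir=$1
    shift
    line=$dir
    for pair in "$@"; do
        client=${pair%%:*}      # text before the first colon
        opts=${pair#*:}         # text after the first colon
        if [ "$opts" = "$pair" ] || [ -z "$opts" ]; then
            # No options supplied: emit the bare client.
            line="$line $client"
        else
            # No space between the client and the opening parenthesis.
            line="$line $client($opts)"
        fi
    done
    printf '%s\n' "$line"
)

export_line /opt/nfs/upload '192.168.220.*:rw,anonuid=300,anongid=300'
# prints: /opt/nfs/upload 192.168.220.*(rw,anonuid=300,anongid=300)
```

The output can be reviewed first and then appended to /etc/exports by hand.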

A client is a computer on the network that can access this NFS shared directory. Clients can be specified flexibly: as the IP address or domain name of a single host, or as all hosts in a subnet or domain.

Common ways to specify clients:

| Client | Description |
|---|---|
| 172.16.12.129 | The host with this IP address |
| 172.16.12.0/24 (or 172.16.12.*) | All hosts in the subnet |
| www.ffdf.com | The host with this domain name |
| *.ffdf.com | All hosts in the ffdf.com domain |
| * (or omitted) | All hosts |
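As an illustration of the forms in the table, the sketch below labels a client field by its shape. classify_client and its labels are hypothetical names chosen for this example; the real matching is done by the NFS server, not by this function.

```shell
#!/bin/sh
# classify_client SPEC
# Hypothetical helper: reports which form from the table a client field uses.
classify_client() {
    case $1 in
        ''|'*')     echo "all-hosts" ;;   # empty field or a lone asterisk
        */*)        echo "subnet" ;;      # address/prefix, e.g. 172.16.12.0/24
        *[*?]*)     echo "wildcard" ;;    # contains a wildcard character
        *[!0-9.]*)  echo "hostname" ;;    # anything that is not digits and dots
        *)          echo "single-ip" ;;   # digits and dots only
    esac
}

for spec in 172.16.12.129 172.16.12.0/24 '172.16.12.*' www.ffdf.com '*.ffdf.com' '*'; do
    printf '%s -> %s\n' "$spec" "$(classify_client "$spec")"
done
```

The check is purely syntactic: it does not validate that an address is well formed or that a host name resolves.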

Options set the access permissions, user mappings, and other properties of shared directories. The exports file supports many options, which fall into three categories:

- Access options (control access to shared directories)

- User mapping options. By default, when a client accesses the NFS server as root, the server maps that user to a local anonymous user (nobody) and its group to an anonymous group (also nobody), which helps improve system security.

- Other options

Access options:

| Access option | Description |
|---|---|
| ro | Export the directory read-only |
| rw | Export the directory read/write |

User mapping options:

| User mapping option | Description |
|---|---|
| all_squash | Map all remote users and groups to the anonymous user and group (nfsnobody) |
| no_all_squash | Do not map all remote users and groups to the anonymous user and group (default setting) |
| root_squash | Map the remote root user and its group to the anonymous user and group (default setting) |
| no_root_squash | Do not map the remote root user and its group to the anonymous user and group |
| anonuid=xxx | Set the UID of the local account that squashed users are mapped to (UID=xxx) |
| anongid=xxx | Set the GID of the local group that squashed groups are mapped to (GID=xxx) |
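To make the interaction of these options concrete, here is a small model of the mapping rules. effective_uid is a hypothetical function written for this illustration, and it assumes an anonymous UID of 65534 when anonuid is not given (the value that appears in the etab output later in this article). It only models the table; the actual mapping happens inside the NFS server.

```shell
#!/bin/sh
# effective_uid CLIENT_UID OPTIONS
# Hypothetical model: prints the UID the server would act as.
effective_uid() (
    uid=$1
    opts=",$2,"          # pad with commas so each option matches as a whole word
    anon=65534           # assumed default anonymous UID
    root_squash=yes      # default setting
    all_squash=no        # default setting

    case $opts in
        *,anonuid=*) anon=${opts#*,anonuid=}; anon=${anon%%,*} ;;
    esac
    case $opts in *,no_root_squash,*) root_squash=no ;; esac
    case $opts in *,all_squash,*) all_squash=yes ;; esac

    if [ "$all_squash" = yes ]; then
        echo "$anon"                     # every user is squashed
    elif [ "$uid" -eq 0 ] && [ "$root_squash" = yes ]; then
        echo "$anon"                     # only root is squashed
    else
        echo "$uid"                      # ordinary users keep their UID
    fi
)

effective_uid 0    'rw,anonuid=300,anongid=300'   # prints: 300
effective_uid 1000 'rw,anonuid=300,anongid=300'   # prints: 1000
effective_uid 1000 'rw,all_squash'                # prints: 65534
```

The second call shows that anonuid alone does not squash ordinary users; it only chooses which account squashed users land on.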

Other common options:

| Other option | Description |
|---|---|
| secure | Require clients to connect to the NFS server from TCP/IP ports below 1024 (default setting) |
| insecure | Allow clients to connect to the NFS server from TCP/IP ports above 1024 |
| sync | Reply to a request only after the data has been written to disk. Slower, but ensures data consistency |
| async | Keep data in the memory buffer first and write it to disk later when necessary |
| wdelay | Check whether related write operations are pending. If so, perform them together to improve efficiency (default setting) |
| no_wdelay | Perform each write operation immediately. Should be used together with sync |
| subtree_check | If the exported directory is a subdirectory, the NFS server also checks the permissions of its parent directory (default in older nfs-utils releases) |
| no_subtree_check | Even if the exported directory is a subdirectory, the NFS server does not check the permissions of its parent directory, which can improve efficiency (default in current nfs-utils releases) |
| nohide | If one file system is mounted on top of another, the mounted-over directory is usually hidden or appears empty to the client. To disable this behavior, enable the nohide option |
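Several options in these tables come in opposing pairs, and listing both members of a pair on the same client is almost certainly a mistake. The sketch below is a hypothetical lint helper (check_options is not an nfs-utils command) that flags such pairs in an option string before it goes into /etc/exports.

```shell
#!/bin/sh
# check_options OPTIONS
# Hypothetical helper: prints each opposing pair found in the option string
# and exits non-zero if any pair is present.
check_options() (
    opts=",$1,"
    status=0
    for pair in ro:rw sync:async root_squash:no_root_squash \
                all_squash:no_all_squash secure:insecure \
                wdelay:no_wdelay subtree_check:no_subtree_check; do
        a=${pair%%:*}
        b=${pair#*:}
        case $opts in *,"$a",*) has_a=yes ;; *) has_a=no ;; esac
        case $opts in *,"$b",*) has_b=yes ;; *) has_b=no ;; esac
        if [ "$has_a" = yes ] && [ "$has_b" = yes ]; then
            echo "conflict: $a and $b"
            status=1
        fi
    done
    exit "$status"
)

check_options 'rw,sync,anonuid=300,anongid=300' && echo "no conflicts found"
```

It checks only the pairs named in the tables above and does not verify that each option name is one the server recognizes.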

nfs installation

Installation (required on both the server and the clients):

    yum -y install nfs-utils

Start (only the server needs to start the service):

    [root@server ~]# systemctl start nfs-server rpcbind
    [root@server ~]# systemctl enable nfs-server    # start automatically at boot
    Created symlink /etc/systemd/system/multi-user.target.wants/nfs-server.service → /usr/lib/systemd/system/nfs-server.service.

Set the shared directory on the server:

The server exports two directories. /opt/nfs/shared is read-only. /opt/nfs/upload is readable and writable and is accessible from the 192.168.220.0 network segment. A user nfs-upload is created with UID and GID 300, and squashed remote users (root, by default) are mapped to it:

    [root@server ~]# useradd -u 300 nfs-upload
    [root@server ~]# groupmod -g 300 nfs-upload
    [root@server ~]# cat /etc/exports
    /opt/nfs/shared *(ro)
    /opt/nfs/upload 192.168.220.*(rw,anonuid=300,anongid=300)
    [root@server ~]# systemctl restart nfs-server

View:

Use the showmount command to check the export status of the NFS server.

Syntax: showmount [options] [NFS server name or address]

Common options:

    -a    Displays all client hosts of the specified NFS server and the directories they have mounted
    -d    Displays the exported directories that clients have mounted on the specified NFS server
    -e    Displays all exported directories on the specified NFS server

If the client cannot view the export list, check the firewalls and SELinux at both ends.

    [root@client ~]# showmount -e 192.168.220.9    # server IP
    Export list for 192.168.220.9:
    /opt/nfs/shared *
    /opt/nfs/upload 192.168.220.*
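When the export list needs to be consumed by a script, the showmount -e output can be split into path and client columns. The following is a minimal sketch: list_exports is a hypothetical helper, the sample input is the export list shown above, and the sketch assumes exported paths contain no spaces.

```shell
#!/bin/sh
# list_exports: reads `showmount -e` output on stdin and prints
# "path<TAB>clients" for each export.
list_exports() {
    # Skip the "Export list for ..." header, then separate the first field
    # (the path) from the rest of the line (the allowed clients).
    awk 'NR > 1 && NF >= 1 {
        path = $1
        $1 = ""
        sub(/^[ \t]+/, "")
        print path "\t" $0
    }'
}

# Sample input copied from the session above.
list_exports <<'EOF'
Export list for 192.168.220.9:
/opt/nfs/shared *
/opt/nfs/upload 192.168.220.*
EOF
```

On a live client the same function can be fed directly, for example with showmount -e 192.168.220.9 | list_exports.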

Temporary mount on the client:

    mount -t nfs SERVER:/path/to/sharedfs /path/to/mount_point

Permanent mount on the client: add an entry to the configuration file /etc/fstab.

    [root@client ~]# vim /etc/fstab
    ......
    192.168.220.9:/opt/nfs/shared /mnt nfs defaults,_netdev 0 0

The fields are the server IP and exported path, the local mount point (/mnt), the file system type, the mount options, then 0 (no backup by dump) and 0 (no file system check). The _netdev option marks the mount as depending on the network. Without it, if the server is shut down and the share cannot be mounted, the client may fail to boot.

    [root@client ~]# mount -a
    [root@client ~]# df -h
    Filesystem                     Size  Used Avail Use% Mounted on
    devtmpfs                       876M     0  876M   0% /dev
    tmpfs                          895M     0  895M   0% /dev/shm
    tmpfs                          895M  8.8M  887M   1% /run
    tmpfs                          895M     0  895M   0% /sys/fs/cgroup
    /dev/mapper/cs-root             17G  1.8G   16G  11% /
    /dev/sda1                     1014M  195M  820M  20% /boot
    tmpfs                          179M     0  179M   0% /run/user/0
    192.168.220.9:/opt/nfs/shared   17G  1.8G   16G  11% /mnt      # mount succeeded
    192.168.220.9:/opt/nfs/upload   17G  1.8G   16G  11% /media    # mount succeeded

Create a file in the shared directory on the server:

    [root@server ~]# cd /opt/nfs/shared/
    [root@server shared]# touch avc
    [root@server shared]# ls
    avc

The client sees the file through the local mount point:

    [root@client ~]# cd /mnt
    [root@client mnt]# ls
    avc

For the client to create or delete files, run setfacl -m u:nfs-upload:rwx /opt/nfs/upload/ on the server. The client accesses the share as root, which is mapped to the anonymous user nfs-upload, so that user needs write permission on the directory in addition to the read-write (rw) export option.

    [root@server ~]# getfacl /opt/nfs/shared/
    getfacl: Removing leading '/' from absolute path names
    # file: opt/nfs/shared/
    # owner: root
    # group: root
    user::rwx
    user:nobody:rwx
    group::r-x
    mask::rwx
    other::r-x

Create the file xixi on the client:

    [root@client media]# touch xixi
    [root@client media]# ll
    total 0
    -rw-r--r--. 1 root root 0 Sep 24 06:33 test
    -rw-r--r--. 1 300 300 0 Sep 24 08:23 xixi

View it on the server:

    [root@server ~]# cd /opt/nfs/upload/
    [root@server upload]# ll
    total 0
    -rw-r--r--. 1 root root 0 Sep 24 06:33 test
    -rw-r--r--. 1 nfs-upload nfs-upload 0 Sep 24 08:23 xixi    # the user has been mapped to nfs-upload
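The fstab entry used in this walkthrough follows a fixed six-field layout, which the sketch below generates from its parts. fstab_nfs_line is a hypothetical helper written for this example. It only prints the line, so the result can be checked before it is added to /etc/fstab.

```shell
#!/bin/sh
# fstab_nfs_line SERVER REMOTE_PATH MOUNT_POINT [EXTRA_OPTIONS]
# Hypothetical helper: prints an /etc/fstab entry for an NFS share.
fstab_nfs_line() (
    opts="defaults,_netdev"          # _netdev: wait for the network before mounting
    if [ -n "${4:-}" ]; then
        opts="$opts,$4"              # append caller-supplied options, if any
    fi
    # Fields: device, mount point, type, options, dump flag, fsck pass
    printf '%s:%s %s nfs %s 0 0\n' "$1" "$2" "$3" "$opts"
)

fstab_nfs_line 192.168.220.9 /opt/nfs/shared /mnt
# prints: 192.168.220.9:/opt/nfs/shared /mnt nfs defaults,_netdev 0 0
```

After the line is in place, mount -a mounts it without a reboot, as in the session above.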

Special options available for client mounts:

- rsize: the size in bytes of the buffer used when reading from the server. Older documentation gives a default of 1024, while current Linux clients negotiate the value with the server when it is not set. A higher value than 1024, such as 8192, can increase transfer speed.

- wsize: the size in bytes of the buffer used when writing to the server. Older documentation gives a default of 1024, while current Linux clients negotiate the value with the server when it is not set. A higher value than 1024, such as 8192, can increase transfer speed.
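These options are passed with -o on the mount command line. As a hedged sketch, the hypothetical helper nfs_mount_cmd below prints the resulting command instead of running it, so it can be inspected first. The server address, path, and mount point are the ones used earlier in this article.

```shell
#!/bin/sh
# nfs_mount_cmd SERVER:/PATH MOUNT_POINT SIZE
# Hypothetical helper: prints a mount command that sets rsize and wsize.
nfs_mount_cmd() {
    case $3 in
        ''|*[!0-9]*)
            echo "size must be a whole number of bytes" >&2
            return 1
            ;;
    esac
    printf 'mount -t nfs -o rsize=%s,wsize=%s %s %s\n' "$3" "$3" "$1" "$2"
}

nfs_mount_cmd 192.168.220.9:/opt/nfs/upload /media 8192
# prints: mount -t nfs -o rsize=8192,wsize=8192 192.168.220.9:/opt/nfs/upload /media
```

The same rsize=...,wsize=... string can be placed in the options field of an /etc/fstab entry.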

exportfs is a dedicated tool for maintaining the table of file systems exported through the exports file. Common options:

    -a    Export all directories set in the /etc/exports file
    -r    Re-read the settings in the /etc/exports file and apply them immediately, without restarting the service
    -u    Stop exporting a directory
    -v    Print each directory on the screen as it is exported

Check the options used by an exported directory:

Even if only one or two options are set on a line in the configuration file /etc/exports, many default options are also applied when the directory is actually exported. You can see which options are in effect by looking at the /var/lib/nfs/etab file.

    [root@localhost ~]# cat /var/lib/nfs/etab
    [root@server nfs]# cat etab
    /opt/nfs/upload 192.168.220.*(rw,sync,wdelay,hide,nocrossmnt,secure,root_squash,no_all_squash,no_subtree_check,secure_locks,acl,no_pnfs,anonuid=300,anongid=300,sec=sys,rw,secure,root_squash,no_all_squash)
    /opt/nfs/shared *(rw,sync,wdelay,hide,nocrossmnt,secure,root_squash,no_all_squash,no_subtree_check,secure_locks,acl,no_pnfs,anonuid=65534,anongid=65534,sec=sys,rw,secure,root_squash,no_all_squash)
    [root@server nfs]# pwd
    /var/lib/nfs
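The etab lines are long and repeat some options, so a small filter can make them easier to read. The sketch below is one possible approach: etab_options is a hypothetical helper that prints the options of one export, one per line, with duplicates removed. It assumes the export path contains no whitespace.

```shell
#!/bin/sh
# etab_options EXPORT_PATH
# Hypothetical helper: reads etab-format lines on stdin and prints the
# options of the matching export, one per line, without duplicates.
etab_options() {
    awk -v want="$1" '
        $1 == want {
            opts = $2
            sub(/^[^(]*\(/, "", opts)    # drop the client and the opening "("
            sub(/\)$/, "", opts)         # drop the closing ")"
            n = split(opts, list, ",")
            for (i = 1; i <= n; i++)
                if (!(list[i] in seen)) {
                    seen[list[i]] = 1
                    print list[i]
                }
        }'
}
```

On the server it could be used as etab_options /opt/nfs/upload < /var/lib/nfs/etab.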