Proxy

When writing web crawlers, we often find our IP blocked after it sends too many requests. The usual solution is to send requests through a proxy IP. To do this, I wrote a sample program that randomly picks an available proxy IP.
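The selection idea can be expressed without any HTTP library: shuffle the candidate proxies, try each one at most once, and keep the first that passes a check. The following is a minimal JDK-only sketch, assuming entries in the same `"ip\tport"` form the program below uses. The class `ProxyPicker`, the `10.0.0.x` addresses and the fake check are hypothetical placeholders; in a real crawler the check would send a test request through the proxy and look for HTTP 200.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Optional;
import java.util.Random;
import java.util.function.Predicate;

// Hypothetical helper: try candidate proxies in random order, each at most once,
// and return the first one the caller-supplied check accepts.
class ProxyPicker {

    static Optional<String> pickWorking(List<String> candidates,
                                        Predicate<String> isWorking,
                                        Random random) {
        // Copy first so the caller's list is not reordered.
        List<String> shuffled = new ArrayList<>(candidates);
        Collections.shuffle(shuffled, random);
        for (String candidate : shuffled) {
            if (isWorking.test(candidate)) {
                return Optional.of(candidate);
            }
        }
        // Every candidate failed the check.
        return Optional.empty();
    }

    public static void main(String[] args) {
        List<String> candidates = List.of("10.0.0.1\t8080", "10.0.0.2\t3128", "10.0.0.3\t80");
        // Fake check for illustration: pretend only the proxy on port 3128 answers with 200.
        Predicate<String> fakeCheck = entry -> entry.endsWith("\t3128");
        System.out.println(pickWorking(candidates, fakeCheck, new Random()).orElse("no working proxy"));
    }
}
```

The program below draws random indexes with replacement, so it can test the same dead proxy more than once; shuffling a copy of the list guarantees that every candidate is tried exactly once before giving up.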

Program

package proxy;

import java.io.IOException;

import java.net.URISyntaxException;

import java.util.ArrayList;

import java.util.List;

import org.apache.http.client.ClientProtocolException;

import org.hfutec.utils.HFUTRequest;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import org.jsoup.nodes.Element;

import org.jsoup.select.Elements;

public class ProxyGenerator {

public static HFUTRequest hfutRequest = new HFUTRequest();

public static void main(String[] args) throws ClientProtocolException, IOException, URISyntaxException {

List<String> list=GeneratorIpList();

String ipString =GeneratorOneIp(list);

System.out.println(ipString);

}

//Crawl ip list

public static List<String> GeneratorIpList() throws ClientProtocolException, IOException, URISyntaxException{

String html =hfutRequest.getHTMLContentByHttpGetMethod("https://free-proxy-list.net/");

Document document=Jsoup.parse(html);

Elements elements=document.select("div[class=table-responsive]").select("table").select("tbody").select("tr");

List<String> list=new ArrayList<>();

for (Element ele:elements) {

String ip=ele.select("td").get(0).text();

int post=Integer.parseInt(ele.select("td").get(1).text());

list.add(ip+"\t"+post);

}

return list;

}

//Randomly generate an available ip

public static String GeneratorOneIp(List<String> list) throws ClientProtocolException, IOException, URISyntaxException{

int size= list.size();

String ipstring="";

//Generate Random Numbers

for (int i = 0; i < size; i++) {

int num =(int)(Math.random() * size);

int code=hfutRequest.initHttpClientWithProxyCode(list.get(num).split("\t")[0], Integer.parseInt(list.get(num).split("\t")[1]));

System.out.println(code);

ipstring=list.get(i);

if (code == 200){

break;

}

}

return ipstring;

}

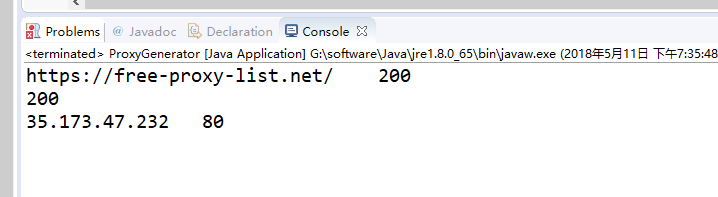

}As shown in the figure below, output an available proxy ip through which you can crawl. Of course, when crawling, we can switch an available ip for each address we crawl.
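Switching to a different proxy for each address can be sketched as a simple round-robin rotator. This is a minimal sketch, not part of the original program: the class name `ProxyRotator` and the `10.0.0.x` addresses are hypothetical, and it assumes the entries have already been verified (for example by the program above) and use the same `"ip\tport"` format.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper: hand out a different proxy for each URL by cycling
// through a list of proxies that have already been verified.
class ProxyRotator {
    private final List<String> proxies; // entries in "ip\tport" form
    private final AtomicInteger next = new AtomicInteger();

    ProxyRotator(List<String> proxies) {
        if (proxies.isEmpty()) {
            throw new IllegalArgumentException("need at least one proxy");
        }
        this.proxies = List.copyOf(proxies);
    }

    // Returns the next proxy in round-robin order; safe to call from several threads.
    String nextProxy() {
        // floorMod keeps the index valid even after the counter overflows.
        int i = Math.floorMod(next.getAndIncrement(), proxies.size());
        return proxies.get(i);
    }

    public static void main(String[] args) {
        ProxyRotator rotator = new ProxyRotator(List.of("10.0.0.1\t8080", "10.0.0.2\t3128"));
        List<String> urls = List.of("https://example.com/a", "https://example.com/b", "https://example.com/c");
        for (String url : urls) {
            String[] parts = rotator.nextProxy().split("\t");
            // A real crawler would configure its HTTP client with parts[0] and parts[1] here.
            System.out.println(url + " via " + parts[0] + ":" + parts[1]);
        }
    }
}
```

Round-robin spreads requests evenly across the proxies; picking a random entry per URL would also work, but it can hit the same proxy several times in a row.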

Proxy Request

The following lines of code send a request through a proxy.

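```java
HFUTRequest hfutRequest = new HFUTRequest();
// Configure the client to use the proxy at 151.80.140.233:53281
hfutRequest.initHttpClientWithProxy("151.80.140.233", 53281);
// Fetch the page through the proxy and print its HTML
String html = hfutRequest.getHTMLContentByHttpGetMethod("https://www.douban.com/group/topic/108062524/");
System.out.println(html);
```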
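For readers without the `HFUTRequest` helper, the same kind of proxied request can be sketched with the JDK's built-in `java.net.http.HttpClient` (Java 11 or later). This is an alternative to the author's helper, not its implementation; the class `JdkProxyRequest`, the method `clientWithProxy` and the timeout values are hypothetical choices. The proxy address is the one from the example above and is likely no longer reachable, so running `main` may end in a connection error.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.ProxySelector;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical JDK-only alternative: every request sent by this client
// is routed through a single HTTP proxy.
class JdkProxyRequest {

    static HttpClient clientWithProxy(String host, int port) {
        return HttpClient.newBuilder()
                .proxy(ProxySelector.of(new InetSocketAddress(host, port)))
                .connectTimeout(Duration.ofSeconds(10)) // give up quickly on dead proxies
                .build();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpClient client = clientWithProxy("151.80.140.233", 53281);
        HttpRequest request = HttpRequest.newBuilder(URI.create("https://www.douban.com/group/topic/108062524/"))
                .timeout(Duration.ofSeconds(20))
                .GET()
                .build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

The proxy is fixed when the client is built, so switching proxies for each address means building one `HttpClient` per proxy and reusing it for the URLs assigned to that proxy.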